1. Preliminary preparation

1.1 basic knowledge

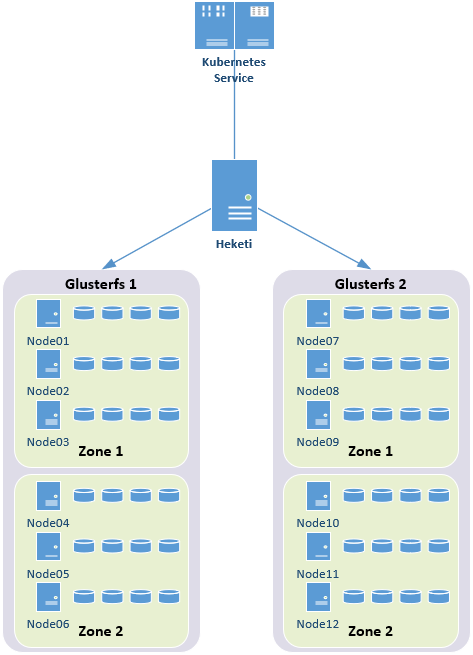

Heketi provides a RESTful management interface for managing the life cycle of GlusterFS volumes. It dynamically selects bricks across the cluster to build the requested volumes, ensuring that replicas of the data are placed in different failure domains. Heketi also supports any number of GlusterFS clusters.

Tip: in this experiment GlusterFS and Kubernetes are deployed separately. Heketi manages GlusterFS, and Kubernetes calls the Heketi API to obtain persistent storage on GlusterFS; GlusterFS is not deployed by Kubernetes.

1.2 architecture

Tip: Heketi only manages the glusterfs cluster of a single zone in this experiment.

1.3 relevant planning

| Host | IP | Disk | Remarks |
|---|---|---|---|
| servera | 172.24.8.41 | sdb | glusterfs node |
| serverb | 172.24.8.42 | sdb | glusterfs node |
| serverc | 172.24.8.43 | sdb | glusterfs node |
| heketi | 172.24.8.44 | | Heketi host |

| | servera | serverb | serverc |
|---|---|---|---|
| PV | sdb1 | sdb1 | sdb1 |
| VG | vg0 | vg0 | vg0 |
| LV | datalv | datalv | datalv |
| bricks directory | /bricks/data | /bricks/data | /bricks/data |

1.4 other preparations

Configure NTP on all nodes.

Add the corresponding hostname resolution on all nodes (for example in /etc/hosts):

    172.24.8.41 servera
    172.24.8.42 serverb
    172.24.8.43 serverc
    172.24.8.44 heketi
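
The entries can also be added by script. The following is a minimal sketch, not part of the original procedure: `add_host` is a hypothetical helper and `HOSTS_FILE` is an assumed variable that defaults to a scratch file, so the snippet can be tried without touching /etc/hosts.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already listed.
add_host() {
    local file="$1" ip="$2" name="$3"
    # Look for NAME as a whole word on a non-comment line.
    if ! grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Defaults to a scratch file; set HOSTS_FILE=/etc/hosts to apply for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
add_host "$HOSTS_FILE" 172.24.8.41 servera
add_host "$HOSTS_FILE" 172.24.8.42 serverb
add_host "$HOSTS_FILE" 172.24.8.43 serverc
add_host "$HOSTS_FILE" 172.24.8.44 heketi
cat "$HOSTS_FILE"
```

Because existing names are skipped, the snippet can be run repeatedly on every node without creating duplicate lines.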

Note: unless they are required, it is recommended to turn off the firewall and SELinux.

2. Plan the corresponding storage volume

2.1 dividing LVM

    [root@servera ~]# fdisk /dev/sdb                              # Create the LVM partition sdb1 (interactive steps omitted)
    [root@servera ~]# pvcreate /dev/sdb1                          # Create the PV on /dev/sdb1
    [root@servera ~]# vgcreate vg0 /dev/sdb1                      # Create the VG
    [root@servera ~]# lvcreate -L 15G -T vg0/thinpool             # Create a thin-provisioned LV pool
    [root@servera ~]# lvcreate -V 10G -T vg0/thinpool -n datalv   # Create the LV for the brick
    [root@servera ~]# vgdisplay                                   # Verify VG information
    [root@servera ~]# pvdisplay                                   # Verify PV information
    [root@servera ~]# lvdisplay                                   # Verify LV information

Tip: perform the same operations on serverb and serverc, creating all LVM-based bricks according to the plan.
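
Repeating the non-interactive LVM steps on the remaining nodes can be scripted. This is only a sketch under assumptions: /dev/sdb1 has already been created with fdisk on each node, root SSH access works, and `lvm_steps`, `prepare_node` and `DRY_RUN` are hypothetical names introduced here. With the default `DRY_RUN=1` the commands are printed rather than executed.

```shell
# The LVM commands from 2.1, one per line.
lvm_steps() {
    cat <<'EOF'
pvcreate /dev/sdb1
vgcreate vg0 /dev/sdb1
lvcreate -L 15G -T vg0/thinpool
lvcreate -V 10G -T vg0/thinpool -n datalv
EOF
}

# Print the steps for one host (DRY_RUN=1, default) or run them over SSH (DRY_RUN=0).
prepare_node() {
    local host="$1"
    if [ "${DRY_RUN:-1}" = 1 ]; then
        lvm_steps | sed "s/^/[$host] /"
    else
        # sh -s reads the commands from stdin; -e stops at the first failure.
        lvm_steps | ssh "root@$host" 'sh -se'
    fi
}

for host in serverb serverc; do
    prepare_node "$host"
done
```

Review the printed commands first, then rerun with `DRY_RUN=0` once they match the plan.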

3. Install GlusterFS

3.1 install the corresponding RPM source

    [root@servera ~]# yum -y install centos-release-gluster

Tip: perform the same operation on serverb, serverc, and the client to install the GlusterFS repository.

After the repository package is installed, the file CentOS-Storage-common.repo is added to /etc/yum.repos.d/, with the following content:

    # CentOS-Storage.repo
    #
    # Please see http://wiki.centos.org/SpecialInterestGroup/Storage for more
    # information

    [centos-storage-debuginfo]
    name=CentOS-$releasever - Storage SIG - debuginfo
    baseurl=http://debuginfo.centos.org/$contentdir/$releasever/storage/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Storage

3.2 install glusterfs

    [root@servera ~]# yum -y install glusterfs-server

Note: perform the same operation on serverb and serverc to install the GlusterFS server.

3.3 start glusterfs

    [root@servera ~]# systemctl start glusterd
    [root@servera ~]# systemctl enable glusterd

Tip: perform the same operations on serverb and serverc so that the GlusterFS service is running on all nodes.

After installing GlusterFS, it is recommended to log out of the terminal and log in again so that command completion for the gluster commands takes effect.

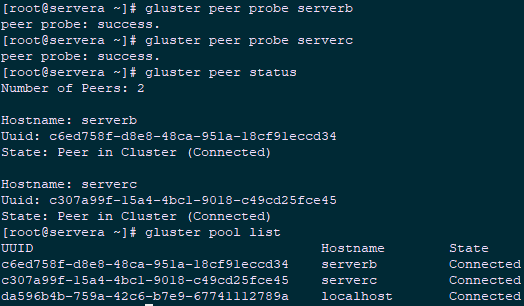

3.4 add trust pool

    [root@servera ~]# gluster peer probe serverb
    peer probe: success.
    [root@servera ~]# gluster peer probe serverc
    peer probe: success.
    [root@servera ~]# gluster peer status     # View trust pool status
    [root@servera ~]# gluster pool list       # View the list of trust pool members

Tip: the trust pool only needs to be built from one of the cluster nodes (servera, serverb, or serverc) by probing the other two nodes.
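
The "probe every node except the local one" rule can be sketched as a small function. `probe_peers` is a hypothetical helper, not part of GlusterFS; it only wraps the `gluster peer probe` command used above.

```shell
# Hypothetical helper: probe every listed node except the local one.
# Usage: probe_peers LOCAL_NODE NODE...
probe_peers() {
    local self="$1" peer
    shift
    for peer in "$@"; do
        # A node never probes itself.
        [ "$peer" = "$self" ] && continue
        gluster peer probe "$peer"
    done
}

# Example, run on servera:
#   probe_peers servera servera serverb serverc
```

Passing the full node list together with the local name lets the same line be reused on whichever node is chosen to build the pool.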

Tip: if the firewall is left enabled, the corresponding rules must be opened before building the trust pool:

    [root@servera ~]# firewall-cmd --permanent --add-service=glusterfs
    [root@servera ~]# firewall-cmd --permanent --add-service=nfs
    [root@servera ~]# firewall-cmd --permanent --add-service=rpc-bind
    [root@servera ~]# firewall-cmd --permanent --add-service=mountd
    [root@servera ~]# firewall-cmd --permanent --add-port=5666/tcp
    [root@servera ~]# firewall-cmd --reload

4. Deploy Heketi

4.1 install heketi service

    [root@heketi ~]# yum -y install centos-release-gluster
    [root@heketi ~]# yum -y install heketi heketi-client

4.2 configure heketi

Note: the # annotations in the listing below are explanations only. JSON does not allow comments, so do not copy them into the actual file.

    [root@heketi ~]# vi /etc/heketi/heketi.json
    {
      "_port_comment": "Heketi Server Port Number",
      "port": "8080",                                   # Default port

      "_use_auth": "Enable JWT authorization. Please enable for deployment",
      "use_auth": true,                                 # Enable authentication for security reasons

      "_jwt": "Private keys for access",
      "jwt": {
        "_admin": "Admin has access to all APIs",
        "admin": {
          "key": "admin123"                             # Administrator password
        },
        "_user": "User only has access to /volumes endpoint",
        "user": {
          "key": "xianghy"                              # Ordinary user password
        }
      },

      "_glusterfs_comment": "GlusterFS Configuration",
      "glusterfs": {
        "_executor_comment": [
          "Execute plugin. Possible choices: mock, ssh",
          "mock: This setting is used for testing and development.",      # For testing
          " It will not send commands to any node.",
          "ssh: This setting will notify Heketi to ssh to the nodes.",    # SSH mode
          " It will need the values in sshexec to be configured.",
          "kubernetes: Communicate with GlusterFS containers over",       # Used when GlusterFS is deployed by Kubernetes
          " Kubernetes exec api."
        ],
        "executor": "ssh",

        "_sshexec_comment": "SSH username and private key file information",
        "sshexec": {
          "keyfile": "/etc/heketi/heketi_key",
          "user": "root",
          "port": "22",
          "fstab": "/etc/fstab"
        },
        ......
        ......
        "loglevel" : "warning"
      }
    }

4.3 configure the SSH key

    [root@heketi ~]# ssh-keygen -t rsa -q -f /etc/heketi/heketi_key -N ""
    [root@heketi ~]# chown heketi:heketi /etc/heketi/heketi_key
    [root@heketi ~]# ssh-copy-id -i /etc/heketi/heketi_key.pub root@servera
    [root@heketi ~]# ssh-copy-id -i /etc/heketi/heketi_key.pub root@serverb
    [root@heketi ~]# ssh-copy-id -i /etc/heketi/heketi_key.pub root@serverc
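
Since Heketi's ssh executor relies on this key, it can help to confirm passwordless access before starting the service. A minimal sketch, where `check_key_access` is a hypothetical helper; `BatchMode=yes` makes ssh fail instead of prompting for a password.

```shell
# Hypothetical helper: verify key-based root login to each host.
# Usage: check_key_access KEYFILE HOST...
check_key_access() {
    local key="$1" host failed=0
    shift
    for host in "$@"; do
        # BatchMode disables password prompts, so a missing key shows up as a failure.
        if ssh -i "$key" -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true; then
            echo "ok   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}

# Example:
#   check_key_access /etc/heketi/heketi_key servera serverb serverc
```

The function returns non-zero if any host fails, so it can gate a later `systemctl start heketi` in a script.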

4.4 start heketi

    [root@heketi ~]# systemctl enable heketi.service
    [root@heketi ~]# systemctl start heketi.service
    [root@heketi ~]# systemctl status heketi.service
    [root@heketi ~]# curl http://localhost:8080/hello     # Test access
    Hello from Heketi
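
In scripts, the /hello check can be wrapped in a retry loop so that later steps only run once the service answers. This is a sketch under assumptions: `wait_for_heketi` and `RETRY_DELAY` are hypothetical names, and only the /hello endpoint shown above is used.

```shell
# Hypothetical helper: poll URL/hello until it answers or the attempts run out.
# Usage: wait_for_heketi BASE_URL [TRIES]
wait_for_heketi() {
    local url="$1" tries="${2:-10}" i=1
    while [ "$i" -le "$tries" ]; do
        # -f makes curl return non-zero on HTTP errors; -sS keeps it quiet except for errors.
        if curl -fsS "$url/hello" >/dev/null 2>&1; then
            return 0
        fi
        sleep "${RETRY_DELAY:-2}"
        i=$((i + 1))
    done
    return 1
}

# Example:
#   wait_for_heketi http://localhost:8080 15 && echo "Heketi is up"
```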

4.5 configure Heketi topology

Topology information tells Heketi which storage nodes, disks, and clusters it can use; the fault domain of each node must be decided by the administrator. A fault domain is an integer value assigned to a group of nodes that share the same switch, power supply, or any other component that could cause them to fail at the same time. The administrator must also decide which nodes form a cluster. Heketi uses this information to ensure that replicas are created across fault domains, thus providing data redundancy. Heketi supports multiple Gluster storage clusters.

Note the following points when configuring the Heketi topology:

- The GlusterFS cluster is defined in the topology.json file;

- The topology specifies the hierarchy: clusters > nodes > node/devices > hostnames/zone;

- In the node/hostnames/manage field, it is recommended to fill in the host IP; this is the management channel. Note that if the Heketi server cannot reach the GlusterFS nodes by hostname, the hostname must not be used here;

- In the node/hostnames/storage field, it is also recommended to fill in the host IP; this is the storage data channel, which may differ from manage. In production it is recommended to separate the management network from the storage network;

- The node/zone field specifies the fault domain of the node. Heketi places replicas across fault domains to improve data availability; for example, assigning a different zone value to each rack creates fault domains across racks;

- The devices field lists the device names (there can be several disks) of each GlusterFS node. Each must be a raw device with no file system on it.
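
For clusters with many nodes, the file can be generated instead of typed by hand. The following sketch assumes one cluster, one zone and the same device on every node, with the same IP used for both manage and storage; `gen_topology` is a hypothetical helper, and the key layout follows the hierarchy described above.

```shell
# Hypothetical helper: emit a single-cluster topology.json.
# Usage: gen_topology ZONE DEVICE NODE_IP...
gen_topology() {
    local zone="$1" device="$2" ip first=1
    shift 2
    printf '{\n  "clusters": [\n    {\n      "nodes": [\n'
    for ip in "$@"; do
        # Separate node objects with a comma, but not before the first one.
        [ "$first" -eq 1 ] || printf ',\n'
        first=0
        printf '        {\n'
        printf '          "node": {\n'
        printf '            "hostnames": { "manage": ["%s"], "storage": ["%s"] },\n' "$ip" "$ip"
        printf '            "zone": %s\n' "$zone"
        printf '          },\n'
        printf '          "devices": ["%s"]\n' "$device"
        printf '        }'
    done
    printf '\n      ]\n    }\n  ]\n}\n'
}

gen_topology 1 /dev/mapper/vg0-datalv 172.24.8.41 172.24.8.42 172.24.8.43
```

Redirect the output to a file such as /etc/heketi/topology.json and inspect it before loading it into Heketi.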

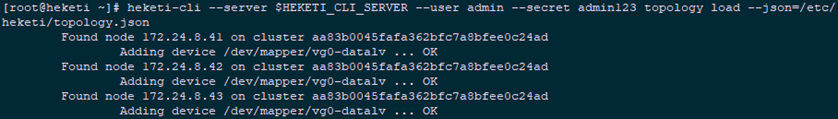

    [root@heketi ~]# vi /etc/heketi/topology.json
    {
      "clusters": [
        {
          "nodes": [
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "172.24.8.41"
                  ],
                  "storage": [
                    "172.24.8.41"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/mapper/vg0-datalv"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "172.24.8.42"
                  ],
                  "storage": [
                    "172.24.8.42"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/mapper/vg0-datalv"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "172.24.8.43"
                  ],
                  "storage": [
                    "172.24.8.43"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/mapper/vg0-datalv"
              ]
            }
          ]
        }
      ]
    }

    [root@heketi ~]# echo "export HEKETI_CLI_SERVER=http://heketi:8080" >> /etc/profile.d/heketi.sh
    [root@heketi ~]# echo "alias heketi-cli='heketi-cli --user admin --secret admin123'" >> .bashrc
    [root@heketi ~]# source /etc/profile.d/heketi.sh
    [root@heketi ~]# source .bashrc
    [root@heketi ~]# echo $HEKETI_CLI_SERVER
    http://heketi:8080
    [root@heketi ~]# heketi-cli --server $HEKETI_CLI_SERVER --user admin --secret admin123 topology load --json=/etc/heketi/topology.json

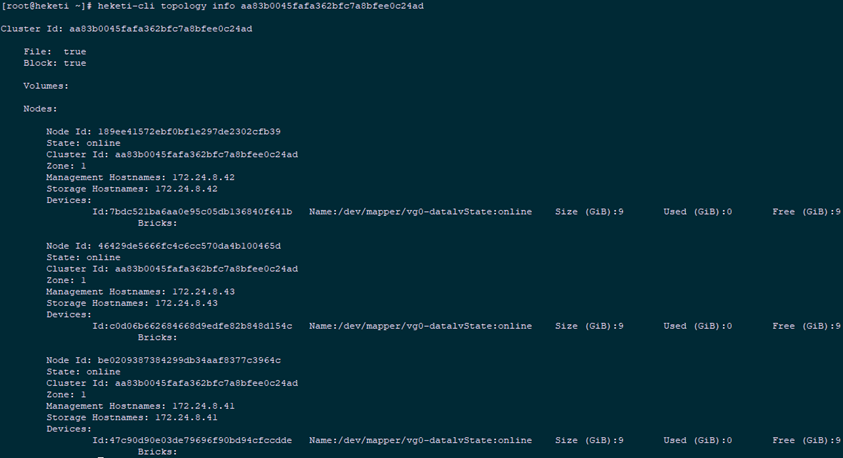

4.6 cluster management

    [root@heketi ~]# heketi-cli cluster list                                          # Cluster list
    [root@heketi ~]# heketi-cli cluster info aa83b0045fafa362bfc7a8bfee0c24ad         # Cluster details
    Cluster id: aa83b0045fafa362bfc7a8bfee0c24ad
    Nodes:
    189ee41572ebf0bf1e297de2302cfb39
    46429de5666fc4c6cc570da4b100465d
    be0209387384299db34aaf8377c3964c
    Volumes:

    Block: true

    File: true
    [root@heketi ~]# heketi-cli topology info aa83b0045fafa362bfc7a8bfee0c24ad        # View topology information

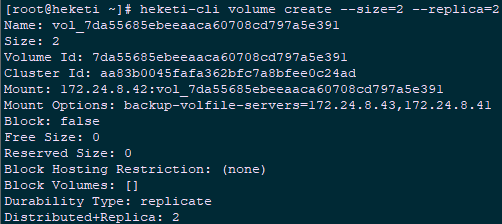

    [root@heketi ~]# heketi-cli node list                                       # Node list
    Id:189ee41572ebf0bf1e297de2302cfb39 Cluster:aa83b0045fafa362bfc7a8bfee0c24ad
    Id:46429de5666fc4c6cc570da4b100465d Cluster:aa83b0045fafa362bfc7a8bfee0c24ad
    Id:be0209387384299db34aaf8377c3964c Cluster:aa83b0045fafa362bfc7a8bfee0c24ad
    [root@heketi ~]# heketi-cli node info 189ee41572ebf0bf1e297de2302cfb39      # Node information
    [root@heketi ~]# heketi-cli volume create --size=2 --replica=2              # Create a 2 GB volume with 2 replicas (replica mode defaults to 3 replicas)

    [root@heketi ~]# heketi-cli volume list                                     # Volume list
    [root@heketi ~]# heketi-cli volume info 7da55685ebeeaaca60708cd797a5e391    # Volume details
    [root@servera ~]# gluster volume info                                       # View from a GlusterFS node

4.7 test verification

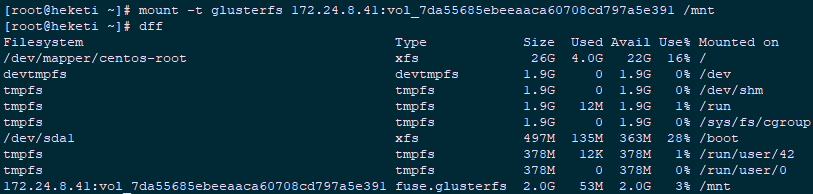

    [root@heketi ~]# yum -y install centos-release-gluster
    [root@heketi ~]# yum -y install glusterfs-fuse                      # Install glusterfs-fuse
    [root@heketi ~]# mount -t glusterfs 172.24.8.41:vol_7da55685ebeeaaca60708cd797a5e391 /mnt

    [root@heketi ~]# umount /mnt
    [root@heketi ~]# heketi-cli volume delete 7da55685ebeeaaca60708cd797a5e391     # Delete the volume once verification is complete

Reference: https://www.jianshu.com/p/1069ddaea78

https://www.cnblogs.com/panwenbin-logs/p/10231859.html

5. Kubernetes dynamic mounting of GlusterFS

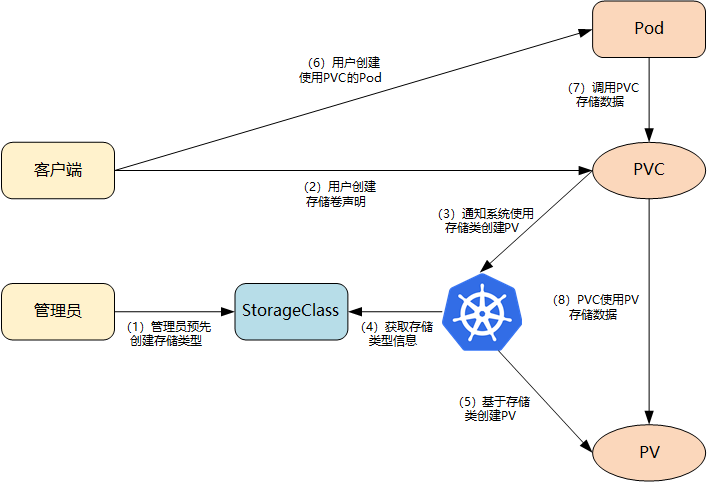

5.1 StorageClass dynamic storage

Kubernetes shared storage provisioning modes:

Static: the cluster administrator creates PVs manually and sets the characteristics of the back-end storage when defining each PV;

Dynamic: the cluster administrator does not create PVs manually but describes the back-end storage in a StorageClass, which marks it as a "Class". The PVC then states the storage class it requires, and the system automatically creates the PV and binds it to the PVC. A PVC can declare its Class as "" to indicate that it must not use dynamic mode.

The overall process of dynamic storage provisioning based on StorageClass is as follows:

- The cluster administrator creates the StorageClass in advance;

- The user creates a persistent volume claim (PVC: PersistentVolumeClaim) that uses the StorageClass;

- The PVC tells the system that a persistent volume (PV: PersistentVolume) is needed;

- The system reads the information in the StorageClass;

- Based on that information, the system automatically creates the PV required by the PVC in the background;

- The user creates a Pod that uses the PVC;

- The application in the Pod persists its data through the PVC;

- The PVC uses the PV for the actual data persistence.
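
Because the PV is created asynchronously, a script that creates a PVC usually has to wait for it to be bound before starting a Pod. A minimal sketch of such a wait, assuming `kubectl` is configured; `wait_pvc_bound` and `POLL_DELAY` are hypothetical names introduced for illustration.

```shell
# Hypothetical helper: poll a PVC until its phase is Bound.
# Usage: wait_pvc_bound NAME [NAMESPACE] [TRIES]
wait_pvc_bound() {
    local name="$1" ns="${2:-default}" tries="${3:-30}" phase="" i=0
    while [ "$i" -lt "$tries" ]; do
        # .status.phase is Pending until a PV has been provisioned and bound.
        phase=$(kubectl get pvc "$name" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
        [ "$phase" = Bound ] && return 0
        sleep "${POLL_DELAY:-2}"
        i=$((i + 1))
    done
    echo "PVC $ns/$name not Bound (last phase: ${phase:-unknown})" >&2
    return 1
}

# Example:
#   wait_pvc_bound my-claim default 60
```

If the claim stays Pending, `kubectl describe pvc` on it normally shows the provisioning error reported by the back end.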

Note: for the deployment of Kubernetes, please refer to attachment 003. Kubeadm deployment of Kubernetes.

5.2 define StorageClass

Keyword Description:

- provisioner: the storage provisioner, which must be changed to match the back-end storage in use;

- reclaimPolicy: the default is "Delete": after the PVC is deleted, the corresponding PV and the back-end volume and bricks (LVM) are deleted with it. When set to "Retain", the data is kept and must be cleaned up manually if it is no longer needed;

- resturl: the URL of the Heketi API service;

- restauthenabled: optional; the default is "false". It must be set to "true" when authentication is enabled on the Heketi service;

- restuser: optional; the user name to use when authentication is enabled;

- secretNamespace: optional; when authentication is enabled, the namespace of the Secret that holds the Heketi password;

- secretName: optional; when authentication is enabled, the name of the Secret resource in which the Heketi password is stored;

- clusterid: optional; a cluster id or a list of cluster ids in the format "id1,id2";

- volumetype: optional; sets the volume type and its parameters. If no volume type is given, the provisioner decides. For example, "volumetype: replicate:3" is a replicated volume with 3 replicas; "volumetype: disperse:4:2" is a dispersed volume, where "4" is the data count and "2" is the redundancy count; "volumetype: none" is a distributed volume.

Note: for the different GlusterFS volume types, see 004.RHGS-create volume.
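
To make the volumetype syntax concrete, the sketch below splits a value on ":" and prints what it requests. It covers only the three forms documented for the kubernetes.io/glusterfs provisioner (replicate:N, disperse:D:R, none); `describe_volumetype` is a hypothetical helper used purely for illustration.

```shell
# Hypothetical helper: explain a StorageClass volumetype value.
describe_volumetype() {
    local spec="$1" kind a b
    # Split "kind:a:b" on colons; missing fields are left empty.
    IFS=: read -r kind a b <<EOF
$spec
EOF
    case "$kind" in
        replicate) echo "replicated volume, $a replicas" ;;
        disperse)  echo "dispersed volume, $a data + $b redundancy" ;;
        none)      echo "distributed volume, no redundancy" ;;
        *)         echo "unknown volumetype: $spec" >&2; return 1 ;;
    esac
}

describe_volumetype replicate:3
describe_volumetype disperse:4:2
describe_volumetype none
```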

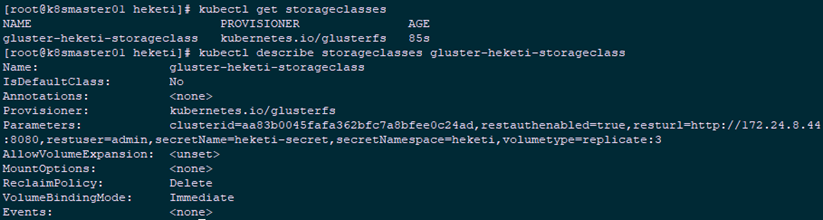

    [root@k8smaster01 ~]# kubectl create ns heketi                       # Create the namespace
    [root@k8smaster01 ~]# echo -n "admin123" | base64                    # Base64-encode the password
    YWRtaW4xMjM=
    [root@k8smaster01 ~]# mkdir -p heketi
    [root@k8smaster01 ~]# cd heketi/
    [root@k8smaster01 ~]# vi heketi-secret.yaml                          # Create a Secret to hold the password
    apiVersion: v1
    kind: Secret
    metadata:
      name: heketi-secret
      namespace: heketi
    data:
      # base64 encoded password. E.g.: echo -n "mypassword" | base64
      key: YWRtaW4xMjM=
    type: kubernetes.io/glusterfs
    [root@k8smaster01 heketi]# kubectl create -f heketi-secret.yaml      # Create the Secret
    [root@k8smaster01 heketi]# kubectl get secrets -n heketi
    NAME                  TYPE                                  DATA   AGE
    default-token-5sn5d   kubernetes.io/service-account-token   3      43s
    heketi-secret         kubernetes.io/glusterfs               1      5s
    [root@kubenode1 heketi]# vim gluster-heketi-storageclass.yaml        # Create the StorageClass
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: gluster-heketi-storageclass
    parameters:
      resturl: "http://172.24.8.44:8080"
      clusterid: "aa83b0045fafa362bfc7a8bfee0c24ad"
      restauthenabled: "true"             # Must be "true" if authentication is enabled in Heketi
      restuser: "admin"
      secretName: "heketi-secret"         # Name and namespace must match the Secret resource
      secretNamespace: "heketi"
      volumetype: "replicate:3"
    provisioner: kubernetes.io/glusterfs
    reclaimPolicy: Delete
    [root@k8smaster01 heketi]# kubectl create -f gluster-heketi-storageclass.yaml

Note: a StorageClass resource cannot be changed after creation. To modify it, delete it and create it again.

    [root@k8smaster01 heketi]# kubectl get storageclasses                # View confirmation
    NAME                          PROVISIONER               AGE
    gluster-heketi-storageclass   kubernetes.io/glusterfs   85s
    [root@k8smaster01 heketi]# kubectl describe storageclasses gluster-heketi-storageclass
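
The `-n` flag matters when encoding the password: without it, echo appends a newline that becomes part of the stored key, and authentication against Heketi then fails. A small sketch that shows the difference and decodes a value for checking; `encode_secret` and `decode_secret` are hypothetical helper names.

```shell
# printf '%s' never appends a newline, unlike plain echo.
encode_secret() { printf '%s' "$1" | base64; }
decode_secret() { printf '%s' "$1" | base64 --decode; }

encode_secret admin123            # prints YWRtaW4xMjM=
echo admin123 | base64            # prints YWRtaW4xMjMK (the trailing newline was encoded too)
decode_secret YWRtaW4xMjM=; echo  # prints admin123
```

Decoding the value stored in the Secret and comparing it with the key in heketi.json is a quick check when provisioning fails with an authentication error.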

5.3 definition of PVC

    [root@k8smaster01 heketi]# cat gluster-heketi-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: gluster-heketi-pvc
      annotations:
        volume.beta.kubernetes.io/storage-class: gluster-heketi-storageclass
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

Note: accessModes can be abbreviated as follows:

- ReadWriteOnce: abbreviated RWO; read-write, and can only be mounted by a single node;

- ReadOnlyMany: abbreviated ROX; read-only, and can be mounted by multiple nodes;

- ReadWriteMany: abbreviated RWX; read-write, and can be mounted by multiple nodes.

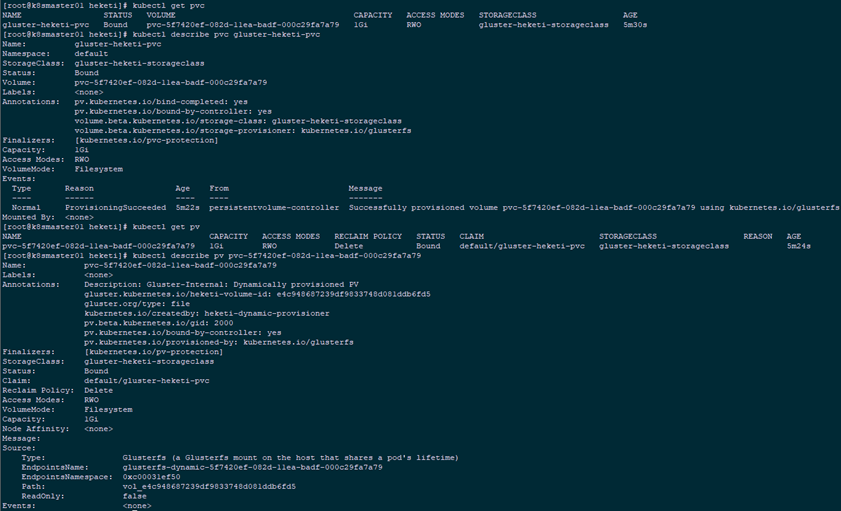

    [root@k8smaster01 heketi]# kubectl create -f gluster-heketi-pvc.yaml
    [root@k8smaster01 heketi]# kubectl get pvc
    [root@k8smaster01 heketi]# kubectl describe pvc gluster-heketi-pvc
    [root@k8smaster01 heketi]# kubectl get pv
    [root@k8smaster01 heketi]# kubectl describe pv pvc-5f7420ef-082d-11ea-badf-000c29fa7a79

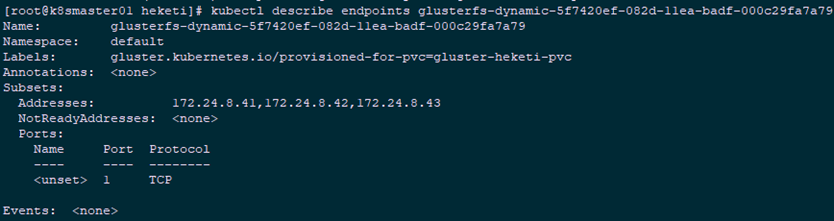

    [root@k8smaster01 heketi]# kubectl describe endpoints glusterfs-dynamic-5f7420ef-082d-11ea-badf-000c29fa7a79

Tip: the output above shows that the PVC status is Bound and its capacity is 1Gi. The PV details show, in addition to the capacity, the referenced StorageClass, the status, the reclaim policy, and the GlusterFS Endpoints and path. The Endpoints name has a fixed format, glusterfs-dynamic-PV_NAME, and the Endpoints resource specifies the actual addresses used to mount the storage.

5.4 verify the created resources

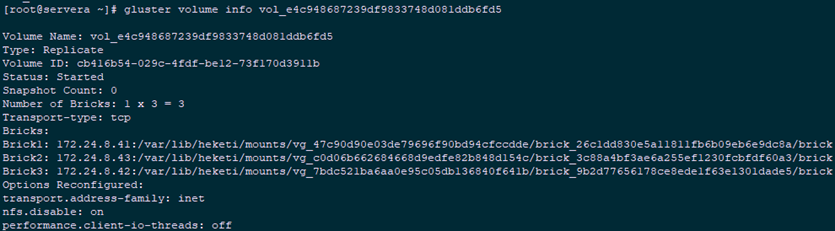

Check the resources created in 5.3:

- The volume and bricks have been created;

- The main mount point (communication endpoint) is node 172.24.8.41; the other two nodes are alternates;

- With three replicas, a brick is created on every node.

    [root@heketi ~]# heketi-cli topology info          # View from the Heketi host
    [root@serverb ~]# lsblk                            # View on a GlusterFS node
    [root@serverb ~]# df -hT                           # View on a GlusterFS node
    [root@servera ~]# gluster volume list              # View on a GlusterFS node
    [root@servera ~]# gluster volume info vol_e4c948687239df9833748d081ddb6fd5

5.5 Pod mount test

    [root@xxx ~]# yum -y install centos-release-gluster
    [root@xxx ~]# yum -y install glusterfs-fuse        # Install glusterfs-fuse

Tip: every Kubernetes node that needs to use GlusterFS volumes must have glusterfs-fuse installed in order to mount them, and its version should match that of the GlusterFS nodes.

    [root@k8smaster01 heketi]# vi gluster-heketi-pod.yaml
    kind: Pod
    apiVersion: v1
    metadata:
      name: gluster-heketi-pod
    spec:
      containers:
      - name: gluster-heketi-container
        image: busybox
        command:
        - sleep
        - "3600"
        volumeMounts:
        - name: gluster-heketi-volume          # Must match the name under volumes
          mountPath: "/pv-data"
          readOnly: false
      volumes:
      - name: gluster-heketi-volume
        persistentVolumeClaim:
          claimName: gluster-heketi-pvc        # Must match the name of the PVC created in 5.3
    [root@k8smaster01 heketi]# kubectl create -f gluster-heketi-pod.yaml     # Create the Pod in the same namespace as the PVC from 5.3

5.6 validation

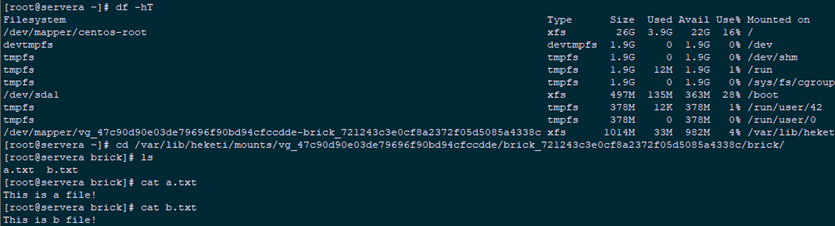

    [root@k8smaster01 heketi]# kubectl get pod | grep gluster
    gluster-heketi-pod   1/1   Running   0   2m43s
    [root@k8smaster01 heketi]# kubectl exec -it gluster-heketi-pod -- /bin/sh     # Enter the Pod and write test files
    / # cd /pv-data/
    /pv-data # echo "This is a file!" >> a.txt
    /pv-data # echo "This is b file!" >> b.txt
    /pv-data # ls
    a.txt b.txt
    [root@servera ~]# df -hT                   # On a GlusterFS node, look for the files written from the Pod
    [root@servera ~]# cd /var/lib/heketi/mounts/vg_47c90d90e03de79696f90bd94cfccdde/brick_721243c3e0cf8a2372f05d5085a4338c/brick/
    [root@servera brick]# ls
    [root@servera brick]# cat a.txt
    [root@servera brick]# cat b.txt

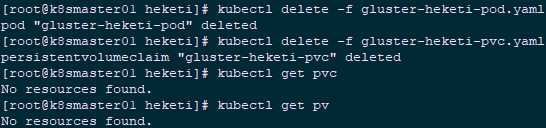

5.7 delete resources

    [root@k8smaster01 heketi]# kubectl delete -f gluster-heketi-pod.yaml
    [root@k8smaster01 heketi]# kubectl delete -f gluster-heketi-pvc.yaml
    [root@k8smaster01 heketi]# kubectl get pvc
    [root@k8smaster01 heketi]# kubectl get pv
    [root@servera ~]# gluster volume list
    No volumes present in cluster