1: Introduction to thread pools

With a thread pool, you can reuse threads and control the total number of threads. Without a thread pool, a new thread must be created for each task. Repeatedly creating threads is expensive, and too many threads occupy too much memory.

For example, opening a separate thread for each of 1000 tasks is far too expensive. Instead, we want a fixed number of threads to execute these 1000 tasks, which avoids the overhead of repeatedly creating and destroying threads.

2: Benefits and scenarios of using thread pools

1: Benefits

Eliminates the delay of creating a thread for every task, so responses are faster.

Makes rational use of CPU and memory, because the number of threads is kept under control.

Allows unified management of resources.

2: Applicable scenarios

When a server receives a large number of requests, a thread pool is a very good fit. It greatly reduces the number of threads created and destroyed and improves the server's efficiency.

In actual development, if you need to create more than 5 threads, consider using a thread pool to manage them.

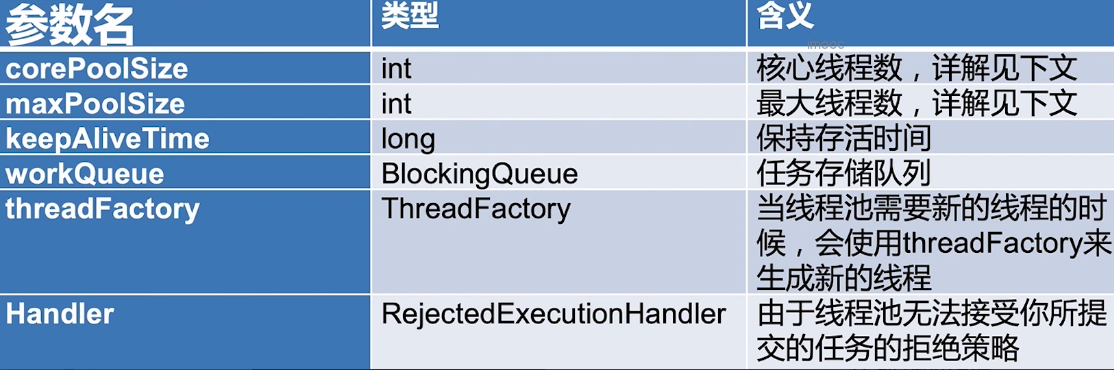

3: Thread pool parameters

1: corePoolSize: number of core threads

After the thread pool is initialized, by default it contains no threads. It waits for tasks to arrive and only then creates new threads to execute them. By default, core threads are kept alive once created, even when they are idle.
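The following sketch makes the lazy creation visible. The class name CorePoolDemo is made up for illustration. It builds a ThreadPoolExecutor with a core size of 3 and reads getPoolSize() before any task has arrived. It then calls prestartAllCoreThreads(), which starts all core threads immediately instead of waiting for tasks.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: shows that core threads are not created up front.
class CorePoolDemo {

    // Returns {threads before any task, threads started eagerly, threads afterwards}.
    static int[] poolSizes() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                3, 3, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        int before = pool.getPoolSize();              // 0: nothing created yet
        int started = pool.prestartAllCoreThreads();  // start all core threads now
        int after = pool.getPoolSize();
        pool.shutdown();                              // idle core threads exit
        return new int[] {before, started, after};
    }

    public static void main(String[] args) {
        int[] s = poolSizes();
        System.out.println("before=" + s[0] + " started=" + s[1] + " after=" + s[2]);
        // before=0 started=3 after=3
    }
}
```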

2: maxPoolSize: maximum number of threads

When the load is high, the thread pool may add extra threads beyond the core threads. The total number of threads can never exceed maxPoolSize (the constructor parameter is named maximumPoolSize).

3: threadFactory: the factory that creates new threads

New threads are created by a ThreadFactory. By default, Executors.defaultThreadFactory() is used. The threads it creates all belong to the same thread group, all have the priority NORM_PRIORITY, and none of them is a daemon thread. If you specify your own ThreadFactory, you can change the thread name, thread group, priority, and whether the thread is a daemon.
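A custom ThreadFactory only has to implement its single newThread(Runnable) method. The sketch below is an illustration, not a library class. NamedThreadFactory and the "order-worker" prefix are hypothetical names. The factory numbers its threads so they are easy to identify in logs and thread dumps.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical factory: names threads "<prefix>-1", "<prefix>-2", ... and sets daemon status.
class NamedThreadFactory implements ThreadFactory {
    private final String prefix;
    private final boolean daemon;
    private final AtomicInteger counter = new AtomicInteger(1);

    NamedThreadFactory(String prefix, boolean daemon) {
        this.prefix = prefix;
        this.daemon = daemon;
    }

    @Override
    public Thread newThread(Runnable r) {
        Thread t = new Thread(r, prefix + "-" + counter.getAndIncrement());
        t.setDaemon(daemon);
        t.setPriority(Thread.NORM_PRIORITY);
        return t;
    }
}

class ThreadFactoryDemo {

    // Runs one task on a pool that uses the custom factory and returns the worker's name.
    static String workerName() {
        ExecutorService pool = Executors.newFixedThreadPool(2, new NamedThreadFactory("order-worker", false));
        try {
            Future<String> name = pool.submit(() -> Thread.currentThread().getName());
            return name.get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(workerName()); // order-worker-1
    }
}
```

You only need to pass the factory to Executors.newFixedThreadPool(int, ThreadFactory) or to a ThreadPoolExecutor constructor. The pool then calls newThread whenever it needs a new worker.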

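4: workQueue: work queue types

Direct handoff: SynchronousQueue. This queue has no capacity and cannot store tasks. Each task must be handed directly to a thread.

Unbounded queue: LinkedBlockingQueue. When it is created without a capacity argument, its capacity is Integer.MAX_VALUE, so in practice it never fills up.

Bounded queue: ArrayBlockingQueue. Its capacity is fixed when it is created.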

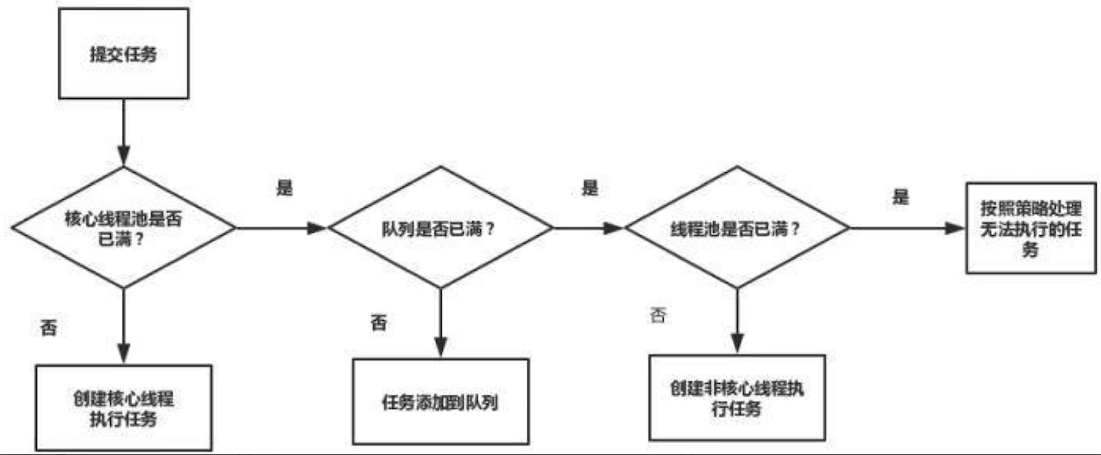
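The difference between the three queues shows up when no thread is taking tasks out of them. The sketch below uses QueueCapacityDemo, a hypothetical name. It offers five tasks to each queue without blocking and counts how many were accepted. With no waiting consumer, SynchronousQueue accepts none.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.SynchronousQueue;

// Hypothetical demo: how many tasks each queue type holds when nobody takes from it.
class QueueCapacityDemo {

    // Offers 'attempts' no-op tasks without blocking and counts the accepted ones.
    static int accepted(BlockingQueue<Runnable> queue, int attempts) {
        int count = 0;
        for (int i = 0; i < attempts; i++) {
            if (queue.offer(() -> { })) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println("SynchronousQueue:      " + accepted(new SynchronousQueue<>(), 5));     // 0
        System.out.println("ArrayBlockingQueue(3): " + accepted(new ArrayBlockingQueue<>(3), 5));  // 3
        System.out.println("LinkedBlockingQueue:   " + accepted(new LinkedBlockingQueue<>(), 5)); // 5
        // Default capacity of an unbounded LinkedBlockingQueue:
        System.out.println(new LinkedBlockingQueue<Runnable>().remainingCapacity()); // 2147483647
    }
}
```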

4: How the thread pool works

If the number of threads is less than corePoolSize, a new thread is created to run the new task, even if other worker threads are idle.

If the number of threads is greater than or equal to corePoolSize, the task is put in the queue, as long as the queue is not full.

If the queue is full and the number of threads is less than maxPoolSize, a new thread is created to run the task.

If the queue is full and the number of threads has reached maxPoolSize, the task is rejected.

Example: the core pool size is 5, the maximum pool size is 10, and the queue capacity is 100.

As tasks arrive, the pool first creates up to 5 threads. After that, new tasks are added to the queue until it holds 100.

When the queue is full, additional threads are created, up to the maximum of 10. If yet another task arrives after that, it is rejected.
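You can reproduce this example with a ThreadPoolExecutor configured with core size 5, maximum size 10, and queue capacity 100. The class name PoolGrowthDemo and the 60-second keep-alive are assumptions. In this sketch every task blocks on a CountDownLatch, so no thread ever frees up and the counts are deterministic:

- 5 core threads are created.
- 100 tasks are queued.
- 5 extra threads are created.
- The 111th task is rejected by the default AbortPolicy.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo of core=5, max=10, queue capacity=100.
class PoolGrowthDemo {

    // Submits 'tasks' blocking tasks; returns {poolSize, queuedTasks, rejectedTasks}.
    static int[] submit(int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                5, 10, 60L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(100));
        int rejected = 0;
        for (int i = 0; i < tasks; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await(); // keep every worker busy until counting is done
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++; // default AbortPolicy throws when queue and pool are both full
            }
        }
        int[] result = {pool.getPoolSize(), pool.getQueue().size(), rejected};
        release.countDown(); // let the accepted tasks finish
        pool.shutdown();
        return result;
    }

    public static void main(String[] args) {
        int[] r = submit(111);
        System.out.println("threads=" + r[0] + " queued=" + r[1] + " rejected=" + r[2]);
        // threads=10 queued=100 rejected=1
    }
}
```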

Characteristics of adding and removing threads

By setting corePoolSize equal to maximumPoolSize, you can create a fixed-size thread pool.

The thread pool prefers to keep the number of threads small and only adds threads when the load becomes heavy.

By setting maximumPoolSize to a very high value such as Integer.MAX_VALUE, you allow the thread pool to accommodate any number of concurrent tasks.

Threads beyond corePoolSize are created only when the queue is full. So if you use an unbounded queue (such as LinkedBlockingQueue), the number of threads never exceeds corePoolSize.
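Here is a minimal sketch of the last point. It assumes a pool with core size 2 and maximum size 10, backed by an unbounded LinkedBlockingQueue. UnboundedQueueDemo is a hypothetical name. Even with 1000 blocked tasks, the pool never grows past the core size, because the queue never reports itself as full.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: maximumPoolSize is never reached with an unbounded queue.
class UnboundedQueueDemo {

    // Submits 'tasks' blocking tasks and returns the number of threads created.
    static int poolSizeAfter(int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 10, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<>());
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // block so threads never become free
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int size = pool.getPoolSize();
        release.countDown();
        pool.shutdown();
        return size;
    }

    public static void main(String[] args) {
        System.out.println(poolSizeAfter(1000)); // 2: the other 998 tasks wait in the queue
    }
}
```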

5: Creating thread pools automatically

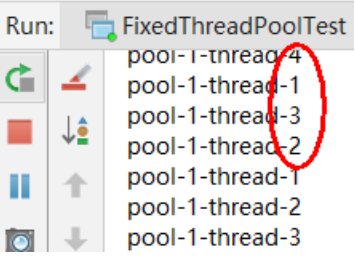

1: newFixedThreadPool

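The Executors factory methods are convenient, but creating a pool manually is usually better. It makes the pool's running rules explicit and avoids the risk of resource exhaustion that each factory method carries, as described below.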

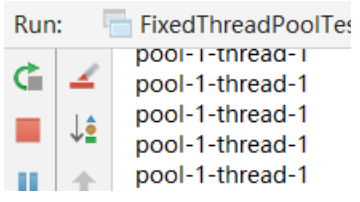

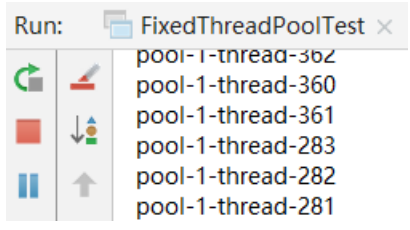

threadpool/FixedThreadPoolTest.java

No matter how many tasks are submitted, only four threads ever execute them.

```java
package threadpool;

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/**
 * Description: demonstrates newFixedThreadPool
 */
public class FixedThreadPoolTest {

    public static void main(String[] args) {
        ExecutorService executorService = Executors.newFixedThreadPool(4);
        for (int i = 0; i < 1000; i++) {
            executorService.execute(new Task());
        }
        // Stop accepting new tasks; queued tasks still run, then the JVM can exit
        executorService.shutdown();
    }
}

class Task implements Runnable {

    @Override
    public void run() {
        try {
            Thread.sleep(500);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        // Prints names such as pool-1-thread-3; only four distinct names appear
        System.out.println(Thread.currentThread().getName());
    }
}
```

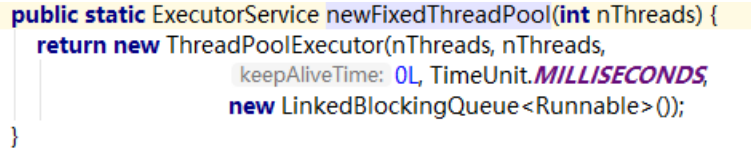

Source code interpretation

In the source, newFixedThreadPool(n) calls the ThreadPoolExecutor constructor. The first parameter (corePoolSize) and the second (maximumPoolSize) are both n, so the pool never has more threads than the core size. Because there are never any extra threads to reclaim, the third parameter, keepAliveTime, is 0. The work queue is an unbounded LinkedBlockingQueue, so no new threads are created no matter how many tasks are placed in it.

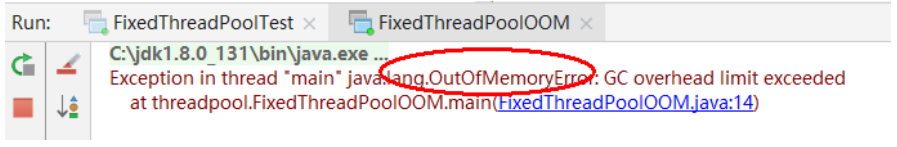
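You can read the configuration described above back from the pool. This sketch assumes, as in current OpenJDK releases, that Executors.newFixedThreadPool returns a plain ThreadPoolExecutor, so the cast succeeds. In those releases the call is equivalent to new ThreadPoolExecutor(n, n, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>()). FixedPoolConfig is a hypothetical class name.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo; assumes newFixedThreadPool returns a ThreadPoolExecutor (true in OpenJDK).
class FixedPoolConfig {

    static String describe(int n) {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newFixedThreadPool(n);
        try {
            return "core=" + pool.getCorePoolSize()
                    + " max=" + pool.getMaximumPoolSize()
                    + " keepAliveMs=" + pool.getKeepAliveTime(TimeUnit.MILLISECONDS)
                    + " unboundedLinkedQueue=" + (pool.getQueue() instanceof LinkedBlockingQueue
                            && pool.getQueue().remainingCapacity() == Integer.MAX_VALUE);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(describe(4));
        // core=4 max=4 keepAliveMs=0 unboundedLinkedQueue=true
    }
}
```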

If the threads process tasks slowly, more and more tasks accumulate in the unbounded queue. They occupy a lot of memory and can eventually cause an OutOfMemoryError (OOM).

threadpool/FixedThreadPoolOOM.java

package threadpool;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

/**

* Description: demonstrates the error condition of newFixedThreadPool

*/

public class FixedThreadPoolOOM {

private static ExecutorService executorService = Executors.newFixedThreadPool(1);

public static void main(String[] args) {

for (int i = 0; i < Integer.MAX_VALUE; i++) {

executorService.execute(new SubThread());

}

}

}

class SubThread implements Runnable {

@Override

public void run() {

try {

//The slower the processing, the better. Demonstrate memory overflow

Thread.sleep(1000000000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

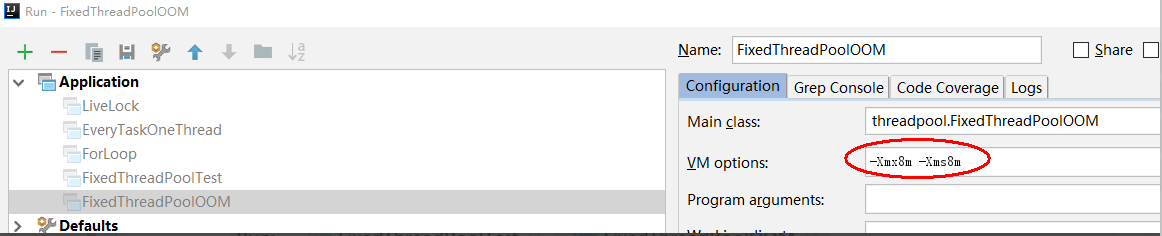

}When testing, you can set the memory smaller: Run - > Run.. - > Edit configurations

2: newSingleThreadExecutor

A thread pool with only one thread.

threadpool/SingleThreadExecutor.java

```java
package threadpool;

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class SingleThreadExecutor {

    public static void main(String[] args) {
        ExecutorService executorService = Executors.newSingleThreadExecutor();
        for (int i = 0; i < 1000; i++) {
            // Task is the class defined in FixedThreadPoolTest.java (same package)
            executorService.execute(new Task());
        }
        executorService.shutdown();
    }
}
```

Its principle is the same as that of newFixedThreadPool, except that the number of threads is fixed at 1. It also uses an unbounded queue, so when requests pile up it can likewise occupy a lot of memory.

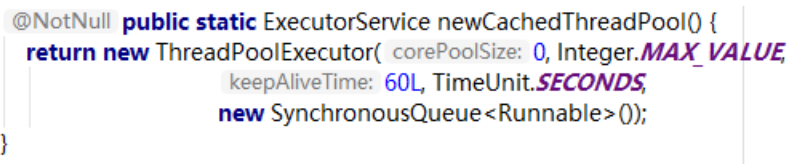
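Because there is only one thread, tasks run one at a time, in the order they were submitted. The sketch below records each task's index; SingleThreadOrder is a hypothetical name. It does not cast the executor to ThreadPoolExecutor. In OpenJDK, newSingleThreadExecutor returns a wrapper around the pool so that its size cannot be changed later, and such a cast would fail.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: a single worker thread preserves submission order.
class SingleThreadOrder {

    static List<Integer> runInOrder(int tasks) {
        List<Integer> order = Collections.synchronizedList(new ArrayList<>());
        ExecutorService executor = Executors.newSingleThreadExecutor();
        for (int i = 0; i < tasks; i++) {
            final int id = i;
            executor.execute(() -> order.add(id));
        }
        executor.shutdown();
        try {
            executor.awaitTermination(10, TimeUnit.SECONDS); // wait for all tasks to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(runInOrder(5)); // [0, 1, 2, 3, 4]
    }
}
```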

3: newCachedThreadPool

A cacheable thread pool: the number of threads is unbounded, and surplus threads are reclaimed automatically.

threadpool/CachedThreadPool.java

```java
package threadpool;

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class CachedThreadPool {

    public static void main(String[] args) {
        ExecutorService executorService = Executors.newCachedThreadPool();
        for (int i = 0; i < 1000; i++) {
            // Task is the class defined in FixedThreadPoolTest.java (same package)
            executorService.execute(new Task());
        }
        executorService.shutdown();
    }
}
```

Source code interpretation

The work queue is a SynchronousQueue, a direct-handoff queue with a capacity of 0, so tasks cannot be stored in it. Each incoming task is handed directly to a thread for execution. The core pool size is 0 and the maximum number of threads is Integer.MAX_VALUE, which can be treated as having no upper limit. Whenever no idle thread is available, a new thread is created to run the task. Threads that stay idle for 60 seconds are reclaimed.

Disadvantage: the second parameter, maximumPoolSize, is set to Integer.MAX_VALUE. A very large number of threads may be created, which can cause an OOM.
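You can observe both points directly. This sketch assumes, as in OpenJDK, that newCachedThreadPool returns new ThreadPoolExecutor(0, Integer.MAX_VALUE, 60L, TimeUnit.SECONDS, new SynchronousQueue<Runnable>()). It first reads back that configuration. It then submits tasks that all block, so every submission finds no idle thread and the pool creates a new thread for each one. CachedPoolDemo is a hypothetical name.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo; assumes newCachedThreadPool returns a ThreadPoolExecutor (true in OpenJDK).
class CachedPoolDemo {

    // Reads back the configuration chosen by newCachedThreadPool.
    static String describe() {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        try {
            return "core=" + pool.getCorePoolSize()
                    + " max=" + pool.getMaximumPoolSize()
                    + " keepAliveSec=" + pool.getKeepAliveTime(TimeUnit.SECONDS)
                    + " synchronousQueue=" + (pool.getQueue() instanceof SynchronousQueue);
        } finally {
            pool.shutdown();
        }
    }

    // Submits tasks that all block, so no thread is ever idle; returns the thread count.
    static int threadsFor(int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int size = pool.getPoolSize();
        release.countDown();
        pool.shutdown();
        return size;
    }

    public static void main(String[] args) {
        System.out.println(describe());
        // core=0 max=2147483647 keepAliveSec=60 synchronousQueue=true
        System.out.println(threadsFor(50)); // 50: one new thread per blocked task
    }
}
```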

4: newScheduledThreadPool

A thread pool that supports delayed and periodic task execution.
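Besides periodic execution, ScheduledExecutorService.schedule runs a task once after a delay and returns a ScheduledFuture holding the task's result. In the sketch below (ScheduleOnceDemo is a hypothetical name), the task reports how long it waited. A scheduled task never runs before its delay has elapsed, so the value is at least the requested delay.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical demo of a one-shot delayed task.
class ScheduleOnceDemo {

    // Returns how many milliseconds passed before the delayed task ran.
    static long elapsedMillis(long delayMillis) {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        long start = System.nanoTime();
        ScheduledFuture<Long> future = scheduler.schedule(
                () -> TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start),
                delayMillis, TimeUnit.MILLISECONDS);
        try {
            return future.get(); // blocks until the task has run
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            scheduler.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("ran after about " + elapsedMillis(200) + " ms");
    }
}
```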

threadpool/ScheduledThreadPoolTest.java

```java
package threadpool;

import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class ScheduledThreadPoolTest {

    public static void main(String[] args) {
        ScheduledExecutorService threadPool = Executors.newScheduledThreadPool(10);

        // Run once after a 5-second delay:
        // threadPool.schedule(new Task(), 5, TimeUnit.SECONDS);

        // Wait 1 second before the first run, then start a run every 3 seconds
        threadPool.scheduleAtFixedRate(new Task(), 1, 3, TimeUnit.SECONDS);
    }
}
```

6: Creating thread pools manually

1: Creating a thread pool correctly

Set the thread pool's parameters according to the business scenario. Examples include how much memory it may use (mainly through the queue capacity), the thread names, and how a rejected task is logged.
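The sketch below shows one such manually created pool. The following choices are all illustrative assumptions, not recommended values:

- 4 core threads, 8 maximum, and a queue of 200
- a 30-second keep-alive
- the "report-" thread name prefix
- a rejection handler that logs before failing

The point is that every choice is visible in one place. The bounded ArrayBlockingQueue is what protects against the unbounded-queue OOM shown for newFixedThreadPool.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example configuration; tune every value for your own workload.
class ManualPoolFactory {

    static ThreadPoolExecutor create() {
        // Hypothetical naming scheme: report-1, report-2, ...
        AtomicInteger counter = new AtomicInteger(1);
        ThreadFactory factory = r -> new Thread(r, "report-" + counter.getAndIncrement());
        ThreadPoolExecutor.AbortPolicy abort = new ThreadPoolExecutor.AbortPolicy();

        return new ThreadPoolExecutor(
                4,                              // corePoolSize
                8,                              // maximumPoolSize
                30L, TimeUnit.SECONDS,          // idle threads above the core size exit after 30 s
                new ArrayBlockingQueue<>(200),  // bounded queue: limits memory used by waiting tasks
                factory,                        // meaningful names in logs and thread dumps
                (task, executor) -> {           // log the rejection, then fail like AbortPolicy
                    System.err.println("Task rejected: queued=" + executor.getQueue().size()
                            + ", active=" + executor.getActiveCount());
                    abort.rejectedExecution(task, executor);
                });
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = create();
        pool.execute(() -> System.out.println("running on " + Thread.currentThread().getName()));
        pool.shutdown();
    }
}
```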

2: Choosing the number of threads

CPU-intensive tasks (such as encryption or computing hashes): the optimal number of threads is 1 to 2 times the number of CPU cores.

Time-consuming I/O tasks (reading and writing databases, files, or the network): the optimal number of threads is usually many times the number of CPU cores. Tune it based on JVM monitoring of how busy the threads are. That way, while some threads wait for I/O, others can keep the CPU fully used.

A common rule of thumb: number of threads = number of CPU cores * (1 + average waiting time / average working time)
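Here is a worked example of the formula with made-up timings. On an 8-core machine, a task that waits 90 ms for the database and computes for 10 ms gives 8 * (1 + 90 / 10) = 80 threads. A purely CPU-bound task (no waiting) gives 8 threads. The hypothetical helper below does the same arithmetic. Treat its result as a starting point to tune with monitoring, not a final answer.

```java
// Hypothetical helper for the sizing rule of thumb:
// threads = cores * (1 + average waiting time / average working time)
class PoolSizing {

    static int threadCount(int cores, double avgWaitMillis, double avgWorkMillis) {
        return (int) Math.round(cores * (1 + avgWaitMillis / avgWorkMillis));
    }

    public static void main(String[] args) {
        System.out.println(threadCount(8, 90, 10)); // 80: I/O-heavy task
        System.out.println(threadCount(8, 0, 10));  // 8: CPU-bound task
        // Same I/O-heavy timings on the current machine:
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println(threadCount(cores, 90, 10));
    }
}
```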

7: Rejection policies

1: When tasks are rejected

1) When the Executor has been shut down, newly submitted tasks are rejected.

2) When the Executor uses finite bounds for both the maximum number of threads and the work queue capacity, and both are saturated.
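Case 1 is easy to demonstrate. After shutdown(), execute() goes straight to the rejection handler, which for the default AbortPolicy throws RejectedExecutionException. RejectAfterShutdown is a hypothetical name.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;

// Hypothetical demo: submissions after shutdown() are rejected.
class RejectAfterShutdown {

    static boolean rejectedAfterShutdown() {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        pool.shutdown();
        try {
            pool.execute(() -> System.out.println("never runs"));
            return false;
        } catch (RejectedExecutionException e) {
            return true; // thrown by the default AbortPolicy
        }
    }

    public static void main(String[] args) {
        System.out.println(rejectedAfterShutdown()); // true
    }
}
```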

2: AbortPolicy

Throws a RejectedExecutionException directly, so the submitter knows the task was not accepted.

3: DiscardPolicy

The thread pool silently discards the new task without any notification.

4: DiscardOldestPolicy

Discards the task that has been waiting in the queue the longest, then retries submitting the new task.

5: CallerRunsPolicy

When the thread pool cannot accept a task, the thread that submitted the task runs it itself. This avoids discarding tasks. It also slows down submission, because the submitter is busy running the task, which gives the thread pool time to catch up.
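To compare the four policies side by side, this sketch saturates a pool that has one thread and a queue of capacity 1. RejectionPolicyDemo is a hypothetical name. Task A occupies the thread, task B fills the queue, and task C triggers the policy. The returned list records which tasks actually ran, in order, plus "exception" if execute() threw.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: what happens to a third task under each rejection policy.
class RejectionPolicyDemo {

    static List<String> run(RejectedExecutionHandler policy) {
        List<String> ran = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new ArrayBlockingQueue<>(1), policy);

        pool.execute(() -> {                  // A occupies the only thread
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            ran.add("A");
        });
        pool.execute(() -> ran.add("B"));     // B fills the queue
        try {
            pool.execute(() -> ran.add("C")); // C hits the rejection policy
        } catch (RejectedExecutionException e) {
            ran.add("exception");
        }

        release.countDown();
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(ran);
    }

    public static void main(String[] args) {
        System.out.println(run(new ThreadPoolExecutor.AbortPolicy()));         // [exception, A, B]
        System.out.println(run(new ThreadPoolExecutor.DiscardPolicy()));       // [A, B]
        System.out.println(run(new ThreadPoolExecutor.DiscardOldestPolicy())); // [A, C]
        System.out.println(run(new ThreadPoolExecutor.CallerRunsPolicy()));    // [C, A, B]
    }
}
```

The results match the descriptions above:

- AbortPolicy reports the failure to the caller.
- DiscardPolicy drops C silently.
- DiscardOldestPolicy drops the queued B to make room for C.
- CallerRunsPolicy runs C on the submitting (main) thread before A and B finish.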

8: What happens when a task is submitted to a full thread pool

With an unbounded LinkedBlockingQueue, a "full" pool is not a problem. Tasks keep being added to the queue and wait there for execution, because an unbounded LinkedBlockingQueue can be regarded as an infinite queue that stores tasks indefinitely (as long as memory allows).

With a bounded queue such as ArrayBlockingQueue, the task is added to the queue first. If the queue is full and the pool has reached maxPoolSize, the rejection policy (a RejectedExecutionHandler) handles the task. The default is AbortPolicy.

Saturation policies of the thread pool:

When the blocking queue is full and there are no idle worker threads, any further submitted task must be handled by some policy. The thread pool provides four policies: AbortPolicy, DiscardPolicy, DiscardOldestPolicy, and CallerRunsPolicy (see section 7).