Hive installation and deployment

1, Single-node installation of Apache Hive

1. Environment dependencies

- CentOS 7, JDK 8, a running Apache Hadoop cluster, and a running MySQL 5.7 instance (with remote connection permissions configured in MySQL)

2. Software download

- Download Apache Hive version 2.3.6. All Apache Hive releases are available at http://archive.apache.org/dist/hive/

- Create the /hive directory and upload apache-hive-2.3.6-bin.tar.gz to it

- Unpack the Hive installation package

# tar -zxvf apache-hive-2.3.6-bin.tar.gz
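The download and unpack steps can also be scripted. The following is only a minimal sketch. It assumes that the archive keeps each release under hive-<version>/ with a tarball named apache-hive-<version>-bin.tar.gz (check the directory listing before relying on this) and that wget is installed. The function names are made up for this example and are not part of Hive.

```shell
#!/usr/bin/env bash
# Build the download URL for a Hive binary release.
# Assumption: the archive layout is hive-<version>/apache-hive-<version>-bin.tar.gz.
hive_tarball_url() {
  local version="$1"
  printf 'http://archive.apache.org/dist/hive/hive-%s/apache-hive-%s-bin.tar.gz\n' \
    "$version" "$version"
}

# Download the tarball into a target directory (default /hive) and unpack it there.
fetch_and_unpack_hive() {
  local version="$1"
  local dest="${2:-/hive}"
  mkdir -p "$dest" || return 1
  wget -P "$dest" "$(hive_tarball_url "$version")" || return 1
  tar -zxf "$dest/apache-hive-$version-bin.tar.gz" -C "$dest"
}
```

After sourcing the file, running fetch_and_unpack_hive 2.3.6 /hive would perform the same steps as the manual commands above.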

3. Environment configuration

- Add the system environment variable HIVE_HOME and modify the PATH variable

# vim /etc/profile

export JAVA_HOME=/usr/local/src/jdk8
export JRE_HOME=${JAVA_HOME}/jre
export CLASS_PATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib
export SPARK_HOME=/spark/spark2.4.8
export HADOOP_HOME=/hadoop/hadoop-2.7.7
export HIVE_HOME=/hive/apache-hive-2.3.6-bin
export PATH=$PATH:$JAVA_HOME/bin:$SPARK_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin

# Save and exit, then reload the profile so the changes take effect
source /etc/profile
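Once the profile has been reloaded, it is worth checking that the variables really arrived in the current shell. The helper functions below are hypothetical names for a rough sketch of such a check. They use only shell builtins.

```shell
# Return success if the directory given as $1 is an entry of PATH.
path_contains() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Report whether HIVE_HOME is set and its bin directory is on PATH.
check_hive_env() {
  if [ -z "${HIVE_HOME:-}" ]; then
    echo "HIVE_HOME is not set"
    return 1
  fi
  if ! path_contains "$HIVE_HOME/bin"; then
    echo "$HIVE_HOME/bin is not on PATH"
    return 1
  fi
  echo "HIVE_HOME=$HIVE_HOME and PATH look consistent"
}
```

Calling check_hive_env right after source /etc/profile gives a quick yes or no before moving on to the Hive configuration.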

4. Configuration file

- In the conf directory under the installation path, create the configuration file hive-site.xml and fill in the MySQL connection information. Inside the XML file, each & in the JDBC URL must be written as &amp;

touch hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://192.168.230.132:3306/hive?createDatabaseIfNotExist=true&amp;verifyServerCertificate=false&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
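XML treats & as the start of an entity reference, so a JDBC URL with several query parameters has to use &amp; between them when it is stored in hive-site.xml. A small helper along these lines can do the escaping before the value is pasted in. The name xml_escape_amp is invented for this example.

```shell
# Escape bare ampersands so a JDBC URL can be pasted into an XML <value>.
# Run it only on an unescaped URL: an existing "&amp;" would be escaped a second time.
xml_escape_amp() {
  printf '%s\n' "$1" | sed 's/&/\&amp;/g'
}
```

For example, xml_escape_amp 'jdbc:mysql://192.168.230.132:3306/hive?createDatabaseIfNotExist=true&useSSL=false' prints the URL in a form that is safe to place inside the <value> element.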

5. Add MySQL driver

- Hive needs to read from and write to MySQL, so copy the MySQL driver package mysql-connector-java-5.1.40-bin.jar into the lib directory under the Hive installation root

6. Copy the configuration files of the Hadoop cluster

- Copy the core-site.xml and hdfs-site.xml configuration files of the corresponding Hadoop cluster into the conf directory under the Hive installation directory.

6.5. If Hive is deployed as a cluster, distribute the files to the other nodes

# Copy Hive to the other nodes
scp -r apache-hive-2.3.6-bin root@hadoop2:/hive
scp -r apache-hive-2.3.6-bin root@hadoop3:/hive
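With more than a couple of nodes, the scp commands are easier to manage as a loop. The sketch below assumes the same source directory and target path as above. The function name and the HIVE_SRC, HIVE_DEST and DRY_RUN variables are made up for this example.

```shell
# Copy the unpacked Hive directory to each host given as an argument.
# With DRY_RUN=1 the scp commands are only printed, not executed.
distribute_hive() {
  local src="${HIVE_SRC:-apache-hive-2.3.6-bin}"
  local dest="${HIVE_DEST:-/hive}"
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $src root@$host:$dest"
    else
      scp -r "$src" "root@$host:$dest" || return 1
    fi
  done
}
```

Running it once with DRY_RUN=1 first, for example DRY_RUN=1 distribute_hive hadoop2 hadoop3, shows exactly which commands would be issued.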

7. Verify Hive

- Check the installed version with hive --service version

[root@localhost bin]# hive --service version
Hive 2.3.6
Git git://HW13934/Users/gates/tmp/hive-branch-2.3/hive -r 2c2fdd524e8783f6e1f3ef15281cc2d5ed08728f
Compiled by gates on Tue Aug 13 11:56:35 PDT 2019
From source with checksum c44b0b7eace3e81ba3cf64e7c4ea3b19
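In a setup script, the version check can be automated by extracting the number from the first line of that output. A possible sketch, with an invented function name:

```shell
# Print the version number from `hive --service version` output read on stdin.
# It looks for the first line that starts with "Hive " and prints its second field.
parse_hive_version() {
  awk '/^Hive /{ print $2; exit }'
}
```

A typical use would be hive --service version 2>/dev/null | parse_hive_version, followed by a comparison of the result with the expected release.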

8. Initialize the metastore database

- In Hive 2.x, the MySQL metastore database must be initialized before first use. Run schematool -dbType mysql -initSchema from the bin directory

[root@localhost bin]# schematool -dbType mysql -initSchema
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/hive/apache-hive-2.3.6-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/hadoop/hadoop-2.7.7/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Metastore connection URL: jdbc:mysql://192.168.230.132:3306/hive?createDatabaseIfNotExist=true&verifyServerCertificate=false&useSSL=false
Metastore Connection Driver : com.mysql.jdbc.Driver
Metastore connection User: root
Starting metastore schema initialization to 2.3.0
Initialization script hive-schema-2.3.0.mysql.sql
Initialization script completed
schemaTool completed
[root@localhost bin]#
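After initialization, schematool can also report the schema version it finds in the metastore through its -info option. The wrapper below is only a sketch with an invented function name. It fails with a clear message when the Hive bin directory is not on PATH, instead of a bare "command not found".

```shell
# Show the metastore schema version, or fail clearly if Hive is not on PATH.
show_metastore_schema() {
  if ! command -v schematool >/dev/null 2>&1; then
    echo "schematool not found; is \$HIVE_HOME/bin on PATH?" >&2
    return 127
  fi
  schematool -dbType mysql -info
}
```

If the reported schema version does not match the Hive release, the metastore was probably initialized by a different Hive version.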

9. Start Hive client

- Go to the bin directory and run the hive command

[root@localhost bin]# hive
which: no hbase in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/src/jdk8/bin:/spark/spark2.4.8/bin:/hadoop/hadoop-2.7.7/bin:/hadoop/hadoop-2.7.7/sbin:/hive/apache-hive-2.3.6-bin/bin:/root/bin)
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/hive/apache-hive-2.3.6-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/hadoop/hadoop-2.7.7/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/hive/apache-hive-2.3.6-bin/lib/hive-common-2.3.6.jar!/hive-log4j2.properties Async: true
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
hive> show databases;
OK
default
Time taken: 3.055 seconds, Fetched: 1 row(s)
hive>

10. Exit the Hive client

- Type exit; at the hive prompt

hive> exit;

Remarks

- Create the HDFS paths required by the Hive configuration and grant group write permission on them. The commands below use /usr/hive/..., while hive-site.xml above sets hive.metastore.warehouse.dir to /user/hive/warehouse, so adjust the paths to match your own configuration

[root@localhost bin]# hdfs dfs -mkdir -p /usr/hive/warehouse
[root@localhost bin]# hdfs dfs -mkdir -p /usr/hive/tmp
[root@localhost bin]# hdfs dfs -mkdir -p /usr/hive/log
[root@localhost bin]# hdfs dfs -chmod g+w /usr/hive/warehouse
[root@localhost bin]# hdfs dfs -chmod g+w /usr/hive/tmp
[root@localhost bin]# hdfs dfs -chmod g+w /usr/hive/log

2, Starting HiveServer2

1. Create the log directory

[root@localhost apache-hive-2.3.6-bin]# mkdir log

2. Modify Hadoop configuration

Both core-site.xml and hdfs-site.xml of the Hadoop cluster need to be modified. Restart the Hadoop cluster after making the changes.

- Modify the hdfs-site.xml configuration file of the Hadoop cluster: add one property to enable WebHDFS

<property>
  <name>dfs.webhdfs.enabled</name>
  <value>true</value>
</property>

- Modify the core-site.xml configuration file of the Hadoop cluster: add two properties that set the proxy user for the Hadoop cluster

<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>
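The user name is part of the property name: hadoop.proxyuser.root.hosts and hadoop.proxyuser.root.groups apply to the user root, which is the account that starts HiveServer2 in this walkthrough. If HiveServer2 ran under another account, the properties would have to carry that name instead. The helper below has an invented name and is only a sketch. It prints the two properties for any user, keeping the permissive * values used above.

```shell
# Print the two core-site.xml proxy-user properties for the given user name.
# The value "*" allows any host and any group, as in the configuration above.
proxyuser_properties() {
  local user="$1"
  local suffix
  for suffix in hosts groups; do
    printf '<property>\n  <name>hadoop.proxyuser.%s.%s</name>\n  <value>*</value>\n</property>\n' \
      "$user" "$suffix"
  done
}
```

For example, proxyuser_properties hive would print the pair needed if the service ran as a user named hive.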

- Restart the Hadoop service

- Start the HiveServer2 service

[root@localhost bin]# nohup hiveserver2 1>/hive/apache-hive-2.3.6-bin/log/hiveserver.log 2>/hive/apache-hive-2.3.6-bin/log/hiveserver.err &
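The nohup command returns immediately, but HiveServer2 may need some time before it accepts connections on port 10000, the port that clients connect to in this setup. A client started too early simply gets a connection error. The function below is a sketch that polls the port. Its name is invented for this example, and it relies on bash's /dev/tcp redirection, so it needs bash rather than a plain POSIX sh.

```shell
#!/usr/bin/env bash
# Wait until host:port accepts TCP connections or the timeout (in seconds) expires.
# Returns 0 as soon as a connection succeeds, 1 if the timeout is reached.
wait_for_port() {
  local host="$1"
  local port="$2"
  local timeout="${3:-60}"
  local waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # The subshell opens and immediately closes a TCP connection.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}
```

A call such as wait_for_port 192.168.230.132 10000 120 can be placed in front of the first client connection in a startup script.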

-

Start the hive interface of beeline client, hue and HDP

[root@localhost bin]# beeline -u jdbc:hive2://192.168.230.132:10000 -n root

-

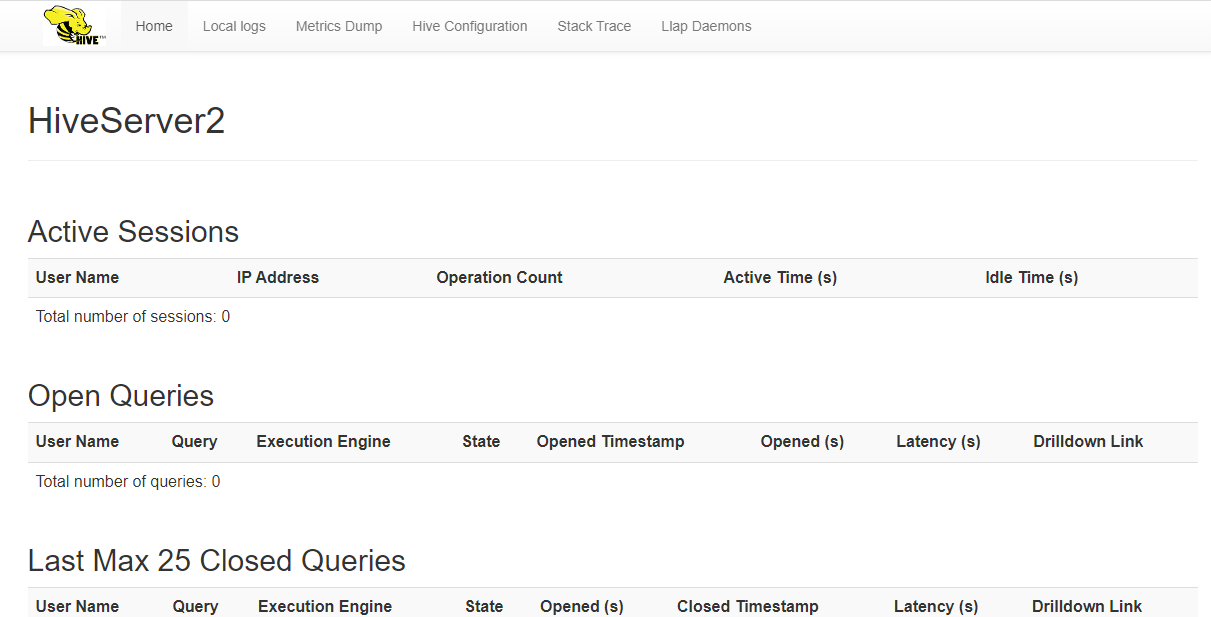

Extension: HiveServer2 Web UI

- Since version 2.0, Hive has provided a simple Web UI for HiveServer2, where you can see the currently connected sessions, historical logs, configuration parameters, and metrics.

- Modify the hive-site.xml configuration file

<property>
  <name>hive.server2.webui.host</name>
  <value>192.168.230.132</value>
</property>
<property>
  <name>hive.server2.webui.port</name>
  <value>15010</value>
</property>

- Restart HiveServer2, then access the Web UI at http://192.168.230.132:15010
