Building a quiz-answering assistant app for Android

Quiz-answering games are currently available on all the major platforms and inside games. However, if you type each question into a search engine by hand, the answering time runs out before you finish typing. So I did some simple research into automating this.

Approach:

When a question appears, first capture the question and its answer options, then search for them on Baidu or Google and use the results as a reference.

1: Get the question and options. There are two methods (we use the second one for now):

(1): Capture the network packets directly. This is efficient but takes a long time to develop, so it is not used for now and may be explored later.

(2): Take a screenshot, crop it to the coordinates where the question is displayed, and extract the text with image recognition (OCR). Cropping improves speed, but overall this method is neither as accurate nor as fast as method (1).

2: Use the extracted question text to open a browser search automatically, and use the results as a reference.
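The two steps can be viewed as a small pipeline: a screenshot path goes in, question text comes out, and a search is launched. Below is a minimal plain-Java sketch of that wiring. `QuestionExtractor`, `SearchLauncher`, and `QuizAssistant` are hypothetical names of my own, not from any library; on Android the extractor would do the cropping and OCR, and the launcher would fire the browser Intent.

```java
import java.util.function.Consumer;

/** Hypothetical: turns a screenshot file into question text (e.g. crop + OCR). */
interface QuestionExtractor {
    String extract(String screenshotPath) throws Exception;
}

/** Hypothetical: opens a search for the question (e.g. a browser Intent). */
interface SearchLauncher {
    void search(String question);
}

/** Wires step 1 (extract the question) to step 2 (search for it). */
final class QuizAssistant {
    private final QuestionExtractor extractor;
    private final SearchLauncher launcher;
    private final Consumer<Exception> onError;

    QuizAssistant(QuestionExtractor extractor, SearchLauncher launcher, Consumer<Exception> onError) {
        this.extractor = extractor;
        this.launcher = launcher;
        this.onError = onError;
    }

    /** Call with the path of each detected screenshot. */
    void onScreenshot(String imagePath) {
        try {
            String question = extractor.extract(imagePath);
            // Skip empty recognition results instead of searching for nothing
            if (question != null && !question.trim().isEmpty()) {
                launcher.search(question.trim());
            }
        } catch (Exception e) {
            onError.accept(e);
        }
    }
}
```

Keeping the Android-specific parts behind interfaces lets the pipeline be tested on a desktop JVM with fakes. Note that the screenshot callback arrives on the UI thread while OCR is a network call, and Android does not allow network access on the main thread, so in a real app `onScreenshot` should be invoked from a background thread.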

In the future, Python could be used to make the results more user-friendly, accurate, and clear. Since this article covers only Android, that is left for later.

Detailed process

1. After the question is displayed, take a screenshot with the phone's screenshot shortcut.

2. The app monitors the system's screenshots.

3. When a new screenshot appears, get the screenshot file.

4. Crop the screenshot to the specified coordinates so that only the question area remains (recognizing the whole image takes too long, so we recognize only the part we need).

5. After cropping, recognize the text with Baidu's OCR service, which is very accurate; its free quota of 5,000 calls per day is enough.

6. Once the question is recognized, launch the phone's browser and search for it automatically (Android can open a browser at a search URL through an Intent).

7. We can then read the search results and answer quickly.
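Step 4 crops at fixed pixel coordinates, which only line up on screens with the same resolution as the one they were measured on, and `Bitmap.createBitmap` throws `IllegalArgumentException` if the rectangle extends past the image. A minimal sketch, assuming you record the coordinates once on a reference screen: scale them to the actual screenshot size, then clamp them to the image bounds. `CropRect` and `scaleAndClamp` are hypothetical names.

```java
/** A crop rectangle: top-left corner plus width and height, in pixels. */
final class CropRect {
    final int x, y, width, height;

    CropRect(int x, int y, int width, int height) {
        this.x = x;
        this.y = y;
        this.width = width;
        this.height = height;
    }

    /**
     * Scales a rectangle measured on a refWidth x refHeight screen to an
     * actualWidth x actualHeight screenshot, then clamps it inside the image.
     */
    static CropRect scaleAndClamp(CropRect r, int refWidth, int refHeight,
                                  int actualWidth, int actualHeight) {
        double sx = (double) actualWidth / refWidth;
        double sy = (double) actualHeight / refHeight;
        int x = (int) Math.round(r.x * sx);
        int y = (int) Math.round(r.y * sy);
        int w = (int) Math.round(r.width * sx);
        int h = (int) Math.round(r.height * sy);
        // Keep the origin inside the image, then shrink the size so the
        // rectangle does not run past the right or bottom edge
        x = Math.max(0, Math.min(x, actualWidth - 1));
        y = Math.max(0, Math.min(y, actualHeight - 1));
        w = Math.max(1, Math.min(w, actualWidth - x));
        h = Math.max(1, Math.min(h, actualHeight - y));
        return new CropRect(x, y, w, h);
    }
}
```

On Android you would call `CropRect.scaleAndClamp(new CropRect(486, 540, 568, 735), refW, refH, bit.getWidth(), bit.getHeight())` and pass the result's `x`, `y`, `width`, `height` to `Bitmap.createBitmap`, where `refW` and `refH` (hypothetical) are the dimensions of the screen the coordinates were measured on. Scaling each axis independently assumes the quiz app's layout stretches proportionally, which is not guaranteed for every app or aspect ratio.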

Code snippets

1. Monitoring system screenshots

```java
import android.content.Context;
import android.database.ContentObserver;
import android.database.Cursor;
import android.graphics.BitmapFactory;
import android.graphics.Point;
import android.net.Uri;
import android.os.Build;
import android.os.Handler;
import android.os.Looper;
import android.provider.MediaStore;
import android.text.TextUtils;
import android.util.Log;
import android.view.Display;
import android.view.WindowManager;

import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

/**
 * Screenshot listener
 *
 * Required permission: android.permission.READ_EXTERNAL_STORAGE
 * (android.permission.READ_MEDIA_IMAGES on Android 13 and later)
 *
 * Usage (call from the main thread):
 *
 * ScreenListenManager manager = ScreenListenManager.newInstance(context);
 * manager.setListener(
 *     new OnScreenShotListener() {
 *         public void onShot(String imagePath) {
 *             // Called after a screenshot is detected
 *         }
 *     }
 * );
 * manager.startListen(); // Start screenshot monitoring
 * manager.stopListen();  // Stop screenshot monitoring
 */
public class ScreenListenManager {

    private static final String TAG = "ScreenListenManager";

    /** Columns to read from the media database */
    private static final String[] MEDIA_PROJECTIONS = {
            MediaStore.Images.ImageColumns.DATA,
            MediaStore.Images.ImageColumns.DATE_TAKEN,
    };

    /** Columns to read from the media database; WIDTH and HEIGHT are only available from API 16 */
    private static final String[] MEDIA_PROJECTIONS_API_16 = {
            MediaStore.Images.ImageColumns.DATA,
            MediaStore.Images.ImageColumns.DATE_TAKEN,
            MediaStore.Images.ImageColumns.WIDTH,
            MediaStore.Images.ImageColumns.HEIGHT,
    };

    /** Keywords that identify a screenshot by its file path */
    private static final String[] KEYWORDS = {
            "screenshot", "screen_shot", "screen-shot", "screen shot",
            "screencapture", "screen_capture", "screen-capture", "screen capture",
            "screencap", "screen_cap", "screen-cap", "screen cap"
    };

    private static Point sScreenRealSize;

    /** Paths already reported to the listener */
    private final List<String> sHasCallbackPaths = new ArrayList<String>();

    private Context mContext;
    private OnScreenShotListener mListener;
    private long mStartListenTime;

    /** Observer for internal storage */
    private MediaContentObserver mInternalObserver;
    /** Observer for external storage */
    private MediaContentObserver mExternalObserver;

    /** Handler on the UI thread, used to run the listener callback */
    private final Handler mUiHandler = new Handler(Looper.getMainLooper());

    private ScreenListenManager(Context context) {
        if (context == null) {
            throw new IllegalArgumentException("The context must not be null.");
        }
        mContext = context;

        // Get the real resolution of the screen
        if (sScreenRealSize == null) {
            sScreenRealSize = getRealScreenSize();
            if (sScreenRealSize != null) {
                Log.d(TAG, "Screen Real Size: " + sScreenRealSize.x + " * " + sScreenRealSize.y);
            } else {
                Log.w(TAG, "Get screen real size failed.");
            }
        }
    }

    public static ScreenListenManager newInstance(Context context) {
        assertInMainThread();
        return new ScreenListenManager(context);
    }

    /**
     * Start listening
     */
    public void startListen() {
        assertInMainThread();

        sHasCallbackPaths.clear();

        // Record the time when listening starts
        mStartListenTime = System.currentTimeMillis();

        // Create the content observers
        mInternalObserver = new MediaContentObserver(MediaStore.Images.Media.INTERNAL_CONTENT_URI, mUiHandler);
        mExternalObserver = new MediaContentObserver(MediaStore.Images.Media.EXTERNAL_CONTENT_URI, mUiHandler);

        // Register the content observers
        mContext.getContentResolver().registerContentObserver(
                MediaStore.Images.Media.INTERNAL_CONTENT_URI,
                false,
                mInternalObserver
        );
        mContext.getContentResolver().registerContentObserver(
                MediaStore.Images.Media.EXTERNAL_CONTENT_URI,
                false,
                mExternalObserver
        );
    }

    /**
     * Stop listening
     */
    public void stopListen() {
        assertInMainThread();

        // Unregister the content observers
        if (mInternalObserver != null) {
            try {
                mContext.getContentResolver().unregisterContentObserver(mInternalObserver);
            } catch (Exception e) {
                e.printStackTrace();
            }
            mInternalObserver = null;
        }
        if (mExternalObserver != null) {
            try {
                mContext.getContentResolver().unregisterContentObserver(mExternalObserver);
            } catch (Exception e) {
                e.printStackTrace();
            }
            mExternalObserver = null;
        }

        // Clear state
        mStartListenTime = 0;
        sHasCallbackPaths.clear();
    }

    /**
     * Handle a change in the media database
     */
    private void handleMediaContentChange(Uri contentUri) {
        Cursor cursor = null;
        try {
            // When the data changes, query the newest row (sorted newest first).
            // No "LIMIT" is appended to the sort order: recent Android versions
            // reject it there, and only the first row is read anyway.
            cursor = mContext.getContentResolver().query(
                    contentUri,
                    Build.VERSION.SDK_INT < 16 ? MEDIA_PROJECTIONS : MEDIA_PROJECTIONS_API_16,
                    null,
                    null,
                    MediaStore.Images.ImageColumns.DATE_ADDED + " DESC"
            );

            if (cursor == null) {
                Log.e(TAG, "Cursor is null.");
                return;
            }
            if (!cursor.moveToFirst()) {
                Log.d(TAG, "Cursor no data.");
                return;
            }

            // Get the index of each column
            int dataIndex = cursor.getColumnIndex(MediaStore.Images.ImageColumns.DATA);
            int dateTakenIndex = cursor.getColumnIndex(MediaStore.Images.ImageColumns.DATE_TAKEN);
            int widthIndex = -1;
            int heightIndex = -1;
            if (Build.VERSION.SDK_INT >= 16) {
                widthIndex = cursor.getColumnIndex(MediaStore.Images.ImageColumns.WIDTH);
                heightIndex = cursor.getColumnIndex(MediaStore.Images.ImageColumns.HEIGHT);
            }

            // Read the row data
            String data = cursor.getString(dataIndex);
            long dateTaken = cursor.getLong(dateTakenIndex);
            int width = 0;
            int height = 0;
            if (widthIndex >= 0 && heightIndex >= 0) {
                width = cursor.getInt(widthIndex);
                height = cursor.getInt(heightIndex);
            } else {
                // Before API 16, width and height must be read from the file itself
                Point size = getImageSize(data);
                width = size.x;
                height = size.y;
            }

            // Process the first row
            handleMediaRowData(data, dateTaken, width, height);

        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (cursor != null && !cursor.isClosed()) {
                cursor.close();
            }
        }
    }

    private Point getImageSize(String imagePath) {
        BitmapFactory.Options options = new BitmapFactory.Options();
        options.inJustDecodeBounds = true; // Read only the dimensions, not the pixels
        BitmapFactory.decodeFile(imagePath, options);
        return new Point(options.outWidth, options.outHeight);
    }

    /**
     * Process the row that was read
     */
    private void handleMediaRowData(String data, long dateTaken, int width, int height) {
        if (checkScreenShot(data, dateTaken, width, height)) {
            Log.d(TAG, "ScreenShot: path = " + data + "; size = " + width + " * " + height
                    + "; date = " + dateTaken);
            if (mListener != null && !checkCallback(data)) {
                mListener.onShot(data);
            }
        } else {
            // The media database changed while listening, but the row does not
            // match the screenshot rules; log it for analysis
            Log.w(TAG, "Media content changed, but not screenshot: path = " + data
                    + "; size = " + width + " * " + height + "; date = " + dateTaken);
        }
    }

    /**
     * Decide whether the given row is a screenshot
     */
    private boolean checkScreenShot(String data, long dateTaken, int width, int height) {
        /*
         * Check 1: time
         */
        // If the image was taken before listening started, or more than 10 seconds ago,
        // it is not treated as a new screenshot
        if (dateTaken < mStartListenTime || (System.currentTimeMillis() - dateTaken) > 10 * 1000) {
            return false;
        }

        /*
         * Check 2: size
         */
        if (sScreenRealSize != null) {
            // If the image is larger than the screen (in either orientation), it is not a screenshot
            if (!((width <= sScreenRealSize.x && height <= sScreenRealSize.y)
                    || (height <= sScreenRealSize.x && width <= sScreenRealSize.y))) {
                return false;
            }
        }

        /*
         * Check 3: path
         */
        if (TextUtils.isEmpty(data)) {
            return false;
        }
        data = data.toLowerCase();
        // If the path contains one of the keywords, treat it as a screenshot
        for (String keyword : KEYWORDS) {
            if (data.contains(keyword)) {
                return true;
            }
        }

        return false;
    }

    /**
     * Check whether this path has already been reported. Some ROMs send several
     * change notifications for one screenshot, and deleting an image also sends
     * a notification; this also prevents an earlier image that matches the
     * screenshot rules from being reported as the current screenshot.
     */
    private boolean checkCallback(String imagePath) {
        if (sHasCallbackPaths.contains(imagePath)) {
            return true;
        }
        // Keep roughly the last 15 to 20 paths
        if (sHasCallbackPaths.size() >= 20) {
            for (int i = 0; i < 5; i++) {
                sHasCallbackPaths.remove(0);
            }
        }
        sHasCallbackPaths.add(imagePath);
        return false;
    }

    /**
     * Get the real screen resolution
     */
    private Point getRealScreenSize() {
        Point screenSize = null;
        try {
            screenSize = new Point();
            WindowManager windowManager = (WindowManager) mContext.getSystemService(Context.WINDOW_SERVICE);
            Display defaultDisplay = windowManager.getDefaultDisplay();
            if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.JELLY_BEAN_MR1) {
                defaultDisplay.getRealSize(screenSize);
            } else {
                try {
                    Method mGetRawW = Display.class.getMethod("getRawWidth");
                    Method mGetRawH = Display.class.getMethod("getRawHeight");
                    screenSize.set(
                            (Integer) mGetRawW.invoke(defaultDisplay),
                            (Integer) mGetRawH.invoke(defaultDisplay)
                    );
                } catch (Exception e) {
                    screenSize.set(defaultDisplay.getWidth(), defaultDisplay.getHeight());
                    e.printStackTrace();
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return screenSize;
    }

    /**
     * Set the screenshot listener
     */
    public void setListener(OnScreenShotListener listener) {
        mListener = listener;
    }

    public static interface OnScreenShotListener {
        public void onShot(String imagePath);
    }

    private static void assertInMainThread() {
        if (Looper.myLooper() != Looper.getMainLooper()) {
            StackTraceElement[] elements = Thread.currentThread().getStackTrace();
            String methodMsg = null;
            if (elements != null && elements.length >= 4) {
                methodMsg = elements[3].toString();
            }
            throw new IllegalStateException("This method must be called on the main thread: " + methodMsg);
        }
    }

    /**
     * Content observer for the media database
     */
    private class MediaContentObserver extends ContentObserver {

        private Uri mContentUri;

        public MediaContentObserver(Uri contentUri, Handler handler) {
            super(handler);
            mContentUri = contentUri;
        }

        @Override
        public void onChange(boolean selfChange) {
            super.onChange(selfChange);
            handleMediaContentChange(mContentUri);
        }
    }
}
```
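The three checks in `checkScreenShot` (time, size, path) do not need Android at all. A sketch of the same rules as a plain-Java helper, so they can be unit tested on a desktop JVM; `ScreenshotHeuristics` is a hypothetical name, and unlike the original it always applies the size check, so the caller must supply the screen size.

```java
import java.util.Locale;

/** Plain-Java version of the screenshot checks (time, size, path). */
final class ScreenshotHeuristics {
    private static final String[] KEYWORDS = {
            "screenshot", "screen_shot", "screen-shot", "screen shot",
            "screencapture", "screen_capture", "screen-capture", "screen capture",
            "screencap", "screen_cap", "screen-cap", "screen cap"
    };
    /** Maximum age of the media row, matching the 10 seconds used above */
    private static final long MAX_AGE_MS = 10_000;

    static boolean looksLikeScreenshot(String path, long dateTaken, int width, int height,
                                       long startListenTime, long now,
                                       int screenWidth, int screenHeight) {
        // 1. Time: taken after listening started and no older than MAX_AGE_MS
        if (dateTaken < startListenTime || now - dateTaken > MAX_AGE_MS) {
            return false;
        }
        // 2. Size: must fit on the screen in portrait or landscape orientation
        boolean fits = (width <= screenWidth && height <= screenHeight)
                || (height <= screenWidth && width <= screenHeight);
        if (!fits) {
            return false;
        }
        // 3. Path: must contain one of the screenshot keywords
        if (path == null || path.isEmpty()) {
            return false;
        }
        String lower = path.toLowerCase(Locale.ROOT);
        for (String keyword : KEYWORDS) {
            if (lower.contains(keyword)) {
                return true;
            }
        }
        return false;
    }
}
```

Inside the listener, the equivalent call would pass `mStartListenTime`, `System.currentTimeMillis()`, and the real screen size.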

2. Crop the detected screenshot to the question area

```java
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import java.io.ByteArrayOutputStream;

// First decode the screenshot file into a Bitmap (imgUrl is the screenshot's file path)
Bitmap bit = BitmapFactory.decodeFile(imgUrl);

/*
 * Then crop the region where the question is displayed:
 * 1st parameter: x coordinate of the crop's starting point
 * 2nd parameter: y coordinate of the crop's starting point
 * 3rd parameter: crop width
 * 4th parameter: crop height
 * These values depend on the screen resolution and the quiz app's layout; adjust them
 * for your device. createBitmap throws IllegalArgumentException if the area extends
 * past the image.
 */
Bitmap bitmap = Bitmap.createBitmap(bit, 486, 540, 568, 735);

// Convert the cropped bitmap to a byte array (required by the Baidu OCR API)
ByteArrayOutputStream baos = new ByteArrayOutputStream();
bitmap.compress(Bitmap.CompressFormat.PNG, 100, baos);
byte[] imgData = baos.toByteArray();
```

3. Call the Baidu OCR (text recognition) API and pass in the image data

Baidu OCR API documentation:

https://cloud.baidu.com/doc/OCR/index.html
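A minimal sketch of the call, assuming the REST interface as I understand the documentation above: a general text recognition endpoint (`general_basic`) that takes an `access_token` query parameter and a form field `image` holding the URL-encoded Base64 of the picture, and replies with JSON whose recognized lines are in a `words_result` array. Verify the endpoint and field names against the docs before relying on this; obtaining the access token is not shown. `BaiduOcrClient` is a hypothetical name, and `java.util.Base64` needs API 26 on Android (use `android.util.Base64.encodeToString(imgData, Base64.NO_WRAP)` on older versions).

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Sketch of a Baidu OCR request (endpoint and field names assumed; check the docs). */
final class BaiduOcrClient {
    // Assumed endpoint for general text recognition
    static final String GENERAL_BASIC_URL =
            "https://aip.baidubce.com/rest/2.0/ocr/v1/general_basic";

    /** Form body: image=<URL-encoded Base64 of the cropped PNG bytes>. */
    static String buildRequestBody(byte[] imgData) {
        return "image=" + urlEncode(Base64.getEncoder().encodeToString(imgData));
    }

    /** POSTs the image and returns the raw JSON reply. Blocking: never call it on the UI thread. */
    static String recognize(byte[] imgData, String accessToken) throws IOException {
        URL url = new URL(GENERAL_BASIC_URL + "?access_token=" + urlEncode(accessToken));
        HttpURLConnection conn = (HttpURLConnection) url.openConnection();
        try {
            conn.setRequestMethod("POST");
            conn.setDoOutput(true);
            conn.setRequestProperty("Content-Type", "application/x-www-form-urlencoded");
            try (OutputStream out = conn.getOutputStream()) {
                out.write(buildRequestBody(imgData).getBytes(StandardCharsets.UTF_8));
            }
            try (InputStream in = conn.getInputStream()) {
                ByteArrayOutputStream reply = new ByteArrayOutputStream();
                byte[] chunk = new byte[4096];
                int n;
                while ((n = in.read(chunk)) != -1) {
                    reply.write(chunk, 0, n);
                }
                return new String(reply.toByteArray(), StandardCharsets.UTF_8);
            }
        } finally {
            conn.disconnect();
        }
    }

    private static String urlEncode(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new AssertionError(e); // UTF-8 is always available
        }
    }
}
```

The byte array passed in would be `imgData` from the cropping step; parsing the JSON reply (for example with `org.json`, which ships with Android) is left out to keep the sketch short.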

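4. After the text is recognized, launch the phone's browser with the search URL

```java
import android.content.ActivityNotFoundException;
import android.content.Intent;
import android.net.Uri;

// Open a browser search (targets the built-in browser on Xiaomi phones).
// str is the text returned by image recognition; Uri.encode makes spaces and
// Chinese characters safe to put in the URL.
String url = "https://www.baidu.com/s?ie=utf-8&f=8&rsv_bp=1&rsv_idx=2&tn=baiduhome_pg&wd=" + Uri.encode(str);
Uri uri = Uri.parse(url);
Intent intent = new Intent(Intent.ACTION_VIEW, uri);
intent.setClassName("com.android.browser", "com.android.browser.BrowserActivity"); // Open the MIUI browser
intent.setFlags(Intent.FLAG_ACTIVITY_NEW_TASK);
try {
    startActivity(intent); // Called from an Activity
} catch (ActivityNotFoundException e) {
    // That browser is not installed: drop the explicit component and let the system choose
    intent.setComponent(null);
    startActivity(intent);
}
```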
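Building the search URL itself is plain Java, so it can be pulled into a small helper, tested on a desktop JVM, and extended to Google, which the approach also mentions. A sketch with a hypothetical `SearchUrls` class; it keeps only the `ie` and `wd` parameters for Baidu and uses Google's standard `q` parameter.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

/** Builds search-engine URLs for a recognized question. */
final class SearchUrls {
    static String baidu(String query) {
        // wd carries the query; ie=utf-8 declares the query's encoding
        return "https://www.baidu.com/s?ie=utf-8&wd=" + encode(query);
    }

    static String google(String query) {
        // q carries the query
        return "https://www.google.com/search?q=" + encode(query);
    }

    private static String encode(String s) {
        try {
            // Spaces become "+", reserved and non-ASCII characters become %XX (UTF-8)
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new AssertionError(e); // UTF-8 is always available
        }
    }
}
```

On Android, `Uri.parse(SearchUrls.google(str))` could then replace the hand-built URL in the Intent.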

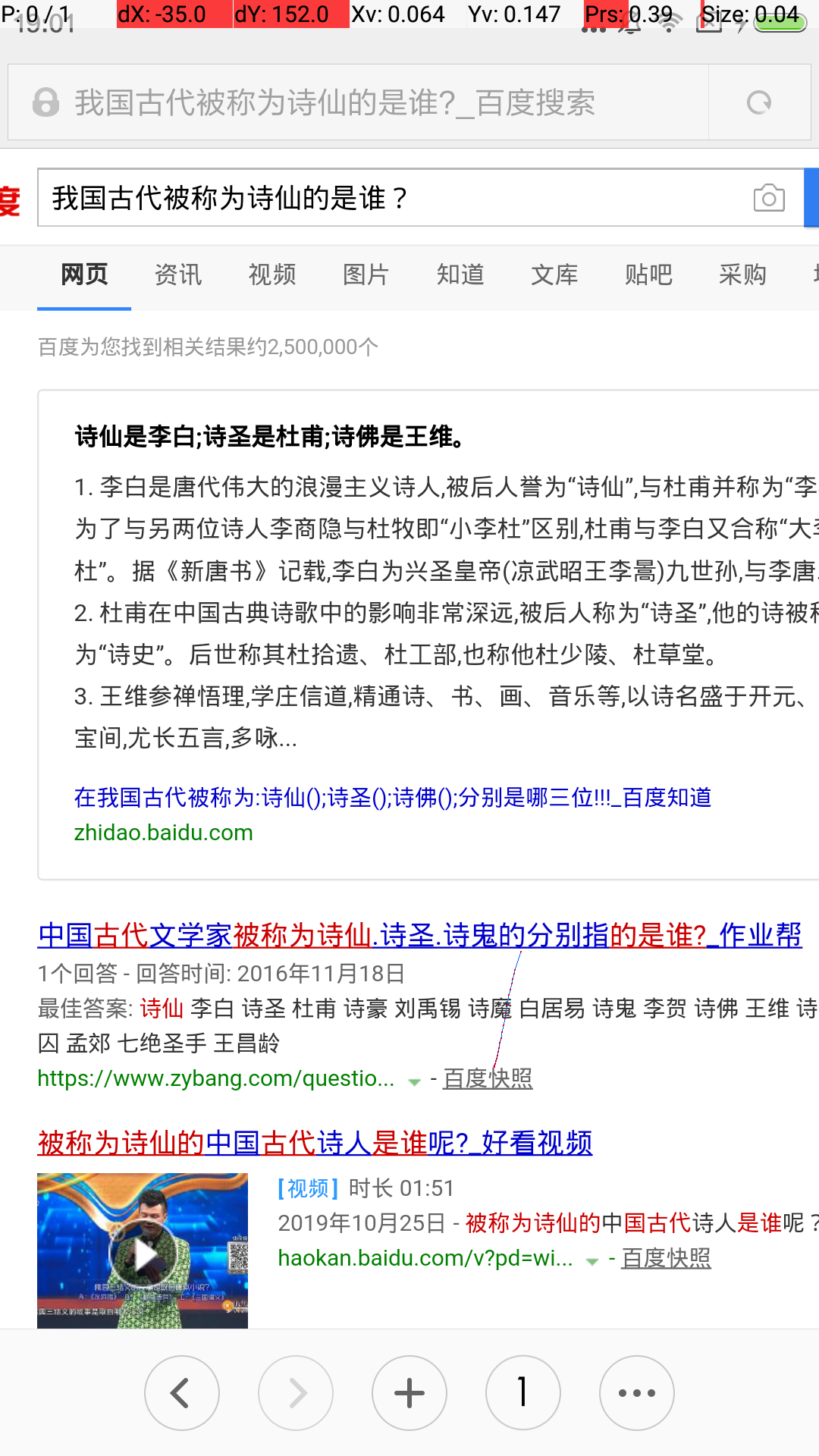

Screenshot of the result