Preface

If you are familiar with the face detection feature of HMS ML Kit, you have probably already called its interfaces to build your own app. Some developers have reported that the documentation does not make it clear how to use the MLMaxSizeFaceTransactor interface. To give you a better understanding of this interface and make it easier to apply in real scenarios, this article walks through one way of using it. For a more complete and detailed description of the available features, see https://developer.huawei.com/consumer/cn/hms/huawei-mlkit.

Scenario

Most of us have gone out sightseeing over the May Day or National Day holidays. Was it a sea of people everywhere you went?


You don't realize it until you look through the photos: the facial expressions are all over the place. Exhausting, isn't it? Every time you want to post to your friends circle, you spend an hour digging through hundreds of similar photos taken that day to find one that is presentable...

To solve this kind of problem, HMS ML Kit provides an interface that tracks and identifies the largest face in an image, which makes it easier to operate on the "key target" in a tracked image. In this article, we call the MLMaxSizeFaceTransactor interface to take a photo when the largest face in the frame smiles.
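To make the idea of "the largest face" concrete, here is a minimal sketch that picks the face with the greatest bounding-box area from a list of detections. This is only an illustration: `FaceBox` and `LargestFaceSelector` are hypothetical names, not HMS ML Kit classes, and comparing by bounding-box area is an assumption about what "largest" means, not a description of how the SDK decides internally.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical stand-in for a detected face's bounding box (not an HMS class). */
final class FaceBox {
    final int id;
    final int width;
    final int height;

    FaceBox(int id, int width, int height) {
        this.id = id;
        this.width = width;
        this.height = height;
    }

    /** Area in pixels; computed as long to avoid int overflow on large frames. */
    long area() {
        return (long) width * height;
    }
}

/** Hypothetical helper that selects the largest face by bounding-box area. */
final class LargestFaceSelector {
    private LargestFaceSelector() {}

    /** Returns the box with the greatest area, or empty if no face was detected. */
    static Optional<FaceBox> largest(List<FaceBox> faces) {
        return faces.stream().max(Comparator.comparingLong(FaceBox::area));
    }
}
```

With the real SDK you do not need to write this selection yourself; the sketch only shows the kind of decision the interface takes off your hands.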

Preparation before development

Install Android Studio

Download link on the Android Studio website: https://developer.android.com/studio
Installation process reference: https://www.cnblogs.com/xiadewang/p/7820377.html

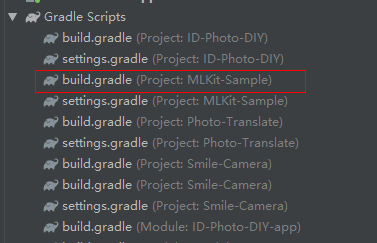

Add the Huawei Maven repository to the project-level Gradle file

Open the project-level build.gradle file in Android Studio.

Add the following Maven address to the existing configuration:

    buildscript {
        repositories {
            maven { url 'http://developer.huawei.com/repo/' }
        }
    }
    allprojects {
        repositories {
            maven { url 'http://developer.huawei.com/repo/' }
        }
    }

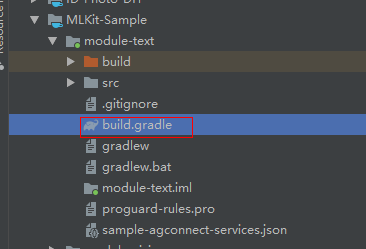

Add the SDK dependencies to the app-level build.gradle file

Add automatic model download to the AndroidManifest.xml file

To have the latest machine learning model downloaded to the user's device automatically after the user installs your app from the Huawei application market, add the following statement to the app's AndroidManifest.xml file:

    <manifest ...>
        ...
        <meta-data
            android:name="com.huawei.hms.ml.DEPENDENCY"
            android:value="face"/>
        ...
    </manifest>

Request camera, network access, and storage permissions in the AndroidManifest.xml file

    <!--Camera permissions-->
    <uses-feature android:name="android.hardware.camera" />
    <uses-permission android:name="android.permission.CAMERA" />
    <!--Write permission-->
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

Key steps of code development

Request permissions at runtime

    @Override
    public void onCreate(Bundle savedInstanceState) {
        ......
        // Ask for any permission the user has not granted yet.
        if (!allPermissionsGranted()) {
            getRuntimePermissions();
        }
    }
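The snippet above calls allPermissionsGranted() and getRuntimePermissions() without showing them. As a rough sketch of the logic such helpers usually contain, the following plain-Java class works out which required permissions are still missing. `PermissionPlanner` and its method names are hypothetical, and the permission check is passed in as a predicate; in an Android activity that predicate would typically wrap `ContextCompat.checkSelfPermission(...)`, and the resulting list would be handed to `ActivityCompat.requestPermissions(...)`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical helper; illustrates the decision logic only, without Android APIs. */
final class PermissionPlanner {
    private PermissionPlanner() {}

    /** Returns the required permissions for which isGranted reports false, in order. */
    static List<String> missing(List<String> required, Predicate<String> isGranted) {
        List<String> result = new ArrayList<>();
        for (String permission : required) {
            if (!isGranted.test(permission)) {
                result.add(permission);
            }
        }
        return result;
    }

    /** True when nothing is left to request. */
    static boolean allGranted(List<String> required, Predicate<String> isGranted) {
        return missing(required, isGranted).isEmpty();
    }
}
```

Keeping the check behind a predicate is a design choice for this sketch: it lets the logic be exercised without a device, while the activity supplies the real permission lookup.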

Creating a face recognition detector


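    MLFaceAnalyzerSetting setting = new MLFaceAnalyzerSetting.Factory()
            .setFeatureType(MLFaceAnalyzerSetting.TYPE_FEATURES)
            .setKeyPointType(MLFaceAnalyzerSetting.TYPE_UNSUPPORT_KEYPOINTS)
            .setMinFaceProportion(0.1f)
            .setTracingAllowed(true)
            .create();
    // Create the analyzer used in the following steps from this setting.
    this.analyzer = MLAnalyzerFactory.getInstance().getFaceAnalyzer(setting);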

Use MLMaxSizeFaceTransactor.Creator to create an MLMaxSizeFaceTransactor object that processes the largest face detected. The objectCreateCallback() method is called when the object is first detected, and the objectUpdateCallback() method is called when the object is updated. In both methods, a square is drawn over the largest recognized face through the Overlay, and MLFaceEmotion is read from the detection result to recognize a smile and trigger the photo.

    MLMaxSizeFaceTransactor transactor = new MLMaxSizeFaceTransactor.Creator(analyzer, new MLResultTrailer<MLFace>() {
        @Override
        public void objectCreateCallback(int itemId, MLFace obj) {
            LiveFaceAnalyseActivity.this.overlay.clear();
            if (obj == null) {
                return;
            }
            LocalFaceGraphic faceGraphic = new LocalFaceGraphic(LiveFaceAnalyseActivity.this.overlay, obj, LiveFaceAnalyseActivity.this);
            LiveFaceAnalyseActivity.this.overlay.addGraphic(faceGraphic);
            MLFaceEmotion emotion = obj.getEmotions();
            if (emotion.getSmilingProbability() > smilingPossibility) {
                safeToTakePicture = false;
                mHandler.sendEmptyMessage(TAKE_PHOTO);
            }
        }

        @Override
        public void objectUpdateCallback(MLAnalyzer.Result<MLFace> var1, MLFace obj) {
            LiveFaceAnalyseActivity.this.overlay.clear();
            if (obj == null) {
                return;
            }
            LocalFaceGraphic faceGraphic = new LocalFaceGraphic(LiveFaceAnalyseActivity.this.overlay, obj, LiveFaceAnalyseActivity.this);
            LiveFaceAnalyseActivity.this.overlay.addGraphic(faceGraphic);
            MLFaceEmotion emotion = obj.getEmotions();
            if (emotion.getSmilingProbability() > smilingPossibility && safeToTakePicture) {
                safeToTakePicture = false;
                mHandler.sendEmptyMessage(TAKE_PHOTO);
            }
        }

        @Override
        public void lostCallback(MLAnalyzer.Result<MLFace> result) {
            LiveFaceAnalyseActivity.this.overlay.clear();
        }

        @Override
        public void completeCallback() {
            LiveFaceAnalyseActivity.this.overlay.clear();
        }
    }).create();
    this.analyzer.setTransactor(transactor);
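The smile check in the callbacks combines a probability threshold with a safeToTakePicture flag so that one smile does not fire a burst of photos. The sketch below isolates that logic in plain Java so it can be tried without the SDK. `SmileTrigger` and `onPictureSaved()` are hypothetical names, the 0.95 threshold in the usage note is an assumed value (the article does not state what smilingPossibility is set to), and re-arming the flag after the photo is saved is an assumption about how the rest of the app behaves.

```java
/** Hypothetical helper mirroring the callback's smile check; not part of HMS ML Kit. */
final class SmileTrigger {
    private final float threshold;
    private boolean safeToTakePicture = true;

    SmileTrigger(float threshold) {
        this.threshold = threshold;
    }

    /**
     * Feed the smiling probability of the largest face for one frame.
     * Returns true only when a capture should start for this frame.
     */
    boolean onFace(float smilingProbability) {
        if (safeToTakePicture && smilingProbability > threshold) {
            safeToTakePicture = false; // block further captures until re-armed
            return true;
        }
        return false;
    }

    /** Re-arms the trigger; assumed to be called once the photo has been saved. */
    void onPictureSaved() {
        safeToTakePicture = true;
    }
}
```

For example, with `new SmileTrigger(0.95f)`, a frame at 0.97 starts a capture, a following frame at 0.99 is ignored, and captures become possible again only after `onPictureSaved()` is called.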

Use LensEngine.Creator to create a LensEngine instance for face detection on the video stream

    this.mLensEngine = new LensEngine.Creator(context, this.analyzer)
            .setLensType(this.lensType)
            .applyDisplayDimension(640, 480)
            .applyFps(25.0f)
            .enableAutomaticFocus(true)
            .create();

Start camera preview for face detection

this.mPreview.start(this.mLensEngine, this.overlay);

Content source: https://developer.huawei.com/consumer/cn/forum/topicview?tid=0201256372685820478&fid=18
Original author: littlewhite