Introduction

Plenty of readers love to travel, and a trip abroad is even better. Before setting off, most people research food, clothing, accommodation, transport and sightseeing routes, then head out full of expectation.

Travel as imagined

Before departure, you might picture a destination with beautiful buildings:

Good food:

Attractive people:

A leisurely life:

Travel in reality

In reality, if you visit a place where you don't speak the language, you may run into the following problems:

Baffling maps:

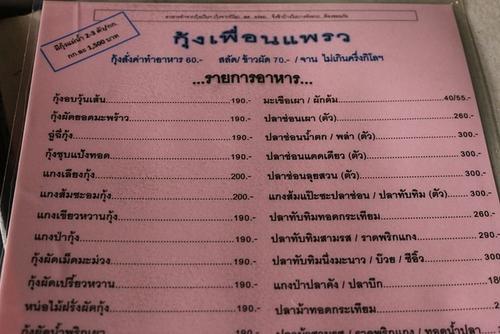

Incomprehensible menus:

Mystifying road signs:

A bewildering range of goods in the mall:

It's all too hard.

Photo translation to the rescue

With the Text Recognition and Translation services of Huawei HMS ML Kit, none of these are problems. This article shows how to use the SDK provided by HMS ML Kit to build a photo translation service. Put simply, a small photo translation app takes only two steps:

Text Recognition

First take a photo, then send the captured image frame to the HMS ML Kit Text Recognition service. The service offers both an offline on-device SDK and a cloud-side API. On-device recognition is free and works in real time, while cloud-side recognition covers more languages and is more accurate. This walkthrough uses the cloud-side capability.

| Text Recognition Feature | Specification (HMS 4.0) |
|---|---|
| On-device languages | Chinese, Japanese and Korean |
| Cloud-side languages | 19 languages, including Chinese, English, French, Spanish and Thai |
| Tilted text | Recognizable at tilts of up to 30 degrees |
| Curved text | Recognizable at bends of up to 45 degrees |
| Text tracking | Supported on-device |

The specifications above are for reference only; the Huawei Developer Alliance website is authoritative.

Translation

Send the recognized text to the HMS ML Kit Translation service to get the translated result. Translation is a cloud-side service.

| Text Translation Feature | Specification (HMS 4.0) |
|---|---|
| Languages | 7 languages: Chinese, English, French, Spanish, Turkish, Arabic and Thai |
| Latency | 300 ms per 100 words |
| BLEU score | > 30 |
| Dynamic term configuration | Supported |

The specifications above are for reference only; the Huawei Developer Alliance website is authoritative.
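The two steps above chain naturally: translation can only start once recognition has produced text. The following is a minimal sketch of that flow in plain Java, not the HMS ML Kit API. The `TextRecognizer` and `Translator` interfaces and the `translatePhoto` method are hypothetical stand-ins, and `CompletableFuture` is used here only to model the asynchronous calls; the actual SDK reports results through its own task listeners.

```java
import java.util.concurrent.CompletableFuture;

class PhotoTranslatePipeline {

    /** Hypothetical stand-in for a text recognition service: image bytes in, text out. */
    interface TextRecognizer {
        CompletableFuture<String> recognize(byte[] imageBytes);
    }

    /** Hypothetical stand-in for a translation service: source text in, translated text out. */
    interface Translator {
        CompletableFuture<String> translate(String sourceText);
    }

    /** Step 1 then step 2: the translator runs only after recognition has completed. */
    static CompletableFuture<String> translatePhoto(
            byte[] imageBytes, TextRecognizer recognizer, Translator translator) {
        return recognizer.recognize(imageBytes)
                .thenCompose(translator::translate);
    }

    public static void main(String[] args) {
        // Fake services so the sketch runs without any SDK or network access.
        TextRecognizer fakeRecognizer =
                image -> CompletableFuture.completedFuture("Bonjour");
        Translator fakeTranslator =
                text -> CompletableFuture.completedFuture("Bonjour".equals(text) ? "Hello" : text);

        String result = translatePhoto(new byte[0], fakeRecognizer, fakeTranslator).join();
        System.out.println(result);
    }
}
```

Keeping the two services behind small interfaces also makes it easy to swap the cloud-side recognizer for an on-device one later without touching the translation step.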

Photo translation app development in practice

Enough preamble; let's get to the point.

1 Development Preparation

Because cloud-side services are used, you need to register a developer account with the Huawei Developer Alliance and enable these services in the cloud. The details are not repeated here; just follow the steps in the official AppGallery Connect configuration and service enablement sections.

To register as a developer and enable the services, see:

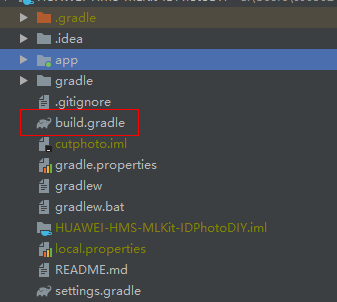

1.1 Add the Huawei Maven repository to the project-level build.gradle

Open the project-level build.gradle file in Android Studio.

Add the following Maven repository addresses:

buildscript {

repositories {

maven {url 'http://developer.huawei.com/repo/'}

} }allprojects {

repositories {

maven { url 'http://developer.huawei.com/repo/'}

}

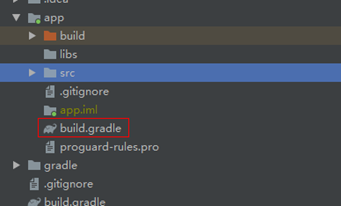

}1.2 at the application levelBuild.gradleAdd SDK dependency inside

Integrate the SDK. (Because only cloud-side capabilities are used, only the SDK base packages are needed.)

dependencies {
    implementation 'com.huawei.hms:ml-computer-vision:1.0.2.300'
    implementation 'com.huawei.hms:ml-computer-translate:1.0.2.300'
}

1.3 Enable automatic model updates in AndroidManifest.xml

To have the latest machine learning model downloaded to users' devices automatically after they install your app from HUAWEI AppGallery, add the following declaration to the app's AndroidManifest.xml file:

<manifest ...>
    <application ...>
        <meta-data
            android:name="com.huawei.hms.ml.DEPENDENCY"
            android:value="imgseg" />
    </application>
</manifest>

1.4 Request camera and storage permissions in AndroidManifest.xml

<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
<uses-feature android:name="android.hardware.camera" />
<uses-feature android:name="android.hardware.camera.autofocus" />
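On Android 6.0 (API level 23) and later, declaring CAMERA and WRITE_EXTERNAL_STORAGE in the manifest is not enough; both also have to be granted at runtime. The following is a minimal sketch of the "which permissions are still missing" logic in plain Java. The `missingPermissions` method and the `isGranted` hook are hypothetical names for illustration. In an Activity the hook would typically wrap `ContextCompat.checkSelfPermission(...)` compared against `PackageManager.PERMISSION_GRANTED`, and the returned list would be passed to `ActivityCompat.requestPermissions(...)`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

class PermissionCheck {

    /** The two permissions declared in the manifest above. */
    static final List<String> REQUIRED = Arrays.asList(
            "android.permission.CAMERA",
            "android.permission.WRITE_EXTERNAL_STORAGE");

    /**
     * Returns the permissions that still have to be requested.
     * isGranted is a hypothetical hook standing in for the platform permission check.
     */
    static List<String> missingPermissions(List<String> required, Predicate<String> isGranted) {
        List<String> missing = new ArrayList<>();
        for (String permission : required) {
            if (!isGranted.test(permission)) {
                missing.add(permission);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Pretend only the camera permission has been granted so far.
        Predicate<String> fakeCheck = "android.permission.CAMERA"::equals;
        System.out.println(missingPermissions(REQUIRED, fakeCheck));
    }
}
```

An empty result means everything is already granted and the camera can be opened straight away.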

2 Key steps in code development

2.1 Dynamic Permission Request

private static final int CAMERA_PERMISSION_CODE = 1;

@Override
public void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    // Check the required permissions and request any that are missing.
    if (!allPermissionsGranted()) {
        getRuntimePermissions();
    }
}

2.2 Create a cloud-side text analyzer. The analyzer can be created with the text detection configurator "MLRemoteTextSetting"

MLRemoteTextSetting setting = new MLRemoteTextSetting.Factory()
        .setTextDensityScene(MLRemoteTextSetting.OCR_LOOSE_SCENE)
        .create();
this.textAnalyzer = MLAnalyzerFactory.getInstance().getRemoteTextAnalyzer(setting);

2.3 Create an MLFrame object from an android.graphics.Bitmap for the analyzer to detect text in

MLFrame mlFrame = new MLFrame.Creator().setBitmap(this.originBitmap).create();

2.4 Call the "asyncAnalyseFrame" method for text detection

Task<MLText> task = this.textAnalyzer.asyncAnalyseFrame(mlFrame);
task.addOnSuccessListener(new OnSuccessListener<MLText>() {
    @Override
    public void onSuccess(MLText mlText) {
        // Recognition succeeded: pass the result on, or report a failure if it is empty.
        if (mlText != null) {
            RemoteTranslateActivity.this.remoteDetectSuccess(mlText);
        } else {
            RemoteTranslateActivity.this.displayFailure();
        }
    }
}).addOnFailureListener(new OnFailureListener() {
    @Override
    public void onFailure(Exception e) {
        // Recognition failed.
        RemoteTranslateActivity.this.displayFailure();
    }
});

2.5 Create a text translator. The translator can be created with the custom parameter class "MLRemoteTranslateSetting"

MLRemoteTranslateSetting.Factory factory = new MLRemoteTranslateSetting.Factory()
        // Set the target language code. The ISO 639-1 standard is used.
        .setTargetLangCode(this.dstLanguage);
if (!this.srcLanguage.equals("AUTO")) {
    // Set the source language code. The ISO 639-1 standard is used.
    factory.setSourceLangCode(this.srcLanguage);
}
this.translator = MLTranslatorFactory.getInstance().getRemoteTranslator(factory.create());

2.6 Call the "asyncTranslate" method to translate the text obtained by text recognition
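The translator takes a single string, so the recognized text has to be assembled first. The following is a minimal sketch in plain Java of one way to do that, assuming the recognition result has already been unpacked into blocks of line strings. The `joinRecognizedLines` helper and the nested-list input are hypothetical; in the app the strings would come from the `MLText` result handed to `remoteDetectSuccess`.

```java
import java.util.Arrays;
import java.util.List;

class SourceTextBuilder {

    /**
     * Joins recognized lines into one string, one recognized line per row.
     * Surrounding whitespace is trimmed and blank lines are skipped.
     */
    static String joinRecognizedLines(List<List<String>> blocks) {
        StringBuilder sourceText = new StringBuilder();
        for (List<String> block : blocks) {
            for (String line : block) {
                String trimmed = line.trim();
                if (trimmed.isEmpty()) {
                    continue;
                }
                if (sourceText.length() > 0) {
                    sourceText.append('\n');
                }
                sourceText.append(trimmed);
            }
        }
        return sourceText.toString();
    }

    public static void main(String[] args) {
        // Two fake text blocks, as they might come back from a photo of a sign.
        List<List<String>> blocks = Arrays.asList(
                Arrays.asList("  Sortie ", ""),
                Arrays.asList("Billets"));
        System.out.println(joinRecognizedLines(blocks));
    }
}
```

The resulting string plays the role of `sourceText` in the translation call.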

final Task<String> task = translator.asyncTranslate(this.sourceText);
task.addOnSuccessListener(new OnSuccessListener<String>() {
    @Override
    public void onSuccess(String text) {
        // Translation succeeded: display the result, or report a failure if it is empty.
        if (text != null) {
            RemoteTranslateActivity.this.remoteDisplaySuccess(text);
        } else {
            RemoteTranslateActivity.this.displayFailure();
        }
    }
}).addOnFailureListener(new OnFailureListener() {
    @Override
    public void onFailure(Exception e) {
        // Translation failed.
        RemoteTranslateActivity.this.displayFailure();
    }
});

2.7 Release resources after translation

// Close the text analyzer; closing may throw an IOException.
if (this.textAnalyzer != null) {
    try {
        this.textAnalyzer.close();
    } catch (IOException e) {
        SmartLog.e(RemoteTranslateActivity.TAG, "Stop analyzer failed: " + e.getMessage());
    }
}
// Stop the translator.
if (this.translator != null) {
    this.translator.stop();
}

3 Source code

As usual, the source of this simple demo has been uploaded to GitHub: https://github.com/HMS-MLKit/HUAWEI-HMS-MLKit-Sample (the project directory is Photo-Translate). You can use it as a reference and optimize it for your own scenario.

4 Demo

Concluding remarks

This demo app uses two cloud-side capabilities of Huawei HMS ML Kit: text recognition and translation. These two services can also help developers build many other interesting and powerful features, such as:

[General Text Recognition]

1. Bus license plate text recognition

2. Text recognition in document reading scenarios

[Card Text Recognition]

1. Recognizing the card number on a bank card, for scenarios such as binding a bank card

2. Besides bank cards, recognizing the numbers on other everyday cards, such as membership cards and discount cards

3. Recognizing the numbers on ID cards, Hong Kong and Macao travel permits and other identity documents

[Translation]

1. Translation of road signs and signboards

2. Document translation

3. Web page translation, for example detecting the language of a site's comment section and translating it into the language of the corresponding country

4. Translation of product descriptions for cross-border online shopping

5. Translation of restaurant menus

For a more detailed development guide, see the official Huawei Developer Alliance website:

Huawei Developer Alliance Machine Learning Service Development Guide

Previous article: Android | How to develop an ID photo DIY app

Content source: https://developer.huawei.com/consumer/cn/forum/topicview?tid=0201209905778120045&fid=18

Original Author: AI_Talk