ijkplayer is a cross-platform player that supports playback on Android and iOS. OpenGL ES is used for video rendering. Video can be hardware-decoded with MediaCodec on Android and with VideoToolbox on iOS, while software decoding uses FFmpeg's avcodec.

I. iOS video decoding and playback

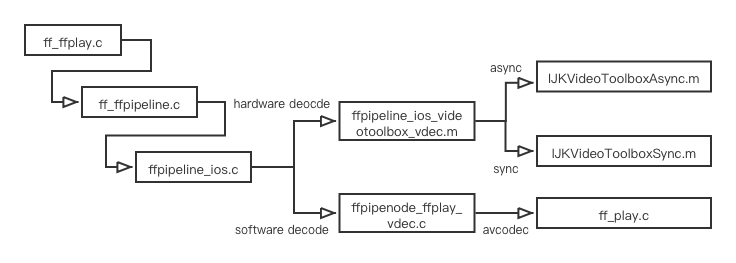

The video decoder is created through a pipeline. The overall flow is as follows:

1. Create IjkMediaPlayer

First, call the ijkmp_ios_create() method in ijkplayer_ios.m, which internally creates the IjkMediaPlayer, the vout (GLES) and the pipeline. The code is as follows:

IjkMediaPlayer *ijkmp_ios_create(int (*msg_loop)(void*))

{

IjkMediaPlayer *mp = ijkmp_create(msg_loop);

mp->ffplayer->vout = SDL_VoutIos_CreateForGLES2();

mp->ffplayer->pipeline = ffpipeline_create_from_ios(mp->ffplayer);

return mp;

}

This calls the SDL_VoutIos_CreateForGLES2() method in ijksdl_vout_ios_gles2.m to create the vout:

SDL_Vout *SDL_VoutIos_CreateForGLES2()

{

SDL_Vout *vout = SDL_Vout_CreateInternal(sizeof(SDL_Vout_Opaque));

SDL_Vout_Opaque *opaque = vout->opaque;

opaque->gl_view = nil;

vout->create_overlay = vout_create_overlay;

vout->free_l = vout_free_l;

vout->display_overlay = vout_display_overlay;

return vout;

}

The pipeline is created by the ffpipeline_create_from_ios() method in ffpipeline_ios.c, which also assigns the function pointers:

IJKFF_Pipeline *ffpipeline_create_from_ios(FFPlayer *ffp)

{

IJKFF_Pipeline *pipeline = ffpipeline_alloc(&g_pipeline_class, sizeof(IJKFF_Pipeline_Opaque));

IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;

opaque->ffp = ffp;

pipeline->func_destroy = func_destroy;

pipeline->func_open_video_decoder = func_open_video_decoder;

pipeline->func_open_audio_output = func_open_audio_output;

return pipeline;

}

2. Set glview

Call the ijkmp_ios_set_glview() method in ijkplayer_ios.m to set the glview:

void ijkmp_ios_set_glview_l(IjkMediaPlayer *mp, IJKSDLGLView *glView)

{

SDL_VoutIos_SetGLView(mp->ffplayer->vout, glView);

}

void ijkmp_ios_set_glview(IjkMediaPlayer *mp, IJKSDLGLView *glView)

{

pthread_mutex_lock(&mp->mutex);

ijkmp_ios_set_glview_l(mp, glView);

pthread_mutex_unlock(&mp->mutex);

}

This then calls the SDL_VoutIos_SetGLView() method in ijksdl_vout_ios_gles2.m, which assigns the glview to vout->opaque:

static void SDL_VoutIos_SetGLView_l(SDL_Vout *vout, IJKSDLGLView *view)

{

SDL_Vout_Opaque *opaque = vout->opaque;

if (opaque->gl_view == view)

return;

if (opaque->gl_view) {

[opaque->gl_view release];

opaque->gl_view = nil;

}

if (view)

opaque->gl_view = [view retain];

}

void SDL_VoutIos_SetGLView(SDL_Vout *vout, IJKSDLGLView *view)

{

SDL_LockMutex(vout->mutex);

SDL_VoutIos_SetGLView_l(vout, view);

SDL_UnlockMutex(vout->mutex);

}

3. Create decoder

The entry point for creating the video decoder is ffp_prepare_async_l() -> stream_open() -> read_thread() in ff_ffplay.c. read_thread() internally calls the stream_component_open() method to create and open the decoder:

static int stream_component_open(FFPlayer *ffp, int stream_index)

{

// find decoder

codec = avcodec_find_decoder(avctx->codec_id);

......

switch (avctx->codec_type) {

case AVMEDIA_TYPE_VIDEO:

// init and open decoder

if (ffp->async_init_decoder) {

if (ret || !ffp->node_vdec) {

decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread);

ffp->node_vdec = ffpipeline_open_video_decoder(ffp->pipeline, ffp);

}

} else {

decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread);

ffp->node_vdec = ffpipeline_open_video_decoder(ffp->pipeline, ffp);

}

ret = decoder_start(&is->viddec, video_thread, ffp, "ff_video_dec");

break;

}

return ret;

}

Opening the decoder through the pipeline goes from ff_ffpipeline.c to the func_open_video_decoder() method in ffpipeline_ios.c:

static IJKFF_Pipenode *func_open_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)

{

IJKFF_Pipenode* node = NULL;

IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;

// open the decoder from videotoolbox if it has been enabled

if (ffp->videotoolbox) {

node = ffpipenode_create_video_decoder_from_ios_videotoolbox(ffp);

}

// then open decoder from ffplay

if (node == NULL) {

node = ffpipenode_create_video_decoder_from_ffplay(ffp);

ffp->stat.vdec_type = FFP_PROPV_DECODER_AVCODEC;

opaque->is_videotoolbox_open = false;

} else {

ffp->stat.vdec_type = FFP_PROPV_DECODER_VIDEOTOOLBOX;

opaque->is_videotoolbox_open = true;

}

return node;

}

The VideoToolbox decoder can be created in two modes: synchronous and asynchronous. If the mode is not set, the synchronous one is used by default. Note that hardware decoding only supports the H.264 and HEVC codecs:

IJKFF_Pipenode *ffpipenode_create_video_decoder_from_ios_videotoolbox(FFPlayer *ffp)

{

IJKFF_Pipenode *node = ffpipenode_alloc(sizeof(IJKFF_Pipenode_Opaque));

node->func_destroy = func_destroy;

node->func_run_sync = func_run_sync;

opaque->ffp = ffp;

opaque->decoder = &is->viddec;

switch (opaque->avctx->codec_id) {

case AV_CODEC_ID_H264:

case AV_CODEC_ID_HEVC:

if (ffp->vtb_async)

opaque->context = Ijk_VideoToolbox_Async_Create(ffp, opaque->avctx);

else

opaque->context = Ijk_VideoToolbox_Sync_Create(ffp, opaque->avctx);

break;

default:

ALOGI("Videotoolbox-pipeline:open_video_decoder: not H264 or H265\n");

goto fail;

}

return node;

fail:

ffpipenode_free_p(&node);

return NULL;

}

Next, let's look at how the VideoToolbox hardware decoder is created in synchronous mode:

Ijk_VideoToolBox_Opaque* videotoolbox_sync_create(FFPlayer* ffp, AVCodecContext* avctx)

{

// check format

ret = vtbformat_init(&context_vtb->fmt_desc, context_vtb->codecpar);

if (ret)

goto fail;

vtbformat_destroy(&context_vtb->fmt_desc);

// create videotoolbox session

context_vtb->vt_session = vtbsession_create(context_vtb);

return context_vtb;

fail:

videotoolbox_sync_free(context_vtb);

return NULL;

}

The vtbformat_init() method mainly checks the width, height, profile, level and extradata:

static int vtbformat_init(VTBFormatDesc *fmt_desc, AVCodecParameters *codecpar)

{

// check width and height

if (width < 0 || height < 0) {

goto fail;

}

if (extrasize < 7 || extradata == NULL) {

ALOGI("%s - avcC or hvcC atom data too small or missing", __FUNCTION__);

goto fail;

}

// check profile and level

if (extradata[0] == 1) {

if (level == 0 && sps_level > 0)

level = sps_level;

if (profile == 0 && sps_profile > 0)

profile = sps_profile;

if (profile == FF_PROFILE_H264_MAIN && level == 32 && fmt_desc->max_ref_frames > 4) {

goto fail;

}

} else {

if ((extradata[0] == 0 && extradata[1] == 0 && extradata[2] == 0 && extradata[3] == 1) ||

(extradata[0] == 0 && extradata[1] == 0 && extradata[2] == 1)) {

// check avcC

if (!validate_avcC_spc(extradata, extrasize, &fmt_desc->max_ref_frames, &sps_level, &sps_profile)) {

av_free(extradata);

goto fail;

}

}

}

return 0;

fail:

vtbformat_destroy(fmt_desc);

return -1;

}

4. Video decoding

Video decoding is split into two parts: software decoding and hardware decoding. The video_thread decoding thread was already created in stream_component_open():

static int video_thread(void *arg)

{

FFPlayer *ffp = (FFPlayer *)arg;

int ret = 0;

if (ffp->node_vdec) {

// run video decode pipeline

ret = ffpipenode_run_sync(ffp->node_vdec);

}

return ret;

}

4.1 VideoToolbox hardware decoding
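The video_thread above only calls ffpipenode_run_sync(); which backend actually runs depends on the function pointer stored in the node when it was created. The following is a minimal sketch of that dispatch, not ijkplayer's code: DemoNode, run_hw, run_sw, demo_open_video_decoder and demo_run_sync are hypothetical names, and the return values 1 and 2 are only markers for "hardware loop ran" and "software loop ran".

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a pipeline node: one entry point per node. */
typedef struct DemoNode {
    int (*func_run_sync)(struct DemoNode *node);
} DemoNode;

/* Placeholder for the hardware decode loop (marker value 1). */
static int run_hw(DemoNode *node) { (void)node; return 1; }

/* Placeholder for the software decode loop (marker value 2). */
static int run_sw(DemoNode *node) { (void)node; return 2; }

/* Prefer the hardware backend when requested and available,
 * otherwise fall back to the software backend. */
static DemoNode demo_open_video_decoder(int want_hw, int hw_available)
{
    DemoNode node = { NULL };
    if (want_hw && hw_available)
        node.func_run_sync = run_hw;
    else
        node.func_run_sync = run_sw;
    return node;
}

/* The decode thread only knows this generic entry point. */
static int demo_run_sync(DemoNode *node)
{
    assert(node != NULL && node->func_run_sync != NULL);
    return node->func_run_sync(node);
}
```

With this shape, the decode thread stays the same for both backends; only the node creation step decides which loop is executed.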

Hardware decoding executes the func_run_sync() method in ffpipenode_ios_videotoolbox_vdec.m:

static int func_run_sync(IJKFF_Pipenode *node)

{

IJKFF_Pipenode_Opaque *opaque = node->opaque;

int ret = videotoolbox_video_thread(opaque);

if (opaque->context) {

opaque->context->free(opaque->context->opaque);

free(opaque->context);

opaque->context = NULL;

}

return ret;

}

The videotoolbox_video_thread() method mainly calls VideoToolbox for decoding inside a for loop:

int videotoolbox_video_thread(void *arg)

{

for (;;) {

@autoreleasepool {

ret = opaque->context->decode_frame(opaque->context->opaque);

}

if (ret < 0)

goto the_end;

}

the_end:

return 0;

}

Taking synchronous mode as an example, IJKVideoToolBoxSync.m is called for hardware decoding:

int videotoolbox_sync_decode_frame(Ijk_VideoToolBox_Opaque* context)

{

......

do {

// using videotoolbox to decode

ret = decode_video(context, d->avctx, &d->pkt_temp, &got_frame);

} while (!got_frame && !d->finished);

return got_frame;

}

If the video resolution changes, the packet is first handled by a temporary FFmpeg avcodec context before VideoToolbox decoding continues:

static int decode_video(Ijk_VideoToolBox_Opaque* context, AVCodecContext *avctx, AVPacket *avpkt, int *got_picture_ptr)

{

// switch to ffmpeg avcodec when resolution changed

if (context->ffp->vtb_handle_resolution_change &&

context->codecpar->codec_id == AV_CODEC_ID_H264) {

size_data = av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, &size_data_size);

// minimum avcC(sps,pps) = 7

if (size_data && size_data_size > 7) {

ret = avcodec_open2(new_avctx, avctx->codec, &codec_opts);

ret = avcodec_decode_video2(new_avctx, frame, &got_picture, avpkt);

av_frame_unref(frame);

avcodec_free_context(&new_avctx);

}

}

// using videotoolbox to decode internal

return decode_video_internal(context, avctx, avpkt, got_picture_ptr);

}

Finally, we reach the actual VideoToolbox hardware decode call:

static int decode_video_internal(Ijk_VideoToolBox_Opaque* context, AVCodecContext *avctx, const AVPacket *avpkt, int *got_picture_ptr)

{

if (context->refresh_request) {

context->vt_session = vtbsession_create(context);

}

......

// finally, decode video frame by videotoolbox

status = VTDecompressionSessionDecodeFrame(context->vt_session, sample_buff, decoder_flags, (void*)sample_info, 0);

return 0;

}

4.2 FFmpeg software decoding

Software decoding calls the func_run_sync() method in ffpipenode_ffplay_vdec.c:

static int func_run_sync(IJKFF_Pipenode *node)

{

IJKFF_Pipenode_Opaque *opaque = node->opaque;

return ffp_video_thread(opaque->ffp);

}

This calls back into the ffp_video_thread() method in ff_ffplay.c, which is only a wrapper around ffplay_video_thread(). Let's look at the code of ffplay_video_thread():

static int ffplay_video_thread(void *arg)

{

......

for (;;) {

// decode video frame by avcodec

ret = get_video_frame(ffp, frame);

if (ret < 0)

goto the_end;

}

the_end:

av_frame_free(&frame);

return 0;

}

Next, the get_video_frame() method decodes a frame and handles the details of frame dropping:

static int get_video_frame(FFPlayer *ffp, AVFrame *frame)

{

VideoState *is = ffp->is;

int got_picture;

if ((got_picture = decoder_decode_frame(ffp, &is->viddec, frame, NULL)) < 0)

return -1;

......

return got_picture;

}

Finally, decoder_decode_frame() performs the actual software decoding:

static int decoder_decode_frame(FFPlayer *ffp, Decoder *d, AVFrame *frame, AVSubtitle *sub) {

int ret = AVERROR(EAGAIN);

for (;;) {

// receive frame

if (d->queue->serial == d->pkt_serial) {

do {

if (d->queue->abort_request)

return -1;

switch (d->avctx->codec_type) {

case AVMEDIA_TYPE_VIDEO:

ret = avcodec_receive_frame(d->avctx, frame);

if (ret >= 0) {

ffp->stat.vdps = SDL_SpeedSamplerAdd(&ffp->vdps_sampler, FFP_SHOW_VDPS_AVCODEC, "vdps[avcodec]");

if (ffp->decoder_reorder_pts == -1) {

frame->pts = frame->best_effort_timestamp;

} else if (!ffp->decoder_reorder_pts) {

frame->pts = frame->pkt_dts;

}

}

break;

case AVMEDIA_TYPE_AUDIO:

ret = avcodec_receive_frame(d->avctx, frame);

break;

default:

break;

}

} while (ret != AVERROR(EAGAIN));

}

if (pkt.data == flush_pkt.data) {

d->next_pts = d->start_pts;

} else {

if (d->avctx->codec_type == AVMEDIA_TYPE_SUBTITLE) {

// subtitle decode directly

ret = avcodec_decode_subtitle2(d->avctx, sub, &got_frame, &pkt);

} else {

// send packet

if (avcodec_send_packet(d->avctx, &pkt) == AVERROR(EAGAIN)) {

av_packet_move_ref(&d->pkt, &pkt);

}

}

av_packet_unref(&pkt);

}

}

}

5. Video rendering

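Let's first look at the call chain of the rendering process:

video_refresh_thread() -> video_refresh() -> video_display2() -> video_image_display2() -> SDL_VoutDisplayYUVOverlay() -> vout_display_overlay() -> vout_display_overlay_l() -> [IJKSDLGLView display:] -> displayInternal: -> IJK_GLES2_Renderer_renderOverlay()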

The video_refresh_thread rendering thread was already created in stream_open() in ff_ffplay.c. It loops over the video frames to render them, with a refresh interval of REFRESH_RATE = 0.01 seconds:

static int video_refresh_thread(void *arg)

{

FFPlayer *ffp = arg;

VideoState *is = ffp->is;

double remaining_time = 0.0;

while (!is->abort_request) {

if (remaining_time > 0.0)

av_usleep((int)(int64_t)(remaining_time * 1000000.0));

remaining_time = REFRESH_RATE;

if (is->show_mode != SHOW_MODE_NONE && (!is->paused || is->force_refresh))

video_refresh(ffp, &remaining_time);

}

return 0;

}

Next, the video_refresh() method computes the display timing, takes one frame, and calls video_display2() to render it:

static void video_refresh(FFPlayer *opaque, double *remaining_time)

{

if (is->video_st) {

retry:

if (frame_queue_nb_remaining(&is->pictq) == 0) {

// nothing to do, no picture to display in the queue

} else {

/* compute nominal last_duration */

last_duration = vp_duration(is, lastvp, vp);

delay = compute_target_delay(ffp, last_duration, is);

......

frame_queue_next(&is->pictq);

}

display:

/* display picture */

if (!ffp->display_disable && is->force_refresh

&& is->show_mode == SHOW_MODE_VIDEO && is->pictq.rindex_shown)

video_display2(ffp);

}

is->force_refresh = 0;

}

video_display2() is actually a wrapper around video_image_display2(), so let's look at that internal method:

static void video_image_display2(FFPlayer *ffp)

{

vp = frame_queue_peek_last(&is->pictq);

if (vp->bmp) {

if (is->subtitle_st) {

if (frame_queue_nb_remaining(&is->subpq) > 0) {

sp = frame_queue_peek(&is->subpq);

}

}

// display video bitmap

SDL_VoutDisplayYUVOverlay(ffp->vout, vp->bmp);

// notify render first video frame

if (!ffp->first_video_frame_rendered) {

ffp->first_video_frame_rendered = 1;

ffp_notify_msg1(ffp, FFP_MSG_VIDEO_RENDERING_START);

}

}

}

This then calls the SDL_VoutDisplayYUVOverlay() method in ijksdl_vout.c, which in turn calls vout_display_overlay() in ijksdl_vout_ios_gles2.m. That method takes the lock first and then calls the internal vout_display_overlay_l() method:

static int vout_display_overlay_l(SDL_Vout *vout, SDL_VoutOverlay *overlay)

{

SDL_Vout_Opaque *opaque = vout->opaque;

IJKSDLGLView *gl_view = opaque->gl_view;

if (gl_view.isThirdGLView) {

IJKOverlay ijk_overlay;

ijk_overlay.w = overlay->w;

ijk_overlay.h = overlay->h;

ijk_overlay.format = overlay->format;

ijk_overlay.planes = overlay->planes;

ijk_overlay.pitches = overlay->pitches;

ijk_overlay.pixels = overlay->pixels;

ijk_overlay.sar_num = overlay->sar_num;

ijk_overlay.sar_den = overlay->sar_den;

#ifdef __APPLE__

if (ijk_overlay.format == SDL_FCC__VTB) {

ijk_overlay.pixel_buffer = SDL_VoutOverlayVideoToolBox_GetCVPixelBufferRef(overlay);

}

#endif

if ([gl_view respondsToSelector:@selector(display_pixels:)]) {

[gl_view display_pixels:&ijk_overlay];

}

} else {

[gl_view display:overlay];

}

return 0;

}
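The pairing of a locking function with an `_l` variant appears several times in the code above (ijkmp_ios_set_glview / ijkmp_ios_set_glview_l, SDL_VoutIos_SetGLView / SDL_VoutIos_SetGLView_l, vout_display_overlay / vout_display_overlay_l). The convention can be sketched as follows, assuming POSIX threads; DemoVout, demo_vout_init, demo_display and demo_display_l are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical output object: a mutex plus the state it protects. */
typedef struct DemoVout {
    pthread_mutex_t mutex;
    int frames_displayed;
} DemoVout;

/* Initialise the mutex and the protected counter. */
static void demo_vout_init(DemoVout *vout)
{
    pthread_mutex_init(&vout->mutex, NULL);
    vout->frames_displayed = 0;
}

/* "_l" variant: the caller must already hold vout->mutex. */
static int demo_display_l(DemoVout *vout)
{
    vout->frames_displayed++;
    return vout->frames_displayed;
}

/* Public variant: take the lock, delegate to the "_l" variant, release. */
static int demo_display(DemoVout *vout)
{
    assert(vout != NULL);
    pthread_mutex_lock(&vout->mutex);
    int ret = demo_display_l(vout);
    pthread_mutex_unlock(&vout->mutex);
    return ret;
}
```

Keeping the unlocked logic in a separate `_l` function lets other code that already holds the mutex reuse it without locking twice.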

Finally, the exciting moment: the display: method in IJKSDLGLView.m is called to render:

- (void)display: (SDL_VoutOverlay *) overlay

{

// lock GL

if (![self tryLockGLActive]) {

return;

}

if (_context && !_didStopGL) {

EAGLContext *prevContext = [EAGLContext currentContext];

[EAGLContext setCurrentContext:_context];

// call internal display

[self displayInternal:overlay];

[EAGLContext setCurrentContext:prevContext];

}

// unlock GL

[self unlockGLActive];

}

- (void)displayInternal: (SDL_VoutOverlay *) overlay

{

glBindFramebuffer(GL_FRAMEBUFFER, _framebuffer);

glViewport(0, 0, _backingWidth, _backingHeight);

// really render overlay

if (!IJK_GLES2_Renderer_renderOverlay(_renderer, overlay))

ALOGE("[EGL] IJK_GLES2_render failed\n");

glBindRenderbuffer(GL_RENDERBUFFER, _renderbuffer);

[_context presentRenderbuffer:GL_RENDERBUFFER];

// calculate render framerate

int64_t current = (int64_t)SDL_GetTickHR();

int64_t delta = (current > _lastFrameTime) ? current - _lastFrameTime : 0;

if (delta <= 0) {

_lastFrameTime = current;

} else if (delta >= 1000) {

_fps = ((CGFloat)_frameCount) * 1000 / delta;

_frameCount = 0;

_lastFrameTime = current;

} else {

_frameCount++;

}

}

It is still too early to celebrate, though. Internally, this calls the IJK_GLES2_Renderer_renderOverlay() method in renderer.c, which performs the real rendering:

GLboolean IJK_GLES2_Renderer_renderOverlay(IJK_GLES2_Renderer *renderer, SDL_VoutOverlay *overlay)

{

glClear(GL_COLOR_BUFFER_BIT);

......

if (renderer->vertices_changed ||

(buffer_width > 0 &&

buffer_width > visible_width &&

buffer_width != renderer->buffer_width &&

visible_width != renderer->visible_width)) {

renderer->vertices_changed = 0;

IJK_GLES2_Renderer_Vertices_apply(renderer);

IJK_GLES2_Renderer_Vertices_reloadVertex(renderer);

renderer->buffer_width = buffer_width;

renderer->visible_width = visible_width;

GLsizei padding_pixels = buffer_width - visible_width;

GLfloat padding_normalized = ((GLfloat)padding_pixels) / buffer_width;

IJK_GLES2_Renderer_TexCoords_reset(renderer);

IJK_GLES2_Renderer_TexCoords_cropRight(renderer, padding_normalized);

IJK_GLES2_Renderer_TexCoords_reloadVertex(renderer);

}

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

return GL_TRUE;

}
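The cropping step in the excerpt above exists because a decoded buffer can be wider than the visible picture, so the padding on the right must not be sampled. Below is a minimal sketch of that arithmetic. The helper names demo_padding_normalized and demo_texcoords_crop_right are hypothetical, and the vertex order of the four texture coordinates is an assumption, not taken from ijkplayer.

```c
#include <assert.h>

/* Fraction of the buffer width that is padding, computed the same way
 * as padding_normalized in the excerpt above. */
static float demo_padding_normalized(int buffer_width, int visible_width)
{
    assert(buffer_width > 0);
    assert(visible_width >= 0 && visible_width <= buffer_width);
    return (float)(buffer_width - visible_width) / (float)buffer_width;
}

/* Fill four (u, v) pairs for a 4-vertex triangle strip, pulling the right
 * edge in by crop_right. The vertex order here is an assumption. */
static void demo_texcoords_crop_right(float texcoords[8], float crop_right)
{
    const float right = 1.0f - crop_right;
    texcoords[0] = 0.0f;  texcoords[1] = 1.0f;  /* vertex 0: left edge  */
    texcoords[2] = right; texcoords[3] = 1.0f;  /* vertex 1: right edge */
    texcoords[4] = 0.0f;  texcoords[5] = 0.0f;  /* vertex 2: left edge  */
    texcoords[6] = right; texcoords[7] = 0.0f;  /* vertex 3: right edge */
}
```

For example, a 1024-pixel-wide buffer holding a 768-pixel-wide picture gives a padding fraction of 0.25, so the right edge of the quad samples the texture at u = 0.75 instead of 1.0.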