background

Ambari is a powerful big data cluster management platform. In practice, the big data components we use are not limited to those provided on the official website. How to integrate other components in ambari?

Stacks & Services

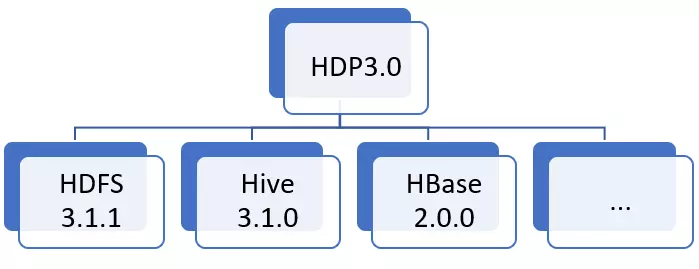

Stack is a collection of services. You can define multiple versions of stacks in Ambari. For example, HDP3.1 is a stack, which can contain Hadoop, Spark and other specific versions of services.

The relationship between Stack and Service

The configuration information related to stacks in Ambari can be found in:

- Source package: ambari server / SRC / main / resources / stacks

- After installation: / var / lib / ambari server / resources / stacks

If multiple stacks need to use the same service configuration, the configuration needs to be placed in common services. The contents stored in the common services directory can be directly used or inherited by any version of stack.

Common services directory

The common services directory is located in the ambari server / SRC / main / resources / common services directory of the source package. If a service needs to be shared among multiple stacks, the service needs to be defined in common services. Generally speaking, common services provide the common configuration of each service. For example, the components mentioned below are configured in the configuration section of ambari.

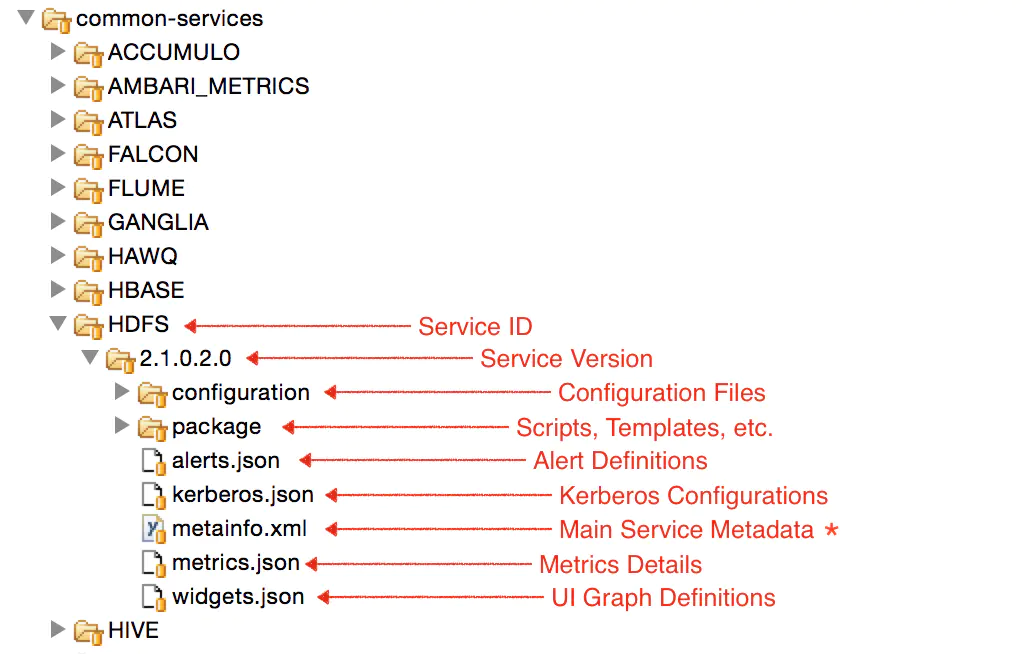

Service directory structure

The directory structure of the Service is as follows:

Service directory structure

As shown in the figure, taking HDFS as an example, the components of each service are explained as follows:

- Service ID: usually uppercase, service name.

- Configuration: the configuration file corresponding to the service is stored. The configuration file is in XML format. These XML files describe how the configuration items of the service are displayed on Ambari's component configuration page (that is, the configuration file of the service's graphical configuration page, and what configuration items are included in the configuration page).

- package: this directory contains multiple subdirectories. The service is used to control the script (start, stop, custom operation, etc.) and the component's profile template.

- alert.json: the alert information definition of service.

- kerberos.json: configuration information for the combination of service and Kerberos.

- metainfo.xml: the most important configuration file for service. The name, version number, introduction and control script name of the defined service.

- metrics.json: the monitoring information configuration file of the service.

- widgets.json: the configuration displayed in the monitoring graphical interface of the service.

Details of metainfo.xml

Not only does service have a metainfo.xml configuration file, but stack also has one. For the stack, metainfo.xml is basically used to specify the inheritance relationship between each stack.

Basic configuration items of service metalinfo.xml:

<services>

<service>

<name>HDFS</name>

<displayName>HDFS</displayName>

<comment>Hadoop Distributed file system.</comment>

<version>2.1.0.2.0</version>

</service>

</services>

The contents of displayName, comment and version will be displayed in the first step of installing the service. Check the list of required components.

component related configuration

The component configuration group specifies the deployment mode and control script of each component under the service. For example, for the HDFS service, its components include namenode, datanode, secondary namenode, and HDFS client. These components can be configured in the component configuration item.

Configuration of namenode component of HDFS:

<component>

<name>NAMENODE</name>

<displayName>NameNode</displayName>

<category>MASTER</category>

<cardinality>1-2</cardinality>

<versionAdvertised>true</versionAdvertised>

<reassignAllowed>true</reassignAllowed>

<commandScript>

<script>scripts/namenode.py</script>

<scriptType>PYTHON</scriptType>

<timeout>1800</timeout>

</commandScript>

<logs>

<log>

<logId>hdfs_namenode</logId>

<primary>true</primary>

</log>

<log>

<logId>hdfs_audit</logId>

</log>

</logs>

<customCommands>

<customCommand>

<name>DECOMMISSION</name>

<commandScript>

<script>scripts/namenode.py</script>

<scriptType>PYTHON</scriptType>

<timeout>600</timeout>

</commandScript>

</customCommand>

<customCommand>

<name>REBALANCEHDFS</name>

<background>true</background>

<commandScript>

<script>scripts/namenode.py</script>

<scriptType>PYTHON</scriptType>

</commandScript>

</customCommand>

</customCommands>

</component>

Explanation of each configuration item:

- Name: component name.

- displayName: the name of the component display.

- category: the type of component, including MASTER, SLAVE and CLIENT. Where MASTER and SLAVE are stateful (start and stop), and CLIENT is stateless.

- cardinality: this component can install several instances. The following formats can be supported. 1: An example. 1-2: 1 to 2 instances. 1 +: 1 or more instances.

- commandScript: the control script configuration of the component.

- logs: provides log access for the log search service.

The meaning of the configuration item in commandScript is as follows:

- Script: the relative path of the control script of this component.

- scriptType: script type, usually we use Python script.

- Timeout: timeout for script execution.

customCommands configuration

This configuration item is a component's custom command, that is, a command other than the system's own commands, such as start, stop, etc.

The following is an example of the REBALANCEHDFS command of HDFS.

<customCommand>

<name>REBALANCEHDFS</name>

<background>true</background>

<commandScript>

<script>scripts/namenode.py</script>

<scriptType>PYTHON</scriptType>

</commandScript>

</customCommand>

This configuration item will add a new menu item in the upper right menu of the service management page. Configuration items have the same meaning as CommandScript. Where background is true, this command is executed in the background.

Next, you may have a question: after clicking the menu item of the custom command, which function of the file named namenode.py does ambari call?

In fact, ambari will call python methods with the same name as customCommand, and all names are lowercase. This is shown below.

def rebalancehdfs(self, env): ...

osSpecifics configuration

The name of the same service installation package is usually different under different platforms. The corresponding relationship between the name of the installation package and the system is the responsibility of the configuration item.

Example of osspecifications configuration of Zookeeper

<osSpecifics>

<osSpecific>

<osFamily>amazon2015,redhat6,redhat7,suse11,suse12</osFamily>

<packages>

<package>

<name>zookeeper_${stack_version}</name>

</package>

<package>

<name>zookeeper_${stack_version}-server</name>

</package>

</packages>

</osSpecific>

<osSpecific>

<osFamily>debian7,ubuntu12,ubuntu14,ubuntu16</osFamily>

<packages>

<package>

<name>zookeeper-${stack_version}</name>

</package>

<package>

<name>zookeeper-${stack_version}-server</name>

</package>

</packages>

</osSpecific>

</osSpecifics>

Note: in this configuration, name is the full name of the component installation package except for the version number. You need to use apt search or yum search in the system to search. If the package cannot be searched, or there is no osFamily corresponding to the current system, the service will not report an error during the installation process, but the software package has not been installed, which must be noted.

Inheritance configuration of service

Take the HDP stack as an example. There is an inheritance relationship between different versions of HDP. The configuration of each component of the higher version of HDP inherits from the lower version of HDP. This inheritance line goes all the way back to HDP2.0.6.

At this point, the reason why the configuration in common services can be shared is explained. The service configuration in common services takes effect because in the most basic HDP stack (2.0.6), each service inherits the corresponding configuration in common services.

For example, ambari? Infra is a service.

<services>

<service>

<name>AMBARI_INFRA</name>

<extends>common-services/AMBARI_INFRA/0.1.0</extends>

</service>

</services>

The configuration of ambari? Infra in HDP is inherited from the configuration of ambari? Infra / 0.1.0 in common service s. Other components are similar. If you are interested, you can view the relevant source code.

Disable service

Add the deleted tag, and the service will be hidden in the list of new service wizard.

<services>

<service>

<name>FALCON</name>

<version>0.10.0</version>

<deleted>true</deleted>

</service>

</services>

Configuration dependencies configuration

Lists the configuration categories that the component depends on. If the dependent configuration class updates the configuration information, the component will be marked by ambari as requiring a reboot.

Other configuration items

For more details, please refer to the official documents: https://cwiki.apache.org/confluence/display/AMBARI/Writing+metainfo.xml

configuration profile

Configuration contains one or more xml configuration files. Each of these xml configuration files represents a configuration group. The configuration group name is the xml file name.

Each xml file specifies the name, value type and description of the service configuration item.

The following is an example of some configuration items of HDFS.

<property>

<!-- Configuration item name -->

<name>dfs.https.port</name>

<!-- Default value for configuration -->

<value>50470</value>

<!-- Description of the configuration, i.e. the prompt that pops up when the mouse moves to the text box -->

<description>

This property is used by HftpFileSystem.

</description>

<on-ambari-upgrade add="true"/>

</property>

<property>

<name>dfs.datanode.max.transfer.threads</name>

<value>1024</value>

<description>Specifies the maximum number of threads to use for transferring data in and out of the datanode.

</description>

<display-name>DataNode max data transfer threads</display-name>

<!-- It is specified that the type of property value is int,Minimum 0, maximum 48000 -->

<value-attributes>

<type>int</type>

<minimum>0</minimum>

<maximum>48000</maximum>

</value-attributes>

<on-ambari-upgrade add="true"/>

</property>

For more configuration items, please refer to the official document: https://cwiki.apache.org/confluence/display/AMBARI/Configuration+support+in+Ambari

Reading configuration item values in Python scripts

For example, here we need to read the value of the instance Gu name configuration item filled in by the user on the page in the control script.

The configuration file of the configuration item is: configuration/sample.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>instance_name</name>

<value>instance1</value>

<description>Instance name for samplesrv</description>

</property>

</configuration>

The read method in python script is:

params.py

from resource_management.libraries.script import Script config = Script.get_config() # config is encapsulated in dictionary format, with the hierarchy of configurations / filename / attribute name instance_name = config['configurations']['sample']['instance_name']

Component control script writing

The control script for the component is located in package/scripts. The script must inherit the resource · management · script class.

- package/scripts: control scripts

- package/files: files used to control scripts

- package/templates: template files that generate configuration files, such as core-site.xml and hdfs-site.xml.

One of the simplest control script files:

import sys

from resource_management import Script

class Master(Script):

def install(self, env):

# How to install components

print 'Install the Sample Srv Master';

def stop(self, env):

# Method to execute when component is stopped

print 'Stop the Sample Srv Master';

def start(self, env):

# How to start a component

print 'Start the Sample Srv Master';

def status(self, env):

# Component running state detection method

print 'Status of the Sample Srv Master';

def configure(self, env):

# How to perform component configuration update

print 'Configure the Sample Srv Master';

if __name__ == "__main__":

Master().execute()

ambari provides the following libraries for writing control scripts:

- resource_management

- ambari_commons

- ambari_simplejson

These libraries provide common operation commands without introducing additional Python packages.

If you need to write different scripts for different operating systems, you need to add different @ OsFamilyImpl() annotations when inheriting resource management.script.

Here are some common ways to write partial control script fragments.

Check if PID file exists (process is running)

from resource_management import * # If the pid file does not exist, an exception of ComponentIsNotRunning will be thrown check_process_status(pid_file_full_path)

Template fill profile template

The user fills in the value in the service page configuration to fill in the component configuration template and generate the final configuration file.

# params file reads in the value of the configuration item filled in by the user in the configuration page in advance

# All template variables of config-template.xml.j2 must be defined in params file, otherwise template filling will report an error, that is to say, all template contents must be filled correctly.

import params

env.set_params(params)

# Config template is the j2 file name in the configuration folder

file_content = Template('config-template.xml.j2')

The Python replacement profile template uses the Jinja2 template

InlineTemplate

Same as Template, except that the Template of the configuration file comes from the variable value, not the xml Template in the Template

file_content = InlineTemplate(self.getConfig()['configurations']['gateway-log4j']['content'])

File

Write content to file

File(path,

content=file_content,

owner=owner_user,

group=sample_group)

Directory

Create directory

Directory(directories,

create_parents=True,

mode=0755,

owner=params.elastic_user,

group=params.elastic_group

)

User

User operation

# Create user User(user_name, action = "create", groups = group_name)

Execute

Execute specific script

Execute('ls -al', user = 'user1')

Package installs the specified package

Package(params.all_lzo_packages,

retry_on_repo_unavailability=params.agent_stack_retry_on_unavailability,

retry_count=params.agent_stack_retry_count)

Epilogue

This blog points out the basic configuration of Ambari's integrated big data components. I will show you how to integrate elastic search service for Ambari in the following blog.

Ambari official website reference

https://cwiki.apache.org/confluence/display/AMBARI/Defining+a+Custom+Stack+and+Services