preface

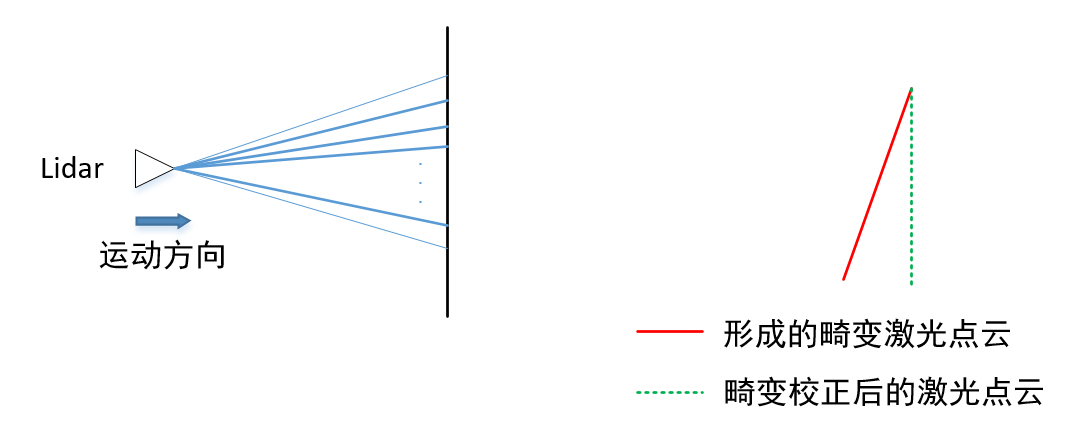

What is the motion distortion of lidar?

One frame of lidar data is all the data formed in the past cycle. The data has only one time stamp, not the data at a certain time. Therefore, the lidar or its carrier will usually move within this frame time. Therefore, the inconsistent origin of this frame will lead to some problems, which is motion distortion.

Therefore, it is necessary to remove motion distortion, also known as distortion correction.

How to make motion compensation?

The purpose of motion compensation is to compensate all point clouds to a certain time, so that the point clouds collected in the past 100ms can be unified to a time point. This time point can be the start time, the end time, or any time in the middle. It is common to compensate to the start time.

Pstart = T_start_current * Pcurrent

Distortion calibration method

Therefore, motion compensation needs to know the pose t corresponding to each point time_ start_ Current usually has several methods:

1 if there is a high-frequency odometer, it is convenient to obtain the position and attitude of each point relative to the starting scanning time.

2 if there is imu, it is convenient to calculate the rotation of each point relative to the starting point

3 if there are no other sensors, the uniform model hypothesis can be used, and the results of the previous inter frame odometer can be used as the motion between the current two frames. At the same time, assuming that the current frame is also a uniform motion, the pose of each point relative to the starting time can also be estimated

The motion of k-1 to K frames and K to k+1 frames are consistent. Using the pose transformation from k-1 to K frames as the pose transformation from K to k+1 frames, the pose transformation of each point from K to k+1 frames can be obtained. ALOAM uses the pure lidar method, so the third method is used.

Code

Turn the point in the middle of the frame to the starting point coordinate system

// undistort lidar point

void TransformToStart(PointType const *const pi, PointType *const po)

{Name of function: TransformToStart The pointer pi passed in by the formal parameter is the point cloud address of the input point, and po is the point cloud address of the output point after conversion. When calling, use the following method.

TransformToStart(&(cornerPointsSharp->points[i]), &pointSel);

//interpolation ratio

double s;

//Since lidar on kitti dataset has been motion compensated, no specific compensation will be made here

if (DISTORTION)

// The real part of intensity stores the id of the point on scan, and the imaginary part stores the time difference between this point and the starting point of this frame

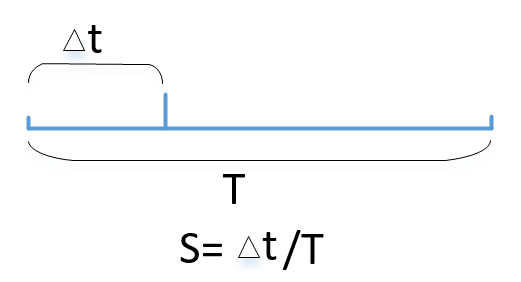

s = (pi->intensity - int(pi->intensity)) / SCAN_PERIOD;//The time ratio of points is calculated

else

s = 1.0; //s = 1 indicates the time when all compensation reaches the end of the point clouds represents the ratio of points to be converted in this frame according to time SCAN_PERIOD is the time of one frame, 10 Hz lidar, then the period is 0.1s.

In the preprocessing of the previous point, the real part of intensity with other values is stored in the id imaginary part of the point on scan, and the time difference between this point and the starting point of this frame.

The whole of intensity minus the real part is the time difference, so divided by the cycle, that is, the time proportion.

If some lidar s are internally de distorted, you can set DISTORTION to 1 and s to 1. According to the above definition, it is the time when all points are compensated to the end of the point cloud.

// This is equivalent to the assumption of a uniform velocity model

// The pose transformation is decomposed into translation and rotation

Eigen::Quaterniond q_point_last = Eigen::Quaterniond::Identity().slerp(s, q_last_curr);

Eigen::Vector3d t_point_last = s * t_last_curr;Make a uniform model assumption, that is, the pose transformation of the previous frame is the pose transformation of this frame, so as to calculate the pose transformation from the input point coordinate system to the starting point coordinate system, and calculate the time proportion s through the above calculation.

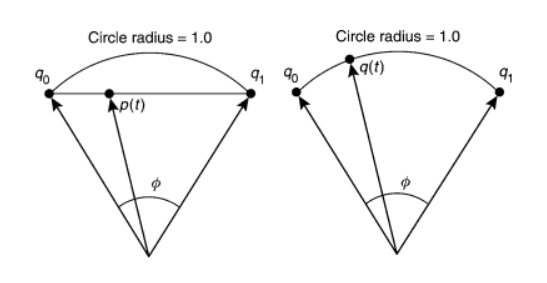

Here, the pose transformation is decomposed into rotation + translation. Since the quaternion is a hypercomplex and not a simple multiplication, the proportion is calculated by Eigen's slerp function (spherical linear interpolation).

The comparison between linear interpolation and spherical linear interpolation is shown in the figure above.

Interpolation between two unit quaternions, such as the linear interpolation in the left figure, the quaternion obtained must not be the unit quaternion. We expect that the interpolation for rotation should not change the length, so it is obvious that the Slerp interpolation in the right figure is more reasonable.

Eigen::Vector3d point(pi->x, pi->y, pi->z);//Take out the coordinates of the current point

Eigen::Vector3d un_point = q_point_last * point + t_point_last;//Rotate and move the current point to the coordinates in the frame start time coordinate systemWith rotation and translation above, the following is simple. Take out the coordinates of the current point and turn the current point to the coordinates in the frame start time coordinate system through rotation and translation.

//Assign the obtained converted coordinates to the output point

po->x = un_point.x();

po->y = un_point.y();

po->z = un_point.z();

po->intensity = pi->intensity;//Assign the original intensity

}Assign the obtained converted coordinates to the output point and assign the original intensity.

Turn the point in the middle of the frame to the starting point coordinate system

This is through the idea of inverse transformation. Firstly, the points are unified to the starting time coordinate system, and then through inverse transformation, the points in the ending time coordinate system are obtained.

void TransformToEnd(PointType const *const pi, PointType *const po)

{Name of function: TransformToEnd The pointer pi passed in by the formal parameter is the point cloud address of the input point, and po is the point cloud address of the converted output point.

When calling, just use it in the following way.

TransformToEnd(&cornerPointsLessSharp->points[i], &cornerPointsLessSharp->points[i]);

// First, do distortion correction and turn to the starting time

pcl::PointXYZI un_point_tmp;//Go to the point in the frame start time coordinate system

//First unify to the starting time

TransformToStart(pi, &un_point_tmp);First, do distortion correction and turn to the starting time.

//Then, the point in the start time coordinate system is transferred to the end time coordinate system by inverse transformation

Eigen::Vector3d un_point(un_point_tmp.x, un_point_tmp.y, un_point_tmp.z);//Take out the point in the starting time coordinate system

//q_last_curr \ t_last_curr is the rotation and translation from the end time coordinate system to the start time coordinate system

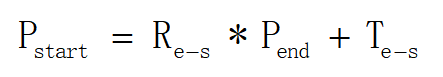

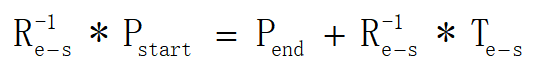

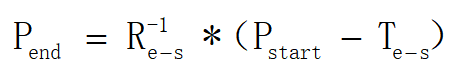

Eigen::Vector3d point_end = q_last_curr.inverse() * (un_point - t_last_curr);//Through the inverse transformation, the point coordinates in the coordinate system at the end time are obtainedq_last_curr \ t_last_curr is the rotation and translation from the end time coordinate system to the start time coordinate system. The formula of the code can be deduced as follows:

Inverse of double multiplication Re-s

Merge under

//Assign the obtained converted coordinates to the output point

po->x = point_end.x();

po->y = point_end.y();

po->z = point_end.z();

//Remove the information of intensity relative time

po->intensity = int(pi->intensity);

}Assign the obtained converted coordinates to the output point and remove the information of intensity relative time.

Overall code

// undistort lidar point

void TransformToStart(PointType const *const pi, PointType *const po)

{

//interpolation ratio

double s;

//Since lidar on kitti dataset has been motion compensated, no specific compensation will be made here

if (DISTORTION)

// The real part of intensity stores the id of the point on scan, and the imaginary part stores the time difference between this point and the starting point of this frame

s = (pi->intensity - int(pi->intensity)) / SCAN_PERIOD;//The time ratio of points is calculated

else

s = 1.0; //s = 1 indicates the time when all compensation reaches the end of the point cloud

//s = 1;

//The operation mode of all points is consistent, which is equivalent to compensation from the end time to the start time

// This is equivalent to the assumption of a uniform velocity model

// The pose transformation is decomposed into translation and rotation

Eigen::Quaterniond q_point_last = Eigen::Quaterniond::Identity().slerp(s, q_last_curr);//Spherical interpolation of attitude

Eigen::Vector3d t_point_last = s * t_last_curr;//Linear interpolation of displacement

Eigen::Vector3d point(pi->x, pi->y, pi->z);//Take out the coordinates of the current point

Eigen::Vector3d un_point = q_point_last * point + t_point_last;//Rotate and move the current point to the coordinates in the frame start time coordinate system

//Assign the obtained converted coordinates to the output point

po->x = un_point.x();

po->y = un_point.y();

po->z = un_point.z();

po->intensity = pi->intensity;//Assign the original intensity

}

// transform all lidar points to the start of the next frame

void TransformToEnd(PointType const *const pi, PointType *const po)

{

// First, do distortion correction and turn to the starting time

pcl::PointXYZI un_point_tmp;//Go to the point in the frame start time coordinate system

//First unify to the starting time

TransformToStart(pi, &un_point_tmp);

//Then, the point in the start time coordinate system is transferred to the end time coordinate system by inverse transformation

Eigen::Vector3d un_point(un_point_tmp.x, un_point_tmp.y, un_point_tmp.z);//Take out the point in the starting time coordinate system

//q_last_curr \ t_last_curr is the rotation and translation from the end time coordinate system to the start time coordinate system

Eigen::Vector3d point_end = q_last_curr.inverse() * (un_point - t_last_curr);//Through the inverse transformation, the point coordinates in the coordinate system at the end time are obtained

//Assign the obtained converted coordinates to the output point

po->x = point_end.x();

po->y = point_end.y();

po->z = point_end.z();

//Remove the information of intensity relative time

po->intensity = int(pi->intensity);

}The above is the code analysis of motion distortion and compensation mode of lidar.