Many caching strategies exist, and the right choice depends on the application. Typically, static data that changes infrequently is put into the cache, such as configuration parameters and dictionary tables. Some scenarios call for a different tool altogether: to speed up full-text retrieval in complex scenarios, for example, a search engine such as Solr or Elasticsearch is recommended.

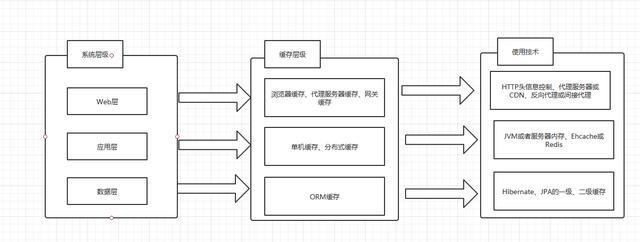

Usually in Web development, the caching requirements and caching strategies at different levels are totally different, as shown in the following figure:

Let's look at two important basic concepts in caching:

1. Cache hit rate

The cache hit rate is the ratio of the number of reads served from the cache to the total number of reads. Generally speaking, the higher the hit rate, the better.

Hit rate = number of reads served from the cache / (number of reads served from the cache + number of reads from slow devices)

Miss rate = number of reads not served from the cache / (number of reads served from the cache + number of reads from slow devices)

The two rates always sum to 1. If you use a cache, monitor this metric to verify that the cache is working well.
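The two formulas above can be tracked with a pair of counters. The following is a minimal sketch, not a production design: the class name `CountingCache` and the `slowLoader` parameter are assumptions made for illustration and stand in for whatever cache and slow device the application really uses.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Function;

/** Hypothetical wrapper that counts hits and misses around a map-backed cache. */
class CountingCache<K, V> {

    private final Map<K, V> cache = new ConcurrentHashMap<>();
    private final AtomicLong hits = new AtomicLong();
    private final AtomicLong misses = new AtomicLong();

    /** Returns the cached value, or loads it from the slow source on a miss. */
    V get(K key, Function<K, V> slowLoader) {
        V value = cache.get(key);
        if (value != null) {
            hits.incrementAndGet();      // read served from the cache
            return value;
        }
        misses.incrementAndGet();        // read served from the slow device
        value = slowLoader.apply(key);
        if (value != null) {
            cache.put(key, value);
        }
        return value;
    }

    /** Hit rate = hits / (hits + misses); 0.0 before any read has happened. */
    double hitRatio() {
        long total = hits.get() + misses.get();
        return total == 0 ? 0.0 : (double) hits.get() / total;
    }

    /** Miss rate = misses / (hits + misses); 0.0 before any read has happened. */
    double missRatio() {
        long total = hits.get() + misses.get();
        return total == 0 ? 0.0 : (double) misses.get() / total;
    }
}
```

Calling `get` for the same key twice records one miss followed by one hit, so `hitRatio()` would report 0.5 at that point. In a real system these counters would typically be exported to a monitoring tool rather than read directly.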

2. Expiration strategy

- FIFO (First In First Out): the entry that entered the cache first is evicted first.

- LRU (Least Recently Used): the entry that has gone unused for the longest time is evicted first.

- TTL (Time To Live): the lifetime of an entry, measured from its creation in the cache until it expires.

- TTI (Time To Idle): the idle time, that is, how long an entry may go without being accessed before it is removed from the cache.
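The difference between FIFO and LRU from the list above can be sketched with the JDK's `LinkedHashMap`, which supports both insertion order and access order. The class name `BoundedCache` and its `maxEntries` parameter are assumptions made for this illustration; the sketch is not thread-safe and leaves out TTL and TTI.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical bounded cache.
 * accessOrder = false keeps insertion order, which gives FIFO eviction.
 * accessOrder = true moves an entry to the end on every access, which gives LRU eviction.
 */
class BoundedCache<K, V> extends LinkedHashMap<K, V> {

    private static final long serialVersionUID = 1L;

    private final int maxEntries;

    BoundedCache(int maxEntries, boolean accessOrder) {
        super(16, 0.75f, accessOrder);
        this.maxEntries = maxEntries;
    }

    /** Called by LinkedHashMap after each insertion; returning true evicts the eldest entry. */
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;
    }
}
```

With a capacity of two, inserting `a` and `b`, reading `a`, and then inserting `c` evicts `b` in LRU mode (because `a` was used more recently) but evicts `a` in FIFO mode (because `a` was inserted first).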

Implement a custom cache manager:

First, define a User entity class.

```java
import java.io.Serializable;

public class User implements Serializable {

    private static final long serialVersionUID = 1L;

    private String userId;
    private String userName;
    private int age;

    public User(String userId) {
        this.userId = userId;
    }

    public String getUserId() {
        return userId;
    }

    public void setUserId(String userId) {
        this.userId = userId;
    }

    public String getUserName() {
        return userName;
    }

    public void setUserName(String userName) {
        this.userName = userName;
    }

    public int getAge() {
        return age;
    }

    public void setAge(int age) {
        this.age = age;
    }
}
```

Next, define a cache manager:

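```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class CacheManager<T> {

    // ConcurrentHashMap makes the individual operations thread-safe
    private final Map<String, T> cache = new ConcurrentHashMap<String, T>();

    // Returns the cached value, or null if the key is not cached
    public T getValue(String key) {
        return cache.get(key);
    }

    public void addOrUpdate(String key, T value) {
        cache.put(key, value);
    }

    public void delCache(String key) {
        // remove() is a no-op for a missing key, so no containsKey() check is needed
        cache.remove(key);
    }

    public void clearCache() {
        cache.clear();
    }
}
```

The service class that provides user queries uses the cache manager to look up users: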

```java
public class UserService {

    private final CacheManager<User> cacheManager;

    public UserService() {
        cacheManager = new CacheManager<User>();
    }

    public User getUserById(String userId) {
        User result = cacheManager.getValue(userId);
        if (result != null) {
            System.out.println("get from cache..." + userId);
            // If the user is in the cache, return the cached result directly
            return result;
        }
        // Otherwise, query the database
        result = getFromDB(userId);
        if (result != null) {
            // Store the database query result in the cache
            cacheManager.addOrUpdate(userId, result);
        }
        return result;
    }

    public void reload() {
        cacheManager.clearCache();
    }

    private User getFromDB(String userId) {
        // Stand-in for a real database query
        return new User(userId);
    }
}
```

Use Spring Cache to implement the above example:

```java
import org.springframework.cache.annotation.CacheEvict;
import org.springframework.cache.annotation.Cacheable;

public class UserServiceUseSpringCache {

    @Cacheable(cacheNames = "users")
    public User getUserById(String userId) {
        // The method implements the business logic directly, without any cache logic.
        System.out.println("real query user. " + userId);
        return getFromDB(userId);
    }

    // Evicts every entry of the "users" cache
    @CacheEvict(cacheNames = "users", allEntries = true)
    public void reload() {
    }

    private User getFromDB(String userId) {
        // Stand-in for a real database query
        return new User(userId);
    }
}
```

Now you need a Spring configuration file to support annotation-based caching:

```xml
<beans xmlns="http://www.springframework.org/schema/beans"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:cache="http://www.springframework.org/schema/cache"
       xmlns:p="http://www.springframework.org/schema/p"
       xsi:schemaLocation="http://www.springframework.org/schema/beans
           http://www.springframework.org/schema/beans/spring-beans.xsd
           http://www.springframework.org/schema/cache
           http://www.springframework.org/schema/cache/spring-cache.xsd">

    <!-- Enable annotation-driven caching. By default this element uses the
         cache manager bean named "cacheManager". -->
    <cache:annotation-driven />

    <!-- Service bean whose methods carry the caching annotations -->
    <bean id="accountServiceBean" class="cacheOfAnno.AccountService"/>

    <!-- generic cache manager -->
    <bean id="cacheManager" class="org.springframework.cache.support.SimpleCacheManager">
        <property name="caches">
            <set>
                <bean class="org.springframework.cache.concurrent.ConcurrentMapCacheFactoryBean"
                      p:name="default" />
                <bean class="org.springframework.cache.concurrent.ConcurrentMapCacheFactoryBean"
                      p:name="users" />
            </set>
        </property>
    </bean>
</beans>
```

The annotated service class shown earlier contains no caching logic. A single annotation, @Cacheable(cacheNames = "users"), is enough to implement the basic caching scheme, which keeps the code concise and elegant. Using Spring Cache requires only the following two steps:

- Cache definition: decide which methods to cache and which caching strategy to use.

- Cache configuration: configure the cache.
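To see why the service itself needs no caching code, it helps to remember that annotation-driven caching in Spring works by default through a proxy that sits in front of the bean. The following is a rough plain-Java sketch of that idea using a JDK dynamic proxy. It is not Spring's implementation: `UserLookup`, `SlowUserLookup`, and `CachingProxy` are hypothetical names, and the key scheme (method name plus the single argument) is a simplification chosen for this example.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical service interface; JDK dynamic proxies require an interface. */
interface UserLookup {
    String findName(String userId);
}

/** Plain implementation with no caching code; counts how often it is really invoked. */
class SlowUserLookup implements UserLookup {
    int calls = 0;

    @Override
    public String findName(String userId) {
        calls++;
        return "user-" + userId;
    }
}

final class CachingProxy {

    private CachingProxy() {
    }

    /** Wraps target so that single-argument interface calls are answered from a map when possible. */
    static <T> T wrap(T target, Class<T> type) {
        Map<String, Object> cache = new ConcurrentHashMap<>();
        InvocationHandler handler = (proxy, method, args) -> {
            // Pass through Object methods and anything that is not a single-argument call
            if (method.getDeclaringClass() == Object.class || args == null || args.length != 1) {
                return method.invoke(target, args);
            }
            String key = method.getName() + ":" + args[0];
            Object cached = cache.get(key);
            if (cached != null) {
                return cached;                    // cache hit: the target is not called
            }
            Object result = method.invoke(target, args);
            if (result != null) {
                cache.put(key, result);           // cache miss: remember the result
            }
            return result;
        };
        return type.cast(Proxy.newProxyInstance(
                type.getClassLoader(), new Class<?>[] {type}, handler));
    }
}
```

Wrapping a `SlowUserLookup` with `CachingProxy.wrap(real, UserLookup.class)` and calling `findName("42")` twice invokes the real method only once; the second call is answered by the proxy. This is the same division of labor the two steps above describe: the service declares what should be cached, and the configuration supplies the machinery that does it.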