This issue continues the SVM practice project, license plate detection and recognition, and also shares some practical tips.

To recap: the last issue covered SVM model training with OpenCV. This issue continues with the recognition process.

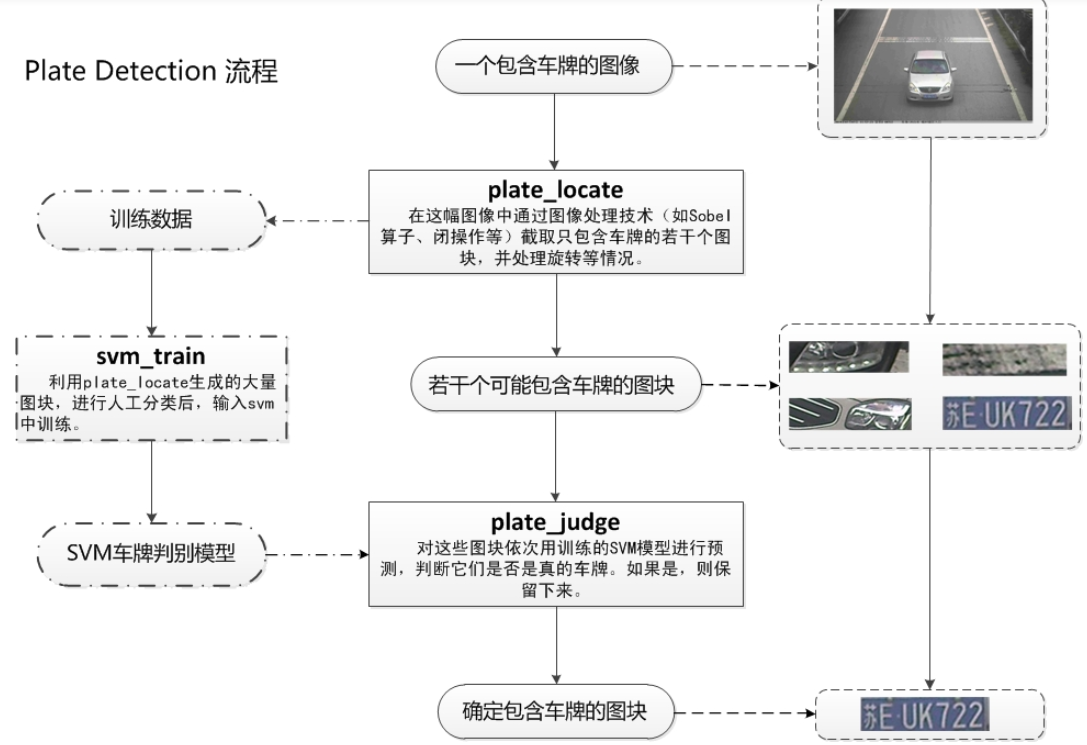

This flow chart is still classic and intuitive.

First, let's cover what was promised in the previous issue:

Displaying Chinese text with OpenCV

I use PIL to draw the text. The steps:

1: Download the font file simhei.ttf and pass its path in font = ImageFont.truetype("./simhei.ttf", 20, encoding="utf-8"). Either give the full path to the font file, or put the file in the directory you run the code from.

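2: The text must be Unicode (UTF-8); otherwise the Chinese characters will not render correctly (for example, they show up as rectangles). In Python 2 this means str1 = str1.decode('utf-8'); in Python 3, str is already Unicode, so no decoding is needed.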

3: The code:

```python
from PIL import Image, ImageDraw, ImageFont
import cv2
import numpy as np

# Read the picture with cv2 (the file path must not contain Chinese characters)
img = cv2.imread(r'C:\Users\acer\Desktop\black.jpg')
# cv2 stores color channels as BGR and PIL as RGB, so convert first
cv2img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
pilimg = Image.fromarray(cv2img)

# Draw Chinese text on the PIL picture
draw = ImageDraw.Draw(pilimg)
# Parameter 1: font file path, parameter 2: font size
font = ImageFont.truetype("simhei.ttf", 20, encoding="utf-8")
# Parameter 1: position, parameter 2: text, parameter 3: fill color (RGB), font: the font object
# (the original passed an extra 1.8 here, which makes ImageDraw.text() raise a TypeError)
draw.text((0, 0), "Hi", (255, 0, 0), font=font)

# PIL picture back to a cv2 (NumPy, BGR) picture
cv2charimg = cv2.cvtColor(np.array(pilimg), cv2.COLOR_RGB2BGR)
# cv2.imshow("picture", cv2charimg)  # Chinese window titles are displayed garbled
cv2.imshow("photo", cv2charimg)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

It should be noted that:

1) When OpenCV reads an image, it stores the color channels in BGR order, while PIL uses RGB. Convert the channel order with cv2.cvtColor(img, cv2.COLOR_BGR2RGB).

2) OpenCV stores the image it reads as a NumPy array, whereas PIL uses its own Image class. To call PIL methods, first convert the NumPy array with pilimg = Image.fromarray(cv2img). Conversely, after PIL processing, convert back to a NumPy array (and back to BGR) before calling OpenCV methods:

cv2charimg = cv2.cvtColor(np.array(pilimg), cv2.COLOR_RGB2BGR).

If you skip the conversion back, OpenCV reports an error: TypeError: Expected cv::UMat for argument 'src'
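Both libraries hold the same pixel data and differ only in channel order, so the BGR/RGB step amounts to reversing the last axis. The following is a minimal NumPy illustration, assuming an 8-bit H x W x 3 image. `bgr_to_rgb` is a hypothetical helper; in real code, `cv2.cvtColor` remains the usual choice.

```python
import numpy as np

def bgr_to_rgb(img: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an H x W x 3 image (BGR -> RGB, or RGB -> BGR)."""
    # img[..., ::-1] is a view with a negative stride; return an ordinary contiguous copy
    return np.ascontiguousarray(img[..., ::-1])

# A 1 x 2 image in OpenCV's BGR order: one pure-blue pixel and one pure-red pixel
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = bgr_to_rgb(bgr)
print(rgb.tolist())
# Reversing again restores the original BGR array
print(np.array_equal(bgr_to_rgb(rgb), bgr))
```

Reversing twice is the identity, which is why one operation serves both directions of the round trip.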

Another common approach uses FreeType:

Download a font in the same way first, e.g. the simhei.ttf above, or msyh.ttf (a quick Baidu search turns up many sources):

```python
# -*- coding: utf-8 -*-
import cv2
import ft2  # separately downloaded helper module (ft2.py); it is not part of OpenCV

img = cv2.imread('pic_url.jpg')
line = 'Hello'
color = (0, 255, 0)  # Green
pos = (3, 3)
text_size = 24

# ft = put_chinese_text('wqy-zenhei.ttc')
ft = ft2.put_chinese_text('msyh.ttf')
image = ft.draw_text(img, pos, line, text_size, color)

name = u'Picture display'
cv2.imshow(name, image)
cv2.waitKey(0)
```

I recommend the first one!

Next, continue license plate detection~

Edge detection may leave many rectangular regions across the image, and the license plate is one of them. (This is a weakness of the program or algorithm, but it does not affect the results.)

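```python
# Depending on the OpenCV version, findContours returns (contours, hierarchy)
# or, as in OpenCV 3, (image, contours, hierarchy)
try:
    contours, hierarchy = cv2.findContours(img_edge2, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
except ValueError:
    image, contours, hierarchy = cv2.findContours(img_edge2, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
# Discard contours too small to be a license plate
contours = [cnt for cnt in contours if cv2.contourArea(cnt) > Min_Area]
```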
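Indexing the result with `[-2]` is a common version-independent alternative to the try/except above. The `Min_Area` filter relies on `cv2.contourArea`, which returns a polygon area. The sketch below reproduces both ideas without OpenCV, assuming contours in OpenCV's (N, 1, 2) point layout. `unpack_contours`, `polygon_area` and the `Min_Area` value are hypothetical.

```python
import numpy as np

def unpack_contours(find_contours_result):
    """Return the contours from either (contours, hierarchy) or (image, contours, hierarchy)."""
    # In both return forms the contours are the second-to-last element
    return find_contours_result[-2]

def polygon_area(cnt) -> float:
    """Unsigned polygon area via the shoelace formula, for points shaped (N, 1, 2)."""
    pts = np.asarray(cnt, dtype=np.float64).reshape(-1, 2)
    x, y = pts[:, 0], pts[:, 1]
    # 0.5 * |sum(x_i * y_{i+1} - x_{i+1} * y_i)|, with indices wrapping around
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

square = np.array([[[0, 0]], [[10, 0]], [[10, 10]], [[0, 10]]], dtype=np.int32)
tiny = np.array([[[0, 0]], [[2, 0]], [[0, 2]]], dtype=np.int32)

Min_Area = 50  # hypothetical value; the project defines its own
contours = unpack_contours(([square, tiny], None))  # mimics the two-value return form
contours = [cnt for cnt in contours if polygon_area(cnt) > Min_Area]
print(len(contours))
```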

Note that cv2.findContours() expects a binary (black-and-white) image, not a grayscale one. Convert the image to grayscale first, and then binarize it!
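Binarization maps every pixel to one of two values. The sketch below mirrors `cv2.threshold(gray, thresh, 255, cv2.THRESH_BINARY)`: pixels strictly greater than `thresh` become 255 and the rest become 0. It is written in NumPy so it runs without OpenCV. `to_binary` is a hypothetical name.

```python
import numpy as np

def to_binary(gray: np.ndarray, thresh: int) -> np.ndarray:
    """Return a 0/255 uint8 image: 255 where gray > thresh, else 0."""
    return np.where(gray > thresh, 255, 0).astype(np.uint8)

gray = np.array([[10, 200],
                 [128, 129]], dtype=np.uint8)
binary = to_binary(gray, 128)
print(binary)
```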

Screening the results (because of the multiple candidates mentioned above):

```python
car_contours = []
for cnt in contours:
    rect = cv2.minAreaRect(cnt)  # ((cx, cy), (w, h), angle)
    area_width, area_height = rect[1]
    if area_width < area_height:
        area_width, area_height = area_height, area_width
    if area_height == 0:  # degenerate rectangle: avoid dividing by zero
        continue
    wh_ratio = area_width / area_height
    # print(wh_ratio)
    # Keep rectangles whose aspect ratio is between 2 and 5.5 (the license plate range);
    # other rectangles are excluded
    if 2 < wh_ratio < 5.5:
        car_contours.append(rect)
        box = cv2.boxPoints(rect)
        box = np.intp(box)  # the original used np.int0, which is not available in NumPy 2.x
```
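The screening rule only needs the rectangle's side lengths. Below is a pure-Python sketch of the same test, assuming the `((cx, cy), (w, h), angle)` tuple layout that `minAreaRect` returns. `filter_plate_rects` is hypothetical; the 2 to 5.5 range comes from the code above.

```python
def filter_plate_rects(rects, min_ratio=2.0, max_ratio=5.5):
    """Keep rotated rectangles whose long side / short side ratio lies strictly between the bounds."""
    kept = []
    for rect in rects:
        w, h = rect[1]
        long_side, short_side = max(w, h), min(w, h)
        if short_side == 0:  # degenerate rectangle: skip instead of dividing by zero
            continue
        if min_ratio < long_side / short_side < max_ratio:
            kept.append(rect)
    return kept

rects = [
    ((50, 50), (140, 40), -5.0),   # ratio 3.5: plate-like
    ((80, 80), (30, 120), -85.0),  # ratio 4.0 once the sides are swapped
    ((10, 10), (50, 50), 0.0),     # ratio 1.0: a square
    ((20, 20), (300, 20), 0.0),    # ratio 15.0: far too elongated
    ((5, 5), (0, 10), 0.0),        # zero-height rectangle
]
plates = filter_plate_rects(rects)
print([r[1] for r in plates])
```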

next:

The rectangles may be slanted, so they need to be corrected before color positioning can be applied:

```python
# oldimg, pic_width, pic_hight and point_limit() are defined elsewhere in the project
card_imgs = []
for rect in car_contours:
    # Avoid an angle near 0 so the left/high/right/low points below are well defined
    # (the angle range returned by minAreaRect depends on the OpenCV version)
    if -1 < rect[2] < 1:
        angle = 1
    else:
        angle = rect[2]
    # Enlarge the rectangle slightly so the plate border is not cut off
    rect = (rect[0], (rect[1][0] + 5, rect[1][1] + 5), angle)
    box = cv2.boxPoints(rect)
    heigth_point = right_point = [0, 0]
    left_point = low_point = [pic_width, pic_hight]
    for point in box:
        if left_point[0] > point[0]:
            left_point = point
        if low_point[1] > point[1]:
            low_point = point
        if heigth_point[1] < point[1]:
            heigth_point = point
        if right_point[0] < point[0]:
            right_point = point

    if left_point[1] <= right_point[1]:  # positive angle
        new_right_point = [right_point[0], heigth_point[1]]
        pts2 = np.float32([left_point, heigth_point, new_right_point])  # only the height changes
        pts1 = np.float32([left_point, heigth_point, right_point])
        M = cv2.getAffineTransform(pts1, pts2)
        dst = cv2.warpAffine(oldimg, M, (pic_width, pic_hight))
        point_limit(new_right_point)
        point_limit(heigth_point)
        point_limit(left_point)
        card_img = dst[int(left_point[1]):int(heigth_point[1]), int(left_point[0]):int(new_right_point[0])]
        card_imgs.append(card_img)
    elif left_point[1] > right_point[1]:  # negative angle
        new_left_point = [left_point[0], heigth_point[1]]
        pts2 = np.float32([new_left_point, heigth_point, right_point])  # only the height changes
        pts1 = np.float32([left_point, heigth_point, right_point])
        M = cv2.getAffineTransform(pts1, pts2)
        dst = cv2.warpAffine(oldimg, M, (pic_width, pic_hight))
        point_limit(right_point)
        point_limit(heigth_point)
        point_limit(new_left_point)
        card_img = dst[int(right_point[1]):int(heigth_point[1]), int(new_left_point[0]):int(right_point[0])]
        card_imgs.append(card_img)
```
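`cv2.getAffineTransform` finds the 2 x 3 matrix that maps three source points exactly onto three destination points. The NumPy sketch below solves the same 3 x 3 linear system to show what the correction does in the "positive angle" branch: the left and high points stay put, and the right point moves to the high point's y. `affine_from_3_points` and the sample coordinates are hypothetical.

```python
import numpy as np

def affine_from_3_points(src, dst) -> np.ndarray:
    """Return the 2 x 3 matrix M with M @ [x, y, 1] == [x', y'] for three point pairs."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    A = np.hstack([src, np.ones((3, 1))])  # each row is [x, y, 1]
    # A @ M.T == dst, so solve for M.T (3 x 2) and transpose it
    return np.linalg.solve(A, dst).T

# "Positive angle" case: the left point has a smaller y than the right point
left_point, heigth_point, right_point = (10.0, 20.0), (12.0, 60.0), (150.0, 40.0)
new_right_point = (right_point[0], heigth_point[1])  # same x, moved to the high point's y

pts1 = [left_point, heigth_point, right_point]
pts2 = [left_point, heigth_point, new_right_point]
M = affine_from_3_points(pts1, pts2)
print(M @ np.array([*right_point, 1.0]))  # lands on new_right_point
```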

Next, color positioning is used to exclude rectangles that are not license plates. Currently, only blue, green and yellow plates are recognized.

```python
colors = []
for card_index, card_img in enumerate(card_imgs):
    green = yello = blue = black = white = 0
    # The correction above may produce an empty crop, and cvtColor raises an error on empty input
    if card_img is None or card_img.size == 0:
        colors.append("no")  # keep colors aligned with card_imgs
        continue
    card_img_hsv = cv2.cvtColor(card_img, cv2.COLOR_BGR2HSV)
    row_num, col_num = card_img_hsv.shape[:2]
    card_img_count = row_num * col_num

    for i in range(row_num):
        for j in range(col_num):
            H = card_img_hsv.item(i, j, 0)
            S = card_img_hsv.item(i, j, 1)
            V = card_img_hsv.item(i, j, 2)
            if 11 < H <= 34 and S > 34:  # thresholds may need tuning for the image resolution
                yello += 1
            elif 35 < H <= 99 and S > 34:
                green += 1
            elif 99 < H <= 124 and S > 34:
                blue += 1

            if 0 < H < 180 and 0 < S < 255 and 0 < V < 46:
                black += 1
            elif 0 < H < 180 and 0 < S < 43 and 221 < V < 225:
                white += 1

    color = "no"
    limit1 = limit2 = 0
    if yello * 2 >= card_img_count:
        color = "yello"
        limit1 = 11
        limit2 = 34  # some pictures look greenish
    elif green * 2 >= card_img_count:
        color = "green"
        limit1 = 35
        limit2 = 99
    elif blue * 2 >= card_img_count:
        color = "blue"
        limit1 = 100
        limit2 = 124  # some pictures look purplish
    elif black + white >= card_img_count * 0.7:  # TODO
        color = "bw"
    print(color)
    colors.append(color)
    print(blue, green, yello, black, white, card_img_count)
    cv2.imshow("color", card_img)
    cv2.waitKey(1110)
    if limit1 == 0:
        continue

    # The above determines the plate color.
    # Below, re-locate the plate by its color to trim borders that are not part of the plate
    # (accurate_place() is a method of the project's class)
    xl, xr, yh, yl = self.accurate_place(card_img_hsv, limit1, limit2, color)
    if yl == yh and xl == xr:
        continue
    need_accurate = False
    if yl >= yh:
        yl = 0
        yh = row_num
        need_accurate = True
    if xl >= xr:
        xl = 0
        xr = col_num
        need_accurate = True
    card_imgs[card_index] = card_img[yl:yh, xl:xr] if color != "green" or yl < (yh - yl) // 4 else card_img[yl - (yh - yl) // 4:yh, xl:xr]
    if need_accurate:  # the x or y direction may not have been trimmed yet; try again
        card_img = card_imgs[card_index]
        card_img_hsv = cv2.cvtColor(card_img, cv2.COLOR_BGR2HSV)
        xl, xr, yh, yl = self.accurate_place(card_img_hsv, limit1, limit2, color)
        print('size', xl, xr, yh, yl)
        if yl == yh and xl == xr:
            continue
        if yl >= yh:
            yl = 0
            yh = row_num
        if xl >= xr:
            xl = 0
            xr = col_num
        card_imgs[card_index] = card_img[yl:yh, xl:xr] if color != "green" or yl < (yh - yl) // 4 else card_img[yl - (yh - yl) // 4:yh, xl:xr]
```
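The per-pixel double loop is easy to read but slow in Python. Below is a vectorized NumPy sketch of the same hue counting and the "at least half of the pixels" rule. It assumes OpenCV's 8-bit HSV ranges (H in 0..179) and uses the thresholds from the code above. It leaves out the black/white branch, and `count_plate_colors` and `classify_plate_color` are hypothetical names.

```python
import numpy as np

def count_plate_colors(hsv: np.ndarray) -> dict:
    """Count pixels per plate hue range, as the double loop does (8-bit OpenCV HSV)."""
    H = hsv[..., 0]
    S = hsv[..., 1]
    saturated = S > 34
    # The three hue ranges do not overlap, so independent masks match the if/elif chain
    return {
        "yello": int(((H > 11) & (H <= 34) & saturated).sum()),
        "green": int(((H > 35) & (H <= 99) & saturated).sum()),
        "blue": int(((H > 99) & (H <= 124) & saturated).sum()),
    }

def classify_plate_color(hsv: np.ndarray) -> str:
    """Return the first color covering at least half of the pixels, in the same order as above."""
    counts = count_plate_colors(hsv)
    total = hsv.shape[0] * hsv.shape[1]
    for name in ("yello", "green", "blue"):
        if counts[name] * 2 >= total:
            return name
    return "no"  # the black/white ("bw") branch is left out of this sketch

hsv = np.zeros((4, 10, 3), dtype=np.uint8)
hsv[..., 0] = 110   # hue in the blue range
hsv[..., 1] = 200   # well saturated
hsv[..., 2] = 180
hsv[:, :3, 0] = 20  # three columns with a yellow hue
counts = count_plate_colors(hsv)
print(counts, classify_plate_color(hsv))
```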


Here comes the core part. Let's explain it in detail:

```python
predict_result = []
roi = None
card_color = None
for i, color in enumerate(colors):
    if color in ("blue", "yello", "green"):
        card_img = card_imgs[i]
        gray_img = cv2.cvtColor(card_img, cv2.COLOR_BGR2GRAY)
        # Characters on yellow and green plates are darker than the background, the opposite
        # of blue plates, so yellow and green plates are inverted
        if color == "green" or color == "yello":
            gray_img = cv2.bitwise_not(gray_img)
        ret, gray_img = cv2.threshold(gray_img, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)

        # Find the peaks of the horizontal projection (sum of each row)
        x_histogram = np.sum(gray_img, axis=1)
        x_min = np.min(x_histogram)
        x_average = np.sum(x_histogram) / x_histogram.shape[0]
        x_threshold = (x_min + x_average) / 2
        wave_peaks = find_waves(x_threshold, x_histogram)
        if len(wave_peaks) == 0:
            print("peak less 0:")
            continue
        # Assume the widest horizontal peak is the plate area
        wave = max(wave_peaks, key=lambda x: x[1] - x[0])
        gray_img = gray_img[wave[0]:wave[1]]

        # Find the peaks of the vertical projection (sum of each column)
        row_num, col_num = gray_img.shape[:2]
        # Drop one pixel row at the top and bottom so the white border does not skew the threshold
        gray_img = gray_img[1:row_num - 1]
        y_histogram = np.sum(gray_img, axis=0)
        y_min = np.min(y_histogram)
        y_average = np.sum(y_histogram) / y_histogram.shape[0]
        y_threshold = (y_min + y_average) / 5  # U and 0 need a smaller threshold, otherwise they split in two
        wave_peaks = find_waves(y_threshold, y_histogram)
        # for wave in wave_peaks:
        #     cv2.line(card_img, pt1=(wave[0], 5), pt2=(wave[1], 5), color=(0, 0, 255), thickness=2)

        # A plate should yield more than 6 character segments
        if len(wave_peaks) <= 6:
            print("peak less 1:", len(wave_peaks))
            continue
        wave = max(wave_peaks, key=lambda x: x[1] - x[0])
        max_wave_dis = wave[1] - wave[0]
        # Drop the first peak if it looks like the left plate border
        if wave_peaks[0][1] - wave_peaks[0][0] < max_wave_dis / 3 and wave_peaks[0][0] == 0:
            wave_peaks.pop(0)

        # Merge the separated pieces of the Chinese character
        cur_dis = 0
        for j, wave in enumerate(wave_peaks):
            if wave[1] - wave[0] + cur_dis > max_wave_dis * 0.6:
                break
            else:
                cur_dis += wave[1] - wave[0]
        if j > 0:
            wave = (wave_peaks[0][0], wave_peaks[j][1])
            wave_peaks = wave_peaks[j + 1:]
            wave_peaks.insert(0, wave)

        # Remove the separator dot on the plate (the third segment)
        point = wave_peaks[2]
        if point[1] - point[0] < max_wave_dis / 3:
            point_img = gray_img[:, point[0]:point[1]]
            if np.mean(point_img) < 255 / 5:
                wave_peaks.pop(2)

        if len(wave_peaks) <= 6:
            print("peak less 2:", len(wave_peaks))
            continue
        part_cards = seperate_card(gray_img, wave_peaks)
        for k, part_card in enumerate(part_cards):
            # Possibly a rivet that fixes the plate
            if np.mean(part_card) < 255 / 5:
                print("a point")
                continue
            part_card_old = part_card
            # Pad left and right, then resize to SZ x SZ
            w = abs(part_card.shape[1] - SZ) // 2
            part_card = cv2.copyMakeBorder(part_card, 0, 0, w, w, cv2.BORDER_CONSTANT, value=[0, 0, 0])
            part_card = cv2.resize(part_card, (SZ, SZ), interpolation=cv2.INTER_AREA)
            # part_card = deskew(part_card)
            part_card = preprocess_hog([part_card])
            if k == 0:
                # The first character is the Chinese province abbreviation
                resp = self.modelchinese.predict(part_card)
                charactor = provinces[int(resp[0]) - PROVINCE_START]
            else:
                resp = self.model.predict(part_card)
                charactor = chr(int(resp[0]))  # the SVM label may be a float; chr() needs an int
            # Check whether a trailing "1" is really the plate edge
            if charactor == "1" and k == len(part_cards) - 1:
                if part_card_old.shape[0] / part_card_old.shape[1] >= 7:  # too thin: treat as the edge
                    continue
            predict_result.append(charactor)
        roi = card_img
        card_color = color
        break

# End of CardPredictor.predict(): recognized characters, located plate image, plate color
return predict_result, roi, card_color
```
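With `cv2.THRESH_OTSU`, the threshold passed in (0 above) is ignored. Instead, one is chosen automatically by maximizing the between-class variance of the gray-level histogram. Below is a slow but readable NumPy sketch of that idea, for illustration only. `otsu_threshold` is hypothetical, and when several thresholds tie it may pick a different value than OpenCV does.

```python
import numpy as np

def otsu_threshold(gray: np.ndarray) -> int:
    """Return t maximizing the between-class variance of the split gray <= t / gray > t."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    levels = np.arange(256, dtype=np.float64)
    total = hist.sum()
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 = hist[:t + 1].sum()  # pixel count of the dark class
        w1 = total - w0          # pixel count of the bright class
        if w0 == 0 or w1 == 0:
            continue
        mu0 = (levels[:t + 1] * hist[:t + 1]).sum() / w0
        mu1 = (levels[t + 1:] * hist[t + 1:]).sum() / w1
        between_var = w0 * w1 * (mu0 - mu1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t

# Bimodal toy image: dark background (50) with a bright 4 x 6 block (200)
gray = np.full((10, 10), 50, dtype=np.uint8)
gray[3:7, 2:8] = 200
t = otsu_threshold(gray)
binary = np.where(gray > t, 255, 0).astype(np.uint8)
print(t, int((binary == 255).sum()))
```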

The code is partly commented. Roughly speaking:

This part recognizes the characters on the license plate.

gray_img = cv2.bitwise_not(gray_img)

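cv2.bitwise_not inverts every pixel, so the dark characters on a light background (yellow and green plates) become light characters on a dark background, just like blue plates, and the same segmentation code can handle all three colors. Bitwise operations are also the basis of the mask method, which will be introduced separately later: cut out the area of the original image where a logo should go, and then put the logo in it.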
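On 8-bit images, `cv2.bitwise_not` flips every bit, which is the same as computing `255 - value`. The NumPy sketch below needs no OpenCV and uses a hypothetical row of a yellow or green plate. It shows light background pixels becoming dark and dark stroke pixels becoming light.

```python
import numpy as np

# One grayscale row of a yellow/green plate: light background (220), dark character strokes (30)
row = np.array([[220, 220, 30, 30, 220]], dtype=np.uint8)
inverted = np.bitwise_not(row)  # same result as cv2.bitwise_not for uint8 data
print(inverted)
```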

find_waves finds the peaks (runs above the given threshold) in a projection histogram; these peaks are used to separate the characters:

```python
def find_waves(threshold, histogram):
    up_point = -1  # rising point
    is_peak = False
    if histogram[0] > threshold:
        up_point = 0
        is_peak = True
    wave_peaks = []
    for i, x in enumerate(histogram):
        if is_peak and x < threshold:
            if i - up_point > 2:
                is_peak = False
                wave_peaks.append((up_point, i))
        elif not is_peak and x >= threshold:
            is_peak = True
            up_point = i
    if is_peak and up_point != -1 and i - up_point > 4:
        wave_peaks.append((up_point, i))
    return wave_peaks
```

seperate_card then cuts the image at the peaks found, giving one picture per character:

```python
def seperate_card(img, waves):
    part_cards = []
    for wave in waves:
        part_cards.append(img[:, wave[0]:wave[1]])
    return part_cards
```
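To see how `find_waves` and `seperate_card` work together, the sketch below runs them on a hypothetical 5 x 14 binary strip containing three white strokes. It uses the same column-projection threshold rule as the core code, (min + average) / 5. Both functions are copied from above so the block runs on its own.

```python
import numpy as np

def find_waves(threshold, histogram):
    # Copied from above
    up_point = -1  # rising point
    is_peak = False
    if histogram[0] > threshold:
        up_point = 0
        is_peak = True
    wave_peaks = []
    for i, x in enumerate(histogram):
        if is_peak and x < threshold:
            if i - up_point > 2:
                is_peak = False
                wave_peaks.append((up_point, i))
        elif not is_peak and x >= threshold:
            is_peak = True
            up_point = i
    if is_peak and up_point != -1 and i - up_point > 4:
        wave_peaks.append((up_point, i))
    return wave_peaks

def seperate_card(img, waves):
    # Copied from above
    part_cards = []
    for wave in waves:
        part_cards.append(img[:, wave[0]:wave[1]])
    return part_cards

# Hypothetical 5 x 14 binary strip with three 3-pixel-wide white strokes ("characters")
img = np.zeros((5, 14), dtype=np.uint8)
for start in (1, 6, 10):
    img[:, start:start + 3] = 255

y_histogram = np.sum(img, axis=0)  # column sums (vertical projection)
y_min = np.min(y_histogram)
y_average = np.sum(y_histogram) / y_histogram.shape[0]
y_threshold = (y_min + y_average) / 5
wave_peaks = find_waves(y_threshold, y_histogram)
parts = seperate_card(img, wave_peaks)
print(wave_peaks, [p.shape for p in parts])
```

Each `(start, end)` pair is a half-open column range, so the slices in `seperate_card` contain exactly the stroke columns.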

```python
def deskew(img):
    # Straighten a slanted character image; SZ (the character size) is defined elsewhere in the project
    m = cv2.moments(img)
    if abs(m['mu02']) < 1e-2:
        return img.copy()
    skew = m['mu11'] / m['mu02']
    M = np.float32([[1, skew, -0.5 * SZ * skew], [0, 1, 0]])
    img = cv2.warpAffine(img, M, (SZ, SZ), flags=cv2.WARP_INVERSE_MAP | cv2.INTER_LINEAR)
    return img
```

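Here, m = cv2.moments(img) computes the image moments. deskew uses the ratio mu11/mu02 as the slant of the character and shears the image upright; if mu02 is nearly zero, it returns the image unchanged. Moment calculation will be introduced in the next issue.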
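Central moments can be computed directly from their definition, $\mu_{pq} = \sum (x - \bar{x})^p (y - \bar{y})^q I(x, y)$, with pixel values as weights; this is how `cv2.moments` treats a non-binary image. The NumPy sketch below computes mu11 and mu02. It shows that their ratio, used as `skew` in `deskew`, equals the horizontal shift per row of a slanted stroke. `skew_from_moments` is a hypothetical helper.

```python
import numpy as np

def skew_from_moments(img: np.ndarray) -> float:
    """Return mu11 / mu02: the horizontal shift per row of the image's mass."""
    w = img.astype(np.float64)
    ys, xs = np.mgrid[:w.shape[0], :w.shape[1]]
    m00 = w.sum()
    cx = (xs * w).sum() / m00  # centroid x
    cy = (ys * w).sum() / m00  # centroid y
    mu11 = ((xs - cx) * (ys - cy) * w).sum()
    mu02 = (((ys - cy) ** 2) * w).sum()
    return mu11 / mu02

# A stroke slanted by one pixel per row: pixels at (row y, column 2 + y)
slanted = np.zeros((5, 8), dtype=np.uint8)
for y in range(5):
    slanted[y, 2 + y] = 255

# A perfectly vertical stroke in column 3
vertical = np.zeros((5, 8), dtype=np.uint8)
vertical[:, 3] = 255

print(skew_from_moments(slanted), skew_from_moments(vertical))
```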

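Finally, the results are screened. This is the second half of the core code above: the plate must yield more than 6 character segments, a narrow segment at the left edge is dropped, the pieces of the Chinese character are merged, the separator dot and rivets are discarded, and a very thin trailing "1" is treated as the plate edge.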


The function returns the recognized characters, the located license plate image and the plate color.
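A province character often splits into several narrow peaks in the column projection. The merging step in the screening code can be isolated as a pure-Python sketch, assuming peaks given as `(start, end)` column pairs. `merge_leading_pieces` and the sample peaks are hypothetical; the 0.6 factor comes from the code above.

```python
def merge_leading_pieces(wave_peaks, ratio=0.6):
    """Merge the leading narrow peaks into one segment, as the screening code does.

    Widths are accumulated from the left; the first piece that would push the running
    width above ratio * (widest peak) is the last one included in the merged segment.
    """
    if not wave_peaks:
        return []
    max_wave_dis = max(w[1] - w[0] for w in wave_peaks)
    cur_dis = 0
    for j, wave in enumerate(wave_peaks):
        if wave[1] - wave[0] + cur_dis > max_wave_dis * ratio:
            break
        cur_dis += wave[1] - wave[0]
    if j > 0:
        return [(wave_peaks[0][0], wave_peaks[j][1])] + list(wave_peaks[j + 1:])
    return list(wave_peaks)

# Two narrow pieces of a split character, followed by three full-width characters
peaks = [(0, 4), (5, 9), (12, 22), (25, 35), (38, 48)]
print(merge_leading_pieces(peaks))
```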

main function:

```python
if __name__ == '__main__':
    c = CardPredictor()
    c.train_svm()
    r, roi, color = c.predict("test//car7.jpg")
    print(r, roi.shape[0], roi.shape[1], roi.shape[2])

    img = cv2.imread("test//car7.jpg")
    img = cv2.resize(img, (480, 640), interpolation=cv2.INTER_LINEAR)
    r = ','.join(r)
    r = r.replace(',', '')
    print(r)

    from PIL import Image, ImageDraw, ImageFont
    # cv2 stores color channels as BGR and PIL as RGB
    cv2img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    pilimg = Image.fromarray(cv2img)
    # Draw Chinese characters on the PIL picture
    draw = ImageDraw.Draw(pilimg)
    # Parameter 1: font file path, parameter 2: font size
    font = ImageFont.truetype("simhei.ttf", 30, encoding="utf-8")
    # Parameter 1: position, parameter 2: text, parameter 3: fill color, font: the font object
    draw.text((0, 0), r, (255, 0, 0), font=font)
    # PIL picture back to a cv2 picture
    cv2charimg = cv2.cvtColor(np.array(pilimg), cv2.COLOR_RGB2BGR)
    # cv2.imshow("picture", cv2charimg)  # Chinese window titles are displayed garbled
    cv2.imshow("photo", cv2charimg)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
```

Finally, I want to explain: the code is not my original work; it comes from a friend's graduation project and will be open-sourced later. Please follow the blogger, thank you!

To summarize:

Train the SVM (SVC) model with OpenCV → acquire images with OpenCV (or control a camera) → preprocess the image (binarization, edge detection, etc.) → locate the license plate and correct its tilt → determine the plate color → re-locate the plate by its color to trim edges that are not part of the plate → recognize the characters on the plate → predict returns the recognized characters, the plate image and the plate color → display the result, using PIL to draw Chinese text.

Finally, as far as my bug-hunting goes, I have found quite a few bugs. Still, there is no denying that this machine learning project is well written. I could not achieve this result in a short time, although it is not too hard to write once the logic is clear. That said, the SVM-based approach and the data preprocessing still have clear weaknesses, the biggest being accuracy and underfitting. These are the problems of my project, and switching to deep learning should make it much better!

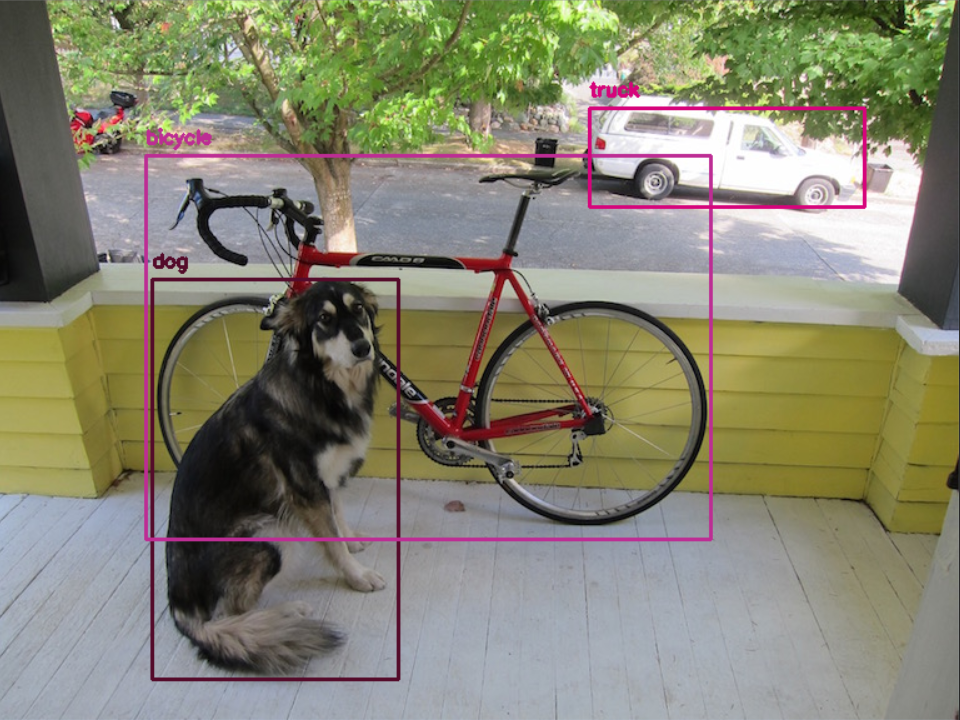

That's all for now. Next issue: getting to know CVLIB. Don't forget to like and follow the blogger; writing takes effort, so let's make progress together!

You are welcome to join my WeChat group for machine learning and deep learning discussions; everyone is welcome! Add me on WeChat and let's make progress together.

Zhou Xiaoxia, sophomore in Intelligent Science and Technology, Shanghai No.2 University of Technology