Original pickle seven, reprint please indicate the source.

Methods used: Mysql database, python language, regular expression re

With the comment url obtained (url method to get JD comments) , now we can crawl the user nickname and url. As a beginner, I use the regular expression to match the url data.

1, According to the content, write the appropriate regular expression

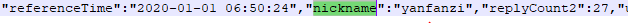

By analyzing the data of the opened url address, we can find two laws needed:

1. User nickname section:

Therefore, our regular expressions for extracting users are as follows:

r'\"nickname\":\"([^",]+)\",\"replyCount2\"'

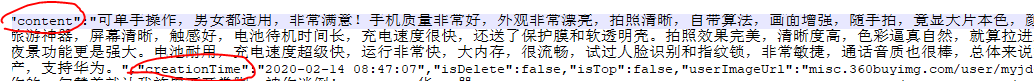

2. Comments:

In the comment part, there will be no follow-up comments, resulting in inconsistent ending:

Situation 1:

Situation two:

The regular expression for matching comments is:

r'\"content\":\"([^"]+)\",\"(?:creationTime|vcontent)\"'

2, Main code of crawling data:

Code skill is not good, don't abandon it, and hurry up, just use it, ha ha ha ha ha ha ha ha ha

Imported libraries:

import requests import re import pymysql

The first part

In this section, I'm assembling the url and looping through four kinds of comments. Then each class circulates the page number, so that it can read the url data of each class and page, and then call the method to crawl. I have created four tables of the database and stored them separately. If there is no such requirement, it would be nice to save one table.

if __name__ == "__main__": #Every time you change a product, remember to change the name of the table you want to store. table_list = creat_table("comment33") #Create 4 database tables and get the list for index in range(4): #Build URLs for four kinds of comments print("The current stage in is......................................................................"+ str(index)) '''All: 0, poor: 1, medium: 2, good: 3''' #Every time you change a product, you only need to modify the url of the base ﹣ url ﹣ Part1 part. The latter parts do not need to be modified. base_url_part1 = "https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=100007651578&score=" base_url_part2 = str(index) base_url_part3 = "&sortType=5&page=" base_url_part4 = "0" #This is the comment page number base_url_part5 = "&pageSize=10" base_url = base_url_part1 + base_url_part2 + base_url_part3 +base_url_part4 + base_url_part5 commentCount = get_CommentCount(base_url) #Get all comments pagenum_max = int(commentCount / 10 ) #Jd.com sets the number of comments for 10 users per page, and calculates the number of comment pages (even if there are many total comments, the website also provides limited comments) for pagenum in range(pagenum_max): print("current pagenum is ..............: " + str(pagenum)) '''pagenum Page number, cycle through each page, get data''' url = base_url_part1 + base_url_part2 + base_url_part3 + str(pagenum) + base_url_part5 fake_headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.79 Safari/537.36' } response = requests.get(url, headers = fake_headers) print(response.status_code) try: page_connent = response.content.decode('gbk') except UnicodeDecodeError as err: print("catch UnicodeDecodeError.....!") continue # page_connent = response.content.decode('gbk') currentPage_user , currentPage_comment = get_comment(page_connent) if currentPage_user == [] or currentPage_comment == []: #If the user nickname does not exist, it can be considered that there is no comment, and execution is terminated break input_database(currentPage_user , currentPage_comment, table_list[index]) #Store in database

The second part: the code automatically creates four database tables.

The main idea is to be lazy. At that time, the comments of a product were put into one category, so many database tables would be built, so the code would create them. Of course, if there is no demand, a table can store tens of thousands of data.

def creat_table(table_baseName): '''Realize automatic creation of database tables (4), avoid manual creation, table_name Table name''' table_list = [] #Assemble sql statements and create 4 tables sql_part1 = "CREATE TABLE IF NOT EXISTS `" # sql_part2 = "comment6" sql_part3 = "`( `id` INT UNSIGNED AUTO_INCREMENT,\ `product` VARCHAR(100) ,\ `username` VARCHAR(40),\ `comment` LONGTEXT,\ PRIMARY KEY ( `id` )\ )ENGINE=InnoDB DEFAULT CHARSET=gbk;" for i in range(4): db = pymysql.connect(host="localhost",user="root",password="123456",db="jdcomment",charset="utf8") cur = db.cursor() #Remember to change every time you create a table sql_part2 = table_baseName if i ==0 : sql_part2 = sql_part2 elif i== 1: sql_part2 = sql_part2 + '_bad' elif i== 2: sql_part2 = sql_part2 + '_middle' elif i== 3: sql_part2 = sql_part2 + '_good' table_list.append(sql_part2) sql = sql_part1 + sql_part2 + sql_part3 cur.execute(sql) #Execute sql statement to create 4 tables print('table creat......!') db.commit() cur.close() db.close() return(table_list) #Returns a list of four database table names

Part 3: total number of comments obtained

At that time, I thought I saw hundreds of thousands of commodities and I thought I could climb them down. Unexpectedly, you can see 100 pages at most, that is, 1000 comments. There are only a few hundred comments, so you need to give a reference value to the loop. The number of comments is in the data returned from the request url. I used regular expressions to match them.

def get_CommentCount(base_url): '''Total number of comments obtained''' fake_headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.79 Safari/537.36' } response = requests.get(base_url, headers = fake_headers) all_content = response.content.decode('gbk') # "commentCount":698813, re_model = re.compile(r'\"commentCount\":([^,]+),') result_list = re_model.findall(all_content) commentCount = int(result_list[0]) return(commentCount)

Part four: matching user nicknames and comments

Using regular expression matching, we get a list of these two kinds of data.

def get_comment(page_connent): '''Match to get user nicknames and comments,''' remodel_user = re.compile(r'\"nickname\":\"([^",]+)\",\"replyCount2\"') #Match user nickname username_list = remodel_user.findall(page_connent) remodel_comment = re.compile(r'\"content\":\"([^"]+)\",\"(?:creationTime|vcontent)\"') #Matching comments comment_list = remodel_comment.findall(page_connent) # print(username_list) # print(len(comment_list)) # print(comment_list) return username_list, comment_list

Part V: storage in database

Save the user's nickname and comments to our MySQL database for manufacturing.

def input_database(currentPage_user , currentPage_comment, table_name): db = pymysql.connect(host="localhost",user="root",password="123456",db="jdcomment",charset="utf8") cur = db.cursor() for i in range(10): '''The list,Take out a single item and store it in the database one by one''' try: username = currentPage_user[i] comment = currentPage_comment[i] #Assembling sql statements sql_part1 = 'INSERT INTO ' sql_part2 = table_name sql_part3 = '(username, comment) VALUES ("'"%s"'", "'"%s"'");' sql = sql_part1 + sql_part2 + sql_part3 #Assemble sql statements and store them in different databases sql = sql%(username, comment) cur.execute(sql) print('data input ......ok!') db.commit() except IndexError as err: print("list index out of range") continue cur.close() db.close() print("to next pagenum.......!")

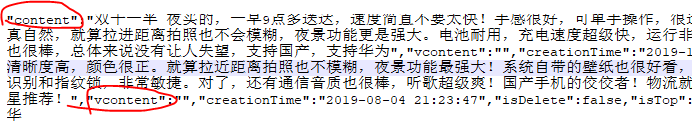

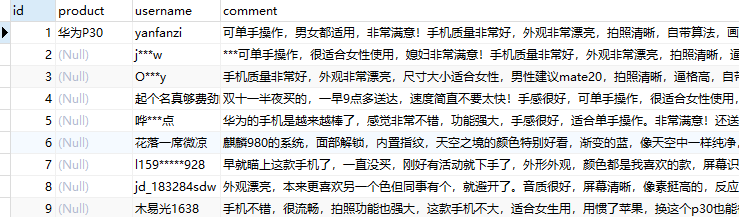

Part VI: results stored in the database

The database automatically creates four tables at a time, with the name determined by the base table name.

Data in the table:

(product, this part, I annotated one myself, not written in code)

Summary: for the above code, there is no problem running and using it. If the network is not good, it may be very slow to request url in the later stage. This is your network problem.. What I designed is that different products and different categories of comments are stored in different tables. When using the code, I only need to modify the basic table name passed in by the create table ("comment33") function in the "first part" code and the url fragment of "base uurl uupart1".