What is an interrupt

An interrupt is a mechanism by which an external device notifies the CPU that it has completed some work (for example, a hard disk signals the CPU with an interrupt once a read or write operation has finished). Early computers without an interrupt mechanism had to poll external devices to query their status. Because polling is speculative (the device is not necessarily ready when it is queried), many of the queries are wasted, which makes polling very inefficient. With interrupts, the external device actively notifies the CPU, so the CPU does not need to poll and efficiency improves greatly.

Physically, an interrupt is an electrical signal generated by a hardware device and sent to an input pin of the interrupt controller (such as the 8259A), which in turn sends a corresponding signal to the processor. Once the processor detects the signal, it suspends the work it is currently doing and handles the interrupt instead. The processor then notifies the OS that an interrupt has occurred so that the OS can handle it properly. Different devices use different interrupts, and each interrupt is identified by a unique number. These numbers are usually called interrupt request (IRQ) lines.

Interrupt controller

An x86 CPU provides only two external pins for interrupts: NMI and INTR. NMI carries non-maskable interrupts, which are typically used for events such as power failure and physical memory parity errors. INTR carries maskable interrupts, which can be masked by setting the interrupt mask bit. INTR is mainly used to receive interrupt signals from external hardware, which are delivered to the CPU by the interrupt controller.

There are two common interrupt controllers:

Programmable Interrupt Controller 8259A

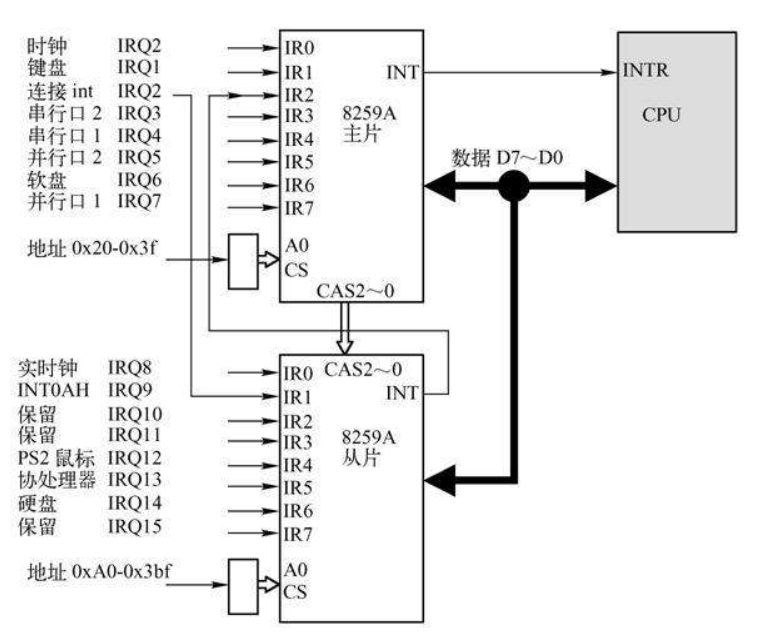

The traditional PIC (Programmable Interrupt Controller) consists of two 8259A-style external chips connected in a "cascade". Each chip can handle up to 8 different IRQs. Because the INT output line of the slave PIC is connected to the IRQ2 pin of the master PIC, the number of available IRQ lines is 15, as shown in the figure below.

8259A

Advanced programmable interrupt controller (APIC)

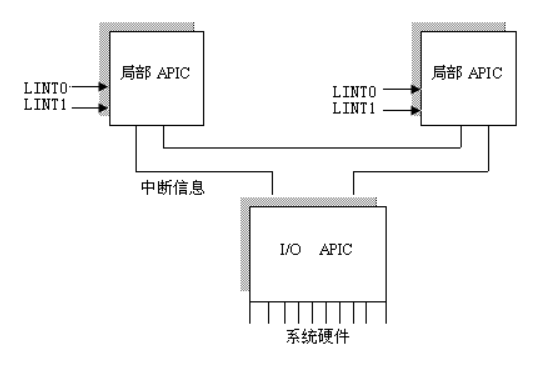

The 8259A is only suitable for single-CPU systems. To exploit the parallelism of an SMP architecture, interrupts must be deliverable to every CPU in the system. For this reason, Intel introduced a new component, the Advanced Programmable Interrupt Controller (APIC), to replace the old 8259A Programmable Interrupt Controller. It consists of two parts. The first is the "local APIC", which is mainly responsible for delivering interrupt signals to a specific processor; for example, a machine with three processors must have three local APICs. The second is the I/O APIC, which collects interrupt signals from I/O devices and forwards them to the local APICs when those devices raise an interrupt. A system can have up to 8 I/O APICs.

Each local APIC has 32-bit registers, an internal clock, a local timer device, and two additional IRQ lines, LINT0 and LINT1, reserved for local interrupts. All local APICs are connected to the I/O APICs to form a multi-APIC system, as shown in the figure below.

APIC

At present, most single-processor systems also contain an I/O APIC chip, which can be configured in one of two ways:

- As a standard 8259A-style PIC. The local APIC is disabled, the external I/O APIC is connected to the CPU, and the LINT0 and LINT1 lines are connected to the INTR and NMI pins respectively.

- As a standard external I/O APIC. The local APIC is enabled and all external interrupts are received through the I/O APIC.

To check whether a system is using an I/O APIC, run the following command:

# cat /proc/interrupts

CPU0

0: 90504 IO-APIC-edge timer

1: 131 IO-APIC-edge i8042

8: 4 IO-APIC-edge rtc

9: 0 IO-APIC-level acpi

12: 111 IO-APIC-edge i8042

14: 1862 IO-APIC-edge ide0

15: 28 IO-APIC-edge ide1

177: 9 IO-APIC-level eth0

185: 0 IO-APIC-level via82cxxx

...

If IO-APIC appears in the output, your system is using the APIC. If you see XT-PIC instead, your system is using the 8259A chip.

Interrupt classification

Interrupts can be divided into synchronous interrupts and asynchronous interrupts:

- Synchronous interrupts are generated by the CPU control unit while executing instructions. They are called synchronous because the CPU raises them only after an instruction has finished executing, never in the middle of one. System calls are an example.

- Asynchronous interrupts are generated by other hardware devices at arbitrary times with respect to the CPU clock, so they can arrive between any two instructions. Keyboard interrupts are an example.

In Intel's terminology, synchronous interrupts are called exceptions, and asynchronous interrupts are called interrupts.

Interrupts can be further divided into maskable and non-maskable interrupts. Exceptions can be divided into faults, traps, and aborts.

Broadly speaking, then, there are four categories: interrupt, trap, fault, and abort. The table below summarizes their similarities and differences.

Table: interrupt categories and their behaviors

| Category | Cause | Asynchronous / synchronous | Return behavior |
|---|---|---|---|
| Interrupt | Signal from an I/O device | Asynchronous | Always returns to the next instruction |
| Trap | Intentional exception | Synchronous | Always returns to the next instruction |
| Fault | Potentially recoverable error | Synchronous | Returns to the current instruction |
| Abort | Unrecoverable error | Synchronous | Does not return |

Each interrupt and exception in the x86 architecture is assigned a unique number, called a vector (an 8-bit unsigned integer). The vectors of non-maskable interrupts and exceptions are fixed, while the vectors of maskable interrupts can be changed by programming the interrupt controller.

Interrupt handling - upper half (hard interrupt)

Because the APIC is somewhat complex, this article uses the 8259A interrupt controller to explain how Linux handles interrupts.

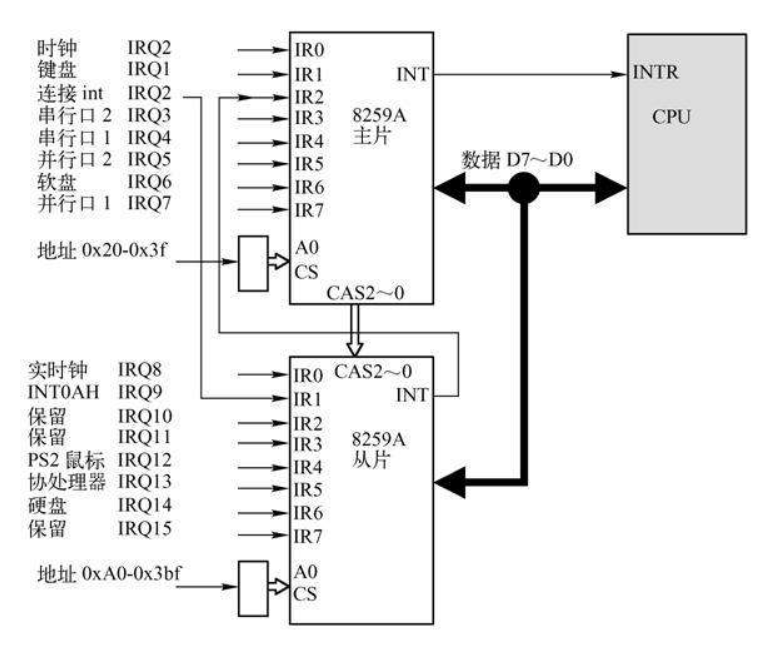

Interrupt processing related structure

As mentioned earlier, the 8259A interrupt controller consists of two 8259A-style external chips connected in a cascade. Each chip can handle up to 8 different IRQs (interrupt requests), so 15 IRQ lines are available, as shown below:

8259A

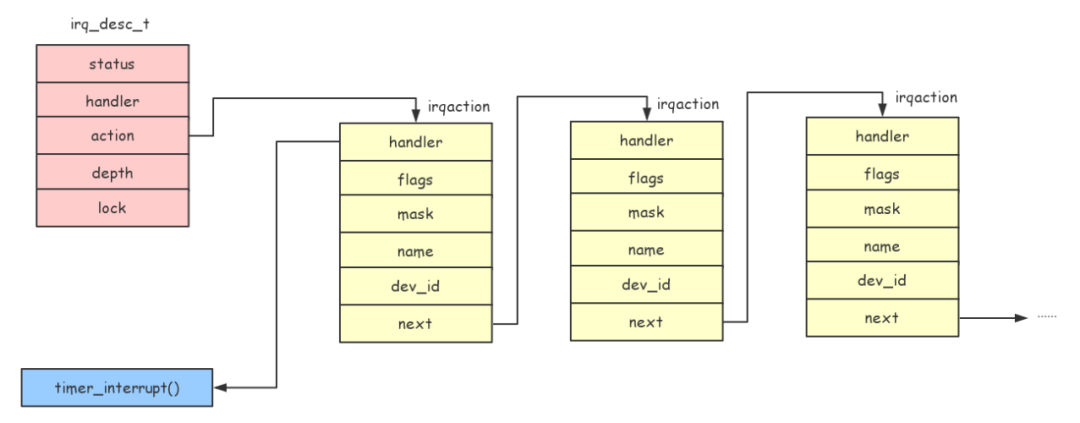

In the kernel, each IRQ line is described by an irq_desc_t structure, which is defined as follows:

typedef struct {

unsigned int status; /* IRQ status */

hw_irq_controller *handler;

struct irqaction *action; /* IRQ action list */

unsigned int depth; /* nested irq disables */

spinlock_t lock;

} irq_desc_t;

The fields of the irq_desc_t structure are:

- status: the status of the IRQ line.

- handler: a pointer to a hw_interrupt_type structure (declared above through the hw_irq_controller type), which holds the hardware-specific functions for the IRQ line. For example, after the 8259A interrupt controller delivers an interrupt signal, it must receive an acknowledgement before it can deliver further interrupts. The function that sends this acknowledgement is the ack function in hw_interrupt_type.

- action: a pointer to an irqaction structure, the entry point for handling the interrupt. Because one IRQ line can be shared by several devices, action is a linked list in which each node is the interrupt handling entry of one device.

- depth: the nesting depth of disable operations on the IRQ line, which keeps repeated enables and disables of the line balanced.

- lock: a spinlock that prevents multiple CPUs from operating on the IRQ line at the same time.

The hw_interrupt_type structure is hardware-specific, so we will not cover it here. Let's look at the irqaction structure instead:

struct irqaction {

void (*handler)(int, void *, struct pt_regs *);

unsigned long flags;

unsigned long mask;

const char *name;

void *dev_id;

struct irqaction *next;

};

The fields of the irqaction structure are:

- handler: the interrupt handler function. Its first parameter is the interrupt number, its second is the ID of the device, and its third is the register values saved by the kernel when the interrupt occurred.

- flags: flag bits that control the behavior of the irqaction, such as whether the IRQ line can be shared with other devices.

- name: the name of the interrupt handler.

- dev_id: the device ID.

- next: each device's interrupt handling entry corresponds to one irqaction structure. Because several devices can share the same IRQ line, their entries are linked together through the next field.

The relationship between the irq_desc_t and irqaction structures is shown in the figure below:

irq_desc_t

Register interrupt processing entry

In the kernel, an interrupt handling entry is registered with the setup_irq() function, whose code is as follows:

int setup_irq(unsigned int irq, struct irqaction * new)

{

int shared = 0;

unsigned long flags;

struct irqaction *old, **p;

irq_desc_t *desc = irq_desc + irq;

...

spin_lock_irqsave(&desc->lock,flags);

p = &desc->action;

if ((old = *p) != NULL) {

if (!(old->flags & new->flags & SA_SHIRQ)) {

spin_unlock_irqrestore(&desc->lock,flags);

return -EBUSY;

}

do {

p = &old->next;

old = *p;

} while (old);

shared = 1;

}

*p = new;

if (!shared) {

desc->depth = 0;

desc->status &= ~(IRQ_DISABLED | IRQ_AUTODETECT | IRQ_WAITING);

desc->handler->startup(irq);

}

spin_unlock_irqrestore(&desc->lock,flags);

register_irq_proc(irq); // Registering the proc file system

return 0;

}

The setup_irq() function is relatively simple: it uses the IRQ number to find the corresponding irq_desc_t structure and appends the new irqaction to that structure's action linked list. Note that if the line is already in use and either the existing entry or the new one does not support sharing (that is, the SA_SHIRQ flag is not set in its flags field), -EBUSY is returned.

Let's take a look at the registration instance of the clock interrupt processing entry:

static struct irqaction irq0 = { timer_interrupt, SA_INTERRUPT, 0, "timer", NULL, NULL};

void __init time_init(void)

{

...

setup_irq(0, &irq0);

}

As you can see, the clock interrupt handler is registered on IRQ 0, its handler function is timer_interrupt(), and it does not support sharing the IRQ line (the SA_SHIRQ flag is not set in the flags field).

Processing interrupt requests

When an interrupt occurs, the interrupt controller sends a signal to the CPU, and the CPU suspends what it is executing and runs the interrupt handling path instead. That path first saves the register values on the stack and then calls the do_IRQ() function for further processing. The code of do_IRQ() is as follows:

asmlinkage unsigned int do_IRQ(struct pt_regs regs)

{

int irq = regs.orig_eax & 0xff; /* Get IRQ number */

int cpu = smp_processor_id();

irq_desc_t *desc = irq_desc + irq;

struct irqaction * action;

unsigned int status;

kstat.irqs[cpu][irq]++;

spin_lock(&desc->lock);

desc->handler->ack(irq);

status = desc->status & ~(IRQ_REPLAY | IRQ_WAITING);

status |= IRQ_PENDING; /* we _want_ to handle it */

action = NULL;

if (!(status & (IRQ_DISABLED | IRQ_INPROGRESS))) { // The current IRQ is not in process

action = desc->action; // Get action linked list

status &= ~IRQ_PENDING; // Clear IRQ_PENDING, which records whether another interrupt arrived while this IRQ was being handled

status |= IRQ_INPROGRESS; // Set IRQ_INPROGRESS to indicate that this IRQ is being handled

}

desc->status = status;

if (!action) // If the last IRQ has not been completed, exit directly

goto out;

for (;;) {

spin_unlock(&desc->lock);

handle_IRQ_event(irq, &regs, action); // Handle the IRQ request

spin_lock(&desc->lock);

if (!(desc->status & IRQ_PENDING)) // Stop unless another interrupt arrived while this one was being handled

break;

desc->status &= ~IRQ_PENDING;

}

desc->status &= ~IRQ_INPROGRESS;

out:

desc->handler->end(irq);

spin_unlock(&desc->lock);

if (softirq_active(cpu) & softirq_mask(cpu))

do_softirq(); // Interrupt lower half processing

return 1;

}

The do_IRQ() function first uses the IRQ number to find the corresponding irq_desc_t structure. Because the same interrupt may fire several times, it then checks whether this IRQ is already being handled (whether the IRQ_INPROGRESS flag is set in the status field of the irq_desc_t structure). If not, it takes the action linked list and calls handle_IRQ_event() to run the handlers in that list.

If the same interrupt fires again while it is being handled (IRQ_INPROGRESS is already set), the new invocation only leaves the IRQ_PENDING flag set and returns. The invocation that is handling the IRQ sees IRQ_PENDING after handle_IRQ_event() returns and runs the handlers again. Once the interrupt has been handled, do_softirq() is called to process the lower half of the interrupt (described below).

Next, let's look at the implementation of the handle_IRQ_event() function:

int handle_IRQ_event(unsigned int irq, struct pt_regs * regs, struct irqaction * action)

{

int status;

int cpu = smp_processor_id();

irq_enter(cpu, irq);

status = 1; /* Force the "do bottom halves" bit */

if (!(action->flags & SA_INTERRUPT)) // Enable interrupts if the handler is allowed to run with interrupts enabled

__sti();

do {

status |= action->flags;

action->handler(irq, action->dev_id, regs);

action = action->next;

} while (action);

if (status & SA_SAMPLE_RANDOM)

add_interrupt_randomness(irq);

__cli();

irq_exit(cpu, irq);

return status;

}

The handle_IRQ_event() function is very simple: it walks the action linked list and calls each handler. For a clock interrupt, for example, it calls timer_interrupt(). Note that if the handlers are allowed to run with interrupts enabled, the function enables interrupts first (the CPU disables interrupts when it accepts an interrupt signal).

Interrupt handling - lower half (soft interrupt)

Because interrupt handling generally runs with interrupts disabled, it must not take too long; otherwise subsequent interrupts cannot be handled in time. For this reason, Linux splits interrupt handling into two parts, the upper half and the lower half. The upper half was covered above; next we look at how the lower half is executed.

Generally, the upper half does only the essential work (such as copying data from the network card into a buffer), marks the lower half that needs to run, and then calls the do_softirq() function.

softirq mechanism

The lower half is implemented by the softirq (soft interrupt) mechanism. The Linux kernel has an array called softirq_vec, declared as follows:

static struct softirq_action softirq_vec[32];

Its element type, the softirq_action structure, is defined as follows:

struct softirq_action

{

void (*action)(struct softirq_action *);

void *data;

};

The softirq_vec array is the core of the softirq mechanism. Each element of the array represents one softirq. However, Linux defines only four softirqs, as follows:

enum

{

HI_SOFTIRQ=0,

NET_TX_SOFTIRQ,

NET_RX_SOFTIRQ,

TASKLET_SOFTIRQ

};

HI_SOFTIRQ is used for high-priority tasklets and TASKLET_SOFTIRQ for ordinary tasklets; a tasklet is a task queue built on the softirq mechanism (described below). NET_TX_SOFTIRQ and NET_RX_SOFTIRQ are used by the networking subsystem (not covered here).

Register softirq handler

A softirq handler is registered with the open_softirq() function, whose code is as follows:

void open_softirq(int nr, void (*action)(struct softirq_action*), void *data)

{

unsigned long flags;

int i;

spin_lock_irqsave(&softirq_mask_lock, flags);

softirq_vec[nr].data = data;

softirq_vec[nr].action = action;

for (i=0; i<NR_CPUS; i++)

softirq_mask(i) |= (1<<nr);

spin_unlock_irqrestore(&softirq_mask_lock, flags);

}

The main job of open_softirq() is to add a softirq handler to the softirq_vec array and to set the corresponding bit in every CPU's softirq mask.

During system initialization, Linux registers handlers for two softirqs, TASKLET_SOFTIRQ and HI_SOFTIRQ:

void __init softirq_init()

{

...

open_softirq(TASKLET_SOFTIRQ, tasklet_action, NULL);

open_softirq(HI_SOFTIRQ, tasklet_hi_action, NULL);

}

Processing softirq

Softirqs are processed by the do_softirq() function, whose code is as follows:

asmlinkage void do_softirq()

{

int cpu = smp_processor_id();

__u32 active, mask;

if (in_interrupt())

return;

local_bh_disable();

local_irq_disable();

mask = softirq_mask(cpu);

active = softirq_active(cpu) & mask;

if (active) {

struct softirq_action *h;

restart:

softirq_active(cpu) &= ~active;

local_irq_enable();

h = softirq_vec;

mask &= ~active;

do {

if (active & 1)

h->action(h);

h++;

active >>= 1;

} while (active);

local_irq_disable();

active = softirq_active(cpu);

if ((active &= mask) != 0)

goto retry;

}

local_bh_enable();

return;

retry:

goto restart;

}

As mentioned earlier, the softirq_vec array has 32 elements, each corresponding to one type of softirq. How does Linux know which softirqs need to run? Each CPU has a variable of type irq_cpustat_t, which is defined as follows:

typedef struct {

unsigned int __softirq_active;

unsigned int __softirq_mask;

...

} irq_cpustat_t;

The __softirq_active field records which softirqs have been raised (an int has 32 bits, and each bit represents one softirq), and the __softirq_mask field records which softirqs are enabled (open_softirq() sets the corresponding bit when a handler is registered). Linux uses the __softirq_active field to find out which softirqs need to run: raising a softirq just sets its bit to 1.

So do_softirq() first calls softirq_mask(cpu) to get the mask of the current CPU, and computes softirq_active(cpu) & mask to get the softirqs that need to run. It then checks each bit of the result in turn to decide whether to run the corresponding softirq.

tasklet mechanism

As mentioned earlier, the tasklet mechanism is built on the softirq mechanism. It is essentially a task queue whose entries are run by a softirq. The Linux kernel has two kinds of tasklets: high-priority tasklets and ordinary tasklets. Their implementations are essentially the same; the only difference is execution priority, with high-priority tasklets running before ordinary ones.

A tasklet queue is stored in a tasklet_head structure, and each CPU has its own queue. Let's look at the definitions of tasklet_head and tasklet_struct:

struct tasklet_head

{

struct tasklet_struct *list;

};

struct tasklet_struct

{

struct tasklet_struct *next;

unsigned long state;

atomic_t count;

void (*func)(unsigned long);

unsigned long data;

};

As the definition shows, tasklet_head is simply the head of a queue of tasklet_struct structures, and the func field of tasklet_struct is the pointer to the function that the task runs. Linux defines two tasklet queues, tasklet_vec and tasklet_hi_vec, as follows:

struct tasklet_head tasklet_vec[NR_CPUS];

struct tasklet_head tasklet_hi_vec[NR_CPUS];

As you can see, tasklet_vec and tasklet_hi_vec are both arrays whose number of elements is the number of CPU cores; that is, each CPU core has one high-priority tasklet queue and one ordinary tasklet queue.

Scheduling tasklets

To run a tasklet, schedule a high-priority tasklet with the tasklet_hi_schedule() function and an ordinary tasklet with the tasklet_schedule() function. The two functions are essentially the same, so we analyze only one of them:

static inline void tasklet_hi_schedule(struct tasklet_struct *t)

{

if (!test_and_set_bit(TASKLET_STATE_SCHED, &t->state)) {

int cpu = smp_processor_id();

unsigned long flags;

local_irq_save(flags);

t->next = tasklet_hi_vec[cpu].list;

tasklet_hi_vec[cpu].list = t;

__cpu_raise_softirq(cpu, HI_SOFTIRQ);

local_irq_restore(flags);

}

}

The function takes a pointer to a tasklet_struct structure, which is the tasklet to run. tasklet_hi_schedule() first checks whether the tasklet has already been added to a queue. If not, it adds the tasklet to the tasklet_hi_vec queue and calls __cpu_raise_softirq(cpu, HI_SOFTIRQ) to tell the softirq mechanism that the HI_SOFTIRQ softirq needs to run. Let's look at the implementation of __cpu_raise_softirq():

static inline void __cpu_raise_softirq(int cpu, int nr)

{

softirq_active(cpu) |= (1<<nr);

}

As you can see, __cpu_raise_softirq() sets bit nr of the __softirq_active field of the irq_cpustat_t structure to 1. For tasklet_hi_schedule(), this means setting the HI_SOFTIRQ bit (bit 0) to 1.

As mentioned earlier, Linux registers two softirqs during initialization, TASKLET_SOFTIRQ and HI_SOFTIRQ:

void __init softirq_init()

{

...

open_softirq(TASKLET_SOFTIRQ, tasklet_action, NULL);

open_softirq(HI_SOFTIRQ, tasklet_hi_action, NULL);

}

So when the HI_SOFTIRQ bit (bit 0) of the __softirq_active field of the irq_cpustat_t structure is set to 1, the softirq mechanism runs the tasklet_hi_action() function. Let's look at its implementation:

static void tasklet_hi_action(struct softirq_action *a)

{

int cpu = smp_processor_id();

struct tasklet_struct *list;

local_irq_disable();

list = tasklet_hi_vec[cpu].list;

tasklet_hi_vec[cpu].list = NULL;

local_irq_enable();

while (list != NULL) {

struct tasklet_struct *t = list;

list = list->next;

if (tasklet_trylock(t)) {

if (atomic_read(&t->count) == 0) {

clear_bit(TASKLET_STATE_SCHED, &t->state);

t->func(t->data); // Call tasklet handler

tasklet_unlock(t);

continue;

}

tasklet_unlock(t);

}

...

}

}

The tasklet_hi_action() function is very simple: it walks the tasklet_hi_vec queue and calls the handler function of each tasklet in it.