This article analyzes the architecture and basic features of several open source API gateways, namely NGINX, Kong, APISIX, Tyk, Zuul and Gravitee, and tests the performance of each gateway in the same simple routing scenario.

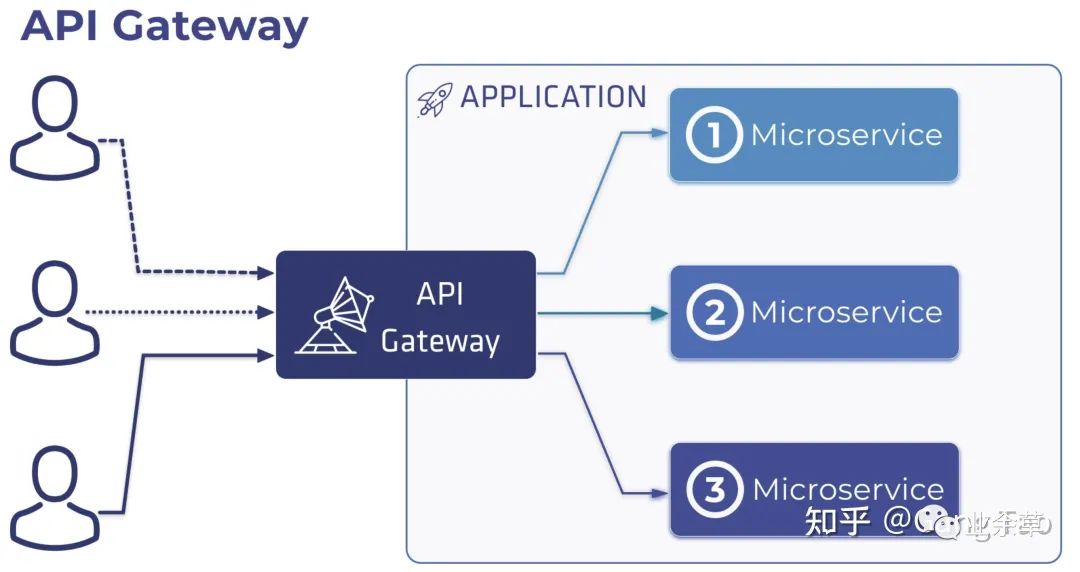

In a microservice architecture, the API gateway is a common design pattern. Introducing an API gateway addresses the following common problems in microservices:

- The granularity of the APIs provided by microservices usually differs from what clients need. Microservices typically expose fine-grained APIs, so a client has to interact with multiple services. For example, a client that needs product details may have to fetch data from many services.

- Different clients need different data. For example, the desktop browser version of the product details page is usually more detailed than the mobile version.

- Network performance differs across client types. For example, mobile networks are generally much slower and have higher latency than non-mobile networks, and any WAN is much slower than a LAN. This means that native mobile clients see very different network performance from a server-side web application running on a LAN. A server-side web application can send many requests to back-end services without hurting the user experience, whereas a mobile client can only afford a few.

- The number of microservice instances and their locations (host + port) change dynamically.

- Service partitioning changes over time and should be hidden from clients.

- Services may use a variety of protocols, some of which may not be network friendly.

Common API gateways mainly provide the following functions:

- "Reverse proxy and routing" - the main reason most projects adopt a gateway. It gives all clients a single entry point to the back-end APIs and hides the details of the internal service deployment.

- Load balancing - the gateway can distribute incoming requests across multiple back-end instances.

- "Authentication and authorization" - the gateway should authenticate clients and allow only trusted ones to access the API, and can also authorize requests using methods such as RBAC.

- "IP whitelist / blacklist" - allow or block requests from specific IP addresses.

- Performance analysis - a way to record usage and other useful metrics for API calls.

- "Rate limiting and flow control" - the ability to control the rate of API calls.

- "Request transformation" - requests and responses (including headers and body) can be transformed before they are forwarded.

- "Versioning" - run different versions of an API side by side, or roll out an API gradually through canary releases or blue/green deployments.

- "Circuit breaker" - a microservice architecture pattern that keeps a failing service from causing wider outages.

- "Multi-protocol support" - for example WebSocket and gRPC.

- "Caching" - reduces network bandwidth and round-trip time. Caching frequently requested data improves performance and response time.

- "API documentation" - if you plan to expose the API to developers outside the organization, you should provide API documentation, for example with Swagger or OpenAPI.
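To make the rate-limiting item more concrete, a common technique is the token bucket: each client has a bucket that refills at a fixed rate, and each request spends one token. The Java sketch below is illustrative only; the `TokenBucket` class, its parameters and the injectable clock are assumptions, not the API of any gateway discussed here.

```java
import java.util.function.LongSupplier;

// Illustrative token-bucket rate limiter; the class and its parameters are
// hypothetical and not taken from any gateway discussed in this article.
final class TokenBucket {
    private final long capacity;          // maximum burst size
    private final double refillPerSecond; // tokens added per second
    private final LongSupplier nanoClock; // e.g. System::nanoTime; injectable for tests
    private double tokens;
    private long lastRefillNanos;

    TokenBucket(long capacity, double refillPerSecond, LongSupplier nanoClock) {
        if (capacity <= 0 || refillPerSecond <= 0) {
            throw new IllegalArgumentException("capacity and refill rate must be positive");
        }
        this.capacity = capacity;
        this.refillPerSecond = refillPerSecond;
        this.nanoClock = nanoClock;
        this.tokens = capacity; // start with a full bucket
        this.lastRefillNanos = nanoClock.getAsLong();
    }

    // Returns true if the request may pass, false if it should be rejected
    // (a gateway would typically answer with HTTP 429 Too Many Requests).
    synchronized boolean tryAcquire() {
        long now = nanoClock.getAsLong();
        // Refill in proportion to the elapsed time, never beyond capacity.
        double refill = (now - lastRefillNanos) * refillPerSecond / 1_000_000_000.0;
        tokens = Math.min(capacity, tokens + refill);
        lastRefillNanos = now;
        if (tokens >= 1.0) {
            tokens -= 1.0;
            return true;
        }
        return false;
    }
}
```

In a real deployment the clock would be `System::nanoTime`, and the gateway would keep one bucket per client key or IP address.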

Many open source projects provide API gateway functionality. Let's look at their architectures and features.

To verify the basic functions of these open source gateways, I wrote some code and used OpenAPI to generate four basic API services in Golang, Node.js, Python Flask and Java Spring. The API uses the common Pet Store example, declared as follows:

```yaml
openapi: "3.0.0"
info:
  version: 1.0.0
  title: Swagger Petstore
  license:
    name: MIT
servers:
  - url: http://petstore.swagger.io/v1
paths:
  /pets:
    get:
      summary: List all pets
      operationId: listPets
      tags:
        - pets
      parameters:
        - name: limit
          in: query
          description: How many items to return at one time (max 100)
          required: false
          schema:
            type: integer
            format: int32
      responses:
        '200':
          description: A paged array of pets
          headers:
            x-next:
              description: A link to the next page of responses
              schema:
                type: string
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Pets"
        default:
          description: unexpected error
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Error"
    post:
      summary: Create a pet
      operationId: createPets
      tags:
        - pets
      responses:
        '201':
          description: Null response
        default:
          description: unexpected error
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Error"
  /pets/{petId}:
    get:
      summary: Info for a specific pet
      operationId: showPetById
      tags:
        - pets
      parameters:
        - name: petId
          in: path
          required: true
          description: The id of the pet to retrieve
          schema:
            type: string
      responses:
        '200':
          description: Expected response to a valid request
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Pet"
        default:
          description: unexpected error
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Error"
components:
  schemas:
    Pet:
      type: object
      required:
        - id
        - name
      properties:
        id:
          type: integer
          format: int64
        name:
          type: string
        tag:
          type: string
    Pets:
      type: array
      items:
        $ref: "#/components/schemas/Pet"
    Error:
      type: object
      required:
        - code
        - message
      properties:
        code:
          type: integer
          format: int32
        message:
          type: string
```

The generated web services are built and deployed in containers with Docker Compose:

```yaml
version: "3.7"

services:
  goapi:
    container_name: goapi
    image: naughtytao/goapi:0.1
    ports:
      - "18000:8080"
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 256M
        reservations:
          memory: 256M

  nodeapi:
    container_name: nodeapi
    image: naughtytao/nodeapi:0.1
    ports:
      - "18001:8080"
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 256M
        reservations:
          memory: 256M

  flaskapi:
    container_name: flaskapi
    image: naughtytao/flaskapi:0.1
    ports:
      - "18002:8080"
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 256M
        reservations:
          memory: 256M

  springapi:
    container_name: springapi
    image: naughtytao/springapi:0.1
    ports:
      - "18003:8080"
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 256M
        reservations:
          memory: 256M
```

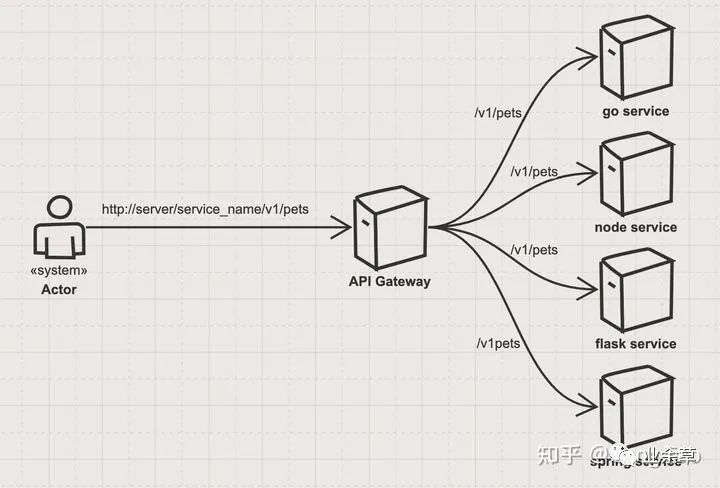

Gateway forwarding data

While studying these gateway architectures, we also verify their most basic function: routing and forwarding. A user request such as `http://server/service_name/v1/pets` is sent to the API gateway, which routes it to the appropriate back-end service based on `service_name`.
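The routing step can be sketched as a lookup keyed on the first path segment. This hypothetical `ServiceRouter` in Java assumes that each service name maps to one upstream base URL (for example `goapi` to `http://goapi:8080`, following the container names used here) and that the service name is stripped before forwarding.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical routing table: the first path segment names the service, which
// is mapped to an upstream base URL; the segment is stripped before forwarding.
final class ServiceRouter {
    private final Map<String, String> upstreams;

    ServiceRouter(Map<String, String> upstreams) {
        this.upstreams = new HashMap<>(upstreams); // defensive copy
    }

    // Returns the upstream URL for a request path, or empty if no service matches.
    Optional<String> resolve(String path) {
        if (path == null || !path.startsWith("/")) {
            return Optional.empty();
        }
        int slash = path.indexOf('/', 1);
        String service = slash < 0 ? path.substring(1) : path.substring(1, slash);
        String rest = slash < 0 ? "/" : path.substring(slash); // path after the service name
        String base = upstreams.get(service);
        return base == null ? Optional.empty() : Optional.of(base + rest);
    }
}
```

For example, `/goapi/v1/pets` resolves to `http://goapi:8080/v1/pets`, while an unknown service name yields an empty result that a gateway would turn into a 404.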

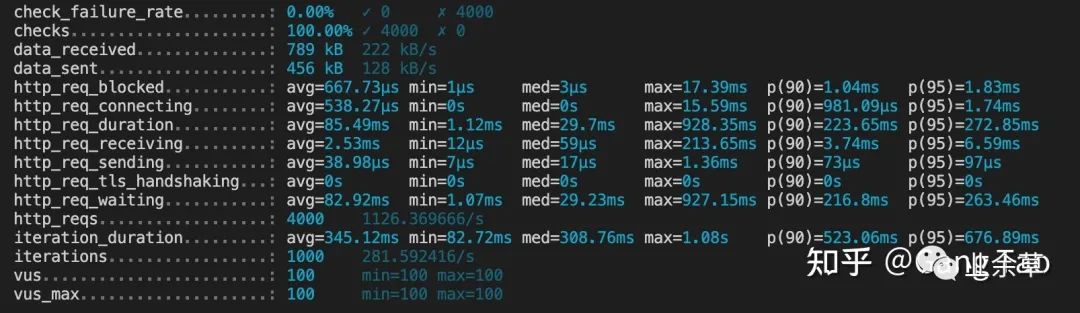

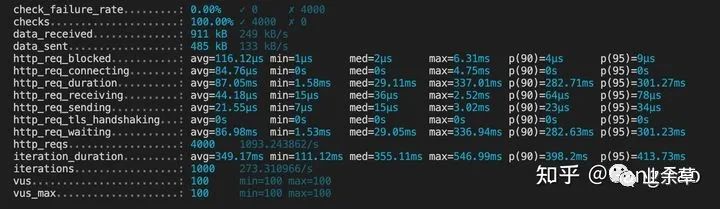

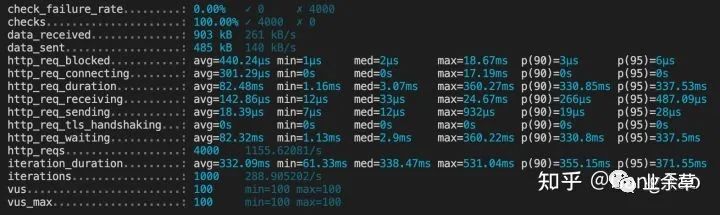

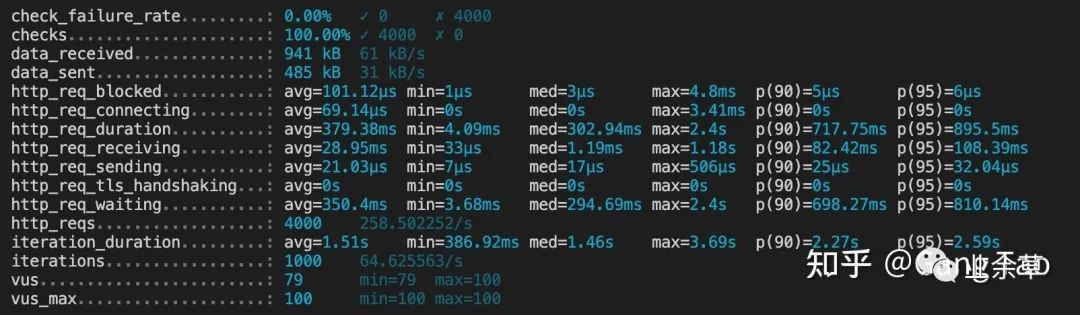

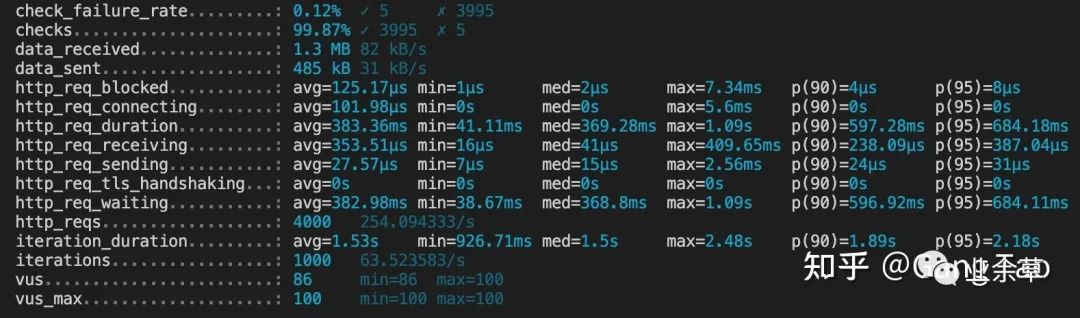

Run 1000 tests concurrently

We used k6 to run 1,000 iterations with 100 concurrent virtual users. The results are shown in the figures above: with a direct connection to the services (no gateway), about 1,100+ requests per second can be processed.

Nginx

NGINX is a web server built on an asynchronous framework that can also be used as a reverse proxy, load balancer and HTTP cache. It was created by Igor Sysoev and first released publicly in 2004. A company of the same name was founded in 2011 to provide support. On March 11, 2019, F5 Networks announced the acquisition of NGINX for USD 670 million.

Nginx has the following characteristics:

- Written in C, with low resource and memory usage and high performance.

- Multi-process, with single-threaded workers. When NGINX starts, it creates a master process, which forks multiple worker processes; the workers handle client requests.

- Supports reverse proxying and Layer 7 load balancing.

- High concurrency: NGINX is asynchronous and non-blocking, using event mechanisms such as epoll and kqueue.

- Fast serving of static files.

- Highly modular with simple configuration. The community is active, and high-performance modules appear rapidly.

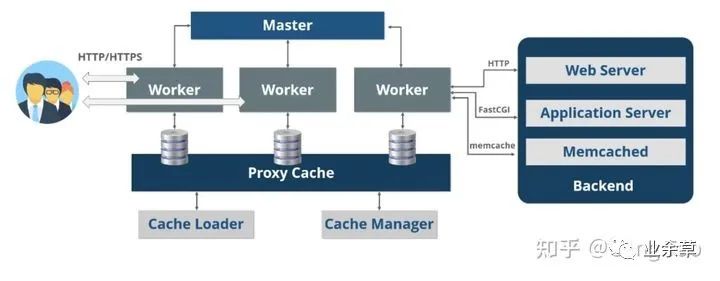

Nginx architecture diagram

As shown in the figure above, NGINX mainly consists of a master process, worker processes and a proxy cache.

- Master: NGINX uses a master-worker architecture. The master process reads and validates the configuration, binds the listening ports, and starts, stops and monitors the worker processes. It does not process client requests itself; the workers accept connections and send responses to clients directly.

- Worker: the workers do the actual request processing. Each worker is a single-threaded process that uses an event loop to handle many connections concurrently (more than 1,000 per worker is common, up to the `worker_connections` limit). Because one thread serves all of its connections in a single memory space, a worker avoids the memory and context-switching overhead of a thread-per-connection model.

- Cache: the NGINX proxy cache serves pages very quickly by returning them from the cache instead of the upstream server. A page is stored in the cache when it is first requested.
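The cache-on-first-request behavior can be illustrated with a small in-memory LRU cache. This Java sketch is hypothetical and much simpler than NGINX's `proxy_cache`, which stores responses on disk and honors cache-control headers; here the `upstream` function stands in for a request to the back-end server.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical response cache: the first request for a key goes to the upstream,
// later requests are served from memory. Least-recently-used entries are evicted
// once maxEntries is exceeded. No expiry or cache headers are modeled.
final class ResponseCache<K, V> {
    private final Map<K, V> entries;

    ResponseCache(int maxEntries) {
        // accessOrder = true keeps iteration order least-recently-used first.
        this.entries = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > maxEntries;
            }
        };
    }

    synchronized V getOrLoad(K key, Function<K, V> upstream) {
        V cached = entries.get(key);
        if (cached != null) {
            return cached; // cache hit: the upstream is not contacted
        }
        V fresh = upstream.apply(key); // cache miss: fetch from the upstream and store
        entries.put(key, fresh);
        return fresh;
    }

    synchronized int size() {
        return entries.size();
    }
}
```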

To route and forward API requests, only the following NGINX configuration is needed:

```nginx
server {
    listen 80 default_server;

    location /goapi {
        rewrite ^/goapi(.*) $1 break;
        proxy_pass http://goapi:8080;
    }

    location /nodeapi {
        rewrite ^/nodeapi(.*) $1 break;
        proxy_pass http://nodeapi:8080;
    }

    location /flaskapi {
        rewrite ^/flaskapi(.*) $1 break;
        proxy_pass http://flaskapi:8080;
    }

    location /springapi {
        rewrite ^/springapi(.*) $1 break;
        proxy_pass http://springapi:8080;
    }
}
```

We configure one route per service: goapi, nodeapi, flaskapi and springapi. Before forwarding, `rewrite` strips the service name from the path, and `proxy_pass` sends the request to the corresponding service.
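The effect of `rewrite ^/goapi(.*) $1 break;` can be reproduced with Java's regex API to see which URI reaches the upstream. The `PrefixRewrite` class below is a hypothetical illustration, not NGINX code; in particular, mapping an empty remainder to `/` is an assumption of this sketch.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Mirrors `rewrite ^/goapi(.*) $1 break;`: the capture group (.*) keeps everything
// after the service prefix, and $1 becomes the URI passed to proxy_pass.
final class PrefixRewrite {
    private final Pattern pattern;

    PrefixRewrite(String servicePrefix) {
        // Pattern.quote guards against regex metacharacters in the prefix.
        this.pattern = Pattern.compile("^" + Pattern.quote(servicePrefix) + "(.*)$");
    }

    // Returns the rewritten URI, or null if the prefix does not match.
    String apply(String uri) {
        Matcher m = pattern.matcher(uri);
        if (!m.matches()) {
            return null;
        }
        String rest = m.group(1);
        return rest.isEmpty() ? "/" : rest; // assumption: an empty remainder maps to "/"
    }
}
```

As with the NGINX rule, the prefix has no trailing slash, so a path like `/goapiextra` would also match and be rewritten to `extra`; using `location /goapi/` together with a pattern that captures after the slash is stricter.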

NGINX and the four back-end services are deployed as containers on the same network, and requests are routed through the gateway. The NGINX deployment is as follows:

```yaml
version: "3.7"

services:
  web:
    container_name: nginx
    image: nginx
    volumes:
      - ./templates:/etc/nginx/templates
      - ./conf/default.conf:/etc/nginx/conf.d/default.conf
    ports:
      - "8080:80"
    environment:
      - NGINX_HOST=localhost
      - NGINX_PORT=80
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 256M
        reservations:
          memory: 256M
```

The k6 results through the NGINX gateway are as follows:

Performance test of the NGINX gateway

The gateway processed 1,093 requests per second, very close to the result without a gateway.

Functionally, NGINX meets most users' needs for an API gateway, supporting different features through configuration and modules, and its performance is excellent. The drawback is that there is no management UI or management API, so most work has to be done by editing configuration files by hand. The commercial version (NGINX Plus) offers more complete functionality.

Kong

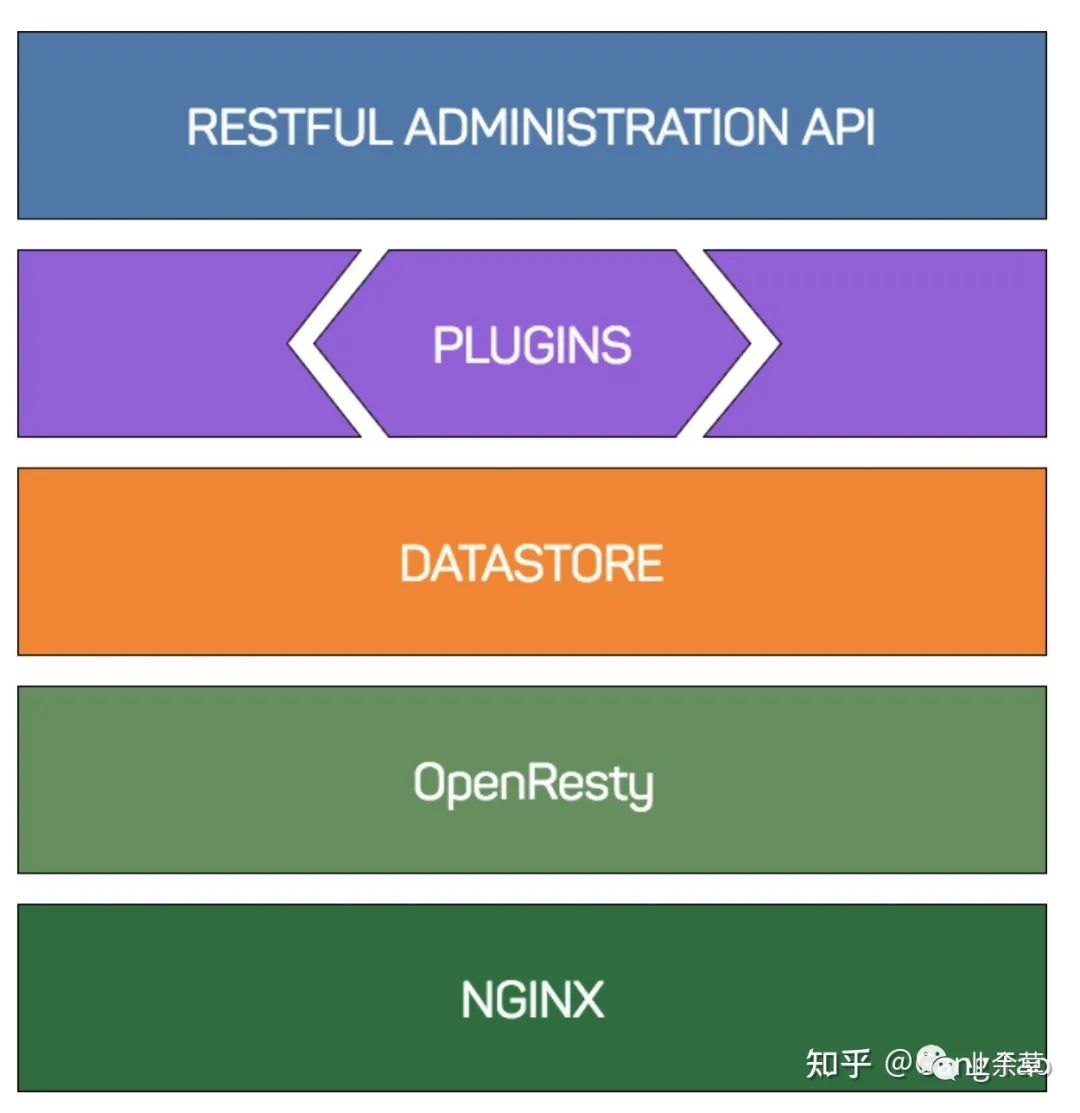

Kong is an open source API gateway based on NGINX and OpenResty.

Kong's infrastructure consists of three main parts: NGINX provides the protocol implementation and worker process management; OpenResty provides Lua integration and hooks into NGINX's request processing phases; and Kong itself uses these hooks to route and transform requests. All configuration is stored in a database, either Cassandra or Postgres.

Kong

Kong comes with plug-ins that provide access control, security, caching and documentation features. It also lets you write and use custom plug-ins in Lua. Kong can also be deployed as a Kubernetes Ingress controller and supports proxying gRPC and WebSockets.

NGINX provides a powerful HTTP server infrastructure. It handles HTTP request processing, TLS encryption, request logging, and operating system resource allocation (for example, listening for and managing client connections and spawning new processes).

NGINX reads a declarative configuration file from its host's file system. Although some Kong features could be implemented with NGINX configuration alone (for example, choosing the upstream route based on the request URL), changing that configuration requires some level of operating system access to edit the files and make NGINX reload them. Kong, by contrast, lets users update its configuration through a RESTful HTTP API. Kong's NGINX configuration is fairly basic: apart from standard headers, listening ports and log paths, most of the configuration is delegated to OpenResty.

In some cases, it is useful to add your own NGINX configuration alongside Kong, for example to serve a static website next to the API gateway. In that case, you can modify the configuration template Kong uses.

A request processed by NGINX goes through a series of phases. Many NGINX features, such as gzip compression, are implemented as modules written in C that hook into these phases. Although you can write your own modules, NGINX must be recompiled every time a module is added or updated. To simplify adding new features, Kong uses OpenResty.

OpenResty is a software suite that bundles NGINX, a set of modules, LuaJIT and a set of Lua libraries. The most important of these is ngx_http_lua_module, an NGINX module that embeds Lua and provides Lua equivalents for most NGINX request phases. This effectively allows NGINX modules to be developed in Lua while keeping high performance (LuaJIT is quite fast), and Kong uses it to provide its core configuration management and plug-in infrastructure.

Kong's plug-in architecture provides a framework for hooking into these request phases. For example, the Key Auth and ACL plug-ins both control whether a client (called a consumer in Kong) may make a request. Each plug-in defines its own access function in its handler, and that function is executed during kong.access() for every plug-in enabled on a given route or service. The execution order is determined by priority: if Key Auth has priority 1003 and ACL has priority 950, Kong runs Key Auth's access function first; if Key Auth does not reject the request, Kong runs ACL, and then passes the request upstream via proxy_pass.
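The priority rule can be modeled in a few lines of Java. This is an illustrative sketch rather than Kong code (Kong plug-ins are written in Lua): the `AccessPhase` and `Plugin` classes are hypothetical, and the `apikey` and `group` header checks are stand-ins for what Key Auth and ACL actually verify.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Simplified model of an access phase: plug-ins run in descending priority order,
// and a plug-in that rejects the request stops the chain before proxying.
final class AccessPhase {
    static final class Plugin {
        final String name;
        final int priority;
        final Predicate<Map<String, String>> access; // true = continue, false = reject

        Plugin(String name, int priority, Predicate<Map<String, String>> access) {
            this.name = name;
            this.priority = priority;
            this.access = access;
        }
    }

    private final List<Plugin> plugins = new ArrayList<>();

    AccessPhase add(Plugin plugin) {
        plugins.add(plugin);
        return this;
    }

    // Returns the names of the plug-ins that ran, plus "proxy_pass" if the
    // request made it through to the upstream.
    List<String> run(Map<String, String> headers) {
        List<Plugin> ordered = new ArrayList<>(plugins);
        ordered.sort((a, b) -> Integer.compare(b.priority, a.priority)); // highest first
        List<String> trace = new ArrayList<>();
        for (Plugin plugin : ordered) {
            trace.add(plugin.name);
            if (!plugin.access.test(headers)) {
                return trace; // rejected: remaining plug-ins and the upstream are skipped
            }
        }
        trace.add("proxy_pass");
        return trace;
    }
}
```

With priorities 1003 and 950, Key Auth always runs first regardless of registration order, and a request without a key never reaches ACL or the upstream.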

Because Kong's request routing and processing configuration is controlled through its Admin API, plug-in configuration can be added and removed on the fly without editing the underlying NGINX configuration. Kong essentially provides a way to inject location blocks (defined through the API) and their configuration (by assigning plug-ins, certificates, etc. to these APIs).

We deploy Kong in containers with the following configuration (the deployment of the four microservices is omitted):

```yaml
version: '3.7'

volumes:
  kong_data: {}

networks:
  kong-net:
    external: false

services:
  kong:
    image: "${KONG_DOCKER_TAG:-kong:latest}"
    user: "${KONG_USER:-kong}"
    depends_on:
      - db
    environment:
      KONG_ADMIN_ACCESS_LOG: /dev/stdout
      KONG_ADMIN_ERROR_LOG: /dev/stderr
      KONG_ADMIN_LISTEN: '0.0.0.0:8001'
      KONG_CASSANDRA_CONTACT_POINTS: db
      KONG_DATABASE: postgres
      KONG_PG_DATABASE: ${KONG_PG_DATABASE:-kong}
      KONG_PG_HOST: db
      KONG_PG_USER: ${KONG_PG_USER:-kong}
      KONG_PROXY_ACCESS_LOG: /dev/stdout
      KONG_PROXY_ERROR_LOG: /dev/stderr
      KONG_PG_PASSWORD_FILE: /run/secrets/kong_postgres_password
    secrets:
      - kong_postgres_password
    networks:
      - kong-net
    ports:
      - "8080:8000/tcp"
      - "127.0.0.1:8001:8001/tcp"
      - "8443:8443/tcp"
      - "127.0.0.1:8444:8444/tcp"
    healthcheck:
      test: ["CMD", "kong", "health"]
      interval: 10s
      timeout: 10s
      retries: 10
    restart: on-failure
    deploy:
      restart_policy:
        condition: on-failure

  db:
    image: postgres:9.5
    environment:
      POSTGRES_DB: ${KONG_PG_DATABASE:-kong}
      POSTGRES_USER: ${KONG_PG_USER:-kong}
      POSTGRES_PASSWORD_FILE: /run/secrets/kong_postgres_password
    secrets:
      - kong_postgres_password
    healthcheck:
      test: ["CMD", "pg_isready", "-U", "${KONG_PG_USER:-kong}"]
      interval: 30s
      timeout: 30s
      retries: 3
    restart: on-failure
    deploy:
      restart_policy:
        condition: on-failure
    stdin_open: true
    tty: true
    networks:
      - kong-net
    volumes:
      - kong_data:/var/lib/postgresql/data

secrets:
  kong_postgres_password:
    file: ./POSTGRES_PASSWORD
```

PostgreSQL is used as the database. Note that an empty Kong database must first be initialized with `kong migrations bootstrap`; the official Kong Docker Compose setup runs this as a separate one-off service.

The open source version has no dashboard, so we use the Admin API (a REST API) to create all gateway routes:

```bash
curl -i -X POST http://localhost:8001/services \
  --data name=goapi \
  --data url='http://goapi:8080'

curl -i -X POST http://localhost:8001/services/goapi/routes \
  --data 'paths[]=/goapi' \
  --data name=goapi
```

You first create a service and then create a route under it. Kong's `strip_path` route option defaults to true, so the matched `/goapi` prefix is removed before the request is forwarded.

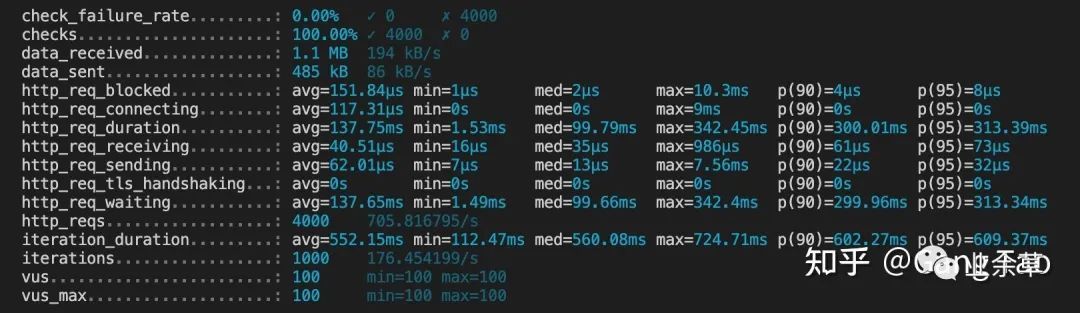

The k6 load test results are as follows:

Kong load test results

At 705 requests per second, Kong is significantly slower than NGINX: its extra features come at a cost.

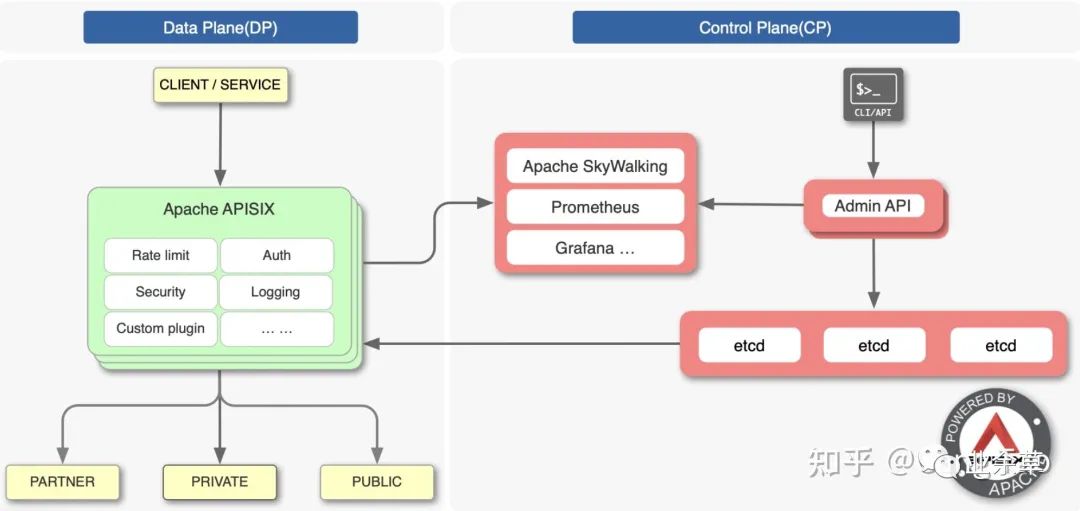

APISIX

Apache APISIX is a dynamic, real-time, high-performance API gateway that provides rich traffic management features such as load balancing, dynamic upstreams, canary releases, circuit breaking, authentication and observability.

APISIX was created by the Chinese company Zhiliu Technology (now API7.ai) in April 2019, open-sourced in June, and entered the Apache Incubator in October of the same year. The company's corresponding commercial product is called API7. APISIX is designed to handle large volumes of requests and has a low barrier for secondary development.

The main features of APISIX are:

- Cloud-native design: lightweight and easy to containerize.

- Integrated statistics and monitoring components, such as Prometheus, Apache SkyWalking and Zipkin.

- Support for proxying protocols such as gRPC, Dubbo, WebSocket and MQTT, as well as HTTP-to-gRPC transcoding, to fit a variety of scenarios.

- Can act as an OpenID Connect relying party and integrate with authentication providers such as Auth0 and Okta.

- Supports serverless by dynamically executing user functions at runtime, making the gateway's edge nodes more flexible.

- Supports hot reloading of plug-ins.

- No vendor lock-in; supports hybrid cloud deployments.

- Gateway nodes are stateless and can be scaled out flexibly.

In this sense, APISIX can replace NGINX for handling north-south traffic, and can also play the roles of the Istio control plane and the Envoy data plane for handling east-west traffic.

The architecture of APISIX is shown in the following figure:

APISIX architecture

APISIX consists of a data plane that dynamically controls request traffic, a control plane that stores and synchronizes gateway configuration data, and an AI plane that coordinates plug-ins and analyzes and processes request traffic in real time.

It is built on the NGINX reverse proxy server and etcd to provide a lightweight gateway. It is written mainly in Lua, a lightweight scripting language. It uses a radix tree for routing and a prefix tree for IP matching.
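The idea behind tree-based routing, walking the request path and keeping the deepest registered match, can be shown with a plain trie keyed by path segments. This Java sketch is a simplification and not APISIX's implementation: a real radix tree compresses single-child chains and matches on characters, and APISIX also supports wildcards and host or method matching. `RouteTrie` and the route IDs are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified route matcher: an uncompressed trie keyed by path segments that
// returns the route registered for the longest matching prefix.
final class RouteTrie {
    private static final class Node {
        final Map<String, Node> children = new HashMap<>();
        String routeId; // non-null if a route is registered at this prefix
    }

    private final Node root = new Node();

    void insert(String prefix, String routeId) {
        Node node = root;
        for (String segment : segments(prefix)) {
            node = node.children.computeIfAbsent(segment, k -> new Node());
        }
        node.routeId = routeId;
    }

    // Walks the tree segment by segment and remembers the deepest route seen.
    String match(String path) {
        Node node = root;
        String best = root.routeId;
        for (String segment : segments(path)) {
            node = node.children.get(segment);
            if (node == null) {
                break;
            }
            if (node.routeId != null) {
                best = node.routeId;
            }
        }
        return best; // null when nothing matches
    }

    private static String[] segments(String path) {
        String trimmed = path.replaceAll("^/+|/+$", "");
        return trimmed.isEmpty() ? new String[0] : trimmed.split("/+");
    }
}
```

Lookup cost grows with the number of path segments rather than the number of routes, which is the property that makes tree-based matching attractive for gateways with many routes.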

Storing configuration in etcd rather than a relational database brings APISIX closer to cloud native, and helps keep the whole gateway system available even if any single server goes down.

All components are written as plug-ins, and this modular design means feature developers only need to care about their own components.

Built-in plug-ins include flow control and rate limiting, authentication, request rewriting, URI redirection, OpenTracing and serverless. APISIX supports the OpenResty and Tengine runtimes and can run on bare metal or in Kubernetes, on both x86 and ARM64.

We also use Docker Compose to deploy APISIX.

```yaml
version: "3.7"

services:
  apisix-dashboard:
    image: apache/apisix-dashboard:2.4
    restart: always
    volumes:
      - ./dashboard_conf/conf.yaml:/usr/local/apisix-dashboard/conf/conf.yaml
    ports:
      - "9000:9000"
    networks:
      apisix:
        ipv4_address: 172.18.5.18

  apisix:
    image: apache/apisix:2.3-alpine
    restart: always
    volumes:
      - ./apisix_log:/usr/local/apisix/logs
      - ./apisix_conf/config.yaml:/usr/local/apisix/conf/config.yaml:ro
    depends_on:
      - etcd
    # network_mode: host
    ports:
      - "8080:9080/tcp"
      - "9443:9443/tcp"
    networks:
      apisix:
        ipv4_address: 172.18.5.11
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 256M
        reservations:
          memory: 256M

  etcd:
    image: bitnami/etcd:3.4.9
    user: root
    restart: always
    volumes:
      - ./etcd_data:/etcd_data
    environment:
      ETCD_DATA_DIR: /etcd_data
      ETCD_ENABLE_V2: "true"
      ALLOW_NONE_AUTHENTICATION: "yes"
      ETCD_ADVERTISE_CLIENT_URLS: "http://0.0.0.0:2379"
      ETCD_LISTEN_CLIENT_URLS: "http://0.0.0.0:2379"
    ports:
      - "2379:2379/tcp"
    networks:
      apisix:
        ipv4_address: 172.18.5.10

networks:
  apisix:
    driver: bridge
    ipam:
      config:
        - subnet: 172.18.0.0/16
```

Open source APISIX includes a dashboard for managing routes, rather than restricting the dashboard to a commercial version as Kong does. However, the APISIX dashboard does not support rewriting the route URI, so we have to use the REST API to create the route.

The command to create a service route is as follows:

```bash
curl --location --request PUT 'http://127.0.0.1:8080/apisix/admin/routes/1' \
  --header 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' \
  --header 'Content-Type: text/plain' \
  --data-raw '{
    "uri": "/goapi/*",
    "plugins": {
      "proxy-rewrite": {
        "regex_uri": ["^/goapi(.*)$", "$1"]
      }
    },
    "upstream": {
      "type": "roundrobin",
      "nodes": {
        "goapi:8080": 1
      }
    }
  }'
```

The k6 load test results are as follows:

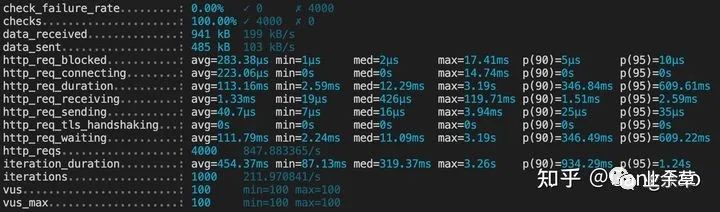

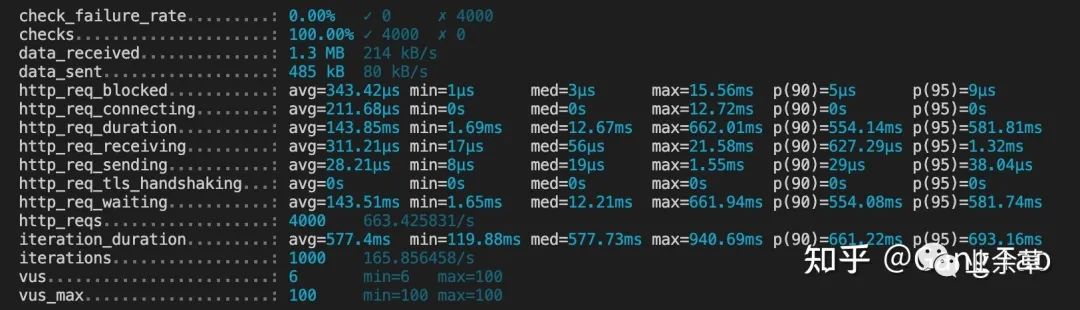

APISIX load test results

APISIX achieved a good result of 1,155 requests per second, close to the performance without a gateway; caching may have helped.
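The APISIX route above uses an upstream of type `roundrobin` with per-node weights (`"goapi:8080": 1`). One well-known way to implement weighted round-robin is the smooth algorithm used by NGINX's upstream module; the Java sketch below shows that algorithm with hypothetical node names and is not claimed to be APISIX's exact implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Smooth weighted round-robin: each pick, every node gains its weight, the node
// with the largest running weight wins, and the winner pays back the total.
final class SmoothWeightedRoundRobin {
    private final List<String> nodes = new ArrayList<>();
    private final int[] weights;
    private final int[] current; // running "current weight" per node
    private final int totalWeight;

    // Map iteration order breaks ties, so pass a LinkedHashMap for a deterministic sequence.
    SmoothWeightedRoundRobin(Map<String, Integer> weightedNodes) {
        weights = new int[weightedNodes.size()];
        current = new int[weightedNodes.size()];
        int i = 0;
        int total = 0;
        for (Map.Entry<String, Integer> e : weightedNodes.entrySet()) {
            if (e.getValue() <= 0) {
                throw new IllegalArgumentException("weight must be positive: " + e.getKey());
            }
            nodes.add(e.getKey());
            weights[i++] = e.getValue();
            total += e.getValue();
        }
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        totalWeight = total;
    }

    synchronized String next() {
        int best = 0;
        for (int i = 0; i < weights.length; i++) {
            current[i] += weights[i];        // every node gains its weight
            if (current[i] > current[best]) {
                best = i;                    // largest running weight wins
            }
        }
        current[best] -= totalWeight;        // the winner pays back the total
        return nodes.get(best);
    }
}
```

With weights 5, 1 and 1 for nodes `a`, `b` and `c`, the sequence is `a a b a c a a`: the heavy node gets five of every seven picks, but the light nodes are interleaved rather than bunched together.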

Tyk

Tyk is an open source API gateway based on Golang and Redis. It was created in 2014, before AWS launched its API Gateway service. Tyk is written in Golang and uses Go's own HTTP server.

Tyk supports different deployment modes: cloud, hybrid (the gateway runs in your own infrastructure) and on-premises.

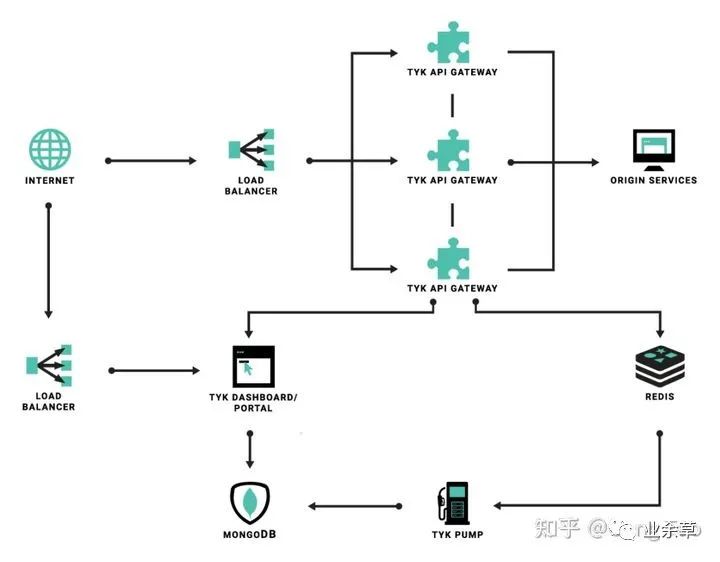

Tyk gateway

Tyk consists of three components:

- Gateway: the proxy that handles all application traffic.

- Dashboard: the interface for managing Tyk, displaying metrics and organizing APIs.

- Pump: persists metrics data and exports it to MongoDB (built in), Elasticsearch, InfluxDB, etc.

We also use Docker Compose to create a Tyk gateway for function verification.

```yaml
version: '3.7'

services:
  tyk-gateway:
    image: tykio/tyk-gateway:v3.1.1
    ports:
      - 8080:8080
    volumes:
      - ./tyk.standalone.conf:/opt/tyk-gateway/tyk.conf
      - ./apps:/opt/tyk-gateway/apps
      - ./middleware:/opt/tyk-gateway/middleware
      - ./certs:/opt/tyk-gateway/certs
    environment:
      - TYK_GW_SECRET=foo
    depends_on:
      - tyk-redis

  tyk-redis:
    image: redis:5.0-alpine
    ports:
      - 6379:6379
```

Tyk's dashboard is also part of the commercial version, so again we use the API to create routes. Tyk creates and manages routes as API definitions ("apps"); you can also write the app definition files directly.

```bash
curl --location --request POST 'http://localhost:8080/tyk/apis/' \
  --header 'x-tyk-authorization: foo' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "name": "GO API",
    "slug": "go-api",
    "api_id": "goapi",
    "org_id": "goapi",
    "use_keyless": true,
    "auth": {
      "auth_header_name": "Authorization"
    },
    "definition": {
      "location": "header",
      "key": "x-api-version"
    },
    "version_data": {
      "not_versioned": true,
      "versions": {
        "Default": {
          "name": "Default",
          "use_extended_paths": true
        }
      }
    },
    "proxy": {
      "listen_path": "/goapi/",
      "target_url": "http://host.docker.internal:18000/",
      "strip_listen_path": true
    },
    "active": true
  }'
```

A newly created API only becomes active after the gateway is reloaded, for example with `curl -H 'x-tyk-authorization: foo' http://localhost:8080/tyk/reload/group`.

The k6 load test results are as follows:

Tyk load test

Tyk handled about 400 to 600 requests per second, similar to Kong.

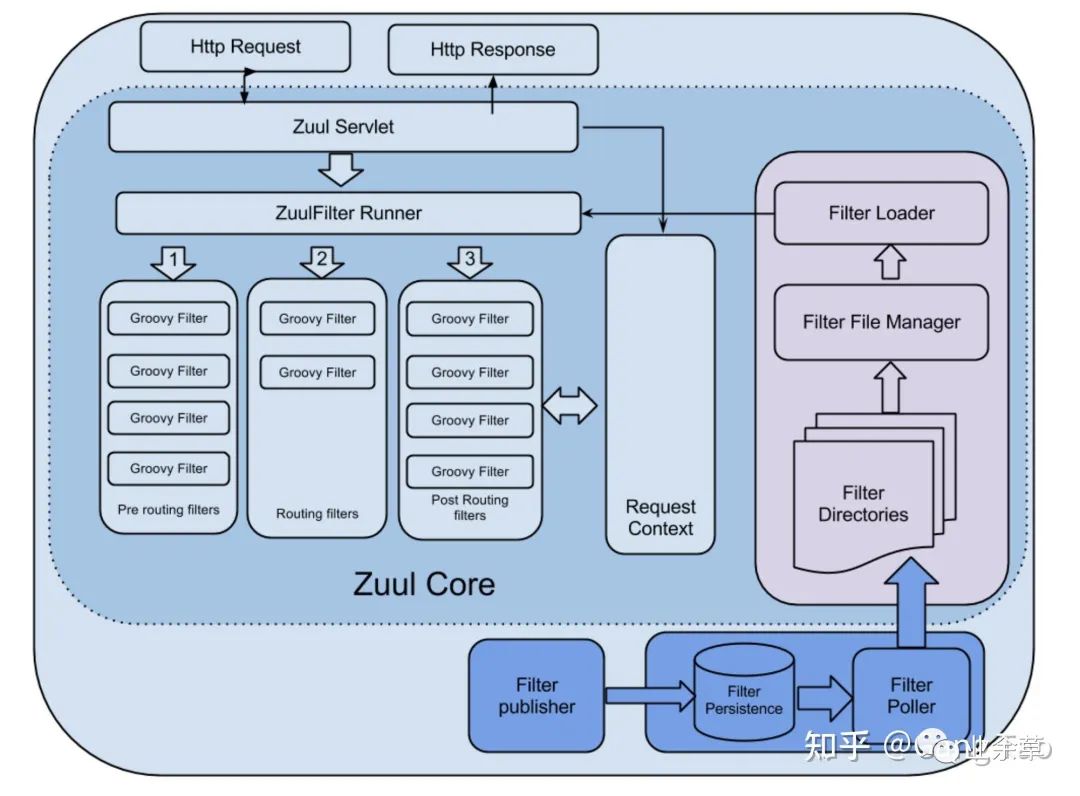

Zuul

Zuul is Netflix's open source, Java-based API gateway component.

Zuul gateway

Zuul contains several components:

- zuul-core: the library containing the core functionality for compiling and executing filters.

- zuul-simple-webapp: a web app showing a simple example of how to build an application with zuul-core.

- zuul-netflix: a library that adds other NetflixOSS components to Zuul, for example using Ribbon to route requests.

- zuul-netflix-webapp: a web app that packages zuul-core and zuul-netflix into an easy-to-use package.

Zuul gets its flexibility and resilience partly by leveraging other Netflix OSS components:

- "Hystrix" is used for fault tolerance and flow control. It wraps calls to origins, which lets Zuul shed and prioritize traffic when problems occur.

- "Ribbon" is the client for all outbound requests from Zuul. It provides detailed information about network performance and errors, and handles software load balancing for an even load distribution.

- "Turbine" aggregates fine-grained metrics in real time so that problems can be spotted and addressed quickly.

- Archaius handles configuration and provides the ability to change properties dynamically.
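Hystrix's core idea, the circuit breaker, can be sketched as a three-state machine: closed (calls pass through), open (calls fail fast to a fallback) and half-open (one trial call after a cooldown). The Java class below is a hypothetical minimal version for illustration, not Hystrix's API; Hystrix itself trips on error percentages over a rolling window, whereas this sketch counts consecutive failures.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Minimal circuit breaker sketch: after failureThreshold consecutive failures the
// circuit opens; after openMillis one trial call is allowed (half-open), and a
// success closes the circuit again.
final class CircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;
    private final long openMillis;
    private final LongSupplier clockMillis; // e.g. System::currentTimeMillis
    private State state = State.CLOSED;
    private int consecutiveFailures;
    private long openedAt;

    CircuitBreaker(int failureThreshold, long openMillis, LongSupplier clockMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clockMillis = clockMillis;
    }

    // synchronized for simplicity, which also serializes calls to the origin.
    synchronized <T> T call(Supplier<T> origin, Supplier<T> fallback) {
        if (state == State.OPEN) {
            if (clockMillis.getAsLong() - openedAt < openMillis) {
                return fallback.get(); // fail fast without calling the origin
            }
            state = State.HALF_OPEN; // cooldown over: allow one trial call
        }
        try {
            T result = origin.get();
            state = State.CLOSED;
            consecutiveFailures = 0;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;
                openedAt = clockMillis.getAsLong();
            }
            return fallback.get();
        }
    }

    synchronized State state() {
        return state;
    }
}
```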

Zuul's core is a series of filters that can perform a range of actions during the routing of HTTP requests and responses. The key characteristics of a Zuul filter are:

- Type: usually defines the stage of the routing flow at which the filter is applied (although it can be any custom string).

- Execution order: applied within a type, defines the order of execution across multiple filters.

- Criteria: the conditions required for the filter to be executed.

- Action: the action to perform if the criteria are met.

The following example filter (in Groovy) delays requests whose `deviceType` parameter is `BrokenDevice`:

```groovy
import com.netflix.zuul.ZuulFilter
import com.netflix.zuul.context.RequestContext

class DeviceDelayFilter extends ZuulFilter {

    static Random rand = new Random()

    @Override
    String filterType() {
        return 'pre'
    }

    @Override
    int filterOrder() {
        return 5
    }

    @Override
    boolean shouldFilter() {
        // Only apply to requests whose deviceType parameter is "BrokenDevice"
        return RequestContext.getCurrentContext().getRequest()
                .getParameter("deviceType") == "BrokenDevice"
    }

    @Override
    Object run() {
        // Sleep for a random number of milliseconds in [0, 20000), i.e. up to 20 seconds
        sleep(rand.nextInt(20000))
        return null
    }
}
```

Zuul provides a framework to dynamically read, compile and run these filters. Filters do not communicate with each other directly; instead, they share state through a RequestContext that is unique to each request. Filters are written in Groovy, although Zuul supports any JVM-based language.

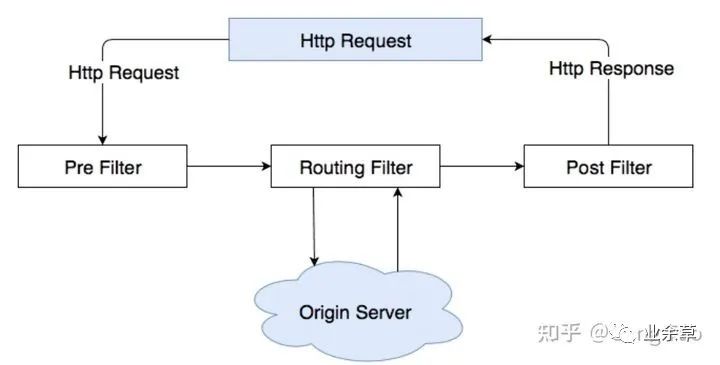

Zuul structure diagram

There are several standard filter types that correspond to the typical life cycle of a request:

- "Pre" filters execute before routing to the origin. Examples include request authentication, choosing origin servers and logging debug information.

- "Route" filters handle routing the request to an origin. This is where the origin HTTP request is built and sent using Apache HttpClient or Netflix Ribbon.

- "Post" filters execute after the request has been routed to the origin. Examples include adding standard HTTP headers to the response, gathering statistics and metrics, and streaming the response from the origin to the client.

- "Error" filters execute when an error occurs in one of the other phases.
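The four filter types and the type/order/criteria/action properties described above can be combined into a small Java model of the Zuul 1 lifecycle. This is a simplified sketch, not Zuul's API: the `FilterPipeline` and `Filter` classes, the context map standing in for `RequestContext`, and the `"error"` key are hypothetical. It also assumes, based on our reading of Zuul 1's `ZuulServlet`, that a failure in the pre or route phase runs the error filters and then still runs the post filters.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Predicate;

// Simplified model of the Zuul 1 filter lifecycle. Filters share state only
// through a per-request context map, standing in for Zuul's RequestContext.
final class FilterPipeline {
    static final class Filter {
        final String type;                                 // "pre", "route", "post" or "error"
        final int order;                                   // execution order within a type
        final Predicate<Map<String, Object>> shouldFilter; // criteria
        final Consumer<Map<String, Object>> run;           // action

        Filter(String type, int order, Predicate<Map<String, Object>> shouldFilter,
               Consumer<Map<String, Object>> run) {
            this.type = type;
            this.order = order;
            this.shouldFilter = shouldFilter;
            this.run = run;
        }
    }

    private final List<Filter> filters = new ArrayList<>();

    FilterPipeline add(Filter filter) {
        filters.add(filter);
        return this;
    }

    Map<String, Object> handle(Map<String, Object> request) {
        Map<String, Object> ctx = new HashMap<>(request);
        if (runOrRecover("pre", ctx)) {
            runOrRecover("route", ctx); // routing is skipped if a pre filter failed
        }
        runOrRecover("post", ctx);      // post filters still run after an error
        return ctx;
    }

    // Runs one phase; if a filter throws, records the message and runs the error filters.
    private boolean runOrRecover(String type, Map<String, Object> ctx) {
        try {
            runType(type, ctx);
            return true;
        } catch (RuntimeException e) {
            ctx.put("error", e.getMessage());
            runType("error", ctx);
            return false;
        }
    }

    private void runType(String type, Map<String, Object> ctx) {
        List<Filter> ofType = new ArrayList<>();
        for (Filter f : filters) {
            if (f.type.equals(type)) {
                ofType.add(f);
            }
        }
        ofType.sort(Comparator.comparingInt(f -> f.order));
        for (Filter f : ofType) {
            if (f.shouldFilter.test(ctx)) {
                f.run.accept(ctx);
            }
        }
    }
}
```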

Spring Cloud created an embedded Zuul proxy to ease the development of a very common use case in which a UI application wants to make proxy calls to one or more back-end services. This lets a user interface proxy to the back-end services it requires, avoiding the need to manage CORS and authentication concerns independently for every back end.

To enable it, annotate a Spring Boot main class with `@EnableZuulProxy`; local calls are then forwarded to the appropriate service.

Zuul is a Java library rather than an out-of-the-box API gateway, so testing its features requires building an application with Zuul.

The corresponding Maven POM is as follows:

```xml
<project
    xmlns="http://maven.apache.org/POM/4.0.0"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>naughtytao.apigateway</groupId>
  <artifactId>demo</artifactId>
  <version>0.0.1-SNAPSHOT</version>

  <parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>1.4.7.RELEASE</version>
    <relativePath />
    <!-- lookup parent from repository -->
  </parent>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
    <java.version>1.8</java.version>
    <!-- Dependencies -->
    <spring-cloud.version>Camden.SR7</spring-cloud.version>
  </properties>

  <dependencyManagement>
    <dependencies>
      <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-dependencies</artifactId>
        <version>${spring-cloud.version}</version>
        <type>pom</type>
        <scope>import</scope>
      </dependency>
    </dependencies>
  </dependencyManagement>

  <dependencies>
    <dependency>
      <groupId>org.springframework.cloud</groupId>
      <artifactId>spring-cloud-starter-zuul</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-actuator</artifactId>
      <exclusions>
        <exclusion>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-log4j2</artifactId>
    </dependency>
    <!-- enable authentication if security is included -->
    <!-- <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-security</artifactId>
    </dependency> -->
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <!-- API, java.xml.bind module -->
    <dependency>
      <groupId>jakarta.xml.bind</groupId>
      <artifactId>jakarta.xml.bind-api</artifactId>
      <version>2.3.2</version>
    </dependency>
    <!-- Runtime, com.sun.xml.bind module -->
    <dependency>
      <groupId>org.glassfish.jaxb</groupId>
      <artifactId>jaxb-runtime</artifactId>
      <version>2.3.2</version>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-test</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.junit.jupiter</groupId>
      <artifactId>junit-jupiter-api</artifactId>
      <version>5.0.0-M5</version>
      <scope>test</scope>
    </dependency>
  </dependencies>

  <build>
    <plugins>
      <plugin>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-maven-plugin</artifactId>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-compiler-plugin</artifactId>
        <version>3.3</version>
        <configuration>
          <source>1.8</source>
          <target>1.8</target>
        </configuration>
      </plugin>
    </plugins>
  </build>
</project>
```

The main application code is as follows:

```java
package naughtytao.apigateway.demo;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.EnableAutoConfiguration;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.autoconfigure.amqp.RabbitAutoConfiguration;
import org.springframework.cloud.netflix.zuul.EnableZuulProxy;
import org.springframework.context.annotation.ComponentScan;
import org.springframework.context.annotation.Bean;

import naughtytao.apigateway.demo.filters.ErrorFilter;
import naughtytao.apigateway.demo.filters.PostFilter;
import naughtytao.apigateway.demo.filters.PreFilter;
import naughtytao.apigateway.demo.filters.RouteFilter;

@SpringBootApplication
@EnableAutoConfiguration(exclude = { RabbitAutoConfiguration.class })
@EnableZuulProxy
@ComponentScan("naughtytao.apigateway.demo")
public class DemoApplication {

    public static void main(String[] args) {
        SpringApplication.run(DemoApplication.class, args);
    }
}
```

The Dockerfile is as follows:

FROM maven:3.6.3-openjdk-11
WORKDIR /usr/src/app
COPY src ./src
COPY pom.xml ./
RUN mvn -f ./pom.xml clean package -Dmaven.wagon.http.ssl.insecure=true -Dmaven.wagon.http.ssl.allowall=true -Dmaven.wagon.http.ssl.ignore.validity.dates=true
EXPOSE 8080
ENTRYPOINT ["java","-jar","/usr/src/app/target/demo-0.0.1-SNAPSHOT.jar"]

The routing configuration is defined in application.properties:

#Zuul routes
zuul.routes.goapi.url=http://goapi:8080
zuul.routes.nodeapi.url=http://nodeapi:8080
zuul.routes.flaskapi.url=http://flaskapi:8080
zuul.routes.springapi.url=http://springapi:8080
ribbon.eureka.enabled=false
server.port=8080
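The four zuul.routes.<name>.url entries only set a backend URL. As we understand Spring Cloud Netflix's defaults, such a route is exposed at the path /<name>/** and the /<name> prefix is stripped before the request is forwarded. The Java class below is a simplified, hypothetical model of that mapping, not Zuul's actual code: ZuulRouteSketch, addRoute and resolve are invented names, and the sketch ignores Ant-style pattern details and options such as path, stripPrefix and serviceId.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical, simplified model of Zuul 1 url-only routes (not Zuul's real implementation):
// route "goapi" is matched as /goapi/** and the "/goapi" prefix is stripped before forwarding.
class ZuulRouteSketch {
    // Route name -> backend base URL, mirroring zuul.routes.<name>.url
    private final Map<String, String> routes = new LinkedHashMap<>();

    ZuulRouteSketch addRoute(String name, String url) {
        routes.put(name, url);
        return this;
    }

    // Returns the backend URL a request path would be forwarded to, or empty if no route matches.
    Optional<String> resolve(String requestPath) {
        for (Map.Entry<String, String> route : routes.entrySet()) {
            String prefix = "/" + route.getKey() + "/";
            if (requestPath.startsWith(prefix)) {
                // Drop "/<name>" but keep the slash that starts the remaining path.
                String remainder = requestPath.substring(prefix.length() - 1);
                return Optional.of(route.getValue() + remainder);
            }
        }
        return Optional.empty();
    }
}
```

With the routes above, a request for a hypothetical path /goapi/hello on the gateway (port 8080) would be forwarded to http://goapi:8080/hello, while /goapix/hello would match no route.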

We again use Docker Compose to run the Zuul gateway for verification:

version: '3.7'
services:
  gateway:
    image: naughtytao/zuulgateway:0.1
    ports:
      - "8080:8080"
    volumes:
      - ./config/application.properties:/usr/src/app/config/application.properties
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 256M
        reservations:
          memory: 256M

The K6 load test results are as follows:

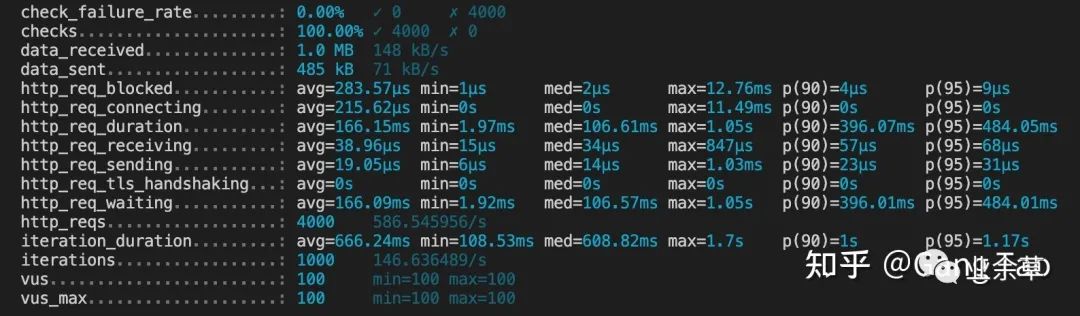

Zuul load test results

With the same resource limits as the other gateways (one CPU core, 256 MB of memory), Zuul performs significantly worse than the others, reaching a throughput of only about 200.

After we raised the allocation to 4 cores and 2 GB of memory, Zuul's throughput improved to 600-800, which shows that Zuul needs noticeably more resources.
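The figures above are throughput numbers reported by K6. As a rough illustration of what such a number measures, and not a reproduction of the article's K6 script, here is a minimal closed-loop load generator in Java: a fixed number of virtual users send requests back to back for a fixed duration, and throughput is completed requests divided by elapsed seconds, similar in spirit to a K6 run with a constant number of VUs. ClosedLoopLoadTest, run and the BooleanSupplier request hook are hypothetical names chosen for this sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.BooleanSupplier;

// Hypothetical closed-loop load generator; the request is any BooleanSupplier (true = success).
class ClosedLoopLoadTest {
    static final class Result {
        final long succeeded;
        final long failed;
        final double seconds;

        Result(long succeeded, long failed, double seconds) {
            this.succeeded = succeeded;
            this.failed = failed;
            this.seconds = seconds;
        }

        long total() { return succeeded + failed; }

        // Completed requests per second of wall-clock time.
        double throughput() { return total() / seconds; }

        double errorRate() { return total() == 0 ? 0.0 : (double) failed / total(); }
    }

    static Result run(int virtualUsers, long durationMillis, BooleanSupplier request) {
        AtomicLong ok = new AtomicLong();
        AtomicLong errors = new AtomicLong();
        ExecutorService users = Executors.newFixedThreadPool(virtualUsers);
        long start = System.nanoTime();
        long deadline = start + TimeUnit.MILLISECONDS.toNanos(durationMillis);
        try {
            List<Future<?>> running = new ArrayList<>();
            for (int i = 0; i < virtualUsers; i++) {
                // Each virtual user issues the next request as soon as the previous one finishes.
                running.add(users.submit(() -> {
                    while (System.nanoTime() < deadline) {
                        boolean success;
                        try {
                            success = request.getAsBoolean();
                        } catch (RuntimeException e) {
                            success = false; // count exceptions (e.g. timeouts) as errors
                        }
                        (success ? ok : errors).incrementAndGet();
                    }
                }));
            }
            for (Future<?> f : running) {
                f.get();
            }
        } catch (Exception e) {
            throw new RuntimeException(e);
        } finally {
            users.shutdown();
        }
        double seconds = (System.nanoTime() - start) / 1e9;
        return new Result(ok.get(), errors.get(), seconds);
    }
}
```

To aim it at a gateway, the supplier could wrap a java.net.http.HttpClient call and return true for a 2xx status. In a closed loop, throughput is roughly the number of virtual users divided by the average latency, so any latency the gateway adds directly lowers the measured rate.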

Note also that we used Zuul 1, while Netflix has since released Zuul 2, which substantially reworks the architecture. Zuul 1 is essentially a synchronous servlet that uses a multi-threaded, blocking model. Zuul 2 implements an asynchronous, non-blocking programming model on top of Netty. The synchronous model is easier to debug, but the many threads it needs consume CPU and memory, so it performs worse. Because the non-blocking Zuul 2 has low thread overhead, it supports more connections while using fewer resources.
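The difference between the two threading models can be seen in a toy Java program. This is an illustration only: it simulates a backend with a hypothetical 50 ms latency using Thread.sleep and a ScheduledExecutorService instead of real sockets, and it uses neither Zuul nor Netty. With a fixed pool of blocking worker threads, throughput is capped at roughly threads divided by latency; the event-loop style version needs a single thread because no thread sits waiting for the backend.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Toy comparison of blocking vs non-blocking request handling; backend latency is simulated.
class ThreadingModels {
    // Hypothetical backend latency per request.
    static final long LATENCY_MS = 50;

    // Zuul 1 style: every in-flight request holds a worker thread while it waits.
    static long blockingMillis(int requests, int workerThreads) {
        ExecutorService workers = Executors.newFixedThreadPool(workerThreads);
        long start = System.nanoTime();
        try {
            List<Future<?>> pending = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                pending.add(workers.submit(() -> {
                    try {
                        Thread.sleep(LATENCY_MS); // thread is parked for the whole backend call
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                }));
            }
            for (Future<?> f : pending) {
                f.get();
            }
        } catch (Exception e) {
            throw new RuntimeException(e);
        } finally {
            workers.shutdown();
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    // Zuul 2 style: one event-loop thread registers a completion callback and moves on.
    static long nonBlockingMillis(int requests) {
        ScheduledExecutorService eventLoop = Executors.newSingleThreadScheduledExecutor();
        long start = System.nanoTime();
        try {
            List<CompletableFuture<Void>> pending = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                CompletableFuture<Void> response = new CompletableFuture<>();
                // The simulated backend completes later; no thread waits in the meantime.
                eventLoop.schedule(() -> { response.complete(null); }, LATENCY_MS, TimeUnit.MILLISECONDS);
                pending.add(response);
            }
            CompletableFuture.allOf(pending.toArray(new CompletableFuture[0])).join();
        } finally {
            eventLoop.shutdown();
        }
        return (System.nanoTime() - start) / 1_000_000;
    }
}
```

For 200 requests, blockingMillis(200, 10) cannot finish in less than about 1,000 ms (20 rounds of 50 ms on 10 threads), while nonBlockingMillis(200) typically finishes shortly after 50 ms on one thread. Getting the blocking version to the same speed would take around 200 threads, which is where the extra CPU and memory cost comes from.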

Gravitee

Gravitee, from Gravitee.io (http://Gravitee.io), is an open source, Java-based, easy-to-use, high-performance and cost-effective API platform that helps organizations secure, publish and analyze their APIs.

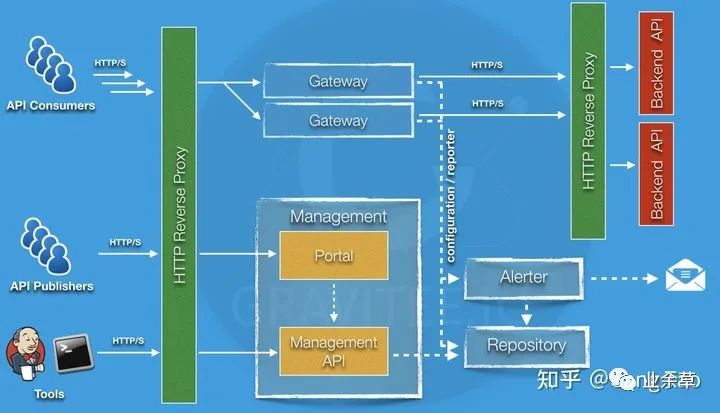

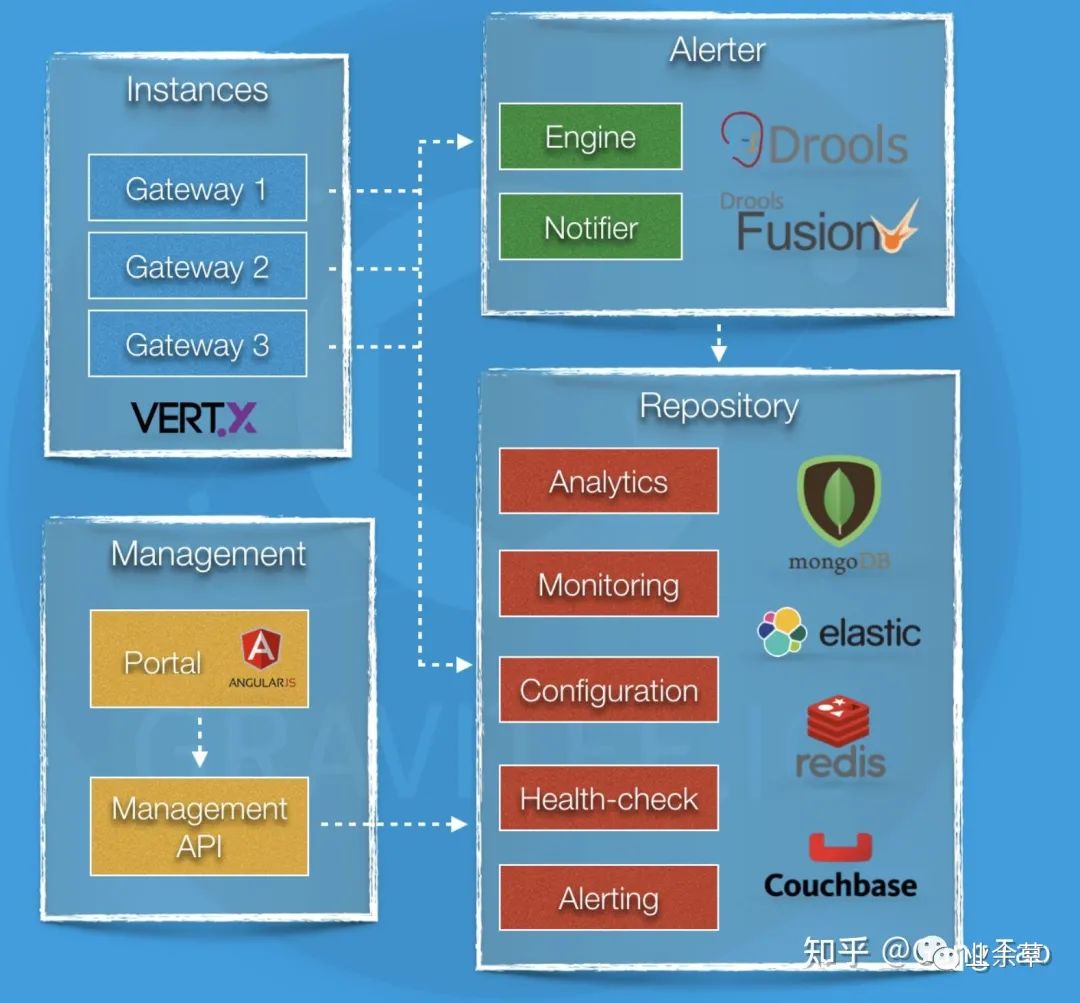

Gravitee gateway

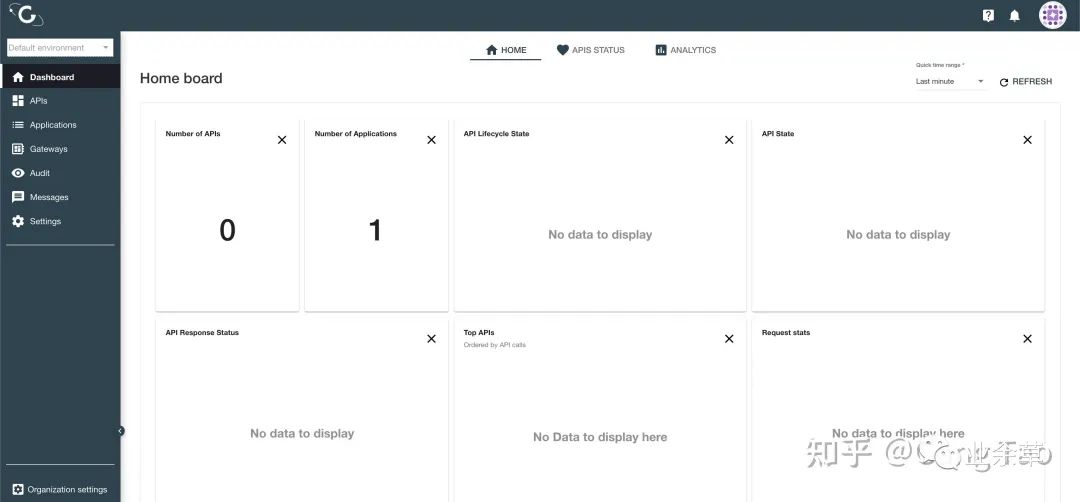

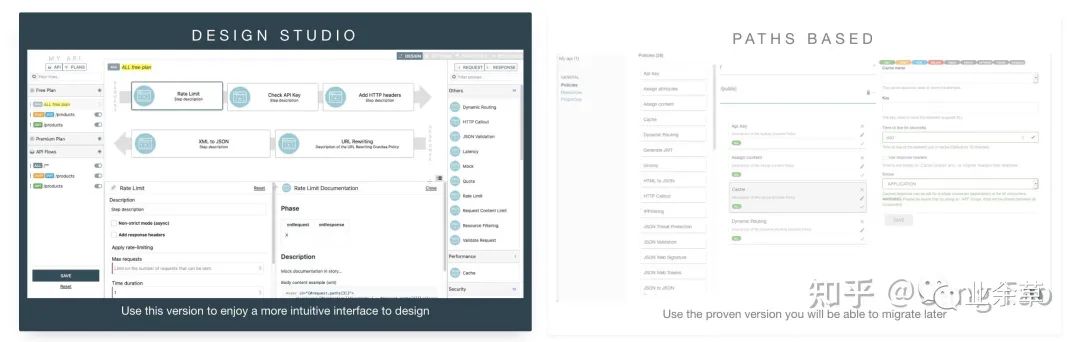

Gravitee offers two ways to create and manage APIs: Design Studio and Paths

Gravitee Management API

Gravitee provides a gateway, an API portal and API management. The gateway and the Management API are open source, while the portal requires a registered license.

Gravitee


Gravitee uses MongoDB as its backing store and Elasticsearch (ES) for analytics.

We also use Docker Compose to deploy the entire Gravitee stack.

version: '3.7'

networks:

frontend:

name: frontend

storage:

name: storage

volumes:

data-elasticsearch:

data-mongo:

services:

mongodb:

image: mongo:${MONGODB_VERSION:-3.6}

container_name: gio_apim_mongodb

restart: always

volumes:

- data-mongo:/data/db

- ./logs/apim-mongodb:/var/log/mongodb

networks:

- storage

elasticsearch:

image: docker.elastic.co/elasticsearch/elasticsearch:${ELASTIC_VERSION:-7.7.0}

container_name: gio_apim_elasticsearch

restart: always

volumes:

- data-elasticsearch:/usr/share/elasticsearch/data

environment:

- http.host=0.0.0.0

- transport.host=0.0.0.0

- xpack.security.enabled=false

- xpack.monitoring.enabled=false

- cluster.name=elasticsearch

- bootstrap.memory_lock=true

- discovery.type=single-node

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

ulimits:

memlock:

soft: -1

hard: -1

nofile: 65536

networks:

- storage

gateway:

image: graviteeio/apim-gateway:${APIM_VERSION:-3}

container_name: gio_apim_gateway

restart: always

ports:

- "8082:8082"

depends_on:

- mongodb

- elasticsearch

volumes:

- ./logs/apim-gateway:/opt/graviteeio-gateway/logs

environment:

- gravitee_management_mongodb_uri=mongodb://mongodb:27017/gravitee?serverSelectionTimeoutMS=5000&connectTimeoutMS=5000&socketTimeoutMS=5000

- gravitee_ratelimit_mongodb_uri=mongodb://mongodb:27017/gravitee?serverSelectionTimeoutMS=5000&connectTimeoutMS=5000&socketTimeoutMS=5000

- gravitee_reporters_elasticsearch_endpoints_0=http://elasticsearch:9200

networks:

- storage

- frontend

deploy:

resources:

limits:

cpus: '1'

memory: 256M

reservations:

memory: 256M

management_api:

image: graviteeio/apim-management-api:${APIM_VERSION:-3}

container_name: gio_apim_management_api

restart: always

ports:

- "8083:8083"

links:

- mongodb

- elasticsearch

depends_on:

- mongodb

- elasticsearch

volumes:

- ./logs/apim-management-api:/opt/graviteeio-management-api/logs

environment:

- gravitee_management_mongodb_uri=mongodb://mongodb:27017/gravitee?serverSelectionTimeoutMS=5000&connectTimeoutMS=5000&socketTimeoutMS=5000

- gravitee_analytics_elasticsearch_endpoints_0=http://elasticsearch:9200

networks:

- storage

- frontend

management_ui:

image: graviteeio/apim-management-ui:${APIM_VERSION:-3}

container_name: gio_apim_management_ui

restart: always

ports:

- "8084:8080"

depends_on:

- management_api

environment:

- MGMT_API_URL=http://localhost:8083/management/organizations/DEFAULT/environments/DEFAULT/

volumes:

- ./logs/apim-management-ui:/var/log/nginx

networks:

- frontend

portal_ui:

image: graviteeio/apim-portal-ui:${APIM_VERSION:-3}

container_name: gio_apim_portal_ui

restart: always

ports:

- "8085:8080"

depends_on:

- management_api

environment:

- PORTAL_API_URL=http://localhost:8083/portal/environments/DEFAULT

volumes:

- ./logs/apim-portal-ui:/var/log/nginx

networks:

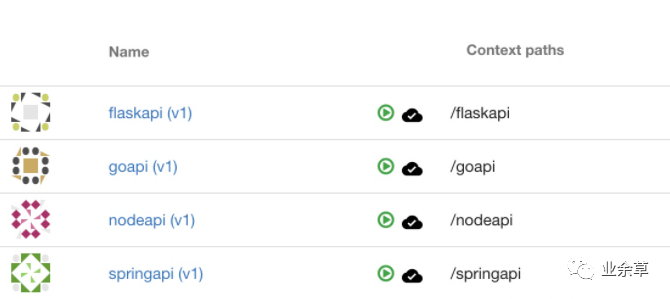

- frontendWe use the management UI to create four corresponding APIs to route the gateway. We can also use the API. Gravitee is the only open source product in this open source gateway.

Gravitee
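The four APIs can also be created through the Management API instead of the UI. The sketch below only builds such a request with Java 11's java.net.http.HttpRequest and does not send it. Only the base URL is taken from MGMT_API_URL in the Compose file above; the apis path segment, the JSON field names (name, version, description, contextPath, endpoint) and the admin/admin credentials are assumptions about Gravitee APIM 3.x that should be checked against its Management API documentation. GraviteeApiRequest, body and create are hypothetical names.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.Base64;

// Hypothetical helper that builds (but does not send) a "create API" request for Gravitee.
// ASSUMPTIONS: the "apis" path, the JSON field names and the credentials are not confirmed by this article.
class GraviteeApiRequest {
    // Base URL taken from MGMT_API_URL in the Compose file.
    static final String BASE = "http://localhost:8083/management/organizations/DEFAULT/environments/DEFAULT/";

    // No JSON escaping: inputs are assumed to be simple names, paths and URLs.
    static String body(String name, String contextPath, String backendUrl) {
        return String.format(
                "{\"name\":\"%s\",\"version\":\"1\",\"description\":\"%s\",\"contextPath\":\"%s\",\"endpoint\":\"%s\"}",
                name, name, contextPath, backendUrl);
    }

    static HttpRequest create(String name, String contextPath, String backendUrl, String user, String password) {
        // HTTP Basic authentication header built from the (assumed) management credentials.
        String auth = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(URI.create(BASE + "apis"))
                .timeout(Duration.ofSeconds(10))
                .header("Content-Type", "application/json")
                .header("Authorization", "Basic " + auth)
                .POST(HttpRequest.BodyPublishers.ofString(body(name, contextPath, backendUrl)))
                .build();
    }
}
```

Sending it would be HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()). Depending on the APIM version, a newly created API may still have to be started and deployed before the gateway on port 8082 serves it; those calls are not shown here.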

The K6 load test results are as follows:

Gravitee load test results

Like Zuul, which is also written in Java, Gravitee reaches only about 200, with some errors, so we again had to raise the gateway's resource allocation to 4 cores and 2 GB.

Gravitee load test results (4 cores, 2 GB)

With the larger allocation, throughput rose to 500-700, slightly below Zuul's 600-800.

Summary

This article analyzed the architecture and basic functions of several open source API gateways to give you some basic reference information for architecture selection. The tests here are simple and cover only a single scenario, so they cannot serve as the basis for an actual selection.

- NGINX: NGINX is a high-performance API gateway written in C with many plug-ins. If your API management needs are relatively simple and you are comfortable configuring routes by hand, NGINX is a good choice.

- Kong: Kong is an API gateway built on NGINX using OpenResty and Lua extensions, with PostgreSQL as its backing store. It is feature-rich and very popular in the community, but it loses a considerable amount of performance compared with plain NGINX. If you need rich functionality and extensibility, Kong is worth considering.

- APISIX: APISIX has an architecture similar to Kong's, but it follows a cloud native design and uses etcd as its backing store. It performs considerably better than Kong and suits cloud native deployments with high performance requirements. In particular, APISIX supports the MQTT protocol, which makes it well suited to building IoT applications.

- Tyk: Tyk is written in Golang and uses Redis as its backing store. Its performance is good, so if you like Golang it is worth considering. Note that Tyk's open source license is the MPL, which requires modified source files to remain open when you distribute them; this makes it less friendly to commercial use.

- Zuul: Zuul is Netflix's open source, Java-based API gateway component. It is not an out-of-the-box API gateway: you build it into your own Java application, and all functionality comes from integrating other components. It suits teams familiar with Java and applications built in Java. Its drawback is that it performs worse than the other open source products, so it needs more resources to reach the same performance.

- Gravitee: Gravitee is an open source, Java-based API management platform from Gravitee.io that can manage the whole API life cycle. Even the open source edition has good UI support. However, because it is built in Java, performance is a weak point; it suits scenarios with strong API management requirements.

All the code for this article is available at https://github.com/gangtao/api-gateway