Source:

Work done in the "South surveying and mapping Cup" scientific and Technological Paper Competition in those years

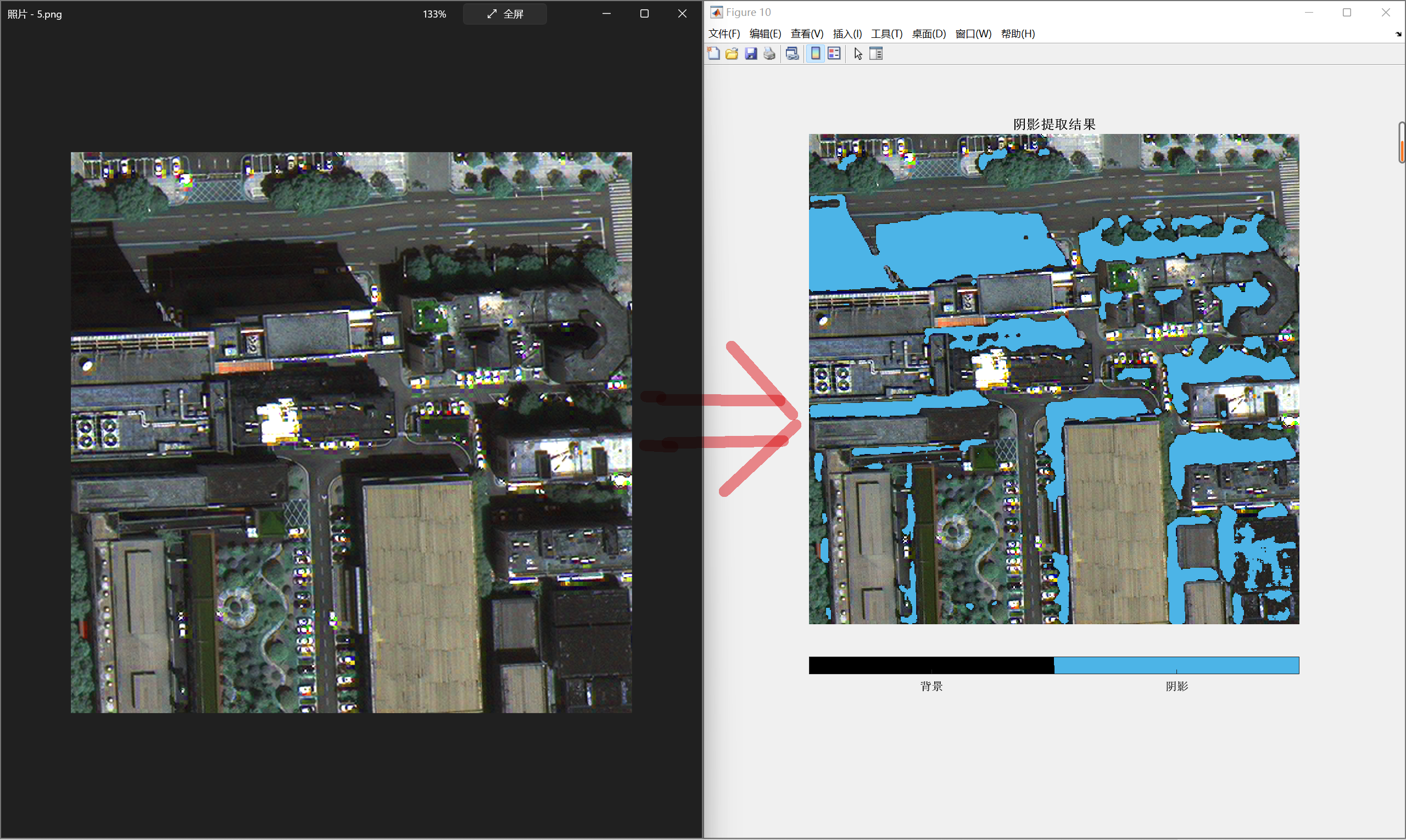

First look at the effect:

Implementation idea:

Due to the existence of obstructions, the radiant energy of radiation source (sun) can not reach some areas on the ground. These areas are the shadow areas on remote sensing images. They usually have an associated relationship with obstructions, and buildings are one of the obstructions. Therefore, there will be shadows near the building area, and the building area is related to the position relationship and solar azimuth of the corresponding shadow area. In view of this, this paper uses the positional relationship between buildings and shadows to detect vanishing buildings and obtain the approximate building area at the same time. The shadow extraction method in this paper includes the following four steps:

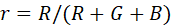

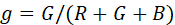

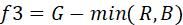

In the visible remote sensing image, most of the radiation energy is only composed of sunlight, and the chromaticity of the shadow area should be the same as that of direct illumination. Therefore, the shadow area and high brightness area will not be affected by the normalized color space [1]. Therefore, we characterize the shadow feature i by calculating the difference between the original color space and the normalized color space;

|

| (1) |

|

| (2) |

|

| (3) |

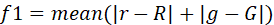

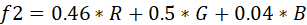

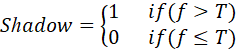

Due to the existence of occlusion, the shadow shows low brightness in the optical remote sensing image, so the brightness can be used as the feature ii. At present, there are many methods to calculate brightness, such as mean method, maximum method, conversion to HSV space, etc., but these methods do not take into account the sensitivity of human eyes to three kinds of RGB lights. Therefore, the following brightness calculation methods are designed according to the different sensitivity of human eyes to three kinds of RGB lights [2]

|

| (4) |

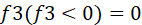

Due to the existence of gaps between leaves in the vegetation area, spotted shadow areas will be formed in the vegetation area. Therefore, it is necessary to construct vegetation area features and remove these spots to obtain purer shadows. In the visible light range, vegetation is generally green, so it can be characterized by the minimum value of green light band minus red light band and blue light band iii[3]

| | (5) |

|

| (6) |

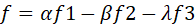

In the comprehensive decision-making part, after the above analysis, the shaded area and highlighted area show large values for feature i; For feature ii, the shaded area and vegetation area show smaller values, and the highlighted area shows larger values; For feature iii, vegetation presents a larger value and other parts show a smaller value. Therefore, the following formula can be constructed as the final decision item (the three features have been normalized to [0,1] before calculation), and then Otsu threshold segmentation can be used to obtain a better shadow extraction effect.

|

| (7) |

|

| (8) |

among α,β,λ They are the weights corresponding to the three features, which can be adjusted according to the actual situation.

Source code matlab:

%yangzhen

%2019.5.11

clear;

clc;

close all

%%

pathname = 'data/';

filenames = dir(pathname);

for i=3:length(filenames)

fileID = [pathname, filenames(i).name];

img = imread(fileID);

img = im2double(img);

%% Calculate chromaticity normalization

idist = GetColor(img);

%% Calculate brightness according to human vision

ilight = GetLight(img);

%% Calculate vegetation dimensional characteristics

ivege = GetVege(img);

%% Comprehensive decision

final = GetL_D_V(idist, ilight, ivege);

%% Result post-processing

shadow = FinalTrate(final);

% shadow=final;

%% Define visual colors

icolormap = [0,0,0; 76,180,231]/255;

%% visualization

figure;

colormap(icolormap);

imshow(img);

hold on

h = imagesc(shadow);

set(h,'alphadata',shadow)

title('Shadow extraction results', 'Interpreter', 'None', 'FontSize',12,'FontWeight','bold');

colorbar('horiz', 'Ticks',[0.25, 0.75],...

'TickLabels',{'background','shadow'}, ...

'FontSize',10,'FontWeight','bold');

%% Save results

% save('shadow.mat', 'shadow');

end

%% Standardized function

function result = standard( data )

[irow, icol, idim] = size(data);

data = reshape(data, [irow*icol, idim]);

temp1 = bsxfun(@minus, data, min(data));

result = bsxfun(@rdivide, temp1, max(data) - min(data));

result = reshape(result, [irow, icol,idim]);

end

%% Calculate image brightness according to human visual characteristics

function result = GetLight(img)

R = standard( img(:, :, 1) );

G = standard( img(:, :, 2) );

B = standard( img(:, :, 3) );

result = 0.04*R+0.5*G+0.46*B;

end

%% Chromaticity space normalization

function result = GetColor(img)

img1 = img;

misc = (img(:, :, 1) + img(:, :, 2) + img(:, :, 3));

misc(misc == 0) = 0.0000001;

img(:, :, 1) = img(:, :, 1) ./ misc;

img(:, :, 2) = img(:, :, 2) ./ misc;

result = imabsdiff(img, img1);

result = mean(result(:, :, 1:2), 3);

end

%% Obtain vegetation characteristics

function result = GetVege(img)

R = standard( img(:, :, 1) );

G = standard( img(:, :, 2) );

B = standard( img(:, :, 3) );

result = (G-min(R, B));

result( result<0 ) = 0;

end

%% General decision

function result = GetL_D_V(idist, ilight, ivege)

idist = standard(idist);

ilight = standard(ilight);

ivege = standard(ivege);

result = (idist-ilight-ivege);

result( result<0 ) = 0;

end

%% Result post-processing,Some parameters here can be adjusted according to the actual situation

function result = FinalTrate(img)

T = graythresh(img);

result = imbinarize(img, T);

%median filtering

result=medfilt2(result, [7, 7]);

endSource code - python:

#yangzhen

#2020.4.13

"""get the shadow proportion form images

of remote sensing"""

import numpy as np

import cv2

import os

import glob

import matplotlib.pyplot as plt

from matplotlib import cm

from matplotlib.colors import LinearSegmentedColormap

from pylab import mpl

import random

mpl.rcParams['font.sans-serif'] = ['FangSong']

mpl.rcParams['axes.unicode_minus'] = False

def standard(data):

'''Image file standardization

Input single channel image

Output standardized single channel image'''

mdata = data.copy()

irow, icol = mdata.shape[0:2]

mdata = np.reshape(mdata, [irow*icol, 1])

temp1 = mdata - np.min(data)

result = temp1/(np.max(data)-np.min(data))

result = np.reshape(result, [irow, icol])

return result

def GetLight(img):

'''Calculating the brightness of human visual characteristics'''

mimg = img.copy()

B = mimg[:,:,0]

G = mimg[:,:,1]

R = mimg[:,:,2]

result = 0.04*R+0.5*G+0.46*B

return result

def GetColor(img):

'''Chromaticity space normalization'''

mimg = img.copy()

misc = mimg[:,:,0]+mimg[:,:,1]+mimg[:,:,2]

misc[misc == 0] = 0.0000001

mimg[:,:,0] = img[:,:,0]/misc

mimg[:,:,1] = img[:,:,1]/misc

result = np.abs(mimg - img)

result = (result[:,:,0]+result[:,:,1])/2

return result

def GetVege(img):

'''Obtain vegetation characteristics'''

mimg = img.copy()

B = mimg[:,:,0]

G = mimg[:,:,1]

R = mimg[:,:,2]

result = G-np.minimum(R, B)

result[result<0] = 0

return result

def GetLDV(idist, ilight, ivege):

'''General decision'''

idist = standard(idist)

ilight = standard(ilight)

ivege = standard(ivege)

result = idist-ilight-ivege

result[result<0]=0

return result

def FinalTrare(img):

'''Result post-processing'''

mimg = img.copy()

mimg = np.uint8(standard(mimg)*255)

T, result = cv2.threshold(mimg, 0, 255, cv2.THRESH_OTSU)

result = cv2.medianBlur(result, 7)

return result

if __name__ == "__main__":

#Get input picture path

filepath = 'data/'

filenames = glob.glob(filepath + '*')

print(filenames)

for filename in filenames:

img = cv2.imread(filename)

# Get shadow

img1 = img.copy()

img1 = img1.astype(np.float)

img1[:,:,0] = standard(img1[:,:,0])

img1[:,:,1] = standard(img1[:,:,1])

img1[:,:,2] = standard(img1[:,:,2])

idist = GetColor(img1)

ilight = GetLight(img1)

ivege = GetVege(img1)

final = GetLDV(idist, ilight, ivege)

shadow = FinalTrare(final)

# visualization

color_list = ['#00000000', '#4cb4e7']

my_cmap = LinearSegmentedColormap.from_list('mcmp', color_list)

cm.register_cmap(cmap=my_cmap)

fig = plt.figure()

plt.title('Shadow extraction results', fontsize=12, fontweight='bold')

plt.imshow(img)

plt.imshow(shadow, cmap='mcmp')

plt.show()reference:

- Xu L Q, Landabaso, J.L., Pardas, M.Shadow removal with blob-based morphological reconstruction for error correction[C]. Acoustics, Speech, and Signal Processing, 2005. Proceedings. (ICASSP '05). IEEE International Conference on

- Liu Jiahang. Research on target recognition and extraction technology of high-resolution optical remote sensing image based on visual features [D]. Shanghai Jiaotong University, 2011

- Shen Xiaole. Building extraction from object high-resolution remote sensing images under visual attention mechanism [D]. Wuhan University, 2014

Test data:

Link: https://pan.baidu.com/s/1Mtew7ESJLFeqeGmvrOwvhQ

Extraction code: 1234