Memory sharing

-

Memory sharing is realized through value array

- Returns a ctypes object created from shared memory

- Requests and returns an array object of type ctypes from shared memory

-

Memory sharing via Manager

- The Manager object returned by the Manager controls a service process, which saves Python objects and allows other processes to manipulate objects through agents

- The returned manager supports list, dict, etc

- Note: synchronization may require locking, especially when + = update is encountered

from multiprocessing import Process

from multiprocessing import Manager, Lock

import time

import random

def register(d,name):

if name in d:

print('duplicated name found...register anyway...')

d[name]+=1

else:

d[name]=1

time.sleep(random.random())

def register_with_loc(d,name,lock):

with lock:

if name in d:

print('duplicated name found...register anyway...')

d[name]+=1

else:

d[name]=1

time.sleep(random.random())

if __name__=='__main__':

#Manager

names=['Amy','Lily','Dirk','Lily', 'Denial','Amy','Amy','Amy']

manager=Manager()

dic=manager.dict()

lock=Lock()

#manager.list()

students=[]

for i in range(len(names)):

#s=Process(target=register,args=(dic,names[i]))

s=Process(target=register_with_loc,args=(dic,names[i],lock))

students.append(s)

for s in students:

s.start()

for s in students:

s.join()

print('all processes ended...')

for k,v in dic.items():

print("{}\t{}".format(k,v))

You can share dictionaries

duplicated name found...register anyway... duplicated name found...register anyway... duplicated name found...register anyway... duplicated name found...register anyway... all processes ended... Amy 4 Dirk 1 Denial 1 Lily 2

- Process pool

- Too many processes open, resulting in reduced efficiency (synchronization and switching costs)

- The number of work processes should be fixed

- These processes perform all tasks instead of starting more processes

- Related to the number of cores of the CPU

Create process pool

-

Pooll([numprocess [,initializer [, initargs]]])

- numprocess: the number of processes to create. Os.cpu is used by default_ Value of count()

- initializer: the callable object to be executed when each worker process starts. The default is None

- Initargs: parameters of initializer

-

p.apply()

- Synchronous call

- Only one process executes (not in parallel)

- However, you can get the returned result directly (blocking to the returned result)

-

p.apply_async()

- Asynchronous call

- Parallel execution, the result may not be returned immediately (AsyncResult)

- There can be a callback function. The main process will be notified immediately after any task in the process pool is completed. The main process will call another function to process the result. This function is the callback function, and its parameter is the return result

-

p.close()

-

p.join()

- Wait for all work processes to exit, only after close() or teminate().

-

supplement

The callback function has only one parameter, that is, the result

The callback function is called by the main process

The callback function should end quickly

The order of callbacks is independent of the order in which child processes are started -

p.map()

In parallel, the main process waits for all child processes to finish -

p.map_async()

from multiprocessing import Process

from multiprocessing import Pool

from multiprocessing import current_process

import matplotlib.pyplot as plt

import os

import time

global_result=[]

def fib(max):

n,a,b=0,0,1

while n<max:

a,b=b,a+b

n+=1

return b

def job(n):

print('{} is working on {}...'.format(os.getpid(),n))

time.sleep(2)

return fib(n)

def add_result(res):#callback func

global global_result

print("called by {}, result is {}".format(current_process().pid,res))

#You can also return process identification information to identify the results (such as process parameters)

#It can be stored in a dictionary

global_result.append(res)

def add_result_map(res):

global global_result

print("called by {}, result is {}".format(current_process().pid,res))

for r in res:

global_result.append(r)

if __name__=='__main__':

p=Pool()#cpu determines

ms=range(1,20)

results=[]

#Synchronous call

#Create multiple processes, but only one execution. You need to wait until the execution is completed before returning the results

#Not parallel

for m in ms:

print('{} will be applied in main'.format(m))

res=p.apply(job,args=(m,))#Will wait for the execution to finish before executing the next one

print(res)

print('{} is applied in main'.format(m))

results.append(res)

p.close()#!!!

print(results)

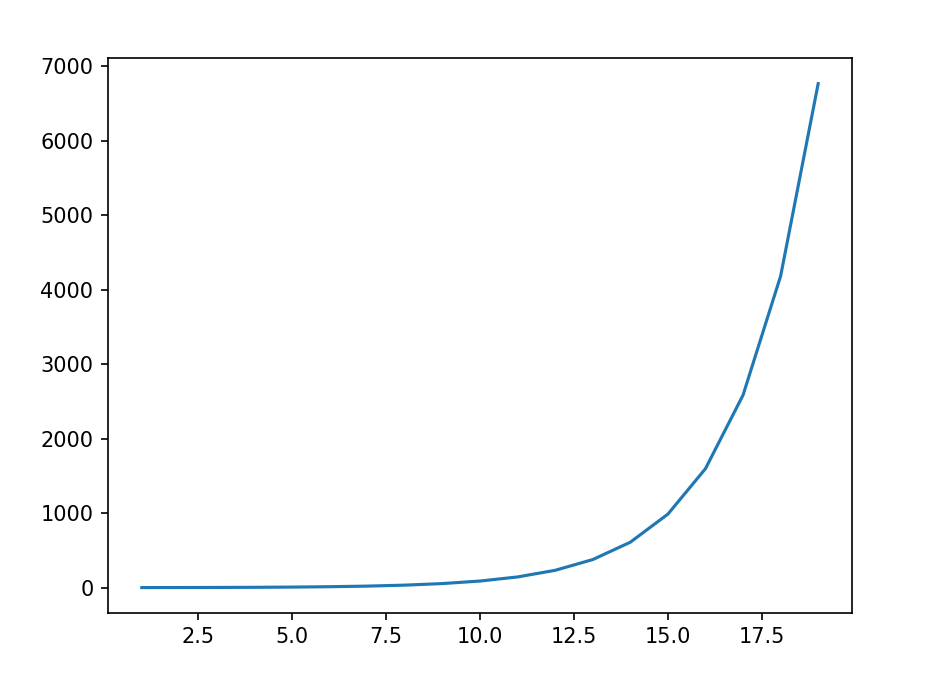

plt.figure()

plt.plot(ms,results)

plt.show()

plt.close()

#Asynchronous call

#Can be parallel

'''for m in ms:

res=p.apply_async(job,args=(m,))#Note that here res is just a reference

results.append(res)

#If you print immediately, there may be no results

print(res)

p.close()

p.join()

results2=[]

for res in results:

results2.append(res.get())

print(results2)

plt.figure()

plt.plot(ms,results2)

plt.show()

plt.close()'''

#callback

'''for m in ms:

p.apply_async(job,args=(m,),callback=add_result)

#The callback function has only one parameter

#The callback function is executed by the main process

p.close()

p.join()

plt.figure()

plt.plot(ms,sorted(global_result))#The order may be chaotic. You can sort and solve it here, but other problems are not necessarily

plt.show()

plt.close()'''

#Use map

'''results3=p.map(job,ms)

print(type(results3))#list

p.close()

plt.figure()

plt.plot(ms,results3)

plt.show()

plt.close()'''

#Use map_async

'''p.map_async(job,ms,callback=add_result_map)

p.close()

p.join()

plt.figure()

print(len(ms))

print(len(global_result))

plt.plot(ms,global_result)

plt.show()

plt.close()'''

During synchronous call, res=p.apply(job,args=(m,)) will wait for the execution to finish before executing the next one, so it will consume a lot of time and take a long time

1 will be applied in main 9028 is working on 1... 1 1 is applied in main 2 will be applied in main 3396 is working on 2... 2 2 is applied in main 3 will be applied in main 17520 is working on 3... 3 3 is applied in main 4 will be applied in main 12984 is working on 4... 5 4 is applied in main 5 will be applied in main 10720 is working on 5... 8 5 is applied in main 6 will be applied in main 18792 is working on 6... 13 6 is applied in main 7 will be applied in main 14768 is working on 7... 21 7 is applied in main 8 will be applied in main 19524 is working on 8... 34 8 is applied in main 9 will be applied in main 9028 is working on 9... 55 9 is applied in main 10 will be applied in main 3396 is working on 10... 89 10 is applied in main 11 will be applied in main 17520 is working on 11... 144 11 is applied in main 12 will be applied in main 12984 is working on 12... 233 12 is applied in main 13 will be applied in main 10720 is working on 13... 377 13 is applied in main 14 will be applied in main 18792 is working on 14... 610 14 is applied in main 15 will be applied in main 14768 is working on 15... 987 15 is applied in main 16 will be applied in main 19524 is working on 16... 1597 16 is applied in main 17 will be applied in main 9028 is working on 17... 2584 17 is applied in main 18 will be applied in main 3396 is working on 18... 4181 18 is applied in main 19 will be applied in main 17520 is working on 19... 6765 19 is applied in main [1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181, 6765]

When calling asynchronously, no result will be printed in the middle. The result will be printed only after all execution is completed, but the running speed is fast.

<multiprocessing.pool.ApplyResult object at 0x000001EF6A9C16A0> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1780> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1828> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C18D0> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1978> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1A20> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1AC8> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1B70> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1C18> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1CC0> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1D68> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1E10> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1EB8> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1F60> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C1FD0> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C80F0> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C8198> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C8240> <multiprocessing.pool.ApplyResult object at 0x000001EF6A9C82E8> 21056 is working on 1... 16880 is working on 2... 13832 is working on 3... 17564 is working on 4... 21056 is working on 5... 16940 is working on 6... 12728 is working on 7... 6592 is working on 8... 18296 is working on 9... 16880 is working on 10... 13832 is working on 11... 17564 is working on 12... 21056 is working on 13... 16940 is working on 14... 12728 is working on 15... 6592 is working on 16... 18296 is working on 17... 16880 is working on 18... 13832 is working on 19... [1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181, 6765]

Result of callback function

4600 is working on 1... 4584 is working on 2... 2892 is working on 3... 10088 is working on 4... 14404 is working on 5... 4600 is working on 6... called by 14812, result is 116212 is working on 7... 4608 is working on 8... called by 14812, result is 2 4584 is working on 9... 2632 is working on 10... called by 14812, result is 3 2892 is working on 11... called by 14812, result is 5 10088 is working on 12... called by 14812, result is 8 14404 is working on 13... called by 14812, result is 13 4600 is working on 14... called by 14812, result is 21 16212 is working on 15... called by 14812, result is 34 4608 is working on 16... called by 14812, result is 55 4584 is working on 17... called by 14812, result is 89 2632 is working on 18... called by 14812, result is 144 2892 is working on 19... called by 14812, result is 233 called by 14812, result is 377 called by 14812, result is 610 called by 14812, result is 987 called by 14812, result is 1597 called by 14812, result is 2584 called by 14812, result is 4181 called by 14812, result is 6765

ProcessPoolExecutor

- Further abstraction of multiprocessing

- Provide simpler and unified interface

- submit(fn, *args, **kwargs)

- returns a Future object representing the execution of the callable

- Add: calling result() of Future immediately will block

- map(func, *iterables, timeout=None)

• func is executed asynchronously, i.e., several calls to func may be made concurrently and returns an iterator of results

import concurrent.futures

from multiprocessing import current_process

import math

PRIMES = [

1112272535095293,

1112582705942171,

1112272535095291,

1115280095190773,

1115797848077099,

11099726899285419]

def is_prime(n):

print(f"{current_process().pid}")

if n % 2 == 0:

return False

sqrt_n = int(math.floor(math.sqrt(n)))

for i in range(3, sqrt_n + 1, 2):

if n % i == 0:

return False

return True

def main_submit():

results=[]

with concurrent.futures.ProcessPoolExecutor() as executor:

for number in PRIMES:

n_future=executor.submit(is_prime,number)

#print(n_future.result())#block

results.append(n_future)

#Note here that if you immediately get the result in the main process, that is, n_future.result(), that is, the main process will block and cannot be parallelized

#Therefore, it is recommended to replace n first_ Put future into the list and get the results after starting all processes.

for number, res in zip(PRIMES,results):

print("%d is prime: %s" % (number,res.result()))

def main_map():

with concurrent.futures.ProcessPoolExecutor() as executor:

for number, prime_or_not in zip(PRIMES, executor.map(is_prime, PRIMES)):

print('%d is prime: %s' % (number, prime_or_not))

if __name__ == '__main__':

main_submit()

#main_map()

8004 8004 1112272535095293 is prime: False 4332 18212 18212 18212 1112582705942171 is prime: True 1112272535095291 is prime: True 1115280095190773 is prime: False 1115797848077099 is prime: False 11099726899285419 is prime: False

Multi process

- Distributed multi process

- Multi machine environment

- Cross device data exchange

- Master worker model

- Expose Queue through manager

- GIL(Global Interpreter Lock)

- GIL is not a python feature, but a concept introduced when implementing the Python interpreter (Cpython)

- GIL is essentially a mutual exclusion lock, which controls that shared data can only be modified by one task at the same time to ensure data security

- GIL protects shared data at the interpreter level, while protecting data at the user programming level requires self locking

- In the Cpython interpreter, multiple threads started in the same process can only be executed by one thread at a time, which can not take advantage of multi-core

- You may need to get the GIL first

Multithreaded programming

| Multiprocess Process | Multithreaded thread |

|---|---|

| Multiple cores can be used | Unable to utilize multi-core |

| High cost | Low cost |

| Compute intensive | IO intensive |

| Financial analysis | socket, crawler, web |

-

Threading module

-

The multiprocessing module and the threading module are very similar at the use level

-

threading.currentThread(): returns the current thread instance

-

threading.enumerate(): returns a list of all running threads

-

threading.activeCount(): returns the number of running threads, which is the same as the result of len(threading.enumerate())

-

Create multithreading

- By specifying the target parameter

- By inheriting the Thread class

- Set daemon thread

- setDaemon(True)

- Expected before start()

from threading import Thread,currentThread

import time

def task(name):

time.sleep(2)

print('%s print name: %s' %(currentThread().name,name))

class Task(Thread):

def __init__(self,name):

super().__init__()

self._name=name

def run(self):

time.sleep(2)

print('%s print name: %s' % (currentThread().name,self._name))

if __name__ == '__main__':

n=100

var='test'

t=Thread(target=task,args=('thread_task_func',))

t.start()

t.join()

t=Task('thread_task_class')

t.start()

t.join()

print('main')

Thread-1 print name: thread_task_func thread_task_class print name: thread_task_class main

- Thread synchronization

- Lock (threading.Lock,threading.RLock, reentrant lock)

- Once the thread obtains the reentry lock, it will not block when it obtains it again

- The thread must be released once after each fetch

- Difference: recursive call

- Semaphore threading.Semaphore

- Event threading.Event

- Condition threading.Condition

- Timer threading.Timer

- Barrier

- Lock (threading.Lock,threading.RLock, reentrant lock)

- Thread local variable

- queue

- queue.Queue

- queue.LifoQueue

- queue.PriorityQueue

- Thread pool ThreadPoolExecutor

Comparison of threads and processes

from multiprocessing import Process

from threading import Thread

import os,time,random

def dense_cal():

res=0

for i in range(100000000):

res*=i

def dense_io():

time.sleep(2)#simulate the io delay

def diff_pt(P=True,pn=4,target=dense_cal):

tlist=[]

start=time.time()

for i in range(pn):

if P:

p=Process(target=target)

else:

p=Thread(target=target)

tlist.append(p)

p.start()

for p in tlist:

p.join()

stop=time.time()

if P:

name='multi-process'

else:

name='multi-thread'

print('%s run time is %s' %(name,stop-start))

if __name__=='__main__':

diff_pt(P=True)

diff_pt(P=False)

diff_pt(P=True,pn=100,target=dense_io)

diff_pt(P=False,pn=100,target=dense_io)

multi-process run time is 28.328214168548584 multi-thread run time is 64.33887600898743 multi-process run time is 13.335324048995972 multi-thread run time is 5.584061861038208

signal

- A restricted way of interprocess communication in signal operating system

- An asynchronous notification mechanism that alerts the process that an event has occurred

- When a signal is sent to a process, the operating system interrupts its execution

• any non atomic operation will be interrupted

• if the process defines the signal processing function, the function will be executed, No

The default processing function is executed

• signal.signal(signal.SIGTSTP, handler) - Available for process abort

- Python signal processing is performed only in the main thread

• even if the signal is received in another thread

• signals cannot be used as a means of inter thread communication

• only the main line is allowed to set a new signal processing program