1. What is your understanding of volatile?

volatile is a lightweight synchronization mechanism provided by the Java virtual machine. It has three characteristics:

- Ensures visibility

- Prohibits instruction reordering

- Does not guarantee atomicity

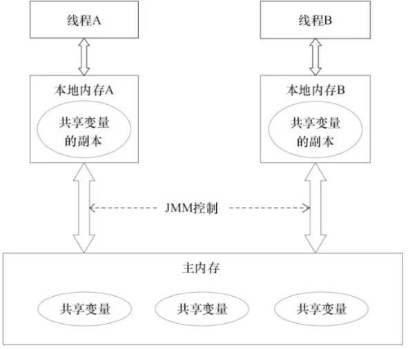

JMM (Java Memory Model)

- The JMM is an abstract concept and does not physically exist. It describes a set of rules or specifications that define how the variables in a program are accessed.

JMM rules on synchronization:

1. Before a thread unlocks, it must flush the values of shared variables back to main memory.

2. Before a thread locks, it must read the latest values from main memory into its own working memory.

3. Locking and unlocking must operate on the same lock.
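
The three rules above can be sketched with a plain, non-volatile field that is only ever touched while holding one lock. This is a minimal illustration, not code from the original notes; the class name SyncVisibilityDemo and its methods are hypothetical.

```java
class SyncVisibilityDemo {
    private final Object lock = new Object();
    private boolean ready = false; // deliberately not volatile

    void markReady() {
        synchronized (lock) {
            ready = true;
        } // rule 1: the write is flushed to main memory before the unlock completes
    }

    boolean isReady() {
        synchronized (lock) { // rule 2: locking forces a fresh read from main memory
            return ready;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        SyncVisibilityDemo demo = new SyncVisibilityDemo();
        Thread writer = new Thread(() -> {
            try {
                Thread.sleep(100);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            demo.markReady();
        });
        writer.start();

        // Rule 3: reader and writer use the same lock object, so this loop terminates.
        while (!demo.isReady()) {
            Thread.yield();
        }
        writer.join();
        System.out.println("ready = " + demo.isReady()); // ready = true
    }
}
```

If the reader synchronized on a different object than the writer, rule 3 would be violated and the update would no longer be guaranteed to become visible.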

- The entity that runs a program in the JVM is the thread. When a thread is created, the JVM creates a working memory for it, which is that thread's private data area. The Java Memory Model stipulates that all variables are stored in main memory, a shared memory area that all threads can access. However, a thread's operations on a variable (reading, assignment, and so on) must be performed in its working memory.

- A thread first copies the variable from main memory into its own working memory, operates on the copy, and then writes the variable back to main memory. It cannot operate directly on the variable in main memory. Working memory holds copies of the variables in main memory, and because working memory is private to each thread, threads cannot access each other's working memory. Communication (value passing) between threads must therefore go through main memory.

- Memory model diagram

Three characteristics of the JMM model:

- Visibility

- Atomicity

- Ordering

(1) Visibility. In the example below, if the field a is declared without the volatile keyword, the main thread may never see the update and can spin in an endless loop. With volatile, the main thread exits. When one thread modifies a volatile variable, the new value is written back to main memory, the copies held by other threads are invalidated, and those threads re-read the value from main memory. A change being promptly visible to other threads is what is called visibility.

    /**
     * @Author: cuzz
     * @Date: 2019/4/16 21:29
     * @Description: Visibility code example
     */
    public class VolatileDemo {

        public static void main(String[] args) {
            Data data = new Data();

            // Worker thread: waits three seconds, then changes a from 0 to 1.
            new Thread(() -> {
                System.out.println(Thread.currentThread().getName() + " coming...");
                try {
                    Thread.sleep(3000);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                data.addOne();
                System.out.println(Thread.currentThread().getName() + " updated...");
            }).start();

            // Main thread: spins until it observes the worker's update.
            while (data.a == 0) {
                // looping
            }
            System.out.println(Thread.currentThread().getName() + " job is done...");
        }
    }

    class Data {
        // int a = 0; // without volatile, the main thread may never leave the loop
        volatile int a = 0;

        void addOne() {
            this.a += 1;
        }
    }

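(2) Atomicity. volatile does not guarantee atomicity. The program below starts 20 threads that each call addOne() 1000 times, yet the printed result is usually less than 20000, because a += 1 is a read, an add, and a write rather than a single atomic step, so concurrent increments can overwrite each other.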

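    public class VolatileDemo {

        public static void main(String[] args) throws InterruptedException {
            // test01();
            test02();
        }

        // Test atomicity
        private static void test02() throws InterruptedException {
            Data data = new Data();
            Thread[] workers = new Thread[20];
            for (int i = 0; i < workers.length; i++) {
                workers[i] = new Thread(() -> {
                    for (int j = 0; j < 1000; j++) {
                        data.addOne();
                    }
                });
                workers[i].start();
            }

            // Wait for every worker to finish. Polling Thread.activeCount() > 2 is
            // unreliable, because the number of extra threads depends on how the
            // program is launched.
            for (Thread worker : workers) {
                worker.join();
            }
            System.out.println(data.a); // usually less than 20000
        }
    }

    class Data {
        volatile int a = 0;

        void addOne() {
            this.a += 1; // read, add, write: not atomic even though a is volatile
        }
    }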
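
One common way to repair the lost updates above is to replace the volatile int with java.util.concurrent.atomic.AtomicInteger, whose incrementAndGet() performs the read, add, and write as one atomic operation. The sketch below is not from the original notes; the class name AtomicCounterDemo and the helper run are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterDemo {
    private final AtomicInteger a = new AtomicInteger();

    void addOne() {
        a.incrementAndGet(); // atomic read-modify-write
    }

    int get() {
        return a.get();
    }

    // Starts `threads` workers that each call addOne() `perThread` times,
    // waits for all of them, and returns the final count.
    static int run(int threads, int perThread) {
        AtomicCounterDemo data = new AtomicCounterDemo();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    data.addOne();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return data.get();
    }

    public static void main(String[] args) {
        System.out.println(run(20, 1000)); // 20000
    }
}
```

Marking addOne() as synchronized would also work; the atomic class simply avoids taking a lock for a single counter.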

(3) Ordering

- To improve performance, compilers and processors often reorder instructions when a program runs. Reordering generally falls into three types:

1. Compiler-optimization reordering

2. Instruction-level-parallelism reordering

3. Memory-system reordering

- In a single-threaded environment, reordering must keep the final execution result consistent with the result of executing the code in program order.

- When reordering, the processor must respect the data dependencies between instructions.

- In a multi-threaded environment, threads execute alternately. Because of reordering, there is no guarantee that two threads see a consistent view of the variables they share, so the result cannot be predicted.

Consider the following class:

    public class ReSortSeqDemo {
        int a = 0;
        boolean flag = false;

        public void method01() {
            a = 1;       // after reordering this could become: flag = true;
            // ----Thread switching----
            flag = true; // after reordering this could become: a = 1;
        }

        public void method02() {
            if (flag) {
                a = a + 3;
                System.out.println("a = " + a);
            }
        }
    }

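Suppose two threads run method01 and method02 at the same time. If the two writes in method01 are reordered so that flag = true executes before a = 1, and thread 2 runs method02 in between, then method02 sees flag == true while a is still 0 and prints a = 3 instead of the expected a = 4.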
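
A minimal sketch of how volatile removes this hazard, assuming the only change is to declare flag as volatile: the write to a can then no longer be moved after the write to flag, so a reader that sees flag == true also sees a == 1. The class name VolatileFlagDemo and the helper run are hypothetical, and method02 returns the value instead of printing it so that the result can be checked.

```java
class VolatileFlagDemo {
    int a = 0;
    volatile boolean flag = false; // volatile write: a = 1 cannot be reordered after it

    void method01() {
        a = 1;
        flag = true;
    }

    // Returns the updated a once flag is visible, or -1 while flag is still false.
    int method02() {
        if (flag) {
            a = a + 3;
            return a;
        }
        return -1;
    }

    // Runs method01 on a second thread and polls method02 until it succeeds.
    static int run() {
        VolatileFlagDemo demo = new VolatileFlagDemo();
        Thread writer = new Thread(demo::method01);
        writer.start();
        int result;
        while ((result = demo.method02()) == -1) {
            Thread.yield();
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println("a = " + run()); // a = 4
    }
}
```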

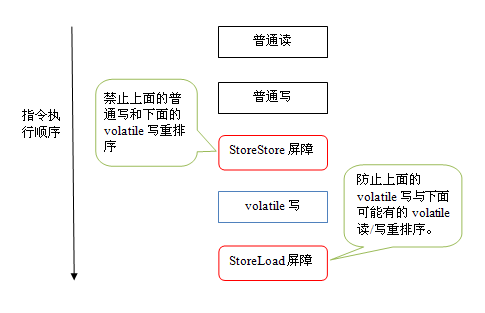

Prohibiting instruction reordering

volatile prohibits instruction reordering optimizations, which avoids out-of-order execution of a program in a multi-threaded environment.

First, a concept: a memory barrier, also known as a memory fence, is a CPU instruction. It has two functions:

- Ensure the execution order of specific operations

- Ensure the memory visibility of certain variables (this is how the memory visibility of volatile is implemented)

Because both compilers and processors can reorder instructions as an optimization, inserting a memory barrier between instructions tells the compiler and the CPU that no instruction may be reordered across that barrier. In other words, inserting a memory barrier prohibits reordering of the instructions before and after it. The other function of a memory barrier is to force the CPU's cached data to be flushed, so that any thread on any CPU reads the latest version of that data.

The following schematic shows the instruction sequence generated under the conservative strategy when memory barriers are inserted around a volatile write:

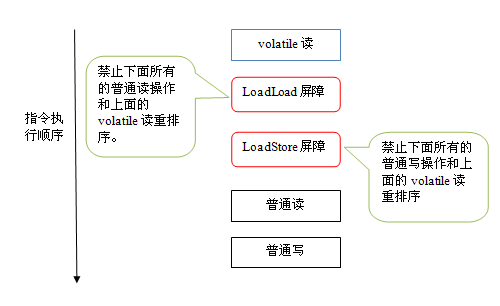

For a volatile read, the conservative strategy places a LoadLoad barrier and a LoadStore barrier after the read, so that later reads and writes cannot be reordered ahead of it.

Thread safety assurance

- The synchronization delay between working memory and main memory causes visibility problems.

This can be solved with the synchronized or volatile keyword, either of which makes a variable modified by one thread immediately visible to other threads.

- Instruction reordering causes visibility and ordering problems.

This can be solved with the volatile keyword, because another function of volatile is to prohibit instruction reordering optimizations.

Where have you used volatile? In the singleton pattern.

    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    // Not thread safe: several threads can pass the null check at the same time
    // and each construct its own instance.
    public class Singleton01 {
        private static Singleton01 instance = null;

        private Singleton01() {
            System.out.println(Thread.currentThread().getName() + " construction...");
        }

        public static Singleton01 getInstance() {
            if (instance == null) {
                instance = new Singleton01();
            }
            return instance;
        }

        public static void main(String[] args) {
            ExecutorService executorService = Executors.newFixedThreadPool(10);
            for (int i = 0; i < 10; i++) {
                executorService.execute(() -> Singleton01.getInstance());
            }
            executorService.shutdown();
        }
    }

Double-checked locking singleton:

    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    public class Singleton02 {
        private static volatile Singleton02 instance = null;

        private Singleton02() {
            System.out.println(Thread.currentThread().getName() + " construction...");
        }

        public static Singleton02 getInstance() {
            if (instance == null) {                  // first check, without the lock
                synchronized (Singleton02.class) {   // lock on this class, not Singleton01
                    if (instance == null) {          // second check, with the lock held
                        instance = new Singleton02();
                    }
                }
            }
            return instance;
        }

        public static void main(String[] args) {
            ExecutorService executorService = Executors.newFixedThreadPool(10);
            for (int i = 0; i < 10; i++) {
                executorService.execute(() -> Singleton02.getInstance());
            }
            executorService.shutdown();
        }
    }

Without volatile, this is not necessarily thread safe, because of instruction reordering. Adding volatile prohibits that reordering. The problem is that when a thread performs the first check and reads a non-null instance, the object that instance refers to may not have finished initializing. instance = new Singleton02() can be broken down into the following three steps.

    memory = allocate();   // 1. Allocate memory for the object
    instance(memory);      // 2. Initialize the object
    instance = memory;     // 3. Point instance at the allocated memory; from now on instance != null

There is no data dependency between steps 2 and 3, and in a single thread the execution result is the same whether or not they are reordered. This optimization is therefore allowed, and the steps can execute in the following order.

    memory = allocate();   // 1. Allocate memory for the object
    instance = memory;     // 3. Point instance at the allocated memory; instance != null, but the object is not initialized yet
    instance(memory);      // 2. Initialize the object
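
A quick way to exercise the double-checked locking pattern is to release several threads at once and count how many distinct instances they observe. This is only a sketch: the names DclCheck, LazySingleton, and distinctInstances are hypothetical, and a check like this can confirm that the object is constructed once but cannot demonstrate the reordering hazard, which is rare and platform dependent.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

class DclCheck {
    // Counts constructor calls so the check can confirm the object was built once.
    static final AtomicInteger constructions = new AtomicInteger();

    static final class LazySingleton {
        private static volatile LazySingleton instance = null;

        private LazySingleton() {
            constructions.incrementAndGet();
        }

        static LazySingleton getInstance() {
            if (instance == null) {
                synchronized (LazySingleton.class) {
                    if (instance == null) {
                        instance = new LazySingleton();
                    }
                }
            }
            return instance;
        }
    }

    // Releases `threads` workers at once and returns how many distinct instances they saw.
    static int distinctInstances(int threads) {
        Set<LazySingleton> seen = ConcurrentHashMap.newKeySet();
        CountDownLatch start = new CountDownLatch(1);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    start.await(); // line all workers up before the first call
                    seen.add(LazySingleton.getInstance());
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers[i].start();
        }
        start.countDown();
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println("distinct instances = " + distinctInstances(10)); // 1
        System.out.println("constructions = " + constructions.get());        // 1
    }
}
```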