What are Python's support modules for concurrent programming?

| Module name | effect |

|---|---|

| threading | Realize multithreading, and make use of the principle that the computer CPU and IO can execute at the same time, so that the CPU can access another task during the computer IO operation. |

| multprocessing | multprocessing |

| asyncio | Realize asynchronous IO, and realize the asynchronous execution of function granularity by using the principle of simultaneous execution of CPU and IO in a single thread. |

| Lock | Lock resources to prevent resource competition and access conflict. |

| Queue | Realize the data communication between different threads and processes, and realize the producer consumer mode |

| Thread pool / process pool | Simplify the task submission of threads and processes, wait for the end, obtain results, etc. |

| subprocess | Realize the process of starting external programs (such as. exe program) and input and output interaction. |

Why Python is slow

1. Dynamically typed language, executing while interpreting

2. There are no type restrictions on the definition of variables, and the data type needs to be checked at any time, resulting in performance degradation,

3. There is a lack of steps to translate the source code into machine code. The execution of machine code is very fast, but Python is very slow while translating

4. Existence of Gil:

CPU Intensive Computing & IO intensive computing

| type | explain | Common scenarios |

|---|---|---|

| CPU bound | CPU intensive computing, also known as computing intensive, means that I/O can be completed in a very short time. The CPU needs a lot of calculation and processing. It is characterized by a very high CPU occupancy rate. | Compression, decompression, regular expression search |

| IO intensive computing (I/O-bound) | IO intensive computing refers to the operation of the system. Most of the conditions are that the CPU is waiting for I/O (hard disk / memory) reading and writing operations, and the CPU occupancy is low. | File handler, network request, read / write database |

Multi process & multi thread & the use of multi coprocess

| name | advantage | shortcoming | scene |

|---|---|---|---|

| multprocessing | Multi core CPU can be used for parallel operation | It takes up the most resources and can start fewer than threads | CPU intensive computing |

| Multithreading | Compared with the process: it is lighter and occupies less resources | Compared with the process: it is lighter and occupies less resources | IO intensive computing, with a small number of tasks running at the same time |

| Coroutine (asyncio) | Coroutine (asyncio) | The supported libraries are limited (AIO HTTP supports, requests does not support), and the code implementation is complex | The supported libraries are limited (AIO HTTP supports, requests does not support), and the code implementation is complex |

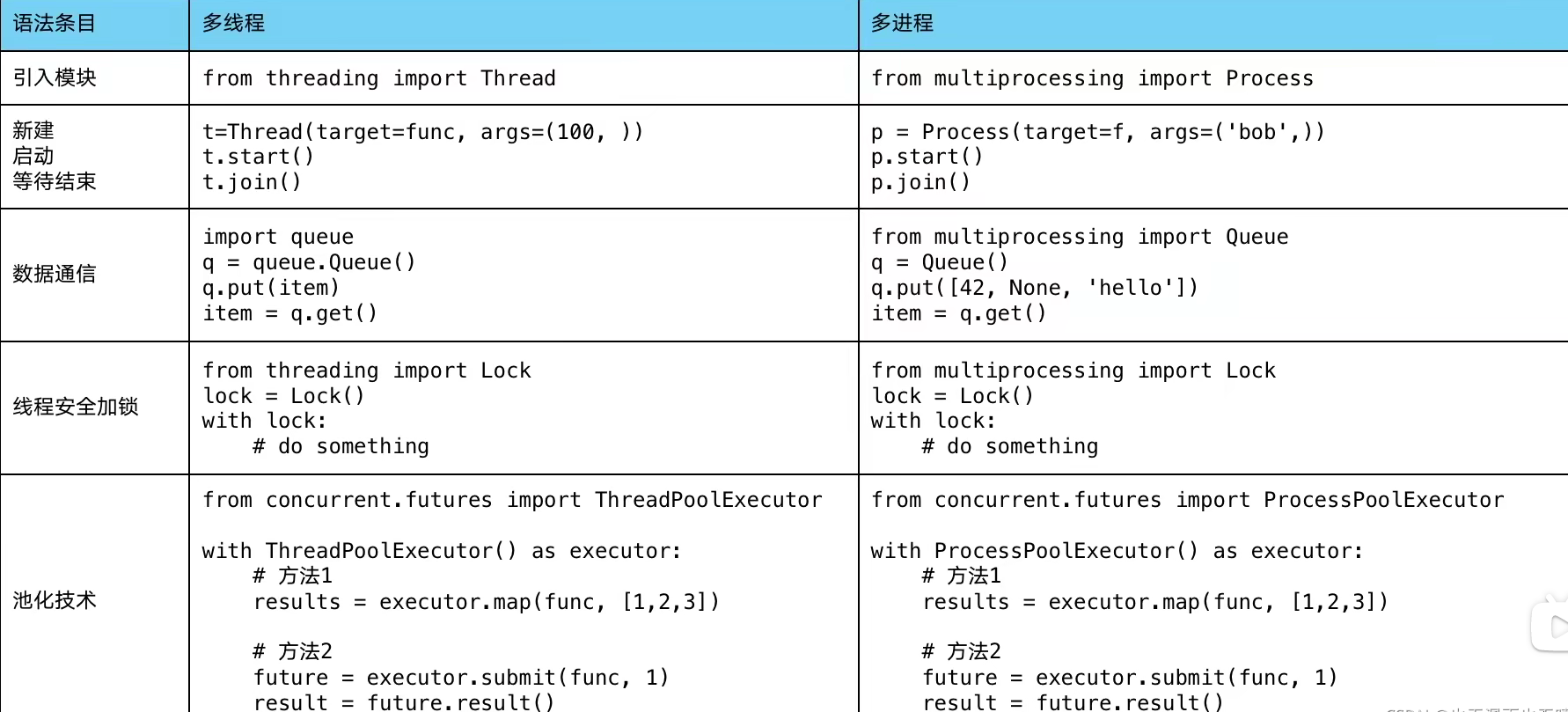

Multi process & multi thread implementation overview

1. Advantages of multi process

#Calculate whether one hundred larger numbers are prime numbers (CPU bound)

import math

import time

from concurrent.futures import ThreadPoolExecutor

from concurrent.futures import ProcessPoolExecutor

PRIMES = [112272535095293] * 100

def timer(f):

def inner():

start_time = time.time()

f()

print(time.time() - start_time)

return inner

def is_prime(n):

if n < 2:

return False

if n == 2:

return True

if n % 2 == 0:

return False

sqrt_n = int(math.floor(math.sqrt(n)))

for i in range(3, sqrt_n + 1, 2):

if n % i == 0:

return False

return True

@timer

def single_thread():

for n in PRIMES:

is_prime(n)

@timer

def multi_thread():

with ThreadPoolExecutor() as pool:

pool.map(is_prime, PRIMES)

@timer

def multi_process():

with ProcessPoolExecutor() as pool:

pool.map(is_prime, PRIMES)

if __name__ == '__main__':

single_thread()

multi_thread()

multi_process()

# 54.2112915 single thread

# 55.0219151 multithreading

# 10.2812801 multi process

#It can be seen that the speed of multithreading is lower and the speed of multiprocessing is the fastest in CPU intensive computing.

1. Common multithreading

#Speed comparison between single thread and multi thread

import time

import threading

# Task function

def run_task(task):

print(f'Thread:{threading.current_thread().name}', 'Task in progress:', task)

# Blocking for two seconds is used to simulate IO operations such as network requests

time.sleep(2)

# Running five tasks in a single thread

def single_thread():

start_time = time.time()

for task in range(1, 6):

run_task(task)

print('<single_thread> cost:', time.time() - start_time)

# Multithreading runs five tasks

def multi_thread():

start_time = time.time()

threads = []

# Five threads are opened here. Too many threads will have switching overhead, and the number of threads needs to be created reasonably.

for task in range(1, 6):

threads.append(

threading.Thread(target=run_task, args=(task,))

)

# Start thread

for thread in threads:

thread.start()

# Thread waiting

for thread in threads:

thread.join()

print('<multi_thread> cost', time.time() - start_time)

if __name__ == '__main__':

single_thread()

multi_thread()

-----------------

give the result as follows:

Thread: MainThread Task in progress: 1 # All run as the main thread

Thread: MainThread Task in progress: 2

Thread: MainThread Task in progress: 3

Thread: MainThread Task in progress: 4

Thread: MainThread Task in progress: 5

<single_thread> cost: 10.036925792694092

Thread: Thread-1 Task in progress: 1 # Threads created 1-5

Thread: Thread-2 Task in progress: 2

Thread: Thread-3 Task in progress: 3

Thread: Thread-4 Task in progress: 4

Thread: Thread-5 Task in progress: 5

<multi_thread> cost 2.003706693649292

# The speed is obviously improved. Of course, this example is only a simple demonstration, and the specific needs to be determined according to the scene.

2. Multithreading of producer consumer model

import time

import threading

import queue

# Task function

def run_task(queue_obj):

# Judge whether the task queue is empty and obtain the next task data

while not queue_obj.empty():

task = queue_obj.get()

print(f'Thread:{threading.current_thread().name}', 'Task in progress:', task)

# Blocking for two seconds is used to simulate IO operations such as network requests

time.sleep(2)

def single_thread(queue_obj):

start_time = time.time()

run_task(queue_obj)

print('<single_thread> cost:', time.time() - start_time)

# Multithreading, open up five threads for consumption of production data, namely queue_ Task data in obj

def multi_thread(queue_obj):

start_time = time.time()

threads = []

for task in range(1, 6):

threads.append(

threading.Thread(target=run_task, args=(queue_obj,))

)

for thread in threads:

thread.start()

for thread in threads:

thread.join()

print('<multi_thread> cost', time.time() - start_time)

if __name__ == '__main__':

# Open two producers respectively and fill in task data

queue_obj = queue.Queue()

queue_obj2 = queue.Queue()

for task in range(1, 6):

queue_obj.put(task)

single_thread(queue_obj)

for task in range(1, 6):

queue_obj2.put(task)

multi_thread(queue_obj2)

# The results are the same as above

Expose thread safety issues:

import threading

# lock = threading.Lock() # Use locks to resolve conflicts

# Account class, attribute balance

class Account:

def __init__(self, balance):

self.balance = balance

# Withdrawal, when the requested quantity > = balance, correct; Otherwise, the returned balance is insufficient`

def draw(account, amount):

# Lock conflict resolution

# with lock:

if account.balance >= amount:

time.sleep(0.1) # Simulate thread blocking

print(threading.current_thread().name, 'success')

account.balance -= amount

print(threading.current_thread().name, 'Balance:', account.balance)

else:

print(threading.current_thread().name, 'Sorry, your credit is running low')

if __name__ == '__main__':

account = Account(1000)

ta = threading.Thread(name='ta', target=draw, args=(account,800))

tb = threading.Thread(name='tb', target=draw, args=(account,800))

ta.start()

tb.start()

#give the result as follows

---------------

tb Successful withdrawal

tb Balance 200

ta Successful withdrawal

ta balance -600

# The problem is exposed. When the thread is switched and the account attribute has not changed, the data will be out of sync.

Principle of thread pool:

- The system needs to allocate resources for new threads and recycle resources for terminating threads. If the newly created threads can be saved and reused, the overhead of creating and terminating threads - thread pool can be reduced.

Advantages of thread pool:

1. Improve performance: reduce the overhead of system creation and termination, and reuse thread resources.

2. It is suitable for handling a large number of sudden requests or requiring a large number of threads to complete tasks, but the actual task processing is short.

3. Defense function: it can effectively avoid the problem of excessive system load caused by too many threads.

Usage 1:

import time

import threading

import concurrent.futures

def run_task(tasks):

time.sleep(2)

return f'{tasks}Execution complete'

# task list

tasks = [('task' + str(i)) for i in range(1, 6)]

start_time = time.time()

with concurrent.futures.ThreadPoolExecutor() as pool: # Create pool

result = pool.map(run_task, tasks)

# Transfer function and task list. The return value 'result' is a generator containing the return value of each task object

results = list(zip(tasks, result))

for i, j in results:

print(i, j)

print(time.time() - start_time)

# The results are as follows:

Task 1 task 1 execution completed

Task 2 task 2 execution completed

Task 3 task 3 execution completed

Task 4 task 4 execution completed

Task 5 task 5 execution completed

2.0206351280212402

Method 2:

import time

import threading

import concurrent.futures

def run_task(tasks):

time.sleep(2)

return f'{tasks}Execution complete'

# task list

tasks = [('task' + str(i)) for i in range(1, 6)]

start_time = time.time()

with concurrent.futures.ThreadPoolExecutor() as pool:

results = {}

for task in tasks:

# Transfer functions and individual tasks

# Return the 'future' object: < future at 0x246f486b1c0 state = pending >

# The object has a 'result' method that returns the return value of the current task

futures = pool.submit(run_task, task)

results[task] = futures

for i, j in results.items():

print(i, j.result()) # Note the call location

print(time.time() - start_time)

# The results are the same as above

Using thread pool to accelerate IO in flash

import json

import time

import flask

import cpncurrent

app = flask.Flask(__name__)

# Analog disk IO

def read_file():

time.sleep(0.1)

return "file result"

# Analog database IO

def connect_db():

time.sleep(0.2)

return "db result"

# Simulate calling apiIO

def create_api():

time.sleep(0.3)

return "api result"

@app.route('/')

def index():

file_io = read_file()

db_io = connect_db()

api_io = create_api()

return json.dumps({

"file_io": file_io,

"db_io": db_io,

"api_io": api_io,

})

if __name__ == '__main__':

app.run()

# Use time curl to return results:

0.623s

Using thread pool Transformation:

import json

import time

from concurrent.futures import ThreadPoolExecutor

import flask

app = flask.Flask(__name__)

pool = ThreadPoolExecutor() # Initialize thread pool object

def connect_db():

time.sleep(0.5)

return "db result"

def read_file():

time.sleep(0.3)

return "file result"

def create_api():

time.sleep(0.2)

return "api result"

@app.route('/')

def index():

# Submit task

file_io = pool.submit(read_file)

db_io = pool.submit(connect_db)

api_io = pool.submit(create_api)

return json.dumps({

"file_io": file_io.result(), # Get result object

"db_io": db_io.result(),

"api_io": api_io.result(),

})

if __name__ == '__main__':

# Multi process pools are defined here

app.run()

# Use time curl to return results:

0.318s

# Halve IO time

# A multi process pool is similar to a multi-threaded pool, but because it does not share the environment, it needs to be defined in the mian entry function.

asyncio implements asynchronous IO

import time

import asyncio

import aiohttp

urls = [

f"https://www.cnblogs.com/sitehome/p/{page}"

for page in range(1, 50 + 1)

]

# Define collaboration:

# The async keyword represents the steps that are called after the event cycle.

# await keyword means that when the IO arrives, it will not block, but carry out the next cycle and continue to call async code

async def async_spider(url):

async with aiohttp.ClientSession() as session:

async with session.get(url) as resp:

result = await resp.text()

print(f'{url},{len(result)}')

# Construct event loop object

loop = asyncio.get_event_loop()

# Create task list

tasks = [

loop.create_task(async_spider(url))

for url in urls

]

start_time = time.time()

# Cycle until the tasks in tasks are completed

loop.run_until_complete(asyncio.wait(tasks))

print(time.time() - start_time)

# 3.1056487560272217

Semaphores control the number of concurrent asynchronous IO S

import time

import asyncio

import aiohttp

urls = [

f"https://www.cnblogs.com/sitehome/p/{page}"

for page in range(1, 50 + 1)

]

# Define semaphore

semaphore = asyncio.Semaphore(10)

async def async_spider(url):

async with semaphore: # Semaphore control

async with aiohttp.ClientSession() as session:

async with session.get(url) as resp:

result = await resp.text()

await asyncio.sleep(3)

# Ten concurrencies are achieved every three seconds of blocking

print(f'{url},{len(result)}')

loop = asyncio.get_event_loop()

tasks = [

loop.create_task(async_spider(url))

for url in urls

]

start_time = time.time()

loop.run_until_complete(asyncio.wait(tasks))

print(time.time() - start_time)

# 3.1056487560272217