PS: If you want to repost this article, please credit the source. I own the copyright.

PS: This article reflects only my own understanding. If it conflicts with your principles or views, please bear with me rather than flaming me.

Environment

- MLU220 development board

- An Ubuntu 18.04 development host with one MLU270

- An aarch64-linux-gnu GCC 6.x cross-compilation environment

Preface

Before reading this article, please make sure you are familiar with the concepts covered in these earlier articles:

- Fishing Guide for Cambricon Acceleration Platform (MLU200 Series) (I): Basic Concepts and Introduction (https://blog.csdn.net/u011728480/article/details/121194076)

- Fishing Guide for Cambricon Acceleration Platform (MLU200 Series) (II): Model Porting, Environment Setup (https://blog.csdn.net/u011728480/article/details/121320982)

- Fishing Guide for Cambricon Acceleration Platform (MLU200 Series) (III): Model Porting, Segmentation Network Example (https://blog.csdn.net/u011728480/article/details/121456789)

Let us first recall the first article on basic concepts. The Cambricon accelerator card is the hardware platform and forms the lowest layer. The driver sits on top of the hardware, and the runtime sits on top of the driver. In this article, the offline model is loaded and run through the runtime API to obtain the model's results.

As the final article in this series, it starts from the offline model produced earlier and builds the offline-model inference code from scratch. Once properly deployed, the program runs normally on the MLU220 development board.

If any material quoted in this article infringes your rights, please contact me promptly and I will remove it.

Overview of offline model inference

From the previous articles, we know that inference mainly involves calling runtime APIs. Two sets of APIs are available: CNRT and EasyDK. EasyDK is a wrapper around CNRT that greatly simplifies the development of offline-model inference. Either way, the main workflow is the same: initialize the MLU device, load the model, preprocess the input, run inference, post-process the output, and handle the results.

In addition, Cambricon provides CNStream, an application framework built on EasyDK that offers a simple, easy-to-use pipeline + observer design. If you are interested, see its repository (https://github.com/Cambricon/CNStream).

What we actually want is to build a CNStream-like program by combining EasyDK and CNRT.
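To make the pipeline + observer idea concrete, here is a minimal, framework-free C++ sketch of the structure such a program might have. It is not CNStream's API: the names Frame, Module, NamedStage, Pipeline, and RunDemo are hypothetical. Each frame passes through the stages in order, and every registered observer is then handed the finished frame. In a real program the stages would wrap preprocessing, EasyDK/CNRT inference, and post-processing, and the observer would consume the results.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical unit of work flowing through the pipeline.
struct Frame {
  int id = 0;
  std::vector<std::string> trace;  // names of the stages that processed this frame
};

// Hypothetical stage interface (not the CNStream API).
class Module {
 public:
  virtual ~Module() = default;
  virtual void Process(Frame &frame) = 0;
};

// Placeholder stage that only records its name. A real stage would do
// preprocessing, inference (EasyDK/CNRT calls) or post-processing here.
class NamedStage : public Module {
 public:
  explicit NamedStage(std::string name) : name_(std::move(name)) {}
  void Process(Frame &frame) override { frame.trace.push_back(name_); }

 private:
  std::string name_;
};

class Pipeline {
 public:
  using Observer = std::function<void(const Frame &)>;

  void AddModule(std::unique_ptr<Module> module) { modules_.push_back(std::move(module)); }
  void AddObserver(Observer observer) { observers_.push_back(std::move(observer)); }

  // Runs every stage in order, then hands the finished frame to each observer.
  void Feed(Frame frame) {
    for (auto &module : modules_) module->Process(frame);
    for (auto &observer : observers_) observer(frame);
  }

 private:
  std::vector<std::unique_ptr<Module>> modules_;
  std::vector<Observer> observers_;
};

// Builds a three-stage pipeline, feeds `count` frames, and returns what the observer received.
std::vector<Frame> RunDemo(int count) {
  std::vector<Frame> received;  // declared before the pipeline so it outlives the observer
  Pipeline pipeline;
  pipeline.AddModule(std::make_unique<NamedStage>("preprocess"));
  pipeline.AddModule(std::make_unique<NamedStage>("inference"));
  pipeline.AddModule(std::make_unique<NamedStage>("postprocess"));
  pipeline.AddObserver([&received](const Frame &f) { received.push_back(f); });
  for (int i = 0; i < count; ++i) {
    Frame frame;
    frame.id = i;
    pipeline.Feed(frame);
  }
  return received;
}
```

For example, RunDemo(2) returns two frames, each having passed through preprocess, inference, and postprocess in that order. Keeping observers separate from stages lets result handling (display, saving, sending over the network) change without touching the inference path.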

Introduction and compilation of EasyDK

Its official repository is https://github.com/Cambricon/easydk

Besides CNToolkit (Neuware), EasyDK depends on glog, gflags, and OpenCV, which must be installed beforehand. Installing the x86 version of CNToolkit was covered in the article on setting up the model-porting environment.

Since we are developing an offline-model inference program for the edge, we mainly use the EasyInfer module of EasyDK. Compilation follows the official instructions:

- cmake ..

- make

If you are familiar with CMake, you will recognize this as the standard workflow. Compiling EasyDK on x86 is straightforward; when cross-compiling, however, it is best to build only the core library and disable the samples and tests.

Running the offline model on MLU270

Recall that in "Fishing Guide for Cambricon Acceleration Platform (MLU200 Series) (III): Model Porting, Segmentation Network Example", calling torch_mlu.core.mlu_model.set_core_version('MLU220') selects the platform the generated offline model runs on: either the edge-side MLU220 or the server-side MLU270. That's right: the MLU270 can be used both for model porting and for offline model deployment. As mentioned at the beginning of this series, these platforms differ only in their deployment scenarios.

Why debug the offline model on MLU270? Convenience. MLU220 targets generally require cross-compilation, and building the dependency libraries for them is especially tedious. If your MLU220 board is convenient to debug on, you can of course develop and debug directly on the MLU220.

Since my program mixes EasyDK and CNRT calls, I walk through and annotate the official CNRT inference example, and point out where EasyDK can do the same work in a single step. A quick answer to the obvious question: why use EasyDK at all instead of developing directly on CNRT? It is a choice driven by the project's schedule. For the final form of this program, I would still prefer to build directly on CNRT because it is efficient, but the price is that you must be familiar with its details.

Below is the official inference example with my annotations. (The order of some steps may differ from the original. The official code ignores some return values, so be sure to check them in your own code. I have also fixed a few omissions in the official code.)
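Since the listing does not check return values, here is a minimal sketch of one way to do it: a macro that prints the failing call and its location, then returns -1 from the enclosing function, matching the int return type of offline_test. It assumes that a status of 0 means success (in CNRT this is CNRT_RET_SUCCESS, which I take to be 0; verify against your cnrt.h). To keep it compilable without cnrt.h, FakeLoadModel is a hypothetical stand-in for a call such as cnrtLoadModel, and CHECK_RET and LoadStep are illustrative names.

```cpp
#include <cassert>
#include <cstdio>

// Evaluates `call` once; on a non-zero status, reports the failing expression and
// its location on stderr and returns -1 from the enclosing function.
// Assumption: 0 means success (as CNRT_RET_SUCCESS is expected to be in cnrt.h).
#define CHECK_RET(call)                                                 \
  do {                                                                  \
    int check_ret_status_ = static_cast<int>(call);                     \
    if (check_ret_status_ != 0) {                                       \
      std::fprintf(stderr, "%s failed with status %d (%s:%d)\n", #call, \
                   check_ret_status_, __FILE__, __LINE__);              \
      return -1;                                                        \
    }                                                                   \
  } while (0)

// Hypothetical stand-in for a CNRT call such as cnrtLoadModel:
// returns 0 on success and a non-zero error code otherwise.
int FakeLoadModel(const char *path) {
  return (path != nullptr && path[0] != '\0') ? 0 : 1;
}

// Hypothetical step function written in the same style as offline_test.
int LoadStep(const char *path) {
  CHECK_RET(FakeLoadModel(path));
  return 0;
}
```

In offline_test, a call would then read, for example, CHECK_RET(cnrtLoadModel(&model, model_path));. Note that an early return skips the cleanup in step 15, so in longer programs this style pairs well with objects that release their resources in a destructor.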

```cpp
/* Copyright (C) [2019] by Cambricon, Inc. */
/* offline_test */
/*
 * A test which shows how to load and run an offline model.
 * This test consists of one operation --mlp.
 *
 * This example is used for MLU270 and MLU220.
 */
#include "cnrt.h"

#include <cinttypes>
#include <cstdint>
#include <cstdio>
#include <cstdlib>
#include <cstring>

#include <opencv2/opencv.hpp>  // needed for cv::Mat / cv::imread used in step 10

int offline_test(const char *model_path) {
  // This example is used for MLU270 and MLU220. You need to choose the corresponding offline model.
  // When generating an offline model, you need both CNML and CNRT.
  // When running an offline model, you need CNRT only.

  // Read the input image first, so that a missing file is reported
  // before any MLU resources are allocated.
  cv::Mat _in_img = cv::imread("test.jpg");
  if (_in_img.empty()) {
    fprintf(stderr, "failed to read test.jpg\n");
    return -1;
  }

  // Step 1: call cnrtInit() to initialize the runtime environment.
  cnrtInit(0);

  // Step 2: get the MLU device and set it as the current device.
  // This step is what prepares for multi-device inference.
  cnrtDev_t dev;
  cnrtGetDeviceHandle(&dev, 0);
  cnrtSetCurrentDevice(dev);
  // With EasyDK, steps 1 and 2 can be completed, with full error checking,
  // when the EasyInfer object is created.

  // Step 3: load the model.
  cnrtModel_t model;
  cnrtLoadModel(&model, model_path);

  // Step 4 (optional): query model properties such as memory usage and parallelism.
  int64_t totalMem;
  cnrtGetModelMemUsed(model, &totalMem);
  printf("total memory used: %" PRId64 " Bytes\n", totalMem);
  int model_parallelism;
  cnrtQueryModelParallelism(model, &model_parallelism);
  printf("model parallelism: %d.\n", model_parallelism);

  // Step 5: extract the function, i.e. build the inference structure in memory from the
  // offline model. "subnet0" is a kernel function name. It is not fixed, but subnet0 is the
  // usual name, and it is defined in the accompanying .cambricon_twins file.
  // As in CUDA programming, the generated inference structure is treated as a kernel
  // function, and this is its name.
  cnrtFunction_t function;
  cnrtCreateFunction(&function);
  cnrtExtractFunction(&function, model, "subnet0");

  // Step 6: get the number of inputs/outputs and the size in bytes of each.
  // Many networks have multiple inputs and possibly multiple outputs, so everything
  // below is written for the multi-input, multi-output case.
  int inputNum, outputNum;
  int64_t *inputSizeS, *outputSizeS;
  cnrtGetInputDataSize(&inputSizeS, &inputNum, function);
  cnrtGetOutputDataSize(&outputSizeS, &outputNum, function);

  // Step 7: allocate the arrays of CPU input/output pointers (one entry per input/output).
  void **inputCpuPtrS = (void **)malloc(inputNum * sizeof(void *));
  void **outputCpuPtrS = (void **)malloc(outputNum * sizeof(void *));

  // Step 8: allocate the arrays of MLU input/output pointers (one entry per input/output).
  // Only the handles (pointers) of the data nodes exist at this point; the data memory
  // itself has not been allocated yet.
  void **inputMluPtrS = (void **)malloc(inputNum * sizeof(void *));
  void **outputMluPtrS = (void **)malloc(outputNum * sizeof(void *));

  // Step 9: allocate real memory behind the handles from steps 7 and 8.
  // inputCpuPtrS[i] and inputMluPtrS[i] correspond one-to-one, but live in different memory
  // spaces with different allocators; the same holds for the outputs.
  // malloc is the standard C heap allocator; cnrtMalloc allocates MLU memory
  // (by analogy with CUDA, think of it as allocating device memory).
  for (int i = 0; i < inputNum; i++) {
    inputCpuPtrS[i] = malloc(inputSizeS[i]);
    cnrtMalloc(&(inputMluPtrS[i]), inputSizeS[i]);
    // The earlier version of this listing also copied the still-uninitialized host buffer
    // to the MLU here; that copy is dropped because step 10 performs the real transfer.
  }
  for (int i = 0; i < outputNum; i++) {
    outputCpuPtrS[i] = malloc(outputSizeS[i]);
    cnrtMalloc(&(outputMluPtrS[i]), outputSizeS[i]);
  }

  // Step 10: fill inputCpuPtrS with the preprocessed image data, then copy it to MLU memory.
  // Important: compare the data type produced by your preprocessing with the data type the
  // model expects, and convert if they differ. Model inputs are usually fp16 or fp32, while
  // OpenCV images are uint8. CNRT provides cnrtCastDataType to help with this conversion.
  _in_img.convertTo(_in_img, CV_32FC3);  // uint8 BGR -> fp32 BGR
  for (int i = 0; i < inputNum; i++) {
    // I added this memcpy. It assumes test.jpg already has the model's input resolution and
    // that the model takes fp32 input in this layout, so the byte counts match; otherwise,
    // resize and convert first. Every input gets the same image, which suits a single-input model.
    ::memcpy(inputCpuPtrS[i], _in_img.data, inputSizeS[i]);
    cnrtMemcpy(inputMluPtrS[i], inputCpuPtrS[i], inputSizeS[i], CNRT_MEM_TRANS_DIR_HOST2DEV);
  }

  // Step 11: prepare the parameters for cnrtInvokeRuntimeContext:
  // all MLU input pointers first, followed by all MLU output pointers.
  void **param = (void **)malloc(sizeof(void *) * (inputNum + outputNum));
  for (int i = 0; i < inputNum; ++i) {
    param[i] = inputMluPtrS[i];
  }
  for (int i = 0; i < outputNum; ++i) {
    param[inputNum + i] = outputMluPtrS[i];
  }

  // Step 12: set up the runtime context, create a queue, and bind the device.
  cnrtRuntimeContext_t ctx;
  cnrtCreateRuntimeContext(&ctx, function, NULL);
  cnrtQueue_t queue;
  cnrtRuntimeContextCreateQueue(ctx, &queue);
  cnrtSetRuntimeContextDeviceId(ctx, 0);
  cnrtInitRuntimeContext(ctx, NULL);

  // Step 13: run inference and wait for it to finish.
  cnrtInvokeRuntimeContext(ctx, param, queue, NULL);
  cnrtSyncQueue(queue);

  // Step 14: copy the results from the MLU back to the CPU for post-processing.
  for (int i = 0; i < outputNum; i++) {
    cnrtMemcpy(outputCpuPtrS[i], outputMluPtrS[i], outputSizeS[i], CNRT_MEM_TRANS_DIR_DEV2HOST);
  }

  // Step 15: clean up.
  for (int i = 0; i < inputNum; i++) {
    free(inputCpuPtrS[i]);
    cnrtFree(inputMluPtrS[i]);
  }
  for (int i = 0; i < outputNum; i++) {
    free(outputCpuPtrS[i]);
    cnrtFree(outputMluPtrS[i]);
  }
  free(inputCpuPtrS);
  free(outputCpuPtrS);
  free(inputMluPtrS);   // added: these pointer arrays were never freed before
  free(outputMluPtrS);
  free(param);
  cnrtDestroyQueue(queue);
  cnrtDestroyRuntimeContext(ctx);
  cnrtDestroyFunction(function);
  cnrtUnloadModel(model);
  cnrtDestroy();
  return 0;
}

int main() {
  printf("mlp offline test\n");
  return offline_test("test.cambricon");
}
```
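The data-type warning in step 10 is easy to underestimate, so here is a sketch of what an fp32 to fp16 conversion actually involves. In practice you would use cnrtCastDataType (or have preprocessing produce the right type directly); this plain C++ version does not call CNRT and only shows the bit-level work. It assumes IEEE-754 binary32 input and binary16 output with round-to-nearest-even; FloatToHalf and U8ToHalf are hypothetical helper names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Converts one IEEE-754 float32 value to float16 bits, rounding to nearest even.
uint16_t FloatToHalf(float value) {
  uint32_t f;
  std::memcpy(&f, &value, sizeof f);  // reinterpret the float's bits safely
  const uint32_t sign = (f >> 16) & 0x8000u;
  const uint32_t exp = (f >> 23) & 0xFFu;
  uint32_t mant = f & 0x7FFFFFu;

  if (exp == 0xFFu) {  // Inf stays Inf; NaN becomes a quiet NaN
    return static_cast<uint16_t>(sign | 0x7C00u | (mant ? 0x200u : 0u));
  }
  const int32_t e = static_cast<int32_t>(exp) - 127 + 15;  // re-bias the exponent
  if (e >= 0x1F) {  // too large for fp16: overflow to Inf
    return static_cast<uint16_t>(sign | 0x7C00u);
  }
  if (e <= 0) {  // result is an fp16 subnormal (or zero)
    if (e < -10) return static_cast<uint16_t>(sign);  // below half the smallest subnormal
    mant |= 0x800000u;  // make the implicit leading 1 explicit
    const uint32_t shift = static_cast<uint32_t>(14 - e);
    uint32_t half_mant = mant >> shift;
    const uint32_t rem = mant & ((1u << shift) - 1u);
    const uint32_t halfway = 1u << (shift - 1u);
    if (rem > halfway || (rem == halfway && (half_mant & 1u))) ++half_mant;
    return static_cast<uint16_t>(sign | half_mant);
  }
  uint32_t half = (static_cast<uint32_t>(e) << 10) | (mant >> 13);
  const uint32_t rem = mant & 0x1FFFu;  // the 13 mantissa bits that are dropped
  // Round to nearest even; a carry out of the mantissa correctly bumps the exponent.
  if (rem > 0x1000u || (rem == 0x1000u && (half & 1u))) ++half;
  return static_cast<uint16_t>(sign | half);
}

// Converts `count` uint8 samples (e.g. the bytes of a cv::Mat from imread) to fp16,
// multiplying each by `scale` first (1.0f keeps the 0-255 range).
std::vector<uint16_t> U8ToHalf(const uint8_t *src, size_t count, float scale) {
  std::vector<uint16_t> dst(count);
  for (size_t i = 0; i < count; ++i) dst[i] = FloatToHalf(static_cast<float>(src[i]) * scale);
  return dst;
}
```

For example, FloatToHalf(1.0f) yields 0x3C00 and FloatToHalf(255.0f) yields 0x5BF8. fp16 cannot represent values above 65504, so unnormalized data can overflow to infinity. If fp32 bytes are simply memcpy'd into an fp16 input (or vice versa), no error is raised; the model just reads garbage, which is why the type check in step 10 matters.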

Here is how these steps map onto EasyDK:

- Steps 3, 4, and 5 correspond to the ModelLoader module under EasyInfer: once a ModelLoader is initialized and passed to an EasyInfer instance, these three steps are done. They are fixed boilerplate, not the focus; the focus is on data input, inference, and data output.

- Steps 6, 7, 8, and 9 allocate CPU and MLU memory for the model. EasyDK provides an interface that performs this allocation directly.

- Step 10 is the key step: it covers image preprocessing, image data type conversion, and copying the image data into MLU memory.

- Steps 11 and 12 prepare the parameters for inference.

- Step 13 runs inference.

- Step 14 copies the inference results from MLU memory back to CPU memory for post-processing.

- Step 15 cleans up the environment.
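Steps 6 to 9, 11, and 15 are also where a small C++ wrapper pays off. EasyDK hides the allocation behind its own memory interface; the sketch below is a hypothetical, self-written equivalent (not EasyDK's API, and IoBuffers is an illustrative name). It allocates one host and one device buffer per input/output from the size arrays that cnrtGetInputDataSize/cnrtGetOutputDataSize return, builds the parameter array in the inputs-then-outputs order used in step 11, and frees everything in its destructor, so step 15 cannot be skipped by an early return. Device allocation goes through hooks that default to malloc/free so the sketch runs without an MLU; in real code they would wrap cnrtMalloc and cnrtFree.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical owner of all per-input/per-output buffers of one model function.
// Covers steps 6-9 (allocation), step 11 (parameter array) and step 15 (release).
// For brevity, allocation failures (null returns) are not checked.
class IoBuffers {
 public:
  // Device allocation hooks. Real code would wrap cnrtMalloc / cnrtFree here;
  // the defaults use malloc / free so the sketch runs without an MLU.
  using DevAlloc = std::function<void *(size_t)>;
  using DevFree = std::function<void(void *)>;

  IoBuffers(const std::vector<int64_t> &in_sizes, const std::vector<int64_t> &out_sizes,
            DevAlloc dev_alloc = [](size_t n) { return std::malloc(n); },
            DevFree dev_free = [](void *p) { std::free(p); })
      : dev_free_(std::move(dev_free)) {
    for (int64_t n : in_sizes) Allocate(n, dev_alloc, host_in_, dev_in_);
    for (int64_t n : out_sizes) Allocate(n, dev_alloc, host_out_, dev_out_);
    // Same layout as step 11: all device inputs first, then all device outputs.
    params_.insert(params_.end(), dev_in_.begin(), dev_in_.end());
    params_.insert(params_.end(), dev_out_.begin(), dev_out_.end());
  }

  // Step 15 happens automatically when the object goes out of scope.
  ~IoBuffers() {
    for (void *p : host_in_) std::free(p);
    for (void *p : host_out_) std::free(p);
    for (void *p : dev_in_) dev_free_(p);
    for (void *p : dev_out_) dev_free_(p);
  }

  IoBuffers(const IoBuffers &) = delete;  // buffers have exactly one owner
  IoBuffers &operator=(const IoBuffers &) = delete;

  void **params() { return params_.data(); }  // the void** passed to cnrtInvokeRuntimeContext
  size_t param_count() const { return params_.size(); }
  void *host_input(size_t i) const { return host_in_[i]; }
  void *host_output(size_t i) const { return host_out_[i]; }
  void *dev_input(size_t i) const { return dev_in_[i]; }
  void *dev_output(size_t i) const { return dev_out_[i]; }

 private:
  static void Allocate(int64_t bytes, const DevAlloc &dev_alloc,
                       std::vector<void *> &host, std::vector<void *> &dev) {
    host.push_back(std::malloc(static_cast<size_t>(bytes)));
    dev.push_back(dev_alloc(static_cast<size_t>(bytes)));
  }

  DevFree dev_free_;
  std::vector<void *> host_in_, host_out_, dev_in_, dev_out_, params_;
};
```

In offline_test, steps 7 to 9 and the matching frees in step 15 would collapse into constructing one IoBuffers from the inputSizeS/outputSizeS arrays. Because cnrtMalloc takes a void** out-parameter, the allocation hook would call it and return the resulting pointer.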

Deploying the offline model on MLU220

In the previous section, we developed the offline-model inference program and tested it on MLU270. This section covers how to deploy the debugged program to MLU220.

When deploying to MLU220, the first task is cross-compiling the program for aarch64. Its dependencies fall into three groups, all of which must be built for aarch64: CNToolkit, EasyDK, and other third-party libraries such as OpenCV. The result is an aarch64 offline inference program, which is used together with the MLU220 version of the offline model we converted earlier.

After copying the program to the MLU220 board, it may still fail to run because the driver is not loaded. In that case, locate the driver and firmware, bring up the MLU220, and then run the program.

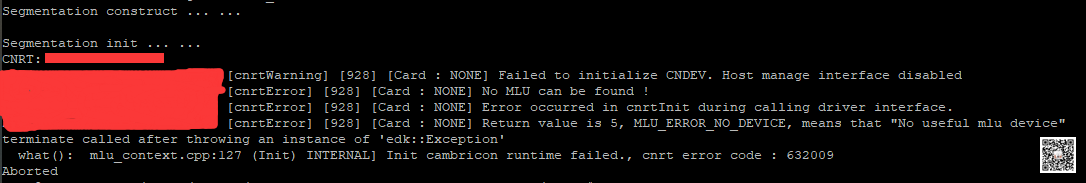

the following is an example of error reporting without mlu equipment:

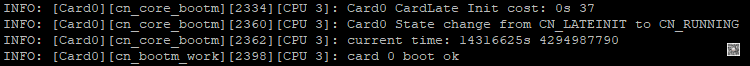

the following is the last log of loading the driver:

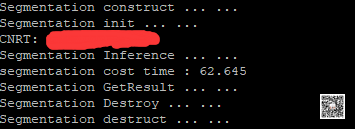

the following is the output of the running program:

Postscript

Comparing the RK3399Pro with the Cambricon MLU220: for some long-established models, the MLU220 can deliver 300%+ better performance than the RK3399Pro thanks to its built-in optimizations. For some newer or less mainstream models, however, depending on those built-in optimizations and on the network structure, the improvement may be only 30%+. Even that is welcome: after the hardware upgrade, many tasks can run in near real time.

That concludes the basic introduction of this series. Time to celebrate ~~~~~

References

- Fishing Guide for Cambricon Acceleration Platform (MLU200 Series) (I): Basic Concepts and Introduction (https://blog.csdn.net/u011728480/article/details/121194076)

- Fishing Guide for Cambricon Acceleration Platform (MLU200 Series) (II): Model Porting, Environment Setup (https://blog.csdn.net/u011728480/article/details/121320982)

- Fishing Guide for Cambricon Acceleration Platform (MLU200 Series) (III): Model Porting, Segmentation Network Example (https://blog.csdn.net/u011728480/article/details/121456789)

- https://www.cambricon.com/

- https://www.cambricon.com/docs/cnrt/user_guide_html/example/offline_mode.html

- Other relevant confidential information.

PS: Please respect original work; if you don't like it, please don't flame it.


PS: Please leave a comment if you have any questions. I will reply as soon as I see it.