Project Background

In back-end microservice architectures, it is common to expose a single gateway to the outside world. All services then share one entry and exit point, and traffic converges there. On the front end, a unified gateway entry is much less common, and each application is usually served on its own. The industry does use micro-front-ends to schedule applications and let them communicate, and nginx forwarding is one of those approaches. Our projects must be deployed on an intranet, and the number of public network ports is limited. We wanted to converge the entry points of our front-end applications and expose more applications through those few ports. To do that, we borrowed the idea of a back-end gateway and implemented a proxy-forwarding scheme for a front-end gateway. This article summarizes our thinking and the pitfalls we hit while putting the front-end gateway into practice. We hope it offers some solutions to readers working on similar scenarios.

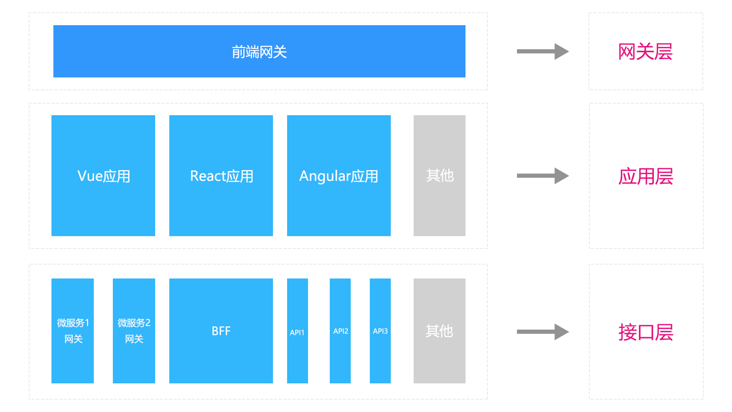

Architecture Design

| Layer | Purpose | Remarks |
|---|---|---|
| Gateway Layer | Carries front-end traffic as the unified entry point | Can be hosted with either front-end or back-end routing and is mainly used for traffic slicing; a single application can also sit here and combine routing with scheduling |
| Application Layer | Hosts the front-end applications, regardless of framework. Applications can communicate over HTTP or through the gateway, provided the gateway layer can receive and dispatch messages | Not tied to any front-end framework or version. Each application is deployed separately, so applications communicate over HTTPS or within a containerized deployment such as k8s |
| Interface Layer | Fetches data from the back end. Because back ends are deployed in different forms, this may involve different microservice gateways, standalone third-party interfaces, or BFF interfaces such as Node.js | Interfaces that are shared uniformly can be hosted on the gateway layer for proxy forwarding |

Scheme Selection

Our application systems currently carry fairly complex business logic, so it is hard to unify them as single-spa-style micro-front-ends. We therefore chose to build the micro-front-end gateway mainly on nginx. We will also need to integrate applications from several third parties in the future, and embedding them in iframes would raise network connectivity problems of its own. Because public network ports are limited in our business environment, we needed a set of virtual ports with a 1:n mapping, that is, one public port serving many applications. The final choice was therefore to use nginx as the main gateway that forwards traffic and slices it across applications.

| Hierarchy | programme | Remarks |

|---|---|---|

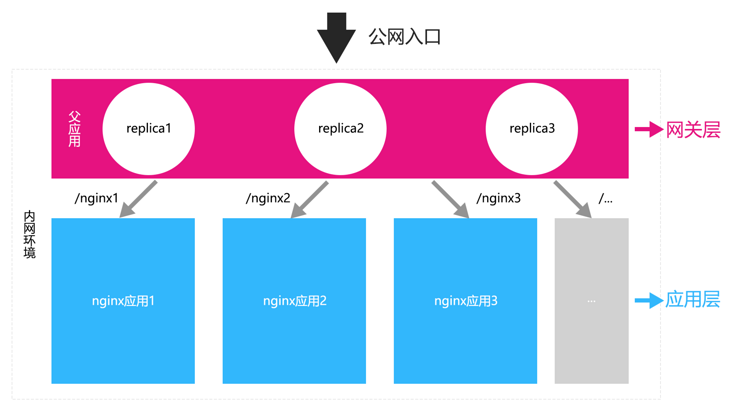

| Gateway Layer | Using a nginx as the public network traffic entry, slicing different sub-applications using paths | The parent nginx application, as the front-end application entry, needs to be a load balancing process, which uses the load balancing of k8s to configure three copies. If a pod hangs, it can be pulled up using the mechanism of k8 |

| application layer | Many different nginx applications, where the path is sliced, so resource orientation needs to be addressed, as detailed in the next section of the trampling case | This is handled using the docker mount directory |

| Interface Layer | After several different nginx applications have done the reverse proxy to the interface, the interface can not be forwarded here because it is sent forward by the browser. Here, we need to do a processing on the front-end code, as detailed in the case of treadmill | The ci, cd build scaffolding will be configured later, as well as some common front-end scaffolding configurations such as vue-cli, cra, umi access plug-in packages |
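Path slicing in the gateway layer amounts to a prefix lookup. Below is a minimal JavaScript sketch of that lookup. It is a hypothetical model, not nginx's implementation. It considers only plain prefix locations and picks the longest matching prefix, which is how nginx chooses among prefix locations before it checks regular-expression locations. The route table and host names (`portal`, `app-rj`, `app-rj-admin`, `app-crm`) are made up for illustration.

```javascript
// Hypothetical route table for the parent gateway: path prefix -> upstream.
// All names and addresses are illustrative only.
const routes = [
  { prefix: '/', upstream: 'http://portal:80' },
  { prefix: '/rj/', upstream: 'http://app-rj:80' },
  { prefix: '/rj/admin/', upstream: 'http://app-rj-admin:80' },
  { prefix: '/crm/', upstream: 'http://app-crm:80' },
];

// Return the route whose prefix is the longest match for the request path,
// mirroring how nginx selects among plain prefix locations.
function selectRoute(path, table) {
  let best = null;
  for (const route of table) {
    if (path.startsWith(route.prefix)) {
      if (best === null || route.prefix.length > best.prefix.length) {
        best = route;
      }
    }
  }
  return best; // null when nothing matches
}

console.log(selectRoute('/rj/js/app.js', routes).upstream); // http://app-rj:80
console.log(selectRoute('/rj/admin/index.html', routes).upstream); // http://app-rj-admin:80
console.log(selectRoute('/crm', routes).upstream); // http://portal:80
```

In this model, `/crm` without the trailing slash falls through to the catch-all route. nginx itself special-cases that request when the location is `/crm/` and handled by proxy_pass, and answers with a 301 redirect to `/crm/`.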

Pitfalls

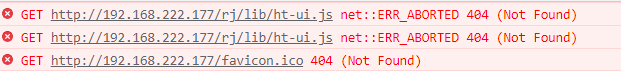

Static Resource 404 Error

[Case Description] After path-based proxying was configured, the HTML page itself loaded normally, but JS, CSS, and other static resources returned 404 Not Found errors.

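[Case Study] Most of our applications are single-page applications, which mainly manipulate the DOM through JavaScript. MV* frameworks usually also take over front-end routing and intercept some data operations. Resources therefore have to be looked up by relative path in the corresponding template engine or build output. A root-absolute reference such as /js/app.js is resolved by the browser against the gateway's root rather than the sub-application's /rj/ path. The request then reaches the parent nginx outside the sub-application's route and ends in a 404.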
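The resolution rule behind this 404 can be checked with the WHATWG `URL` class, which browsers and Node.js both provide. In the sketch below, the host `gateway` and the asset path `js/app.js` are placeholders.

```javascript
// Resolve an asset reference against the page URL, as a browser does.
function resolveAsset(pageUrl, assetRef) {
  return new URL(assetRef, pageUrl).pathname;
}

// Page served by the parent gateway under the sub-application's path /rj/.
// Root-absolute reference: the /rj/ prefix is lost, so the request goes to
// /js/app.js on the gateway, outside the sub-application's route.
console.log(resolveAsset('http://gateway/rj/', '/js/app.js')); // /js/app.js

// Relative references stay under /rj/.
console.log(resolveAsset('http://gateway/rj/', './js/app.js')); // /rj/js/app.js
console.log(resolveAsset('http://gateway/rj/index.html', 'js/app.js')); // /rj/js/app.js

// Without the trailing slash on the page URL, the relative reference escapes /rj/.
console.log(resolveAsset('http://gateway/rj', './js/app.js')); // /js/app.js
```

The last case shows why, when assets are referenced relatively, the sub-application should be reached at `/rj/` with the trailing slash.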

[Solution] Our builds are deployed mainly with Docker and k8s. We therefore put each sub-application's resources under a directory whose name matches the forwarding path of the parent nginx application. This means each sub-application must register its route information with the parent application, and later changes can then be located through this service registration.

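Route information registered with the parent application:

```json
{
  "rj": {
    "name": "xxx application",
    "path": "/rj/"
  }
}
```

Parent application nginx configuration:

```nginx
server {
    location /rj/ {
        proxy_pass http://ip:port/rj/;
    }
}
```

Sub-application Dockerfile:

```dockerfile
FROM xxx/nginx:1.20.1
COPY ./dist /usr/share/nginx/html/rj/
```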
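Because every sub-application registers its path, the parent's location blocks can be generated from the registry instead of being written by hand. The following is a minimal sketch. It assumes each registry entry also carries an `upstream` address, which is a hypothetical field since the registry above only has `name` and `path`. It also assumes registered paths start and end with a slash. The host `rj-app` is made up.

```javascript
// Hypothetical registry mirroring the JSON above; "upstream" is an assumed extra
// field holding the sub-application's address (for example a k8s Service name).
const registry = {
  rj: { name: 'xxx application', path: '/rj/', upstream: 'http://rj-app:80' },
};

// Render one location block per registered sub-application. proxy_pass repeats
// the registered path, so /rj/... on the gateway maps to /rj/... on the sub-app.
function renderServer(reg) {
  const blocks = Object.entries(reg).map(([key, app]) => {
    if (!app.path.startsWith('/') || !app.path.endsWith('/')) {
      throw new Error(`route "${key}": path must start and end with "/"`);
    }
    return [
      `    # ${app.name}`,
      `    location ${app.path} {`,
      `        proxy_pass ${app.upstream}${app.path};`,
      '    }',
    ].join('\n');
  });
  return `server {\n${blocks.join('\n\n')}\n}\n`;
}

console.log(renderServer(registry));
```

For the single entry above, this prints a server block equivalent to the hand-written parent configuration, with `http://rj-app:80` in place of `ip:port`.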

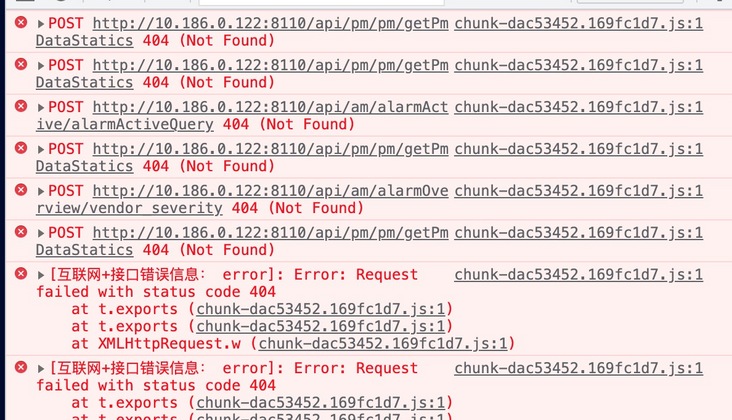

Interface Proxy 404 Error

[Case Description] Once the static resources were fixed, the parent application issued an interface request, and that request also returned a 404 error.

[Case Study] Our projects separate the front end from the back end, and the back-end interfaces are usually reverse-proxied by the sub-application's own nginx. The browser, however, sends these requests through the parent application's nginx, which has no proxy rule for the interface addresses. No resource matches, so the request fails with 404.

[Solution] There are two options. The first is to have the parent application proxy the back end through its own interface addresses. This breaks down in several cases: when two sub-applications use the same proxy name, when interfaces come from more than one microservice, or when some sub-applications use static proxies while others use a BFF. The complexity of the parent application's configuration can then grow out of control. The second option is to have each sub-application change its front-end request paths so that they are isolated under an agreed path, for example by adding the path agreed at service registration. We use both. For our self-developed projects, we configure a unified gateway and static-resource forwarding proxy across the applications. We also agree on path names with the sub-applications, for example forwarding to the back-end gateway under /api/. Applications that are not self-developed must adapt their interface paths themselves. We plan to provide a plug-in library that modifies the API path for common scaffolding such as vue-cli, CRA, and umi. Applications built by third-party teams on custom scaffolding need manual changes. Such scaffolding usually has a single place where front-end request paths are unified, though. Older applications, such as those built with jQuery, must each be changed by hand.
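Where a request ends up follows nginx's documented proxy_pass rule. If proxy_pass includes a URI part, the portion of the request URI that matched the location prefix is replaced with that URI. If it has no URI part, the request URI is passed on unchanged. The sketch below applies that rule to the agreed /rj/api/ path across two hops. The sub-application's `location /rj/api/`, the back-end address `http://backend:8080/`, and the path `/user/list` are assumptions for illustration. The model only covers prefix locations without variables.

```javascript
// Apply nginx's proxy_pass URI rule for a prefix location (no variables, no regex).
// proxyPassUri is the URI part of proxy_pass, or '' when proxy_pass has none.
function upstreamUri(locationPrefix, proxyPassUri, requestUri) {
  if (!requestUri.startsWith(locationPrefix)) {
    throw new Error(`${requestUri} does not match location ${locationPrefix}`);
  }
  if (proxyPassUri === '') {
    return requestUri; // no URI part: forwarded as is
  }
  return proxyPassUri + requestUri.slice(locationPrefix.length);
}

// Hop 1: parent gateway, location /rj/ { proxy_pass http://ip:port/rj/; }
const hop1 = upstreamUri('/rj/', '/rj/', '/rj/api/user/list');
// Hop 2 (hypothetical): sub-application, location /rj/api/ { proxy_pass http://backend:8080/; }
const hop2 = upstreamUri('/rj/api/', '/', hop1);

console.log(hop1); // /rj/api/user/list
console.log(hop2); // /user/list
```

A request sent as `/api/user/list` without the agreed prefix never matches `/rj/` at the first hop, which is the 404 described above.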

Here I'll demonstrate a scenario built with vue-cli3:

```javascript
// @/config
export const config = {
  data_url: '/rj/api'
};
```

```javascript
// A specific interface module.
// The request wrapper usually carries the axios interceptors and similar logic.
import request from '@/xxx';
// baseUrl has a single entry point, so only the value in config needs to change.
import { config } from '@/config';

export const xxx = (params) =>
  request({
    url: config.data_url + '/xxx'
  });
```

Analysis of Source Code

nginx is a lightweight, high-performance web server whose architecture and design are well worth studying. They offer useful ideas for designing web frameworks in Node.js or elsewhere.

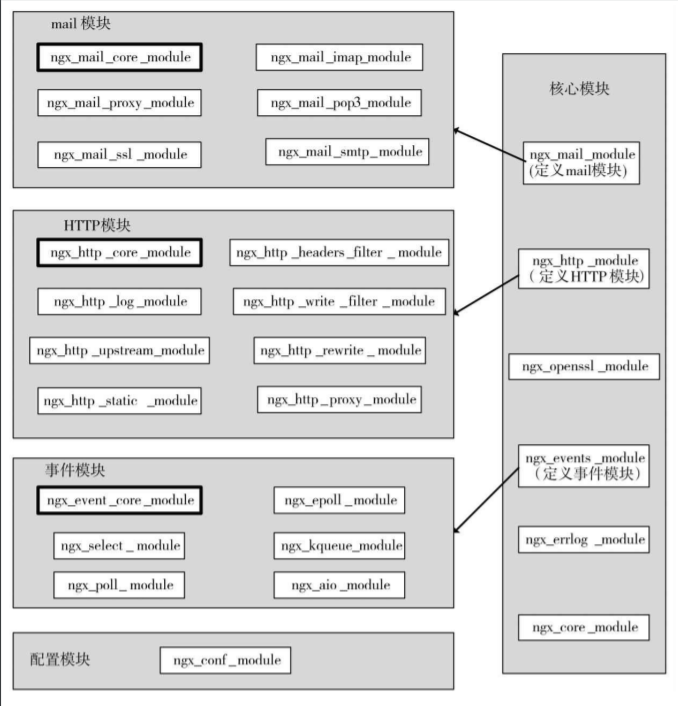

nginx is written in C and is built from modules, including the core module, the event module, the configuration module, and the HTTP module. The core module schedules and loads the other modules, which is how the modules interact with one another.

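Our gateway forwards each application with proxy_pass inside a location block, so let's look at how the proxy module handles proxy_pass. When the configuration is parsed, ngx_http_proxy_pass performs several steps. It installs ngx_http_proxy_handler as the location's content handler. It enables the automatic redirect (for example from /rj to /rj/) when the location name ends with a slash. It parses the target URL to pick the scheme and default port (80 for http://, 443 for https://) and registers the upstream. Finally, it rejects a URI part in regex locations, in named locations, and inside if or limit_except blocks.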
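As a reading aid for the C code that follows, here is a much-simplified JavaScript restatement of the checks ngx_http_proxy_pass performs. It is a hypothetical model, not nginx code. The real function also compiles URLs containing variables, registers the upstream, and stores the URL parts in `plcf->vars`. The model treats everything from the first `/` after the host as the URI part and assumes nginx was built with SSL support. The function name `parseProxyPass` and the host `rj-app` are illustrative.

```javascript
// Simplified, hypothetical model of ngx_http_proxy_pass.
// location: { name, regex, named, noname } describes the enclosing location.
function parseProxyPass(url, location) {
  // Set before the URL is examined, as in the C code.
  const autoRedirect = location.name.endsWith('/');

  if (url.includes('$')) {
    // nginx counts '$' characters; if any, the URL is compiled as a script.
    return { dynamic: true, autoRedirect };
  }

  const lower = url.toLowerCase(); // the scheme comparison is case-insensitive
  let schemeLength;
  let defaultPort;
  if (lower.startsWith('http://')) {
    schemeLength = 7;
    defaultPort = 80;
  } else if (lower.startsWith('https://')) {
    schemeLength = 8;
    defaultPort = 443; // assumes SSL support is compiled in
  } else {
    throw new Error('invalid URL prefix');
  }

  const rest = url.slice(schemeLength);
  const slash = rest.indexOf('/');
  const hostPort = slash === -1 ? rest : rest.slice(0, slash);
  const uri = slash === -1 ? '' : rest.slice(slash);

  if (uri !== '' && (location.regex || location.named || location.noname)) {
    throw new Error('"proxy_pass" cannot have URI part in this location');
  }
  return { dynamic: false, autoRedirect, hostPort, defaultPort, uri };
}

console.log(parseProxyPass('http://rj-app:80/rj/', { name: '/rj/' }).uri); // /rj/
```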

ngx_http_proxy_module

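```c
static char *ngx_http_proxy_pass(ngx_conf_t *cf, ngx_command_t *cmd, void *conf);

static ngx_command_t  ngx_http_proxy_commands[] = {

    /* proxy_pass is allowed in location, "if" in location, and limit_except
     * blocks, and takes exactly one argument */
    { ngx_string("proxy_pass"),
      NGX_HTTP_LOC_CONF|NGX_HTTP_LIF_CONF|NGX_HTTP_LMT_CONF|NGX_CONF_TAKE1,
      ngx_http_proxy_pass,          /* handler called when the directive is parsed */
      NGX_HTTP_LOC_CONF_OFFSET,
      0,
      NULL },

    /* ... the other proxy_* directives are omitted ... */

      ngx_null_command              /* terminates the table */
};
```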
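Each module exposes a command table like this one. The flags in an entry tell the configuration parser where the directive may appear and how many arguments it takes. The parser (ngx_conf_handler in src/core/ngx_conf_file.c) searches all modules' tables for the directive name and skips entries whose context flags do not match the current block. It then checks the argument count and calls the entry's set handler, which here is ngx_http_proxy_pass. The sketch below models that lookup in JavaScript. The bit values are invented for illustration and are not nginx's real constants, module-type filtering is omitted, and `handleDirective` is a hypothetical name.

```javascript
// Illustrative flag bits (NOT nginx's real constant values).
const LOC_CONF = 1 << 0; // allowed in location {}
const LIF_CONF = 1 << 1; // allowed in "if" inside a location
const LMT_CONF = 1 << 2; // allowed in limit_except {}
const SRV_CONF = 1 << 3; // allowed in server {}
const TAKE1 = 1 << 8; // exactly one argument

// Hypothetical module list; in C each command table ends with ngx_null_command.
const modules = [
  {
    name: 'ngx_http_proxy_module',
    commands: [
      {
        name: 'proxy_pass',
        type: LOC_CONF | LIF_CONF | LMT_CONF | TAKE1,
        set: (args) => `proxy_pass -> ${args[0]}`,
      },
    ],
  },
];

// Find the directive, check its context and argument count, then run its handler.
function handleDirective(name, args, context) {
  let found = false;
  for (const mod of modules) {
    for (const cmd of mod.commands) {
      if (cmd.name !== name) continue;
      found = true;
      if (!(cmd.type & context)) continue; // not allowed in this block
      if ((cmd.type & TAKE1) && args.length !== 1) {
        throw new Error(`invalid number of arguments in "${name}" directive`);
      }
      return cmd.set(args);
    }
  }
  throw new Error(found
    ? `"${name}" directive is not allowed here`
    : `unknown directive "${name}"`);
}

console.log(handleDirective('proxy_pass', ['http://rj-app:80/rj/'], LOC_CONF));
```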

```c
static char *
ngx_http_proxy_pass(ngx_conf_t *cf, ngx_command_t *cmd, void *conf)
{
    ngx_http_proxy_loc_conf_t *plcf = conf;

    size_t                      add;
    u_short                     port;
    ngx_str_t                  *value, *url;
    ngx_url_t                   u;
    ngx_uint_t                  n;
    ngx_http_core_loc_conf_t   *clcf;
    ngx_http_script_compile_t   sc;

    if (plcf->upstream.upstream || plcf->proxy_lengths) {
        return "is duplicate";
    }

    /* requests matching this location are handled by the proxy module */
    clcf = ngx_http_conf_get_module_loc_conf(cf, ngx_http_core_module);

    clcf->handler = ngx_http_proxy_handler;

    /* "location /rj/" also answers "/rj" with a 301 redirect to "/rj/" */
    if (clcf->name.len && clcf->name.data[clcf->name.len - 1] == '/') {
        clcf->auto_redirect = 1;
    }

    value = cf->args->elts;

    url = &value[1];

    /* a URL containing variables is compiled and evaluated per request */
    n = ngx_http_script_variables_count(url);

    if (n) {

        ngx_memzero(&sc, sizeof(ngx_http_script_compile_t));

        sc.cf = cf;
        sc.source = url;
        sc.lengths = &plcf->proxy_lengths;
        sc.values = &plcf->proxy_values;
        sc.variables = n;
        sc.complete_lengths = 1;
        sc.complete_values = 1;

        if (ngx_http_script_compile(&sc) != NGX_OK) {
            return NGX_CONF_ERROR;
        }

#if (NGX_HTTP_SSL)
        plcf->ssl = 1;
#endif

        return NGX_CONF_OK;
    }

    /* static URL: the scheme decides the default port */
    if (ngx_strncasecmp(url->data, (u_char *) "http://", 7) == 0) {
        add = 7;
        port = 80;

    } else if (ngx_strncasecmp(url->data, (u_char *) "https://", 8) == 0) {

#if (NGX_HTTP_SSL)
        plcf->ssl = 1;

        add = 8;
        port = 443;
#else
        ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                           "https protocol requires SSL support");
        return NGX_CONF_ERROR;
#endif

    } else {
        ngx_conf_log_error(NGX_LOG_EMERG, cf, 0, "invalid URL prefix");
        return NGX_CONF_ERROR;
    }

    /* parse host[:port][/uri] and register the upstream */
    ngx_memzero(&u, sizeof(ngx_url_t));

    u.url.len = url->len - add;
    u.url.data = url->data + add;
    u.default_port = port;
    u.uri_part = 1;
    u.no_resolve = 1;

    plcf->upstream.upstream = ngx_http_upstream_add(cf, &u, 0);
    if (plcf->upstream.upstream == NULL) {
        return NGX_CONF_ERROR;
    }

    plcf->vars.schema.len = add;
    plcf->vars.schema.data = url->data;
    plcf->vars.key_start = plcf->vars.schema;

    ngx_http_proxy_set_vars(&u, &plcf->vars);

    /* remember the location prefix that a URI part will replace */
    plcf->location = clcf->name;

    if (clcf->named
#if (NGX_PCRE)
        || clcf->regex
#endif
        || clcf->noname)
    {
        if (plcf->vars.uri.len) {
            ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "\"proxy_pass\" cannot have URI part in "
                               "location given by regular expression, "
                               "or inside named location, "
                               "or inside \"if\" statement, "
                               "or inside \"limit_except\" block");
            return NGX_CONF_ERROR;
        }

        plcf->location.len = 0;
    }

    plcf->url = *url;

    return NGX_CONF_OK;
}
```

ngx_http

The next two functions come from the HTTP core (src/http/ngx_http.c). ngx_http_add_addresses attaches a server block's listen directive to its port. If the address:port is already known, the server is added to that entry and the listen options are merged; otherwise a new address entry is created. ngx_http_add_addrs later builds the runtime address table for a port. For addresses that host named servers, it attaches the server_name lookup structures (virtual names).

```c
static ngx_int_t
ngx_http_add_addresses(ngx_conf_t *cf, ngx_http_core_srv_conf_t *cscf,
    ngx_http_conf_port_t *port, ngx_http_listen_opt_t *lsopt)
{
    ngx_uint_t             i, default_server, proxy_protocol;
    ngx_http_conf_addr_t  *addr;
#if (NGX_HTTP_SSL)
    ngx_uint_t             ssl;
#endif
#if (NGX_HTTP_V2)
    ngx_uint_t             http2;
#endif

    /*
     * we cannot compare whole sockaddr struct's as kernel
     * may fill some fields in inherited sockaddr struct's
     */

    addr = port->addrs.elts;

    for (i = 0; i < port->addrs.nelts; i++) {

        if (ngx_cmp_sockaddr(lsopt->sockaddr, lsopt->socklen,
                             addr[i].opt.sockaddr,
                             addr[i].opt.socklen, 0)
            != NGX_OK)
        {
            continue;
        }

        /* the address is already in the address list */

        if (ngx_http_add_server(cf, cscf, &addr[i]) != NGX_OK) {
            return NGX_ERROR;
        }

        /* preserve default_server bit during listen options overwriting */
        default_server = addr[i].opt.default_server;

        proxy_protocol = lsopt->proxy_protocol || addr[i].opt.proxy_protocol;

#if (NGX_HTTP_SSL)
        ssl = lsopt->ssl || addr[i].opt.ssl;
#endif
#if (NGX_HTTP_V2)
        http2 = lsopt->http2 || addr[i].opt.http2;
#endif

        if (lsopt->set) {

            if (addr[i].opt.set) {
                ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                                   "duplicate listen options for %V",
                                   &addr[i].opt.addr_text);
                return NGX_ERROR;
            }

            addr[i].opt = *lsopt;
        }

        /* check the duplicate "default" server for this address:port */

        if (lsopt->default_server) {

            if (default_server) {
                ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                                   "a duplicate default server for %V",
                                   &addr[i].opt.addr_text);
                return NGX_ERROR;
            }

            default_server = 1;
            addr[i].default_server = cscf;
        }

        addr[i].opt.default_server = default_server;
        addr[i].opt.proxy_protocol = proxy_protocol;
#if (NGX_HTTP_SSL)
        addr[i].opt.ssl = ssl;
#endif
#if (NGX_HTTP_V2)
        addr[i].opt.http2 = http2;
#endif

        return NGX_OK;
    }

    /* add the address to the addresses list that bound to this port */

    return ngx_http_add_address(cf, cscf, port, lsopt);
}
```
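The merging rule in ngx_http_add_addresses can be restated as a small JavaScript model. It is a hypothetical simplification. Listen addresses are keyed by an "address:port" string instead of being compared as sockaddr structures, and listen options other than default_server (ssl, http2, proxy_protocol) are left out. As in ngx_http_add_address, the first server on an address is the default until another one is explicitly marked default_server. A second explicit default is rejected. This is what lets several server blocks share one public port, while exactly one of them answers requests whose Host matches no server_name. The names `addListen` and `table` are illustrative.

```javascript
// Hypothetical, simplified model of the listen-merging rule in ngx_http_add_addresses.
// listenTable maps "address:port" to the server blocks that listen there.
function addListen(listenTable, serverName, listen) {
  const key = `${listen.addr}:${listen.port}`;
  const entry = listenTable.get(key);

  if (entry === undefined) {
    // New address:port (the ngx_http_add_address path): the first server
    // becomes the default one even without an explicit default_server.
    listenTable.set(key, {
      servers: [serverName],
      defaultServer: serverName,
      explicitDefault: Boolean(listen.defaultServer),
    });
    return;
  }

  // Known address:port (the ngx_http_add_server path): attach this server block.
  entry.servers.push(serverName);

  if (listen.defaultServer) {
    // Only one explicit default_server is allowed per address:port.
    if (entry.explicitDefault) {
      throw new Error(`a duplicate default server for ${key}`);
    }
    entry.explicitDefault = true;
    entry.defaultServer = serverName;
  }
}

// Usage: two server blocks share port 80; "rj" is marked default_server.
const table = new Map();
addListen(table, 'portal', { addr: '0.0.0.0', port: 80 });
addListen(table, 'rj', { addr: '0.0.0.0', port: 80, defaultServer: true });
console.log(table.get('0.0.0.0:80').defaultServer); // rj
console.log(table.get('0.0.0.0:80').servers.length); // 2
```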

```c
static ngx_int_t
ngx_http_add_addrs(ngx_conf_t *cf, ngx_http_port_t *hport,
    ngx_http_conf_addr_t *addr)
{
    ngx_uint_t                 i;
    ngx_http_in_addr_t        *addrs;
    struct sockaddr_in        *sin;
    ngx_http_virtual_names_t  *vn;

    hport->addrs = ngx_pcalloc(cf->pool,
                               hport->naddrs * sizeof(ngx_http_in_addr_t));
    if (hport->addrs == NULL) {
        return NGX_ERROR;
    }

    addrs = hport->addrs;

    for (i = 0; i < hport->naddrs; i++) {

        sin = (struct sockaddr_in *) addr[i].opt.sockaddr;
        addrs[i].addr = sin->sin_addr.s_addr;
        addrs[i].conf.default_server = addr[i].default_server;
#if (NGX_HTTP_SSL)
        addrs[i].conf.ssl = addr[i].opt.ssl;
#endif
#if (NGX_HTTP_V2)
        addrs[i].conf.http2 = addr[i].opt.http2;
#endif
        addrs[i].conf.proxy_protocol = addr[i].opt.proxy_protocol;

        if (addr[i].hash.buckets == NULL
            && (addr[i].wc_head == NULL
                || addr[i].wc_head->hash.buckets == NULL)
            && (addr[i].wc_tail == NULL
                || addr[i].wc_tail->hash.buckets == NULL)
#if (NGX_PCRE)
            && addr[i].nregex == 0
#endif
            )
        {
            continue;
        }

        vn = ngx_palloc(cf->pool, sizeof(ngx_http_virtual_names_t));
        if (vn == NULL) {
            return NGX_ERROR;
        }

        addrs[i].conf.virtual_names = vn;

        vn->names.hash = addr[i].hash;
        vn->names.wc_head = addr[i].wc_head;
        vn->names.wc_tail = addr[i].wc_tail;
#if (NGX_PCRE)
        vn->nregex = addr[i].nregex;
        vn->regex = addr[i].regex;
#endif
    }

    return NGX_OK;
}
```

Summary

A front-end gateway does not have to be a separate layer. A single-spa-style scheme can also serve as the gateway, using front-end routing to control the sub-applications, whether they are built as single-page applications or as separate sub-applications. The advantage is that sub-applications can communicate through the parent application or a message bus, share public resources, and keep their private resources isolated. For this project, given how things currently run, a separate gateway layer fits better. nginx also lets us onboard applications and converge the front-end entry points with relatively little configuration. We will later provide scaffolding for the CI/CD build process, so that application developers can join the build and deployment pipeline more easily and the whole process becomes engineered. Any operation that has to be repeated many times should be solved by engineering rather than by simply adding manpower. After all, machines are better than people at producing steady output in repetitive batches. Thanks for reading!