This time we are crawling free IP proxies

Ahem, so what's the slick trick here? We crawl the IP addresses the site hands out, then use those very IPs to crawl the site's own resources.

I won't post the website link here; let's leave them a little dignity.

Next, the usual routine: the five steps of crawling, namely Scout, Observe, Enter, Grab, and Count.

The Five Steps of Crawling

Scout

Scouting, as the name implies, means locating the place we are going to hit. For example, if we were crawling Baidu, the spot would be its address, www.baidu.com. The same goes here, but as mentioned above, this trick is a bit shady, so the victim's address won't be published here.

Observe

Observing means studying the structure of the web page: working out where the things we want are and how to get them into our hands, then sketching a map of the victim's place.

Through observation, we found that he hides what we want in pockets named `tr` (the rows of an HTML table). We jot that down in a little notebook for later.

Enter

We've scouted the spot and know where the target hides what we want, so now we move in closer.

Ahem, remember to pack your wrench, screwdriver, and hammer before approaching the target:

`fake_useragent` (its `UserAgent` class) is our makeup kit, so the victim won't recognize us.

`requests` is like the key to the victim's front door.

`random` picks things at random, so our disguise looks more like the people the victim deals with every day.

`lxml` is the most important tool in this job. Everything before it exists for this step: pulling out what we want.

```python
from fake_useragent import UserAgent  # Supplies random real-browser User-Agent strings
import requests  # Sends HTTP requests
import random  # Random selection
from lxml import etree  # XPath parsing
```

With our tools in hand, let's get the key into his door.

So we don't get caught, we also need to wear an invisibility cloak: the IP proxy.

```python
proxies_list = [
    '106.55.15.244:8889',
    '119.183.250.1:9000',
    '220.173.37.128:7890',
    '223.96.90.216:8085',
    '47.100.34.198:8001',
    '103.103.3.6:8080',
    '27.192.200.7:9000',
    '113.237.3.178:9999',
    '45.228.188.241:999',
    '211.24.95.49:47615',
    '191.101.39.193:80',
    '103.205.15.97:8080',
    '185.179.30.130:8080',
    '190.108.88.97:999',
    '182.87.136.228:9999',
    '167.172.180.46:33555',
    '58.255.7.90:9999',
    '190.85.244.70:999',
    '175.146.211.158:9999',
    '36.56.102.35:9999',
    '131.153.151.250:8003',
    '195.9.61.22:45225',
    '43.239.152.254:8080',
]  # ip list

random_proxies = random.choice(proxies_list)  # Randomly pick an IP from the list

proxies = {'http': random_proxies}  # Build the proxies dict that requests expects
```

The `proxies_list` above is the wardrobe where we keep our invisibility cloaks. As you can see, I've stocked plenty of them. These cloaks expire, so if one gets discovered, we switch to another and can keep going into his house to take stuff.

A bare `ip:port` string can't be passed to requests as-is, so we tailor the cloak first: `proxies` wraps the chosen IP in a dict that maps the URL scheme (`'http'`) to the proxy address, which is the format requests expects.
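A minimal sketch of the wardrobe idea, assuming the pool entries are plain `ip:port` strings like the ones above. `make_proxies` and `pick_proxy` are hypothetical helper names, not part of any library. Because requests chooses the proxy by the target URL's scheme, this sketch fills in both the `'http'` and `'https'` keys.

```python
import random

def make_proxies(addr):
    """Wrap an 'ip:port' string into the dict format that requests expects."""
    proxy_url = 'http://' + addr  # Assumption: the free proxies are plain HTTP proxies
    # requests picks the entry matching the target URL's scheme, so cover both
    return {'http': proxy_url, 'https': proxy_url}

def pick_proxy(pool, banned=()):
    """Pick a random proxy from the pool, skipping ones already found to be dead."""
    candidates = [addr for addr in pool if addr not in banned]
    if not candidates:
        raise RuntimeError('every proxy in the pool has been banned')
    return random.choice(candidates)

pool = ['106.55.15.244:8889', '119.183.250.1:9000', '220.173.37.128:7890']
banned = {'106.55.15.244:8889'}  # e.g. this cloak was just discovered (timed out)
addr = pick_proxy(pool, banned)
proxies = make_proxies(addr)
print(addr, proxies)
```

When a request through one cloak fails, add its address to `banned` and call `pick_proxy` again to change into another.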

```python
ua = UserAgent()  # Create a UserAgent instance
user_agent = ua.random  # Pick a random User-Agent string
headers = {'User-Agent': user_agent}  # Build the headers dict
```

Next up: top-tier plastic surgery, imported from afar.

`ua = UserAgent()` is our catalog of faces: out of a vast sea of people, we choose which star's face to show up at the door with.

`user_agent = ua.random` seems a bit careless. Other people spend a fortune on their looks, and we just pick one at random. Not very professional, ahem.

`headers = {'User-Agent': user_agent}` is where the operation happens. With the face chosen, we pick up the scalpel and operate, and `headers` holds our new face. When we go to the victim's place to pick something up, we wear this face so he can't tell we're the bad guys.
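To see the face the victim actually sees, here is a sketch that builds the headers and prepares (but does not send) a request with requests. The hard-coded `UA_POOL` is a hypothetical stand-in for `fake_useragent`, whose `ua.random` returns a random real-browser string; `http://example.com/` is a placeholder URL.

```python
import random
import requests

# Hypothetical stand-in for fake_useragent's ua.random
UA_POOL = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/96.0.4664.45 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 '
    '(KHTML, like Gecko) Version/15.1 Safari/605.1.15',
]

user_agent = random.choice(UA_POOL)
headers = {'User-Agent': user_agent}

# Prepare the request without sending it, so we can inspect the outgoing headers
prepared = requests.Request('GET', 'http://example.com/', headers=headers).prepare()
print(prepared.headers['User-Agent'])
```

Without a custom header, a plain `requests.get` identifies itself as `python-requests/<version>`, which is exactly the face we want to hide.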

Ahem.

Everything is ready, so let's open the door with our screwdriver.

```python
res = requests.get(url, headers=headers, proxies=proxies, timeout=10).text  # Send the request
```

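Above is the tool we use to open the door. `url` is the address, `headers` is the new face from our surgery, and `proxies` is our invisibility cloak.

`requests` is the main tool, and the scheme at the front of the URL tells you what kind of door you're dealing with: a security door (https) or a wooden door (http).

A quick aside: the security door simply adds an encryption layer called SSL (today, TLS), which is where the extra "s" comes from. requests handles that layer for you, so opening it does not require switching `get` to `post`. The method is a separate choice: use `get` to fetch a page, and `post` when the site expects you to submit form data. One catch with proxies: requests picks the proxy entry by the URL's scheme, so a dict with only an `'http'` key is ignored for `https://` URLs.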
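A small sketch showing that the method and the scheme are independent: both requests below target an `https://` URL, one as GET with query parameters and one as POST with form data. Nothing is actually sent; `example.com` and the field names are placeholders.

```python
import requests

# GET over HTTPS: parameters go into the URL's query string
get_req = requests.Request('GET', 'https://example.com/search', params={'q': 'proxy'}).prepare()

# POST over HTTPS: form data goes into the request body
post_req = requests.Request('POST', 'https://example.com/login', data={'user': 'me'}).prepare()

print(get_req.method, get_req.url)     # GET https://example.com/search?q=proxy
print(post_req.method, post_req.body)  # POST user=me
```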

Grab

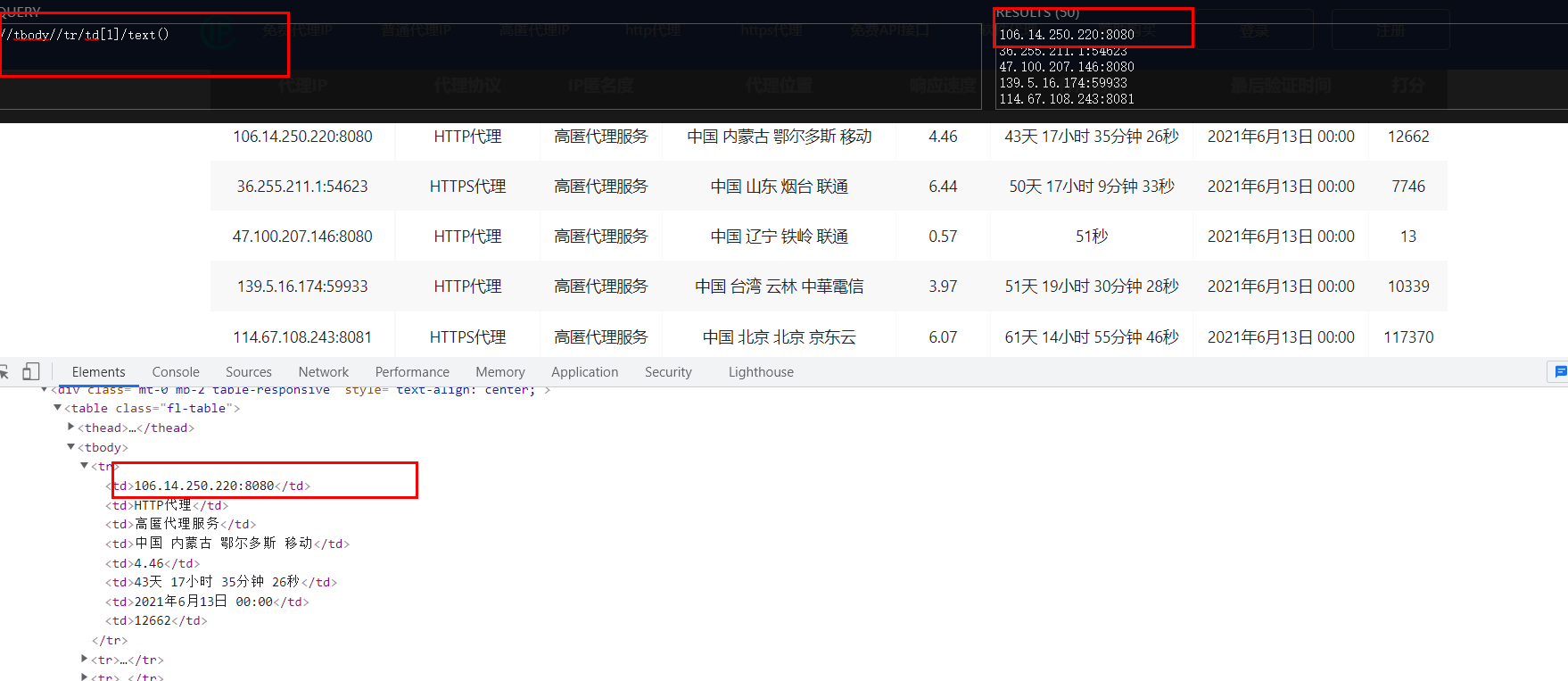

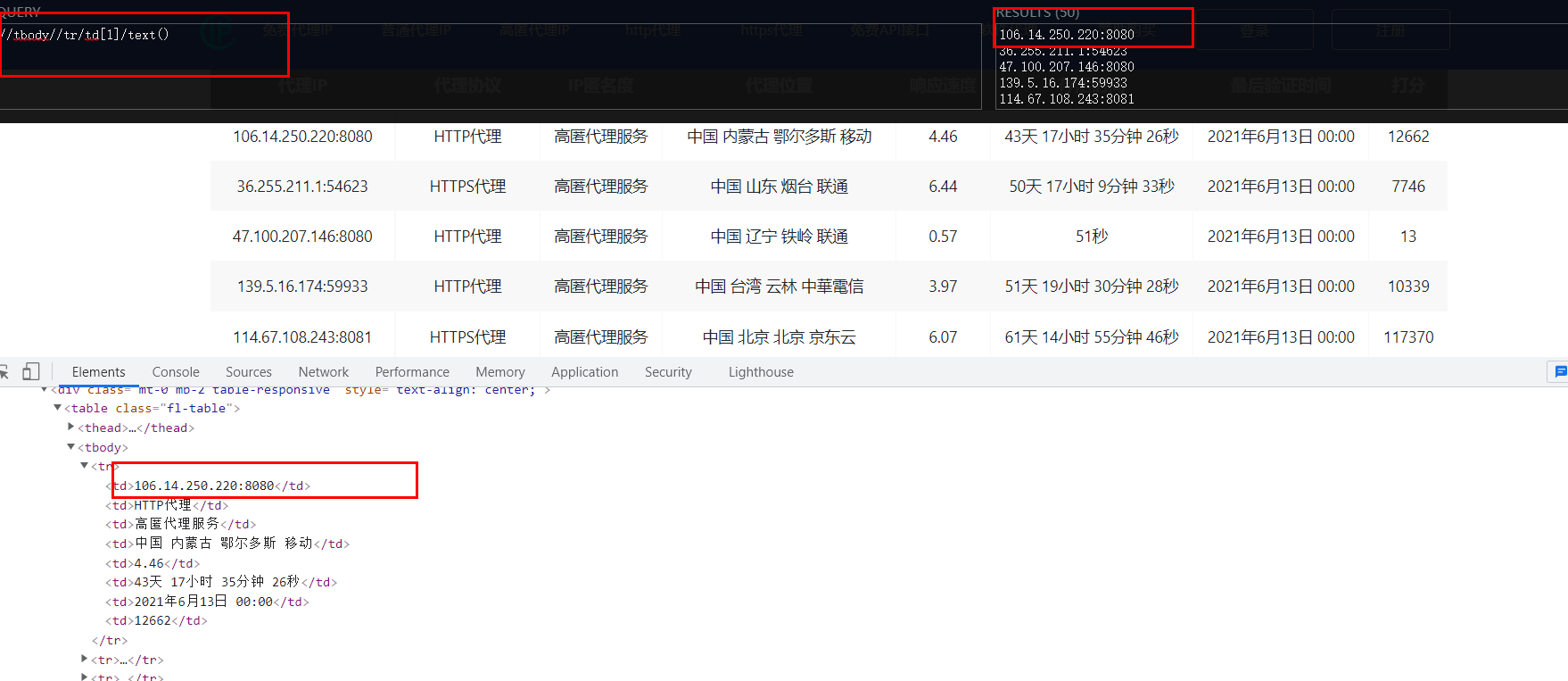

After getting in the door, we go back to what we learned in step two: we already know where he hides it, so we use XPath to pull it out step by step.

The decoder here is XPath Helper, a Chrome extension. See my other article for installation instructions.

Article link

Once the hiding spot is found, open the tool and type in the matching expression to fetch the data.

Type the expression in the upper-left box, and the matches appear in the upper-right box.

Next, put the same expression, our "password", into the program.

```python
res_1 = etree.HTML(res)  # Parse the HTML text into an element tree
res_lis = res_1.xpath('//tbody//tr/td[1]/text()')  # Text of the first cell in every table row
```
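A self-contained sketch of the extraction step on a tiny hand-written table (the HTML below is made up, not the real site's markup). It also shows why the tag name must be lowercase: lxml's HTML parser lowercases element names and XPath is case-sensitive, so `//Tbody` matches nothing. One more hedged caveat: browsers insert `<tbody>` into the page even when the raw HTML lacks it, so an expression copied from XPath Helper may need `//table//tr` instead when run against the raw response.

```python
from lxml import etree

# Made-up sample mimicking a proxy-list table: the first column is ip:port
html = '''
<table>
  <tbody>
    <tr><td>1.2.3.4:8080</td><td>HTTP</td></tr>
    <tr><td>5.6.7.8:3128</td><td>HTTPS</td></tr>
  </tbody>
</table>
'''

tree = etree.HTML(html)
ips = tree.xpath('//tbody//tr/td[1]/text()')         # first cell of each row
wrong_case = tree.xpath('//Tbody//tr/td[1]/text()')  # element names are lowercase, so no match
print(ips)
print(wrong_case)
```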

Count

We've found what we want; next we count the loot.

Before counting, remember to bring a sack to hold it.

```python
ip_list = []  # The sack that stores usable IP addresses
```

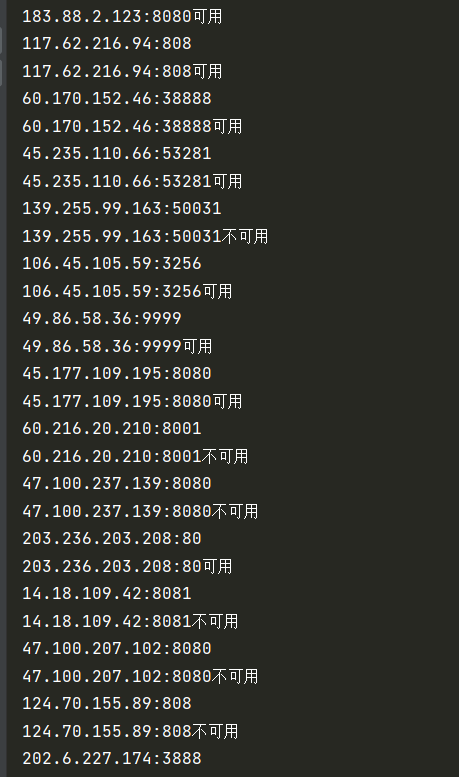

We've grabbed a lot, but some of it is junk, so we check each item one by one by sending a request through it to a test site: keep the ones that work and leave the bad ones behind.

Here's the code:

```python
for li in res_lis:  # Loop through the extracted IP addresses
    li = li.strip()  # Drop whitespace around the cell text
    print(li)  # Check that extraction is working
    candidate = {'http': 'http://{}'.format(li)}  # Build a proxies dict for THIS ip
    test_url = 'http://baidu.com'  # Any reachable site works; Baidu responds quickly
    try:
        # Request the test site through the candidate proxy
        requests.get(test_url, headers=headers, proxies=candidate, timeout=6)
        ip_list.append(li)  # It worked, so keep it
        print('{} available'.format(li))
    except requests.RequestException:
        print('{} not available'.format(li))  # Timed out or refused: skip it
```
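Some of the bad goods can be weeded out before spending a network round trip on them. A sketch, assuming each cell should be an IPv4 address followed by `:port`; `is_well_formed` is a hypothetical helper built on the standard `ipaddress` module. The third sample entry shows what two list items glued together by a missing comma look like.

```python
import ipaddress

def is_well_formed(entry):
    """Return True if entry looks like 'ipv4:port' with a valid port number."""
    host, sep, port = entry.strip().rpartition(':')
    if not sep or not port.isdecimal():
        return False
    try:
        ipaddress.IPv4Address(host)  # Raises ValueError for malformed addresses
    except ValueError:
        return False
    return 0 < int(port) < 65536

cells = [
    '106.55.15.244:8889',
    ' 45.228.188.241:999\n',
    '119.183.250.1:9000220.173.37.128:7890',  # two entries glued together
    'N/A',
]
print([c.strip() for c in cells if is_well_formed(c)])
# ['106.55.15.244:8889', '45.228.188.241:999']
```

Only entries that pass this cheap check need to go through the slower request test above.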

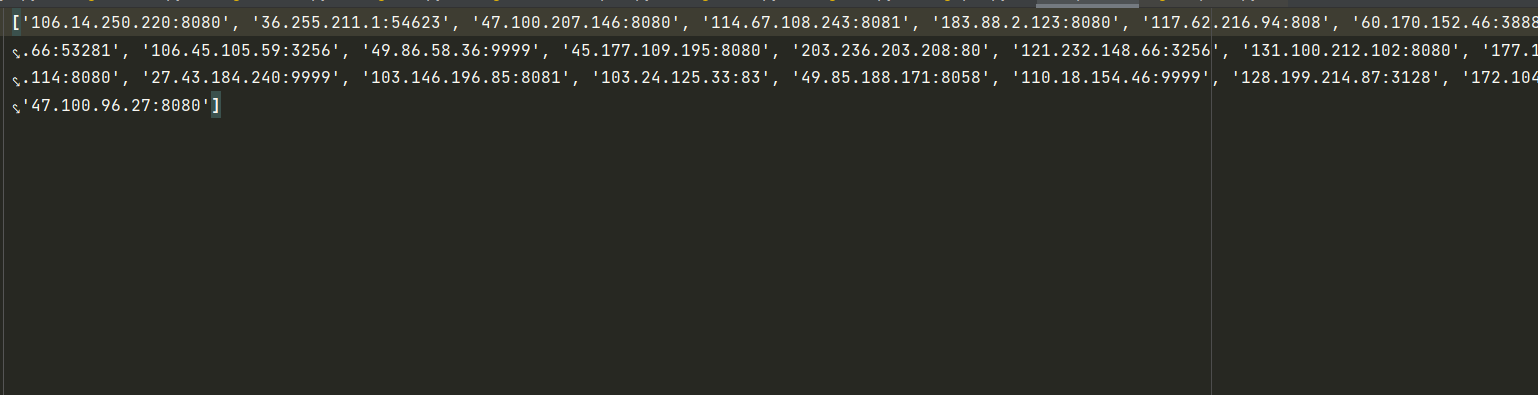

Wrapping up

After all that checking, it's time to pack the good stuff into the bag.

```python
print(ip_list)  # Print the final list of usable IPs

with open('ip.txt', 'a') as f:  # Append to a local file
    f.write(str(ip_list) + '\n')
```

We pack the good stuff into a big bag called `ip.txt`; `'a'` (append mode) is how we put it in: each run adds to the end of the file instead of overwriting it.
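Writing `str(ip_list)` stores a Python list literal, which is awkward to read back later. A sketch of an alternative layout, one `ip:port` per line, still in append mode; `save_proxies` and `load_proxies` are hypothetical names. De-duplicating on load is a design choice, since repeated runs in `'a'` mode can write the same IP twice.

```python
import os
import tempfile

def save_proxies(path, ips):
    """Append each usable proxy on its own line ('a' keeps earlier results)."""
    with open(path, 'a', encoding='utf-8') as f:
        for ip in ips:
            f.write(ip + '\n')

def load_proxies(path):
    """Read the file back, skipping blank lines and duplicates, keeping order."""
    with open(path, encoding='utf-8') as f:
        lines = (line.strip() for line in f)
        return list(dict.fromkeys(line for line in lines if line))

path = os.path.join(tempfile.mkdtemp(), 'ip.txt')
save_proxies(path, ['1.2.3.4:80', '5.6.7.8:3128'])
save_proxies(path, ['5.6.7.8:3128'])  # a second run finds one of the same IPs
print(load_proxies(path))  # ['1.2.3.4:80', '5.6.7.8:3128']
```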

Complete code

```python
# Author: a battery thief surnamed Zhou
# Time of crime: 2021/11/21 7:44

from fake_useragent import UserAgent  # Supplies random real-browser User-Agent strings
import requests  # Sends HTTP requests
import random  # Random selection
from lxml import etree  # XPath parsing

url = 'http://www.xiladaili.com/gaoni/2/'  # Target URL

ua = UserAgent()  # Create a UserAgent instance
user_agent = ua.random  # Pick a random User-Agent string
headers = {'User-Agent': user_agent}  # Build the headers dict

proxies_list = [
    '106.55.15.244:8889', '119.183.250.1:9000', '220.173.37.128:7890',
    '223.96.90.216:8085', '47.100.34.198:8001', '103.103.3.6:8080',
    '27.192.200.7:9000', '113.237.3.178:9999', '45.228.188.241:999',
    '211.24.95.49:47615', '191.101.39.193:80', '103.205.15.97:8080',
    '185.179.30.130:8080', '190.108.88.97:999', '182.87.136.228:9999',
    '167.172.180.46:33555', '58.255.7.90:9999', '190.85.244.70:999',
    '175.146.211.158:9999', '36.56.102.35:9999', '131.153.151.250:8003',
    '195.9.61.22:45225', '43.239.152.254:8080',
]  # ip list

random_proxies = random.choice(proxies_list)  # Randomly pick an IP from the list
proxies = {'http': random_proxies}  # Build the proxies dict

res = requests.get(url, headers=headers, proxies=proxies, timeout=10).text  # Send the request
# print(res)  # Uncomment to check that the request returns a normal page

res_1 = etree.HTML(res)  # Parse the HTML
res_lis = res_1.xpath('//tbody//tr/td[1]/text()')  # First cell of every table row

ip_list = []  # Stores usable IP addresses

for li in res_lis:  # Loop through the extracted IP addresses
    li = li.strip()  # Drop whitespace around the cell text
    print(li)  # Check that extraction is working
    candidate = {'http': 'http://{}'.format(li)}  # Build a proxies dict for THIS ip
    test_url = 'http://baidu.com'  # Any reachable site works; Baidu responds quickly
    try:
        # Request the test site through the candidate proxy
        requests.get(test_url, headers=headers, proxies=candidate, timeout=6)
        ip_list.append(li)  # It worked, so keep it
        print('{} available'.format(li))
    except requests.RequestException:
        print('{} not available'.format(li))  # Timed out or refused: skip it

print(ip_list)  # Print the final list of usable IPs

with open('ip.txt', 'a') as f:  # Append to a local file
    f.write(str(ip_list) + '\n')
```

Results