Preface:

Early on, Jenkins handled every CI/CD function in our Kubernetes setup (see the Jenkins Pipeline evolution series). We are now going to split CD, the continuous delivery part, out into Spinnaker.

The natural first step would be to unify the Jenkins and Spinnaker user accounts through LDAP. Spinnaker's LDAP integration was covered in an earlier experiment, and the steps for integrating Jenkins with LDAP are omitted here. After all, the goal is to split up the pipeline, and account interworking is not urgent. The first thing I want to add is image scanning, a step the pipeline currently lacks. After all, security comes first.

Image scanning in the Jenkins pipeline

Note: the image registry is Harbor.

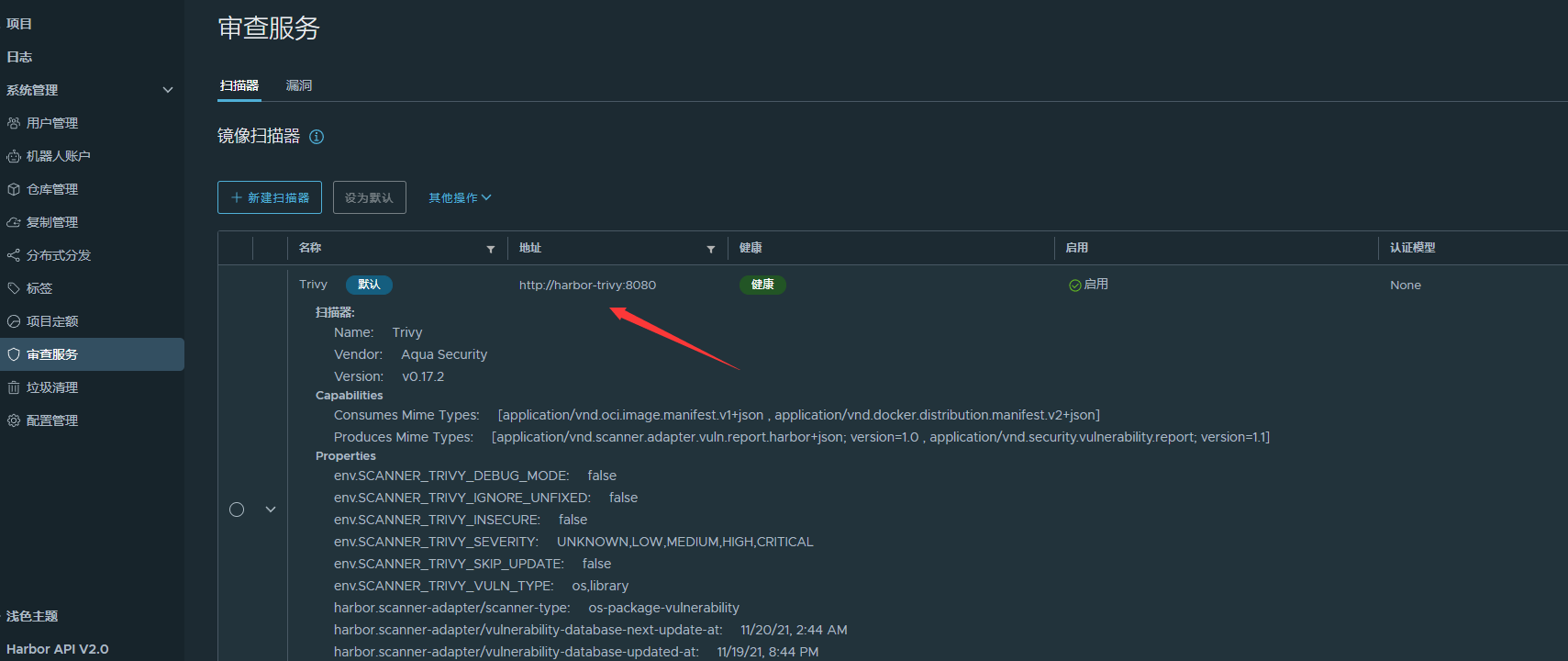

Trivy

Harbor's default image scanner is Trivy. Early versions used Clair, if I remember correctly.

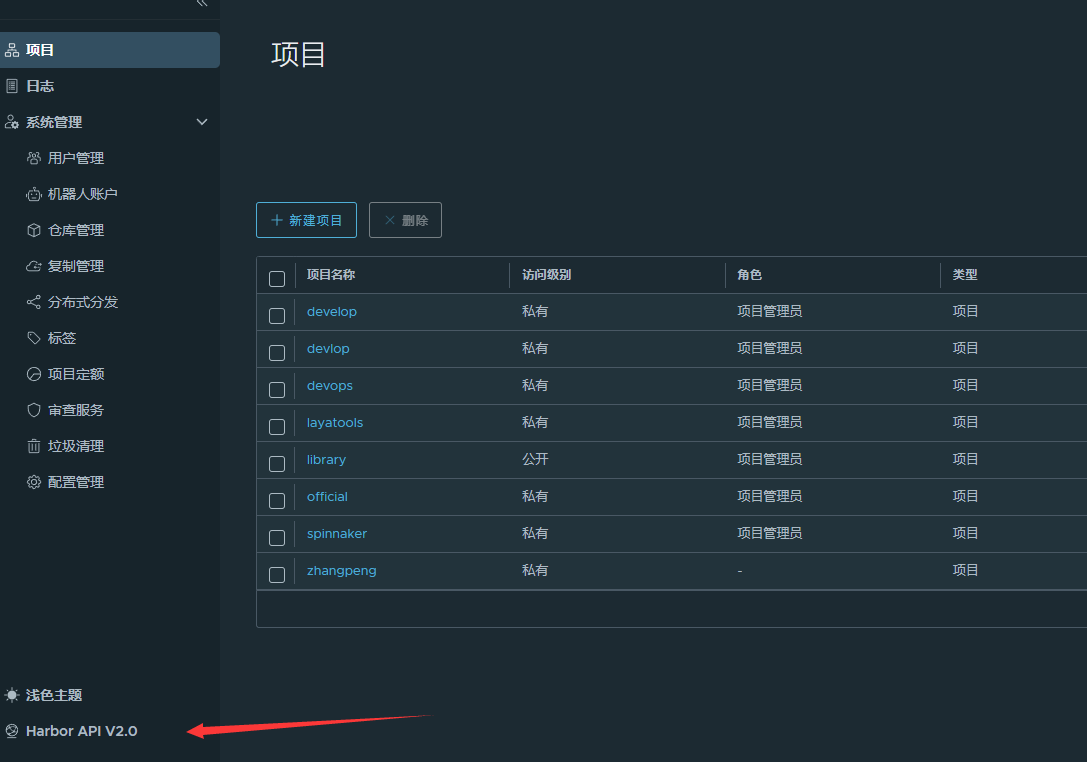

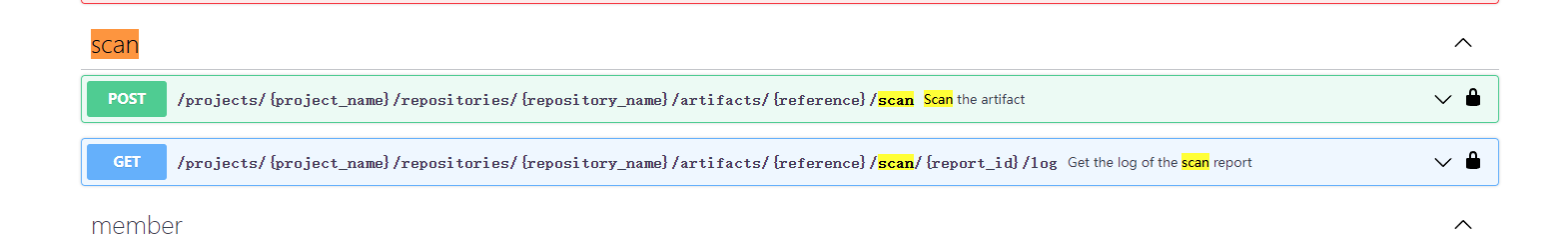

Looking at the Harbor API (the scan report cannot be surfaced in the pipeline)

I took a look at Harbor's API. A scan can be triggered directly through the scan endpoint:

But there is a drawback: I want to display the report directly in the Jenkins pipeline, and as far as I can tell the GET endpoint only returns the scan log. So the pipeline can trigger the scan in Harbor automatically, but once it finishes I still have to log in to Harbor to check whether the image has vulnerabilities. That makes the feature of marginal value to me. Still, as a learning exercise, let's try automatic image scanning from the Jenkins pipeline. First, here is the starting pipeline, based on Zeyang's automatic image cleanup example:

import groovy.json.JsonSlurper

//Docker image warehouse information

registryServer = "harbor.layame.com"

projectName = "${JOB_NAME}".split('-')[0]

repoName = "${JOB_NAME}"

imageName = "${registryServer}/${projectName}/${repoName}"

harborAPI = ""

//pipeline

pipeline{

agent { node { label "build01"}}

//Set build trigger

triggers {

GenericTrigger( causeString: 'Generic Cause',

genericVariables: [[defaultValue: '', key: 'branchName', regexpFilter: '', value: '$.ref']],

printContributedVariables: true,

printPostContent: true,

regexpFilterExpression: '',

regexpFilterText: '',

silentResponse: true,

token: 'spinnaker-nginx-demo')

}

stages{

stage("CheckOut"){

steps{

script{

srcUrl = "https://gitlab.layabox.com/zhangpeng/spinnaker-nginx-demo.git"

branchName = branchName - "refs/heads/"

currentBuild.description = "Trigger by ${branchName}"

println("${branchName}")

checkout([$class: 'GitSCM',

branches: [[name: "${branchName}"]],

doGenerateSubmoduleConfigurations: false,

extensions: [],

submoduleCfg: [],

userRemoteConfigs: [[credentialsId: 'gitlab-admin-user',

url: "${srcUrl}"]]])

}

}

}

stage("Push Image "){

steps{

script{

withCredentials([usernamePassword(credentialsId: 'harbor-admin-user', passwordVariable: 'password', usernameVariable: 'username')]) {

sh """

sed -i -- "s/VER/${branchName}/g" app/index.html

docker login -u ${username} -p ${password} ${registryServer}

docker build -t ${imageName}:${data} .

docker push ${imageName}:${data}

docker rmi ${imageName}:${data}

"""

}

}

}

}

stage("scan Image "){

steps{

script{

withCredentials([usernamePassword(credentialsId: 'harbor-admin-user', passwordVariable: 'password', usernameVariable: 'username')]) {

sh """

sed -i -- "s/VER/${branchName}/g" app/index.html

docker login -u ${username} -p ${password} ${registryServer}

docker build -t ${imageName}:${data} .

docker push ${imageName}:${data}

docker rmi ${imageName}:${data}

"""

}

}

}

}

stage("Trigger File"){

steps {

script{

sh """

echo IMAGE=${imageName}:${data} >trigger.properties

echo ACTION=DEPLOY >> trigger.properties

cat trigger.properties

"""

archiveArtifacts allowEmptyArchive: true, artifacts: 'trigger.properties', followSymlinks: false

}

}

}

}

}

Modifying the spinnaker-nginx-demo pipeline

I'll again use my spinnaker-nginx-demo example for verification (see: About jenkins configuration spinnaker nginx demo). Modify the pipeline as follows:

//Docker image warehouse information

registryServer = "harbor.xxxx.com"

projectName = "${JOB_NAME}".split('-')[0]

repoName = "${JOB_NAME}"

imageName = "${registryServer}/${projectName}/${repoName}"

//pipeline

pipeline{

agent { node { label "build01"}}

//Set build trigger

triggers {

GenericTrigger( causeString: 'Generic Cause',

genericVariables: [[defaultValue: '', key: 'branchName', regexpFilter: '', value: '$.ref']],

printContributedVariables: true,

printPostContent: true,

regexpFilterExpression: '',

regexpFilterText: '',

silentResponse: true,

token: 'spinnaker-nginx-demo')

}

stages{

stage("CheckOut"){

steps{

script{

srcUrl = "https://gitlab.xxxx.com/zhangpeng/spinnaker-nginx-demo.git"

branchName = branchName - "refs/heads/"

currentBuild.description = "Trigger by ${branchName}"

println("${branchName}")

checkout([$class: 'GitSCM',

branches: [[name: "${branchName}"]],

doGenerateSubmoduleConfigurations: false,

extensions: [],

submoduleCfg: [],

userRemoteConfigs: [[credentialsId: 'gitlab-admin-user',

url: "${srcUrl}"]]])

}

}

}

stage("Push Image "){

steps{

script{

withCredentials([usernamePassword(credentialsId: 'harbor-admin-user', passwordVariable: 'password', usernameVariable: 'username')]) {

sh """

sed -i -- "s/VER/${branchName}/g" app/index.html

docker login -u ${username} -p ${password} ${registryServer}

docker build -t ${imageName}:${data} .

docker push ${imageName}:${data}

docker rmi ${imageName}:${data}

"""

}

}

}

}

stage("scan Image "){

steps{

script{

withCredentials([usernamePassword(credentialsId: 'harbor-admin-user', passwordVariable: 'password', usernameVariable: 'username')]) {

harborAPI = "https://harbor.xxxx.com/api/v2.0/projects/${projectName}/repositories/${repoName}"

apiURL = "artifacts/${data}/scan"

sh """ curl -X POST "${harborAPI}/${apiURL}" -H "accept: application/json" -u ${username}:${password} """

}

}

}

}

stage("Trigger File"){

steps {

script{

sh """

echo IMAGE=${imageName}:${data} >trigger.properties

echo ACTION=DEPLOY >> trigger.properties

cat trigger.properties

"""

archiveArtifacts allowEmptyArchive: true, artifacts: 'trigger.properties', followSymlinks: false

}

}

}

}

}

Following Yangming's image cleanup pipeline script, I modified it to add the scan Image stage. Both scripts are based on Harbor's API documentation. For more details, see the official Harbor API.

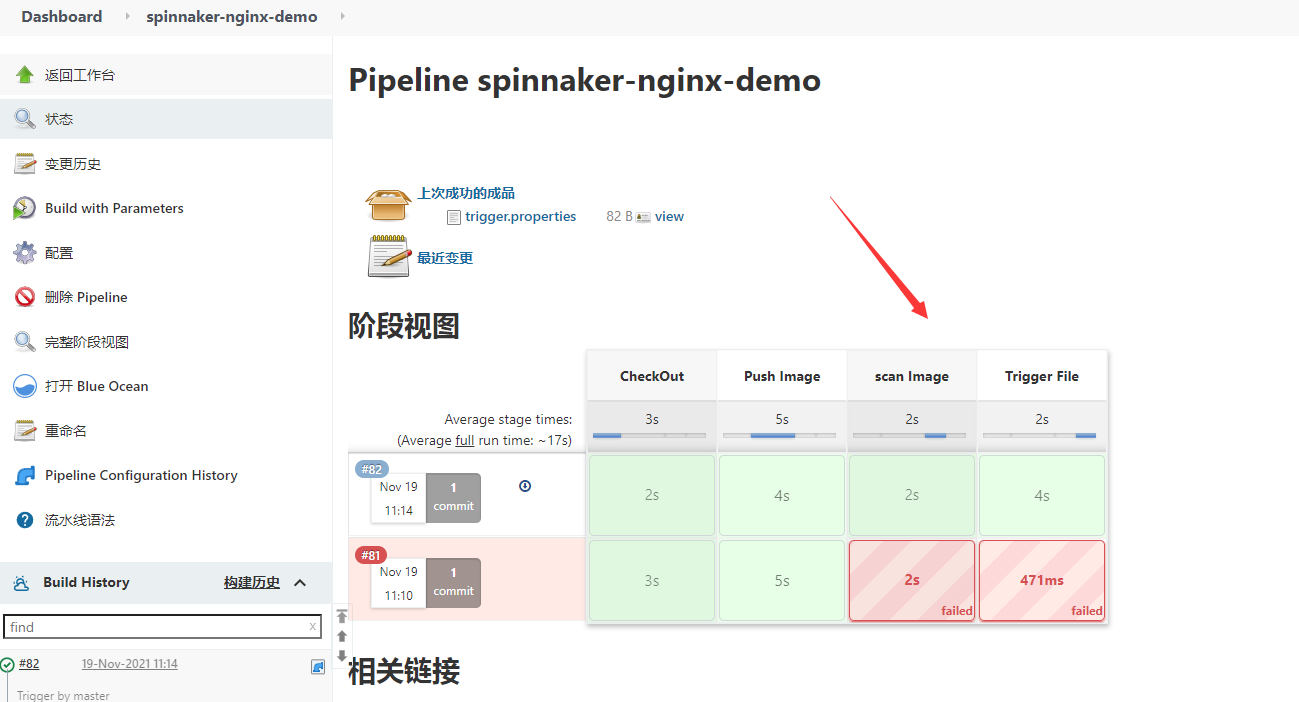

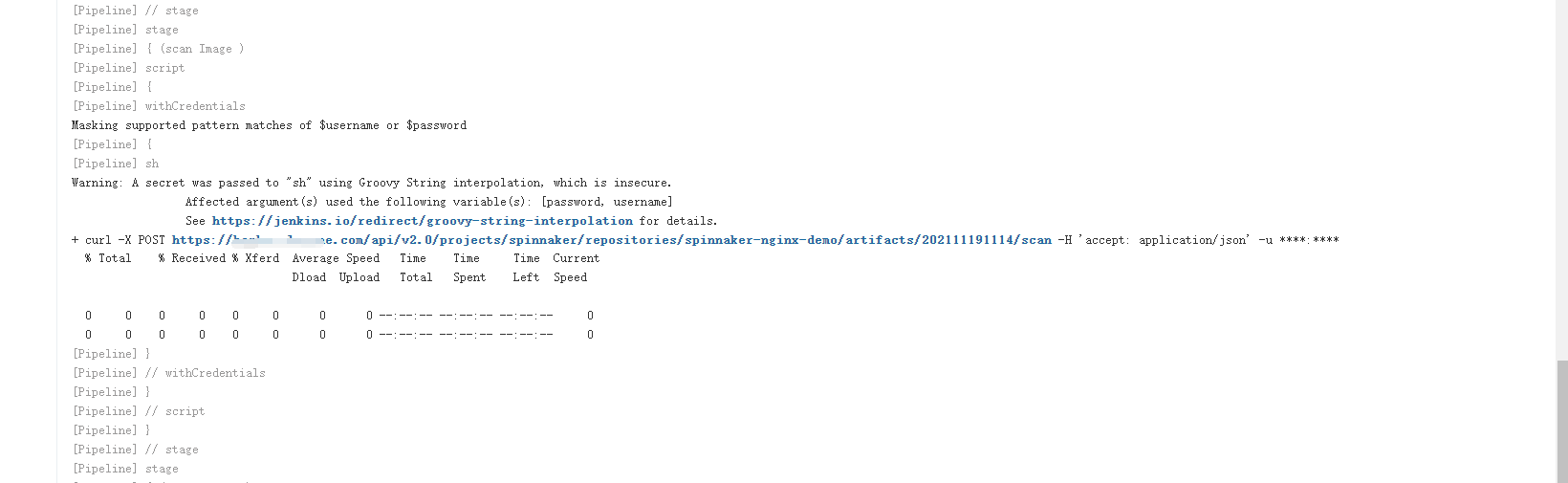
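To make the URL handling in the scan Image stage easier to follow, here is a minimal shell sketch of how the project, repository, and scan endpoint are derived. The function names are hypothetical, and it assumes job names of the form `<project>-<rest>` (as with spinnaker-nginx-demo) and credentials supplied through `HARBOR_USER` and `HARBOR_PASS`.

```shell
#!/bin/sh
# Sketch only: function names and the HARBOR_USER/HARBOR_PASS variables are
# assumptions for illustration, not part of the original pipeline.

# Everything before the first '-' in the job name is treated as the Harbor
# project, mirroring "${JOB_NAME}".split('-')[0] in the pipeline.
harbor_project() {
    printf '%s\n' "${1%%-*}"
}

# Build the URL the pipeline POSTs to in order to start a scan.
# Args: registry host, Jenkins job name (also the repository name), image tag.
harbor_scan_url() {
    registry=$1
    job=$2
    tag=$3
    project=$(harbor_project "$job")
    printf 'https://%s/api/v2.0/projects/%s/repositories/%s/artifacts/%s/scan\n' \
        "$registry" "$project" "$job" "$tag"
}

# Trigger the scan with the same curl call the pipeline stage uses.
harbor_trigger_scan() {
    curl -sS -X POST "$(harbor_scan_url "$1" "$2" "$3")" \
        -H "accept: application/json" \
        -u "${HARBOR_USER}:${HARBOR_PASS}"
}
```

For example, `harbor_scan_url harbor.xxxx.com spinnaker-nginx-demo 202111192008` prints the endpoint for that tag, and `harbor_trigger_scan` with the same arguments would start the scan.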

Trigger the Jenkins build

The spinnaker-nginx-demo pipeline is triggered by GitLab. Updating any file on the master branch of the GitLab repository triggers a Jenkins build:

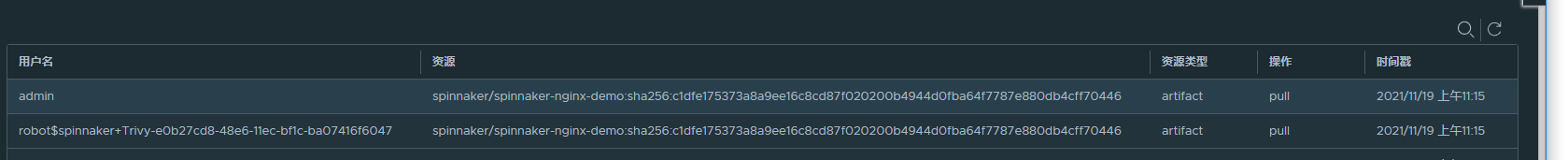
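The trigger relies on the Generic Webhook Trigger plugin reading `$.ref` from the push payload, after which the pipeline strips the `refs/heads/` prefix. The sketch below shows that step in shell, plus a way to fire the webhook by hand for testing. The function names and the Jenkins base URL argument are assumptions; the token is the one configured in the pipeline.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical.

# A push payload carries the branch as "refs/heads/<branch>" in $.ref.
# The pipeline removes that prefix; this does the same in shell.
branch_from_ref() {
    printf '%s\n' "${1#refs/heads/}"
}

# Manually invoke the Generic Webhook Trigger endpoint with a minimal payload.
# Args: Jenkins base URL (assumed), trigger token, git ref.
trigger_build() {
    jenkins_url=$1
    token=$2
    ref=$3
    curl -sS -X POST \
        "${jenkins_url}/generic-webhook-trigger/invoke?token=${token}" \
        -H "Content-Type: application/json" \
        -d "{\"ref\": \"${ref}\"}"
}
```

A manual test might look like `trigger_build https://jenkins.example.com spinnaker-nginx-demo refs/heads/master`, where the Jenkins host is a placeholder.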

Log in to the Harbor registry to verify:

OK, the verification succeeded. For other requirements you can refer to the Harbor API documentation, provided that Harbor supports the feature you need. Not being able to surface the scan report in Jenkins made me give up on Trivy in Harbor. It is also possible that I am simply not familiar enough with Trivy: I did not read the Trivy documentation and only looked at the Harbor API.
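For readers who want to dig further on the Harbor side: to my knowledge the v2.0 artifact endpoint accepts a `with_scan_overview=true` query parameter that returns a severity summary, which might be enough to gate a build. I did not verify this in the experiment above, so treat the sketch below as an assumption to check against your own Harbor's API documentation. The function names and the choice of which severities block a build are illustrative.

```shell
#!/bin/sh
# Sketch only, untested against a live Harbor: verify the query parameter
# and the severity values in your Harbor version's API documentation.

# Build the artifact URL that asks Harbor to include the scan overview.
# Args: registry host, project, repository, tag.
harbor_overview_url() {
    printf 'https://%s/api/v2.0/projects/%s/repositories/%s/artifacts/%s?with_scan_overview=true\n' \
        "$1" "$2" "$3" "$4"
}

# Decide whether a reported overall severity should fail the build.
# Assumed policy: only Critical and High block; adjust as needed.
severity_blocks() {
    case "$1" in
        Critical|High) return 0 ;;
        *) return 1 ;;
    esac
}
```

A pipeline step could fetch the URL with curl, extract the severity field from the JSON response, and call `severity_blocks` to decide whether to stop.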

anchore-engine

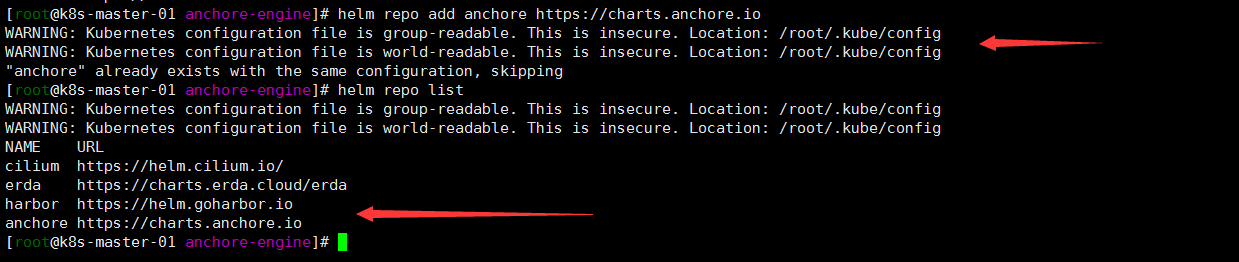

Installing anchore-engine with Helm

I found anchore-engine by chance while searching for Jenkins image scanning: https://cloud.tencent.com/developer/article/1666535 , a very good article. I then looked at the official website and found the Helm installation method: https://engine.anchore.io/docs/install/helm/ . Let's install it and test it.

[root@k8s-master-01 anchore-engine]# helm repo add anchore https://charts.anchore.io

[root@k8s-master-01 anchore-engine]# helm repo list

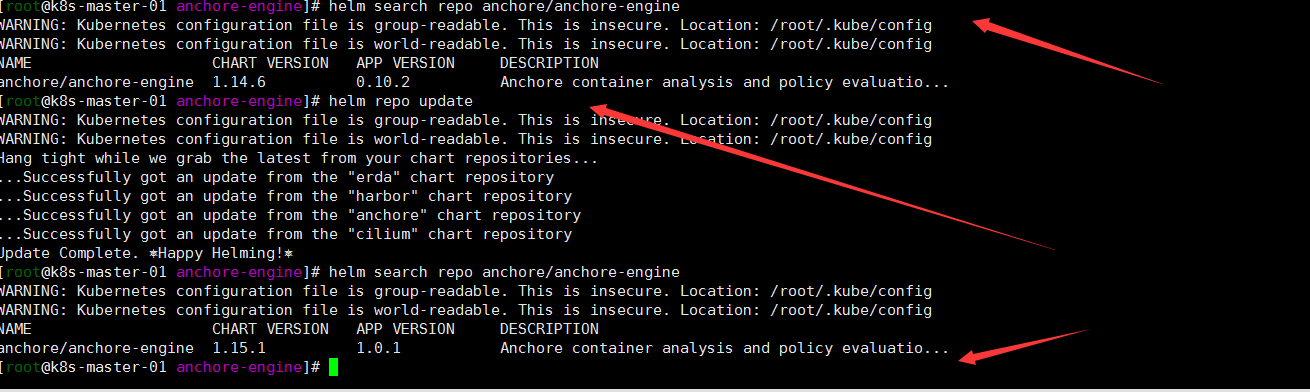

Note: I had already done this once before, so the helm repository was already added and version 1.14.6 was installed. When the integration with Jenkins failed, I wanted to try the latest version, but that did not help either, and I guessed that the Jenkins plugin was too old. (The step-by-step verification below shows that I simply had not dug deep enough; it actually works.) While we're at it, let's review the helm commands:

[root@k8s-master-01 anchore-engine]# helm search repo anchore/anchore-engine

[root@k8s-master-01 anchore-engine]# helm repo update

[root@k8s-master-01 anchore-engine]# helm search repo anchore/anchore-engine

[root@k8s-master-01 anchore-engine]# helm fetch anchore/anchore-engine

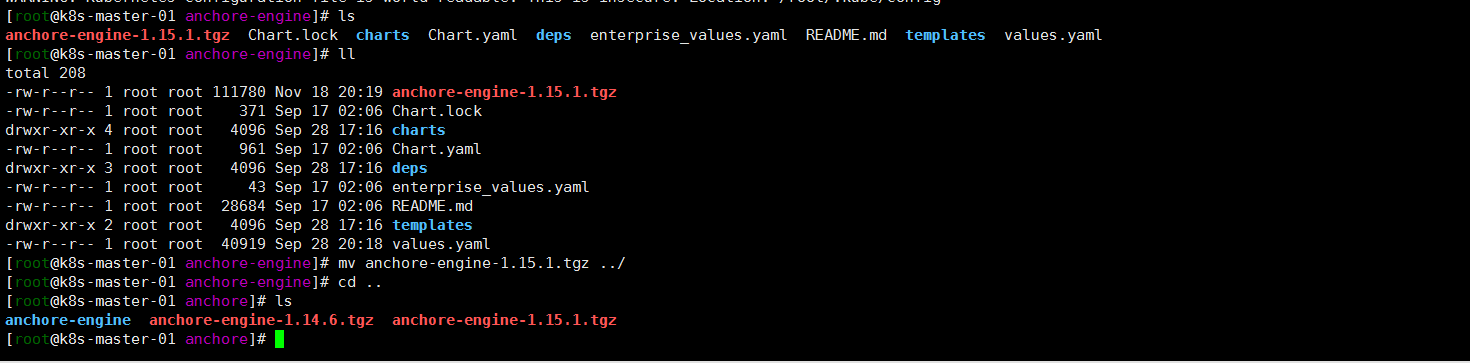

[root@k8s-master-01 anchore-engine]# ls

[root@k8s-master-01 anchore-engine]# tar zxvf anchore-engine-1.15.1.tgz

[root@k8s-master-01 anchore-engine]# cd anchore-engine

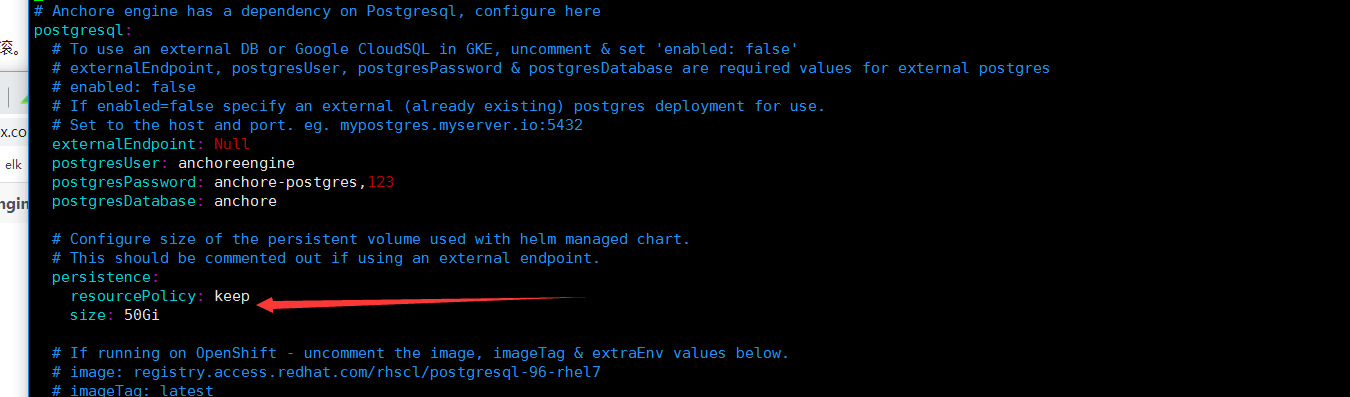

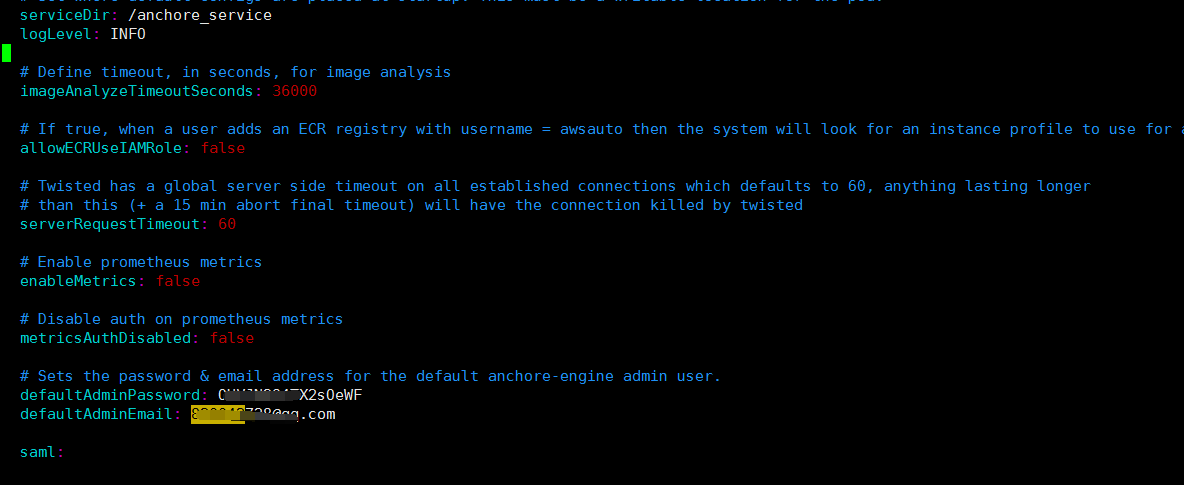

vim values.yaml

I changed the storage size and set the password and email address.

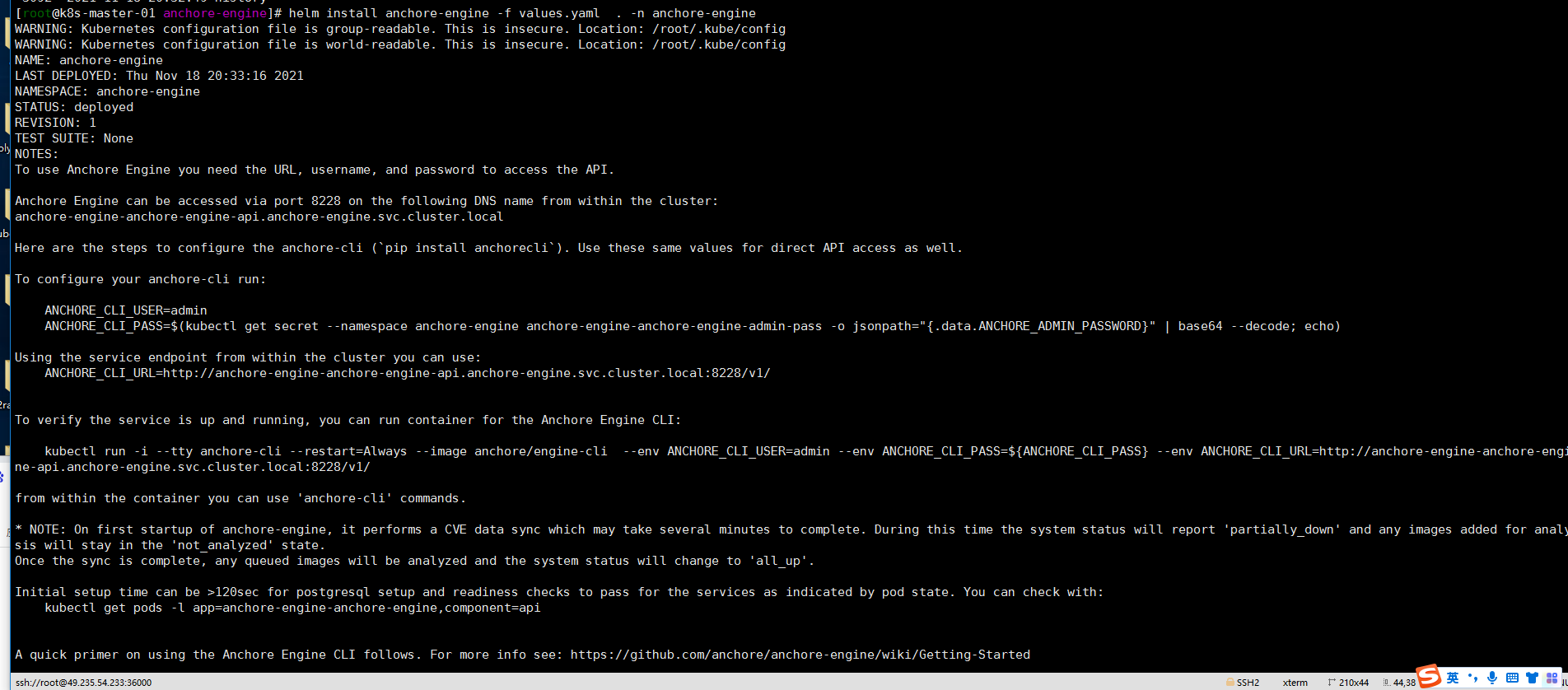

helm install anchore-engine -f values.yaml . -n anchore-engine

I ran into a gotcha here: why is the Kubernetes cluster domain set to cluster.local by default? I read through the configuration file and could not find where to change it.

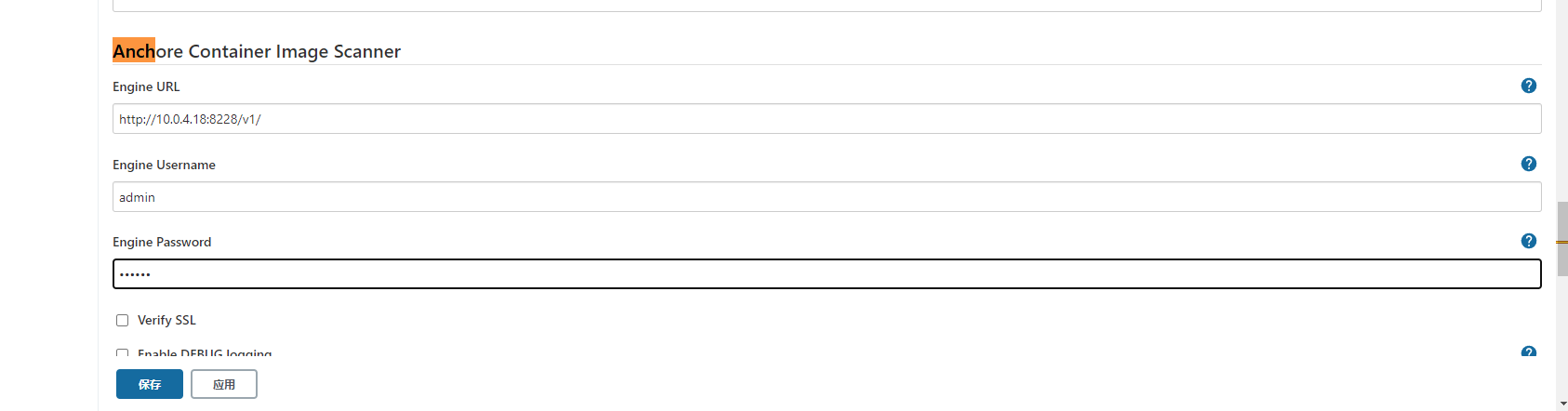

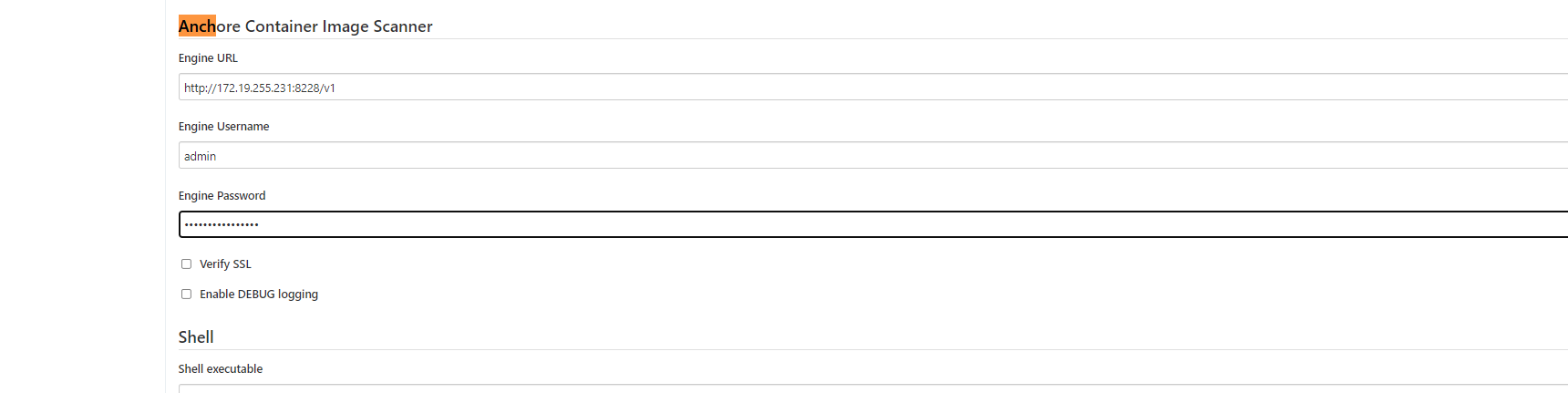
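The cluster.local problem comes down to how an in-cluster service name is composed: `<service>.<namespace>.svc.<cluster-domain>`. The short sketch below builds that name so it is clear which part differs on a cluster with a custom domain. The function name is hypothetical, and `k8s.example` is a made-up custom domain.

```shell
#!/bin/sh
# Sketch only: svc_fqdn is a hypothetical helper.

# Compose the in-cluster DNS name of a Service.
# Args: service name, namespace, cluster domain (defaults to cluster.local).
svc_fqdn() {
    svc=$1
    ns=$2
    domain=${3:-cluster.local}
    printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}

# Default domain, which is what the chart assumes:
svc_fqdn anchore-engine-anchore-engine-api anchore-engine
# A cluster with a custom domain (made-up value) needs the last part changed:
svc_fqdn anchore-engine-anchore-engine-api anchore-engine k8s.example
```

Any address that hard-codes the `cluster.local` suffix will not resolve on a cluster whose domain was customized, which is why the default chart values need attention there.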

jenkins configuration

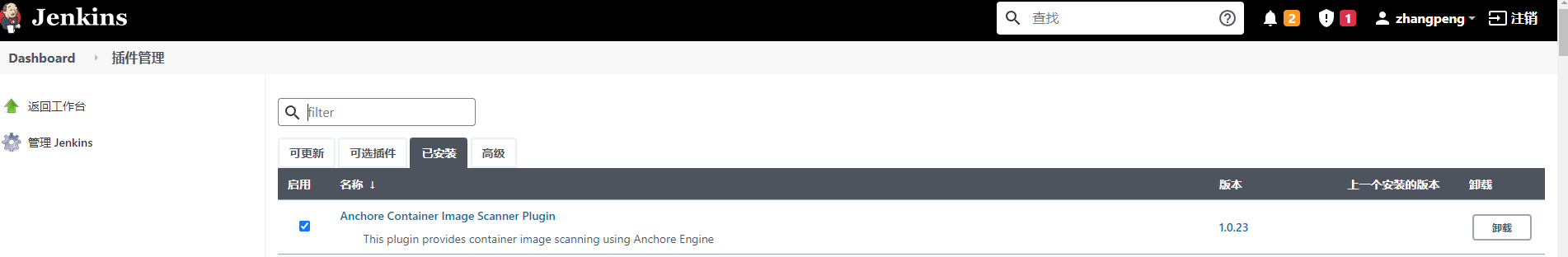

jenkins first installs the plug-in

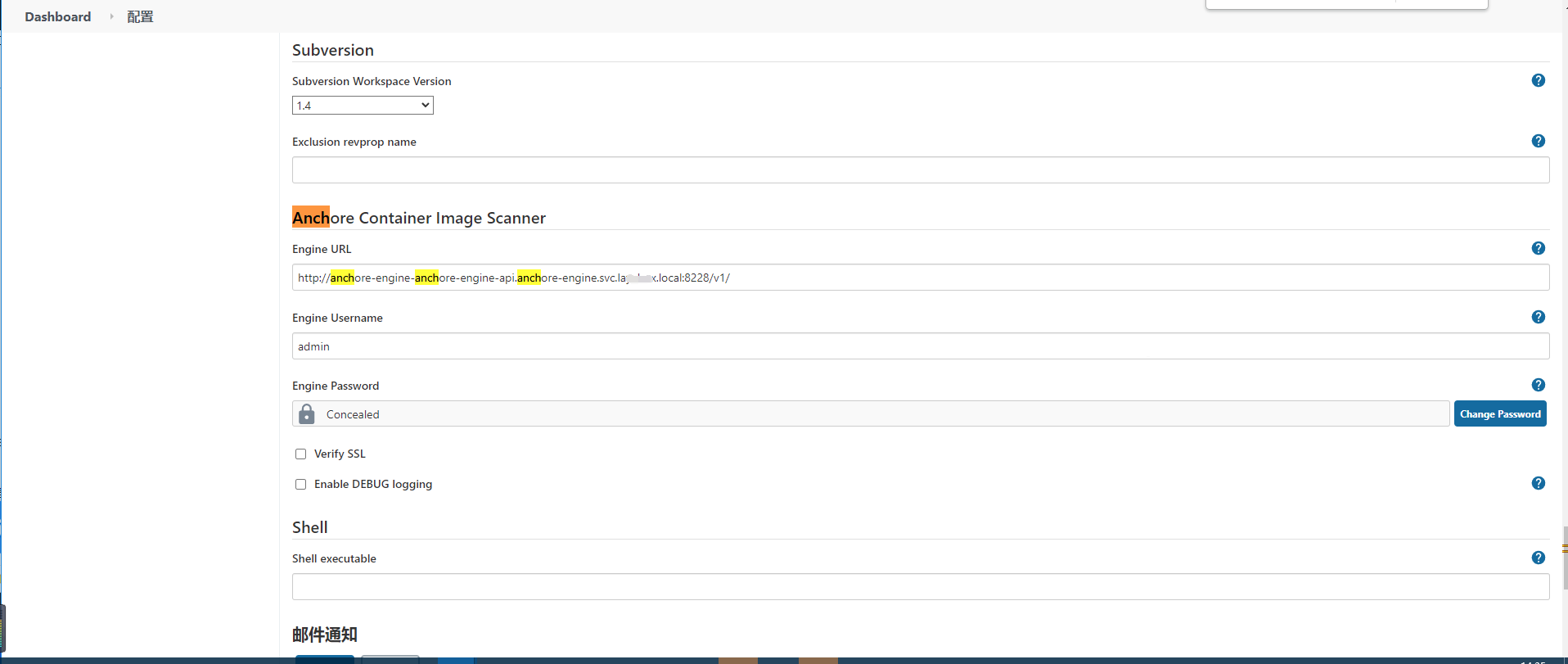

to configure:

System management - system configuration:

Build pipeline:

Because this is a test, I did it first Use anchor enine to improve the DevSecOps tool chain demo inside (modified the build node, github warehouse and dockerhub warehouse key):

jenkins creates a new pipeline task anchor anchor

pipeline {

agent { node { label "build01"}}

environment {

registry = "duiniwukenaihe/spinnaker-cd" // Image repository to push to; adjust to your environment

registryCredential = 'duiniwukenaihe' // Jenkins credential ID used to log in to the registry; adjust to your environment

}

stages {

//jenkins downloads the code from the code warehouse

stage('Cloning Git') {

steps {

git 'https://github.com.cnpmjs.org/duiniwukenaihe/docker-dvwa.git'

}

}

//Build the image

stage('Build Image') {

steps {

script {

app = docker.build(registry+ ":$BUILD_NUMBER")

}

}

}

//Push the image to the warehouse

stage('Push Image') {

steps {

script {

docker.withRegistry('', registryCredential ) {

app.push()

}

}

}

}

//Image scan

stage('Container Security Scan') {

steps {

sh 'echo "'+registry+':$BUILD_NUMBER `pwd`/Dockerfile" > anchore_images'

anchore engineRetries: "240", name: 'anchore_images'

}

}

stage('Cleanup') {

steps {

sh script: "docker rmi " + registry+ ":$BUILD_NUMBER"

}

}

}

}

Note: github.com is replaced with the github.com.cnpmjs.org mirror to speed up cloning, since pulls from GitHub often stall behind the firewall.
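The scan stage in the pipeline above hands the plugin a file, anchore_images, in which each line holds an image reference followed by the path to its Dockerfile. A minimal sketch of producing that file in shell follows. The helper name and the example paths are hypothetical.

```shell
#!/bin/sh
# Sketch only: write_anchore_images is a hypothetical helper.

# Write an anchore_images file: one "<image> <dockerfile path>" pair per line.
# Args: output file, then image/Dockerfile pairs.
write_anchore_images() {
    out=$1
    shift
    : > "$out"                      # truncate or create the file
    while [ "$#" -ge 2 ]; do
        printf '%s %s\n' "$1" "$2" >> "$out"
        shift 2
    done
}

# Example (placeholder values):
# write_anchore_images anchore_images \
#     duiniwukenaihe/spinnaker-cd:42 "$PWD/Dockerfile"
```

Writing the file through a helper keeps the format in one place if more than one image is scanned per build.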

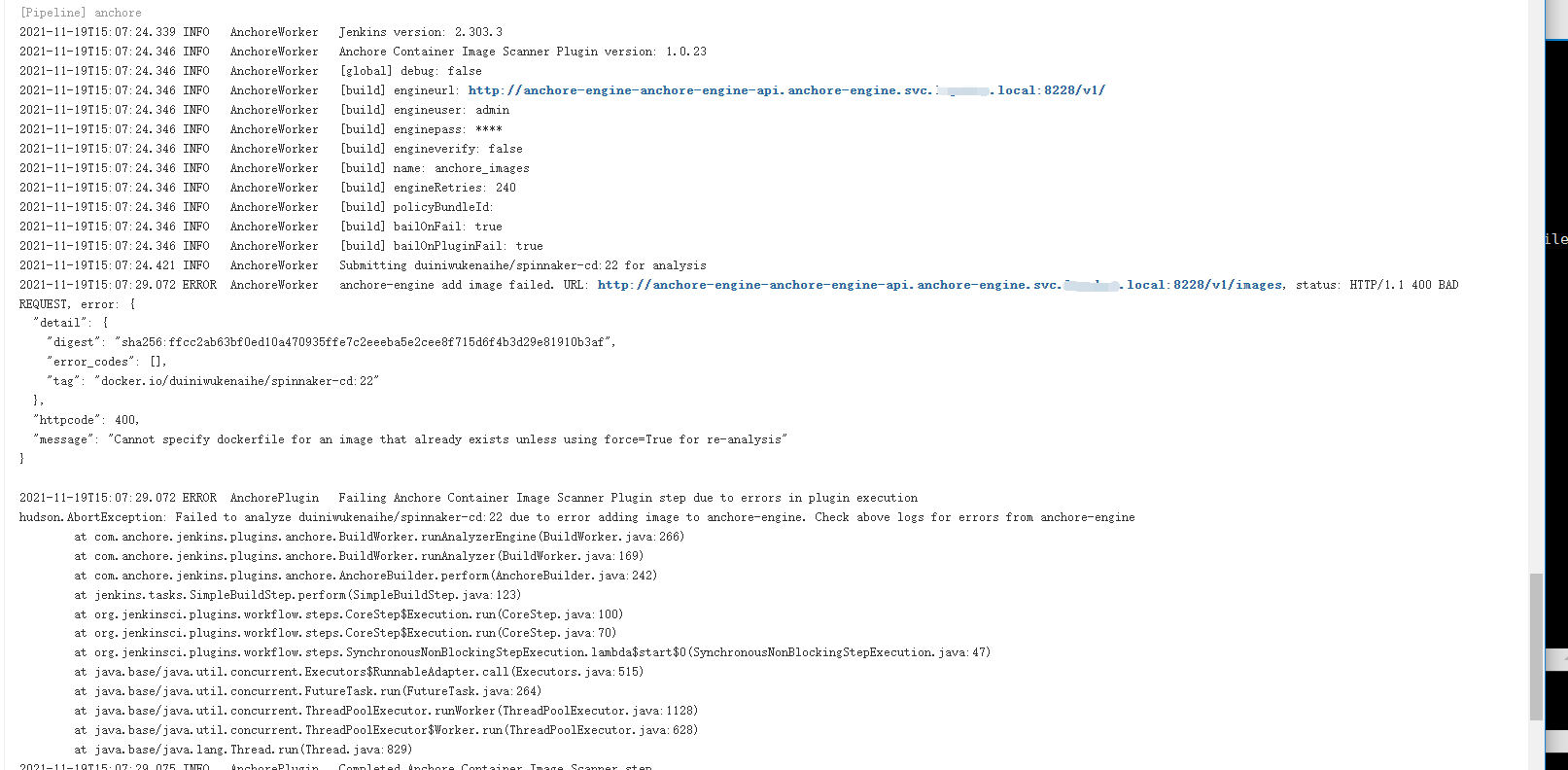

Run the pipeline job

I ran it several times and every run failed. I did not understand why, so I peeled the problem apart layer by layer.

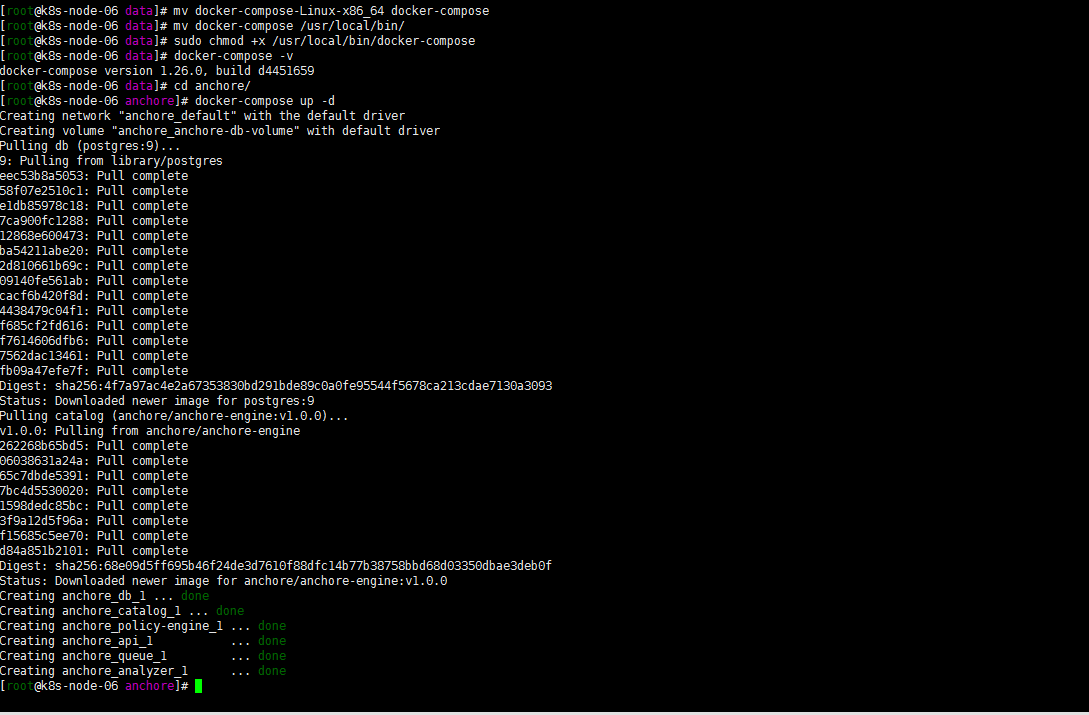

Docker compose install anchor engine

according to The tutorial uses anchor enine to improve the DevSecOps tool chain

A docker compose deployment method was developed:

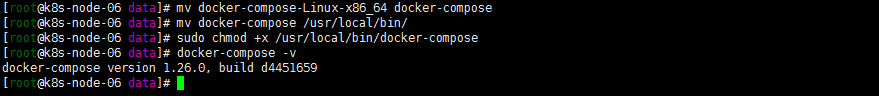

Note: by default, the cri of my cluster is containerd, and the k8s-node-06 node is the docker runtime and does not participate in scheduling. Anchor engine is ready to be installed on this server! Intranet ip: 10.0.4.18.

Before installing docker compose:

docker-compose up -d

The default yaml file is directly used without any additional modification. It is only tested in the early stage.

# curl https://docs.anchore.com/current/docs/engine/quickstart/docker-compose.yaml > docker-compose.yaml # docker-compose up -d

# This is a docker-compose file for development purposes. It refereneces unstable developer builds from the HEAD of master branch in https://github.com/anchore/anchore-engine

# For a compose file intended for use with a released version, see https://engine.anchore.io/docs/quickstart/

#

---
version: '2.1'
volumes:
  anchore-db-volume:
    # Set this to 'true' to use an external volume. In which case, it must be created manually with "docker volume create anchore-db-volume"
    external: false

services:
  # The primary API endpoint service
  api:
    image: anchore/anchore-engine:v1.0.0
    depends_on:
      - db
      - catalog
    ports:
      - "8228:8228"
    logging:
      driver: "json-file"
      options:
        max-size: 100m
    environment:
      - ANCHORE_ENDPOINT_HOSTNAME=api
      - ANCHORE_ADMIN_PASSWORD=foobar
      - ANCHORE_DB_HOST=db
      - ANCHORE_DB_PASSWORD=mysecretpassword
    command: ["anchore-manager", "service", "start", "apiext"]
  # Catalog is the primary persistence and state manager of the system
  catalog:
    image: anchore/anchore-engine:v1.0.0
    depends_on:
      - db
    logging:
      driver: "json-file"
      options:
        max-size: 100m
    expose:
      - 8228
    environment:
      - ANCHORE_ENDPOINT_HOSTNAME=catalog
      - ANCHORE_ADMIN_PASSWORD=foobar
      - ANCHORE_DB_HOST=db
      - ANCHORE_DB_PASSWORD=mysecretpassword
    command: ["anchore-manager", "service", "start", "catalog"]
  queue:
    image: anchore/anchore-engine:v1.0.0
    depends_on:
      - db
      - catalog
    expose:
      - 8228
    logging:
      driver: "json-file"
      options:
        max-size: 100m
    environment:
      - ANCHORE_ENDPOINT_HOSTNAME=queue
      - ANCHORE_ADMIN_PASSWORD=foobar
      - ANCHORE_DB_HOST=db
      - ANCHORE_DB_PASSWORD=mysecretpassword
    command: ["anchore-manager", "service", "start", "simplequeue"]
  policy-engine:
    image: anchore/anchore-engine:v1.0.0
    depends_on:
      - db
      - catalog
    expose:
      - 8228
    logging:
      driver: "json-file"
      options:
        max-size: 100m
    environment:
      - ANCHORE_ENDPOINT_HOSTNAME=policy-engine
      - ANCHORE_ADMIN_PASSWORD=foobar
      - ANCHORE_DB_HOST=db
      - ANCHORE_DB_PASSWORD=mysecretpassword
      - ANCHORE_VULNERABILITIES_PROVIDER=grype
    command: ["anchore-manager", "service", "start", "policy_engine"]
  analyzer:
    image: anchore/anchore-engine:v1.0.0
    depends_on:
      - db
      - catalog
    expose:
      - 8228
    logging:
      driver: "json-file"
      options:
        max-size: 100m
    environment:
      - ANCHORE_ENDPOINT_HOSTNAME=analyzer
      - ANCHORE_ADMIN_PASSWORD=foobar
      - ANCHORE_DB_HOST=db
      - ANCHORE_DB_PASSWORD=mysecretpassword
    volumes:
      - /analysis_scratch
    command: ["anchore-manager", "service", "start", "analyzer"]
  db:
    image: "postgres:9"
    volumes:
      - anchore-db-volume:/var/lib/postgresql/data
    environment:
      - POSTGRES_PASSWORD=mysecretpassword
    expose:
      - 5432
    logging:
      driver: "json-file"
      options:
        max-size: 100m
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]

# # Uncomment this section to add a prometheus instance to gather metrics. This is mostly for quickstart to demonstrate prometheus metrics exported

# prometheus:

# image: docker.io/prom/prometheus:latest

# depends_on:

# - api

# volumes:

# - ./anchore-prometheus.yml:/etc/prometheus/prometheus.yml:z

# logging:

# driver: "json-file"

# options:

# max-size: 100m

# ports:

# - "9090:9090"

#

# # Uncomment this section to run a swagger UI service, for inspecting and interacting with the anchore engine API via a browser (http://localhost:8080 by default, change if needed in both sections below)

# swagger-ui-nginx:

# image: docker.io/nginx:latest

# depends_on:

# - api

# - swagger-ui

# ports:

# - "8080:8080"

# volumes:

# - ./anchore-swaggerui-nginx.conf:/etc/nginx/nginx.conf:z

# logging:

# driver: "json-file"

# options:

# max-size: 100m

# swagger-ui:

# image: docker.io/swaggerapi/swagger-ui

# environment:

# - URL=http://localhost:8080/v1/swagger.json

# logging:

# driver: "json-file"

# options:

# max-size: 100m

#

[root@k8s-node-06 anchore]# docker-compose ps

Name Command State Ports

------------------------------------------------------------------------------------------------

anchore_analyzer_1 /docker-entrypoint.sh anch ... Up (healthy) 8228/tcp

anchore_api_1 /docker-entrypoint.sh anch ... Up (healthy) 0.0.0.0:8228->8228/tcp

anchore_catalog_1 /docker-entrypoint.sh anch ... Up (healthy) 8228/tcp

anchore_db_1 docker-entrypoint.sh postgres Up (healthy) 5432/tcp

anchore_policy-engine_1 /docker-entrypoint.sh anch ... Up (healthy) 8228/tcp

anchore_queue_1 /docker-entrypoint.sh anch ... Up (healthy) 8228/tcp

Modify the Jenkins configuration

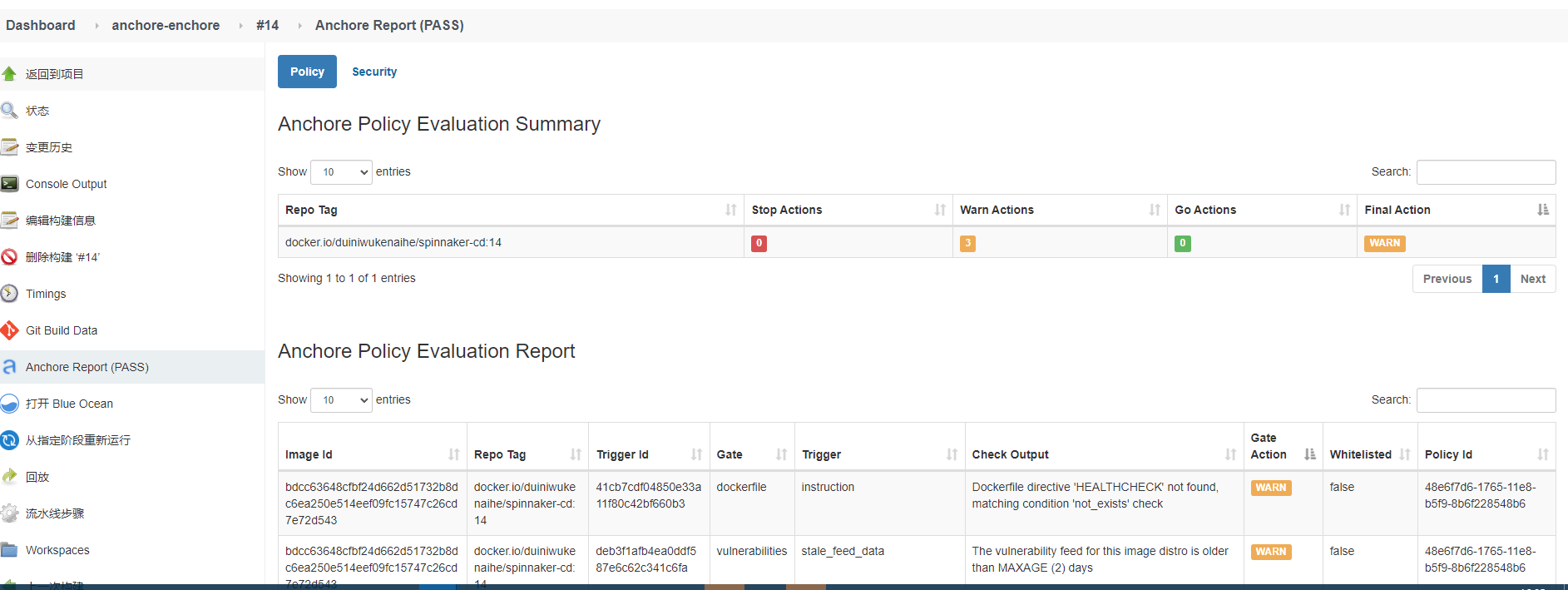
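Before pointing Jenkins at the new endpoint, it can help to confirm that every compose service is in the `Up (healthy)` state, as in the `docker-compose ps` listing earlier. The sketch below filters that output for services that are not yet healthy. The function name is hypothetical, and it assumes the column layout printed by the docker-compose version used here.

```shell
#!/bin/sh
# Sketch only: unhealthy_services is a hypothetical helper and assumes the
# docker-compose v1 "ps" layout (a "Name ..." header, a dashed rule, then rows).

# Read `docker-compose ps` output on stdin and print the name of every
# service whose state is anything other than "Up (healthy)".
unhealthy_services() {
    awk '$1 != "Name" && $0 !~ /^-+$/ && NF > 0 && $0 !~ /Up \(healthy\)/ { print $1 }'
}

# Usage:
# docker-compose ps | unhealthy_services
```

An empty result means all services report healthy. Any names printed are worth inspecting with `docker-compose logs <service>` before running a scan.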

Pipeline test:

A wrong guess:

The helm deployment did not work. My initial guess was that the cause was my container runtime not being Docker, or the server version being too new.

Follow-on problems:

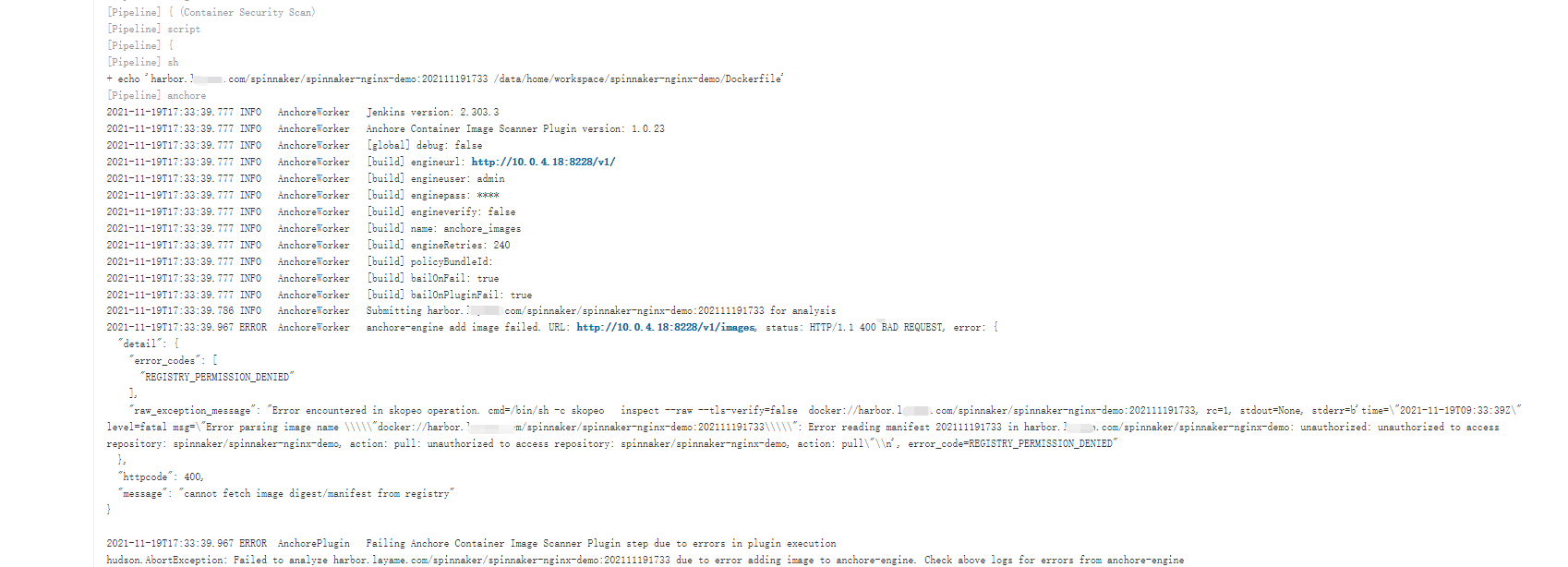

How to scan images in a private registry?

But then another problem came up: anchore's default image registry is Docker Hub, while mine is a private Harbor registry, and the spinnaker-nginx-demo application pipeline would not run once the scan stage was added:

//Docker image warehouse information

registryServer = "harbor.xxxx.com"

projectName = "${JOB_NAME}".split('-')[0]

repoName = "${JOB_NAME}"

imageName = "${registryServer}/${projectName}/${repoName}"

//pipeline

pipeline{

agent { node { label "build01"}}

//Set build trigger

triggers {

GenericTrigger( causeString: 'Generic Cause',

genericVariables: [[defaultValue: '', key: 'branchName', regexpFilter: '', value: '$.ref']],

printContributedVariables: true,

printPostContent: true,

regexpFilterExpression: '',

regexpFilterText: '',

silentResponse: true,

token: 'spinnaker-nginx-demo')

}

stages{

stage("CheckOut"){

steps{

script{

srcUrl = "https://gitlab.xxxx.com/zhangpeng/spinnaker-nginx-demo.git"

branchName = branchName - "refs/heads/"

currentBuild.description = "Trigger by ${branchName}"

println("${branchName}")

checkout([$class: 'GitSCM',

branches: [[name: "${branchName}"]],

doGenerateSubmoduleConfigurations: false,

extensions: [],

submoduleCfg: [],

userRemoteConfigs: [[credentialsId: 'gitlab-admin-user',

url: "${srcUrl}"]]])

}

}

}

stage("Push Image "){

steps{

script{

withCredentials([usernamePassword(credentialsId: 'harbor-admin-user', passwordVariable: 'password', usernameVariable: 'username')]) {

sh """

sed -i -- "s/VER/${branchName}/g" app/index.html

docker login -u ${username} -p ${password} ${registryServer}

docker build -t ${imageName}:${data} .

docker push ${imageName}:${data}

docker rmi ${imageName}:${data}

"""

}

}

}

}

stage('Container Security Scan') {

steps {

script{

sh """

echo "Start scanning"

echo "${imageName}:${data} ${WORKSPACE}/Dockerfile" > anchore_images

"""

anchore engineRetries: "360",forceAnalyze: true, name: 'anchore_images'

}

}

}

stage("Trigger File"){

steps {

script{

sh """

echo IMAGE=${imageName}:${data} >trigger.properties

echo ACTION=DEPLOY >> trigger.properties

cat trigger.properties

"""

archiveArtifacts allowEmptyArchive: true, artifacts: 'trigger.properties', followSymlinks: false

}

}

}

}

}

Inspiration from a GitHub issue:

What to do? Persistence pays off. I browsed the issues of the anchore GitHub repository and found a solution in https://github.com/anchore/anchore-engine/issues/438 :

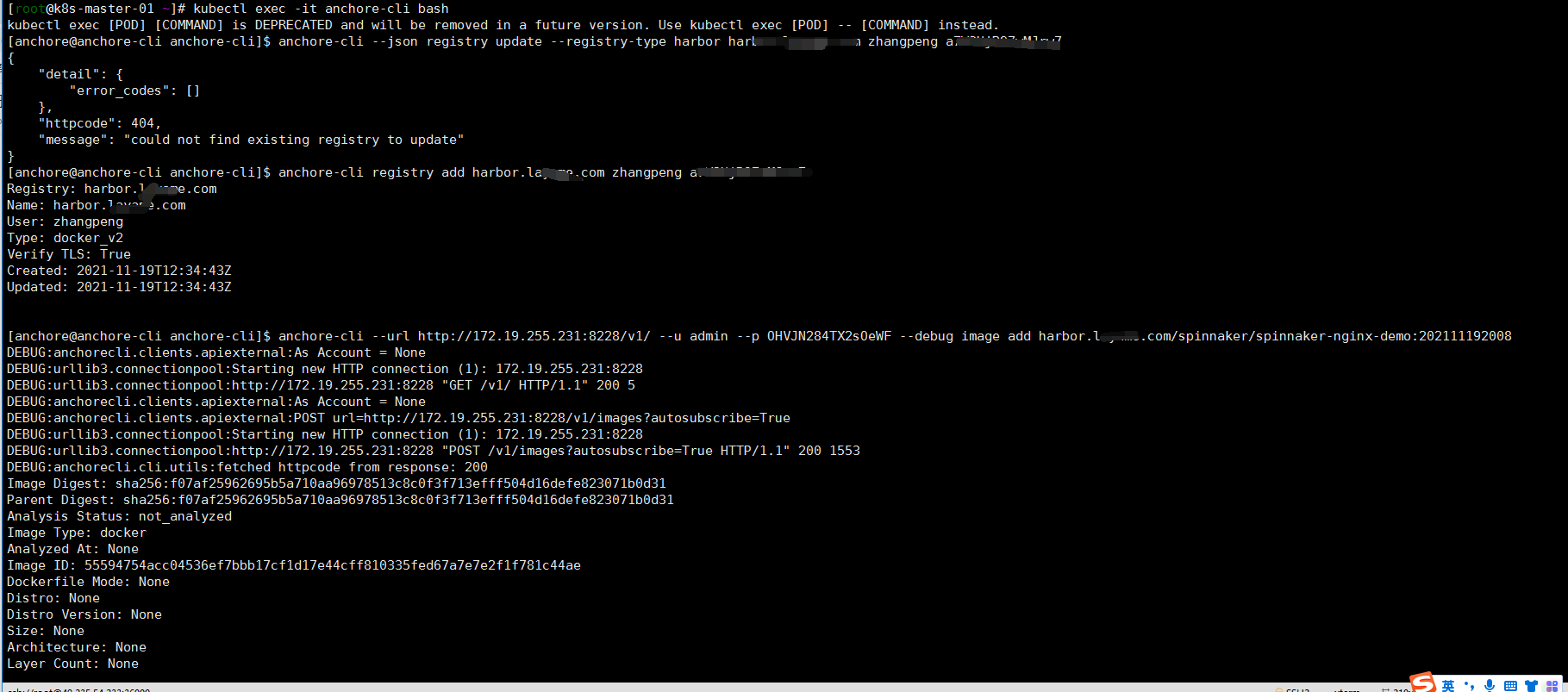

Add the private registry configuration

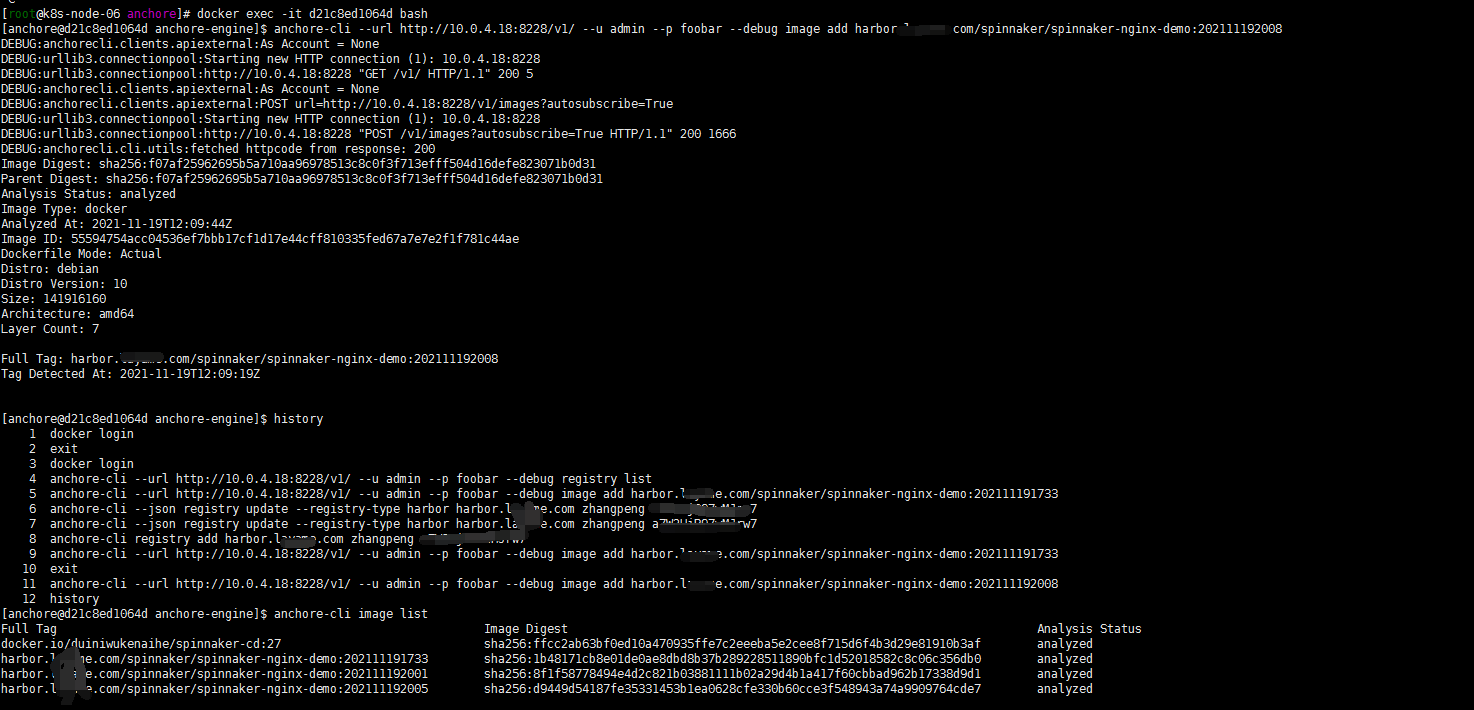

[root@k8s-node-06 anchore]# docker exec -it d21c8ed1064d bash

[anchore@d21c8ed1064d anchore-engine]$ anchore-cli registry add harbor.xxxx.com zhangpeng xxxxxx

[anchore@d21c8ed1064d anchore-engine]$ anchore-cli --url http://10.0.4.18:8228/v1/ --u admin --p foobar --debug image add harbor.layame.com/spinnaker/spinnaker-nginx-demo:202111192008
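The commands above can be wrapped into one repeatable sequence: register the private registry, submit the image, wait for analysis, and list vulnerabilities. The sketch below is one way to do that. The `run` and `scan_private_image` helpers and the `DRY_RUN` switch are my own additions, and it assumes `ANCHORE_CLI_URL`, `ANCHORE_CLI_USER`, and `ANCHORE_CLI_PASS` are already exported so the connection flags can be omitted.

```shell
#!/bin/sh
# Sketch only: run, scan_private_image and DRY_RUN are hypothetical additions.
# Assumes ANCHORE_CLI_URL / ANCHORE_CLI_USER / ANCHORE_CLI_PASS are exported.

# Execute a command, or just print it when DRY_RUN=1.
# Note: dry-run output includes the registry password, so do not log it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Register a private registry and scan one image from it.
# Args: registry host, registry user, registry password, full image reference.
scan_private_image() {
    registry=$1
    user=$2
    pass=$3
    image=$4
    # "registry add" is only needed once per registry and may fail if the
    # registry is already registered.
    run anchore-cli registry add "$registry" "$user" "$pass"
    run anchore-cli image add "$image"
    run anchore-cli image wait "$image"      # block until analysis finishes
    run anchore-cli image vuln "$image" all  # list OS and non-OS findings
}
```

Running it with `DRY_RUN=1` first shows the exact commands without contacting the engine, which is a cheap way to check the arguments.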

Then I checked my Harbor registry and ran my Jenkins job again. The pipeline now produces a report:

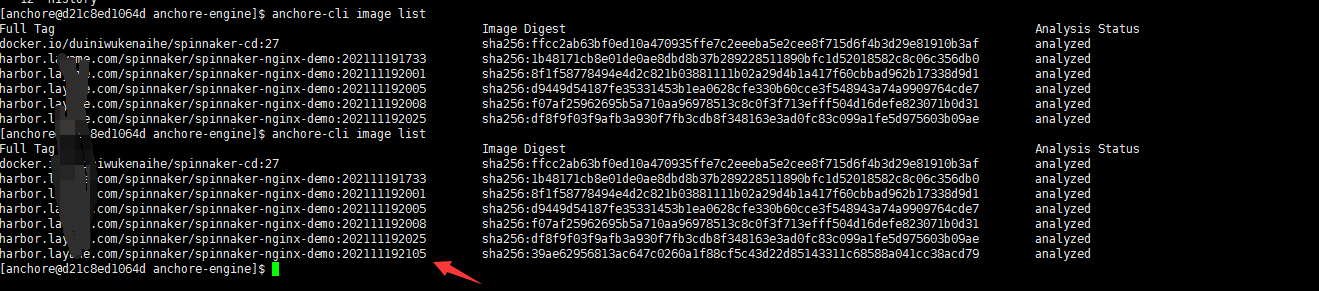

Log in to the anchore_api_1 container to verify:

[anchore@d21c8ed1064d anchore-engine]$ anchore-cli image list

By the way, fix the anchore-engine deployed with helm

Likewise, I now suspect that the failure of my helm deployment had the same cause and that my earlier guess was wrong. Let's try the same change there:

[root@k8s-master-01 anchore-engine]# kubectl get pods -n anchore-engine

NAME READY STATUS RESTARTS AGE

anchore-engine-anchore-engine-analyzer-fcf9ffcc8-dv955 1/1 Running 0 10h

anchore-engine-anchore-engine-api-7f98dc568-j6tsz 1/1 Running 0 10h

anchore-engine-anchore-engine-catalog-754b996b75-q5hqg 1/1 Running 0 10h

anchore-engine-anchore-engine-policy-745b6778f7-hbsvx 1/1 Running 0 10h

anchore-engine-anchore-engine-simplequeue-695df4498-wgss4 1/1 Running 0 10h

anchore-engine-postgresql-9cdbb5f7f-4dcnk 1/1 Running 0 10h

[root@k8s-master-01 anchore-engine]# kubectl get svc -n anchore-engine

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

anchore-engine-anchore-engine-api ClusterIP 172.19.255.231 <none> 8228/TCP 10h

anchore-engine-anchore-engine-catalog ClusterIP 172.19.254.163 <none> 8082/TCP 10h

anchore-engine-anchore-engine-policy ClusterIP 172.19.254.91 <none> 8087/TCP 10h

anchore-engine-anchore-engine-simplequeue ClusterIP 172.19.253.141 <none> 8083/TCP 10h

anchore-engine-postgresql ClusterIP 172.19.252.126 <none> 5432/TCP 10h

[root@k8s-master-01 anchore-engine]# kubectl run -i --tty anchore-cli --restart=Always --image anchore/engine-cli --env ANCHORE_CLI_USER=admin --env ANCHORE_CLI_PASS=xxxxxx --env ANCHORE_CLI_URL=http://172.19.255.231:8228/v1

[anchore@anchore-cli anchore-cli]$ anchore-cli registry add harbor.xxxx.com zhangpeng xxxxxxxx

[anchore@anchore-cli anchore-cli]$ anchore-cli --url http://172.19.255.231:8228/v1/ --u admin --p xxxx --debug image add harbor.xxxx.com/spinnaker/spinnaker-nginx-demo:202111192008

It seems to have worked! That overturns my guesses about the container runtime and the version.

Modify the Jenkins configuration again to point at the API address of the helm-deployed anchore-engine. Because of the cluster.local issue, I prefer not to use the in-cluster service address directly:

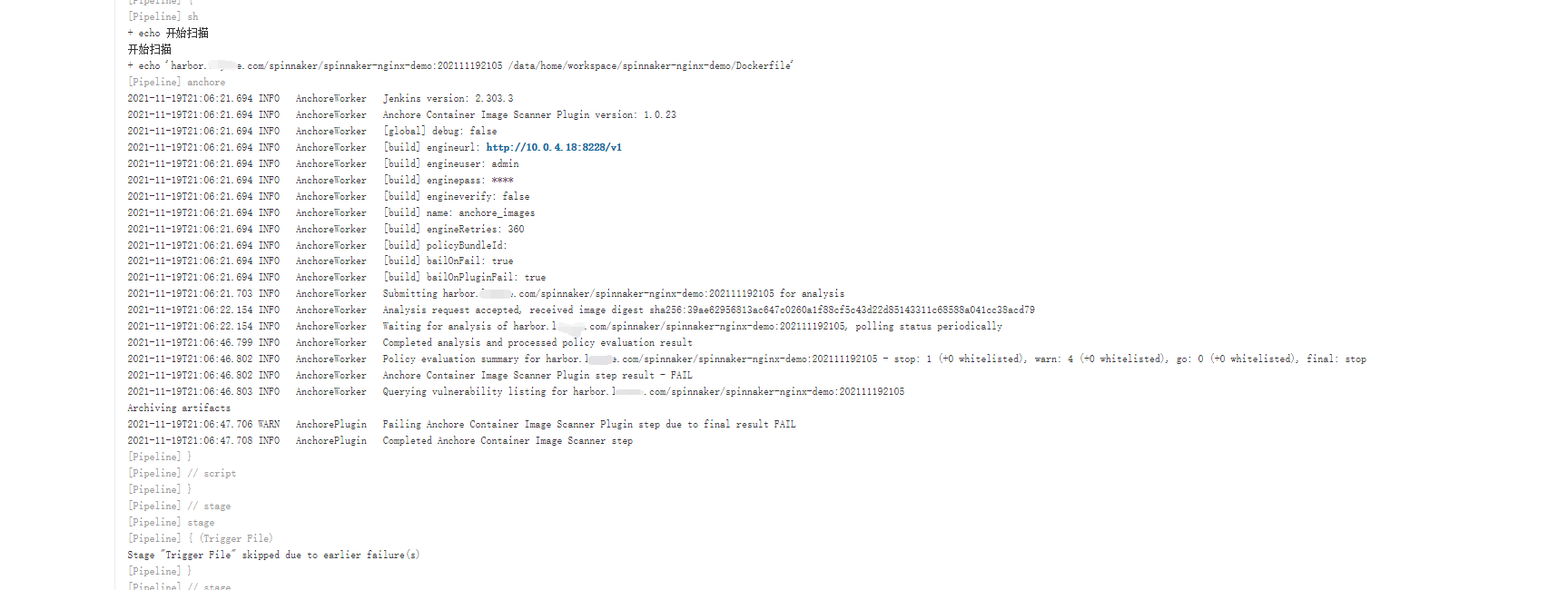

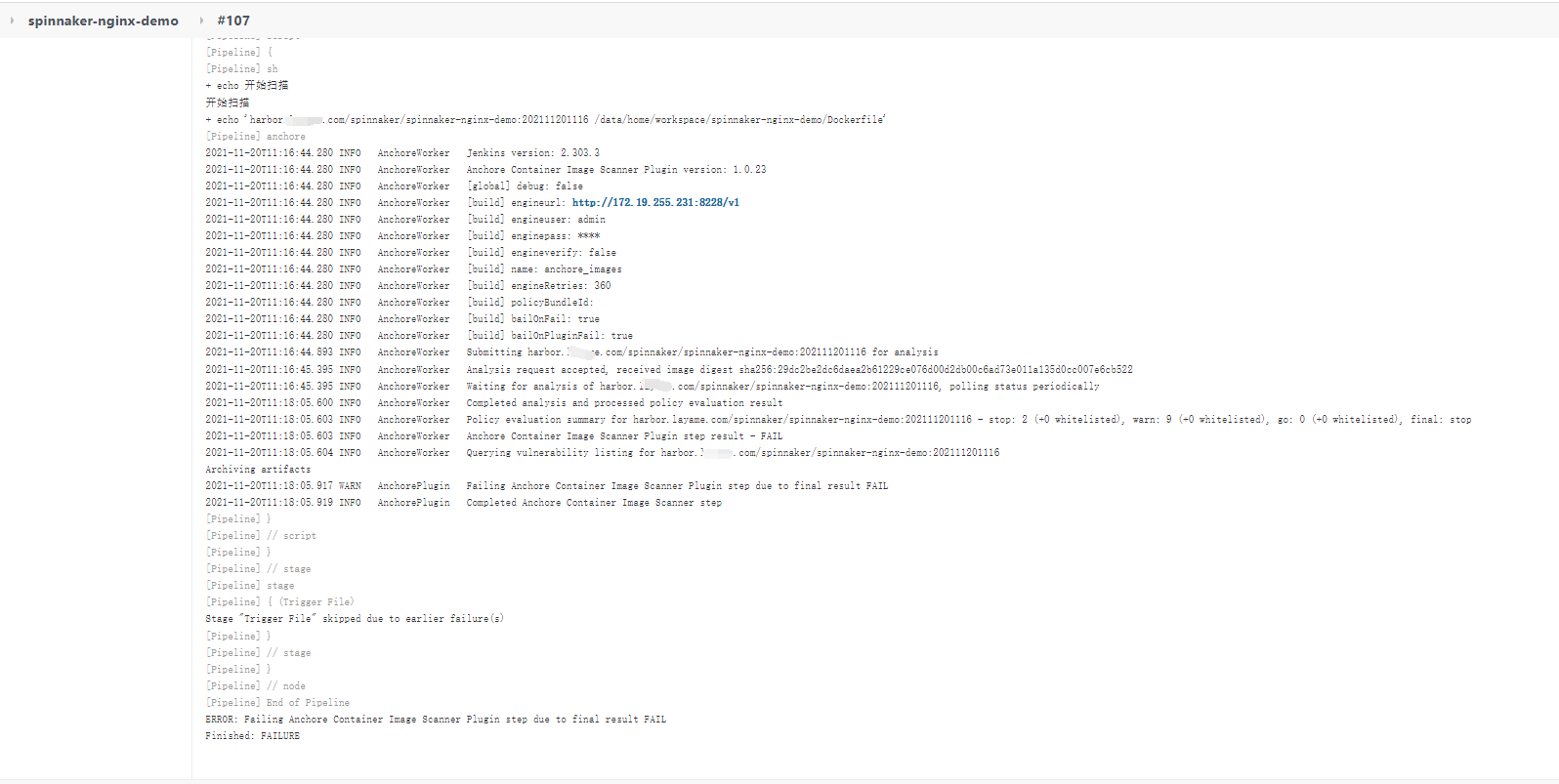

Run the Jenkins job for the spinnaker-nginx-demo pipeline

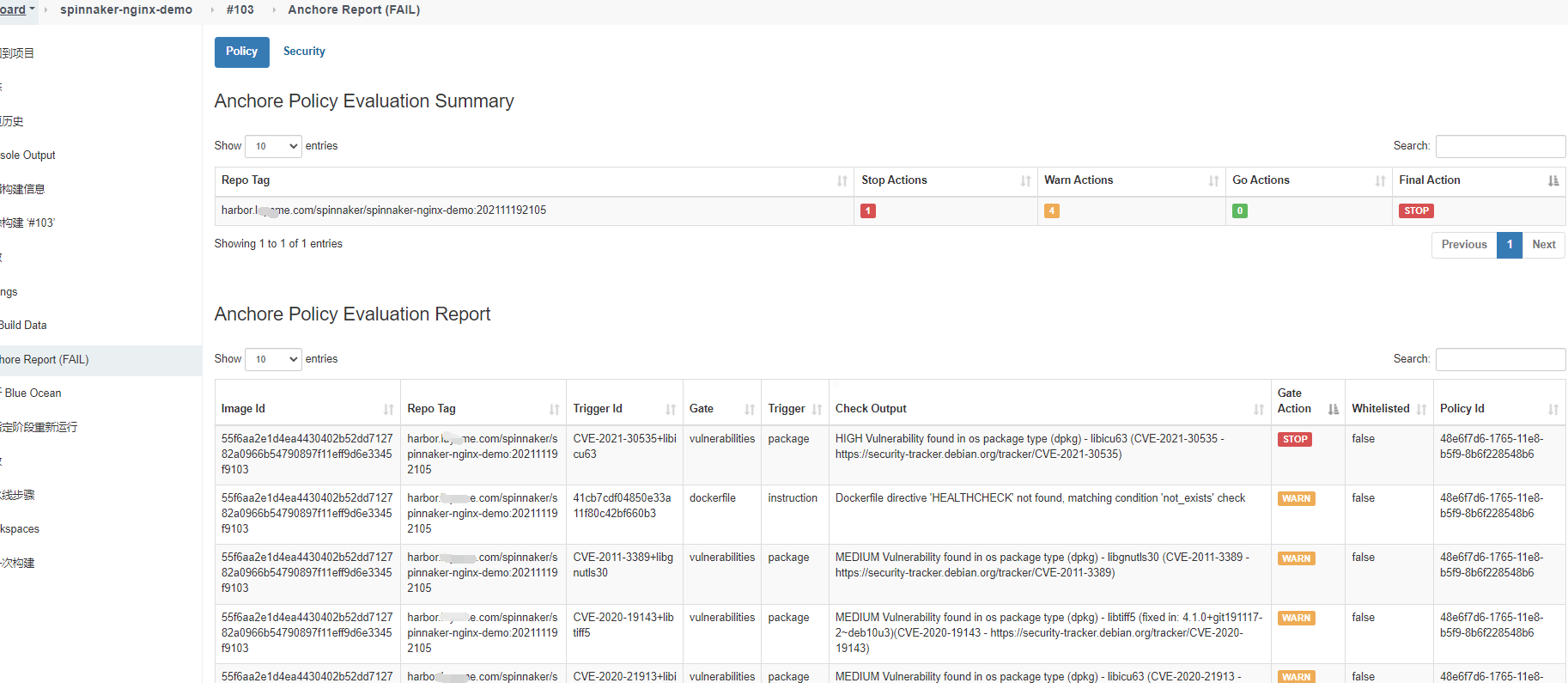

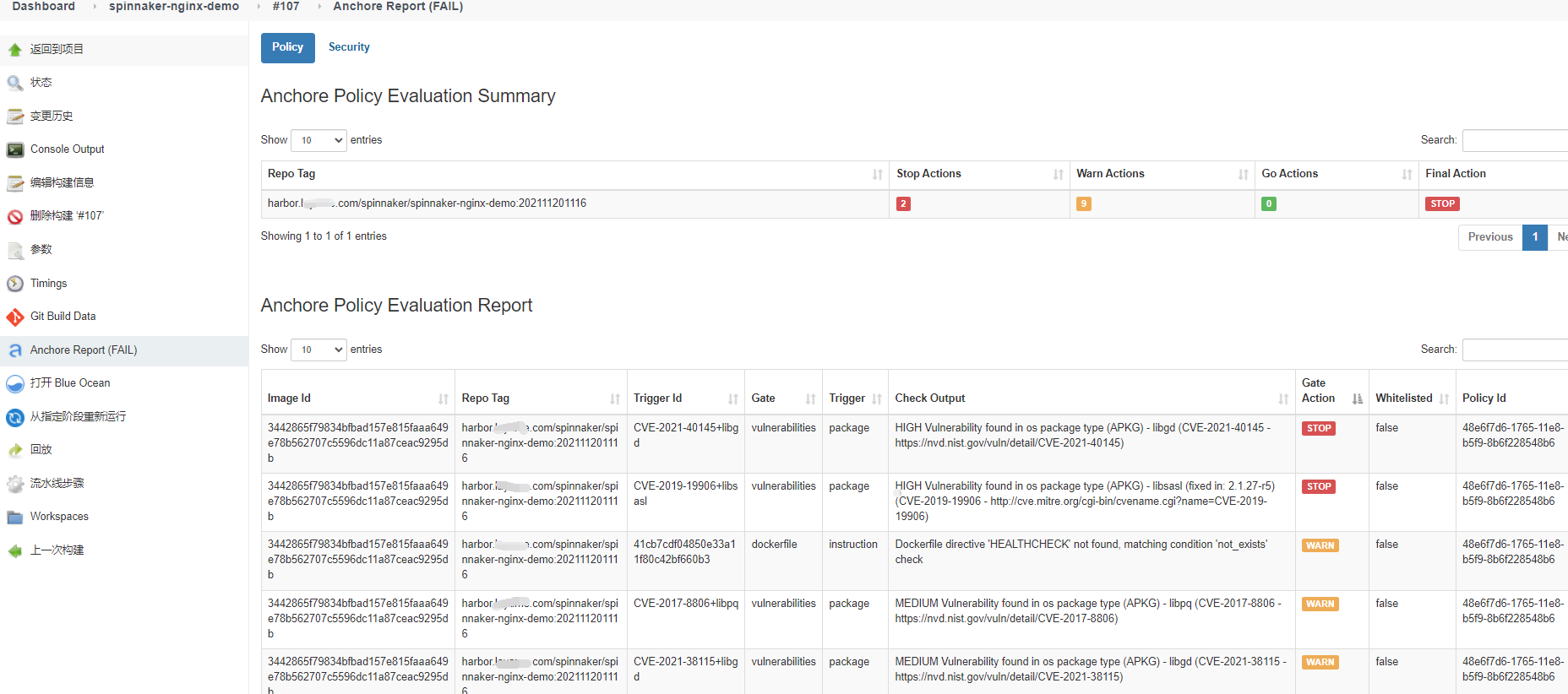

The pipeline job is again triggered by modifying a file in GitLab. Unfortunately, high-severity vulnerabilities were detected, so the scan result is FAIL. Still, the pipeline finally runs end to end:

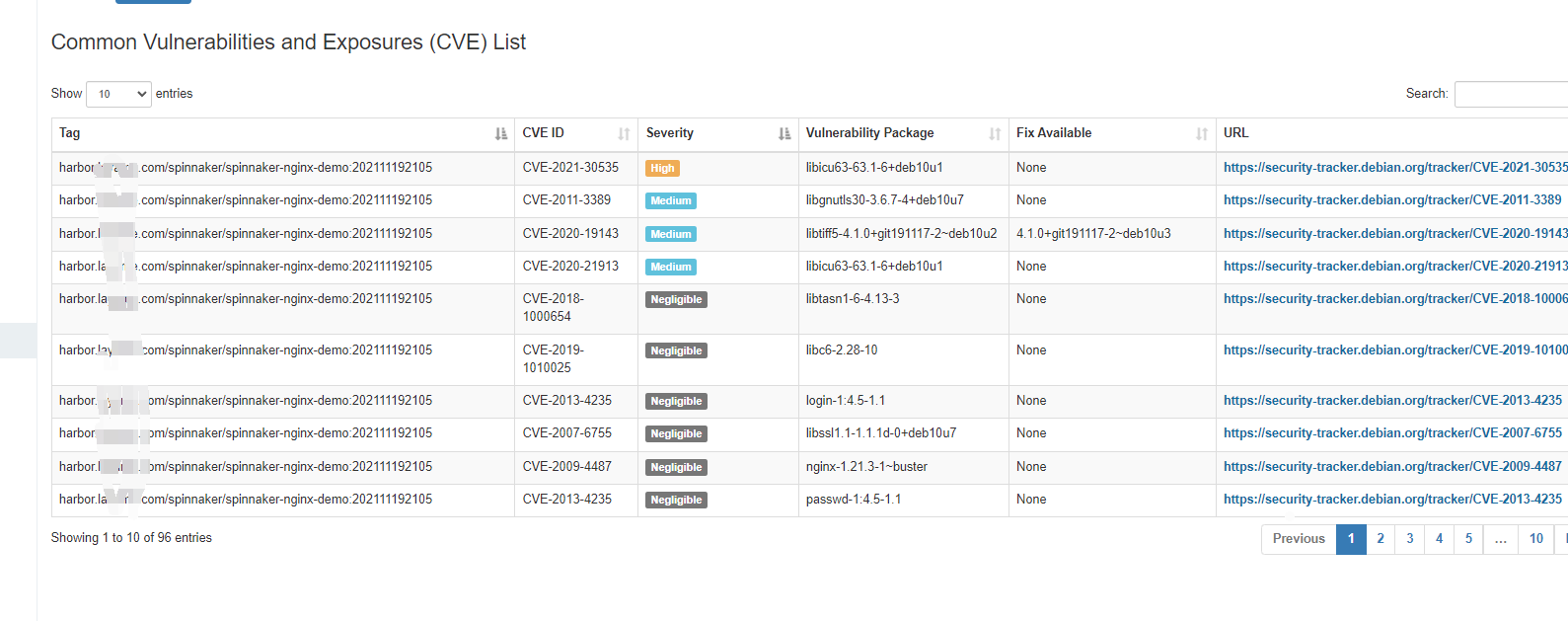

Compare Trivy with anchor engine

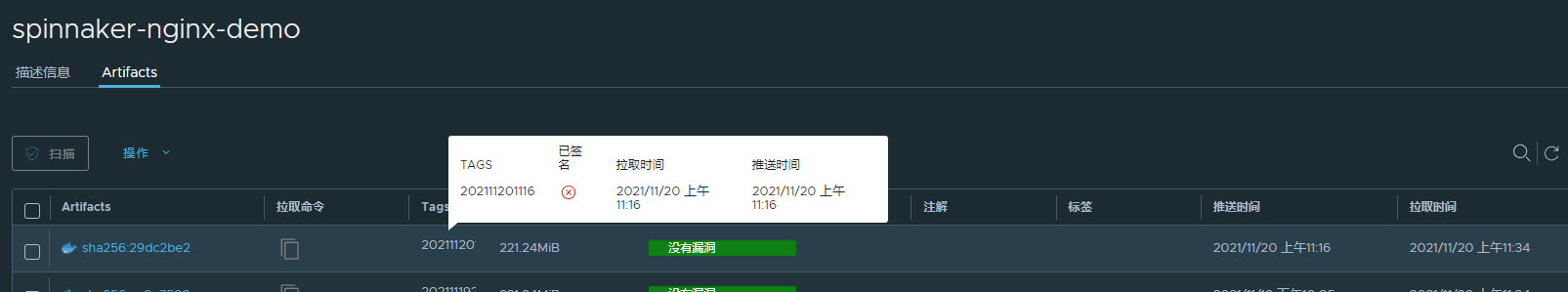

Compare the image of spinnaker nginx demo 107 product. The product label is harbor.xxxx.com/spinnaker/spinnaker-nginx-demo:202111201116:

Anchor engine report:

Why is there no vulnerability in harbor's trivy scanning?

Instantly fell into the obsessive-compulsive cycle to solve the loophole

To sum up:

- harbor customized image scanning plug-in tivy, well, you can also choose claim. It seems that you can also get through with anchor engine

- Anchor engine wants to add to the private warehouse. Remember to modify cluster.local for heml installation and assembly address. If it is a custom cluster

- Anchor engine scans more rigorously than trivy

- Be good at using the -- help command: anchor cli -- help