[OpenCV] Detailed explanation and principle analysis of the matchTemplate function parameters

matchTemplate

void matchTemplate(InputArray image, InputArray templ, OutputArray result, int method);

image: the input image to search in; it must be 8-bit (8U) or 32-bit floating-point (32F).

templ: the template image; it must have the same type as image and must not be larger than image.

result: the output matrix that holds the comparison results; single-channel 32F.

method: the comparison method to use.

1.result:

result is the result matrix. Assume the image to be matched is I with width and height (W, H), and the template image is T with width and height (w, h). The size of result is then (W-w+1, H-h+1): one score for every position at which the template fits entirely inside the image.
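The size rule can be written as a small helper. This is only a sketch of the arithmetic; the function name `resultSize` is hypothetical and is not part of OpenCV.

```cpp
#include <stdexcept>
#include <utility>

// Returns {width, height} of the result matrix for an image of size W x H
// and a template of size w x h. Hypothetical helper, not an OpenCV function.
std::pair<int, int> resultSize(int W, int H, int w, int h) {
    if (w <= 0 || h <= 0 || w > W || h > H) {
        throw std::invalid_argument("template must be non-empty and fit inside the image");
    }
    return {W - w + 1, H - h + 1};
}
```

For example, a 640x480 image and a 100x50 template give a 541x431 result, and a template exactly as large as the image gives a 1x1 result.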

2.method

TM_CCORR:

**Cross correlation algorithm** Cross correlation is a classical statistical matching algorithm. It measures the degree of matching by computing the cross-correlation between the template image and the image to be matched. The position of the search window with the maximum cross-correlation is taken as the position of the template in the image to be matched.

Characteristics

Intended for distinguishing areas that differ in intensity or color. Because the score is not normalized, bright areas can produce large values even when they do not resemble the template.
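To make the score concrete, here is a minimal sketch of the raw cross-correlation for one window position, written in plain C++ on a small grayscale array. The `Image` alias and the name `ccorrAt` are assumptions for illustration; this is not how OpenCV implements the method internally.

```cpp
#include <cstddef>
#include <vector>

// Row-major grayscale image, indexed as img[y][x]. Illustrative type only.
using Image = std::vector<std::vector<float>>;

// Raw cross-correlation of template t with the window of img whose top-left
// corner is (x, y): the sum over the window of T * I.
// The caller must make sure the window lies inside the image.
float ccorrAt(const Image& img, const Image& t, std::size_t x, std::size_t y) {
    float sum = 0.0f;
    for (std::size_t ty = 0; ty < t.size(); ++ty) {
        for (std::size_t tx = 0; tx < t[ty].size(); ++tx) {
            sum += t[ty][tx] * img[y + ty][x + tx];
        }
    }
    return sum;
}
```

With the one-row image {1, 2, 1, 9, 9, 9} and the template {1, 2, 1}, the exact match at x = 0 scores 6 while the uniformly bright window at x = 3 scores 36, which shows how an un-normalized score can favor bright regions.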

TM_SQDIFF:

Sum of squared differences (SSD), also known as the sum of squared errors.

Advantages

- The idea is simple and easy to understand: sum the squared differences between the gray values at corresponding positions of the sub-image and the template image.

- The computation is straightforward and the matching accuracy is high.

Disadvantages

- The computational cost is high.

- Very sensitive to noise.
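A brute-force sketch of SSD matching, assuming a non-empty rectangular grayscale image stored as nested vectors. The names `sqdiffAt` and `bestSqdiffMatch` are hypothetical; the point is only to show that the best match is the position with the smallest sum.

```cpp
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Row-major grayscale image, indexed as img[y][x]. Illustrative type only.
using Image = std::vector<std::vector<float>>;

// Sum of squared differences between template t and the window of img
// whose top-left corner is (x, y).
float sqdiffAt(const Image& img, const Image& t, std::size_t x, std::size_t y) {
    float sum = 0.0f;
    for (std::size_t ty = 0; ty < t.size(); ++ty) {
        for (std::size_t tx = 0; tx < t[ty].size(); ++tx) {
            const float d = t[ty][tx] - img[y + ty][x + tx];
            sum += d * d;
        }
    }
    return sum;
}

// Slides the template over every valid position and returns the {x, y}
// with the smallest SSD. Assumes both images are non-empty and rectangular.
std::pair<std::size_t, std::size_t> bestSqdiffMatch(const Image& img, const Image& t) {
    std::pair<std::size_t, std::size_t> best{0, 0};
    float bestScore = std::numeric_limits<float>::max();
    for (std::size_t y = 0; y + t.size() <= img.size(); ++y) {
        for (std::size_t x = 0; x + t[0].size() <= img[0].size(); ++x) {
            const float score = sqdiffAt(img, t, x, y);
            if (score < bestScore) {
                bestScore = score;
                best = {x, y};
            }
        }
    }
    return best;
}
```

The nested loops visit every window and every pixel inside it, which is where the high computational cost comes from.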

TM_CCOEFF

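Mean-shifted cross-correlation: the mean of the template and the mean of each image window are subtracted before the two are correlated. Its normalized form, TM_CCOEFF_NORMED, is the Pearson correlation coefficient. Despite the similar name, this is not the MeanShift tracking algorithm.

Advantages

Simple and easy to implement; subtracting the means makes the score insensitive to a uniform brightness offset.

Disadvantages

The score is not normalized, so it still scales with contrast. As with the other methods, matching often fails when the target changes size or shape, and a fully occluded target cannot be found.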
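"Mean shifted" here can be read as "with the mean subtracted". A minimal sketch for a single window, assuming the template and the window have already been flattened into equally sized vectors; `patchMean` and `ccoeff` are hypothetical names.

```cpp
#include <cstddef>
#include <vector>

// Arithmetic mean of a flattened patch (assumed non-empty).
float patchMean(const std::vector<float>& v) {
    float sum = 0.0f;
    for (float x : v) sum += x;
    return sum / static_cast<float>(v.size());
}

// Mean-subtracted cross-correlation for one window: each patch has its own
// mean removed before the products are summed.
// Assumes templ and window have the same size.
float ccoeff(const std::vector<float>& templ, const std::vector<float>& window) {
    const float mt = patchMean(templ);
    const float mw = patchMean(window);
    float sum = 0.0f;
    for (std::size_t i = 0; i < templ.size(); ++i) {
        sum += (templ[i] - mt) * (window[i] - mw);
    }
    return sum;
}
```

For the template {1, 2, 3}, the windows {1, 2, 3} and {11, 12, 13} both score 2, so a constant brightness offset does not change the result, while a flat window such as {5, 5, 5} scores 0.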

TM_CCORR_NORMED

Normalized cross correlation (NCC) matching algorithm.

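The score is invariant to a uniform scaling of intensity (a change of gain or contrast). Invariance to a constant brightness offset additionally requires subtracting the means, as TM_CCOEFF_NORMED does.

The disadvantage of this algorithm is that every pixel in the window takes part in the computation, so it is relatively slow.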
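The invariance can be checked with a small sketch for a single window, again on flattened patches. The name `ccorrNormed` is hypothetical, and returning 0 for an all-zero patch is a choice made for this example, not a statement about OpenCV's behavior.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Normalized cross-correlation for one window:
// sum(T * I) / sqrt(sum(T^2) * sum(I^2)).
// Assumes templ and window have the same size.
float ccorrNormed(const std::vector<float>& templ, const std::vector<float>& window) {
    float dot = 0.0f, tt = 0.0f, ww = 0.0f;
    for (std::size_t i = 0; i < templ.size(); ++i) {
        dot += templ[i] * window[i];
        tt += templ[i] * templ[i];
        ww += window[i] * window[i];
    }
    const float denom = std::sqrt(tt * ww);
    // Guard against division by zero for all-zero patches (example-only choice).
    return denom > 0.0f ? dot / denom : 0.0f;
}
```

For the template {1, 2, 1}, the window {2, 4, 2} (same pattern, doubled intensity) scores 1, whereas {11, 12, 11} (same pattern plus an offset of 10) scores below 1.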

Other normalization algorithms

TM_SQDIFF_NORMED and TM_CCOEFF_NORMED
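For reference, the two scores can be written as follows. This is how I recall the definitions from the OpenCV documentation, so check them against the reference for your version. Here T' and I' are assumed to denote the template and the image window with their respective means subtracted, and the sums run over all template pixels (x', y').

```latex
R_{\mathrm{SQDIFF\_NORMED}}(x,y) =
  \frac{\sum_{x',y'} \bigl(T(x',y') - I(x+x',\,y+y')\bigr)^2}
       {\sqrt{\sum_{x',y'} T(x',y')^2 \cdot \sum_{x',y'} I(x+x',\,y+y')^2}}

R_{\mathrm{CCOEFF\_NORMED}}(x,y) =
  \frac{\sum_{x',y'} T'(x',y')\, I'(x+x',\,y+y')}
       {\sqrt{\sum_{x',y'} T'(x',y')^2 \cdot \sum_{x',y'} I'(x+x',\,y+y')^2}}
```

Both divide the un-normalized score by the same kind of energy term, which makes scores from different windows comparable.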

Experimental comparison

Use a trackbar to switch between the different methods

const char* trackbar_label = "Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED";

createTrackbar(trackbar_label, image_window, &match_method, max_Trackbar, MatchingMethod);

MatchingMethod(0, 0);

template matching

void MatchingMethod(int, void*)

{

Mat img_display;

img.copyTo(img_display);

int result_cols = img.cols - templ.cols + 1;

int result_rows = img.rows - templ.rows + 1;

result.create(result_rows, result_cols, CV_32FC1);

matchTemplate(img, templ, result, match_method);

normalize(result, result, 0, 1, NORM_MINMAX, -1, Mat());//Rescale the scores to [0, 1] so that the result map can be displayed with imshow

double minVal; double maxVal; Point minLoc; Point maxLoc;

Point matchLoc;

minMaxLoc(result, &minVal, &maxVal, &minLoc, &maxLoc, Mat());//The best match is the minimum for the SQDIFF methods and the maximum for all others

if (match_method == TM_SQDIFF || match_method == TM_SQDIFF_NORMED)

{

matchLoc = minLoc;

}

else

{

matchLoc = maxLoc;

}

rectangle(img_display, matchLoc, Point(matchLoc.x + templ.cols, matchLoc.y + templ.rows), Scalar::all(0), 2, 8, 0);//draw rectangle

// rectangle(result, matchLoc, Point(matchLoc.x + templ.cols, matchLoc.y + templ.rows), Scalar::all(0), 2, 8, 0);

imshow(image_window, img_display);

imshow(result_window, result);

imshow("template", templ);

return;

}
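The effect of the min-max rescaling used in the function above can be sketched in plain C++. The helper name `minMaxNormalize` is hypothetical, and mapping a constant input to all zeros is a choice made for this example rather than a claim about what OpenCV does in that case.

```cpp
#include <algorithm>
#include <vector>

// Linearly rescales the values so that the smallest becomes 0 and the
// largest becomes 1.
std::vector<float> minMaxNormalize(std::vector<float> v) {
    if (v.empty()) return v;
    const auto mm = std::minmax_element(v.begin(), v.end());
    const float lo = *mm.first;
    const float range = *mm.second - lo;
    for (float& x : v) {
        // Constant input: map everything to 0 (example-only choice).
        x = range > 0.0f ? (x - lo) / range : 0.0f;
    }
    return v;
}
```

Scores of {2, 4, 6} become {0, 0.5, 1}. Because the rescaling is relative, the best position always ends up at exactly 0 or 1 even when no window matches the template well, so the raw scores are the ones to inspect when a match has to be accepted or rejected.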

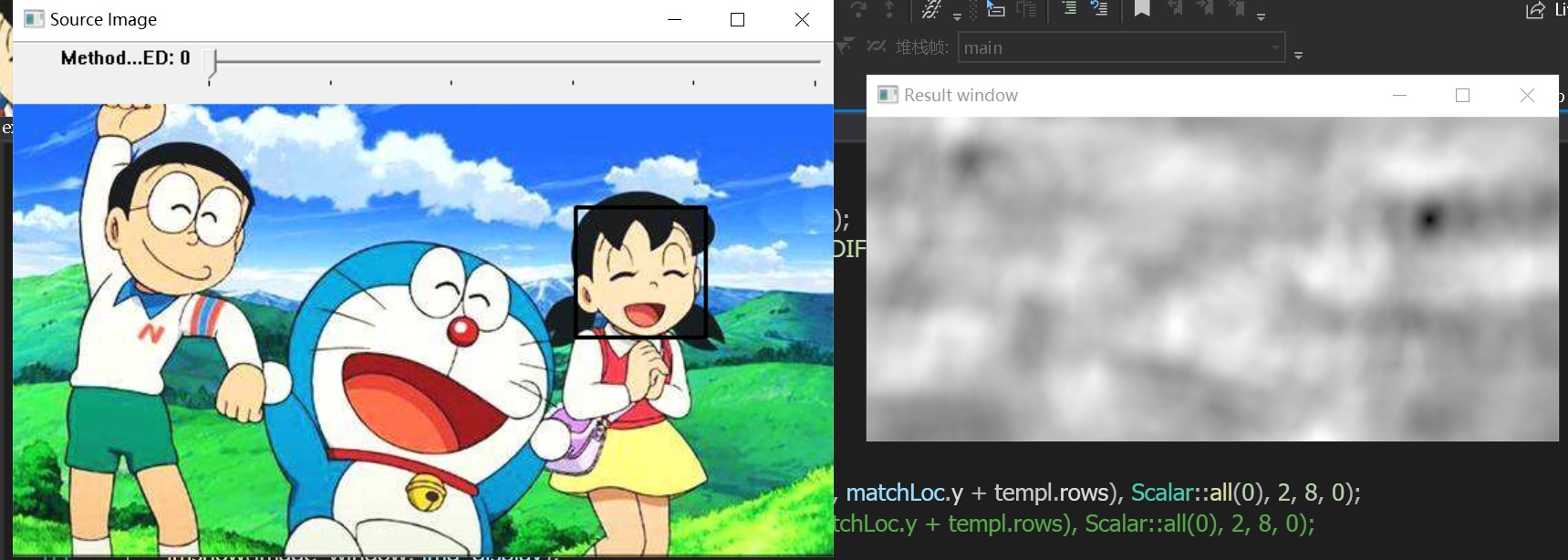

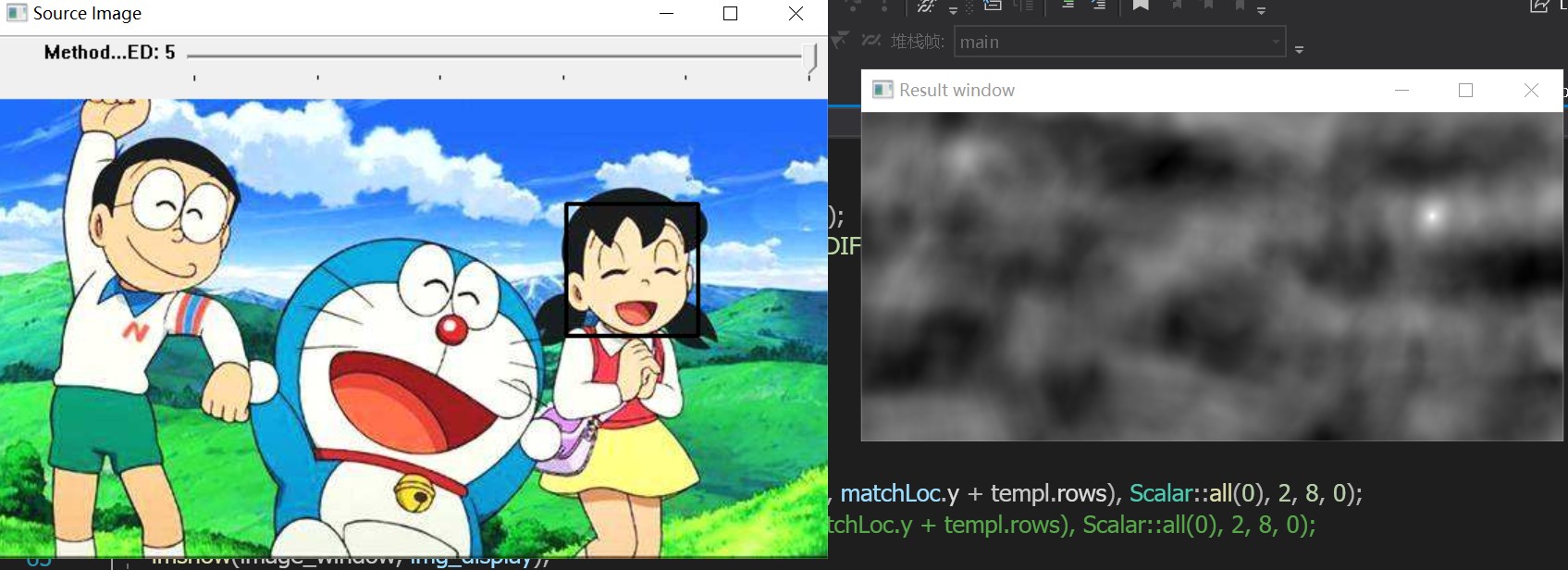

Experimental results

TM_SQDIFF (mode 0) compared with TM_CCOEFF_NORMED (mode 5):

TM_SQDIFF:

0 (the minimum) corresponds to the best-matching position;

TM_CCOEFF_NORMED:

1 (the maximum) corresponds to the best-matching position;

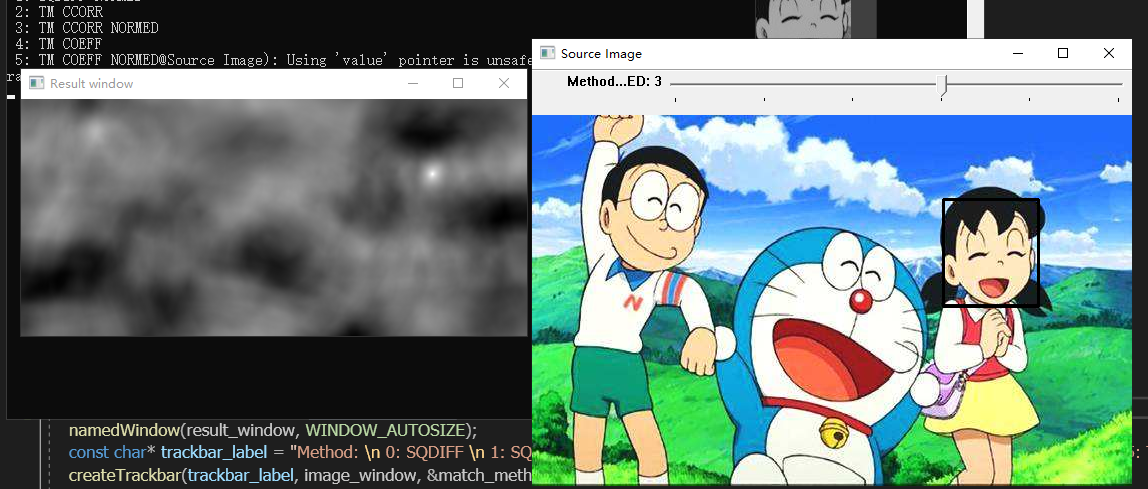

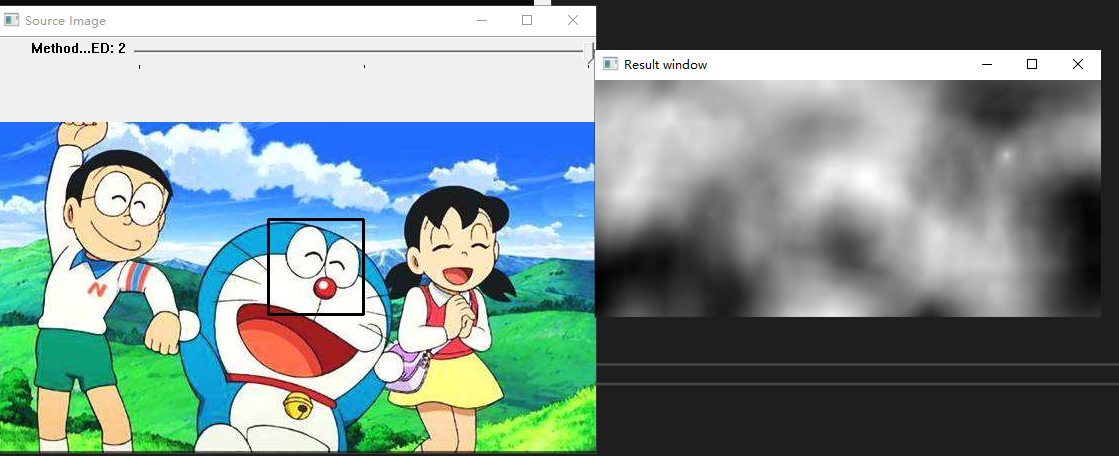

After the template color changes:

TM_CCORR (mode 2) discriminates poorly between colors, resulting in a wrong match:

TM_CCORR_NORMED (mode 3) matches correctly:

The matching scores differ between methods, but the correct region is generally recognized.

I also tested the influence of deformation on template matching using a deformed version of my own avatar, and the results were roughly the same as above.