This article analyzes Netty's core components in detail.

The bootstrap classes, Bootstrap and ServerBootstrap, are the entry points of Netty's client and server respectively, and configuring them is the first step in writing a Netty network program. They let us assemble Netty's core components like building blocks. When building a Netty server, we need to pay attention to three important steps:

- Configuring the thread pool

- Initializing the Channel

- Building the Handler chain

Detailed explanation of the scheduler

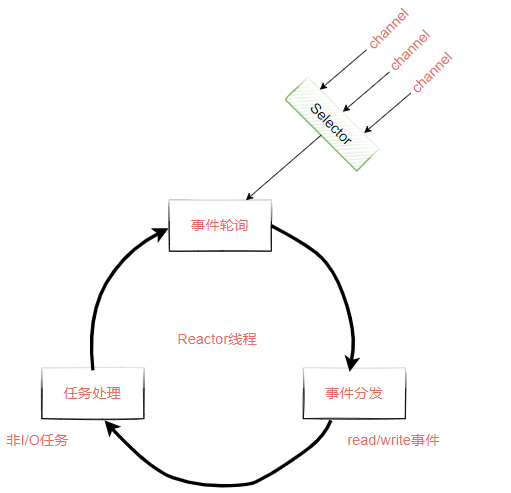

We discussed the Reactor pattern, the design pattern behind NIO multiplexing, earlier. Its main idea is to separate the responsibilities of accepting connections, dispatching events, and processing tasks, and to raise throughput by introducing multiple threads. There are three Reactor models:

- Single-threaded, single-Reactor model

- Multithreaded, single-Reactor model

- Multithreaded, multi-Reactor (master-slave) model

Netty makes it easy to implement all three threading models, and it recommends the master-slave multithreaded model, which can comfortably handle thousands of client connections. Under massive concurrent client requests, the master-slave model makes full use of multiple cores, and throughput can be raised further by increasing the number of SubReactor threads.

The Reactor model works in four steps, as shown in Figure 2-10:

- Connection registration: after a Channel is established, it is registered with the Selector in a Reactor thread.

- Event polling: the Selector polls the I/O events of all Channels registered with it.

- Event dispatching: each ready I/O event is dispatched to the corresponding processing thread.

- Task processing: the Reactor thread is also responsible for the non-I/O tasks in its task queue. Each Worker thread takes tasks from the task queue it maintains and executes them asynchronously.

<center>Figure 2-10 Reactor workflow</center>

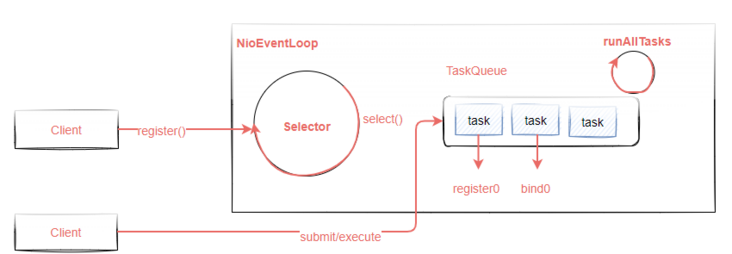
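To make the four steps concrete, here is a minimal single-threaded sketch written against plain `java.nio`, not Netty. It assumes a `Pipe` can stand in for a client connection so the example runs without a network; `MiniReactor` and its `log` list are hypothetical names used only for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Queue;

// Hypothetical sketch: one pass of a single-threaded Reactor loop
class MiniReactor {
    final List<String> log = new ArrayList<>();
    final Queue<Runnable> taskQueue = new ArrayDeque<>();

    void runOnce() {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open(); // assumption: the pipe plays the role of a client connection
            try {
                // 1. Registration: a channel must be non-blocking before it can be registered
                pipe.source().configureBlocking(false);
                pipe.source().register(selector, SelectionKey.OP_READ);

                // Simulate the peer sending data, and queue a non-I/O task
                pipe.sink().write(ByteBuffer.wrap("hello".getBytes(StandardCharsets.UTF_8)));
                taskQueue.add(() -> log.add("task"));

                // 2. Event polling: block until at least one registered channel is ready
                selector.select();

                // 3. Event dispatching: hand each ready key to the code for its event type
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    if (key.isReadable()) {
                        ByteBuffer buf = ByteBuffer.allocate(64);
                        ((Pipe.SourceChannel) key.channel()).read(buf);
                        buf.flip();
                        log.add("read:" + StandardCharsets.UTF_8.decode(buf));
                    }
                }

                // 4. Task processing: drain the task queue on the same thread
                Runnable task;
                while ((task = taskQueue.poll()) != null) {
                    task.run();
                }
            } finally {
                pipe.sink().close();
                pipe.source().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Calling `new MiniReactor().runOnce()` records the read event first and the queued task second, mirroring the order in which one loop iteration handles I/O and then tasks.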

EventLoop event loop

In Netty, the event processor of the Reactor model is implemented by EventLoop. Each EventLoop is bound to one thread and maintains a Selector and a taskQueue, which handle network I/O events and internal tasks respectively. Its working principle is shown in Figure 2-11.

<center>Figure 2-11 NioEventLoop principle</center>

Basic use of EventLoop

The following code demonstrates the two basic EventLoop operations: registering a channel with the Selector and submitting an ordinary task.

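```java
import io.netty.channel.EventLoop;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioSocketChannel;

public class EventLoopExample {

    public static void main(String[] args) throws Exception {
        // A group of two NioEventLoops, i.e. two threads
        EventLoopGroup group = new NioEventLoopGroup(2);

        System.out.println(group.next()); // the first NioEventLoop
        System.out.println(group.next()); // the second NioEventLoop
        System.out.println(group.next()); // only two exist, so selection wraps around to the first

        // Get an event loop and register a channel with its Selector
        EventLoop loop = group.next();
        NioSocketChannel channel = new NioSocketChannel();
        loop.register(channel).sync();

        // Submit an ordinary task; it runs on the event loop's own thread
        loop.submit(() -> System.out.println(Thread.currentThread().getName() + "-----")).sync();

        channel.close().sync();
        group.shutdownGracefully();
    }
}
```

The core process of EventLoop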

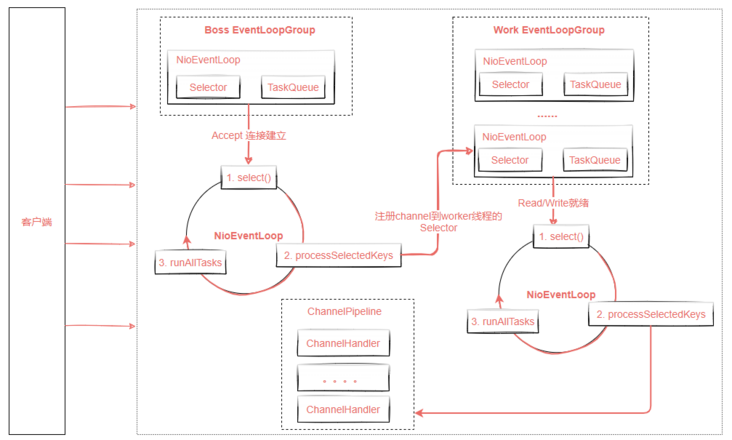

Having seen how EventLoop works, let's summarize it with an overall flow chart, as shown in Figure 2-12.

An EventLoop is the event processor of the Reactor model, and each EventLoop corresponds to one thread. Internally it maintains a Selector and a taskQueue for handling I/O events and internal tasks. How execution time is split between I/O events and internal tasks is tuned by ioRatio, which is the percentage of time spent on I/O. Tasks include ordinary tasks and scheduled (delayed) tasks whose deadline has arrived. Scheduled tasks are stored in a priority queue: before running tasks, the loop moves every due task from the priority queue into the taskQueue, and then executes all tasks in one pass.

<center>Figure 2-12 Working mechanism of EventLoop</center>
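The task side of that description can be sketched in a few lines. This is a simplified model, not Netty's code: `MiniTaskLoop` is a hypothetical class, it runs on the caller's thread, and it takes the current time as a parameter so the behavior is deterministic. It shows due scheduled tasks being moved from a priority queue into the taskQueue before everything is run in one pass.

```java
import java.util.ArrayDeque;
import java.util.PriorityQueue;
import java.util.Queue;

// Hypothetical sketch of an event loop's task handling (no Selector, no thread)
class MiniTaskLoop {
    // A delayed task ordered by its deadline on a caller-supplied clock
    static final class ScheduledTask implements Comparable<ScheduledTask> {
        final long deadline;
        final Runnable task;

        ScheduledTask(long deadline, Runnable task) {
            this.deadline = deadline;
            this.task = task;
        }

        @Override
        public int compareTo(ScheduledTask other) {
            return Long.compare(deadline, other.deadline);
        }
    }

    final Queue<Runnable> taskQueue = new ArrayDeque<>();
    final PriorityQueue<ScheduledTask> scheduledQueue = new PriorityQueue<>();

    void execute(Runnable task) {
        taskQueue.add(task);
    }

    void schedule(long deadline, Runnable task) {
        scheduledQueue.add(new ScheduledTask(deadline, task));
    }

    // Move every scheduled task whose deadline has passed into taskQueue
    private void fetchDueScheduledTasks(long now) {
        ScheduledTask head;
        while ((head = scheduledQueue.peek()) != null && head.deadline <= now) {
            taskQueue.add(scheduledQueue.poll().task);
        }
    }

    // Run all ordinary tasks plus the scheduled tasks that are due; returns how many ran
    int runAllTasks(long now) {
        fetchDueScheduledTasks(now);
        int ran = 0;
        Runnable task;
        while ((task = taskQueue.poll()) != null) {
            task.run();
            ran++;
        }
        return ran;
    }
}
```

A task scheduled for a later deadline simply stays in the priority queue until a subsequent `runAllTasks` call with a later `now`.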

How EventLoop implements the different Reactor models

Single-threaded mode

```java
EventLoopGroup group = new NioEventLoopGroup(1);
ServerBootstrap b = new ServerBootstrap();
b.group(group);
```

Multithreaded mode

```java
// With no argument, the thread count defaults to twice the number of CPU cores
EventLoopGroup group = new NioEventLoopGroup();
ServerBootstrap b = new ServerBootstrap();
b.group(group);
```

Master-slave multithreaded mode

```java
// boss: one thread accepts connections; work: handles I/O on the accepted connections
EventLoopGroup boss = new NioEventLoopGroup(1);
EventLoopGroup work = new NioEventLoopGroup();
ServerBootstrap b = new ServerBootstrap();
b.group(boss, work);
```
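Whichever mode is used, the group hands out its event loops through `next()`, which is why the EventLoopExample output wraps around to the first loop. The sketch below is a simplified round-robin chooser, loosely modeled on the idea in Netty's `DefaultEventExecutorChooserFactory` (a cheap bit mask when the count is a power of two, modulo otherwise); `RoundRobinChooser` is a hypothetical class, not Netty's API.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of round-robin selection over a fixed array of event loops
class RoundRobinChooser<T> {
    private final T[] children;
    private final AtomicInteger idx = new AtomicInteger();
    private final boolean powerOfTwo;

    RoundRobinChooser(T[] children) {
        if (children.length == 0) {
            throw new IllegalArgumentException("children must not be empty");
        }
        this.children = children;
        this.powerOfTwo = (children.length & (children.length - 1)) == 0;
    }

    T next() {
        int i = idx.getAndIncrement();
        // Masking is cheaper than modulo for power-of-two sizes; Math.abs guards
        // against the negative values the counter reaches after it overflows
        return powerOfTwo
                ? children[i & (children.length - 1)]
                : children[Math.abs(i % children.length)];
    }
}
```

With two loops, successive calls return loop 0, loop 1, loop 0, matching the wrap-around the example prints.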

Implementation principle of EventLoop

EventLoopGroup initialization: in MultithreadEventExecutorGroup.java, an EventExecutor array is built according to the configured nThreads (abridged excerpt):

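```java
protected MultithreadEventExecutorGroup(int nThreads, Executor executor,
                                        EventExecutorChooserFactory chooserFactory, Object... args) {
    checkPositive(nThreads, "nThreads");
    if (executor == null) {
        executor = new ThreadPerTaskExecutor(newDefaultThreadFactory());
    }
    // One EventExecutor (event loop) per thread
    children = new EventExecutor[nThreads];
    for (int i = 0; i < nThreads; i ++) {
        boolean success = false;
        try {
            // newChild is implemented by subclasses; NioEventLoopGroup creates a NioEventLoop
            children[i] = newChild(executor, args);
            // ... (rest of the try block, catch and finally omitted)
        }
    }
    // ...
}
```

Registering a channel with the multiplexer starts in MultithreadEventLoopGroup.register(), which picks an EventLoop with next() and then follows this call chain: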

SingleThreadEventLoop.register -> AbstractUnsafe.register -> AbstractUnsafe.register0 -> AbstractNioChannel.doRegister()

As the chain shows, the channel is ultimately registered with the unwrapped Selector (the raw JDK multiplexer) of one EventLoop:

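```java
protected void doRegister() throws Exception {
    boolean selected = false;
    for (;;) {
        try {
            // Register the JDK channel with this EventLoop's raw Selector. The interest set is 0 for now
            // (read/accept interest is added later), and the Netty channel itself is the attachment
            selectionKey = javaChannel().register(eventLoop().unwrappedSelector(), 0, this);
            return;
        } catch (CancelledKeyException e) {
            // ... (retry handling omitted)
        }
    }
}
```

Events are then processed by the run() method of NioEventLoop, which loops continuously: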

```java
protected void run() {
    int selectCnt = 0;
    for (;;) {
        try {
            int strategy;
            try {
                // Compute the strategy: if the task queue is non-empty, do a non-blocking selectNow();
                // otherwise return SELECT so the loop may block in select()
                strategy = selectStrategy.calculateStrategy(selectNowSupplier, hasTasks());
                switch (strategy) {
                case SelectStrategy.CONTINUE:
                    continue;
                case SelectStrategy.BUSY_WAIT:
                    // fall-through to SELECT since the busy-wait is not supported with NIO
                case SelectStrategy.SELECT:
                    long curDeadlineNanos = nextScheduledTaskDeadlineNanos();
                    if (curDeadlineNanos == -1L) {
                        curDeadlineNanos = NONE; // nothing on the calendar
                    }
                    nextWakeupNanos.set(curDeadlineNanos);
                    try {
                        if (!hasTasks()) {
                            // The task queue is empty: call select() to wait for ready events
                            strategy = select(curDeadlineNanos);
                        }
                    } finally {
                        nextWakeupNanos.lazySet(AWAKE);
                    }
                default:
                }
            } catch (IOException e) {
                // ... (selector rebuild and error handling omitted)
                continue;
            }

            selectCnt++;
            cancelledKeys = 0;
            needsToSelectAgain = false;
            // ioRatio sets the share of loop time spent on I/O events versus internal tasks:
            // the larger ioRatio, the larger the share spent processing I/O events
            final int ioRatio = this.ioRatio;
            boolean ranTasks;
            if (ioRatio == 100) {
                try {
                    if (strategy > 0) {
                        // Process the ready I/O events
                        processSelectedKeys();
                    }
                } finally {
                    // Ensure the queued tasks are run on every iteration
                    ranTasks = runAllTasks();
                }
            } else if (strategy > 0) {
                final long ioStartTime = System.nanoTime();
                try {
                    processSelectedKeys();
                } finally {
                    // Ensure we always run tasks.
                    final long ioTime = System.nanoTime() - ioStartTime;
                    ranTasks = runAllTasks(ioTime * (100 - ioRatio) / ioRatio);
                }
            } else {
                ranTasks = runAllTasks(0); // This will run the minimum number of tasks
            }

            if (ranTasks || strategy > 0) {
                if (selectCnt > MIN_PREMATURE_SELECTOR_RETURNS && logger.isDebugEnabled()) {
                    logger.debug("Selector.select() returned prematurely {} times in a row for Selector {}.",
                            selectCnt - 1, selector);
                }
                selectCnt = 0;
            } else if (unexpectedSelectorWakeup(selectCnt)) { // Unexpected wakeup (unusual case)
                selectCnt = 0;
            }
        } catch (Throwable t) {
            // ... (omitted)
        }
    }
}
```
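The ioRatio branch reduces to one line of arithmetic: after an I/O pass that took `ioTime`, tasks get a budget of `ioTime * (100 - ioRatio) / ioRatio`. The helper below isolates that formula so it can be checked with numbers; `IoRatioBudget` is a hypothetical name, and returning `Long.MAX_VALUE` for ioRatio 100 is just a stand-in for the unbounded `runAllTasks()` call in that branch.

```java
// Hypothetical helper reproducing the task-time budget computed in NioEventLoop.run()
class IoRatioBudget {
    static long taskBudgetNanos(long ioTimeNanos, int ioRatio) {
        if (ioRatio <= 0 || ioRatio > 100) {
            throw new IllegalArgumentException("ioRatio must be in 1..100");
        }
        if (ioRatio == 100) {
            // run() calls runAllTasks() without a time limit in this case
            return Long.MAX_VALUE;
        }
        // Same integer arithmetic as the excerpt: ioTime * (100 - ioRatio) / ioRatio
        return ioTimeNanos * (100 - ioRatio) / ioRatio;
    }
}
```

For an I/O pass of 10 ms, ioRatio 50 (Netty's default) gives tasks 10 ms, ioRatio 80 gives 2.5 ms, and ioRatio 20 gives 40 ms: raising ioRatio shifts time toward I/O.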

Pipeline coordination in the service orchestration layer

EventLoop handles task scheduling and listens for I/O events and signal events. When an event arrives, something must respond to the event and its data; that work is done by the ChannelHandlers defined in the ChannelPipeline, which together form the core of Netty's service orchestration layer.

In the following code, we add two inbound handlers, h1 and h2, to process data read from the client:

ServerBootstrap bootstrap = new ServerBootstrap();

bootstrap.group(bossGroup, workerGroup)

//Configure the channel of the Server, which is equivalent to the ServerSocketChannel in NIO

.channel(NioServerSocketChannel.class)

//childHandler indicates that a processor is configured for those worker threads,

// This is what NIO said above. It abstracts the specific logic for processing business and puts it into the Handler

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

// socketChannel.pipeline().addLast(new NormalMessageHandler());

socketChannel.pipeline().addLast("h1",new ChannelInboundHandlerAdapter(){

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

System.out.println("handler-01");

super.channelRead(ctx, msg);

}

}).addLast("h2",new ChannelInboundHandlerAdapter(){

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

System.out.println("handler-02");

super.channelRead(ctx, msg);

}

});

}

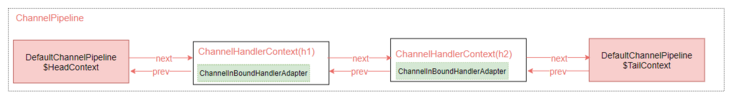

});The above code constructs a ChannelPipeline and obtains the structure shown in Figure 2-13. Each Channel will be bound with a ChannelPipeline. A ChannelPipeline contains multiple channelhandlers, which will be packaged as ChannelHandlerContext and added to the two-way linked list constructed by the Pipeline.

ChannelHandlerContext holds the context of a ChannelHandler and covers every event in the handler's life cycle, such as connect, bind, read, and write. The advantage of this design is that the generic logic that runs before and after each ChannelHandler passes data along can live in ChannelHandlerContext rather than in every handler.

<center>Figure 2-13 ChannelPipeline structure</center>
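The linked-list structure can be illustrated with a small model. This is a hypothetical sketch, not Netty's `DefaultChannelPipeline`: `MiniPipeline` keeps two sentinel nodes in the role of the head and tail contexts, and `addLast` links each new context just before the tail, so the list can be walked in both directions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a pipeline as a doubly linked list of contexts
class MiniPipeline {
    // Each handler is wrapped in a context node that links to its neighbours
    static final class Context {
        final String name;
        Context prev;
        Context next;

        Context(String name) {
            this.name = name;
        }
    }

    // Sentinel nodes playing the role of the head and tail contexts
    final Context head = new Context("head");
    final Context tail = new Context("tail");

    MiniPipeline() {
        head.next = tail;
        tail.prev = head;
    }

    // addLast links the new context just before the tail sentinel
    MiniPipeline addLast(String name) {
        Context ctx = new Context(name);
        Context last = tail.prev;
        ctx.prev = last;
        ctx.next = tail;
        last.next = ctx;
        tail.prev = ctx;
        return this;
    }

    // The direction inbound events travel
    List<String> headToTail() {
        List<String> names = new ArrayList<>();
        for (Context c = head; c != null; c = c.next) {
            names.add(c.name);
        }
        return names;
    }

    // The direction outbound events travel
    List<String> tailToHead() {
        List<String> names = new ArrayList<>();
        for (Context c = tail; c != null; c = c.prev) {
            names.add(c.name);
        }
        return names;
    }
}
```

Adding h1 and then h2 yields head, h1, h2, tail, the same shape as the pipeline built in the example above.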

Outbound and inbound operations

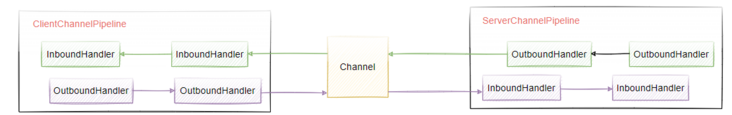

Depending on the direction in which network data flows, the handlers in a ChannelPipeline fall into two kinds: inbound handlers (ChannelInboundHandler) and outbound handlers (ChannelOutboundHandler). As shown in Figure 2-14, when the client sends data to the server, the data leaves the client, so from the client's point of view this is outbound; the same data arrives at the server, so from the server's point of view it is inbound.

<center>Figure 2-14 Relationship between inbound and outbound</center>

ChannelHandler event trigger mechanism

When a Channel triggers an I/O event, the event is processed by Handlers. ChannelHandler is designed around the life cycle of I/O events, such as connection establishment, data reading, data writing, and connection teardown.

ChannelHandler has two important sub-interfaces, which intercept the I/O events of inbound and outbound data respectively:

- ChannelInboundHandler

- ChannelOutboundHandler

The Adapter classes shown in Figure 2-15 provide default implementations. ChannelHandler declares many methods, but a custom handler usually needs to override only one or two of them; by extending an Adapter class, whose default methods simply pass each event on to the next handler, we override only what we need. Classes ending in Adapter play the same role in many other frameworks. As a result, Netty code rarely implements the ChannelHandler interfaces directly and usually extends an Adapter class instead.

<img src="https://mic-blob-bucket.oss-cn-beijing.aliyuncs.com/202111090025881.png" alt="image-20210816200206761" style="zoom:67%;" />

<center>Figure 2-15 ChannelHandler class diagram</center>
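The adapter idea itself is plain Java. The sketch below uses a hypothetical, heavily reduced callback interface (`InboundCallbacks` is not a Netty type) and records calls in a list: the adapter gives every callback a default "pass it on" body, and the user handler overrides a single method, much as a Netty handler extends ChannelInboundHandlerAdapter and overrides only channelRead.

```java
import java.util.List;

// Hypothetical, reduced stand-in for a handler interface with several callbacks
interface InboundCallbacks {
    void channelActive(List<String> log);

    void channelRead(List<String> log, Object msg);

    void channelInactive(List<String> log);
}

// The adapter gives every callback a default body that just records "forward",
// standing in for passing the event on to the next handler
class InboundCallbacksAdapter implements InboundCallbacks {
    @Override
    public void channelActive(List<String> log) {
        log.add("forward:channelActive");
    }

    @Override
    public void channelRead(List<String> log, Object msg) {
        log.add("forward:channelRead");
    }

    @Override
    public void channelInactive(List<String> log) {
        log.add("forward:channelInactive");
    }
}

// A user handler overrides only the callback it cares about
class ReadLoggingHandler extends InboundCallbacksAdapter {
    @Override
    public void channelRead(List<String> log, Object msg) {
        log.add("handled:" + msg);
    }
}
```

Only channelRead behaves differently; the other two callbacks fall back to the adapter's defaults without any code in ReadLoggingHandler.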

The ChannelInboundHandler event callbacks and their trigger timing are as follows:

| Event callback method | Trigger timing |
|---|---|
| channelRegistered | The Channel has been registered with its EventLoop |
| channelUnregistered | The Channel has been deregistered from its EventLoop |
| channelActive | The Channel is active and ready for reading and writing |
| channelInactive | The Channel is no longer active |
| channelRead | Data has been read from the remote peer |
| channelReadComplete | The current read operation has completed |
| userEventTriggered | A user event has been fired |
| channelWritabilityChanged | The writability of the Channel has changed |

The ChannelOutboundHandler event callbacks and their trigger timing are as follows:

| Event callback method | Trigger timing |
|---|---|
| bind | Called on a request to bind the channel to a local address |
| connect | Called on a request to connect the channel to a remote node |
| disconnect | Called on a request to disconnect the channel from the remote node |
| close | Called on a request to close the channel |
| deregister | Called on a request to deregister the channel from its EventLoop |
| read | Called on a request to read more data from the channel |
| flush | Called on a request to flush queued data through the channel to the remote node |
| write | Called on a request to write data through the channel to the remote node |

Event propagation mechanism demonstration

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelOutboundHandlerAdapter;
import io.netty.channel.ChannelPromise;

public class NormalOutBoundHandler extends ChannelOutboundHandlerAdapter {

    private final String name;

    public NormalOutBoundHandler(String name) {
        this.name = name;
    }

    @Override
    public void write(ChannelHandlerContext ctx, Object msg, ChannelPromise promise) throws Exception {
        System.out.println("OutBoundHandler:" + name);
        // Pass the write on to the next outbound handler (toward head)
        super.write(ctx, msg, promise);
    }
}
```

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

public class NormalInBoundHandler extends ChannelInboundHandlerAdapter {

    private final String name;
    // When true, this handler writes the message back instead of passing it on
    private final boolean flush;

    public NormalInBoundHandler(String name, boolean flush) {
        this.name = name;
        this.flush = flush;
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("InboundHandler:" + name);
        if (flush) {
            // Writing through the Channel starts at the tail, so every outbound handler sees the write
            ctx.channel().writeAndFlush(msg);
        } else {
            // Pass the message on to the next inbound handler (toward tail)
            super.channelRead(ctx, msg);
        }
    }
}
```

```java
// bossGroup and workerGroup are EventLoopGroups, e.g. new NioEventLoopGroup(1) and new NioEventLoopGroup()
ServerBootstrap bootstrap = new ServerBootstrap();
bootstrap.group(bossGroup, workerGroup)
        // Server channel type, the counterpart of ServerSocketChannel in NIO
        .channel(NioServerSocketChannel.class)
        // childHandler configures the handlers of each accepted connection, which run on the worker threads
        .childHandler(new ChannelInitializer<SocketChannel>() {
            @Override
            protected void initChannel(SocketChannel socketChannel) throws Exception {
                socketChannel.pipeline()
                        .addLast(new NormalInBoundHandler("NormalInBoundA", false))
                        .addLast(new NormalInBoundHandler("NormalInBoundB", false))
                        .addLast(new NormalInBoundHandler("NormalInBoundC", true));
                socketChannel.pipeline()
                        .addLast(new NormalOutBoundHandler("NormalOutBoundA"))
                        .addLast(new NormalOutBoundHandler("NormalOutBoundB"))
                        .addLast(new NormalOutBoundHandler("NormalOutBoundC"));
            }
        });
```

When a client sends a message to this server, the following output is printed:

```
InboundHandler:NormalInBoundA
InboundHandler:NormalInBoundB
InboundHandler:NormalInBoundC
OutBoundHandler:NormalOutBoundC
OutBoundHandler:NormalOutBoundB
OutBoundHandler:NormalOutBoundA
```

When the client sends a request to the server, it triggers the server's inbound call chain of NormalInBoundHandlers, which are invoked one by one in the order they were added. When inbound processing finishes, NormalInBoundC calls writeAndFlush to write the data back to the client, which triggers the write event along the NormalOutBoundHandler call chain.

The output shows that inbound and outbound events propagate in opposite directions: inbound events travel from head to tail, and outbound events travel from tail to head.
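The following model reproduces that output without Netty. `PropagationDemo` is a hypothetical class: inbound events walk head to tail visiting only inbound handlers, and the echoing handler starts an outbound walk toward head visiting only outbound handlers. The `writeViaChannel` flag models one detail worth knowing, as far as Netty's documented behavior goes: `ctx.channel().writeAndFlush` starts at the tail, whereas `ctx.writeAndFlush` starts at the current handler, so with this ordering (outbound handlers added after NormalInBoundC) the second form would skip all three outbound handlers.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of inbound/outbound propagation in a pipeline
class PropagationDemo {
    static final class Ctx {
        final String name;
        final boolean inbound; // true: inbound handler, false: outbound handler
        final boolean echo;    // inbound only: write the message back instead of passing it on
        Ctx prev;
        Ctx next;

        Ctx(String name, boolean inbound, boolean echo) {
            this.name = name;
            this.inbound = inbound;
            this.echo = echo;
        }
    }

    final Ctx head = new Ctx("head", false, false);
    final Ctx tail = new Ctx("tail", false, false);
    final List<String> out = new ArrayList<>();
    // true models ctx.channel().writeAndFlush (start at tail);
    // false models ctx.writeAndFlush (start just before the current handler)
    private final boolean writeViaChannel;

    PropagationDemo(boolean writeViaChannel) {
        this.writeViaChannel = writeViaChannel;
        head.next = tail;
        tail.prev = head;
    }

    PropagationDemo addLast(String name, boolean inbound, boolean echo) {
        Ctx ctx = new Ctx(name, inbound, echo);
        ctx.prev = tail.prev;
        ctx.next = tail;
        tail.prev.next = ctx;
        tail.prev = ctx;
        return this;
    }

    // Inbound events travel head -> tail, visiting only inbound handlers
    void fireChannelRead(Object msg) {
        for (Ctx c = head.next; c != tail; c = c.next) {
            if (!c.inbound) {
                continue;
            }
            out.add("InboundHandler:" + c.name);
            if (c.echo) {
                write(writeViaChannel ? tail.prev : c.prev, msg);
                return;
            }
        }
    }

    // Outbound events travel toward head, visiting only outbound handlers
    private void write(Ctx from, Object msg) {
        for (Ctx c = from; c != head; c = c.prev) {
            if (!c.inbound) {
                out.add("OutBoundHandler:" + c.name);
            }
        }
    }
}
```

Building the same six handlers with `writeViaChannel = true` produces exactly the six lines shown above; with `false`, only the three inbound lines appear.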

Exception propagation mechanism

The ChannelPipeline event propagation mechanism is a typical Chain of Responsibility pattern. You may well wonder what happens if one handler in this chain throws an exception. Let's modify NormalInBoundHandler from the previous example:

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

public class NormalInBoundHandler extends ChannelInboundHandlerAdapter {

    private final String name;
    private final boolean flush;

    public NormalInBoundHandler(String name, boolean flush) {
        this.name = name;
        this.flush = flush;
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("InboundHandler:" + name);
        if (flush) {
            ctx.channel().writeAndFlush(msg);
        } else {
            // Simulate a business exception
            throw new RuntimeException("InBoundHandler:" + name);
        }
    }
}
```

Once the exception is thrown, the request chain is interrupted: the handlers after the one that threw no longer receive channelRead. To deal with a Handler's exception instead of simply losing the rest of the chain, ChannelHandler provides an exception-catching callback, exceptionCaught. The exception is propagated along the Handler chain from the handler where it occurred toward the Tail node; if no user handler deals with it, the Tail node handles it uniformly by logging it.

Modify NormalInBoundHandler to override the following method:

```java
@Override
public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
    System.out.println("InboundHandlerException:" + name);
    // Forward the exception to the next handler toward the tail
    super.exceptionCaught(ctx, cause);
}
```

Good exception handling is very important in Netty applications because it makes troubleshooting much easier, so rather than handling exceptions in every handler, we can intercept them in one unified place.

Add a duplex handler (ChannelDuplexHandler handles both inbound and outbound events) to serve as the unified exception handler:

```java
import io.netty.channel.ChannelDuplexHandler;
import io.netty.channel.ChannelHandlerContext;

public class ExceptionHandler extends ChannelDuplexHandler {

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        if (cause instanceof RuntimeException) {
            System.out.println("Handling business exceptions");
        }
        // Forwarding lets the tail node log the exception as well
        super.exceptionCaught(ctx, cause);
    }
}
```

Add the new ExceptionHandler to the ChannelPipeline:

```java
// bossGroup and workerGroup are EventLoopGroups, e.g. new NioEventLoopGroup(1) and new NioEventLoopGroup()
bootstrap.group(bossGroup, workerGroup)
        // Server channel type, the counterpart of ServerSocketChannel in NIO
        .channel(NioServerSocketChannel.class)
        // childHandler configures the handlers of each accepted connection, which run on the worker threads
        .childHandler(new ChannelInitializer<SocketChannel>() {
            @Override
            protected void initChannel(SocketChannel socketChannel) throws Exception {
                socketChannel.pipeline()
                        .addLast(new NormalInBoundHandler("NormalInBoundA", false))
                        .addLast(new NormalInBoundHandler("NormalInBoundB", false))
                        .addLast(new NormalInBoundHandler("NormalInBoundC", true));
                socketChannel.pipeline()
                        .addLast(new NormalOutBoundHandler("NormalOutBoundA"))
                        .addLast(new NormalOutBoundHandler("NormalOutBoundB"))
                        .addLast(new NormalOutBoundHandler("NormalOutBoundC"))
                        // Last in the pipeline, so exceptions forwarded along the chain end up here
                        .addLast(new ExceptionHandler());
            }
        });
```

With ExceptionHandler at the end of the pipeline, exceptions forwarded along the chain are handled in one place, giving us unified exception handling.
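The flow can be summarized with a small simulation, a sketch under simplifying assumptions rather than Netty's implementation: `ExceptionChainDemo` is hypothetical, handlers pass reads on automatically unless they throw, and a "terminal" handler stops the exception instead of forwarding it (the author's ExceptionHandler above does forward, in which case the tail also sees it). The exception starts at the handler that threw and moves toward the tail; if nothing stops it, the tail handles it, as Netty's tail node does by logging.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simulation of exceptionCaught propagation along a handler chain
class ExceptionChainDemo {
    // A simplified handler: it may fail on read, and a terminal handler swallows exceptions
    static final class Handler {
        final String name;
        final boolean failOnRead;
        final boolean terminal;

        Handler(String name, boolean failOnRead, boolean terminal) {
            this.name = name;
            this.failOnRead = failOnRead;
            this.terminal = terminal;
        }

        void channelRead(Object msg) {
            if (failOnRead) {
                throw new RuntimeException("InBoundHandler:" + name);
            }
        }
    }

    final List<Handler> handlers = new ArrayList<>();
    final List<String> log = new ArrayList<>();

    ExceptionChainDemo addLast(Handler h) {
        handlers.add(h);
        return this;
    }

    // Inbound read: each handler passes the message on unless it throws
    void fireChannelRead(Object msg) {
        for (int i = 0; i < handlers.size(); i++) {
            Handler h = handlers.get(i);
            log.add("read:" + h.name);
            try {
                h.channelRead(msg);
            } catch (RuntimeException e) {
                // The read chain stops; exception handling starts at the handler that threw
                fireExceptionCaught(i, e);
                return;
            }
        }
    }

    // exceptionCaught travels toward the tail until a terminal handler stops it
    private void fireExceptionCaught(int from, Throwable cause) {
        for (int i = from; i < handlers.size(); i++) {
            Handler h = handlers.get(i);
            log.add("exceptionCaught:" + h.name);
            if (h.terminal) {
                return;
            }
        }
        // Nothing stopped it: the tail handles it (Netty's tail node logs a warning)
        log.add("tail:" + cause.getMessage());
    }
}
```

With handlers A (throws), B, and a terminal ExceptionHandler, the log reads `read:A`, then `exceptionCaught` for A, B and ExceptionHandler; B never sees the read, which is the interruption described earlier.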

Copyright notice: unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit "Mic to take you to learn architecture" when reprinting.
