1. Visibility between threads

Multithreading improves efficiency, but each thread may cache data locally, so a modification made by one thread can be invisible to the others.

To ensure visibility, either perform a synchronizing operation (for example, synchronized) or declare the shared variable volatile. A write to a volatile variable becomes visible to every thread that subsequently reads it.
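The synchronization route can be sketched as follows. This is a minimal illustration rather than the author's code: the class name SyncFlagDemo and its methods are hypothetical. The flag is a plain, non-volatile field, but every read and write goes through a synchronized method on the same object, which is enough for the worker to observe the change.

```java
// Hypothetical demo class: visibility through synchronized accessors instead of volatile.
class SyncFlagDemo {
    private boolean running = true; // deliberately NOT volatile

    // Both accessors lock the same monitor (this), so a write made in
    // setRunning happens-before any later read made in isRunning.
    synchronized boolean isRunning() { return running; }
    synchronized void setRunning(boolean value) { running = value; }

    // Starts a spinning worker, clears the flag, and reports whether the worker stopped.
    static boolean workerStopsAfterFlagCleared() {
        SyncFlagDemo flag = new SyncFlagDemo();
        Thread worker = new Thread(() -> {
            while (flag.isRunning()) {
                // busy-wait; each iteration rereads the flag under the lock
            }
        }, "worker");
        worker.setDaemon(true); // do not keep the JVM alive if something goes wrong
        worker.start();
        try {
            Thread.sleep(100);      // let the worker spin for a moment
            flag.setRunning(false); // publish the change under the lock
            worker.join(5_000);     // wait (bounded) for the worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + workerStopsAfterFlagCleared());
    }
}
```

Locking on every read is heavier than a volatile read, which is why a simple stop flag is usually declared volatile instead.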

/**
 * The volatile keyword makes a variable visible across threads.
 *
 * Suppose threads A and B both use the same variable. By default, thread A may keep working
 * with its own copy, so if thread B modifies the variable, thread A may not notice.
 * Declaring the variable volatile makes every thread read the most recently written value.
 *
 * In the code below, running is a field that lives in shared heap memory.
 * When thread t1 starts, it reads running from memory into its own working memory. It may then
 * keep using that copy instead of rereading the heap on every iteration, so when the main
 * thread changes running, t1 is not aware of the change and never stops.
 *
 * With volatile, every thread is forced to read running from heap memory.
 *
 * volatile does not prevent the inconsistencies that arise when several threads modify the
 * variable at the same time. That is, volatile cannot replace synchronized.
 */

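import java.io.IOException;

public class T01_HelloVolatile {

    // Without volatile, t1 may never observe the write made by the main thread.
    private static volatile boolean running = true;

    private static void m() {
        System.out.println("m start");
        while (running) {
            // System.out.println("hello");
        }
        System.out.println("m end!");
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        new Thread(T01_HelloVolatile::m, "t1").start();

        Thread.sleep(1000); // let t1 spin for one second

        running = false;    // t1 sees this write and leaves the loop

        System.in.read();   // keep the main thread alive until input arrives
    }
}

Cache line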

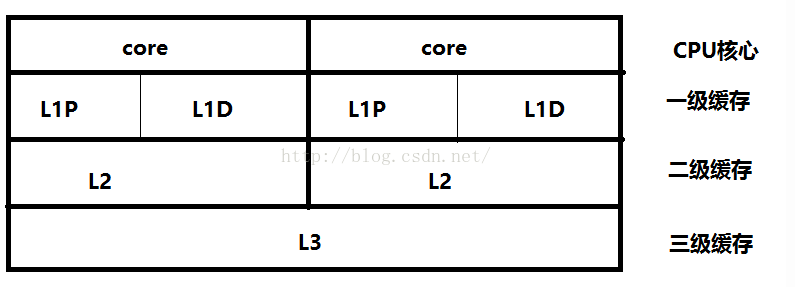

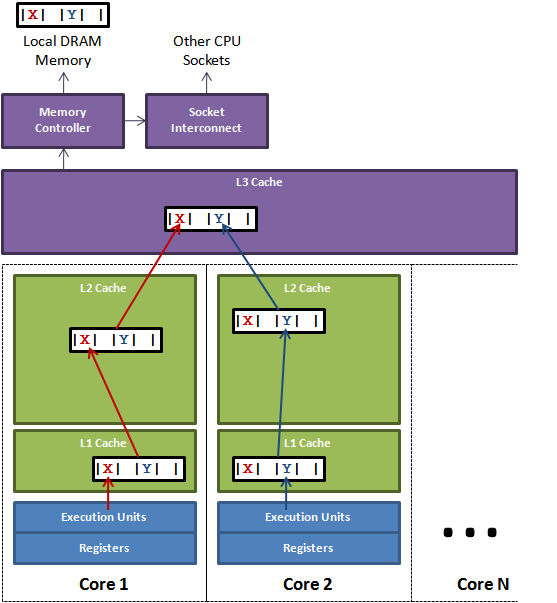

The most common cache line size is 64 bytes. When multiple threads modify independent variables that happen to share a cache line, they inadvertently degrade each other's performance. This is called false sharing.

The figure illustrates the problem. The thread running on core 1 wants to update variable X, while the thread on core 2 wants to update variable Y. Unfortunately, the two variables sit in the same cache line, so the threads compete for ownership of that line in order to update their variables. If core 1 gains ownership, the cache subsystem invalidates the corresponding cache line in core 2. When core 2 then obtains ownership and performs its update, the corresponding cache line in core 1 is invalidated. The line bounces back and forth through the L3 cache, which greatly hurts performance. If the competing cores are in different sockets, the traffic also has to cross the socket interconnect, and the problem can be even worse.
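Whether two variables can collide follows from simple arithmetic on their addresses. The sketch below assumes 64-byte cache lines and made-up, non-negative addresses; CacheLineMath and its methods are hypothetical names used only for illustration.

```java
// Hypothetical helper: which cache line does an address fall into?
class CacheLineMath {
    static final int CACHE_LINE_BYTES = 64; // assumption: the common line size

    // Index of the cache line that contains the given (non-negative) address.
    static long lineIndex(long address) {
        return address / CACHE_LINE_BYTES;
    }

    // Two addresses can falsely share only if they map to the same line.
    static boolean sameLine(long a, long b) {
        return lineIndex(a) == lineIndex(b);
    }

    public static void main(String[] args) {
        long x = 0x1000; // made-up address of X (4096, the start of a line)
        long y = x + 8;  // Y stored right after X (a long is 8 bytes)
        System.out.println(sameLine(x, y));      // true: both fall in line 64
        System.out.println(sameLine(x, x + 64)); // false: 64 bytes later is the next line
    }
}
```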

Lock contention caused by cache lines

To improve processing speed, the processor does not communicate with memory directly. It first reads data from system memory into its internal caches (L1, L2, or others) and operates on it there, and it is not known when the result will be written back to memory. When a variable declared volatile is written, the JVM (on x86) issues a Lock-prefixed instruction to the processor, which writes the data in the cache line holding the variable back to system memory. Even after this write-back, however, other processors may still hold the old value in their caches, and computing with it would give wrong results. To keep the caches of all processors consistent, a cache coherence protocol is used: each processor snoops the data transmitted on the bus to check whether its cached values are stale. When a processor finds that the memory address corresponding to one of its cache lines has been modified, it marks that cache line invalid. The next time the processor operates on that data, it is forced to reload it from system memory into its cache.
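At the Java level, this behavior is what lets a volatile write publish the ordinary writes that precede it. A minimal sketch with hypothetical names (VolatilePublish, payload, ready): under the Java Memory Model, a reader that sees ready == true is also guaranteed to see payload == 42.

```java
// Hypothetical demo: a volatile flag publishes a plain field written before it.
class VolatilePublish {
    private int payload;            // plain field
    private volatile boolean ready; // volatile flag

    void write() {
        payload = 42; // ordinary write ...
        ready = true; // ... published by the volatile write that follows it
    }

    int readWhenReady() {
        while (!ready) {
            // spin until the volatile write becomes visible
        }
        return payload; // ordered after the volatile read, so it sees 42
    }

    // Runs one reader thread against one writer and returns what the reader saw.
    static int publishAndRead() {
        VolatilePublish box = new VolatilePublish();
        int[] seen = new int[1];
        Thread reader = new Thread(() -> seen[0] = box.readWhenReady(), "reader");
        reader.setDaemon(true);
        reader.start();
        box.write();
        try {
            reader.join(5_000); // join makes the reader's result visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(publishAndRead());
    }
}
```

If ready were not volatile, the reader could spin forever or, in principle, observe ready == true together with a stale payload.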

Java 8 adds padding bytes to avoid false sharing

JVM parameter: -XX:-RestrictContended

@Contended is located in sun.misc. It annotates a Java field, and the JVM then automatically pads the field with extra bytes to prevent false sharing.

// Note: run this program with the JVM parameter -XX:-RestrictContended
import sun.misc.Contended;

import java.util.concurrent.CountDownLatch;

public class T05_Contended {

    public static long COUNT = 1_000_000_000L;

    private static class T {
        @Contended // Java 8 only; keeps x on a cache line of its own
        public volatile long x = 0L; // volatile so the JIT cannot drop the repeated writes
    }

    public static T[] arr = new T[2];

    static {
        arr[0] = new T();
        arr[1] = new T();
    }

    public static void main(String[] args) throws Exception {
        CountDownLatch latch = new CountDownLatch(2);

        // Each thread hammers its own element; without padding the two x fields
        // are likely to share a cache line.
        Thread t1 = new Thread(() -> {
            for (long i = 0; i < COUNT; i++) {
                arr[0].x = i;
            }
            latch.countDown();
        });

        Thread t2 = new Thread(() -> {
            for (long i = 0; i < COUNT; i++) {
                arr[1].x = i;
            }
            latch.countDown();
        });

        final long start = System.nanoTime();
        t1.start();
        t2.start();
        latch.await();

        // Elapsed time in milliseconds
        System.out.println((System.nanoTime() - start) / 1_000_000);
    }
}
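On JDKs where sun.misc.Contended cannot be used, a frequently seen alternative is to pad the hot field by hand. The sketch below is an illustration under stated assumptions, not a guaranteed layout: it assumes 64-byte cache lines and relies on HotSpot typically placing superclass fields before subclass fields, which the JVM specification does not promise. The names ManualPadding, PadBefore, Value, and Padded are hypothetical.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical demo: manual padding around a hot field.
class ManualPadding {
    // Seven longs (56 bytes) before and after x, so that (assuming the layout
    // described above) no other hot field lands on the same 64-byte line.
    static class PadBefore { long p1, p2, p3, p4, p5, p6, p7; }
    static class Value extends PadBefore { volatile long x; }
    static class Padded extends Value { long q1, q2, q3, q4, q5, q6, q7; }

    // Two threads each write 0..count-1 into their own padded slot.
    // Returns the final value of each slot.
    static long[] run(long count) {
        Padded[] arr = { new Padded(), new Padded() };
        CountDownLatch latch = new CountDownLatch(2);
        for (int t = 0; t < 2; t++) {
            final Padded slot = arr[t];
            new Thread(() -> {
                for (long i = 0; i < count; i++) {
                    slot.x = i;
                }
                latch.countDown();
            }).start();
        }
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new long[] { arr[0].x, arr[1].x };
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        long[] last = run(100_000_000L);
        long millis = (System.nanoTime() - start) / 1_000_000;
        System.out.println(millis + " ms, last values " + last[0] + ", " + last[1]);
    }
}
```

Comparing the timing against a version without the padding classes is a rough way to see the effect; the exact numbers depend on the machine and the JVM.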

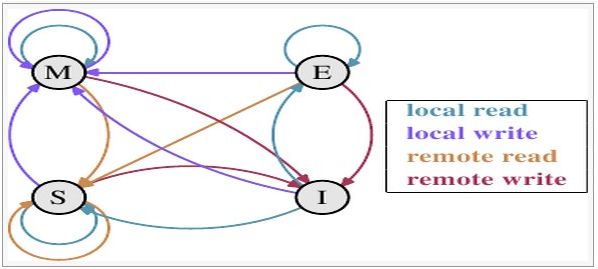

Cache coherence protocol: MESI

M (Modified): The content of the cache line has been modified, and the line is cached only in this CPU. The data in the cache line differs from the data in memory and will be written back to memory at some later point, for example when another CPU wants to read the line, or when another CPU wants to modify the corresponding memory. (A CPU that wants to modify memory must first read it into its cache and then modify it, so this case is the same as a read.)

E (Exclusive): The memory corresponding to the cache line is cached only by this CPU; no other CPU caches it. The content of the cache line is consistent with memory. The line changes to the S state when any other CPU reads the corresponding memory, and to the M state when the local processor writes to it.

S (Shared): The data is present not only in the local CPU's cache but also in the caches of other CPUs, and it is consistent with the data in memory. When another CPU modifies the memory corresponding to the cache line, the line changes to the I state.

I (Invalid): The content of the cache line is invalid.
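The four states can be read as a small state machine for one cache line in one CPU. The sketch below is a simplified model for illustration only (real protocols also involve bus messages and write-backs); MesiSketch, Event, and next are hypothetical names.

```java
// Hypothetical, simplified model of MESI transitions for a single cache line
// as seen by a single CPU.
class MesiSketch {
    enum State { M, E, S, I }
    enum Event { LOCAL_READ, LOCAL_WRITE, REMOTE_READ, REMOTE_WRITE }

    // othersHoldCopy only matters when an Invalid line is read locally:
    // it decides whether the freshly loaded line becomes Shared or Exclusive.
    static State next(State current, Event event, boolean othersHoldCopy) {
        switch (event) {
            case LOCAL_WRITE:
                return State.M; // this CPU takes ownership; other copies get invalidated
            case REMOTE_WRITE:
                return State.I; // another CPU modified the data; our copy is stale
            case REMOTE_READ:
                // M writes back first, then M, E, and S all end up Shared
                return current == State.I ? State.I : State.S;
            case LOCAL_READ:
                if (current != State.I) {
                    return current; // a valid line is read without a state change
                }
                return othersHoldCopy ? State.S : State.E;
            default:
                throw new IllegalArgumentException("unknown event: " + event);
        }
    }

    public static void main(String[] args) {
        State s = State.I;
        s = next(s, Event.LOCAL_READ, false);  // I -> E
        s = next(s, Event.LOCAL_WRITE, false); // E -> M
        s = next(s, Event.REMOTE_READ, false); // M -> S
        s = next(s, Event.REMOTE_WRITE, false); // S -> I
        System.out.println(s);
    }
}
```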

2. Ordering in concurrent programming

To improve efficiency, the CPU may execute instructions out of order.

As-if-serial semantics within a thread

Within a single thread, two statements are not necessarily executed in program order.

However the instructions of a single thread are reordered, the final result must be the same as that of in-order execution.

As-if-serial: execution looks serial (from the point of view of a single thread).

With multiple threads, however, reordering can produce undesirable results.
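A commonly used experiment for the multithreaded case is sketched below, with hypothetical names (ReorderProbe, countBothZero). If both threads ran strictly in program order, at least one of x and y would end up as 1, so an outcome of x == 0 and y == 0 indicates that a write and a read were reordered by the compiler or the CPU. Whether and how often this shows up depends on the hardware and the JIT, so the count may well be zero on a given run.

```java
// Hypothetical probe for reordering between two unsynchronized threads.
class ReorderProbe {
    static int x, y, a, b; // deliberately neither volatile nor synchronized

    // Repeats the experiment `rounds` times and counts the (0, 0) outcomes.
    static int countBothZero(int rounds) {
        int hits = 0;
        for (int i = 0; i < rounds; i++) {
            x = 0; y = 0; a = 0; b = 0;
            Thread one = new Thread(() -> { a = 1; x = b; });
            Thread two = new Thread(() -> { b = 1; y = a; });
            one.start();
            two.start();
            try {
                one.join();
                two.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return hits;
            }
            if (x == 0 && y == 0) {
                hits++; // impossible if both threads ran in program order
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        int rounds = 100_000;
        System.out.println(countBothZero(rounds) + " of " + rounds + " rounds ended with x == 0 and y == 0");
    }
}
```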

Object o = new Object();

1. Object creation process (semi-initialization)

2. DCL singleton: volatile and instruction reordering

3. Storage layout of objects in memory

4. What the object header contains

5. How an object is located

6. How objects are allocated

7. How many bytes does Object o = new Object(); occupy?
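Items 1 and 2 meet in the double-checked locking (DCL) singleton. A minimal sketch, assuming a hypothetical class name DclSingleton: creating an object involves allocating it (fields at default values), running the constructor, and storing the reference. Without volatile, the reference could be stored before the constructor has finished, and another thread could then see a semi-initialized object.

```java
// Hypothetical example of a double-checked locking singleton.
class DclSingleton {
    // volatile forbids publishing the reference before construction completes
    private static volatile DclSingleton instance;

    private DclSingleton() {
    }

    static DclSingleton getInstance() {
        DclSingleton local = instance;       // first check, without locking
        if (local == null) {
            synchronized (DclSingleton.class) {
                local = instance;            // second check, under the lock
                if (local == null) {
                    local = new DclSingleton();
                    instance = local;        // volatile write publishes the finished object
                }
            }
        }
        return local;
    }

    public static void main(String[] args) {
        System.out.println(getInstance() == getInstance()); // true: one shared instance
    }
}
```

The local variable only avoids reading the volatile field twice on the fast path; the correctness comes from the volatile field and the second check inside the lock.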