Environment preparation

- First, you need a complete, working cluster

[root@master ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready master 114d v1.21.0 192.168.59.142 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.7

node1 Ready <none> 114d v1.21.0 192.168.59.143 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.7

node2 Ready <none> 114d v1.21.0 192.168.59.144 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.7

[root@master ~]#

[root@master ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.59.142:6443

CoreDNS is running at https://192.168.59.142:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Metrics-server is running at https://192.168.59.142:6443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@master ~]#

- Then prepare a separate virtual machine on the same network segment to use as a client

[root@master2 ~]# ip a | grep 59

inet 192.168.59.151/24 brd 192.168.59.255 scope global noprefixroute ens33

[root@master2 ~]#

# Installation command

[root@master2 ~]# yum install -y kubelet-1.21.0-0 --disableexcludes=kubernetes

# --disableexcludes=kubernetes ignores the exclude= rules configured for the kubernetes repository, so the pinned version can be installed

# Start service

[root@master2 ~]# systemctl enable kubelet && systemctl start kubelet

# Enable tab completion for kubectl

[root@master2 ~]# head -n3 /etc/profile

# /etc/profile

source <(kubectl completion bash)

[root@master2 ~]#

# At this point the client has no cluster information; the exact message may differ

[root@master2 ~]# kubectl get nodes

No resources found

[root@master2 ~]#

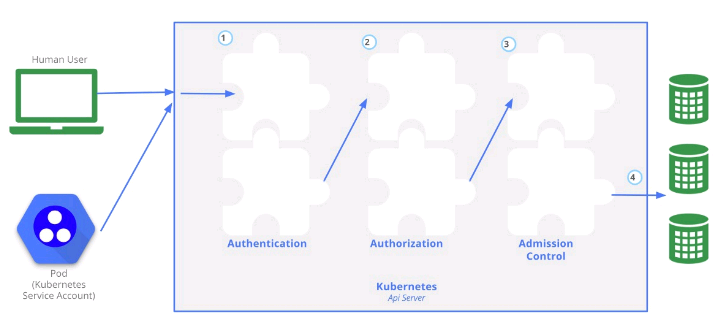

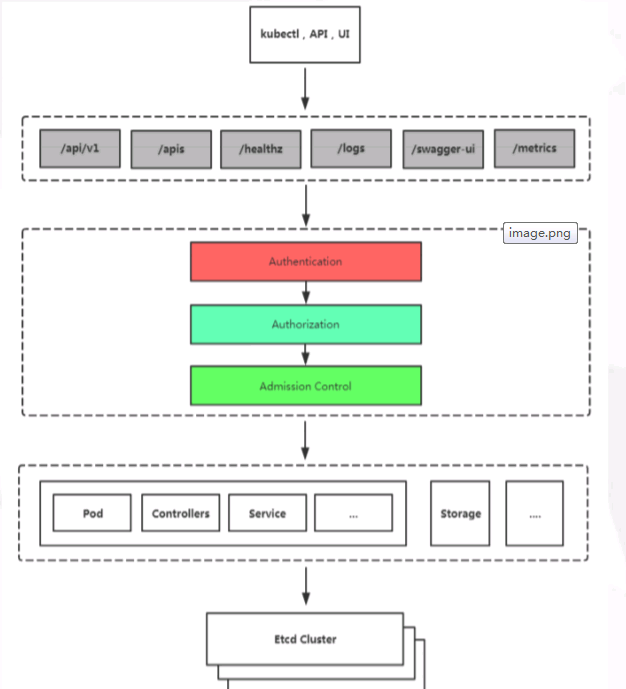

Introduction to the k8s security framework

- Kubernetes is a distributed cluster management tool, so keeping the cluster secure is an important task. The API Server is both the intermediary for communication between the components inside the cluster and the entry point for external control. The Kubernetes security mechanisms are therefore designed mainly around protecting the API Server.

- Kubernetes secures the API Server in three steps: Authentication, Authorization and Admission Control.

- To access the API Server securely, ordinary users usually need a certificate, a token, or a user name and password; Pods access it with a ServiceAccount.

- The K8S security framework is controlled by the following three stages. Each stage supports plug-ins, which are enabled through the API Server configuration.

- 1. Authentication

- 2. Authorization

- 3. Admission Control

- So the process is:

When kubectl, a UI, a program, or another client calls a k8s interface, the request is first authenticated (is the caller who it claims to be?), then authorized (is the caller allowed to do this?), and finally passed through admission control.
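The order of the three stages can be pictured with a small Python sketch. This is only a toy model under stated assumptions, not how the API Server is implemented: the `TOKENS` and `PERMISSIONS` tables, the `Request` class, and the token `example-token` are all hypothetical names made up for the illustration.

```python
from dataclasses import dataclass

# Hypothetical in-memory stand-ins for the API Server plug-ins.
TOKENS = {"example-token": "ccx"}              # token -> user name
PERMISSIONS = {("admin", "list", "nodes")}     # allowed (user, verb, resource)


@dataclass
class Request:
    token: str
    verb: str
    resource: str


def authenticate(req):
    """Stage 1: who is calling? Returns a user name, or None if unknown."""
    return TOKENS.get(req.token)


def authorize(user, req):
    """Stage 2: is this user allowed to perform this action?"""
    return (user, req.verb, req.resource) in PERMISSIONS


def admit(req):
    """Stage 3: admission control. This toy version accepts everything."""
    return True


def handle(req):
    """Run the three stages in order and stop at the first failure."""
    user = authenticate(req)
    if user is None:
        return "Unauthorized"
    if not authorize(user, req):
        return "Forbidden"
    if not admit(req):
        return "Rejected by admission control"
    return "OK"


print(handle(Request("wrong-token", "list", "nodes")))    # fails stage 1
print(handle(Request("example-token", "list", "nodes")))  # fails stage 2
```

A request with an unknown token never reaches the authorization stage, and a known user without permission is stopped before admission control.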

Token authentication

Explanation

- The cluster supports token authentication, but it is not enabled by default, so we first enable it on the cluster [run on the cluster master]

Enable token authentication

# First, generate a random value to use as the token

[root@master ~]# openssl rand -hex 10

f53309a4a68ce1ae8ead

[root@master ~]#

# Then add line 18 to the following configuration file, which enables token authentication

# Note that the csv file must be in a directory the API server pod can read; here it is placed under /etc/kubernetes/pki/, and bb.csv is a custom file name

[root@master ~]# cat -n /etc/kubernetes/manifests/kube-apiserver.yaml | egrep -C1 token-auth-file

17 - --allow-privileged=true

18 - --token-auth-file=/etc/kubernetes/pki/bb.csv

19 - --feature-gates=RemoveSelfLink=false

[root@master ~]#

# Edit the bb.csv file

# Each line contains: the token generated above, a user name of your choice, and a user ID [the fields must be separated by commas]

[root@master ~]# cat /etc/kubernetes/pki/bb.csv

f53309a4a68ce1ae8ead,ccx,3

[root@master ~]#
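As an illustration of the file layout described above, the following Python sketch parses lines of the form `token,user,uid`. It is a minimal sketch, not the API server's own parser; the function name `parse_token_file` is hypothetical, and the optional fourth column (a quoted, comma-separated group list) reflects the documented static token file format rather than anything used in this walkthrough.

```python
import csv
import io


def parse_token_file(text):
    """Parse lines of the form token,user,uid[,"group1,group2"]."""
    users = {}
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 3:
            continue  # skip blank or incomplete lines
        token, user, uid = row[0], row[1], row[2]
        # The optional fourth column holds a comma-separated group list.
        groups = row[3].split(",") if len(row) > 3 and row[3] else []
        users[token] = {"user": user, "uid": uid, "groups": groups}
    return users


entries = parse_token_file("f53309a4a68ce1ae8ead,ccx,3\n")
print(entries["f53309a4a68ce1ae8ead"])
```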

# Then restart kubelet; once the API server is running again, token authentication is enabled and the configuration is complete

[root@master ~]# systemctl restart kubelet

[root@master ~]#

[root@master ~]# kubectl get nodes # Wait until the API server is back and the following output appears

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[root@master ~]#

Test token authentication

- Client connection syntax: kubectl -s https://<master_ip>:6443 --token='<token generated on the cluster master>' get nodes [run kubectl options to see more parameters]

- Let's go through the authenticated connection step by step; pay attention to the comments

# At this point the connection fails with a certificate error

[root@master2 ~]# kubectl -s https://192.168.59.142:6443 --token='f53309a4a68ce1ae8ead' get nodes -n kube-system

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

[root@master2 ~]#

# We can skip certificate verification by adding --insecure-skip-tls-verify=true

# The server now reports that user ccx is not allowed to list nodes

[root@master2 ~]# kubectl -s https://192.168.59.142:6443 --token='f53309a4a68ce1ae8ead' --insecure-skip-tls-verify=true get nodes -n kube-system

Error from server (Forbidden): nodes is forbidden: User "ccx" cannot list resource "nodes" in API group "" at the cluster scope

[root@master2 ~]#

# Conclusion: authentication succeeded, but the user has no permission to view the resource

# Now change one character of the token. With a wrong token, the server reports that the request is not authenticated

[root@master2 ~]# kubectl -s https://192.168.59.142:6443 --token='f53309a4a68ce1ae8eax' --insecure-skip-tls-verify=true get nodes -n kube-system

error: You must be logged in to the server (Unauthorized)

[root@master2 ~]#

- After authentication comes authorization, which is covered in the authorization section below
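On the wire, a token passed with `--token` travels as an HTTP `Authorization: Bearer` header. The Python sketch below only builds such a request and does not send it; the helper name `build_request` is hypothetical, and the path `/api/v1/nodes` is the REST path for listing nodes.

```python
import urllib.request


def build_request(server, token, path="/api/v1/nodes"):
    """Build (but do not send) an API request carrying a bearer token."""
    return urllib.request.Request(
        server + path,
        headers={"Authorization": "Bearer " + token},
    )


req = build_request("https://192.168.59.142:6443", "f53309a4a68ce1ae8ead")
print(req.full_url)
print(req.get_header("Authorization"))
```

Actually sending the request would also require trusting the cluster CA, which is the same x509 issue kubectl ran into in the test.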

Basic auth [removed]

If you are interested, search for it on Baidu yourself

kubeconfig validation

explain

-

Kubeconfig file - there is not a file called kubeconfig, but a file used for authentication, which is called kubeconfig

For example, aa.txt contains authentication information, so aa.txt is the kubeconfig file -

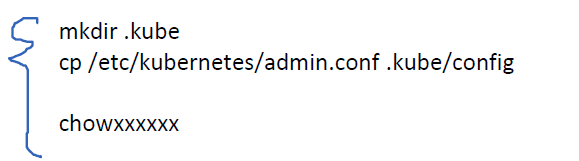

When we build a cluster, we have a process [as shown below], which is the process of creating kubeconfig file

-

That is, after installing kubernetes, the system will generate a kubeconfig file with administrator privileges

In the next test, we can view it under root, but we can't view it when we switch to other users, because other users don't have this kuebconfig file

By default, the cluster uses the kubeconfig file of ~ /. kube/config, which is under / root

[root@master ~]# ls /etc/kubernetes/

admin.conf controller-manager.conf kubelet.conf manifests pki scheduler.conf

[root@master ~]#

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[root@master ~]#

[root@master ~]# su - ccx

[ccx@master ~]$

[ccx@master ~]$ kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[ccx@master ~]$

Copy the kubeconfig file for testing

- Now we copy this file into the ccx user's home directory, change its ownership, and test again on the cluster master node

There are several tests, done step by step. Pay attention to the comments.

[root@master ~]#

[root@master ~]# cp /etc/kubernetes/admin.conf ~ccx/

[root@master ~]# chown ccx.ccx ~ccx/admin.conf

[root@master ~]#

[root@master ~]# su - ccx

Last login: Wed Nov 3 12:35:22 CST 2021 on pts/0

[ccx@master ~]$ ls ~/

admin.conf

[ccx@master ~]$ cd ~/

[ccx@master ~]$ pwd

/home/ccx

[ccx@master ~]$

# It still does not work, because kubectl does not know which kubeconfig file to use

[ccx@master ~]$ kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[ccx@master ~]$

# Once we specify the file, it works [the file is in the current directory, so no path is needed]

[ccx@master ~]$ kubectl --kubeconfig=admin.conf get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[ccx@master ~]$

# To sum up: whoever gets this file, whatever the file is named, has administrator privileges on the cluster

# Passing --kubeconfig every time is tedious, so we can set the KUBECONFIG environment variable instead and no longer specify the file

# Note that ccx is still an ordinary user

[ccx@master ~]$ export KUBECONFIG=admin.conf

[ccx@master ~]$

[ccx@master ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[ccx@master ~]$

# After we unset the environment variable, the command fails again

[ccx@master ~]$ unset KUBECONFIG

[ccx@master ~]$ kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[ccx@master ~]$

# Is it possible to avoid both the environment variable and the --kubeconfig option? The answer is yes

# As mentioned earlier, the default kubeconfig file is ~/.kube/config, so we just put the file there and kubectl works the same way as for root

[ccx@master ~]$ cp admin.conf .kube/config

[ccx@master ~]$

[ccx@master ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[ccx@master ~]$
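The three tests above follow kubectl's lookup order: the `--kubeconfig` option first, then the `KUBECONFIG` environment variable, then `~/.kube/config`. A minimal Python sketch of that precedence follows; the function `resolve_kubeconfig` and its parameters are hypothetical names used only for illustration, and the sketch only returns candidate paths without reading or merging any files.

```python
import os


def resolve_kubeconfig(flag=None, env=None, home=None):
    """Return the kubeconfig path(s) that would be consulted, by precedence."""
    env = os.environ if env is None else env
    home = os.path.expanduser("~") if home is None else home
    if flag:
        # 1. An explicit --kubeconfig option wins.
        return [flag]
    if env.get("KUBECONFIG"):
        # 2. KUBECONFIG may hold several paths joined by the OS path separator.
        return env["KUBECONFIG"].split(os.pathsep)
    # 3. Fall back to the per-user default location.
    return [home + "/.kube/config"]


print(resolve_kubeconfig(flag="admin.conf", env={}))
print(resolve_kubeconfig(env={"KUBECONFIG": "admin.conf"}))
print(resolve_kubeconfig(env={}, home="/home/ccx"))
```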

- Similarly, we now copy the configuration file to the host outside the cluster and repeat the test above

# 192.168.59.151 below is the IP outside the cluster [the client test machine above]

[root@master ~]# scp /etc/kubernetes/admin.conf 192.168.59.151:~

The authenticity of host '192.168.59.151 (192.168.59.151)' can't be established.

ECDSA key fingerprint is SHA256:+JrT4G9aMhaod/a9gBjUOzX5aONqQ7a4OX0Oj3Z978c.

ECDSA key fingerprint is MD5:7f:4c:cc:5c:10:d2:54:d8:3c:dd:da:39:48:30:12:59.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.59.151' (ECDSA) to the list of known hosts.

root@192.168.59.151's password:

admin.conf 100% 5594 2.9MB/s 00:00

[root@master ~]#

# Now go to the test machine, where the cluster information is visible

[root@master2 ~]# ls | grep adm

admin.conf

[root@master2 ~]# kubectl --kubeconfig=admin.conf get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[root@master2 ~]#

[root@master2 ~]# kubectl --kubeconfig=admin.conf get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready master 114d v1.21.0 192.168.59.142 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.7

node1 Ready <none> 114d v1.21.0 192.168.59.143 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.7

node2 Ready <none> 114d v1.21.0 192.168.59.144 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.7

[root@master2 ~]#

# Users can access the cluster directly through this file because the certificate in it was issued by the cluster.

- Because admin.conf carries the highest privileges, anyone who receives this file can operate the cluster directly, which is a serious security risk, so we usually do not hand it out.

Create a kubeconfig file [important]

- To create a kubeconfig file, we need a private key and a certificate issued by the cluster CA. It is like applying for an ID card: we apply to the Public Security Bureau (the authority), which issues the card after checking our details. The ID card is then a valid proof of identity, whereas a business card we print ourselves is not.

- Similarly, we cannot simply derive a public key from the private key and use it directly. We must use the private key to generate a certificate request file (the application) and submit it to the CA (the authority). After the CA approves the request, it issues the certificate (the ID card).

- Let's go through the whole process.

Because this part is important, we create a separate directory and namespace for it.

[root@master ~]# mkdir sefe

[root@master ~]# cd sefe

[root@master sefe]# kubectl create ns safe

namespace/safe created

[root@master sefe]# kubens safe

Context "context" modified.

Active namespace is "safe".

[root@master sefe]#

[root@master sefe]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[root@master sefe]#

Apply for a certificate

- Create a private key named ccx.key

[root@master sefe]# openssl genrsa -out ccx.key 2048

Generating RSA private key, 2048 bit long modulus

...............................................................................................................+++

.............................................................................+++

e is 65537 (0x10001)

[root@master sefe]# ls

ccx.key

- Generate the certificate request file ccx.csr using the private key ccx.key just generated:

Note that the CN value here, ccx, is the user we will authorize later.

[root@master sefe]# openssl req -new -key ccx.key -out ccx.csr -subj "/CN=ccx/O=cka2021"

[root@master sefe]# ls

ccx.csr ccx.key

[root@master sefe]#
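For client certificates, Kubernetes takes the user name from the CN field and the group membership from the O field of the subject. The Python sketch below only splits the `-subj` string used above to make that mapping visible; `parse_subject` is a hypothetical helper and does not inspect a real certificate.

```python
def parse_subject(subj):
    """Split an openssl -subj string such as "/CN=ccx/O=cka2021" into fields."""
    fields = {}
    for part in subj.strip("/").split("/"):
        key, _, value = part.partition("=")
        # A subject may repeat a field (for example several O entries).
        fields.setdefault(key, []).append(value)
    return fields


subject = parse_subject("/CN=ccx/O=cka2021")
user = subject["CN"][0]         # becomes the Kubernetes user name
groups = subject.get("O", [])   # become the user's groups
print(user, groups)
```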

- Client connection syntax with a user name and password (basic auth): kubectl -s https://<master_ip>:6443 --username=user --password=passwd get nodes

- Base64-encode the certificate request file

The output is long and is needed later, so copy it completely.

[root@master sefe]# cat ccx.csr | base64 | tr -d "\n" LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1pUQ0NBVTBDQVFBd0lERU1NQW9HQTFVRUF3d0RZMk40TVJBd0RnWURWUVFLREFkamEyRXlNREl4TUlJQgpJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXCmxIM2hVUHRGUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeSsKUjF0a2JCNWdidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNQo3MFRxbkx2a1VWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4ClhKL1FWQkRUNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm0KZ0ZEdW9XdHdjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJRApBUUFCb0FBd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEdXJyS1VIMFZ6WDdtNC9LVEFKYnZXZHhLV29lQ1ZpCno5NHhmRmMrR1NZV2R3cFBLYk1Bcnp2TXYrTEswY0VNUUNMd1R3YmRCbmpjdmFmNG1pV2YyQUVteVRZa3ZNaVcKUTJHV0N1S0toVVZ0MTBxVU93YlFZcWpFZmlycFhTNlZtS1R4UHVKM0dCTmtBS2t4eHZGSGJIdG5ibGpYMmM2NQpzQVBaWThKSm5OUXIySUZTa3dGRlVEbHV1RmhaS1EycW8yZ2NxeENNT0JOanRTdVdqL3BnV1pIWDV4NGZCbW85Cm9qb2RIZmdFMm4zREJOMUIyMHZ4ZHRqTVRONzh6ZHoxUXV0WStrYUZXYTNmaEZFQjNMVnVQT3BqUzllVW82Q3AKWUdjL25LdGFVaGdWNmhSVVV0aXdZaFZ4N2FVMXlnQ08rYWc5QjRILzFMbGp3c1YvNEF0YjhtYz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==[root@master sefe]#
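The `base64 | tr -d "\n"` pipeline produces a single-line encoding of the PEM file. A minimal Python equivalent is sketched below; to stay self-contained it encodes only the PEM header line rather than reading ccx.csr, and `encode_for_request` is a hypothetical helper name.

```python
import base64


def encode_for_request(pem_bytes):
    """Base64-encode PEM bytes as one line, like `base64 | tr -d "\\n"`."""
    return base64.b64encode(pem_bytes).decode("ascii")


# Only the first line of a CSR in PEM form, used here as sample input.
header = b"-----BEGIN CERTIFICATE REQUEST-----\n"
encoded = encode_for_request(header)
print(encoded)
```

The printed value matches the first characters of the encoded request shown above, which is a quick way to check that a copied value really is a certificate request.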

- Prepare the yaml file for the certificate request

Note that the apiVersion here must be certificates.k8s.io/v1beta1. With certificates.k8s.io/v1 the signerName field is required and the line cannot be commented out, and in that case the later steps could not obtain the certificate. The request field holds the base64-encoded certificate request file.

[root@master sefe]# cat csr.yaml

apiVersion: certificates.k8s.io/v1beta1

kind: CertificateSigningRequest

metadata:

  name: ccx

spec:

  groups:

  - system:authenticated

  #signerName: kubernetes.io/legacy-aa # Note that this line is commented out

  # Replace the request value below with the base64-encoded certificate request generated above

  request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1pUQ0NBVTBDQVFBd0lERU1NQW9HQTFVRUF3d0RZMk40TVJBd0RnWURWUVFLREFkamEyRXlNREl4TUlJQgpJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXCmxIM2hVUHRGUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeSsKUjF0a2JCNWdidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNQo3MFRxbkx2a1VWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4ClhKL1FWQkRUNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm0KZ0ZEdW9XdHdjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJRApBUUFCb0FBd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEdXJyS1VIMFZ6WDdtNC9LVEFKYnZXZHhLV29lQ1ZpCno5NHhmRmMrR1NZV2R3cFBLYk1Bcnp2TXYrTEswY0VNUUNMd1R3YmRCbmpjdmFmNG1pV2YyQUVteVRZa3ZNaVcKUTJHV0N1S0toVVZ0MTBxVU93YlFZcWpFZmlycFhTNlZtS1R4UHVKM0dCTmtBS2t4eHZGSGJIdG5ibGpYMmM2NQpzQVBaWThKSm5OUXIySUZTa3dGRlVEbHV1RmhaS1EycW8yZ2NxeENNT0JOanRTdVdqL3BnV1pIWDV4NGZCbW85Cm9qb2RIZmdFMm4zREJOMUIyMHZ4ZHRqTVRONzh6ZHoxUXV0WStrYUZXYTNmaEZFQjNMVnVQT3BqUzllVW82Q3AKWUdjL25LdGFVaGdWNmhSVVV0aXdZaFZ4N2FVMXlnQ08rYWc5QjRILzFMbGp3c1YvNEF0YjhtYz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==

  usages:

  - client auth

[root@master sefe]#
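For clusters where certificates.k8s.io/v1beta1 is no longer served, the same request can be expressed against certificates.k8s.io/v1, where `signerName` is mandatory. The Python sketch below builds such a manifest as a dictionary and prints it as JSON. It is an untested assumption for this walkthrough, not the author's method: it uses the built-in signer `kubernetes.io/kube-apiserver-client`, which is the signer intended for client certificates, and `csr_manifest` is a hypothetical helper.

```python
import json


def csr_manifest(name, request_b64):
    """Build a certificates.k8s.io/v1 CertificateSigningRequest manifest."""
    return {
        "apiVersion": "certificates.k8s.io/v1",
        "kind": "CertificateSigningRequest",
        "metadata": {"name": name},
        "spec": {
            # The base64-encoded contents of the .csr file.
            "request": request_b64,
            # Required in v1; this signer issues API server client certificates.
            "signerName": "kubernetes.io/kube-apiserver-client",
            "usages": ["client auth"],
        },
    }


manifest = csr_manifest("ccx", "<base64 of ccx.csr>")
print(json.dumps(manifest, indent=2))
```

The placeholder string stands in for the encoded request; kubectl accepts JSON manifests as well as YAML, so the printed document could be saved to a file and applied.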

- Submit the certificate request

[root@master sefe]# kubectl apply -f csr.yaml

Warning: certificates.k8s.io/v1beta1 CertificateSigningRequest is deprecated in v1.19+, unavailable in v1.22+; use certificates.k8s.io/v1 CertificateSigningRequest

certificatesigningrequest.certificates.k8s.io/ccx created

[root@master sefe]#

- View the submitted certificate request:

The status is Pending

[root@master sefe]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

ccx 7s kubernetes.io/legacy-unknown kubernetes-admin Pending

[root@master sefe]#

- Approve the certificate:

[root@master sefe]# kubectl certificate approve ccx

certificatesigningrequest.certificates.k8s.io/ccx approved

[root@master sefe]#

- Check again; the status is no longer Pending

[root@master sefe]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

ccx 95s kubernetes.io/legacy-unknown kubernetes-admin Approved,Issued

[root@master sefe]#

- View the full yaml of the approved csr

[root@master sefe]# kubectl get csr ccx -o yaml

apiVersion: certificates.k8s.io/v1

kind: CertificateSigningRequest

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"certificates.k8s.io/v1beta1","kind":"CertificateSigningRequest","metadata":{"annotations":{},"name":"ccx"},"spec":{"groups":["system:authenticated"],"request":"LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1pUQ0NBVTBDQVFBd0lERU1NQW9HQTFVRUF3d0RZMk40TVJBd0RnWURWUVFLREFkamEyRXlNREl4TUlJQgpJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXCmxIM2hVUHRGUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeSsKUjF0a2JCNWdidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNQo3MFRxbkx2a1VWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4ClhKL1FWQkRUNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm0KZ0ZEdW9XdHdjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJRApBUUFCb0FBd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEdXJyS1VIMFZ6WDdtNC9LVEFKYnZXZHhLV29lQ1ZpCno5NHhmRmMrR1NZV2R3cFBLYk1Bcnp2TXYrTEswY0VNUUNMd1R3YmRCbmpjdmFmNG1pV2YyQUVteVRZa3ZNaVcKUTJHV0N1S0toVVZ0MTBxVU93YlFZcWpFZmlycFhTNlZtS1R4UHVKM0dCTmtBS2t4eHZGSGJIdG5ibGpYMmM2NQpzQVBaWThKSm5OUXIySUZTa3dGRlVEbHV1RmhaS1EycW8yZ2NxeENNT0JOanRTdVdqL3BnV1pIWDV4NGZCbW85Cm9qb2RIZmdFMm4zREJOMUIyMHZ4ZHRqTVRONzh6ZHoxUXV0WStrYUZXYTNmaEZFQjNMVnVQT3BqUzllVW82Q3AKWUdjL25LdGFVaGdWNmhSVVV0aXdZaFZ4N2FVMXlnQ08rYWc5QjRILzFMbGp3c1YvNEF0YjhtYz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==","usages":["client auth"]}}

creationTimestamp: "2021-11-03T08:32:11Z"

name: ccx

resourceVersion: "12652380"

selfLink: /apis/certificates.k8s.io/v1/certificatesigningrequests/ccx

uid: 49a3aa81-b7a2-432a-a115-d98e065689ab

spec:

groups:

- system:masters

- system:authenticated

request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1pUQ0NBVTBDQVFBd0lERU1NQW9HQTFVRUF3d0RZMk40TVJBd0RnWURWUVFLREFkamEyRXlNREl4TUlJQgpJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXCmxIM2hVUHRGUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeSsKUjF0a2JCNWdidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNQo3MFRxbkx2a1VWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4ClhKL1FWQkRUNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm0KZ0ZEdW9XdHdjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJRApBUUFCb0FBd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEdXJyS1VIMFZ6WDdtNC9LVEFKYnZXZHhLV29lQ1ZpCno5NHhmRmMrR1NZV2R3cFBLYk1Bcnp2TXYrTEswY0VNUUNMd1R3YmRCbmpjdmFmNG1pV2YyQUVteVRZa3ZNaVcKUTJHV0N1S0toVVZ0MTBxVU93YlFZcWpFZmlycFhTNlZtS1R4UHVKM0dCTmtBS2t4eHZGSGJIdG5ibGpYMmM2NQpzQVBaWThKSm5OUXIySUZTa3dGRlVEbHV1RmhaS1EycW8yZ2NxeENNT0JOanRTdVdqL3BnV1pIWDV4NGZCbW85Cm9qb2RIZmdFMm4zREJOMUIyMHZ4ZHRqTVRONzh6ZHoxUXV0WStrYUZXYTNmaEZFQjNMVnVQT3BqUzllVW82Q3AKWUdjL25LdGFVaGdWNmhSVVV0aXdZaFZ4N2FVMXlnQ08rYWc5QjRILzFMbGp3c1YvNEF0YjhtYz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==

signerName: kubernetes.io/legacy-unknown

usages:

- client auth

username: kubernetes-admin

status:

certificate: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lRWXlYenJkTTVKYS9Ia3lQeGhNME5OekFOQmdrcWhraUc5dzBCQVFzRkFEQVYKTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1CNFhEVEl4TVRFd016QTRNamd6T1ZvWERUSXlNVEV3TXpBNApNamd6T1Zvd0lERVFNQTRHQTFVRUNoTUhZMnRoTWpBeU1URU1NQW9HQTFVRUF4TURZMk40TUlJQklqQU5CZ2txCmhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXbEgzaFVQdEYKUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeStSMXRrYkI1ZwpidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNTcwVHFuTHZrClVWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4WEovUVZCRFQKNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm1nRkR1b1d0dwpjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJREFRQUJvMFl3ClJEQVRCZ05WSFNVRUREQUtCZ2dyQmdFRkJRY0RBakFNQmdOVkhSTUJBZjhFQWpBQU1COEdBMVVkSXdRWU1CYUEKRk03Q2ZzYW51ZGNURkh0bm9leThoL1pRcUVack1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRRHF3cnNYSEIwVApHTjQwdlcvQmJsL1FuVmFKQUdYU2lTR0wwbHVud0dOd3FRRVY2RVhoM3lsR3drS1pCT2JRNHVxZ1F0Vmt5eFQvCnFEcUFERWh5QUx1VGtkREVxLzRsRmFqaDRlaWtHQkRVU3ZhNVNEb2NQUVhqa0JhUHJHMDQxTTh1dlFySFh3WGsKcEc5UGlmbExMTksyMzBzSGNPaS85MmVndmpEL3JIYkdTejV5cGpuWTZpMkJuSzZOcGpqWDRienEyTGl3bytOYQpLS2RIS3JPWXV3ajI0QVllWkRtWnVFZ3FBMXZlRUtSWXZaNVhSREVnL1lEckd1U2NUbkhLQkNPeHEzUVdSRkZTCm4xWG9hdEU1MkU5d3JDeVFsUXAzbi9KbEFqMmViRjh1SElVY1JFY1ZNSjZ5MU02YzlaTHZjdHh4NjA1SFJmeE0KSm1mazR0bkNLc3QvCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

conditions:

- lastTransitionTime: "2021-11-03T08:33:39Z"

lastUpdateTime: "2021-11-03T08:33:39Z"

message: This CSR was approved by kubectl certificate approve.

reason: KubectlApprove

status: "True"

type: Approved

[root@master sefe]#

# The CA files used to sign the certificate are the cluster's own, stored under /etc/kubernetes/pki/

[root@master sefe]# ls /etc/kubernetes/pki/ | grep ca

ca.crt

ca.key

front-proxy-ca.crt

front-proxy-ca.key

[root@master sefe]#

- View the certificate:

[root@master sefe]# kubectl get csr/ccx -o jsonpath='{.status.certificate}'

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lRWXlYenJkTTVKYS9Ia3lQeGhNME5OekFOQmdrcWhraUc5dzBCQVFzRkFEQVYKTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1CNFhEVEl4TVRFd016QTRNamd6T1ZvWERUSXlNVEV3TXpBNApNamd6T1Zvd0lERVFNQTRHQTFVRUNoTUhZMnRoTWpBeU1URU1NQW9HQTFVRUF4TURZMk40TUlJQklqQU5CZ2txCmhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXbEgzaFVQdEYKUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeStSMXRrYkI1ZwpidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNTcwVHFuTHZrClVWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4WEovUVZCRFQKNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm1nRkR1b1d0dwpjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJREFRQUJvMFl3ClJEQVRCZ05WSFNVRUREQUtCZ2dyQmdFRkJRY0RBakFNQmdOVkhSTUJBZjhFQWpBQU1COEdBMVVkSXdRWU1CYUEKRk03Q2ZzYW51ZGNURkh0bm9leThoL1pRcUVack1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRRHF3cnNYSEIwVApHTjQwdlcvQmJsL1FuVmFKQUdYU2lTR0wwbHVud0dOd3FRRVY2RVhoM3lsR3drS1pCT2JRNHVxZ1F0Vmt5eFQvCnFEcUFERWh5QUx1VGtkREVxLzRsRmFqaDRlaWtHQkRVU3ZhNVNEb2NQUVhqa0JhUHJHMDQxTTh1dlFySFh3WGsKcEc5UGlmbExMTksyMzBzSGNPaS85MmVndmpEL3JIYkdTejV5cGpuWTZpMkJuSzZOcGpqWDRienEyTGl3bytOYQpLS2RIS3JPWXV3ajI0QVllWkRtWnVFZ3FBMXZlRUtSWXZaNVhSREVnL1lEckd1U2NUbkhLQkNPeHEzUVdSRkZTCm4xWG9hdEU1MkU5d3JDeVFsUXAzbi9KbEFqMmViRjh1SElVY1JFY1ZNSjZ5MU02YzlaTHZjdHh4NjA1SFJmeE0KSm1mazR0bkNLc3QvCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K[root@master sefe]#

[root@master sefe]#

- Export the certificate to a file:

# The first attempt below fails: the redirection has no target file (and the csr is named ccx, not john)

[root@master sefe]# kubectl get csr/john -o jsonpath='{.status.certificate}' | base64 -d >

-bash: syntax error near unexpected token `newline'

[root@master sefe]# kubectl get csr/ccx -o jsonpath='{.status.certificate}' | base64 -d > ccx.crt

[root@master sefe]# ls

ccx.crt ccx.csr ccx.key csr.yaml

[root@master sefe]#

- At this point, both the private key and the signed certificate (which carries the public key) are available

- ccx.key: private key

- ccx.crt: certificate (contains the public key)

[root@master sefe]# ls

ccx.crt ccx.csr ccx.key csr.yaml

[root@master sefe]#

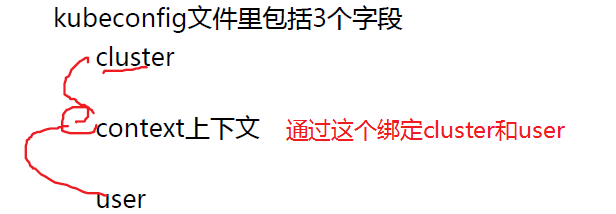

Create kubeconfig file

- Copy the CA certificate

[root@master sefe]# cp /etc/kubernetes/pki/ca.crt .

[root@master sefe]# ls

ca.crt ccx.crt ccx.csr ccx.key csr.yaml

[root@master sefe]# cat ca.crt

-----BEGIN CERTIFICATE-----

MIIC5zCCAc+gAwIBAgIBADANBgkqhkiG9w0BAQsFADAVMRMwEQYDVQQDEwprdWJl

cm5ldGVzMB4XDTIxMDcwMjAxMzUyOFoXDTMxMDYzMDAxMzUyOFowFTETMBEGA1UE

AxMKa3ViZXJuZXRlczCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBAPaA

t0hnK8BSad5VhNcT4skCYK95XVRwOCtIwojUsgSisO2Rk9yhma2yv8NDi9fbjsCK

hgxT2dd26garjjWq3WicfrScVnLWFWcPY8qrCxHc1al8y7kzbs/jIabElNnP1uEc

kBjEakL2r37G19zr3pOqGuKju9DTPling+F9OA4GiDVE/o65W3VPcxEfl85RzDJ8

iZDh/n3bKf+8FRu7BdwiX0btUlPr32Uq5tNW3lKyI68lJCBse/gfgbJdlPWf45IE

En7QEj6S2VmI0sHIP71CX6Zd0o7FSOEjfljFgn1uaqvymtQO7YXqonZ4vliCx09M

pOuFi6egauBCXeiSmKECAwEAAaNCMEAwDgYDVR0PAQH/BAQDAgKkMA8GA1UdEwEB

/wQFMAMBAf8wHQYDVR0OBBYEFM7CfsanudcTFHtnoey8h/ZQqEZrMA0GCSqGSIb3

DQEBCwUAA4IBAQBgPE6dyUXyt12IgrU4JLApBfcQns81OxUVVInLXE/hGBVUcF0j

wwqxpoEQTYp1iO+Ps9Y7CAk5Rw2o2rd6XRp5atYeeZ8WVyavWphl/91wguwV+voh

c00SfXLgTJdlfJcntMSsELZBE9vZkdUIkgBMyNzU1VM0vzrH5xXA/Lrf5oKRESue

6NbDg22bsBY92zH5Lg6a+ilJE5r+8/KREmTT/eeRfEuTR2s0HsxdItpCLzYvFwbr

+/jD+O8DydpQK1LVh4Do+vdT/VPXohMSNhzBSW9fux49eu3wlk9+/nfRthyh7N6G

4sMP48eZqBlNnIG4suMOAoTz7Ly9JgbRYwyY

-----END CERTIFICATE-----

[root@master sefe]#

- Set the cluster fields

kubectl config --kubeconfig=kc1 set-cluster cluster1 --server=https://192.168.59.142:6443 --certificate-authority=ca.crt --embed-certs=true

# --kubeconfig=kc1: kc1 is a custom file name

# set-cluster cluster1: cluster1 is a custom cluster name

# --server=https://192.168.59.142:6443: replace with your master IP

# --certificate-authority=ca.crt: the ca.crt file copied above

# --embed-certs=true: write the contents of the certificate into this kubeconfig file

[root@master sefe]# kubectl config --kubeconfig=kc1 set-cluster cluster1 --server=https://192.168.59.142:6443 --certificate-authority=ca.crt --embed-certs=true

Cluster "cluster1" set.

[root@master sefe]# ls

ca.crt ccx.crt ccx.csr ccx.key csr.yaml kc1

[root@master sefe]# cat kc1

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1EY3dNakF4TXpVeU9Gb1hEVE14TURZek1EQXhNelV5T0Zvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBUGFBCnQwaG5LOEJTYWQ1VmhOY1Q0c2tDWUs5NVhWUndPQ3RJd29qVXNnU2lzTzJSazl5aG1hMnl2OE5EaTlmYmpzQ0sKaGd4VDJkZDI2Z2FyampXcTNXaWNmclNjVm5MV0ZXY1BZOHFyQ3hIYzFhbDh5N2t6YnMvaklhYkVsTm5QMXVFYwprQmpFYWtMMnIzN0cxOXpyM3BPcUd1S2p1OURUUGxpbmcrRjlPQTRHaURWRS9vNjVXM1ZQY3hFZmw4NVJ6REo4CmlaRGgvbjNiS2YrOEZSdTdCZHdpWDBidFVsUHIzMlVxNXROVzNsS3lJNjhsSkNCc2UvZ2ZnYkpkbFBXZjQ1SUUKRW43UUVqNlMyVm1JMHNISVA3MUNYNlpkMG83RlNPRWpmbGpGZ24xdWFxdnltdFFPN1lYcW9uWjR2bGlDeDA5TQpwT3VGaTZlZ2F1QkNYZWlTbUtFQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZNN0Nmc2FudWRjVEZIdG5vZXk4aC9aUXFFWnJNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFCZ1BFNmR5VVh5dDEySWdyVTRKTEFwQmZjUW5zODFPeFVWVkluTFhFL2hHQlZVY0Ywagp3d3F4cG9FUVRZcDFpTytQczlZN0NBazVSdzJvMnJkNlhScDVhdFllZVo4V1Z5YXZXcGhsLzkxd2d1d1Yrdm9oCmMwMFNmWExnVEpkbGZKY250TVNzRUxaQkU5dlprZFVJa2dCTXlOelUxVk0wdnpySDV4WEEvTHJmNW9LUkVTdWUKNk5iRGcyMmJzQlk5MnpINUxnNmEraWxKRTVyKzgvS1JFbVRUL2VlUmZFdVRSMnMwSHN4ZEl0cENMell2RndicgorL2pEK084RHlkcFFLMUxWaDREbyt2ZFQvVlBYb2hNU05oekJTVzlmdXg0OWV1M3dsazkrL25mUnRoeWg3TjZHCjRzTVA0OGVacUJsTm5JRzRzdU1PQW9UejdMeTlKZ2JSWXd5WQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.59.142:6443

name: cluster1

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

[root@master sefe]#
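The effect of set-cluster with --embed-certs=true can be mirrored in a short Python sketch: the CA certificate is base64-encoded and stored under `certificate-authority-data` next to the server URL. This is a hedged illustration of the resulting file structure, not kubectl's implementation; `new_kubeconfig`, `set_cluster`, and the placeholder PEM bytes are hypothetical.

```python
import base64


def new_kubeconfig():
    """Return an empty kubeconfig structure, mirroring the kc1 file above."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [],
        "contexts": None,
        "current-context": "",
        "preferences": {},
        "users": None,
    }


def set_cluster(config, name, server, ca_pem):
    """Add or replace a cluster entry with the CA certificate embedded."""
    entry = {
        "name": name,
        "cluster": {
            "server": server,
            # With embedded certs, the PEM file is stored base64-encoded.
            "certificate-authority-data": base64.b64encode(ca_pem).decode("ascii"),
        },
    }
    others = [c for c in config["clusters"] if c["name"] != name]
    config["clusters"] = others + [entry]
    return config


# Placeholder bytes standing in for the real ca.crt contents.
ca_pem = b"-----BEGIN CERTIFICATE-----\n(placeholder)\n-----END CERTIFICATE-----\n"
kc1 = set_cluster(new_kubeconfig(), "cluster1", "https://192.168.59.142:6443", ca_pem)
print(kc1["clusters"][0]["name"], kc1["clusters"][0]["cluster"]["server"])
```

Because the certificate is embedded, the resulting file can be copied to another machine without also copying ca.crt.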

- Set the user fields

This step writes the user's credentials (client certificate and private key) into the file

# The command can be used as is

kubectl config --kubeconfig=kc1 set-credentials ccx --client-certificate=ccx.crt --client-key=ccx.key --embed-certs=true

[root@master sefe]# kubectl config --kubeconfig=kc1 set-credentials ccx --client-certificate=ccx.crt --client-key=ccx.key --embed-certs=true

User "ccx" set.

[root@master sefe]# cat kc1

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1EY3dNakF4TXpVeU9Gb1hEVE14TURZek1EQXhNelV5T0Zvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBUGFBCnQwaG5LOEJTYWQ1VmhOY1Q0c2tDWUs5NVhWUndPQ3RJd29qVXNnU2lzTzJSazl5aG1hMnl2OE5EaTlmYmpzQ0sKaGd4VDJkZDI2Z2FyampXcTNXaWNmclNjVm5MV0ZXY1BZOHFyQ3hIYzFhbDh5N2t6YnMvaklhYkVsTm5QMXVFYwprQmpFYWtMMnIzN0cxOXpyM3BPcUd1S2p1OURUUGxpbmcrRjlPQTRHaURWRS9vNjVXM1ZQY3hFZmw4NVJ6REo4CmlaRGgvbjNiS2YrOEZSdTdCZHdpWDBidFVsUHIzMlVxNXROVzNsS3lJNjhsSkNCc2UvZ2ZnYkpkbFBXZjQ1SUUKRW43UUVqNlMyVm1JMHNISVA3MUNYNlpkMG83RlNPRWpmbGpGZ24xdWFxdnltdFFPN1lYcW9uWjR2bGlDeDA5TQpwT3VGaTZlZ2F1QkNYZWlTbUtFQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZNN0Nmc2FudWRjVEZIdG5vZXk4aC9aUXFFWnJNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFCZ1BFNmR5VVh5dDEySWdyVTRKTEFwQmZjUW5zODFPeFVWVkluTFhFL2hHQlZVY0Ywagp3d3F4cG9FUVRZcDFpTytQczlZN0NBazVSdzJvMnJkNlhScDVhdFllZVo4V1Z5YXZXcGhsLzkxd2d1d1Yrdm9oCmMwMFNmWExnVEpkbGZKY250TVNzRUxaQkU5dlprZFVJa2dCTXlOelUxVk0wdnpySDV4WEEvTHJmNW9LUkVTdWUKNk5iRGcyMmJzQlk5MnpINUxnNmEraWxKRTVyKzgvS1JFbVRUL2VlUmZFdVRSMnMwSHN4ZEl0cENMell2RndicgorL2pEK084RHlkcFFLMUxWaDREbyt2ZFQvVlBYb2hNU05oekJTVzlmdXg0OWV1M3dsazkrL25mUnRoeWg3TjZHCjRzTVA0OGVacUJsTm5JRzRzdU1PQW9UejdMeTlKZ2JSWXd5WQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.59.142:6443

name: cluster1

contexts: null

current-context: ""

kind: Config

preferences: {}

users:

- name: ccx

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lRWXlYenJkTTVKYS9Ia3lQeGhNME5OekFOQmdrcWhraUc5dzBCQVFzRkFEQVYKTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1CNFhEVEl4TVRFd016QTRNamd6T1ZvWERUSXlNVEV3TXpBNApNamd6T1Zvd0lERVFNQTRHQTFVRUNoTUhZMnRoTWpBeU1URU1NQW9HQTFVRUF4TURZMk40TUlJQklqQU5CZ2txCmhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXbEgzaFVQdEYKUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeStSMXRrYkI1ZwpidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNTcwVHFuTHZrClVWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4WEovUVZCRFQKNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm1nRkR1b1d0dwpjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJREFRQUJvMFl3ClJEQVRCZ05WSFNVRUREQUtCZ2dyQmdFRkJRY0RBakFNQmdOVkhSTUJBZjhFQWpBQU1COEdBMVVkSXdRWU1CYUEKRk03Q2ZzYW51ZGNURkh0bm9leThoL1pRcUVack1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRRHF3cnNYSEIwVApHTjQwdlcvQmJsL1FuVmFKQUdYU2lTR0wwbHVud0dOd3FRRVY2RVhoM3lsR3drS1pCT2JRNHVxZ1F0Vmt5eFQvCnFEcUFERWh5QUx1VGtkREVxLzRsRmFqaDRlaWtHQkRVU3ZhNVNEb2NQUVhqa0JhUHJHMDQxTTh1dlFySFh3WGsKcEc5UGlmbExMTksyMzBzSGNPaS85MmVndmpEL3JIYkdTejV5cGpuWTZpMkJuSzZOcGpqWDRienEyTGl3bytOYQpLS2RIS3JPWXV3ajI0QVllWkRtWnVFZ3FBMXZlRUtSWXZaNVhSREVnL1lEckd1U2NUbkhLQkNPeHEzUVdSRkZTCm4xWG9hdEU1MkU5d3JDeVFsUXAzbi9KbEFqMmViRjh1SElVY1JFY1ZNSjZ5MU02YzlaTHZjdHh4NjA1SFJmeE0KSm1mazR0bkNLc3QvCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXbEgzaFVQdEZQejNhZDNkTk1DWEc0RmtFCldJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeStSMXRrYkI1Z2J0Nm45TXAvaWxRRzdEejMKYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNTcwVHFuTHZrVVZWdEpNZGJCNXZoTVFrcAp0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4WEovUVZCRFQ2SWkwVTEzb0ZUUS8yVFZ5CkVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm1nRkR1b1d0d2Nzc1JIU013MnNwVzltMmwKM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJREFRQUJBb0lCQUVqR29SNVJoUGtRd0JrOQpJblJkTWVxeU05NEZ3TmVranNjTzJ5ak03QlFHSTkwOXl4RDlTZTdEcnYrbGRMSzkvNi9XTXA5dk5maVBReENmCnNZWDNKdCtzSWJNNVFiaHJwWXZjbmdjdVhPRy9DaFNSNEhpMjlYb0phZE14a3FZMnRNMmp1eVpCcnU2MnJ5eU0Kbmo3WUlYdnhEaUNuR0c1ZXNXdVdQb3RqUEhTYm53L0JOKzgwaFJYSitrUHlyK2pYMjVtRTlUcTh0emtEQUdXWQpMd0psb0dQenhPdG9lREJjb1hNWkgyWlJhRmhjd1VWRkJmcUlaMFY4UzZLVDkyTkYwVzVWdUlWR1RMNDJ0Mjl5CjBtQVdqM3ZrdDcyajZhUk9Wd0pkemQwVU43c3p6SFJJQjhhNTJCcTZybzl6UE1NTDRRcmtnamFSeXRsczduZ2kKZFp0SGY0RUNnWUVBK1ZTa1VvR2pZdWZ4eHQ5RjlKeVFsWVZUTmNlOTM4cXBQSnUrVDdEUVVLZ0V2MmVZMXF3cQpvTWJqb1JTVGpUbUFUZU9tWWEzS2d5SWR6VkszT000MWprcU1remlwb3VyK3NwSVJhbVBLQ0F2clo5Yi9rbm5lCnNrcTZweisxUjJwYVVqaEozZGtkZXBEUE05cmtxWjRQTG9mVkczT3JOZ1VJR3JhaGJ1TmhKN0VDZ1lFQTdsa0oKNlp3d2pKWENldGlxaXRZaHV1QWtwZXlTUGprWHMyaFQrQk0vQUY0SnJncGR1Y2E5VlhNQUlDSys2SlJSdDE5cAovaXpQN3Jucm1hWmNaM0cxWmdkUTFGZytTSkxxNFMzTk8yejRJaHVFWlYwSy91YjdxenZRdDBlSlVEMTdqNFQrCkxVRnhnZmE4WklybzhDWjIreStYazh5Nlh0Slp5Qy9aNU8ydElyVUNnWUVBMFhYVEttRXdjcm5xdXlqOWF4ZFEKdTl3cTRJWnlOQnpjZWtkWTVUZmtlYTM5ZHhOQUtqQ3ZDeXlyTkxyRmpxSWM4TkpzQjZscDlTcG5JUVA1V3VhWgp4WFZKamJEUGlrZWpPejlORkRUTEdHRnpIV1JZaHFTTmV2a2V2N3pjdlNkU3c3bjRERUVHNjkzVnhIbURHaC9vCkh5NEwwU2ttVDVhQWpYaWFQRDhYY3JFQ2dZQTlkWUlqMWQyQzhyN3lORnBOY0lmRUN6WUgvdWQ2MmZmdGtCSk8KM28rWlJhWlRWV0x6bTNhSXlSMllLNzEwZFlKWXVXYTRYcy9ESy9lL1ovRmR6eWxLUk1xbjVwVXcyNGxyUlFjdApzcHlORnZGZHZjOHZDVnFOdmQvRTB0SnFlV0FhRXQ0RHgyTkFjdUlEUHZwdnFrdDEyOERISUx4UjVRVzNvL2NZCm05elFIUUtCZ0dNZkVKUnh4Sk51ZllmcXN
Ma1QwQktWTEhCMDZsUHAxSGlLWVZuMXJkMmNZRkl2M3VNR2RrUXYKKzlYNVFIdkpsaWYzdUg1Wnp0TnZFdEVEY3lzcjdWN1RzNXo2Z1BGcUdrdDlKS1o2c1ZkcWNuYjRoSlhONXQzaQpBVWZtWkdrN0pFeVlSRXdPUm1jM3FBNld2RG9iUWtINGhXeXg4azN5R3NwTmNzcHgzd2ZGCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

[root@master sefe]#
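The `certificate-authority-data`, `client-certificate-data` and `client-key-data` values in the kc1 output above are base64-encoded PEM blocks. As a minimal sketch, the snippet below decodes only the first 36 characters of the `certificate-authority-data` value shown above, which is enough to reveal the PEM header. The variable names `prefix` and `decoded` are illustrative only.

```shell
#!/usr/bin/env bash
set -eu

# First 36 characters of certificate-authority-data, copied from the kc1 output.
prefix='LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0t'

# 36 base64 characters decode to exactly 27 bytes: the PEM header line.
decoded="$(printf '%s' "$prefix" | base64 --decode)"

printf '%s\n' "$decoded"
# prints: -----BEGIN CERTIFICATE-----
```

Decoding the complete `client-certificate-data` value the same way and piping it through `openssl x509 -noout -subject` should show the certificate subject, which is where the API server takes the user name from.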

- Set context field

The previous steps defined the cluster and the user. This step defines a context, which binds a cluster and a user together, along with a default namespace.

# The command below can be run as-is; nothing needs to be modified

kubectl config --kubeconfig=kc1 set-context context1 --cluster=cluster1 --namespace=default --user=ccx

[root@master sefe]# kubectl config --kubeconfig=kc1 set-context context1 --cluster=cluster1 --namespace=default --user=ccx

Context "context1" created.

[root@master sefe]# cat kc1

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1EY3dNakF4TXpVeU9Gb1hEVE14TURZek1EQXhNelV5T0Zvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBUGFBCnQwaG5LOEJTYWQ1VmhOY1Q0c2tDWUs5NVhWUndPQ3RJd29qVXNnU2lzTzJSazl5aG1hMnl2OE5EaTlmYmpzQ0sKaGd4VDJkZDI2Z2FyampXcTNXaWNmclNjVm5MV0ZXY1BZOHFyQ3hIYzFhbDh5N2t6YnMvaklhYkVsTm5QMXVFYwprQmpFYWtMMnIzN0cxOXpyM3BPcUd1S2p1OURUUGxpbmcrRjlPQTRHaURWRS9vNjVXM1ZQY3hFZmw4NVJ6REo4CmlaRGgvbjNiS2YrOEZSdTdCZHdpWDBidFVsUHIzMlVxNXROVzNsS3lJNjhsSkNCc2UvZ2ZnYkpkbFBXZjQ1SUUKRW43UUVqNlMyVm1JMHNISVA3MUNYNlpkMG83RlNPRWpmbGpGZ24xdWFxdnltdFFPN1lYcW9uWjR2bGlDeDA5TQpwT3VGaTZlZ2F1QkNYZWlTbUtFQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZNN0Nmc2FudWRjVEZIdG5vZXk4aC9aUXFFWnJNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFCZ1BFNmR5VVh5dDEySWdyVTRKTEFwQmZjUW5zODFPeFVWVkluTFhFL2hHQlZVY0Ywagp3d3F4cG9FUVRZcDFpTytQczlZN0NBazVSdzJvMnJkNlhScDVhdFllZVo4V1Z5YXZXcGhsLzkxd2d1d1Yrdm9oCmMwMFNmWExnVEpkbGZKY250TVNzRUxaQkU5dlprZFVJa2dCTXlOelUxVk0wdnpySDV4WEEvTHJmNW9LUkVTdWUKNk5iRGcyMmJzQlk5MnpINUxnNmEraWxKRTVyKzgvS1JFbVRUL2VlUmZFdVRSMnMwSHN4ZEl0cENMell2RndicgorL2pEK084RHlkcFFLMUxWaDREbyt2ZFQvVlBYb2hNU05oekJTVzlmdXg0OWV1M3dsazkrL25mUnRoeWg3TjZHCjRzTVA0OGVacUJsTm5JRzRzdU1PQW9UejdMeTlKZ2JSWXd5WQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.59.142:6443

name: cluster1

contexts:

- context:

cluster: cluster1

namespace: default

user: ccx

name: context1

current-context: ""

kind: Config

preferences: {}

users:

- name: ccx

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lRWXlYenJkTTVKYS9Ia3lQeGhNME5OekFOQmdrcWhraUc5dzBCQVFzRkFEQVYKTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1CNFhEVEl4TVRFd016QTRNamd6T1ZvWERUSXlNVEV3TXpBNApNamd6T1Zvd0lERVFNQTRHQTFVRUNoTUhZMnRoTWpBeU1URU1NQW9HQTFVRUF4TURZMk40TUlJQklqQU5CZ2txCmhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXbEgzaFVQdEYKUHozYWQzZE5NQ1hHNEZrRVdJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeStSMXRrYkI1ZwpidDZuOU1wL2lsUUc3RHozYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNTcwVHFuTHZrClVWVnRKTWRiQjV2aE1Ra3B0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4WEovUVZCRFQKNklpMFUxM29GVFEvMlRWeUVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm1nRkR1b1d0dwpjc3NSSFNNdzJzcFc5bTJsM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJREFRQUJvMFl3ClJEQVRCZ05WSFNVRUREQUtCZ2dyQmdFRkJRY0RBakFNQmdOVkhSTUJBZjhFQWpBQU1COEdBMVVkSXdRWU1CYUEKRk03Q2ZzYW51ZGNURkh0bm9leThoL1pRcUVack1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRRHF3cnNYSEIwVApHTjQwdlcvQmJsL1FuVmFKQUdYU2lTR0wwbHVud0dOd3FRRVY2RVhoM3lsR3drS1pCT2JRNHVxZ1F0Vmt5eFQvCnFEcUFERWh5QUx1VGtkREVxLzRsRmFqaDRlaWtHQkRVU3ZhNVNEb2NQUVhqa0JhUHJHMDQxTTh1dlFySFh3WGsKcEc5UGlmbExMTksyMzBzSGNPaS85MmVndmpEL3JIYkdTejV5cGpuWTZpMkJuSzZOcGpqWDRienEyTGl3bytOYQpLS2RIS3JPWXV3ajI0QVllWkRtWnVFZ3FBMXZlRUtSWXZaNVhSREVnL1lEckd1U2NUbkhLQkNPeHEzUVdSRkZTCm4xWG9hdEU1MkU5d3JDeVFsUXAzbi9KbEFqMmViRjh1SElVY1JFY1ZNSjZ5MU02YzlaTHZjdHh4NjA1SFJmeE0KSm1mazR0bkNLc3QvCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBNkNObzJWVWtTbFQ5bTRvR3Z6cTIyLzRXbEgzaFVQdEZQejNhZDNkTk1DWEc0RmtFCldJVG9nRnRISXlyWFc4TlRiZGcxZjN5dzA4aHNwZi9na20vQUYxeStSMXRrYkI1Z2J0Nm45TXAvaWxRRzdEejMKYjF2bi9XVC9ieldHaWV3bTFFWEk4OFpaeEFOMllrZmFkdGpCYlhRNTcwVHFuTHZrVVZWdEpNZGJCNXZoTVFrcAp0TVdvL3ovN2EweGYvbGYxOUgxQURWbXZsNVIvbGU4QVp6RXEwUWQ4WEovUVZCRFQ2SWkwVTEzb0ZUUS8yVFZ5CkVJOG5XU2N4K3NxSlBVUXpWL1dwZmJQOHl1SHloV2xNZHZ3RjJnbm1nRkR1b1d0d2Nzc1JIU013MnNwVzltMmwKM1UwYjczaGZsUmtpaDgyQ1Z5M1owK3ZrTFFkVHJOcWtXcE9TSlFJREFRQUJBb0lCQUVqR29SNVJoUGtRd0JrOQpJblJkTWVxeU05NEZ3TmVranNjTzJ5ak03QlFHSTkwOXl4RDlTZTdEcnYrbGRMSzkvNi9XTXA5dk5maVBReENmCnNZWDNKdCtzSWJNNVFiaHJwWXZjbmdjdVhPRy9DaFNSNEhpMjlYb0phZE14a3FZMnRNMmp1eVpCcnU2MnJ5eU0Kbmo3WUlYdnhEaUNuR0c1ZXNXdVdQb3RqUEhTYm53L0JOKzgwaFJYSitrUHlyK2pYMjVtRTlUcTh0emtEQUdXWQpMd0psb0dQenhPdG9lREJjb1hNWkgyWlJhRmhjd1VWRkJmcUlaMFY4UzZLVDkyTkYwVzVWdUlWR1RMNDJ0Mjl5CjBtQVdqM3ZrdDcyajZhUk9Wd0pkemQwVU43c3p6SFJJQjhhNTJCcTZybzl6UE1NTDRRcmtnamFSeXRsczduZ2kKZFp0SGY0RUNnWUVBK1ZTa1VvR2pZdWZ4eHQ5RjlKeVFsWVZUTmNlOTM4cXBQSnUrVDdEUVVLZ0V2MmVZMXF3cQpvTWJqb1JTVGpUbUFUZU9tWWEzS2d5SWR6VkszT000MWprcU1remlwb3VyK3NwSVJhbVBLQ0F2clo5Yi9rbm5lCnNrcTZweisxUjJwYVVqaEozZGtkZXBEUE05cmtxWjRQTG9mVkczT3JOZ1VJR3JhaGJ1TmhKN0VDZ1lFQTdsa0oKNlp3d2pKWENldGlxaXRZaHV1QWtwZXlTUGprWHMyaFQrQk0vQUY0SnJncGR1Y2E5VlhNQUlDSys2SlJSdDE5cAovaXpQN3Jucm1hWmNaM0cxWmdkUTFGZytTSkxxNFMzTk8yejRJaHVFWlYwSy91YjdxenZRdDBlSlVEMTdqNFQrCkxVRnhnZmE4WklybzhDWjIreStYazh5Nlh0Slp5Qy9aNU8ydElyVUNnWUVBMFhYVEttRXdjcm5xdXlqOWF4ZFEKdTl3cTRJWnlOQnpjZWtkWTVUZmtlYTM5ZHhOQUtqQ3ZDeXlyTkxyRmpxSWM4TkpzQjZscDlTcG5JUVA1V3VhWgp4WFZKamJEUGlrZWpPejlORkRUTEdHRnpIV1JZaHFTTmV2a2V2N3pjdlNkU3c3bjRERUVHNjkzVnhIbURHaC9vCkh5NEwwU2ttVDVhQWpYaWFQRDhYY3JFQ2dZQTlkWUlqMWQyQzhyN3lORnBOY0lmRUN6WUgvdWQ2MmZmdGtCSk8KM28rWlJhWlRWV0x6bTNhSXlSMllLNzEwZFlKWXVXYTRYcy9ESy9lL1ovRmR6eWxLUk1xbjVwVXcyNGxyUlFjdApzcHlORnZGZHZjOHZDVnFOdmQvRTB0SnFlV0FhRXQ0RHgyTkFjdUlEUHZwdnFrdDEyOERISUx4UjVRVzNvL2NZCm05elFIUUtCZ0dNZkVKUnh4Sk51ZllmcXN
Ma1QwQktWTEhCMDZsUHAxSGlLWVZuMXJkMmNZRkl2M3VNR2RrUXYKKzlYNVFIdkpsaWYzdUg1Wnp0TnZFdEVEY3lzcjdWN1RzNXo2Z1BGcUdrdDlKS1o2c1ZkcWNuYjRoSlhONXQzaQpBVWZtWkdrN0pFeVlSRXdPUm1jM3FBNld2RG9iUWtINGhXeXg4azN5R3NwTmNzcHgzd2ZGCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

[root@master sefe]#

- Set default context

Copy the context name from line 12 (context1) into the empty quotes ("") on line 13, so that current-context points to context1

[root@master sefe]# cat -n kc1 | grep context

7 contexts:

8 - context:

12 name: context1

13 current-context: ""

[root@master sefe]# vi kc1

[root@master sefe]# cat -n kc1 | grep context

7 contexts:

8 - context:

12 name: context1

13 current-context: "context1"

[root@master sefe]#
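The manual edit above can also be scripted. The sketch below works on a scratch file that stands in for kc1 (it contains only the relevant lines, and the path comes from `mktemp`, so both are assumptions for illustration) and uses `sed` to fill in the empty `current-context` value. On a real kubeconfig, `kubectl config --kubeconfig=kc1 use-context context1` makes the same change without an editor.

```shell
#!/usr/bin/env bash
set -eu

# Hypothetical scratch file standing in for kc1 (relevant lines only).
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
contexts:
- context:
    cluster: cluster1
    namespace: default
    user: ccx
  name: context1
current-context: ""
EOF

# Replace the empty value with the context name.
# Writing to a second file avoids the differing "sed -i" syntax across platforms.
sed 's/^current-context: ""$/current-context: "context1"/' "$cfg" > "$cfg.new"
mv "$cfg.new" "$cfg"

grep '^current-context' "$cfg"
# prints: current-context: "context1"
```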

- The kubeconfig file is now complete

User authorization

- At this point, we can copy the file to another host for testing, or test it on the master itself. The API server now identifies the request as coming from the user ccx, but that user has no permissions yet, so the request is rejected

[root@master sefe]# kubectl --kubeconfig=kc1 get nodes

Error from server (Forbidden): nodes is forbidden: User "ccx" cannot list resource "nodes" in API group "" at the cluster scope

[root@master sefe]#

[root@master sefe]# scp kc1 192.168.59.151:~

root@192.168.59.151's password:

kc1 100% 5495 3.0MB/s 00:00

[root@master sefe]#

# On the client

[root@master2 ~]# kubectl --kubeconfig=kc1 get nodes

Error from server (Forbidden): nodes is forbidden: User "ccx" cannot list resource "nodes" in API group "" at the cluster scope

[root@master2 ~]#

- Now authorize the user ccx. Authorizing here means creating a cluster role binding

The binding grants permissions to the user ccx. Because kc1 contains the client certificate and private key of ccx, anyone who uses kc1 acts with the permissions of ccx

kubectl create clusterrolebinding test1 --clusterrole=cluster-admin --user=ccx

# clusterrolebinding test1: test1 is the name of the binding

# --clusterrole=cluster-admin: grant the permissions of the cluster-admin cluster role

# --user=ccx: the user who receives the permissions

[root@master sefe]# kubectl create clusterrolebinding test1 --clusterrole=cluster-admin --user=ccx

clusterrolebinding.rbac.authorization.k8s.io/test1 created

[root@master sefe]#

[root@master sefe]# kubectl get clusterrolebindings.rbac.authorization.k8s.io test1

NAME ROLE AGE

test1 ClusterRole/cluster-admin 2m43s

[root@master sefe]#
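The same binding can also be written as a manifest, which is easier to review and keep in version control. The sketch below only writes the manifest to a scratch file and prints it. The file path is a hypothetical `mktemp` location, and the apply step is left as a comment because it needs a live cluster and admin rights.

```shell
#!/usr/bin/env bash
set -eu

# Hypothetical scratch path for the manifest.
manifest="$(mktemp)"

# Declarative form of:
#   kubectl create clusterrolebinding test1 --clusterrole=cluster-admin --user=ccx
cat > "$manifest" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: test1
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: ccx
EOF

cat "$manifest"

# To create the binding from this file on a real cluster:
# kubectl apply -f "$manifest"
```

cluster-admin grants full access to the cluster, which is convenient for this walkthrough. For a real user, a narrower cluster role such as the built-in `view` could be substituted in `roleRef.name`.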

- Now test again

With the permission granted, the node list is now returned on both the master and the client

[root@master sefe]# kubectl --kubeconfig=kc1 get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[root@master sefe]#

[root@master2 ~]# kubectl --kubeconfig=kc1 get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 114d v1.21.0

node1 Ready <none> 114d v1.21.0

node2 Ready <none> 114d v1.21.0

[root@master2 ~]#

- Then delete the clusterrolebinding. The request is rejected again because ccx no longer has the permission

[root@master sefe]# kubectl delete clusterrolebindings.rbac.authorization.k8s.io test1

clusterrolebinding.rbac.authorization.k8s.io "test1" deleted

[root@master sefe]#

[root@master sefe]# kubectl --kubeconfig=kc1 get nodes

Error from server (Forbidden): nodes is forbidden: User "ccx" cannot list resource "nodes" in API group "" at the cluster scope

[root@master sefe]#

Verify kubeconfig file

- Because the binding was deleted above, create it again first

[root@master sefe]# kubectl create clusterrolebinding test1 --clusterrole=cluster-admin --user=ccx

clusterrolebinding.rbac.authorization.k8s.io/test1 created

[root@master sefe]#

- Check whether ccx is allowed to list pods in the current namespace

[root@master sefe]# kubectl auth can-i list pods --as ccx

yes

[root@master sefe]#

- Check whether ccx is allowed to list pods in the kube-system namespace

[root@master sefe]# kubectl auth can-i list pods -n kube-system --as ccx

yes

[root@master sefe]#

# With cluster-admin bound, the answer should be yes in every namespace

[root@master sefe]# kubectl auth can-i list pods -n ds --as ccx

yes

[root@master sefe]#

- The kubeconfig file can be used for these operations only when the answer is yes.
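The per-namespace checks above can be wrapped in a small helper so that several namespaces are tested in one call. This is a sketch only: the function name `check_list_pods` is hypothetical, and it assumes it is run with an admin kubeconfig that is allowed to impersonate users via `--as`, as in the commands above.

```shell
#!/usr/bin/env bash

# check_list_pods USER NAMESPACE...
# Prints "<namespace>: <answer>" for each namespace, where the answer is
# whatever "kubectl auth can-i" prints (yes or no).
check_list_pods() {
  local user="$1"
  shift
  local ns
  for ns in "$@"; do
    printf '%s: %s\n' "$ns" \
      "$(kubectl auth can-i list pods -n "$ns" --as "$user" 2>/dev/null)"
  done
}

# Example call on the master (needs a reachable cluster):
# check_list_pods ccx default kube-system ds
```

The function only defines the check; nothing runs until it is called with a user name and one or more namespaces.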