preface

I find multithreading the hardest part of Java SE. I have studied it before, but when a real requirement called for multithreading I did not know where to start, because I lacked a deep understanding of how threads work, when each multithreading API applies, and how a multithreaded program actually runs. This article analyzes Java multithreading through examples, diagrams, and source code.

The article is long, so feel free to jump to specific chapters. I recommend typing out all of the multithreaded code by hand. Do not simply trust the conclusions you read; only what you have coded and run yourself is truly yours.

What is java multithreading?

Process and thread

process

- When a program runs, it starts a process, for example QQ or Word

- A program consists of instructions and data. To run it, the instructions are loaded and executed by the CPU and the data is loaded into memory. While the instructions run, the CPU may also use the disk, the network, and other devices

thread

- A process can contain multiple threads

- A thread is a stream of instructions and the smallest unit of CPU scheduling; the CPU executes its instructions one by one

Parallelism and concurrency

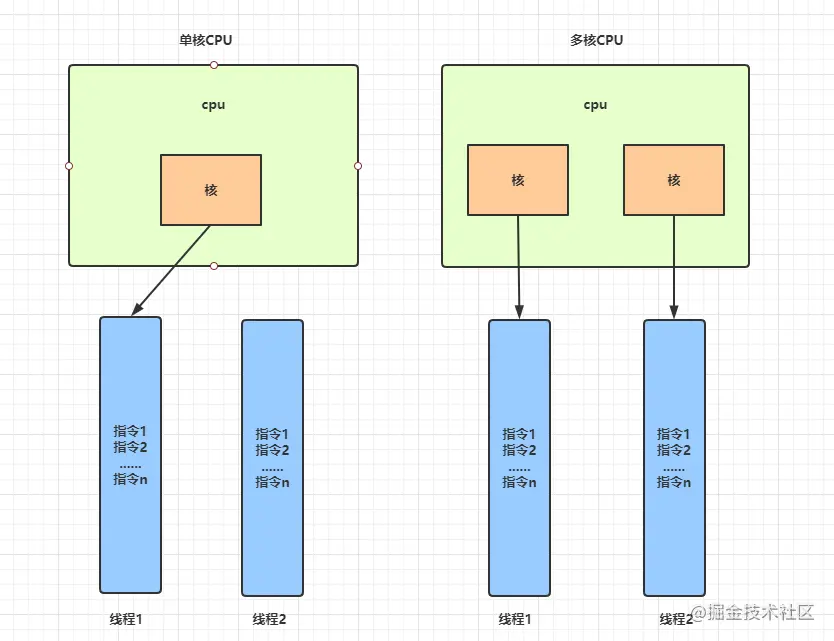

Concurrency: when a single-core CPU runs multiple threads, it switches between them quickly in time slices, so the threads take turns on the CPU

Parallelism: when a multi-core CPU runs multiple threads, the threads really do run at the same time on different cores

java provides rich APIs to support multithreading.

Why multithreading?

Anything a multithreaded program does could also be done with a single thread, and a single thread works fine. So why does Java introduce multithreading?

Benefits of multithreading:

- The program runs faster! Faster! Faster!

- It makes full use of CPU resources. Almost no production CPU is single-core today, and multithreading lets a program exploit the power of a multi-core CPU

Where is multithreading difficult?

A single-threaded program has only one line of execution. The flow is easy to understand, and you can trace the execution of the code clearly in your head

A multithreaded program has several lines of execution that usually interact and need to communicate with each other. The main difficulties are as follows

- The execution result of multithreaded code is nondeterministic because it depends on CPU scheduling

- Thread safety issues

- Thread resources are precious, so threads are usually managed through a thread pool, and the pool's parameters must be chosen carefully

- Multithreaded execution is dynamic and simultaneous, which makes it difficult to trace

- Multithreading ultimately relies on the operating system, so the underlying source code is hard to read

Sometimes I wish I could turn into a byte and travel through the server to see what is really going on, like in Wreck-It Ralph (if you have not seen the film, watch it; it is wildly imaginative).

Basic use of java multithreading

Define tasks, create and run threads

Task: the execution body of the thread. That is, our core code logic

Define task

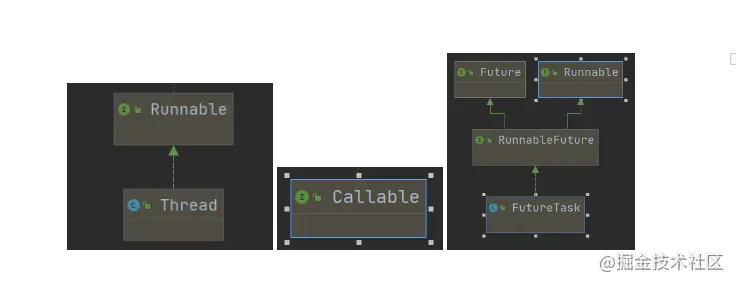

- Extend the Thread class (task and thread are combined)

- Implement the Runnable interface (task and thread are separated)

- Implement the Callable interface (run the task through FutureTask)

Limitations of defining a task by extending Thread

- The task logic lives in the run method of the Thread subclass, and Java's single inheritance prevents that class from extending anything else

- When several threads are created, each Thread object has its own member variables, so nothing is shared unless the fields are made static

Runnable and Callable address the limitations of Thread

However, Runnable has the following limitations compared with Callable

- The task has no return value

- The task cannot throw a checked exception to the caller

The following code defines tasks in each of these ways

@Slf4j

class T extends Thread {

@Override

public void run() {

log.info("I am a task that extends Thread");

}

}

@Slf4j

class R implements Runnable {

@Override

public void run() {

log.info("I am a task that implements Runnable");

}

}

@Slf4j

class C implements Callable<String> {

@Override

public String call() throws Exception {

log.info("I am a task that implements Callable");

return "success";

}

}

How threads are created

- Create a Thread directly through the Thread class

- Create threads from within the thread pool

How to start a thread

- Call the thread's start() method

// Start the task that inherits the Thread class

new T().start();

// Start a task defined as an anonymous subclass of Thread

Thread t = new Thread(){

@Override

public void run() {

log.info("I am a task in an anonymous subclass of Thread");

}

};

t.start();

// Start the task that implements the Runnable interface

new Thread(new R()).start();

// Start a task defined as an anonymous Runnable implementation

new Thread(new Runnable() {

@Override

public void run() {

log.info("I am a task in an anonymous Runnable implementation");

}

}).start();

// The same kind of Runnable task, simplified with a lambda

new Thread(() -> log.info("I am a Runnable task written as a lambda")).start();

// Start a task that implements the Callable interface. Wrapped in a FutureTask, it lets you obtain the task's result

FutureTask<String> target = new FutureTask<>(new C());

new Thread(target).start();

log.info(target.get());
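The list above mentions creating threads through a thread pool, and the limitations list says a Runnable can neither return a value nor throw to its caller. The following is a minimal sketch of both points, not code from the original examples: the class name `PoolCallableSketch` and its helper methods are made up for illustration, while `Executors.newFixedThreadPool`, `submit`, and `Future.get` are standard JDK calls.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo class, not part of the article's code.
class PoolCallableSketch {

    // Submits a Callable to a small pool and returns its result.
    static String runOk() throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Future<String> future = pool.submit(() -> "success");
            return future.get(); // blocks until the task has finished
        } finally {
            pool.shutdown();
        }
    }

    // A Callable may throw; the caller sees the exception wrapped in ExecutionException.
    static String runFailing() throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Callable<String> failing = () -> {
                throw new IllegalStateException("boom");
            };
            Future<String> future = pool.submit(failing);
            try {
                return future.get();
            } catch (ExecutionException e) {
                return "caught: " + e.getCause().getMessage();
            }
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(runOk());
        System.out.println(runFailing());
    }
}
```

The pool owns the threads here, so the calling code only hands over tasks and reads results through the returned Future.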

The class diagram of the above thread related classes is as follows

Context switching

On a multi-core CPU, threads can run in parallel. When there are more threads than cores, each core schedules several threads concurrently, which is where context switching comes in

When the CPU executes a thread's task, it allocates time slices to the thread. A context switch occurs in the following cases.

- The thread has used up its CPU time slice

- Garbage collection

- The thread calls sleep, yield, wait, join, or park, or waits for a synchronized or Lock lock

When a context switch occurs, the operating system saves the state of the current thread and restores the state of another thread. The JVM keeps a small memory area called the program counter, which records the instruction the thread is currently executing. It is private to each thread.

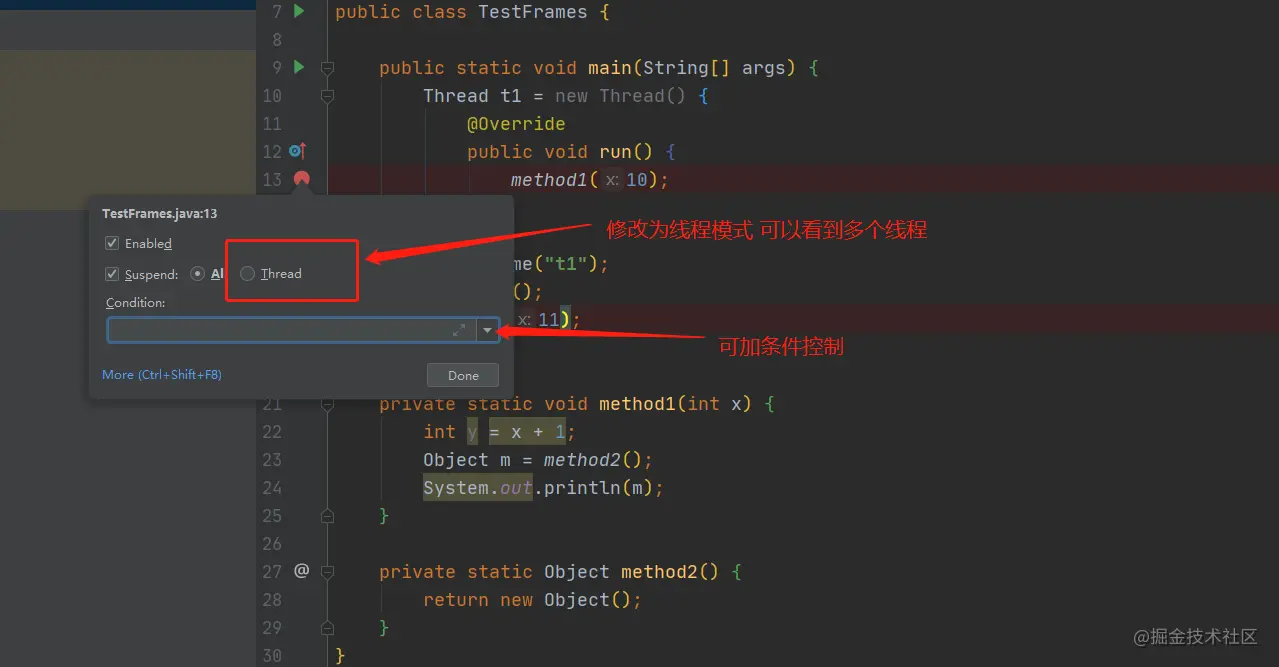

When you set a breakpoint in IntelliJ IDEA, its suspend mode can be set to Thread, and the debugger then shows how each thread's stack frames change

Thread yielding - yield() & thread priority

The yield() method hints that the running thread is willing to give up the CPU: the thread goes back to the ready state and competes for a time slice again. Whether it gets the next time slice is up to the scheduler, which is free to ignore the hint.

The code is as follows

// Definition of method

public static native void yield();

Runnable r1 = () -> {

int count = 0;

for (;;){

log.info("---- 1>" + count++);

}

};

Runnable r2 = () -> {

int count = 0;

for (;;){

Thread.yield();

log.info(" ---- 2>" + count++);

}

};

Thread t1 = new Thread(r1,"t1");

Thread t2 = new Thread(r2,"t2");

t1.start();

t2.start();

// Operation results

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129504

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129505

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129506

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129507

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129508

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129509

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129510

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129511

11:49:15.796 [t1] INFO thread.TestYield - ---- 1>129512

11:49:15.798 [t2] INFO thread.TestYield - ---- 2>293

11:49:15.798 [t1] INFO thread.TestYield - ---- 1>129513

11:49:15.798 [t1] INFO thread.TestYield - ---- 1>129514

11:49:15.798 [t1] INFO thread.TestYield - ---- 1>129515

11:49:15.798 [t1] INFO thread.TestYield - ---- 1>129516

11:49:15.798 [t1] INFO thread.TestYield - ---- 1>129517

11:49:15.798 [t1] INFO thread.TestYield - ---- 1>129518

As the output shows, thread t2 calls yield() on every iteration, so t1 gets significantly more chances to run than t2.

Thread priority

A thread's priority is an integer from 1 to 10. The default is NORM_PRIORITY (5)

When the CPU is busy, threads with higher priority tend to get more time slices

When the CPU is relatively idle, the priority setting has almost no effect

public final static int MIN_PRIORITY = 1;

public final static int NORM_PRIORITY = 5;

public final static int MAX_PRIORITY = 10;

// Definition of method

public final void setPriority(int newPriority) {

}

When the CPU is busy

Runnable r1 = () -> {

int count = 0;

for (;;){

log.info("---- 1>" + count++);

}

};

Runnable r2 = () -> {

int count = 0;

for (;;){

log.info(" ---- 2>" + count++);

}

};

Thread t1 = new Thread(r1,"t1");

Thread t2 = new Thread(r2,"t2");

t1.setPriority(Thread.NORM_PRIORITY);

t2.setPriority(Thread.MAX_PRIORITY);

t1.start();

t2.start();

// Possible operating results

11:59:00.696 [t1] INFO thread.TestYieldPriority - ---- 1>44102

11:59:00.696 [t2] INFO thread.TestYieldPriority - ---- 2>135903

11:59:00.696 [t2] INFO thread.TestYieldPriority - ---- 2>135904

11:59:00.696 [t2] INFO thread.TestYieldPriority - ---- 2>135905

11:59:00.696 [t2] INFO thread.TestYieldPriority - ---- 2>135906

When the CPU is idle

Runnable r1 = () -> {

int count = 0;

for (int i = 0; i < 10; i++) {

log.info("---- 1>" + count++);

}

};

Runnable r2 = () -> {

int count = 0;

for (int i = 0; i < 10; i++) {

log.info(" ---- 2>" + count++);

}

};

Thread t1 = new Thread(r1,"t1");

Thread t2 = new Thread(r2,"t2");

t1.setPriority(Thread.MIN_PRIORITY);

t2.setPriority(Thread.MAX_PRIORITY);

t1.start();

t2.start();

// Possible output: thread t1 has the lower priority but still runs first

12:01:09.916 [t1] INFO thread.TestYieldPriority - ---- 1>7

12:01:09.916 [t1] INFO thread.TestYieldPriority - ---- 1>8

12:01:09.916 [t1] INFO thread.TestYieldPriority - ---- 1>9

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>2

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>3

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>4

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>5

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>6

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>7

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>8

12:01:09.916 [t2] INFO thread.TestYieldPriority - ---- 2>9

Daemon thread

By default, a Java process does not exit until all of its threads have finished. Daemon threads are the exception: once all non-daemon threads have ended, any daemon threads still running are terminated, even if they have not finished their work.

Threads are non-daemon by default (a new thread inherits the daemon status of the thread that creates it, and the main thread is non-daemon).

The garbage collection thread is a typical daemon thread

// Definition of method

public final void setDaemon(boolean on) {

}

Thread thread = new Thread(() -> {

while (true) {

}

});

// Set to true to mark the thread as a daemon thread. When the main thread ends, the daemon thread ends as well.

// The default is false: when the main thread ends, this thread keeps running and the program does not stop

thread.setDaemon(true);

thread.start();

log.info("end");
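One detail the example above relies on is that `setDaemon(true)` is called before `start()`. The sketch below, using a made-up class name `DaemonSketch`, shows what happens when the order is reversed: the JDK rejects the call with `IllegalThreadStateException`.

```java
// Hypothetical demo class, not part of the article's code.
class DaemonSketch {

    // Returns true if calling setDaemon after start() is rejected.
    static boolean setDaemonAfterStartFails() throws InterruptedException {
        Thread worker = new Thread(() -> {
            try {
                Thread.sleep(200); // keep the thread alive for a moment
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        worker.start();
        try {
            worker.setDaemon(true); // too late: the thread is already alive
            return false;
        } catch (IllegalThreadStateException expected) {
            return true;
        } finally {
            worker.join();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Thread daemon = new Thread(() -> { });
        daemon.setDaemon(true); // must be called before start()
        System.out.println("daemon flag: " + daemon.isDaemon());
        System.out.println("rejected after start: " + setDaemonAfterStartFails());
    }
}
```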

Thread blocking

Thread blocking comes in many forms, and the operating system and Java define it somewhat differently. In a broad sense, the following all cause a thread to block

- BIO blocking, that is, using blocking I/O streams

- sleep(long time) puts the thread to sleep, which blocks it

- a.join() blocks the calling thread until thread a has finished, after which the caller resumes

- synchronized or ReentrantLock blocks a thread that fails to obtain the lock (detailed in the synchronization lock chapter)

- Calling wait() after obtaining the lock also blocks the thread

- LockSupport.park() causes the thread to enter the blocking state (detailed in the synchronization lock chapter)

sleep()

sleep() puts the running thread into a blocked state. When the sleep time is over, the thread competes for a CPU time slice again and then continues running

// Method definition (a native method)

public static native void sleep(long millis) throws InterruptedException;

try {

// Sleep for 2 seconds

// sleep can be interrupted; when that happens it throws InterruptedException

Thread.sleep(2000);

} catch (InterruptedException e) {

}

try {

// TimeUnit offers a more readable alternative to Thread.sleep

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

}

join()

join() blocks the thread that calls it until the target thread has finished executing, after which the caller resumes

// Method definitions (join is overloaded)

// Wait until the thread has finished before resuming

public final void join() throws InterruptedException {

}

// Join with a timeout. If the thread has not finished within the given time, the caller stops waiting and resumes

public final synchronized void join(long millis)

throws InterruptedException{}

Thread t = new Thread(() -> {

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

r = 10;

});

t.start();

// Block the main thread until thread t has finished

// Without this line the result is 0; with it the result is 10

t.join();

log.info("r:{}", r);

// Operation results

13:09:13.892 [main] INFO thread.TestJoin - r:10
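The timed overload `join(long millis)` described above can be sketched as follows. The class name `JoinTimeoutSketch` and the 1000 ms / 50 ms durations are arbitrary choices for illustration; the point is only that the timed join gives up while the worker is still alive, and the untimed join waits until it has terminated.

```java
// Hypothetical demo class, not part of the article's code.
class JoinTimeoutSketch {

    // Returns {aliveAfterTimedJoin, aliveAfterFullJoin}.
    static boolean[] demo() throws InterruptedException {
        Thread worker = new Thread(() -> {
            try {
                Thread.sleep(1000); // simulated work
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "worker");
        worker.start();

        worker.join(50); // wait at most 50 ms, then carry on
        boolean aliveAfterTimedJoin = worker.isAlive();

        worker.join(); // wait until the worker has terminated
        boolean aliveAfterFullJoin = worker.isAlive();

        return new boolean[] { aliveAfterTimedJoin, aliveAfterFullJoin };
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] result = demo();
        System.out.println("alive after join(50): " + result[0]);
        System.out.println("alive after join():   " + result[1]);
    }
}
```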

Thread interrupt()

// Definition of relevant methods

public void interrupt() {

}

public boolean isInterrupted() {

}

public static boolean interrupted() {

}

Interrupt flag: records whether the thread has been interrupted. true means interrupted, false means not interrupted

isInterrupted() returns the thread's interrupt flag and does not modify it

The interrupt() method is used to interrupt a thread

- A thread blocked in a method that declares InterruptedException, such as sleep, wait, or join, is woken with that exception, and its interrupt flag is cleared, so it reads false afterwards

- If a thread that is running normally is interrupted, it keeps running; only its interrupt flag is set to true

interrupted() is a static method that returns the current thread's interrupt flag and then clears it. That is, if the flag was true, it is false after the call (not commonly used)
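The behaviour of the three methods can be checked on the current thread without starting any other thread. This is a small sketch with a made-up class name `InterruptFlagSketch`; it only uses `Thread.interrupt()`, `isInterrupted()`, `Thread.interrupted()`, and `Thread.sleep()`.

```java
// Hypothetical demo class, not part of the article's code.
class InterruptFlagSketch {

    // Returns {isInterrupted(), first interrupted(), second interrupted()}.
    static boolean[] flags() {
        Thread self = Thread.currentThread();
        self.interrupt();                       // sets the flag; a running thread is not stopped
        boolean viaInstance = self.isInterrupted(); // reads the flag, leaves it set
        boolean firstStatic = Thread.interrupted(); // reads the flag and clears it
        boolean secondStatic = Thread.interrupted(); // flag was cleared by the previous call
        return new boolean[] { viaInstance, firstStatic, secondStatic };
    }

    // Returns the flag value seen after sleep() has been interrupted.
    static boolean flagAfterInterruptedSleep() {
        Thread.currentThread().interrupt();
        try {
            Thread.sleep(1000); // throws at once because the flag is already set
        } catch (InterruptedException e) {
            // the exception has been thrown and the flag has been cleared
        }
        return Thread.currentThread().isInterrupted();
    }

    public static void main(String[] args) {
        boolean[] f = flags();
        System.out.println(f[0] + " " + f[1] + " " + f[2]);
        System.out.println("flag after interrupted sleep: " + flagAfterInterruptedSleep());
    }
}
```

Because the flag is cleared when InterruptedException is thrown, code that wants to remember the interruption has to set the flag again itself.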

Interrupt example: a background monitoring thread keeps running until it is interrupted from outside, then stops. The code is as follows

@Slf4j

class TwoPhaseTerminal{

// Monitoring thread

private Thread monitor;

public void start(){

monitor = new Thread(() ->{

// Constant monitoring

while (true){

Thread thread = Thread.currentThread();

// Determine whether the current thread is interrupted

if (thread.isInterrupted()){

log.info("The current thread was interrupted,End operation");

break;

}

try {

Thread.sleep(1000);

// If the thread is interrupted here, during normal execution, the interrupt flag is set to true

log.info("monitor");

} catch (InterruptedException e) {

// sleep throws when it is interrupted, and the interrupt flag is cleared (false)

// Call interrupt() again to set the flag back to true so the loop can exit

thread.interrupt();

}

}

});

monitor.start();

}

public void stop(){

monitor.interrupt();

}

}

Thread states

The sections above cover some basic APIs. Calling those methods moves a thread into the corresponding state.

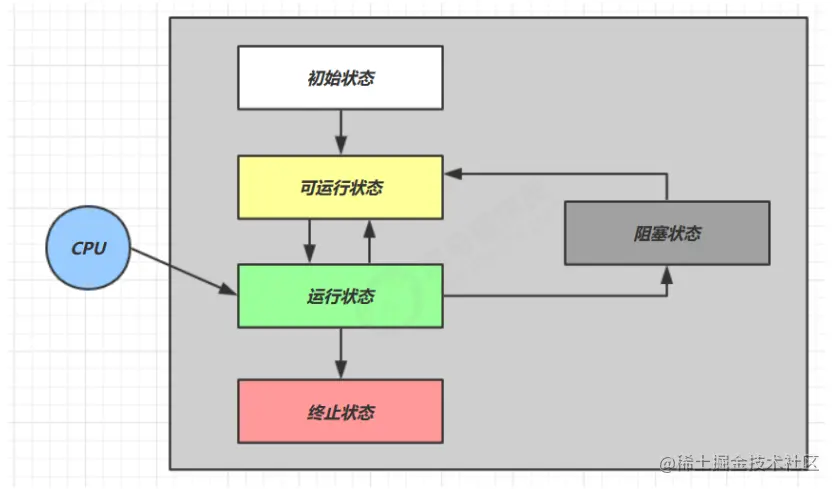

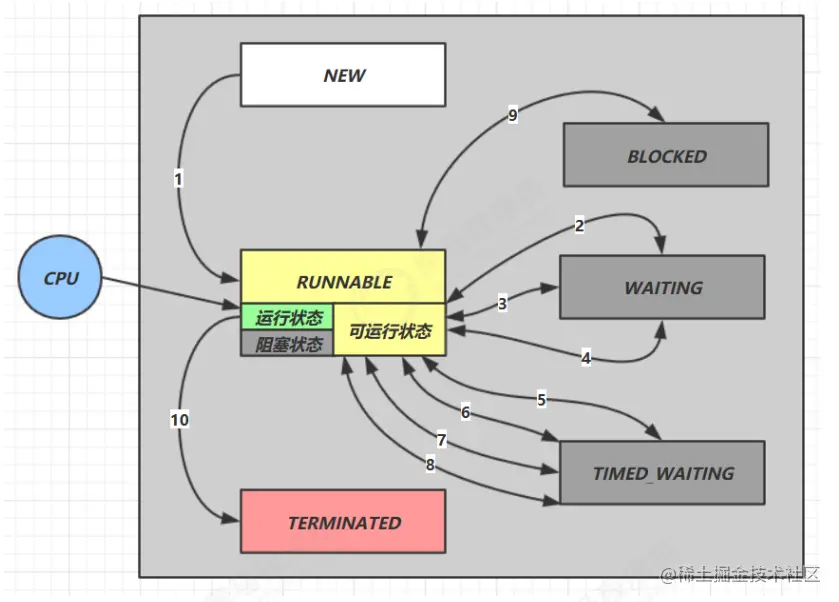

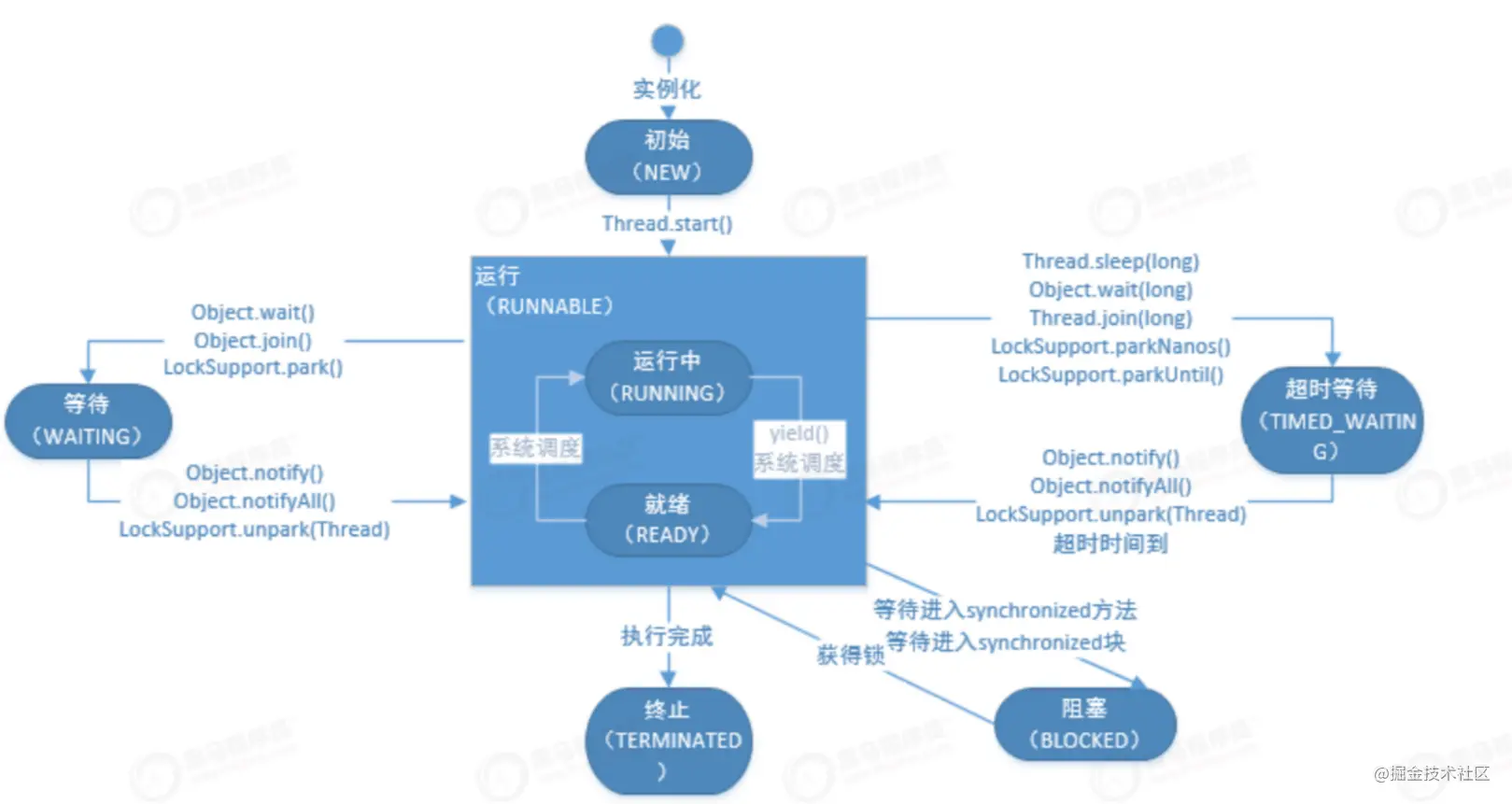

Thread states can be described as five states at the operating system level or six states at the Java API level.

Five states

- Initial state: the thread object has been created

- Ready state (runnable): after start() is called, the thread is ready to be scheduled by the CPU

- Running state: the thread has obtained a CPU time slice and is executing the logic of run()

- Blocked state: the thread is blocked and gives up its time slice; once unblocked it returns to the ready state and competes for a time slice

- Terminated state: the thread has finished executing or ended with an exception

Six states

Internal enumeration State in Thread class

public enum State {

NEW,

RUNNABLE,

BLOCKED,

WAITING,

TIMED_WAITING,

TERMINATED;

}

- NEW: the thread object has been created but not started

- RUNNABLE: the thread enters this state after start() is called. It covers three cases

  - Ready: waiting for the CPU to allocate a time slice

  - Running: executing the task in the run() method

  - Blocked on I/O: waiting inside a blocking I/O (BIO) call

- BLOCKED: waiting to obtain a lock that another thread holds (detailed in the synchronization lock chapter)

- WAITING: the state after calling wait() or join() without a timeout

- TIMED_WAITING: the state after calling sleep(time), wait(time), join(time) and similar methods

- TERMINATED: the thread has finished executing or ended with an exception
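Several of these states can be observed with `Thread.getState()`. The sketch below is one way to do it, under stated assumptions: the class name `ThreadStateSketch` and the polling helper `awaitState` are made up for illustration, and the polling exists only because the main thread cannot know exactly when the other thread reaches a state.

```java
// Hypothetical demo class, not part of the article's code.
class ThreadStateSketch {

    // Polls until the thread reaches the wanted state or about 2 s have passed.
    static boolean awaitState(Thread t, Thread.State wanted) throws InterruptedException {
        long deadline = System.nanoTime() + 2_000_000_000L;
        while (t.getState() != wanted) {
            if (System.nanoTime() > deadline) {
                return false;
            }
            Thread.sleep(5);
        }
        return true;
    }

    // Returns the states that were observed, separated by spaces.
    static String demo() throws InterruptedException {
        Object lock = new Object();
        StringBuilder seen = new StringBuilder();

        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(500);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        seen.append(sleeper.getState()); // created but not started
        sleeper.start();
        if (awaitState(sleeper, Thread.State.TIMED_WAITING)) {
            seen.append(" TIMED_WAITING"); // inside sleep(500)
        }
        sleeper.join();
        seen.append(' ').append(sleeper.getState()); // finished

        Thread blocked = new Thread(() -> {
            synchronized (lock) {
                // nothing to do; the thread only needs to wait for the lock
            }
        });
        synchronized (lock) { // the main thread holds the lock
            blocked.start();
            if (awaitState(blocked, Thread.State.BLOCKED)) {
                seen.append(" BLOCKED");
            }
        }
        blocked.join();
        return seen.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo());
    }
}
```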

Correspondence between six thread states and methods

Summary of thread related methods

It mainly summarizes the core methods in Thread class

| Method name | static | Method description |
|---|---|---|
| start() | no | Starts the thread, which enters the ready state and waits for the CPU to allocate a time slice |
| run() | no | Overrides the method of the Runnable interface; holds the logic the thread executes once it obtains a CPU time slice |
| yield() | yes | Yields: hints that the running thread may return to the ready state and compete for a time slice again |
| sleep(time) | yes | The thread sleeps for a fixed time and is blocked. When the time is up it competes for a time slice again. The sleep can be interrupted |
| join()/join(time) | no | Called on a thread object. The calling thread blocks until that thread has finished or the given time has elapsed, then competes for a time slice again |
| isInterrupted() | no | Returns the thread's interrupt flag (true: interrupted, false: not interrupted). The flag is not modified by the call |
| interrupt() | no | Interrupts the thread. A thread blocked in a method that throws InterruptedException is woken with that exception and its interrupt flag is cleared; a thread that is running normally only has its interrupt flag set to true |
| interrupted() | yes | Returns the current thread's interrupt flag and clears it |
| stop() | no | Stops the thread. Not recommended |
| suspend() | no | Suspends the thread. Not recommended |
| resume() | no | Resumes a suspended thread. Not recommended |
| currentThread() | yes | Returns the current thread |

Thread related methods in Object

| Method name | Method description |
|---|---|
| wait()/wait(long timeout) | The thread holding the lock releases it and enters a blocked (waiting) state |
| notify() | Wakes up one arbitrary thread that is waiting in wait() |
| notifyAll() | Wakes up all threads that are waiting in wait(); they then compete for the lock again |

Synchronization lock

Thread safety

- A program running multiple threads is not a problem in itself

- Problems can arise when multiple threads access shared resources

  - Multiple threads only reading a shared resource is not a problem

  - When multiple threads read and write a shared resource, problems arise if their instructions interleave

Critical section: a piece of code in which multiple threads read and write a shared resource.

Note on instruction interleaving: when Java code is compiled to bytecode, one line of Java may become several bytecode instructions, and a thread context switch can occur between them, so the instructions of different threads interleave.

Thread safety means that when multiple threads call the critical-section methods of the same object, the object's field values always stay correct.

For example, the following code is not thread safe

// Static variable shared by both threads

private static int count = 0;

public static void main(String[] args) throws InterruptedException {

// Thread t1 increments the variable 5000 times

Thread t1 = new Thread(() -> {

for (int i = 0; i < 5000; i++) {

count++;

}

});

// Thread t2 decrements the variable 5000 times

Thread t2 = new Thread(() -> {

for (int i = 0; i < 5000; i++) {

count--;

}

});

t1.start();

t2.start();

// Wait for t1 and t2 to finish

t1.join();

t2.join();

System.out.println(count);

}

// Output of one run

-1399

The code above has two threads: one increments 5000 times and the other decrements 5000 times. If the code were thread safe, count would end at 0.

However, across many runs the result differs each time and is usually not 0, so the code is not thread safe.

Must all operations of a thread safe class be thread safe?

Thread-safe classes such as ConcurrentHashMap are often mentioned in development. Thread safe here means that each individual method of the class is thread safe; a combination of several method calls is not necessarily thread safe.
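The point about combined calls can be illustrated with a counter stored in a ConcurrentHashMap. In this sketch (the class name `CompoundOpSketch`, the key "hits", and the thread and iteration counts are arbitrary), `get` followed by `put` is two safe calls that are unsafe together, while `merge` performs the read-modify-write as one atomic map operation.

```java
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical demo class, not part of the article's code.
class CompoundOpSketch {
    static final int THREADS = 4;
    static final int INCREMENTS = 10_000;

    // Every thread increments the same key; 'atomic' selects merge() over get() + put().
    static int count(boolean atomic) throws InterruptedException {
        ConcurrentHashMap<String, Integer> map = new ConcurrentHashMap<>();
        map.put("hits", 0);
        Thread[] workers = new Thread[THREADS];
        for (int t = 0; t < THREADS; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < INCREMENTS; i++) {
                    if (atomic) {
                        map.merge("hits", 1, Integer::sum);   // one atomic read-modify-write
                    } else {
                        map.put("hits", map.get("hits") + 1); // another thread can write in between
                    }
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return map.get("hits");
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("expected:      " + THREADS * INCREMENTS);
        System.out.println("merge():       " + count(true));
        System.out.println("get() + put(): " + count(false)); // may be lower because updates get lost
    }
}
```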

Are member and static variables thread safe?

- If the variable is not shared between threads, it is thread safe

- If it is shared between threads

  - If the threads only read it, it is thread safe

  - If any thread writes it, the writing code is a critical section and is not thread safe

Are local variables thread safe?

- Local variables themselves are thread safe

- Objects referenced by local variables are not necessarily thread safe

  - If the object does not escape the scope of the method, it is thread safe

  - If the object escapes the method, for example as its return value, thread safety must be considered

synchronized

A synchronization lock is also called an object lock. The lock is attached to an object, and different objects are different locks.

The synchronized keyword is used to ensure thread safety and is a blocking solution.

At most one thread can hold an object's lock at any time; other threads that try to obtain it are blocked. As a result, a context switch in the middle of the critical section is nothing to worry about.

Note: do not assume that a thread holding the lock runs without pause until it leaves the synchronized block. Its time slice can still run out and other threads can still be scheduled. Those threads, however, cannot enter code guarded by the same lock, because the current thread has not released it; when the current thread is scheduled again, it carries on from where it stopped.

When a thread finishes the synchronized block, it releases the lock and wakes up the threads waiting for it

synchronized uses the object lock to make the critical section atomic: for other threads using the same lock, the code in the critical section is indivisible and cannot be disturbed by thread switching

Basic use

// synchronized on an instance method locks the this object

private synchronized void a() {

}

// In a synchronized block the lock object can be any object. Locking this is equivalent to method a()

private void b(){

synchronized (this){

}

}

// synchronized on a static method locks the Class object

private synchronized static void c() {

}

// This synchronized block locks the Class object, which is equivalent to method c()

private void d(){

synchronized (TestSynchronized.class){

}

}

// Bytecode of method b above; monitorenter is where the lock is acquired and monitorexit where it is released

0 aload_0

1 dup

2 astore_1

3 monitorenter

4 aload_1

5 monitorexit

6 goto 14 (+8)

9 astore_2

10 aload_1

11 monitorexit

12 aload_2

13 athrow

14 return

Thread safe code

private static int count = 0;

private static Object lock = new Object();

private static Object lock2 = new Object();

// Threads t1 and t2 lock the same object, so thread safety is guaranteed. No matter how many times this code runs, the result is 0

public static void main(String[] args) throws InterruptedException {

Thread t1 = new Thread(() -> {

for (int i = 0; i < 5000; i++) {

synchronized (lock) {

count++;

}

}

});

Thread t2 = new Thread(() -> {

for (int i = 0; i < 5000; i++) {

synchronized (lock) {

count--;

}

}

});

t1.start();

t2.start();

// Let T1 and T2 complete

t1.join();

t2.join();

System.out.println(count);

}

Key point: the lock is attached to an object. Locking only takes effect when the threads lock the same object
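Which object a given synchronized form locks can be checked with the JDK method `Thread.holdsLock(Object)`. The following sketch uses a made-up class name `LockTargetSketch`; it is only meant to confirm the comments in the basic-use code: an instance method locks this, a static method locks the Class object, and two different objects are two different locks.

```java
// Hypothetical demo class, not part of the article's code.
class LockTargetSketch {

    // synchronized instance method: the lock is 'this'.
    synchronized boolean instanceMethodLocksThis() {
        return Thread.holdsLock(this);
    }

    // synchronized static method: the lock is the Class object.
    static synchronized boolean staticMethodLocksClass() {
        return Thread.holdsLock(LockTargetSketch.class);
    }

    // Holding the lock of one object says nothing about another object.
    static boolean differentObjectsAreDifferentLocks() {
        Object a = new Object();
        Object b = new Object();
        synchronized (a) {
            return Thread.holdsLock(a) && !Thread.holdsLock(b);
        }
    }

    public static void main(String[] args) {
        System.out.println(new LockTargetSketch().instanceMethodLocksThis());
        System.out.println(staticMethodLocksClass());
        System.out.println(differentObjectsAreDifferentLocks());
    }
}
```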

Thread communication

wait+notify

Threads can communicate through shared variables plus wait() and notify()

wait() puts the thread into a waiting (blocked) state, and notify() wakes it up

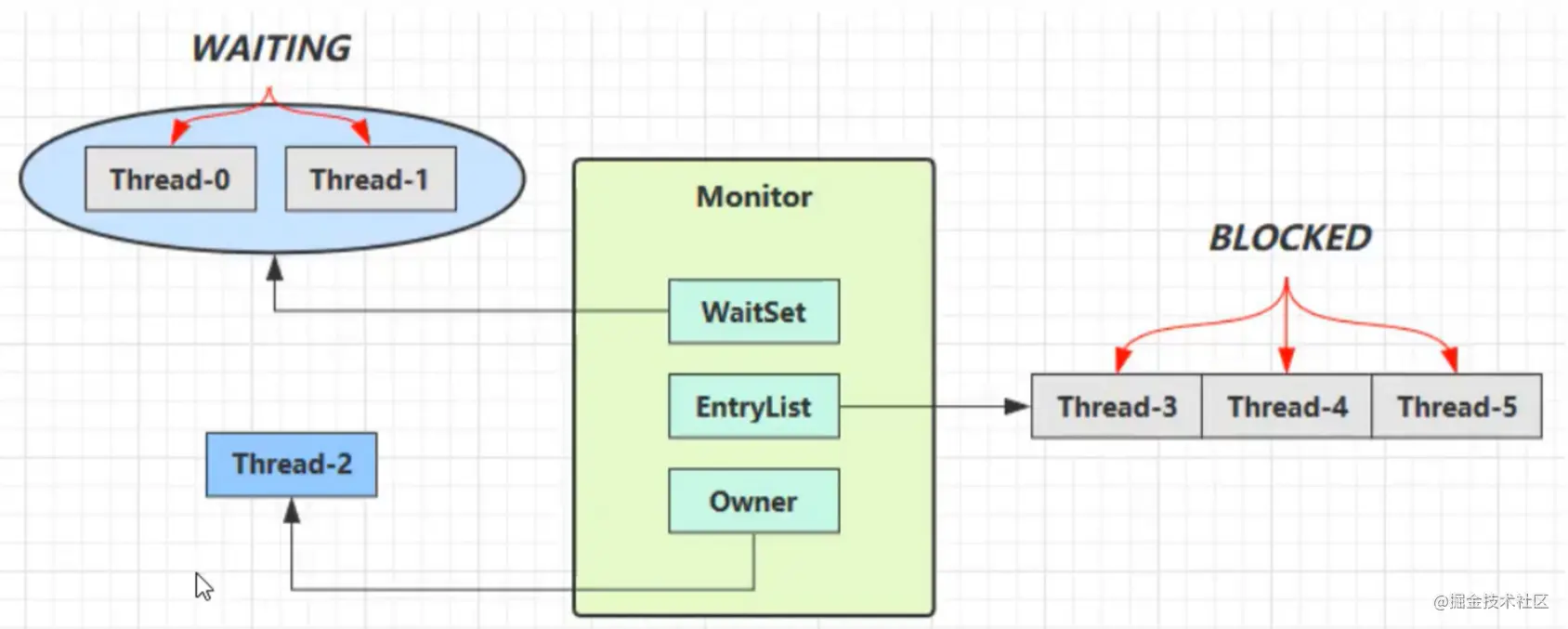

When multiple threads compete for an object's synchronized method, the lock object is associated with an underlying Monitor object (the heavyweight lock implementation)

As shown in the figure, Thread-0 and Thread-1 obtain the lock first and run their code; then threads 2, 3, 4 and 5 reach the critical section at the same time and start competing for the lock

- Thread-0 obtains the object's lock first and becomes the owner of the monitor. Inside the synchronized block it calls wait() on the lock object, which releases the lock and moves the thread into the waitSet. The same happens to Thread-1. At this point Thread-0 is in the WAITING state

- Threads 2, 3, 4 and 5 compete at the same time. Thread 2 obtains the lock and becomes the owner of the monitor; threads 3, 4 and 5 can only wait in the EntryList. At this point thread 2 is RUNNABLE and threads 3, 4 and 5 are BLOCKED

- When thread 2 finishes, the threads in the EntryList are woken up; 3, 4 and 5 compete for the lock, and the winner becomes the owner of the monitor

- If thread 3, 4 or 5 calls notify() or notifyAll() on the lock object while it runs, threads in the waitSet are woken up and moved to the EntryList, where they wait to compete for the lock again.

Note:

- Both BLOCKED and WAITING are blocked states

- A BLOCKED thread is woken up when the owner thread releases the lock

- wait and notify must be used inside synchronized code: the thread has to hold the object's lock and call them on the lock object, otherwise an exception is thrown

- wait() releases the lock and enters the waitSet. A timeout can be passed in; if the thread is not woken within that time, it wakes up by itself

- notify() wakes up one arbitrary thread in the waitSet

- notifyAll() wakes up all threads in the waitSet
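The exception mentioned in the notes is `IllegalMonitorStateException`. A short sketch with a made-up class name `WaitNeedsLockSketch` shows it for both `wait` and `notify`, and also shows a timed `wait` returning by itself when nobody calls `notify`.

```java
// Hypothetical demo class, not part of the article's code.
class WaitNeedsLockSketch {

    // notify() without holding the object's lock is rejected.
    static boolean notifyWithoutLockFails() {
        Object lock = new Object();
        try {
            lock.notify();
            return false;
        } catch (IllegalMonitorStateException expected) {
            return true;
        }
    }

    // wait() without holding the object's lock is rejected as well.
    static boolean waitWithoutLockFails() throws InterruptedException {
        Object lock = new Object();
        try {
            lock.wait(10);
            return false;
        } catch (IllegalMonitorStateException expected) {
            return true;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("notify without lock fails: " + notifyWithoutLockFails());
        System.out.println("wait without lock fails:   " + waitWithoutLockFails());

        Object lock = new Object();
        synchronized (lock) {
            lock.wait(100); // nobody calls notify, so the wait ends when the timeout expires
        }
        System.out.println("timed wait returned");
    }
}
```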

static final Object lock = new Object();

new Thread(() -> {

synchronized (lock) {

log.info("Start execution");

try {

// Can only be called inside the synchronization code

lock.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

log.info("Continue with core logic");

}

}, "t1").start();

new Thread(() -> {

synchronized (lock) {

log.info("Start execution");

try {

lock.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

log.info("Continue with core logic");

}

}, "t2").start();

try {

Thread.sleep(2000);

} catch (InterruptedException e) {

e.printStackTrace();

}

log.info("Start waking up");

synchronized (lock) {

// Can only be called inside the synchronization code

lock.notifyAll();

}

// Output

14:29:47.138 [t1] INFO TestWaitNotify - Start execution

14:29:47.141 [t2] INFO TestWaitNotify - Start execution

14:29:49.136 [main] INFO TestWaitNotify - Start waking up

14:29:49.136 [t2] INFO TestWaitNotify - Continue with core logic

14:29:49.136 [t1] INFO TestWaitNotify - Continue with core logic

What is the difference between wait and sleep?

Both put the thread into a blocked state, with the following differences

- wait is a method of Object; sleep is a method of Thread

- wait releases the lock immediately; sleep does not release the lock

- After wait() without a timeout the thread's state is WAITING; after sleep() it is TIMED_WAITING

park&unpark

LockSupport is a utility class in JUC (java.util.concurrent.locks). Its park and unpark methods can be used for thread communication

Differences compared with wait and notify

- wait and notify require holding the object lock; park and unpark do not

- unpark wakes a specific thread; notify wakes an arbitrary one

- park and unpark can be called in either order, so unpark may come first; wait and notify cannot be reversed
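The second and third differences can be sketched as follows. The class name `ParkUnparkSketch` and its methods are made up for illustration; `LockSupport.park()` and `LockSupport.unpark(Thread)` are the JDK calls. Calling `unpark` first leaves a permit for the thread, so the later `park` returns without blocking.

```java
import java.util.concurrent.locks.LockSupport;

// Hypothetical demo class, not part of the article's code.
class ParkUnparkSketch {

    // unpark() before park(): returns the time park() took, in milliseconds.
    static long unparkThenParkMillis() {
        Thread self = Thread.currentThread();
        long start = System.nanoTime();
        LockSupport.unpark(self); // hands out the permit in advance
        LockSupport.park();       // consumes the permit and returns without blocking
        return (System.nanoTime() - start) / 1_000_000;
    }

    // unpark() names the thread it wakes, and no lock is needed around either call.
    static boolean wakeSpecificThread() throws InterruptedException {
        boolean[] resumed = new boolean[1];
        Thread waiter = new Thread(() -> {
            LockSupport.park();
            resumed[0] = true;
        }, "waiter");
        waiter.start();
        LockSupport.unpark(waiter); // works whether or not the waiter has parked yet
        waiter.join();
        return resumed[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("park after unpark took ms: " + unparkThenParkMillis());
        System.out.println("waiter resumed: " + wakeSpecificThread());
    }
}
```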

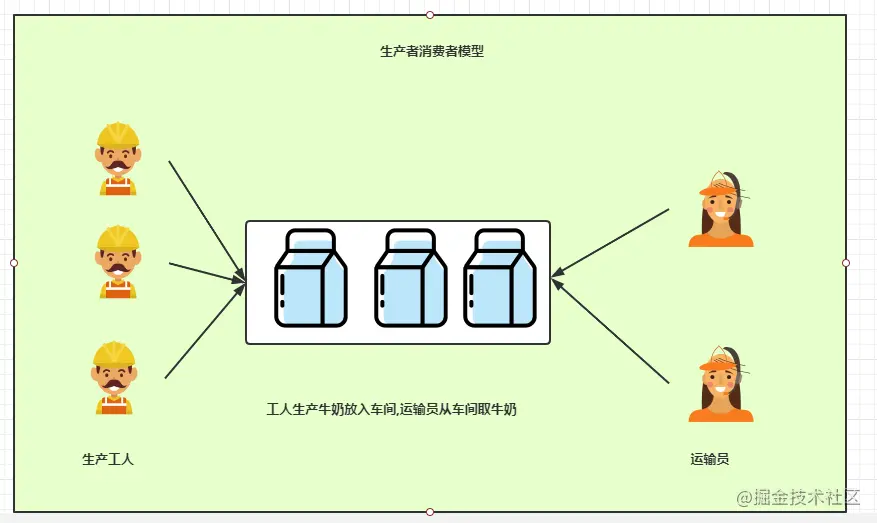

Producer consumer model

There are producers that produce data and consumers that consume it. When the queue is full, producers stop producing, notify the consumers to take data, and wait until something has been consumed before producing again.

When the queue is empty, consumers stop consuming, notify the producers to produce, and continue once data has arrived.

public static void main(String[] args) throws InterruptedException {

MessageQueue queue = new MessageQueue(2);

// Three producers store values in the queue

for (int i = 0; i < 3; i++) {

int id = i;

new Thread(() -> {

queue.put(new Message(id, "value" + id));

}, "producer" + i).start();

}

Thread.sleep(1000);

// A consumer keeps taking values from the queue

new Thread(() -> {

while (true) {

queue.take();

}

}, "consumer").start();

}

}

// Message queue shared by producers and consumers

class MessageQueue {

private LinkedList<Message> list = new LinkedList<>();

// capacity

private int capacity;

public MessageQueue(int capacity) {

this.capacity = capacity;

}

/**

* production

*/

public void put(Message message) {

synchronized (list) {

while (list.size() == capacity) {

log.info("Queue full, producer waiting");

try {

list.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

list.addLast(message);

log.info("Produced message:{}", message);

// Inform consumers after production

list.notifyAll();

}

}

public Message take() {

synchronized (list) {

while (list.isEmpty()) {

log.info("The queue is empty and the consumer is waiting");

try {

list.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

Message message = list.removeFirst();

log.info("Consumed message:{}", message);

// Notify producers after consumption

list.notifyAll();

return message;

}

}

}

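// Message

class Message {

private int id;

private Object value;

// Constructor used by the producers above

Message(int id, Object value) {

this.id = id;

this.value = value;

}

}

Synchronization lock case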

To make the idea of a synchronization lock more concrete, here is an example from everyday life.

It involves something everyone is interested in: money!!! (except Mr. Ma).

In real life we withdraw money from the ATM at the bank entrance. The money in the ATM is a shared variable. For safety, two strangers cannot use the same ATM booth at the same time, so only one person enters and locks the door, and everyone else waits outside.

With several ATMs, the money in each is independent and each has its own lock (multiple object locks), so people withdrawing from different ATMs at the same time cause no safety problem.

If every customer is a thread, then a customer entering the ATM and locking the door is a thread obtaining the lock, and leaving after taking the money is the thread releasing the lock. The next person who wins the lock goes in to withdraw.

Suppose the bank staff are threads too. If a customer enters and finds the ATM short of money, the customer notifies the staff to refill it (calls notifyAll), stops withdrawing, and goes to wait in the bank lobby (calls wait).

The staff and the customers in the lobby are woken up and compete for the lock again. If a customer gets in first, the ATM is still empty, so the customer has to go back to the lobby and wait.

When a staff member obtains the ATM lock and enters, they refill the money and then notify the people in the lobby to come and withdraw (call notifyAll). The staff member then stops refilling and goes to the lobby to wait until woken up to refill again (calls wait).

Now everyone waiting in the lobby competes for the lock, and whoever gets it goes in to withdraw.

The difference from real life is that there is no queue here: whoever grabs the lock goes in.

ReentrantLock

Reentrant lock: after a thread has acquired a lock, it can acquire the same lock again inside a method it calls while still holding it, as in the following code

private static final ReentrantLock LOCK = new ReentrantLock();

private static void m() {

LOCK.lock();

try {

log.info("begin");

// Call m1()

m1();

} finally {

// Pay attention to the release of the lock

LOCK.unlock();

}

}

public static void m1() {

LOCK.lock();

try {

log.info("m1");

m2(); // m2() is not shown in this article; assume it locks and unlocks LOCK in the same way as m1()

} finally {

// Pay attention to the release of the lock

LOCK.unlock();

}

}
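The reentrancy described above can be observed directly. The following is a minimal sketch, assuming a hypothetical class `ReentrantDemo` that is not part of the article's code. It uses `getHoldCount()`, which reports how many times the current thread holds the lock.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class; the names are illustrative and not from the article.
class ReentrantDemo {
    static final ReentrantLock LOCK = new ReentrantLock();

    // Acquires the lock, then calls inner(), which acquires it a second time.
    static int outer() {
        LOCK.lock();
        try {
            return inner();
        } finally {
            LOCK.unlock();
        }
    }

    // Re-enters the lock that the current thread already holds.
    static int inner() {
        LOCK.lock();
        try {
            // Number of holds by the current thread: 2 when reached through outer()
            return LOCK.getHoldCount();
        } finally {
            LOCK.unlock();
        }
    }

    public static void main(String[] args) {
        System.out.println("hold count inside inner(): " + outer());
        System.out.println("still locked after return: " + LOCK.isLocked());
    }
}
```

Each `lock()` needs a matching `unlock()`; the lock is only released for other threads once the hold count drops back to 0.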

synchronized is also a reentrant lock. Compared with it, ReentrantLock has the following advantages

- Lock acquisition supports a timeout

- Lock acquisition can be interrupted

- It can be configured as a fair lock

- It supports multiple condition variables, that is, multiple wait sets, so a specific group of waiting threads can be woken up

API

// The default is a non-fair lock. Passing true creates a fair lock

ReentrantLock lock = new ReentrantLock(false);

// Acquire the lock, blocking until it is available

lock()

// Release the lock. This should be placed in a finally block so that it is always executed

unlock()

try {

// Acquire the lock interruptibly: a thread blocked while waiting for the lock can be interrupted

LOCK.lockInterruptibly();

} catch (InterruptedException e) {

return;

}

// Try to acquire the lock, and return false immediately if it cannot be acquired

LOCK.tryLock()

// Try to acquire the lock, and return false if it is still not acquired after the timeout

tryLock(long timeout, TimeUnit unit)

// One lock can have multiple lounges (wait sets) by creating multiple condition variables

Condition waitSet = ROOM.newCondition();

// Release the lock and wait in waitSet until signalled. Other threads can grab the lock in the meantime

waitSet.await()

// Wake up one thread waiting in this specific lounge. After waking up it competes for the lock again

waitSet.signal()
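The calls listed above can be combined into a small runnable sketch. The class `LockApiDemo`, the flag `ready`, and the method names are assumptions made for illustration. The first method shows `tryLock` timing out while another thread holds the lock; the second shows `await()` and `signal()` on a condition, with a loop around `await()` so that a wakeup without the flag being set is harmless.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class; the names are illustrative and not from the article.
class LockApiDemo {
    static final ReentrantLock ROOM = new ReentrantLock();
    static final Condition READY = ROOM.newCondition();
    static boolean ready = false; // guarded by ROOM

    // The calling thread holds ROOM, so the helper thread's timed tryLock gives up.
    static boolean tryLockWhileHeldElsewhere() {
        boolean[] acquired = new boolean[1];
        ROOM.lock();
        try {
            Thread t = new Thread(() -> {
                try {
                    acquired[0] = ROOM.tryLock(100, TimeUnit.MILLISECONDS);
                    if (acquired[0]) {
                        ROOM.unlock();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            t.start();
            join(t);
        } finally {
            ROOM.unlock();
        }
        return acquired[0];
    }

    // One thread waits on the condition; the caller sets the flag and signals it.
    static boolean awaitAndSignal() {
        boolean[] sawReady = new boolean[1];
        Thread waiter = new Thread(() -> {
            ROOM.lock();
            try {
                while (!ready) {      // re-check the flag after every wakeup
                    READY.await();    // releases ROOM while waiting
                }
                sawReady[0] = true;
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                ROOM.unlock();
            }
        });
        waiter.start();
        ROOM.lock();
        try {
            ready = true;
            READY.signal();
        } finally {
            ROOM.unlock();
        }
        join(waiter);
        return sawReady[0];
    }

    static void join(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("tryLock succeeded: " + tryLockWhileHeldElsewhere());
        System.out.println("waiter saw the signal: " + awaitAndSignal());
    }
}
```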

Example: one thread prints a, one thread prints b, and one thread prints c, so that abc is printed in order 5 times in a row

This is a thread communication problem. It could be solved with wait()/notify() plus a control variable; here it is solved with ReentrantLock.

public static void main(String[] args) {

AwaitSignal awaitSignal = new AwaitSignal(5);

// Construct three conditional variables

Condition a = awaitSignal.newCondition();

Condition b = awaitSignal.newCondition();

Condition c = awaitSignal.newCondition();

// Open three threads

new Thread(() -> {

awaitSignal.print("a", a, b);

}).start();

new Thread(() -> {

awaitSignal.print("b", b, c);

}).start();

new Thread(() -> {

awaitSignal.print("c", c, a);

}).start();

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

awaitSignal.lock();

try {

// Wake up a first

a.signal();

} finally {

awaitSignal.unlock();

}

}

}

class AwaitSignal extends ReentrantLock {

// Number of cycles

private int loopNumber;

public AwaitSignal(int loopNumber) {

this.loopNumber = loopNumber;

}

/**

* @param print Output characters

* @param current Current condition variable

* @param next Next conditional variable

*/

public void print(String print, Condition current, Condition next) {

for (int i = 0; i < loopNumber; i++) {

lock();

try {

try {

// Wait after obtaining lock

current.await();

System.out.print(print);

} catch (InterruptedException e) {

}

next.signal();

} finally {

unlock();

}

}

}

}

Deadlock

Deadlock is easiest to explain with an example:

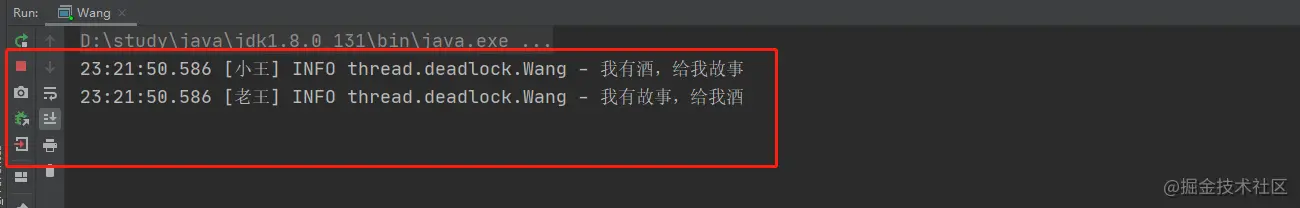

The following is the code implementation

static Beer beer = new Beer();

static Story story = new Story();

public static void main(String[] args) {

new Thread(() ->{

synchronized (beer){

log.info("I have wine. Give me a story");

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

synchronized (story){

log.info("Xiao Wang began to drink and tell stories");

}

}

},"Xiao Wang").start();

new Thread(() ->{

synchronized (story){

log.info("I have a story. Give me wine");

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

synchronized (beer){

log.info("Lao Wang began to drink and tell stories");

}

}

},"Lao Wang").start();

}

class Beer {

}

class Story{

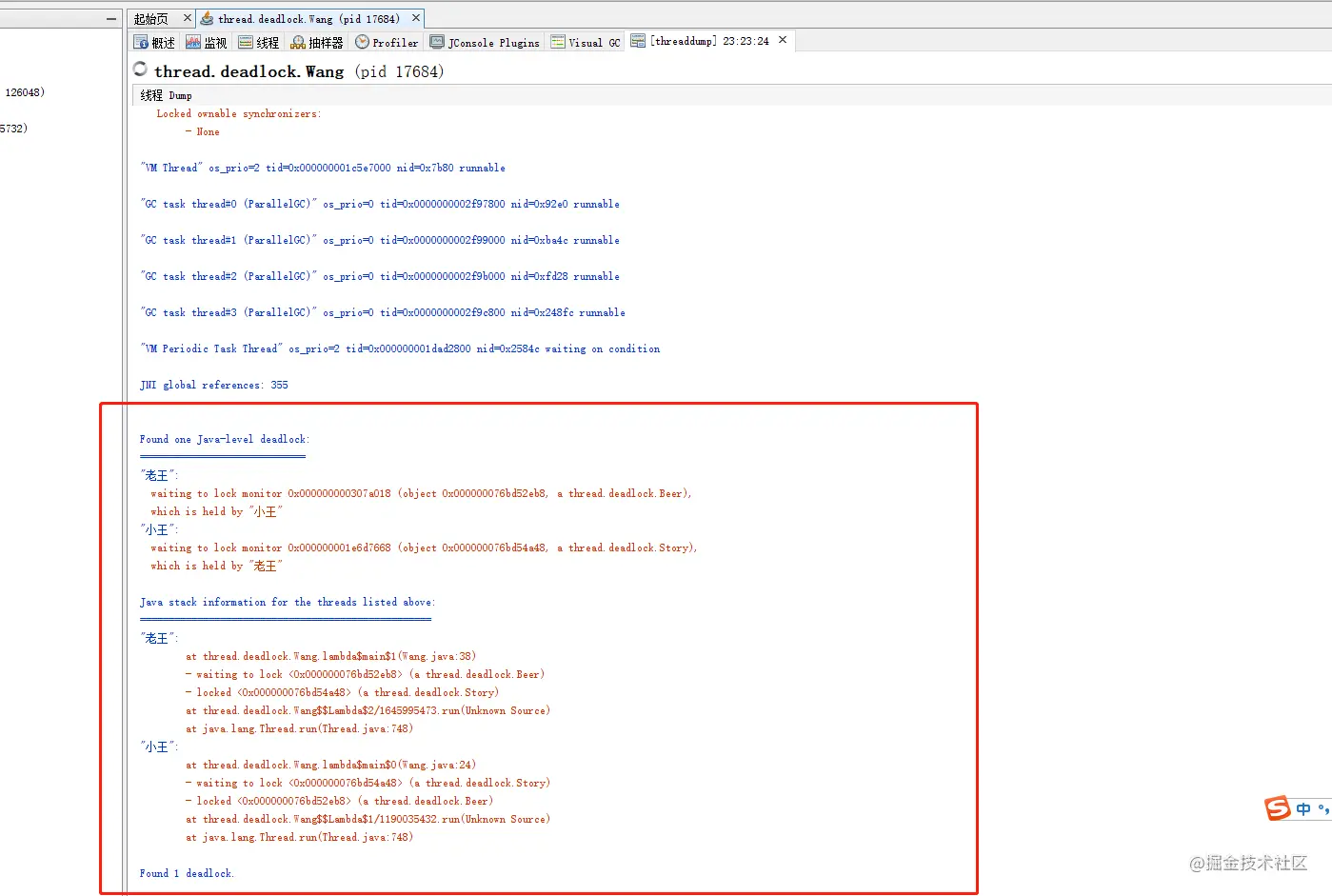

}Deadlocks prevent the program from running normally

Detection tools such as jstack or jconsole can report the deadlock information
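Deadlocks can also be detected from inside the program. The following sketch recreates the beer/story deadlock with two daemon threads and then queries the JVM through `ThreadMXBean.findDeadlockedThreads()`. The class `DeadlockDetectDemo` and its helper names are assumptions for illustration; the latch only makes sure both threads hold their first lock before they reach for the second one.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

// Hypothetical demo class; the names are illustrative and not from the article.
class DeadlockDetectDemo {

    // Starts two daemon threads that deadlock on purpose, then asks the JVM
    // how many deadlocked threads it can find (0 if none are found in time).
    static int createAndDetect() {
        Object beer = new Object();
        Object story = new Object();
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);
        startDaemon("Xiao Wang", beer, story, bothHoldFirstLock);
        startDaemon("Lao Wang", story, beer, bothHoldFirstLock);

        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        for (int i = 0; i < 100; i++) {            // poll for up to about 5 seconds
            long[] ids = threads.findDeadlockedThreads();
            if (ids != null) {
                return ids.length;
            }
            try {
                Thread.sleep(50);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return 0;
            }
        }
        return 0;
    }

    static void startDaemon(String name, Object first, Object second, CountDownLatch latch) {
        Thread t = new Thread(() -> {
            synchronized (first) {
                latch.countDown();
                try {
                    latch.await();                 // wait until both threads hold their first lock
                } catch (InterruptedException e) {
                    return;
                }
                synchronized (second) {
                    // never reached: the other thread holds this lock
                }
            }
        }, name);
        t.setDaemon(true);                         // daemon threads do not keep the JVM alive
        t.start();
    }

    public static void main(String[] args) {
        System.out.println("deadlocked threads found: " + createAndDetect());
    }
}
```

A common way to avoid this deadlock altogether is to make every thread acquire the locks in the same order, for example always beer first and story second.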

Java Memory Model (JMM)

The JMM is reflected in the following three aspects

- Atomicity: ensures that instructions are not affected by thread context switching

- Visibility: ensures that instructions are not affected by CPU caches

- Ordering: ensures that instructions are not affected by the CPU's instruction-level parallel optimization (reordering)

visibility

Unstoppable program

static boolean run = true;

public static void main(String[] args) throws InterruptedException {

Thread t = new Thread(() -> {

while (run) {

// ....

}

});

t.start();

Thread.sleep(1000);

// The thread t does not stop as expected

run = false;

}

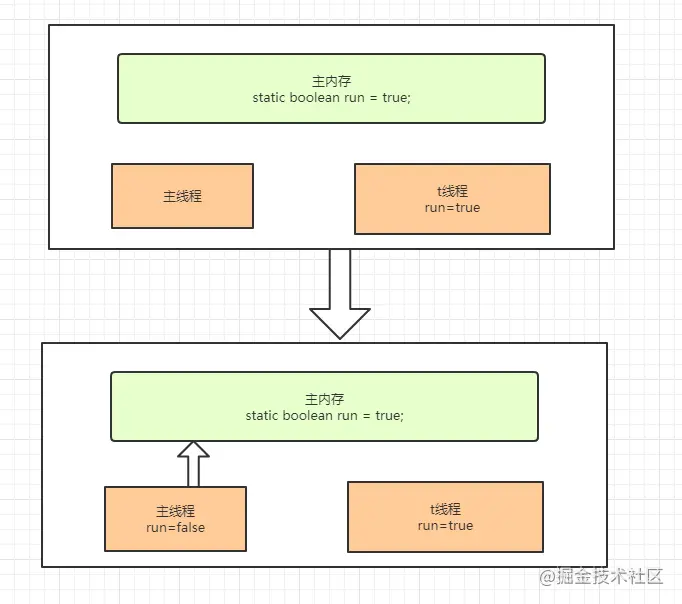

As shown in the figure above, each thread has its own working memory (cache). The main thread modifies run and writes it back to main memory, but thread t keeps reading the stale copy in its own working memory instead of reading main memory again, so the loop never ends

Order

The JVM may adjust the execution order of statements as long as the result within a single thread is not affected. This is known as instruction reordering

static int i;

static int j;

// Perform the following assignment operations in a thread

i = ...;

j = ...;

It is possible that j is assigned first

Atomicity

Atomicity should already be familiar. The synchronized code block in the synchronization lock section above ensures atomicity: a piece of code is executed as a whole and is not affected by context switching, which is what makes it thread safe.

volatile

This keyword addresses visibility and ordering. volatile is implemented through memory barriers

- Write barrier

A write barrier is added after a write to the volatile variable. All changes made before the write barrier are synchronized to main memory, and code before the write barrier is not reordered to after it

- Read barrier

A read barrier is added before a read of the volatile variable. Reads after the read barrier load the latest data from main memory, and code after the read barrier is not reordered to before it

Note: volatile does not provide atomicity, so compound operations such as i++ cannot be made thread safe with this keyword alone.

volatile application scenario: one thread writes a variable and other threads read it. With this keyword, the reading threads see the new value promptly after it is written.
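Applied to the "unstoppable program" from the visibility section, the scenario above looks roughly like this. It is a sketch under the assumption of a hypothetical class `VolatileStopDemo`; the only relevant change compared with the earlier listing is the `volatile` modifier on the flag.

```java
// Hypothetical demo class; the names are illustrative and not from the article.
class VolatileStopDemo {
    // volatile makes the write by the calling thread visible to the worker thread
    static volatile boolean run = true;

    // Starts a spinning worker, clears the flag, and reports whether the worker stopped.
    static boolean stopsWithinOneSecond() {
        run = true;
        Thread t = new Thread(() -> {
            while (run) {
                // busy loop, same shape as the article's example
            }
        });
        t.setDaemon(true);
        t.start();
        try {
            Thread.sleep(100);
            run = false;      // volatile write
            t.join(1000);     // wait at most one second for the worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !t.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + stopsWithinOneSecond());
    }
}
```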

Lock-free CAS

CAS (compare and swap)

When updating a variable, first read its current value v from memory and compute the new value n. When compareAndSwap() is executed, it checks whether the value currently in memory is still v. If so, the value is replaced with n; if not, the value is read again and the operation is retried.

CAS is implemented by an atomic CPU instruction, so it guarantees the atomicity of the operation without using a synchronization lock.

private AtomicInteger balance;

// Simulate the specific operation of cas

@Override

public void withdraw(Integer amount) {

while (true) {

// Get current value

int pre = balance.get();

// Get new value after operation

int next = pre - amount;

// If compareAndSet succeeds, exit the loop; otherwise try again

if (balance.compareAndSet(pre, next)) {

break;

}

}

}

Lock-free code is often more efficient than the locking approach above, because threads do not block and therefore cause fewer context switches

CAS follows the idea of optimistic locking, while synchronized follows the idea of pessimistic locking

CAS is suitable for scenarios with little thread contention. Under heavy contention, retries happen frequently and reduce efficiency
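To see the retry loop hold up under contention, here is a self-contained sketch. The class `CasAccountDemo` is an assumption for illustration (the article's own account interface is not shown in this section); 100 threads each withdraw 100 from a balance of 10000, and no update is lost.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; the names are illustrative and not from the article.
class CasAccountDemo {
    private final AtomicInteger balance;

    CasAccountDemo(int initial) {
        this.balance = new AtomicInteger(initial);
    }

    // Same CAS retry loop as the withdraw method shown earlier.
    void withdraw(int amount) {
        while (true) {
            int pre = balance.get();
            int next = pre - amount;
            if (balance.compareAndSet(pre, next)) {
                break;          // nobody changed the balance in between
            }
            // otherwise another thread won the race: read again and retry
        }
    }

    int getBalance() {
        return balance.get();
    }

    // 100 threads each withdraw 100 from an account holding 10000.
    static int runConcurrentWithdrawals() {
        CasAccountDemo account = new CasAccountDemo(10_000);
        Thread[] threads = new Thread[100];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> account.withdraw(100));
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return account.getBalance();
    }

    public static void main(String[] args) {
        System.out.println("final balance: " + runConcurrentWithdrawals());
    }
}
```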

The JUC package (java.util.concurrent) provides atomic classes implemented with CAS

- AtomicInteger/AtomicBoolean/AtomicLong

- AtomicIntegerArray/AtomicLongArray/AtomicReferenceArray

- AtomicReference/AtomicStampedReference/AtomicMarkableReference

AtomicInteger

Common APIs

new AtomicInteger(balance)

get()

compareAndSet(pre, next)

// i.incrementAndGet() ++i

// i.decrementAndGet() --i

// i.getAndIncrement() i++

// i.getAndDecrement() i--

i.addAndGet()

// Pass in a functional interface to modify i

int getAndUpdate(IntUnaryOperator updateFunction)

// The core method of cas

compareAndSet(int expect, int update)
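The return values of these methods are easy to mix up, so here is a short single-threaded walk-through. The class `AtomicIntegerApiDemo` is a hypothetical name; the comments give the value returned by each call and the value stored afterwards.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; the names are illustrative and not from the article.
class AtomicIntegerApiDemo {

    static int[] run() {
        AtomicInteger i = new AtomicInteger(0);
        int a = i.incrementAndGet();               // like ++i: returns 1, i is now 1
        int b = i.getAndIncrement();               // like i++: returns 1, i is now 2
        int c = i.decrementAndGet();               // like --i: returns 1, i is now 1
        int d = i.getAndDecrement();               // like i--: returns 1, i is now 0
        int e = i.addAndGet(5);                    // returns 5, i is now 5
        int f = i.getAndUpdate(x -> x * 2);        // returns the old value 5, i is now 10
        boolean fresh = i.compareAndSet(10, 11);   // expected value matches: true, i is now 11
        boolean stale = i.compareAndSet(10, 12);   // expected value is outdated: false, i stays 11
        return new int[] {a, b, c, d, e, f, fresh ? 1 : 0, stale ? 1 : 0, i.get()};
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(run()));
    }
}
```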

ABA problem

CAS has an ABA problem: the original value is A, another thread changes it to B, and then it is changed back to A.

The value has in fact been modified, but the compare-and-swap still succeeds because the current value equals the expected value

Solution

AtomicStampedReference/AtomicMarkableReference

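The above two classes address the ABA problem. AtomicStampedReference attaches a version number (stamp) to the object; the stamp is changed on every modification and compared together with the value, which avoids the ABA problem

Alternatively, AtomicMarkableReference attaches a boolean mark that is changed when the value is modified, which can also avoid the ABA problem when it is enough to know whether the value has been changed.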
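The difference can be shown without any real concurrency by replaying the A to B to A sequence by hand. This is a sketch with a hypothetical class `AbaDemo`; the "other thread" is simulated by plain sequential calls, and the stamp is simply incremented on each change.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.AtomicStampedReference;

// Hypothetical demo class; the names are illustrative and not from the article.
class AbaDemo {

    // Plain AtomicReference: the A -> B -> A change goes unnoticed.
    static boolean plainCasAfterAba() {
        AtomicReference<String> ref = new AtomicReference<>("A");
        String seen = ref.get();                 // this "thread" reads A
        ref.compareAndSet("A", "B");             // another thread: A -> B
        ref.compareAndSet("B", "A");             // another thread: B -> A
        return ref.compareAndSet(seen, "C");     // succeeds although the value was changed
    }

    // AtomicStampedReference: the stale stamp makes the CAS fail.
    static boolean stampedCasAfterAba() {
        AtomicStampedReference<String> ref = new AtomicStampedReference<>("A", 0);
        String seen = ref.getReference();        // this "thread" reads A ...
        int stamp = ref.getStamp();              // ... together with stamp 0
        ref.compareAndSet("A", "B", ref.getStamp(), ref.getStamp() + 1); // stamp becomes 1
        ref.compareAndSet("B", "A", ref.getStamp(), ref.getStamp() + 1); // stamp becomes 2
        return ref.compareAndSet(seen, "C", stamp, stamp + 1);           // fails: stamp is 2, not 0
    }

    public static void main(String[] args) {
        System.out.println("plain CAS succeeded: " + plainCasAfterAba());
        System.out.println("stamped CAS succeeded: " + stampedCasAfterAba());
    }
}
```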

Thread pool

Introduction to thread pool

The thread pool is the most important and most difficult topic in Java concurrency, and also the most widely used in practice.

Threads are valuable resources and cannot be created without limit, so a tool is needed to manage them. The thread pool is that tool. Pooling is a common idea in Java development, for example database connection pools and Redis connection pools.

A pool keeps a set of reusable threads that execute tasks as they are submitted, which saves the time of creating threads and also controls the number of threads.

Benefits of thread pooling

- Reduce resource consumption: pooling reduces the cost of creating and destroying threads and keeps resource usage under control

- Improve response speed: when a task arrives, it can run without waiting for a thread to be created

- Provide more powerful, extensible functionality

Construction method of thread pool

public ThreadPoolExecutor(int corePoolSize,

int maximumPoolSize,

long keepAliveTime,

TimeUnit unit,

BlockingQueue<Runnable> workQueue,

ThreadFactory threadFactory,

RejectedExecutionHandler handler) {

}

Significance of constructor parameters

| Parameter name | Parameter meaning |
|---|---|
| corePoolSize | Number of core threads |
| maximumPoolSize | Maximum number of threads |
| keepAliveTime | Idle time of emergency (non-core) threads |
| unit | Time unit of keepAliveTime |
| workQueue | Blocking queue |
| threadFactory | Thread factory used to create threads, mainly to define the thread name |
| handler | Rejection policy |

Thread pool case

Let's use an example to understand the parameters of the thread pool and the process of receiving tasks from the thread pool

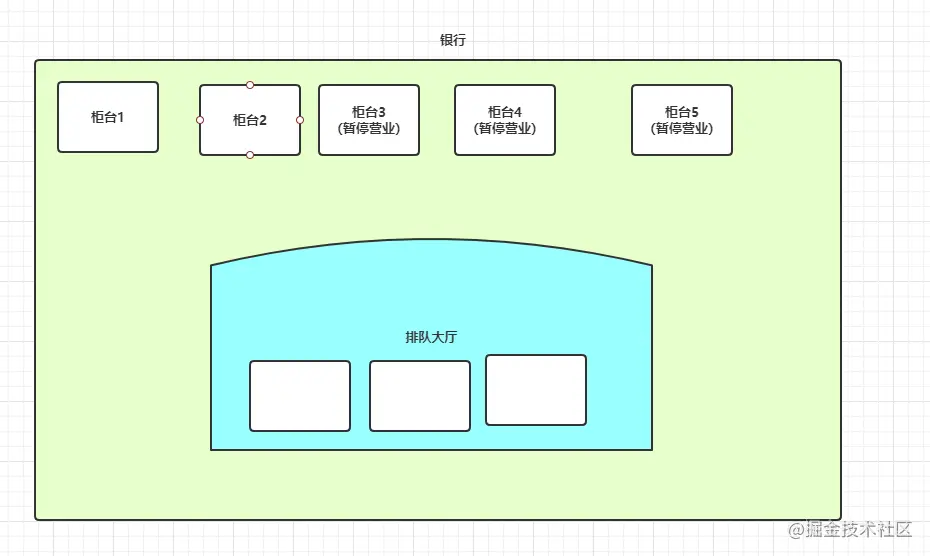

As shown in the figure above, the bank handles business.

- When the customer arrives at the bank, open the counter for handling. The counter is equivalent to a thread and the customer is equivalent to a task. Two are normally open counters and three are temporary counters. 2 is the number of core threads, and 5 is the maximum number of threads. That is, there are two core threads

- When the counter opened to the second, they were still processing business. When the customer comes back, he will line up in the queue hall. There are only three seats in the queue hall.

- When the queuing hall is full, the customers will continue to open the counter. At present, there are three temporary counters, that is, three emergency threads

- If you come back to customers at this time, you can't provide them with business normally and use the rejection policy to deal with them

- When the counter finishes processing the business, it will get the task from the queuing hall. When the counter cannot get the task every idle time, if the current number of threads is greater than the number of core threads, it will recycle the threads. The counter shall be revoked.
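The bank scenario maps directly onto a `ThreadPoolExecutor` with 2 core threads, a maximum of 5 threads, and a queue of 3. In the sketch below (class and variable names are assumptions for illustration) nine customers arrive while every counter stays busy, so the ninth one hits the default rejection policy.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; the names are illustrative and not from the article.
class BankPoolDemo {

    // Returns {pool size, queued tasks, rejected tasks} after 9 customers arrive.
    static int[] serveNineCustomers() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 5, 60, TimeUnit.SECONDS, new ArrayBlockingQueue<>(3));
        CountDownLatch closeBank = new CountDownLatch(1);
        int rejected = 0;
        for (int customer = 1; customer <= 9; customer++) {
            try {
                pool.execute(() -> {
                    try {
                        closeBank.await();   // every customer keeps a counter busy
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++;                  // default policy: the caller gets an exception
            }
        }
        // Customers 1-2 use the regular counters, 3-5 wait in the hall,
        // 6-8 open the temporary counters, and customer 9 is rejected.
        int[] result = {pool.getPoolSize(), pool.getQueue().size(), rejected};
        closeBank.countDown();
        pool.shutdown();
        return result;
    }

    public static void main(String[] args) {
        int[] r = serveNineCustomers();
        System.out.println("threads=" + r[0] + ", queued=" + r[1] + ", rejected=" + r[2]);
    }
}
```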

Status of thread pool

The thread pool stores its state in the high 3 bits of an int variable, and the number of worker threads in the low 29 bits

| Status name | High 3 bits | Accepts new tasks | Processes queued tasks | Description |
|---|---|---|---|---|
| Running | 111 | Y | Y | Accepts and processes tasks normally |
| Shutdown | 000 | N | Y | Does not accept new tasks, but finishes the tasks being executed and the tasks in the blocking queue |
| Stop | 001 | N | N | Does not accept new tasks, interrupts the tasks being executed, and discards the tasks in the blocking queue |
| Tidying | 010 | N | N | All tasks have finished and the number of active threads is 0; the pool is about to terminate |
| Terminated | 011 | N | N | Final state |

// runState is stored in the high-order bits

private static final int RUNNING = -1 << COUNT_BITS;

private static final int SHUTDOWN = 0 << COUNT_BITS;

private static final int STOP = 1 << COUNT_BITS;

private static final int TIDYING = 2 << COUNT_BITS;

private static final int TERMINATED = 3 << COUNT_BITS;
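How one int can hold both the state and the worker count is easier to see in isolation. The following is a simplified sketch of the bit layout, not the JDK class itself; the class `CtlLayoutDemo` and the helper `highBits` are assumptions for illustration, and the mask constant may be named differently depending on the JDK version.

```java
// Simplified sketch of the bit layout; hypothetical class, not the JDK source.
class CtlLayoutDemo {
    static final int COUNT_BITS = Integer.SIZE - 3;        // 29 low bits for the worker count
    static final int COUNT_MASK = (1 << COUNT_BITS) - 1;   // low 29 bits set to 1

    static final int RUNNING    = -1 << COUNT_BITS;
    static final int SHUTDOWN   =  0 << COUNT_BITS;
    static final int STOP       =  1 << COUNT_BITS;
    static final int TIDYING    =  2 << COUNT_BITS;
    static final int TERMINATED =  3 << COUNT_BITS;

    // Pack a state and a worker count into one int, and unpack them again.
    static int ctlOf(int runState, int workerCount) { return runState | workerCount; }
    static int runStateOf(int ctl)                  { return ctl & ~COUNT_MASK; }
    static int workerCountOf(int ctl)               { return ctl & COUNT_MASK; }

    // The high 3 bits of a state as a 3-character binary string.
    static String highBits(int state) {
        String bits = Integer.toBinaryString(state >>> COUNT_BITS);
        return "000".substring(bits.length()) + bits;
    }

    public static void main(String[] args) {
        int ctl = ctlOf(RUNNING, 5);
        System.out.println("RUNNING high bits: " + highBits(RUNNING));
        System.out.println("worker count: " + workerCountOf(ctl));
        System.out.println("still RUNNING: " + (runStateOf(ctl) == RUNNING));
    }
}
```

Because RUNNING is negative and the other states are not, the states are ordered numerically, which lets the pool compare them with simple `<` and `>=` checks.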

Main process of thread pool

Steps by which the thread pool creates threads, accepts tasks, executes them, and recycles threads

- After a thread pool is created, its state is Running, and the following steps apply in this state

- When a task is submitted and the number of worker threads is below corePoolSize, the thread pool creates a new thread to process the task

- Once the number of worker threads reaches corePoolSize, newly submitted tasks enter the blocking queue

- When the blocking queue is full, newly submitted tasks cause emergency threads to be created to process them

- When the blocking queue is full and the number of worker threads has reached maximumPoolSize, the rejection policy is executed

- When a thread has waited keepAliveTime for a task without getting one, and the number of worker threads is greater than corePoolSize, the thread is recycled

Note: it is not the case that threads created first are core threads and threads created later are non-core threads. The pool does not mark threads as core or non-core at all; it only compares the current number of worker threads with corePoolSize. I misunderstood this for a long time.

Rejection policy

- AbortPolicy: throws RejectedExecutionException to the caller (default policy)

- CallerRunsPolicy: lets the caller's thread run the task

- DiscardPolicy: discards this task

- DiscardOldestPolicy: discards the oldest task in the blocking queue and then adds this task
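As a sketch of one of the policies listed above, the following example fills a pool of one thread and a queue of one slot, then submits a third task under `CallerRunsPolicy`. The class `RejectPolicyDemo` and its method name are assumptions for illustration. The overflow task ends up running on the submitting thread.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; the names are illustrative and not from the article.
class RejectPolicyDemo {

    // Returns the name of the thread that ran the task the pool could not accept.
    static String threadThatRunsOverflowTask() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1),
                new ThreadPoolExecutor.CallerRunsPolicy());
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        pool.execute(blocker);   // occupies the only worker thread
        pool.execute(blocker);   // fills the only queue slot

        String[] ranOn = new String[1];
        // Pool and queue are full, so the policy runs this task in the calling thread
        pool.execute(() -> ranOn[0] = Thread.currentThread().getName());

        release.countDown();
        pool.shutdown();
        return ranOn[0];
    }

    public static void main(String[] args) {
        System.out.println("overflow task ran on: " + threadThatRunsOverflowTask());
    }
}
```

Running the task on the caller also slows the caller down, which acts as a simple form of back pressure.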

How to submit a task

// Execute Runnable

public void execute(Runnable command) {

if (command == null)

throw new NullPointerException();

int c = ctl.get();

if (workerCountOf(c) < corePoolSize) {

if (addWorker(command, true))

return;

c = ctl.get();

}

if (isRunning(c) && workQueue.offer(command)) {

int recheck = ctl.get();

if (! isRunning(recheck) && remove(command))

reject(command);

else if (workerCountOf(recheck) == 0)

addWorker(null, false);

}

else if (!addWorker(command, false))

reject(command);

}

// Submit Callable

public <T> Future<T> submit(Callable<T> task) {

if (task == null) throw new NullPointerException();

// Build FutureTask internally

RunnableFuture<T> ftask = newTaskFor(task);

execute(ftask);

return ftask;

}

// Submit Runnable; the returned Future yields null when the task completes

public Future<?> submit(Runnable task) {

if (task == null) throw new NullPointerException();

// Build FutureTask internally

RunnableFuture<Void> ftask = newTaskFor(task, null);

execute(ftask);

return ftask;

}

// Submit Runnable and specify the return value

public <T> Future<T> submit(Runnable task, T result) {

if (task == null) throw new NullPointerException();

// Build FutureTask internally

RunnableFuture<T> ftask = newTaskFor(task, result);

execute(ftask);

return ftask;

}

protected <T> RunnableFuture<T> newTaskFor(Runnable runnable, T value) {

return new FutureTask<T>(runnable, value);

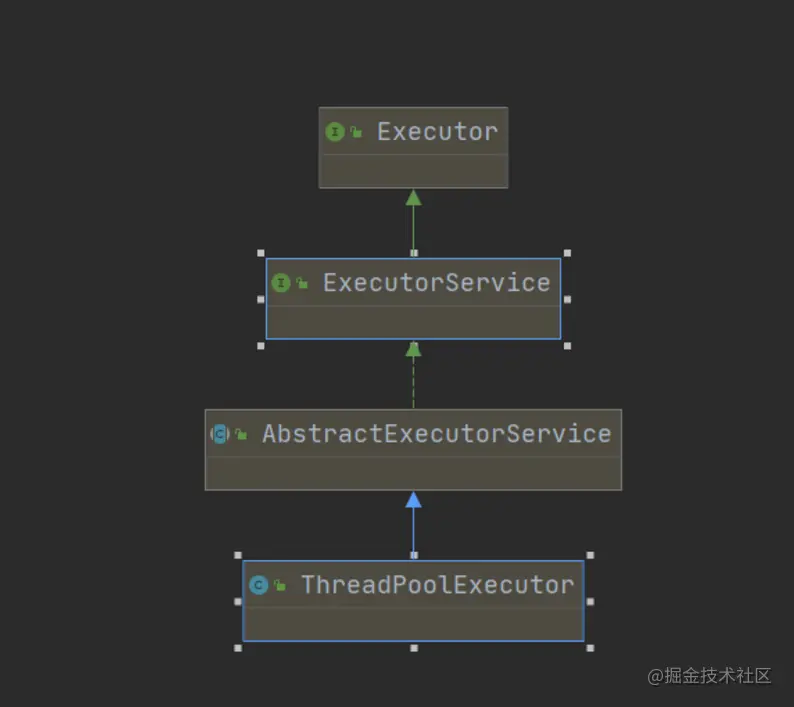

}Execetors create thread pool

Note: creating thread pools with the following methods is not recommended

1.newFixedThreadPool

public static ExecutorService newFixedThreadPool(int nThreads) {

return new ThreadPoolExecutor(nThreads, nThreads,

0L, TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<Runnable>());

}

- Number of core threads = maximum number of threads, so there are no emergency threads

- The unbounded blocking queue may cause OOM (OutOfMemoryError)

2.newCachedThreadPool

public static ExecutorService newCachedThreadPool() {

return new ThreadPoolExecutor(0, Integer.MAX_VALUE,

60L, TimeUnit.SECONDS,

new SynchronousQueue<Runnable>());

}

- The number of core threads is 0, the maximum number of threads is effectively unlimited, and emergency threads are recycled after being idle for 60 seconds

- The queue is a SynchronousQueue, which has no capacity: a task cannot be put in unless a thread is there to take it

- This may create too many threads and put too much load on the CPU

3.newSingleThreadExecutor

public static ExecutorService newSingleThreadExecutor() {

return new FinalizableDelegatedExecutorService

(new ThreadPoolExecutor(1, 1,

0L, TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<Runnable>()));

}

- The number of core threads and the maximum number of threads are both 1. There are no emergency threads, and the unbounded queue can keep accepting tasks

- Tasks are executed serially, one by one. The wrapper class hides the thread pool's setter methods, so parameters such as corePoolSize cannot be modified

- If the thread terminates because a task throws an exception, a new thread is created to continue executing the remaining tasks

- May cause OOM

4.newScheduledThreadPool

public static ScheduledExecutorService newScheduledThreadPool(int corePoolSize) {

return new ScheduledThreadPoolExecutor(corePoolSize);

}

- A thread pool for task scheduling. Tasks can be run after a specified delay and repeated at a specified interval
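A brief sketch of both scheduling styles follows: `schedule` for a one-shot delayed task and `scheduleAtFixedRate` for a repeating one. The class `ScheduledPoolDemo`, the delays, and the latch used to count runs are assumptions chosen for illustration.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; the names are illustrative and not from the article.
class ScheduledPoolDemo {

    // Returns {result of the delayed task, 3 if the periodic task ran at least three times else 0}.
    static int[] run() {
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(2);
        try {
            // One-shot task: runs once after a 50 ms delay
            Callable<Integer> answer = () -> 42;
            ScheduledFuture<Integer> delayed = pool.schedule(answer, 50, TimeUnit.MILLISECONDS);

            // Periodic task: first run immediately, then every 20 ms
            CountDownLatch threeRuns = new CountDownLatch(3);
            ScheduledFuture<?> periodic = pool.scheduleAtFixedRate(
                    threeRuns::countDown, 0, 20, TimeUnit.MILLISECONDS);

            int value = delayed.get();                                   // blocks until the delayed task ran
            boolean ranThreeTimes = threeRuns.await(5, TimeUnit.SECONDS);
            periodic.cancel(false);                                      // stop further periodic runs
            return new int[] {value, ranThreeTimes ? 3 : 0};
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("delayed result=" + r[0] + ", periodic runs seen=" + r[1]);
    }
}
```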

Thread pool shutdown

shutdown()

It changes the thread pool state to Shutdown. The pool no longer accepts new tasks, but finishes the tasks being executed by worker threads and the tasks in the blocking queue. This is equivalent to a graceful shutdown

public void shutdown() {

final ReentrantLock mainLock = this.mainLock;

mainLock.lock();

try {

checkShutdownAccess();

advanceRunState(SHUTDOWN);

interruptIdleWorkers();

onShutdown(); // hook for ScheduledThreadPoolExecutor

} finally {

mainLock.unlock();

}

tryTerminate();

}

shutdownNow()

It changes the thread pool state to Stop. The pool no longer accepts new tasks, immediately interrupts the worker threads that are executing tasks, does not execute the tasks in the blocking queue, and returns the list of tasks that were in the blocking queue
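The difference between the two shutdown methods can be sketched as follows. The class `ShutdownDemo` and its helpers are assumptions for illustration; both methods use a single-thread pool so that the second and third tasks are guaranteed to wait in the queue.

```java
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; the names are illustrative and not from the article.
class ShutdownDemo {

    static ThreadPoolExecutor newSingleThreadPool() {
        return new ThreadPoolExecutor(1, 1, 0, TimeUnit.SECONDS, new ArrayBlockingQueue<>(10));
    }

    // shutdown(): tasks that were already submitted still run. Returns how many completed.
    static int gracefulShutdown() {
        ThreadPoolExecutor pool = newSingleThreadPool();
        AtomicInteger done = new AtomicInteger();
        for (int i = 0; i < 3; i++) {
            pool.execute(done::incrementAndGet);
        }
        pool.shutdown();
        awaitTermination(pool);
        return done.get();
    }

    // shutdownNow(): the running task is interrupted and the queued tasks are handed back.
    static int abruptShutdown() {
        ThreadPoolExecutor pool = newSingleThreadPool();
        CountDownLatch started = new CountDownLatch(1);
        pool.execute(() -> {
            started.countDown();
            try {
                Thread.sleep(10_000);
            } catch (InterruptedException e) {
                // interrupted by shutdownNow(); just finish
            }
        });
        pool.execute(() -> { });   // waits in the queue
        pool.execute(() -> { });   // waits in the queue
        try {
            started.await();       // make sure the first task is really running
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        List<Runnable> neverRun = pool.shutdownNow();
        awaitTermination(pool);
        return neverRun.size();
    }

    static void awaitTermination(ThreadPoolExecutor pool) {
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("completed after shutdown(): " + gracefulShutdown());
        System.out.println("returned by shutdownNow(): " + abruptShutdown());
    }
}
```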

public List<Runnable> shutdownNow() {

List<Runnable> tasks;

final ReentrantLock mainLock = this.mainLock;

mainLock.lock();

try {

checkShutdownAccess();

advanceRunState(STOP);

interruptWorkers();

tasks = drainQueue();

} finally {

mainLock.unlock();

}

tryTerminate();

return tasks;

}

Correct way to use thread pools

The difficulty of thread pools lies in configuring the parameters. In theory they can be configured as follows

CPU intensive: the program mainly performs CPU computation

Number of core threads = number of CPU cores + 1

IO intensive: remote RPC calls, database operations, and so on, which spend most of their time waiting rather than computing on the CPU. Most application scenarios are of this kind

Number of core threads = number of cores * expected CPU utilization * total time / CPU computation time, where total time is CPU computation time plus waiting time

However, even with this theory the parameters are hard to configure, because the CPU computation time is hard to estimate
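As a worked instance of the two formulas above, here is a small calculator. The class `PoolSizeEstimate` and the sample timings are assumptions for illustration; real values would have to come from measurement.

```java
// Hypothetical helper class; the names and sample numbers are illustrative.
class PoolSizeEstimate {

    // CPU intensive: number of cores + 1
    static int cpuIntensive(int cores) {
        return cores + 1;
    }

    // IO intensive: cores * expected CPU utilization * total time / CPU time, rounded up
    static int ioIntensive(int cores, double expectedUtilization, double totalMillis, double cpuMillis) {
        return (int) Math.ceil(cores * expectedUtilization * totalMillis / cpuMillis);
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("cores: " + cores);
        System.out.println("CPU intensive estimate: " + cpuIntensive(cores));
        // Assumed sample: each request takes 100 ms in total, 10 ms of which is CPU work
        System.out.println("IO intensive estimate: " + ioIntensive(cores, 1.0, 100, 10));
    }
}
```

For example, with 8 cores, full expected utilization, and a request that takes 100 ms of which 10 ms is CPU work, the formula gives 8 * 1.0 * 100 / 10 = 80 threads.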

Refer to the following table for the actual configuration size

| | CPU intensive | IO intensive |
|---|---|---|
| Number of threads | Number of cores <= x <= number of cores * 2 | Number of cores * 50 <= x <= number of cores * 100 |
| Queue length | y >= 100 | 1 <= y <= 10 |

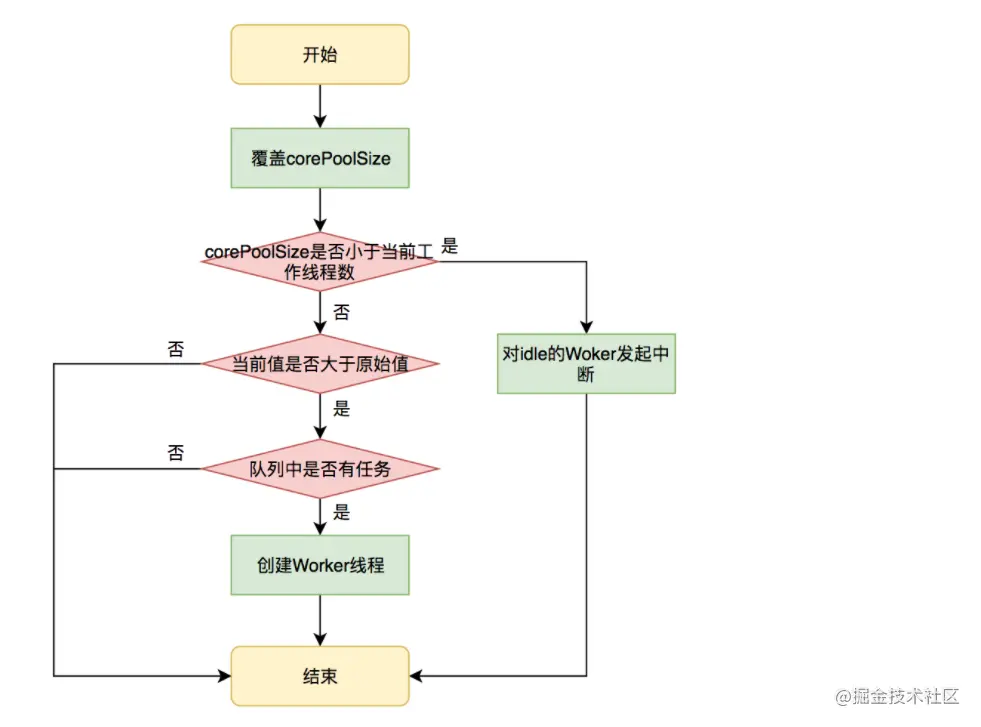

1. Make thread pool parameters configurable through a distributed configuration center, so they can be changed without restarting the application

Thread pool parameters need to change with the volume of online requests. Ideally the number of core threads, the maximum number of threads, and the queue size are all configurable

Mainly configure corePoolSize, maximumPoolSize, and queueSize

Java provides setter methods to override these parameters, and the thread pool handles the change internally so that it takes effect smoothly

public void setCorePoolSize(int corePoolSize) {

}
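A sketch of applying new sizes at runtime is shown below. The class `DynamicPoolConfigDemo` and the method `resize` are assumptions for illustration; in a real system the new values would arrive from the configuration center. The order of the two setter calls is chosen so that corePoolSize never exceeds maximumPoolSize in between, because some JDK versions reject such an intermediate state with an IllegalArgumentException.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; the names are illustrative and not from the article.
class DynamicPoolConfigDemo {

    // Applies new sizes while keeping corePoolSize <= maximumPoolSize at every step.
    static void resize(ThreadPoolExecutor pool, int newCore, int newMax) {
        if (newCore < 0 || newMax <= 0 || newCore > newMax) {
            throw new IllegalArgumentException("require 0 <= core <= max and max > 0");
        }
        if (newMax >= pool.getMaximumPoolSize()) {
            pool.setMaximumPoolSize(newMax);   // growing: raise the ceiling first
            pool.setCorePoolSize(newCore);
        } else {
            pool.setCorePoolSize(newCore);     // shrinking: lower the core size first
            pool.setMaximumPoolSize(newMax);
        }
    }

    // Grows a 2/5 pool to 4/10 and then shrinks it to 1/2; returns the sizes after each step.
    static int[] demo() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 5, 60, TimeUnit.SECONDS, new ArrayBlockingQueue<>(100));
        try {
            resize(pool, 4, 10);
            int core1 = pool.getCorePoolSize();
            int max1 = pool.getMaximumPoolSize();
            resize(pool, 1, 2);
            return new int[] {core1, max1, pool.getCorePoolSize(), pool.getMaximumPoolSize()};
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(demo()));
    }
}
```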

2. Add thread pool monitoring
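For monitoring, `ThreadPoolExecutor` already exposes the relevant counters through getters. The sketch below collects them into one line; the class `PoolMonitorDemo` and the output format are assumptions for illustration, and in practice the values would be reported to a metrics system periodically.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; the names are illustrative and not from the article.
class PoolMonitorDemo {

    // Collects the main runtime counters of a pool into one line of text.
    static String snapshot(ThreadPoolExecutor pool) {
        return String.format("poolSize=%d, active=%d, queued=%d, completed=%d, largest=%d",
                pool.getPoolSize(), pool.getActiveCount(), pool.getQueue().size(),
                pool.getCompletedTaskCount(), pool.getLargestPoolSize());
    }

    // One running task and two queued tasks on a single-thread pool.
    static String snapshotOfBusyPool() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0, TimeUnit.SECONDS, new ArrayBlockingQueue<>(10));
        CountDownLatch started = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        pool.execute(() -> {
            started.countDown();
            awaitQuietly(release);   // keep the worker busy until the snapshot is taken
        });
        pool.execute(() -> { });
        pool.execute(() -> { });
        awaitQuietly(started);
        String line = snapshot(pool);
        release.countDown();
        pool.shutdown();
        return line;
    }

    static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println(snapshotOfBusyPool());
    }
}
```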

3. For IO-intensive workloads, the pool can be adjusted so that new tasks first create threads up to the maximum number of threads, and only then are placed in the blocking queue

This is done mainly by overriding the blocking queue's method for adding a task

public boolean offer(Runnable runnable) {

if (executor == null) {

throw new RejectedExecutionException("The task queue does not have executor!");

}

final ReentrantLock lock = this.lock;

lock.lock();

try {

int currentPoolThreadSize = executor.getPoolSize();

// If fewer tasks have been submitted than there are threads, some threads are idle. Note that getTaskCount() counts every task ever scheduled, so a counter of tasks currently in flight fits this check better

if (executor.getTaskCount() < currentPoolThreadSize) {

// Put the task in the queue and let the thread process the task

return super.offer(runnable);

}

// Core changes

// If the current number of threads is less than the maximum number of threads, false is returned to let the thread pool create new threads

if (currentPoolThreadSize < executor.getMaximumPoolSize()) {

return false;

}

// Otherwise, put the task in the queue

return super.offer(runnable);

} finally {

lock.unlock();

}

}

4. For the rejection policy, it is recommended to follow Tomcat's approach (give the task one more chance)

// tomcat source code

@Override

public void execute(Runnable command) {

if ( executor != null ) {

try {

executor.execute(command);

} catch (RejectedExecutionException rx) {

// After the rejection is caught, try once more to force the task into the queue, which is equivalent to one retry. If that also fails, the task is rejected

if ( !( (TaskQueue) executor.getQueue()).force(command) ) throw new RejectedExecutionException("Work queue full.");

}

} else throw new IllegalStateException("StandardThreadPool not started.");

}

It is also recommended to use the queue's timed offer method for this retry: if the task still cannot be added within the timeout (for example 1 minute), give up and return false

public boolean offer(E e, long timeout, TimeUnit unit){}

Epilogue

I have been working for three or four years and had never formally written a blog; my self-study was always accumulated in notes. Recently I studied Java multithreading again and decided to spend the weekend writing it up seriously and sharing it.

The article is long, so a big thumbs-up to everyone who has read this far! Because my level is limited and this is my first blog, there are bound to be mistakes in the article. Feedback and corrections are welcome.