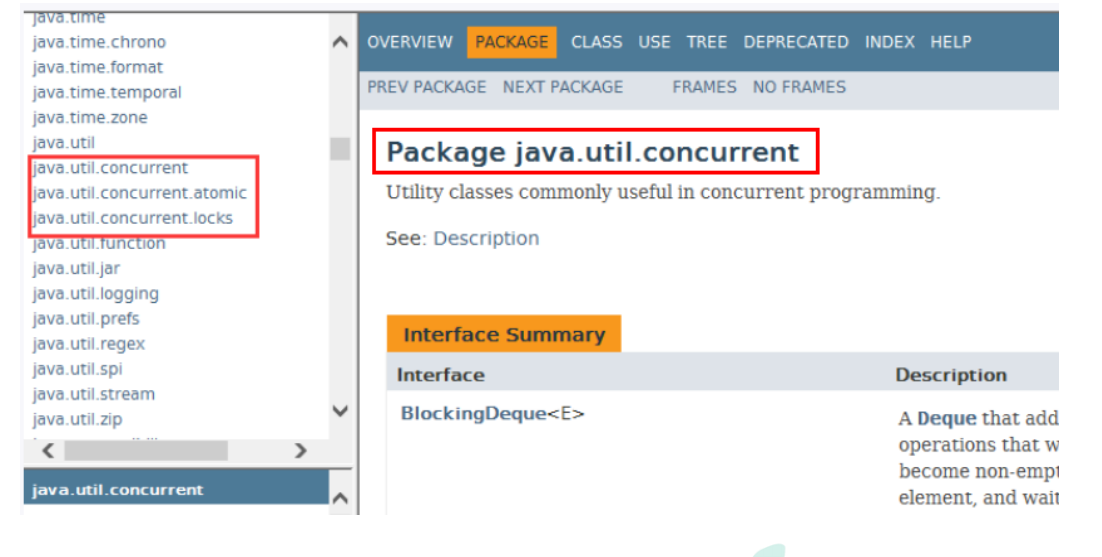

1 What is JUC

1.1 Introduction to JUC

Threads are a core topic in Java, and JUC is all about them. JUC is short for java.util.concurrent, the concurrency utility package for working with threads. It was introduced in JDK 1.5.

1.2 Processes and threads

A process is one execution of a program over a set of data. It is the basic unit of resource allocation and scheduling in the system and the foundation of the operating system's structure. In modern thread-oriented computer architectures, a process is the container for threads. A program is a description of instructions, data and their organization; a process is the running instance of that program.

A thread is the smallest unit of execution that the operating system can schedule. It is contained in a process and is the unit that actually does the work within it. A thread is a single sequential flow of control in a process. A process can run multiple threads concurrently, each executing a different task.

In summary:

Process: an application running in the system. Once a program runs, it is a process. A process is the smallest unit of resource allocation.

Thread: the basic unit to which the system allocates processor time, that is, a flow of execution that runs independently within a process. A thread is the smallest unit of program execution.

1.3 Thread states

As of JDK 1.8, a thread can be in one of six states:

public enum State {

/**

* Thread state for a thread which has not yet started.

*/

NEW, // newly created

/**

* Thread state for a runnable thread. A thread in the runnable

* state is executing in the Java virtual machine but it may

* be waiting for other resources from the operating system

* such as processor.

*/

RUNNABLE, // ready or running

/**

* Thread state for a thread blocked waiting for a monitor lock.

* A thread in the blocked state is waiting for a monitor lock

* to enter a synchronized block/method or

* reenter a synchronized block/method after calling

* {@link Object#wait() Object.wait}.

*/

BLOCKED, // blocked

/**

* Thread state for a waiting thread.

* A thread is in the waiting state due to calling one of the

* following methods:

* <ul>

* <li>{@link Object#wait() Object.wait} with no timeout</li>

* <li>{@link #join() Thread.join} with no timeout</li>

* <li>{@link LockSupport#park() LockSupport.park}</li>

* </ul>

*

* <p>A thread in the waiting state is waiting for another thread to

* perform a particular action.

*

* For example, a thread that has called <tt>Object.wait()</tt>

* on an object is waiting for another thread to call

* <tt>Object.notify()</tt> or <tt>Object.notifyAll()</tt> on

* that object. A thread that has called <tt>Thread.join()</tt>

* is waiting for a specified thread to terminate.

*/

WAITING, // waits until notified, with no time limit

/**

* Thread state for a waiting thread with a specified waiting time.

* A thread is in the timed waiting state due to calling one of

* the following methods with a specified positive waiting time:

* <ul>

* <li>{@link #sleep Thread.sleep}</li>

* <li>{@link Object#wait(long) Object.wait} with timeout</li>

* <li>{@link #join(long) Thread.join} with timeout</li>

* <li>{@link LockSupport#parkNanos LockSupport.parkNanos}</li>

* <li>{@link LockSupport#parkUntil LockSupport.parkUntil}</li>

* </ul>

*/

TIMED_WAITING, // waits only until the timeout expires

/**

* Thread state for a terminated thread.

* The thread has completed execution.

*/

TERMINATED; // finished

}
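The states can be observed at runtime with Thread.getState(). The following is a minimal sketch, not part of the original article: the class name ThreadStateDemo and the method observe() are made up for illustration, and the polling loop is just one simple way to catch the thread while it sleeps.

```java
class ThreadStateDemo {
    /** Returns the states seen before start(), during sleep(), and after join(). */
    static Thread.State[] observe() {
        Thread t = new Thread(() -> {
            try {
                Thread.sleep(1000);             // timed wait
            } catch (InterruptedException e) {
                // woken early by interrupt(): just finish
            }
        });
        Thread.State before = t.getState();     // not started yet
        t.start();
        Thread.State during;
        do {                                     // poll until the thread is asleep
            Thread.yield();
            during = t.getState();
        } while (during != Thread.State.TIMED_WAITING
                && during != Thread.State.TERMINATED);
        t.interrupt();                           // cut the sleep short
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new Thread.State[] {before, during, t.getState()};
    }

    public static void main(String[] args) {
        for (Thread.State s : observe()) {
            System.out.println(s);
        }
    }
}
```

The three values correspond to a thread that has not been started, one that is inside Thread.sleep, and one whose run method has returned.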

The difference between wait and sleep

(1) sleep is a static method of Thread; wait is a method of Object and can be called on any object instance.

(2) sleep does not release the lock, nor does it require holding one. wait releases the lock, but it can only be called while the current thread holds that lock (that is, the call must be inside synchronized code).

(3) Both can be interrupted by calling interrupt() on the thread.
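Point (2) can be checked with a small experiment. In this sketch (the class WaitReleasesLockDemo and its fields are hypothetical names), one thread calls wait() inside a synchronized block, and the calling thread then enters a synchronized block on the same object, which would be impossible if wait() kept the monitor.

```java
class WaitReleasesLockDemo {
    private final Object lock = new Object();
    private boolean ready = false;               // guarded by lock

    /** Returns true once the waiting thread has been woken and has finished. */
    boolean run() {
        Thread waiter = new Thread(() -> {
            synchronized (lock) {
                while (!ready) {                 // loop guards against spurious wakeups
                    try {
                        lock.wait();             // gives up the monitor while waiting
                    } catch (InterruptedException e) {
                        return;
                    }
                }
            }
        }, "waiter");
        waiter.start();
        // Wait until the waiter is parked inside lock.wait().
        while (waiter.getState() != Thread.State.WAITING) {
            Thread.yield();
        }
        // Entering this block is only possible because wait() released the monitor.
        synchronized (lock) {
            ready = true;
            lock.notifyAll();
        }
        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !waiter.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("waiter finished: " + new WaitReleasesLockDemo().run());
    }
}
```

Replacing lock.wait() with Thread.sleep(...) in the waiter would keep the monitor held for the whole sleep, so the second synchronized block could not be entered until the sleep ended.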

1.4 Concurrency and parallelism

1.4.1 Serial mode

Serial means that all tasks are carried out one after another in order. For example, a truckload of firewood must be loaded before it can be transported, and it can be unloaded only after it has arrived; the next step can begin only after the previous one is complete.

In serial mode, only one task is taken at a time, and only that task is executed.

1.4.2 Parallel mode

Parallel means that multiple tasks can be taken at the same time and executed at the same time. Parallel mode is like splitting one long queue into several short ones, so it shortens the task queue. At the code level, parallelism relies on multi-process or multi-threaded code; at the hardware level, it relies on a multi-core CPU.

1.4.3 Concurrency

Concurrency is the phenomenon of multiple programs appearing to run at the same time; more specifically, multiple processes or multiple instruction streams. There is no need to be pedantic about the wording: the point is that concurrency describes a phenomenon. On a single-core CPU only one thread can actually run at any instant, so "running at the same time" here does not mean that several threads execute simultaneously (that would be parallelism). Instead, the system gives the user the impression that multiple programs are running at once, while in fact their processes do not occupy the CPU continuously but alternate between running and pausing.

To handle high concurrency, a large task is usually split into many small tasks. Because the operating system schedules processes in no predictable order, execution may start from any of the small tasks, which can lead to the following situations:

- One small task may run many times before the next one has even started. Queues or similar data structures are generally used to hold the results of the small tasks.

- The second step may run before the first step is ready. Multiplexing or asynchronous techniques are generally used here; for example, a task runs only when an event notification says it is ready.

- The small tasks can be executed in parallel by multiple processes or threads. They can also be executed by a single process or thread, in which case multiplexing is usually needed to achieve high efficiency.

1.4.4 Summary (key points)

Concurrency: multiple threads access the same resource at the same time; many threads contend for a single point.

Example: grabbing train tickets during the Spring Festival travel rush, e-commerce flash sales

Parallelism: multiple tasks are executed together and their results are then combined.

Example: making instant noodles, where the kettle boils water while you tear open the seasoning and pour it into the bowl
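The "execute together, then combine" idea can be sketched with a thread pool. This is only an illustration: the class ParallelSum, its sum method and the chunking scheme are assumptions made for the example, and whether the chunks truly run in parallel depends on the number of available cores.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelSum {
    /** Sums 1..n by splitting the range into `parts` chunks, one task per chunk. */
    static long sum(int n, int parts) {
        ExecutorService pool = Executors.newFixedThreadPool(parts);
        try {
            List<Callable<Long>> tasks = new ArrayList<>();
            int chunk = (n + parts - 1) / parts;         // ceiling division
            for (int p = 0; p < parts; p++) {
                final int from = p * chunk + 1;
                final int to = Math.min(n, from + chunk - 1);
                tasks.add(() -> {
                    long partial = 0;
                    for (int i = from; i <= to; i++) {
                        partial += i;
                    }
                    return partial;
                });
            }
            long total = 0;
            for (Future<Long> f : pool.invokeAll(tasks)) { // run the small tasks
                total += f.get();                          // combine the results
            }
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(sum(100, 4));
    }
}
```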

1.5 Monitors

A monitor guarantees that at most one process (or thread) is active inside the monitor at any time; that is, the operations defined in the monitor are invoked by only one process at a time (this is enforced by the compiler). It does not, however, guarantee that processes execute in the intended order.

Synchronization in the JVM is based on entering and exiting monitor objects. Every object has an associated monitor, which is created and destroyed together with the Java object.

A thread must hold the monitor before executing a synchronized method and releases it when the method completes. While the method holds the monitor, no other thread can acquire the same monitor.

1.6 User threads and daemon threads

User thread: an ordinary thread, such as the threads you normally create yourself.

Daemon thread: a special thread that runs in the background, such as the garbage collector.

When the main thread ends, the JVM stays alive as long as a user thread is still running.

Once no user threads remain and only daemon threads are left, the JVM exits.
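A thread becomes a daemon through Thread.setDaemon(true), which must be called before start(). The sketch below uses a hypothetical class DaemonDemo with a made-up helper newBackgroundThread(); the endless loop stands in for any background work.

```java
class DaemonDemo {
    /** Creates (but does not start) a background thread marked as a daemon. */
    static Thread newBackgroundThread() {
        Thread t = new Thread(() -> {
            while (true) {                       // background work that never finishes
                try {
                    Thread.sleep(100);
                } catch (InterruptedException e) {
                    return;                      // stop when interrupted
                }
            }
        }, "background");
        t.setDaemon(true);  // must happen before start(); afterwards it throws
                            // IllegalThreadStateException
        return t;
    }

    public static void main(String[] args) {
        Thread t = newBackgroundThread();
        t.start();
        System.out.println(t.getName() + " daemon=" + t.isDaemon());
        // main returns here; only a daemon thread is left, so the JVM exits
    }
}
```

Without the setDaemon(true) call the same thread would be a user thread, and the JVM would keep running after main returns.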

2 Lock interface

2.1 Synchronized

2.1.1 Synchronized keyword review

synchronized is a Java keyword that provides a synchronization lock. It can be applied in the following ways:

- On a code block. The block is called a synchronized statement. Its scope is the code enclosed in braces {}, and the lock is the object given in the parentheses after synchronized.

- On a method. The method is called a synchronized method. Its scope is the whole method, and the lock is the object on which the method is called.

- On a static method. Its scope is the whole static method, and the lock applies to all objects of the class.

- On a class. Its scope is the block following synchronized(ClassName.class), and the lock applies to all objects of the class.

Although synchronized can appear in a method declaration, it is not part of the method signature, so it is not inherited. If a parent-class method is synchronized and a subclass overrides it, the overriding method is not synchronized unless the keyword is added explicitly. The subclass method can also call the parent's synchronized method; the subclass method itself is then not synchronized, but the call into the parent is, so the effect is equivalent.
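The four forms can be put side by side in one class. This is an illustrative sketch; SyncForms and its counters are invented names, and the run method exists only to exercise the four forms from several threads.

```java
class SyncForms {
    private static int classCounter = 0;    // guarded by SyncForms.class
    private int instanceCounter = 0;        // guarded by this

    synchronized void incInstance() {       // synchronized method: locks this
        instanceCounter++;
    }

    void incInstanceBlock() {
        synchronized (this) {               // synchronized block: same monitor as above
            instanceCounter++;
        }
    }

    static synchronized void incClass() {   // static synchronized: locks SyncForms.class
        classCounter++;
    }

    static void incClassBlock() {
        synchronized (SyncForms.class) {    // class-literal block: same monitor as above
            classCounter++;
        }
    }

    /** Runs all four forms from several threads; returns {instance total, class total}. */
    static int[] run(int threads, int perThread) {
        SyncForms shared = new SyncForms();
        int classBefore;
        synchronized (SyncForms.class) {
            classBefore = classCounter;
        }
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    shared.incInstance();
                    shared.incInstanceBlock();
                    incClass();
                    incClassBlock();
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        synchronized (SyncForms.class) {
            return new int[] {shared.instanceCounter, classCounter - classBefore};
        }
    }
}
```

Because each pair of forms shares a monitor, no increment is lost: with 4 threads and 1000 iterations, both totals come out as 8000.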

2.1.2 Ticket-selling example

class Ticket {
    // Number of tickets remaining
    private int number = 30;

    // Sell one ticket
    public synchronized void sale() {
        // Only sell while tickets remain
        if (number > 0) {
            System.out.println(Thread.currentThread().getName() + " sold ticket " + (number--) + ", remaining " + number);
        }
    }
}

If a code block is marked synchronized, then once one thread has acquired the lock and is executing the block, other threads can only wait until that thread releases the lock. The thread holding the lock releases it in only two cases:

1) The thread finishes executing the code block and gives up the lock;

2) An exception occurs during execution, and the JVM makes the thread release the lock automatically.

If the thread holding the lock blocks, for example while waiting for I/O or after calling sleep, it does not release the lock, and the other threads can do nothing but wait. This can seriously hurt the program's throughput.

A mechanism is therefore needed that keeps waiting threads from waiting indefinitely (for example, waiting only for a limited time, or being able to respond to interrupts). Lock provides this.

2.2 What is Lock

Lock implementations provide more extensive locking operations than synchronized methods and statements. They allow more flexible structuring, may have quite different properties, and may support multiple associated Condition objects. In short, Lock offers more functionality than synchronized.

2.2.1 Lock interface

Differences between Lock and synchronized:

- synchronized is a keyword and therefore a built-in feature of the Java language. Lock is not built into the language; it is an interface whose implementations provide synchronized access.

- With synchronized, the user never releases the lock manually: when the synchronized method or block finishes, the thread releases the lock automatically. With Lock, the user must release the lock explicitly; failing to do so can lead to deadlock.

2.2.2 lock

The lock() method is the most commonly used one. It acquires the lock, waiting if the lock is currently held by another thread.

A Lock must be released explicitly; it is not released automatically when an exception occurs. Therefore the guarded work should run inside a try block, with unlock() in the finally block, so that the lock is always released and deadlock is avoided. A typical use of Lock for synchronization looks like this:

Lock lock = ...;
lock.lock();
try {
    // Process the task
} catch (Exception e) {
    // Handle or log the exception instead of swallowing it
} finally {
    lock.unlock(); // Release the lock
}
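Where blocking indefinitely in lock() is not acceptable, the Lock interface also offers tryLock(long, TimeUnit), which waits only for a bounded time, responds to interrupts, and reports whether the lock was obtained. A minimal sketch follows; the class TryLockDemo, its helper methods and the 50 ms timeout are illustrative assumptions.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {
    /** Asks a second thread to take the lock, waiting at most timeoutMillis. */
    static boolean otherThreadCanLock(ReentrantLock lock, long timeoutMillis) {
        boolean[] acquired = {false};
        Thread t = new Thread(() -> {
            try {
                if (lock.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
                    try {
                        acquired[0] = true;      // got the lock within the timeout
                    } finally {
                        lock.unlock();
                    }
                }                                // else: gave up instead of blocking forever
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // interrupted while waiting
            }
        });
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return acquired[0];
    }

    /** Returns {result while the caller holds the lock, result after release}. */
    static boolean[] demo() {
        ReentrantLock lock = new ReentrantLock();
        boolean whileHeld;
        lock.lock();
        try {
            whileHeld = otherThreadCanLock(lock, 50);
        } finally {
            lock.unlock();
        }
        return new boolean[] {whileHeld, otherThreadCanLock(lock, 50)};
    }

    public static void main(String[] args) {
        boolean[] r = demo();
        System.out.println("while held: " + r[0] + ", after release: " + r[1]);
    }
}
```

The second thread fails to get the lock while the caller holds it and succeeds once the lock has been released, without ever waiting longer than the timeout.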

2.2.3 newCondition

The synchronized keyword works together with wait()/notify() to implement the wait/notify pattern. The newCondition() method of Lock returns a Condition object, which can implement the same pattern.

notify() wakes up an arbitrary waiting thread chosen by the JVM, whereas Condition allows selective notification. Its two most commonly used methods are:

- await() makes the current thread wait and releases the lock. After another thread calls signal(), the thread re-acquires the lock and continues.

- signal() wakes up one waiting thread.

Note: a thread must hold the associated Lock before calling await() or signal() on a Condition. Calling await() releases the Lock. Calling signal() wakes up one thread from the waiting queue of that Condition object; the awakened thread then tries to re-acquire the Lock and continues once it succeeds.
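Selective notification is easiest to see with two Condition objects on one lock. The following one-slot mailbox is a sketch written for this explanation (Mailbox, notEmpty, notFull and sumThrough are hypothetical names): producers wait on one condition and consumers on the other, so each signal() wakes the right kind of thread.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class Mailbox {
    private final Lock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition(); // consumers wait here
    private final Condition notFull = lock.newCondition();  // producers wait here
    private Integer slot;                                   // null means empty

    void put(int value) throws InterruptedException {
        lock.lock();
        try {
            while (slot != null) {
                notFull.await();        // releases the lock while waiting
            }
            slot = value;
            notEmpty.signal();          // wakes a consumer only
        } finally {
            lock.unlock();
        }
    }

    int take() throws InterruptedException {
        lock.lock();
        try {
            while (slot == null) {
                notEmpty.await();
            }
            int value = slot;
            slot = null;
            notFull.signal();           // wakes a producer only
            return value;
        } finally {
            lock.unlock();
        }
    }

    /** Sends 1..n through the mailbox from a producer thread; returns the sum received. */
    static int sumThrough(int n) {
        Mailbox box = new Mailbox();
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) {
                    box.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        try {
            for (int i = 0; i < n; i++) {
                sum += box.take();
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum;
    }
}
```

With a single wait set, as with wait()/notify(), a producer could wake another producer by accident; the two conditions rule that out.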

2.3 ReentrantLock

ReentrantLock means "reentrant lock". The concept of reentrant lock will be described later.

ReentrantLock is the most commonly used implementation of the Lock interface (the read and write locks of ReentrantReadWriteLock implement it as well), and it provides additional methods of its own. The following example shows how to use it.

public class Test {

private ArrayList<Integer> arrayList = new ArrayList<Integer>();

public static void main(String[] args) {

final Test test = new Test();

new Thread(){

public void run() {

test.insert(Thread.currentThread());

};

}.start();

new Thread(){

public void run() {

test.insert(Thread.currentThread());

};

}.start();

}

public void insert(Thread thread) {

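Lock lock = new ReentrantLock(); //Caution: this creates a new lock on every call, so the two threads never contend; declare the lock as a field of Test to get mutual exclusion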

lock.lock();

try {

System.out.println(thread.getName()+"Got the lock");

for(int i=0;i<5;i++) {

arrayList.add(i);

}

} catch (Exception e) {

// TODO: handle exception

}finally {

System.out.println(thread.getName()+"The lock was released");

lock.unlock();

}

}

}
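"Reentrant" means that a thread which already holds the lock can acquire it again without blocking; the lock keeps a hold count and is free only when every lock() has been matched by an unlock(). A small sketch, using a hypothetical class ReentrancyDemo:

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrancyDemo {
    private final ReentrantLock lock = new ReentrantLock();

    /** Takes the lock, then calls a method that takes the same lock again. */
    int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    private int inner() {
        lock.lock();                    // same thread: acquired again without blocking
        try {
            return lock.getHoldCount(); // number of holds by the current thread
        } finally {
            lock.unlock();
        }
    }

    boolean isFree() {
        return !lock.isLocked();
    }

    public static void main(String[] args) {
        ReentrancyDemo demo = new ReentrancyDemo();
        System.out.println("hold count inside inner(): " + demo.outer());
        System.out.println("free afterwards: " + demo.isFree());
    }
}
```

A non-reentrant lock would deadlock in inner(), because the thread would wait for a lock that it holds itself.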

2.4 ReadWriteLock

ReadWriteLock is also an interface. It defines only two methods, readLock() and writeLock():

One obtains the read lock and the other obtains the write lock. Reads and writes of a shared resource are thus split across two locks that are handed out to threads separately, so that multiple threads can read at the same time. ReentrantReadWriteLock, shown below, implements the ReadWriteLock interface.

ReentrantReadWriteLock offers many methods, but the two main ones are readLock() and writeLock(), which return the read lock and the write lock.

The following examples show how ReentrantReadWriteLock is used.

Suppose multiple threads need to read at the same time. First, look at the effect of synchronized:

public class Test {

private ReentrantReadWriteLock rwl = new ReentrantReadWriteLock();

public static void main(String[] args) {

final Test test = new Test();

new Thread(){

public void run() {

test.get(Thread.currentThread());

};

}.start();

new Thread(){

public void run() {

test.get(Thread.currentThread());

};

}.start();

}

public synchronized void get(Thread thread) {

long start = System.currentTimeMillis();

while(System.currentTimeMillis() - start <= 1) {

System.out.println(thread.getName()+"A read operation is in progress");

}

System.out.println(thread.getName()+"Read operation completed");

}

}

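With synchronized, the second thread can start reading only after the first has finished, because both need the same monitor. If the read-write lock is used instead: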

public class Test {

private ReentrantReadWriteLock rwl = new ReentrantReadWriteLock();

public static void main(String[] args) {

final Test test = new Test();

new Thread(){

public void run() {

test.get(Thread.currentThread());

};

}.start();

new Thread(){

public void run() {

test.get(Thread.currentThread());

};

}.start();

}

public void get(Thread thread) {

rwl.readLock().lock();

try {

long start = System.currentTimeMillis();

while(System.currentTimeMillis() - start <= 1) {

System.out.println(thread.getName()+"A read operation is in progress");

}

System.out.println(thread.getName()+"Read operation completed");

} finally {

rwl.readLock().unlock();

}

}

}

With the read lock, thread1 and thread2 can perform their read operations at the same time, which greatly improves read efficiency.

Note:

- If one thread holds the read lock and another thread requests the write lock, the requesting thread waits until the read lock is released.

- If one thread holds the write lock and another thread requests the write lock or the read lock, the requesting thread waits until the write lock is released.
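These compatibility rules can be probed with the non-blocking tryLock(). In the sketch below (ReadWriteRules, otherThreadCanAcquire and probe are names invented for the example), the calling thread holds one lock while a second thread makes a single attempt on the read or write lock.

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class ReadWriteRules {
    /** Tries the lock once from a fresh thread, without waiting. */
    static boolean otherThreadCanAcquire(Lock lock) {
        boolean[] ok = {false};
        Thread t = new Thread(() -> {
            if (lock.tryLock()) {
                ok[0] = true;
                lock.unlock();
            }
        });
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return ok[0];
    }

    /** Returns {read while read held, write while read held,
     *           read while write held, write while write held}. */
    static boolean[] probe() {
        ReentrantReadWriteLock rwl = new ReentrantReadWriteLock();
        boolean[] result = new boolean[4];
        rwl.readLock().lock();
        try {
            result[0] = otherThreadCanAcquire(rwl.readLock());
            result[1] = otherThreadCanAcquire(rwl.writeLock());
        } finally {
            rwl.readLock().unlock();
        }
        rwl.writeLock().lock();
        try {
            result[2] = otherThreadCanAcquire(rwl.readLock());
            result[3] = otherThreadCanAcquire(rwl.writeLock());
        } finally {
            rwl.writeLock().unlock();
        }
        return result;
    }
}
```

Only the read/read combination succeeds; every combination that involves the write lock is refused.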

2.5 Summary (key points)

Lock and synchronized differ in the following ways:

- Lock is an interface, whereas synchronized is a Java keyword implemented by the language itself.

- When an exception occurs, synchronized automatically releases the lock held by the thread, so it does not cause deadlock. With Lock, if an exception occurs and the lock is not released through unlock(), deadlock is likely. Therefore, release the lock in a finally block when using Lock.

- Lock lets a thread that is waiting for the lock respond to interrupts; synchronized does not. With synchronized, the waiting thread keeps waiting and cannot respond to an interrupt.

- With Lock you can find out whether the lock was acquired successfully; with synchronized you cannot.

- Lock can improve the efficiency of reads by multiple threads. In terms of performance, the two are similar when contention is low. When contention is very high (many threads competing at the same time), Lock can perform considerably better than synchronized.

3 Inter-thread communication

There are two models of inter-thread communication: shared memory and message passing. The approaches below are built on these two models. Consider a common interview question:

Interview question

Scenario: two threads, one adding 1 to the current value and the other subtracting 1 from it, must alternate. This requires inter-thread communication.

3.1 synchronized scheme

/**

* Alternating increment and decrement with synchronized and wait()/notifyAll()

*/

public class TestVolatile {

/**

* Alternate addition and subtraction

*/

public static void main(String[] args){

DemoClass demoClass = new DemoClass();

new Thread(() ->{

for (int i = 0; i < 5; i++) {

demoClass.increment();

}

}, "thread A").start();

new Thread(() ->{

for (int i = 0; i < 5; i++) {

demoClass.decrement();

}

}, "thread B").start();

}

}

class DemoClass{

//Addition and subtraction object

private int number = 0;

/**

* Plus 1

*/

public synchronized void increment() {

try {

while (number != 0){

this.wait();

}

number++;

System.out.println("--------" +Thread.currentThread().getName() + "Plus one success----------,Value is:" + number);

notifyAll();

}catch (Exception e){

e.printStackTrace();

}

}

/**

* Minus one

*/

public synchronized void decrement(){

try {

while (number == 0){

this.wait();

}

number--;

System.out.println("--------" + Thread.currentThread().getName() + "Minus one success----------,Value is:" + number);

notifyAll();

}catch (Exception e){

e.printStackTrace();

}

}

}

3.2 Lock scheme

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

class DemoClass{

//Addition and subtraction object

private int number = 0;

//Declare lock

private Lock lock = new ReentrantLock();

//Declare the condition

private Condition condition = lock.newCondition();

/**

* Plus 1

*/

public void increment() {

try {

lock.lock();

while (number != 0){

condition.await();

}

number++;

System.out.println("--------" + Thread.currentThread().getName() + "Plus one success----------,Value is:" + number);

condition.signalAll();

}catch (Exception e){

e.printStackTrace();

}finally {

lock.unlock();

}

}

/**

* Minus one

*/

public void decrement(){

try {

lock.lock();

while (number == 0){

condition.await();

}

number--;

System.out.println("--------" + Thread.currentThread().getName() + "Minus one success----------,Value is:" + number);

condition.signalAll();

}catch (Exception e){

e.printStackTrace();

}finally {

lock.unlock();

}

}

}

3.4 Customized communication between threads

Interview question

Problem: thread A prints 5 times, thread B prints 10 times, and thread C prints 15 times, in that order, for 10 rounds.

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

class DemoClass{

//Turn flag: 0 = print A, 1 = print B, 2 = print C

private int number = 0;

//Declare lock

private Lock lock = new ReentrantLock();

//Declare condition A

private Condition conditionA = lock.newCondition();

//Declare condition B

private Condition conditionB = lock.newCondition();

//Declare condition C

private Condition conditionC = lock.newCondition();

/**

* A Print 5 times

*/

public void printA(int j){

try {

lock.lock();

while (number != 0){

conditionA.await();

}

System.out.println(Thread.currentThread().getName() + "prints A, round " + j + " starts");

//Output 5 times A

for (int i = 0; i < 5; i++) {

System.out.println("A");

}

//Start printing B

number = 1;

//Wake up B

conditionB.signal();

}catch (Exception e){

e.printStackTrace();

}finally {

lock.unlock();

}

}

/**

* B Print 10 times

*/

public void printB(int j){

try {

lock.lock();

while (number != 1){

conditionB.await();

}

System.out.println(Thread.currentThread().getName() + "prints B, round " + j + " starts");

//Output 10 times B

for (int i = 0; i < 10; i++) {

System.out.println("B");

}

//Start printing C

number = 2;

//Wake up C

conditionC.signal();

}catch (Exception e){

e.printStackTrace();

}finally {

lock.unlock();

}

}

/**

* C Print 15 times

*/

public void printC(int j){

try {

lock.lock();

while (number != 2){

conditionC.await();

}

System.out.println(Thread.currentThread().getName() + "prints C, round " + j + " starts");

//Output 15 times C

for (int i = 0; i < 15; i++) {

System.out.println("C");

}

System.out.println("-----------------------------------------");

//Start printing A

number = 0;

//Wake up A

conditionA.signal();

}catch (Exception e){

e.printStackTrace();

}finally {

lock.unlock();

}

}

}

Test class

/**

* Test class for customized inter-thread communication

*/

public class TestVolatile {

/**

* Start three threads that print A, B and C in turn

* @param args

*/

public static void main(String[] args){

DemoClass demoClass = new DemoClass();

new Thread(() ->{

for (int i = 1; i <= 10; i++) {

demoClass.printA(i);

}

}, "A thread ").start();

new Thread(() ->{

for (int i = 1; i <= 10; i++) {

demoClass.printB(i);

}

}, "B thread ").start();

new Thread(() ->{

for (int i = 1; i <= 10; i++) {

demoClass.printC(i);

}

}, "C thread ").start();

}

}

4 Thread safety of collections

4.1 Collection operation demo

NotSafeDemo:

import java.util.ArrayList;

import java.util.List;

import java.util.UUID;

/**

* Collection thread safety case

*/

public class NotSafeDemo {

/**

* Multiple threads modify the collection at the same time

*/

public static void main(String[] args) {

List<String> list = new ArrayList<>();

for (int i = 0; i < 100; i++) {

new Thread(() ->{

list.add(UUID.randomUUID().toString());

System.out.println(list);

}, "thread " + i).start();

}

}

}

Result: an exception is thrown

java.util.ConcurrentModificationException

Question: why does the concurrent modification exception occur?

Look at the source code of ArrayList's add method:

/**

* Appends the specified element to the end of this list.

*

* @param e element to be appended to this list

* @return <tt>true</tt> (as specified by {@link Collection#add})

*/

public boolean add(E e) {

ensureCapacityInternal(size + 1); // Increments modCount!!

elementData[size++] = e;

return true;

}
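The key detail in the source above is modCount. An ArrayList iterator remembers modCount when it is created and checks it on every next(); if the list has been structurally modified in between, it throws ConcurrentModificationException. The same check can be triggered deterministically in a single thread, as in this sketch (ModCountDemo and failsFast are hypothetical names):

```java
import java.util.ArrayList;
import java.util.ConcurrentModificationException;
import java.util.List;

class ModCountDemo {
    /** Returns true if modifying the list during iteration is detected. */
    static boolean failsFast() {
        List<String> list = new ArrayList<>();
        list.add("a");
        list.add("b");
        list.add("c");
        try {
            for (String s : list) {     // the for-each loop uses an iterator
                list.add(s + "!");      // structural change: modCount is incremented
            }
            return false;
        } catch (ConcurrentModificationException e) {
            return true;                // thrown by the iterator's next()
        }
    }

    public static void main(String[] args) {
        System.out.println("fail-fast detected: " + failsFast());
    }
}
```

In NotSafeDemo the iteration is hidden inside System.out.println(list), which calls toString() and walks the list while other threads are still calling add().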

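add() is not synchronized, and every call changes modCount. When System.out.println(list) iterates over the list while another thread is adding to it, the iterator notices that modCount has changed and throws ConcurrentModificationException. So how do we solve the thread safety problem of List?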

4.2 Vector

Vector is a growable array of objects that has existed since JDK 1.0. It extends AbstractList and implements the List, RandomAccess and Cloneable interfaces. Because it extends AbstractList and implements List, it supports adding, removing, modifying and traversing elements. Because it implements RandomAccess, it provides random access.

RandomAccess is a marker interface that List implementations use to indicate fast random access: in a Vector, an element can be retrieved quickly by its index. Vector also implements Cloneable, that is, it overrides clone() and can be cloned.

Unlike ArrayList, the operations of Vector are thread safe.

NotSafeDemo code modification:

import java.util.ArrayList;

import java.util.List;

import java.util.UUID;

import java.util.Vector;

/**

* Collection thread safety case

*/

public class NotSafeDemo {

/**

* Multiple threads modify the collection at the same time

*/

public static void main(String[] args) {

List list = new Vector();

for (int i = 0; i < 100; i++) {

new Thread(() ->{

list.add(UUID.randomUUID().toString());

System.out.println(list);

}, "thread " + i).start();

}

}

}

The result is no exception.

View the add method of Vector

/**

* Appends the specified element to the end of this Vector.

*

* @param e element to be appended to this Vector

* @return {@code true} (as specified by {@link Collection#add})

* @since 1.2

*/

public synchronized boolean add(E e) {

modCount++;

ensureCapacityHelper(elementCount + 1);

elementData[elementCount++] = e;

return true;

}

The add method is synchronized and therefore thread safe, so no concurrent modification exception occurs.

4.3 Collections

Collections provides the method synchronizedList to ensure that the list is thread safe.

NotSafeDemo code modification

import java.util.*;

/**

* Collection thread safety case

*/

public class NotSafeDemo {

/**

* Multiple threads modify the collection at the same time

*/

public static void main(String[] args) {

List list = Collections.synchronizedList(new ArrayList<>());

for (int i = 0; i < 100; i++) {

new Thread(() ->{

list.add(UUID.randomUUID().toString());

System.out.println(list);

}, "thread " + i).start();

}

}

}

No exception is thrown.

Look at the source code of synchronizedList:

/**

* Returns a synchronized (thread-safe) list backed by the specified

* list. In order to guarantee serial access, it is critical that

* <strong>all</strong> access to the backing list is accomplished

* through the returned list.<p>

*

* It is imperative that the user manually synchronize on the returned

* list when iterating over it:

* <pre>

* List list = Collections.synchronizedList(new ArrayList());

* ...

* synchronized (list) {

* Iterator i = list.iterator(); // Must be in synchronized block

* while (i.hasNext())

* foo(i.next());

* }

* </pre>

* Failure to follow this advice may result in non-deterministic behavior.

*

* <p>The returned list will be serializable if the specified list is

* serializable.

*

* @param <T> the class of the objects in the list

* @param list the list to be "wrapped" in a synchronized list.

* @return a synchronized view of the specified list.

*/

public static <T> List<T> synchronizedList(List<T> list) {

return (list instanceof RandomAccess ?

new SynchronizedRandomAccessList<>(list) :

new SynchronizedList<>(list));

}

4.4 CopyOnWriteArrayList (key)

First, an overview of CopyOnWriteArrayList. It is the thread-safe counterpart of ArrayList. Like ArrayList it is a resizable array, but it differs in the following ways:

- It is best suited to applications where the List is usually small, read-only operations vastly outnumber mutating operations, and interference between threads must be prevented during traversal.

- It is thread safe.

- Mutating operations (add(), set(), remove() and so on) are expensive, because they usually copy the entire underlying array.

- Iterators support read-only operations such as hasNext() and next(), but not mutating operations such as remove().

- Traversal with an iterator is fast and does not conflict with other threads. An iterator works on an unchanging snapshot of the array taken when the iterator was created.

- The inefficiency of an exclusive lock is addressed by separating reads from writes.

- A writing thread acquires the lock, and other writing threads are blocked.

- Copy-on-write idea:

When an element is added to the container, it is not added to the current array directly. Instead, the current array is copied into a new one, the element is added to the new array, and the container's reference is then switched from the old array to the new one.

This introduces a data consistency issue: until a writing thread has published its new array, other threads still read the old data.

This is the idea behind CopyOnWriteArrayList: it simply makes a copy.

NotSafeDemo code modification

import java.util.*;

import java.util.concurrent.CopyOnWriteArrayList;

/**

* Collection thread safety case

*/

public class NotSafeDemo {

/**

* Multiple threads modify the collection at the same time

*/

public static void main(String[] args) {

List list = new CopyOnWriteArrayList();

for (int i = 0; i < 100; i++) {

new Thread(() ->{

list.add(UUID.randomUUID().toString());

System.out.println(list);

}, "thread " + i).start();

}

}

}

The result is still no problem.

**Cause analysis (key points):** dynamic array and thread safety

Next, the principle of CopyOnWriteArrayList is further explained from the two aspects of "dynamic array" and "thread safety".

Dynamic array mechanism

- It has a "volatile array" inside to hold data. When "adding / modifying / deleting" data, a new array will be created, and the updated data will be copied to the new array. Finally, the array will be assigned to "volatile array", which is why it is called CopyOnWriteArrayList

- Because it creates new arrays when "adding / modifying / deleting" data, CopyOnWriteArrayList is inefficient when it involves modifying data; However, it is more efficient to perform only traversal search.

"Thread safety" mechanism

- Implemented through volatile and mutex.

- Use the "volatile array" to save the data. When a thread reads the volatile array, it can always see the last write of the volatile variable by other threads; In this way, volatile provides the guarantee of the mechanism that "the data read is always up-to-date".

- Protect data through mutexes. When "adding / modifying / deleting" data, you will first "obtain the mutex", and then update the data to the "volatile array" after modification, and then "release the mutex", so as to achieve the purpose of protecting data.
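The two mechanisms above can be sketched in a few lines. This is a minimal teaching sketch, not the JDK source: the class name `SimpleCowList` and its `snapshot` helper are hypothetical, and only `add`, `get`, and `size` are modelled.

```java
import java.util.Arrays;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical teaching class; it only imitates the idea behind CopyOnWriteArrayList.
class SimpleCowList<E> {
    // The "volatile array": readers always see the most recently published array.
    private volatile Object[] array = new Object[0];
    // The mutex: writers are serialized so that two copies are never made at once.
    private final ReentrantLock lock = new ReentrantLock();

    public boolean add(E e) {
        lock.lock();
        try {
            Object[] old = array;
            Object[] copy = Arrays.copyOf(old, old.length + 1); // copy on write
            copy[old.length] = e;
            array = copy; // publish the new array with a volatile write
            return true;
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    public E get(int index) {
        return (E) array[index]; // lock-free read of the current array
    }

    public int size() {
        return array.length;
    }

    // Returns the array a reader would currently hold; later writes never modify it.
    Object[] snapshot() {
        return array;
    }

    public static void main(String[] args) {
        SimpleCowList<String> list = new SimpleCowList<>();
        list.add("a");
        Object[] before = list.snapshot();
        list.add("b");
        System.out.println("old snapshot length: " + before.length
                + ", current size: " + list.size());
    }
}
```

Because a reader keeps working on the array it obtained, a traversal never observes a half-finished write; the price is one full array copy per modification.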

4.5 summary (key points)

1. Thread safe and thread unsafe collections

Collection types come in thread unsafe and thread safe variants, such as:

ArrayList ----- Vector

HashMap ----- Hashtable

However, the thread safe variants above are implemented with the synchronized keyword, which is inefficient.

2. Thread safe collections built by the Collections utility class

3. The CopyOnWriteArrayList and CopyOnWriteArraySet types in the java.util.concurrent package, which ensure thread safety through a dynamic array combined with a lock.

5 multi thread lock

5.1 demonstration of eight problems of lock

class Phone {

public static synchronized void sendSMS() throws Exception {

//Stay for 4 seconds

TimeUnit.SECONDS.sleep(4);

System.out.println("------sendSMS");

}

public synchronized void sendEmail() throws Exception {

System.out.println("------sendEmail");

}

public void getHello() {

System.out.println("------getHello");

}

}

/**

1 Standard access, print SMS or email first: ------sendSMS ------sendEmail

2 Stop for 4 seconds in the SMS method, print SMS or email first: ------sendSMS ------sendEmail

3 Add a common hello method, print SMS or hello first: ------getHello ------sendSMS

4 Now there are two mobile phones, print SMS or email first: ------sendEmail ------sendSMS

5 Two static synchronization methods, one mobile phone, print SMS or email first: ------sendSMS ------sendEmail

6 Two static synchronization methods, two mobile phones, print SMS or email first: ------sendSMS ------sendEmail

7 One static synchronization method, one common synchronization method, one mobile phone, print SMS or email first: ------sendEmail ------sendSMS

8 One static synchronization method, one common synchronization method, two mobile phones, print SMS or email first: ------sendEmail ------sendSMS

*/

Conclusion:

- If there are multiple synchronized methods in an object, as long as one thread calls one of the synchronized methods at a certain time, other threads can only wait. In other words, only one thread can access these synchronized methods at a certain time.

- The lock is the current object this. After being locked, other threads cannot enter other synchronized methods of the current object.

- After adding a common method, it is found that it has nothing to do with the synchronization lock

- After changing to two objects, the lock is not the same, and the situation changes immediately.

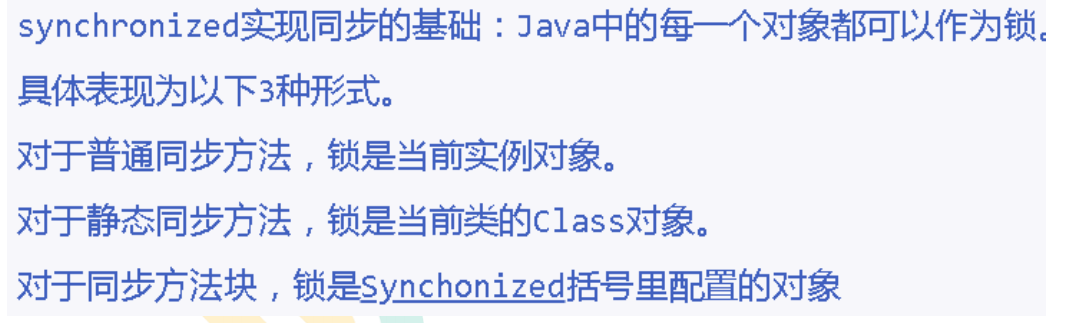

synchronized is the foundation of synchronization: every object in Java can be used as a lock. It is embodied in the following three forms.

- For normal synchronization methods, the lock is the current instance object.

- For static synchronization methods, the lock is the Class object of the current Class.

- For synchronized code blocks, the lock is the object configured in the synchronized parentheses.

When a thread attempts to enter a synchronized code block, it must first acquire the lock, and it releases the lock when it exits normally or throws an exception. That is, if a non static synchronized method of an instance object holds the lock, the other non static synchronized methods of that instance object must wait for it to release the lock before they can acquire it. However, the non static synchronized methods of other instance objects use different locks, so they do not need to wait for that method to release its lock and can acquire their own locks directly.

All static synchronized methods also share one lock: the Class object itself. The Class object lock and the instance lock are two different objects, so static synchronized methods and non static synchronized methods never compete with each other. However, once a static synchronized method acquires the lock, other static synchronized methods must wait for it to release the lock before acquiring it. This holds whether the static synchronized methods are called through the same instance object or through different instance objects, as long as the instances belong to the same class.
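Which monitor each form of synchronized uses can be checked directly with `Thread.holdsLock`. The following is a small sketch under the assumption of a hypothetical class `LockOwnerDemo`; it is separate from the Phone example and avoids any timing dependence.

```java
// Hypothetical demo class: reports which monitor the current thread holds.
class LockOwnerDemo {
    // Instance synchronized method: the monitor is `this`, not the Class object.
    synchronized boolean[] instanceLocks() {
        return new boolean[] {
            Thread.holdsLock(this),
            Thread.holdsLock(LockOwnerDemo.class)
        };
    }

    // Static synchronized method: the monitor is the Class object.
    static synchronized boolean staticHoldsClassLock() {
        return Thread.holdsLock(LockOwnerDemo.class);
    }

    // synchronized block: the monitor is the object named in the parentheses.
    static boolean blockHoldsLock(Object monitor) {
        synchronized (monitor) {
            return Thread.holdsLock(monitor);
        }
    }

    public static void main(String[] args) {
        LockOwnerDemo demo = new LockOwnerDemo();
        boolean[] r = demo.instanceLocks();
        System.out.println("instance method holds this: " + r[0]);
        System.out.println("instance method holds Class object: " + r[1]);
        System.out.println("static method holds Class object: " + staticHoldsClassLock());
        System.out.println("block holds its monitor: " + blockHoldsLock(new Object()));
    }
}
```

Since the instance method does not hold the Class object's monitor, a static synchronized method and an instance synchronized method can run at the same time, which matches cases 7 and 8.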

6.1 Callable interface

So far we have learned two ways to create threads: one is to extend the Thread class, and the other is to implement Runnable. However, Runnable lacks one feature: when the thread terminates (that is, when run() completes), it cannot return a result. To support this, Java provides the Callable interface.

Now we are learning the third scheme for creating threads - the Callable interface.

The characteristics of Callable interface are as follows (key points):

- In order to implement Runnable, you need to implement the run () method that does not return anything, while for Callable, you need to implement the call () method that returns the result on completion.

- The call () method can throw exceptions, while run () cannot.

- To implement Callable, the call() method must be overridden.

- Callable cannot directly replace Runnable, because no constructor of the Thread class accepts a Callable.

Create a new class MyThread that implements the Runnable interface

class MyThread implements Runnable{

@Override

public void run() {

}

}

Create a new class MyThread2 that implements the Callable interface

class MyThread2 implements Callable<Integer>{

@Override

public Integer call() throws Exception {

return 200;

}

}

6.2 Future interface

When the call () method completes, the result must be stored in an object known to the main thread so that the main thread can know the result returned by the thread. To do this, you can use the Future object.

Think of a Future as an object that holds a result: it may not hold the result yet, but it will in the future (once the Callable returns). A Future is basically a way for the main thread to track the progress and results of other threads. To implement this interface, five methods must be overridden. The important ones are listed here:

- public boolean cancel(boolean mayInterruptIfRunning): used to stop the task. If the task has not started, it is stopped. If it has started, it is interrupted only if mayInterruptIfRunning is true.

- public V get() throws InterruptedException, ExecutionException: used to get the result of the task. If the task is completed, it returns the result immediately; otherwise it waits for the task to complete and then returns the result.

- public boolean isDone(): returns true if the task is completed, otherwise false.

You can see that Callable and Future do two different things: Callable is similar to Runnable because it encapsulates the task to be run on another thread, while Future stores the result obtained from that thread. In fact, Future can also be used with Runnable.

To create a thread, you need Runnable. In order to get results, we need future.

6.3 FutureTask

The Java library has a specific FutureTask type, which implements Runnable and Future, and conveniently combines the two functions. FutureTask can be created by providing Callable for its constructor. Then, the FutureTask object is supplied to the Thread constructor to create the Thread object. Therefore, Callable is used indirectly to create threads.

Core principles: (key points)

When time-consuming operations need to be performed in the main thread, but you don't want to block the main thread, you can hand over these jobs to the Future object to complete in the background.

- When the main thread needs the result later, it can obtain the calculation result or execution status of the background job through the Future object.

- Generally, FutureTask is mostly used for time-consuming calculations. The main thread can obtain the result after completing its own tasks.

- The result can be retrieved only when the calculation is completed; the get method blocks until the task reaches the completed state, and then returns the result or throws an exception.

- Once the calculation is completed, it cannot be restarted or cancelled.

- The calculation runs only once, and get blocks until it finishes, so the call to get is usually placed last.
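The points above can be observed with a counter inside the task. This is a minimal sketch; the class name `FutureTaskOnceDemo`, the `CALLS` counter, and the 100 ms sleep are illustrative assumptions.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.FutureTask;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: shows that a FutureTask computes once and caches its result.
class FutureTaskOnceDemo {
    static final AtomicInteger CALLS = new AtomicInteger();

    // Returns {first get, second get, number of times call() actually ran}.
    static int[] runTwice() throws Exception {
        CALLS.set(0);
        Callable<Integer> job = () -> {
            CALLS.incrementAndGet();
            Thread.sleep(100); // stand-in for a time-consuming calculation
            return 200;
        };
        FutureTask<Integer> task = new FutureTask<>(job);
        new Thread(task, "worker").start();

        int first = task.get();   // blocks until the calculation completes
        int second = task.get();  // returns the cached result immediately
        task.run();               // ignored: a completed task cannot be restarted
        boolean cancelled = task.cancel(true); // false: a completed task cannot be cancelled
        if (cancelled) {
            throw new IllegalStateException("unexpected cancel of a completed task");
        }
        return new int[] { first, second, CALLS.get() };
    }

    public static void main(String[] args) throws Exception {
        int[] r = runTwice();
        System.out.println("first=" + r[0] + ", second=" + r[1] + ", calls=" + r[2]);
    }
}
```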

6.4 using Callable and Future

CallableDemo case

import java.util.concurrent.Callable;

import java.util.concurrent.FutureTask;

/**

* CallableDemo Case list

*/

public class CallableDemo {

/**

* Implement runnable interface

*/

static class MyThread1 implements Runnable{

/**

* run method

*/

@Override

public void run() {

try { System.out.println(Thread.currentThread().getName() + "Thread entered run method");

}catch (Exception e){

e.printStackTrace();

}

}

}

/**

* Implement the callable interface

*/

static class MyThread2 implements Callable<Long>{

/**

* call method

* @return

* @throws Exception

*/

@Override

public Long call() throws Exception {

try {

System.out.println(Thread.currentThread().getName() + "Thread entered call method,Start getting ready for bed");

Thread.sleep(1000);

System.out.println(Thread.currentThread().getName() + "I wake up");

}catch (Exception e){

e.printStackTrace();

}

return System.currentTimeMillis();

}

}

public static void main(String[] args) throws Exception{

//Declare runnable

Runnable runable = new MyThread1();

//Declare callable

Callable<Long> callable = new MyThread2();

//future-callable

FutureTask<Long> futureTask2 = new FutureTask<>(callable);

//Thread two

new Thread(futureTask2, "Thread two").start();

for (int i = 0; i < 10; i++) {

Long result1 = futureTask2.get();

System.out.println(result1);

}

//Thread one

new Thread(runable,"Thread one").start();

}

}

6.5 summary (key points)

- When the main thread needs to perform a time-consuming operation but does not want to block, it can hand the job over to a Future object to complete in the background. When the main thread needs the result later, it can obtain the calculation result or execution status of the background job through the Future object.

- Generally, FutureTask is mostly used for time-consuming calculations. The main thread obtains the result after completing its own tasks.

- The result can be retrieved only when the calculation is completed; the get method blocks until the task reaches the completed state, and then returns the result or throws an exception. Once the calculation is completed, it cannot be restarted or cancelled.

- The calculation runs only once.

7. Three auxiliary classes of JUC

JUC provides three commonly used auxiliary classes. They coordinate threads directly, which avoids frequent manual Lock operations when many threads are involved. The three auxiliary classes are:

• CountDownLatch: decreasing count

• CyclicBarrier: cyclic barrier

• Semaphore: semaphore

7.1 reduce count CountDownLatch

The CountDownLatch class is created with a counter. Each call to the countDown method subtracts 1 from the counter. The await method waits until the counter reaches 0, after which the statements following await continue to execute.

- CountDownLatch mainly has two methods. When one or more threads call the await method, these threads will block.

- Other threads calling the countDown method will decrement the counter by 1 (the thread calling the countDown method will not block).

- When the value of the counter becomes 0, the thread blocked by the await method will wake up and continue to execute.

Scene: after six students leave the classroom one after another, the students on duty can close the door.

CountDownLatchDemo

import java.util.concurrent.CountDownLatch;

/**

* CountDownLatchDemo

*/

public class CountDownLatchDemo {

/**

* The student on duty can close the door only after 6 students have left the classroom one after another

*/

public static void main(String[] args) throws Exception{

//Define a counter with a value of 6

CountDownLatch countDownLatch = new CountDownLatch(6);

//Create 6 students

for (int i = 1; i <= 6; i++) {

new Thread(() ->{

try{

if(Thread.currentThread().getName().equals("classmate6")){

Thread.sleep(2000);

}

System.out.println(Thread.currentThread().getName() + "I am leaving");

//Decrement the counter by one; this call does not block

countDownLatch.countDown();

}catch (Exception e){

e.printStackTrace();

}

}, "classmate" + i).start();

}

//Main thread await rest

System.out.println("Main thread sleep");

countDownLatch.await();

//Wake up the main thread automatically after all leave

System.out.println("All left,The current counter is" + countDownLatch.getCount());

}

}

7.2 circulating barrier

As its name suggests, CyclicBarrier is a barrier that can be used cyclically. The first parameter of the CyclicBarrier constructor is the number of threads (parties) that must arrive at the barrier. Each call to cyclicBarrier.await() counts one arrival and blocks the caller. When the target number of arrivals is reached, the optional barrier action runs, and the statements after cyclicBarrier.await() are executed in every waiting thread. CyclicBarrier can be understood as a plus 1 operation.

Scene: you can summon the Dragon by collecting 7 dragon balls

CyclicBarrierDemo

import java.util.concurrent.CyclicBarrier;

/**

* CyclicBarrierDemo Case list

*/

public class CyclicBarrierDemo {

//Defines the total number of Dragon Balls required for Dragon summoning

private final static int NUMBER = 7;

/**

* Collect 7 dragon balls to summon the dragon

* @param args

*/

public static void main(String[] args) {

//Define circular fence

CyclicBarrier cyclicBarrier = new CyclicBarrier(NUMBER, () ->{

System.out.println("Gather together" + NUMBER + "Dragon Ball,Now summon the dragon!!!!!!!!!");

});

//Define 7 threads to collect dragon balls respectively

for (int i = 1; i <= 7; i++) {

new Thread(()->{

try {

if(Thread.currentThread().getName().equals("Longzhu3number")){

System.out.println("Longzhu 3 snatch battle begins,Monkey King opens super Saiya mode!");

Thread.sleep(5000);

System.out.println("Longzhu 3 snatch battle ended,Monkey King won,Got Dragon Ball 3 number!");

}else{

System.out.println(Thread.currentThread().getName() + "Collected!!!!");

}

cyclicBarrier.await();

}catch (Exception e){

e.printStackTrace();

}

}, "Longzhu" + i + "number").start();

}

}

}
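The word "cyclic" means the same barrier can be reused once all parties have passed it. The following sketch assumes a hypothetical class `BarrierReuseDemo` in which every worker waits at the barrier several times; the barrier action counts how often the barrier trips.

```java
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: one CyclicBarrier reused for several rounds.
class BarrierReuseDemo {
    // Returns how many times the barrier action ran.
    static int runRounds(int parties, int rounds) throws InterruptedException {
        AtomicInteger trips = new AtomicInteger();
        // The barrier action runs once each time the last party arrives.
        CyclicBarrier barrier = new CyclicBarrier(parties, () -> trips.incrementAndGet());

        Thread[] workers = new Thread[parties];
        for (int i = 0; i < parties; i++) {
            workers[i] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await(); // blocks until all parties have arrived
                    }
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }, "worker" + i);
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        return trips.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("barrier tripped " + runRounds(3, 2) + " times");
    }
}
```

This is the main difference from CountDownLatch, whose counter cannot be reset after it reaches 0.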

7.3 Semaphore

The first parameter passed to the Semaphore constructor is the number of permits (which can be regarded as the maximum number of threads allowed in at the same time). A Semaphore initialized to one distributes at most one permit at a time, so it behaves like a mutual exclusion lock. Use the acquire method to obtain a permit, and the release method to give it back.

Scene: grab parking spaces, 10 cars and 1 parking space

SemaphoreDemo

import java.util.concurrent.Semaphore;

/**

* Semaphore Case list

*/

public class SemaphoreDemo {

/**

* Grab parking space, 10 cars, 1 parking space

*/

public static void main(String[] args) throws Exception{

//Define 1 parking space

Semaphore semaphore = new Semaphore(1);

//Simulate parking of 10 vehicles

for (int i = 1; i <= 10; i++) {

Thread.sleep(100);

//parking

new Thread(() ->{

try {

System.out.println(Thread.currentThread().getName() + "Find a parking space ing");

semaphore.acquire();

System.out.println(Thread.currentThread().getName() + "Car parking success!");

Thread.sleep(10000);

}catch (Exception e){

e.printStackTrace();

}finally {

System.out.println(Thread.currentThread().getName() + "Slip away, slip away");

semaphore.release();

}

}, "automobile" + i).start();

}

}

}
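The permit counting itself can be shown without any waiting by using the non-blocking `tryAcquire`. This is a sketch with a hypothetical class `SemaphorePermitsDemo`; its `park` method also shows one common way to pair `acquire` and `release` so that a permit is only returned after it was actually obtained.

```java
import java.util.concurrent.Semaphore;

// Hypothetical demo: permits are handed out until none are left.
class SemaphorePermitsDemo {
    // Each car tries once to take a space; the result says which cars got one.
    static boolean[] tryPark(int spaces, int cars) {
        Semaphore semaphore = new Semaphore(spaces);
        boolean[] parked = new boolean[cars];
        for (int i = 0; i < cars; i++) {
            parked[i] = semaphore.tryAcquire(); // non-blocking: false when no permit is free
        }
        return parked;
    }

    // acquire() sits outside the try block, so release() runs only if a permit is held.
    static void park(Semaphore semaphore) throws InterruptedException {
        semaphore.acquire();
        try {
            Thread.sleep(10); // stand-in for occupying the parking space
        } finally {
            semaphore.release();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] parked = tryPark(3, 6);
        for (int i = 0; i < parked.length; i++) {
            System.out.println("car " + (i + 1) + " parked: " + parked[i]);
        }
        Semaphore one = new Semaphore(1);
        park(one);
        System.out.println("permits after leaving: " + one.availablePermits());
    }
}
```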

8 read / write lock

8.1 introduction to read-write lock

In reality, there is such a scenario: there are read and write operations on shared resources, and write operations are not as frequent as read operations. When there is no write operation, there is no problem for multiple threads to read a resource at the same time, so multiple threads should be allowed to read shared resources at the same time; However, if a thread wants to write these shared resources, it should not allow other threads to read and write to the resource.

For this scenario, JAVA's concurrent package provides a read-write lock ReentrantReadWriteLock, which represents two locks. One is a lock related to a read operation, which is called a shared lock; One is to write related locks, called exclusive locks.

Preconditions for a thread to acquire the read lock:

- There are no write locks for other threads

- There is no write request, or there is a write request, but the calling thread and the thread holding the lock are the same (reentrant lock).

Prerequisites for a thread to enter a write lock:

- There are no read locks for other threads

- There are no write locks for other threads

The read-write lock has the following three important characteristics:

(1) Fairness selection: supports unfair (default) and fair lock acquisition. Unfair mode still gives better throughput than fair mode.

(2) Reentrancy: both the read lock and the write lock support thread reentry.

(3) Lock downgrading: by acquiring the write lock, then acquiring the read lock, and then releasing the write lock, a write lock can be downgraded to a read lock.

8.2 ReentrantReadWriteLock

Overall structure of ReentrantReadWriteLock class (source code)

public class ReentrantReadWriteLock implements ReadWriteLock,

java.io.Serializable {

/** Read lock */

private final ReentrantReadWriteLock.ReadLock readerLock;

/** Write lock */

private final ReentrantReadWriteLock.WriteLock writerLock;

final Sync sync;

/** Creates a new ReentrantReadWriteLock with the default (unfair) ordering policy */

public ReentrantReadWriteLock() {

this(false);

}

/** Create a new ReentrantReadWriteLock using the given fairness policy */

public ReentrantReadWriteLock(boolean fair) {

sync = fair ? new FairSync() : new NonfairSync();

readerLock = new ReadLock(this);

writerLock = new WriteLock(this); }

/** Returns the lock used for the write operation */

public ReentrantReadWriteLock.WriteLock writeLock() { return writerLock; }

/** Returns the lock used for the read operation */

public ReentrantReadWriteLock.ReadLock readLock() { return readerLock; }

abstract static class Sync extends AbstractQueuedSynchronizer {}

static final class NonfairSync extends Sync {}

static final class FairSync extends Sync {}

public static class ReadLock implements Lock, java.io.Serializable {}

public static class WriteLock implements Lock, java.io.Serializable {}

}

It can be seen that ReentrantReadWriteLock implements the ReadWriteLock interface, which defines the specifications for obtaining read locks and write locks, which need to be implemented by implementation classes; At the same time, it also implements the Serializable interface, which means that it can be serialized. You can see in the source code that ReentrantReadWriteLock implements its own serialization logic.

8.3 introduction cases

Scenario: read and write a hashmap using ReentrantReadWriteLock.

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.ReadWriteLock;

import java.util.concurrent.locks.ReentrantReadWriteLock;

//Resource class

class MyCache {

//Create map collection

private volatile Map<String,Object> map = new HashMap<>();

//Create a read-write lock object

private ReadWriteLock rwLock = new ReentrantReadWriteLock();

//Put data

public void put(String key,Object value) {

//Add write lock

rwLock.writeLock().lock();

try {

System.out.println(Thread.currentThread().getName()+" is writing "+key);

//Pause for a while

TimeUnit.MICROSECONDS.sleep(300);

//Put data

map.put(key,value);

System.out.println(Thread.currentThread().getName()+" finished writing "+key);

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

//Release write lock

rwLock.writeLock().unlock();

}

}

//Fetch data

public Object get(String key) {

//Add read lock

rwLock.readLock().lock();

Object result = null;

try {

System.out.println(Thread.currentThread().getName()+" is reading "+key);

//Pause for a while

TimeUnit.MICROSECONDS.sleep(300);

result = map.get(key);

System.out.println(Thread.currentThread().getName()+" finished reading "+key);

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

//Release read lock

rwLock.readLock().unlock();

}

return result;

}

}

8.4 summary (important)

- When a thread holds a read lock, the thread cannot obtain a write lock (because when obtaining a write lock, if it finds that the current read lock is occupied, it immediately fails to obtain it, regardless of whether the read lock is held by the current thread or not).

- When a thread holds a write lock, the thread can continue to acquire the read lock (if it is found that the write lock is occupied when acquiring the read lock, the acquisition will fail only if the write lock is not occupied by the current thread).

Reason: when a thread acquires a read lock, other threads may also hold read locks, so a thread holding a read lock cannot be "upgraded" to a write lock. A thread that holds the write lock, however, owns the read-write lock exclusively, so it can go on to acquire the read lock. Once it holds both the write lock and the read lock, it can release the write lock first and keep holding the read lock. In this way, the write lock is "downgraded" to a read lock.
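Both rules can be checked in a single thread. The sketch below assumes a hypothetical class `LockDowngradeDemo`; it uses `tryLock` for the upgrade attempt so that the failure is reported instead of blocking forever.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical demo: upgrading is refused, downgrading is allowed.
class LockDowngradeDemo {
    // Holds the read lock and then tries to take the write lock.
    static boolean upgradeAttempt() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.readLock().lock();
        try {
            boolean got = rw.writeLock().tryLock(); // refused while a read lock is held
            if (got) {
                rw.writeLock().unlock();
            }
            return got;
        } finally {
            rw.readLock().unlock();
        }
    }

    // Write lock -> read lock -> release write lock: the thread ends up as a reader.
    static boolean downgrade() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.writeLock().lock();
        try {
            rw.readLock().lock(); // allowed: this thread owns the write lock
        } finally {
            rw.writeLock().unlock(); // downgrade: only the read lock remains
        }
        try {
            return !rw.isWriteLocked() && rw.getReadHoldCount() == 1;
        } finally {
            rw.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        System.out.println("upgrade succeeded: " + upgradeAttempt());
        System.out.println("downgrade succeeded: " + downgrade());
    }
}
```

With the blocking `lock()` call instead of `tryLock()`, the upgrade attempt would wait on its own read lock and never return.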

9 blocking queue

9.1 BlockingQueue introduction

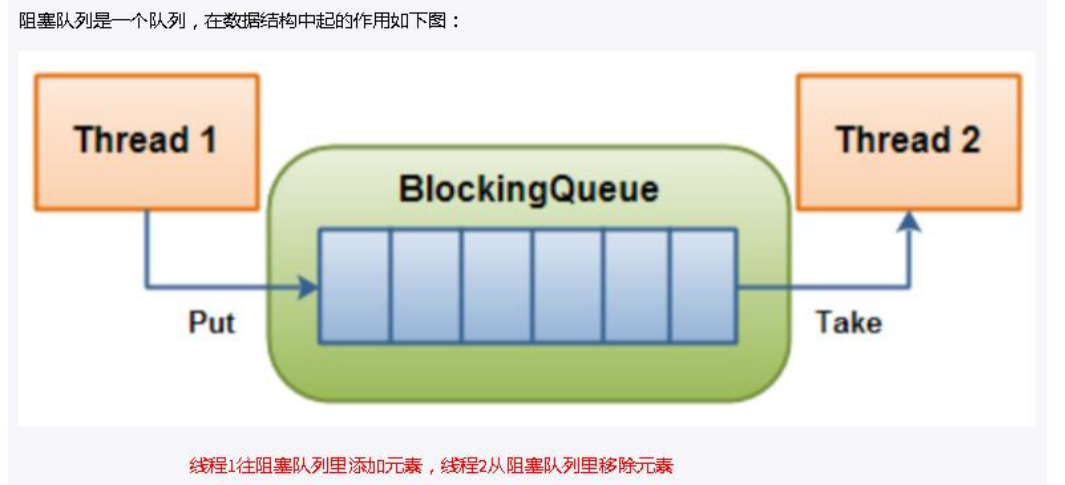

In the java.util.concurrent package, BlockingQueue solves the problem of how to "transmit" data efficiently and safely between threads. These efficient and thread safe queue classes make it much easier to build high-quality multithreaded programs quickly. This article introduces the members of the BlockingQueue family in detail, including their respective functions and common usage scenarios.

Blocking queue, as its name implies, is a queue. Through a shared queue, data can be input from one end of the queue and output from the other end.

It is somewhat similar to our production and consumption model.

When the queue is empty, the operation of getting elements from the queue will be blocked.

When the queue is full, the operation of adding elements to the queue will be blocked.

Threads trying to get elements from an empty queue will be blocked until other threads insert new elements into the queue.

Threads trying to add new elements to a full queue will be blocked until other threads remove one or more elements from the queue or empty it completely, which frees space so that the new elements can be added.

There are two common queues:

- First in first out (FIFO): the elements of the queue inserted first are also out of the queue first, which is similar to the function of queuing. To some extent, this queue also reflects a kind of fairness

- Last in first out (LIFO): the elements inserted later in the queue are out of the queue first. This kind of queue gives priority to the recent events (stack)

In the field of multithreading, blocking means that a thread is suspended under certain conditions. Once the conditions are met, the suspended thread is woken up automatically.

Why BlockingQueue is needed:

The advantage is that we don't need to care when we need to block the thread and wake up the thread, because all this is done by BlockingQueue.

Before the release of concurrent package, in a multithreaded environment, each programmer must control these details, especially considering efficiency and thread safety, which will bring great complexity to our program.

In multi-threaded environment, data sharing can be easily realized through queues. For example, in the classical "producer" and "consumer" models, data sharing between them can be easily realized through queues. Suppose we have several producer threads and several consumer threads. If the producer thread needs to share the prepared data with the consumer thread and use the queue to transfer the data, it can easily solve the problem of data sharing between them. But what if the producer and consumer do not match the data processing speed in a certain period of time? Ideally, if the producer produces data faster than the consumer consumes, and when the produced data accumulates to a certain extent, the producer must pause and wait (block the producer thread) in order to wait for the consumer thread to process the accumulated data, and vice versa.

- When there is no data in the queue, all threads on the consumer side will be automatically blocked (suspended) until data is put into the queue.

- When the queue is filled with data, all threads on the producer side will be automatically blocked (suspended) until there is an empty position in the queue, and the thread will wake up automatically.

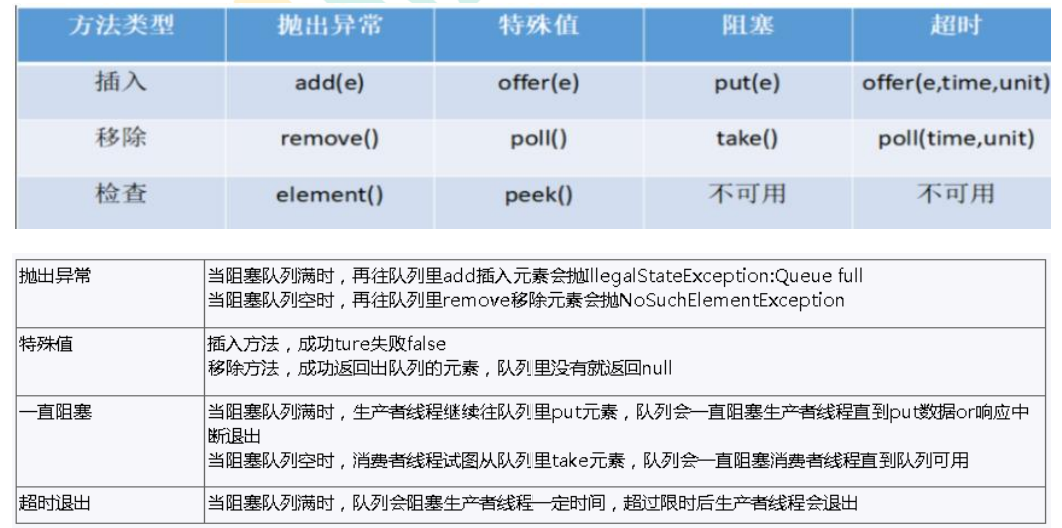

9.2 BlockingQueue core method

The core method of BlockingQueue:

1. Insert data:

- offer(anObject): add anObject to the BlockingQueue if possible. If the BlockingQueue can accommodate it, return true; otherwise return false. (This method does not block the calling thread.)

- offer(E o, long timeout, TimeUnit unit): the same with a waiting time. If the element cannot be added to the queue within the specified time, false is returned.

- put(anObject): add anObject to the BlockingQueue. If there is no space in the BlockingQueue, the thread calling this method is blocked until space becomes available.

2. Obtain data

- poll(): take the first object in the BlockingQueue. If none is available immediately, null is returned.

- poll(long timeout, TimeUnit unit): take the object at the head of the BlockingQueue. If data becomes available within the specified time, it is returned immediately; if there is still no data when the timeout expires, null is returned.

- take(): take the first object in the BlockingQueue. If the BlockingQueue is empty, the calling thread blocks and waits until new data is added.

- drainTo(): obtain all available data objects from the BlockingQueue at one time (you can also specify the maximum number to obtain). This improves the efficiency of obtaining data, because the lock does not have to be acquired and released once per element.

9.3 introduction cases

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.TimeUnit;

/**

* Blocking queue

*/

public class BlockingQueueDemo {

public static void main(String[] args) throws InterruptedException{

// List list = new ArrayList();

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//first group

// System.out.println(blockingQueue.add("a"));

// System.out.println(blockingQueue.add("b"));

// System.out.println(blockingQueue.add("c"));

// System.out.println(blockingQueue.element());

//System.out.println(blockingQueue.add("x"));

// System.out.println(blockingQueue.remove());

// System.out.println(blockingQueue.remove());

// System.out.println(blockingQueue.remove());

// System.out.println(blockingQueue.remove());

// Group 2

// System.out.println(blockingQueue.offer("a"));

// System.out.println(blockingQueue.offer("b"));

// System.out.println(blockingQueue.offer("c"));

// System.out.println(blockingQueue.offer("x"));

// System.out.println(blockingQueue.poll());

// System.out.println(blockingQueue.poll());

// System.out.println(blockingQueue.poll());

// System.out.println(blockingQueue.poll());

// Group 3

// blockingQueue.put("a");

// blockingQueue.put("b");

// blockingQueue.put("c");

// //blockingQueue.put("x");

// System.out.println(blockingQueue.take());

// System.out.println(blockingQueue.take());

// System.out.println(blockingQueue.take());

// System.out.println(blockingQueue.take());

// Group 4

System.out.println(blockingQueue.offer("a"));

System.out.println(blockingQueue.offer("b"));

System.out.println(blockingQueue.offer("c"));

System.out.println(blockingQueue.offer("a",3L,TimeUnit.SECONDS));

}

}
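The four groups in the demo differ only in how they react to a full or empty queue. The following sketch collects those reactions in one run; the class name `QueueMethodsDemo`, the capacity of 2, and the 100 ms timeout are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: how each method group reacts to a full or empty queue.
class QueueMethodsDemo {
    static List<String> run() throws InterruptedException {
        List<String> log = new ArrayList<>();
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(2);

        log.add("offer a=" + queue.offer("a"));
        log.add("offer b=" + queue.offer("b"));
        log.add("offer c=" + queue.offer("c")); // full: returns false at once
        // Timed offer: waits up to 100 ms for space, then gives up.
        log.add("timed offer c=" + queue.offer("c", 100, TimeUnit.MILLISECONDS));
        try {
            queue.add("c"); // full: throws instead of returning false
        } catch (IllegalStateException e) {
            log.add("add c threw");
        }

        log.add("poll=" + queue.poll()); // head of the queue (FIFO)
        List<String> rest = new ArrayList<>();
        queue.drainTo(rest); // moves every remaining element in one call
        log.add("drained=" + rest);
        log.add("poll empty=" + queue.poll()); // empty: returns null at once
        return log;
    }

    public static void main(String[] args) throws InterruptedException {
        for (String line : run()) {
            System.out.println(line);
        }
    }
}
```

The blocking pair put/take is left out here because calling it on a full or empty queue in a single thread would wait forever.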

9.4 common BlockingQueue

9.4.1 ArrayBlockingQueue (common)

ArrayBlockingQueue is an array based blocking queue: it maintains a fixed length array to cache the data objects in the queue. This is a commonly used blocking queue. In addition to the fixed length array, ArrayBlockingQueue also stores two integer variables, which identify the positions of the head and tail of the queue in the array.

In ArrayBlockingQueue, producers putting data and consumers taking data share the same lock object, which means the two cannot truly run in parallel. This is a notable difference from LinkedBlockingQueue. Judging from the implementation, ArrayBlockingQueue could use separate locks so that producer and consumer operations run fully in parallel. Doug Lea probably did not do this because the write and read operations of ArrayBlockingQueue are already so light that an independent locking mechanism would add complexity to the code without bringing any performance benefit. Another obvious difference between ArrayBlockingQueue and LinkedBlockingQueue is that the former does not create or destroy any additional object instances when inserting or removing elements, while the latter creates an additional Node object. In a system that processes large volumes of data concurrently over a long time, this makes a difference in GC impact. When creating an ArrayBlockingQueue, we can also choose whether the internal lock is fair; an unfair lock is used by default.

Summary: bounded blocking queue composed of array structure.

9.4.2 LinkedBlockingQueue (common)

Similar to ArrayBlockingQueue, the linked list based blocking queue also maintains a data buffer queue (built from a linked list). When a producer puts data into the queue, the queue caches it and the producer returns immediately. Only when the buffer reaches its maximum capacity (which LinkedBlockingQueue lets you specify through the constructor) is the producer blocked, and it is woken up again once a consumer takes a piece of data from the queue. The consumer side works on the same principle in reverse. LinkedBlockingQueue can process concurrent data efficiently because it uses separate locks for the producer side and the consumer side to control data synchronization. This means that under high concurrency, producers and consumers can operate on the queue in parallel, which improves the concurrency performance of the whole queue.

ArrayBlockingQueue and LinkedBlockingQueue are the two most commonly used blocking queues. In general, these two classes are sufficient to deal with producer consumer problems between multiple threads.

Summary: bounded blocking queue composed of a linked list structure (the default capacity is Integer.MAX_VALUE).

9.4.3 DelayQueue

The element in the DelayQueue can be obtained from the queue only when the specified delay time has expired. DelayQueue is a queue with no size limit, so the operation (producer) that inserts data into the queue will never be blocked, but only the operation (consumer) that obtains data will be blocked.

Summary: unbounded blocking queue of delayed elements, implemented with a priority queue.
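Elements of a DelayQueue must implement the `Delayed` interface. The sketch below assumes a hypothetical element class `DelayedMessage` with delays of 100 ms and 300 ms; it shows that an element cannot be taken before its delay expires and that elements come out in expiry order rather than insertion order.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical element type: becomes available delayMillis after creation.
class DelayedMessage implements Delayed {
    final String text;
    private final long readyAtNanos;

    DelayedMessage(String text, long delayMillis) {
        this.text = text;
        this.readyAtNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(delayMillis);
    }

    @Override
    public long getDelay(TimeUnit unit) {
        // Remaining delay; zero or negative means the element has expired.
        return unit.convert(readyAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
    }

    @Override
    public int compareTo(Delayed other) {
        return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
    }
}

class DelayQueueDemo {
    // Returns {result of the immediate poll, first taken, second taken}.
    static String[] run() throws InterruptedException {
        DelayQueue<DelayedMessage> queue = new DelayQueue<>();
        queue.put(new DelayedMessage("slow", 300)); // put never blocks: the queue is unbounded
        queue.put(new DelayedMessage("fast", 100));

        DelayedMessage early = queue.poll(); // nothing has expired yet, so this is null
        String first = queue.take().text;    // blocks until the shortest delay expires
        String second = queue.take().text;
        return new String[] { early == null ? "none" : early.text, first, second };
    }

    public static void main(String[] args) throws InterruptedException {
        String[] r = run();
        System.out.println("immediate poll: " + r[0] + ", then: " + r[1] + ", " + r[2]);
    }
}
```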

9.4.4 PriorityBlockingQueue

Priority based blocking queue (priority is determined by the Comparator object passed in to the constructor). Note that PriorityBlockingQueue never blocks the data producer; it only blocks the data consumer when there is no consumable data.

Therefore, special attention should be paid when using. The speed of producer production data must not be faster than that of consumer consumption data, otherwise all available heap memory space will be exhausted over time. When implementing PriorityBlockingQueue, the lock for internal thread synchronization adopts fair lock.

Summary: unbounded blocking queue supporting prioritization.
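
A small sketch of the ordering behaviour, assuming Integer elements and a reversed Comparator so that the largest value has the highest priority. The class name and the values are illustrative only.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

// Hypothetical demo class; the values and the ordering are illustrative.
class PriorityQueueDemo {

    // Inserts values in arbitrary order and returns them in removal order.
    static List<Integer> drainByPriority() {
        // The initial capacity (4) is only a sizing hint; the queue grows as needed.
        PriorityBlockingQueue<Integer> queue =
                new PriorityBlockingQueue<>(4, Comparator.<Integer>reverseOrder());
        for (int value : new int[] {3, 9, 1, 7, 5, 8}) {
            queue.put(value); // never blocks, even beyond the initial capacity
        }
        List<Integer> order = new ArrayList<>();
        Integer next;
        while ((next = queue.poll()) != null) { // poll returns null once the queue is empty
            order.add(next);
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(drainByPriority()); // [9, 8, 7, 5, 3, 1]
    }
}
```

Because put never blocks, nothing in this code limits the queue size; in a real producer-consumer setup that limit has to come from the application itself.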

9.4.5 SynchronousQueue

An unbuffered hand-off queue, similar to a direct transaction without an intermediary. It is a bit like producers and consumers in a primitive society: the producer takes the products to the market to sell them directly to the final consumer, and the consumer must go to the market in person to find the producer of the goods they want. If either side cannot find a suitable counterpart, both simply wait in the market. Compared with a buffered BlockingQueue, there is no intermediate dealer (buffer). With a dealer, the producer wholesales the products to the dealer and does not need to care which consumers the dealer eventually sells them to. Because the dealer can keep stock, the dealer model generally has higher throughput than direct transactions (goods can be sold in batches). On the other hand, the dealer adds an extra step between producer and consumer, so the response time for a single product may get worse.

A SynchronousQueue can be created in two modes that behave differently.

The difference between fair mode and unfair mode:

- Fair mode: the SynchronousQueue follows a fair policy and uses a FIFO queue to hold surplus producers and consumers, which gives the system an overall fair strategy;

- Unfair mode (the SynchronousQueue default): the SynchronousQueue follows an unfair policy and uses a LIFO structure to manage surplus producers and consumers. In this mode, if producers and consumers process at different speeds, starvation can easily occur, that is, the data of some producers or consumers may never be processed.

Summary: a blocking queue that stores no elements; each element is handed directly from one producer to one consumer.
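
The direct hand-off can be sketched as below. The class name and the element values are assumptions for illustration; the constructor argument true selects fair mode, and the no-argument constructor selects the default unfair mode.

```java
import java.util.concurrent.SynchronousQueue;

// Hypothetical demo class; element values are illustrative.
class SynchronousQueueDemo {

    // With no consumer waiting, a non-blocking offer has nowhere to put the element.
    static boolean offerWithoutConsumer() {
        SynchronousQueue<String> queue = new SynchronousQueue<>(true); // true = fair mode
        return queue.offer("unclaimed");
    }

    // A producer and a consumer meet at the queue and exchange one element.
    static String handOff() {
        SynchronousQueue<String> queue = new SynchronousQueue<>(); // default: unfair mode
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = queue.take(); // waits for a producer
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            queue.put("goods"); // waits until the consumer takes the element
            consumer.join();    // join makes the consumer's write visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return received[0];
    }

    public static void main(String[] args) {
        System.out.println(offerWithoutConsumer()); // false
        System.out.println(handOff());              // goods
    }
}
```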

9.4.6 LinkedTransferQueue

LinkedTransferQueue is an unbounded blocking TransferQueue backed by a linked list. Compared with other blocking queues, it additionally provides the tryTransfer and transfer methods.

LinkedTransferQueue uses a preemptive mode. When a consumer thread takes an element and the queue is not empty, it takes the data directly. If the queue is empty, it creates a node whose element is null, adds it to the queue, and waits on that node. When a producer thread later arrives and finds a node with a null element, it does not enqueue its element; instead it fills the element directly into that node and wakes up the thread waiting on it. The awakened consumer thread takes the element and returns from the method it called.

Summary: an unbounded blocking queue composed of linked lists.
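
The difference between tryTransfer and transfer can be sketched as follows. The class name and the element values are hypothetical; only the LinkedTransferQueue methods themselves come from the JDK.

```java
import java.util.concurrent.LinkedTransferQueue;

// Hypothetical demo class; element values are illustrative.
class TransferQueueDemo {

    // tryTransfer hands over only if a consumer is already waiting;
    // otherwise it returns false and does not enqueue the element.
    static int sizeAfterFailedTryTransfer() {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();
        boolean handedOver = queue.tryTransfer("nobody-waiting");
        return handedOver ? -1 : queue.size();
    }

    // transfer waits until a consumer has actually received the element.
    static String transferToConsumer() {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = queue.take(); // waits if the queue is empty
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            queue.transfer("order"); // returns only after the consumer has the element
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return received[0];
    }

    public static void main(String[] args) {
        System.out.println(sizeAfterFailedTryTransfer()); // 0
        System.out.println(transferToConsumer());         // order
    }
}
```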

9.4.7 LinkedBlockingDeque

LinkedBlockingDeque is a double-ended blocking queue backed by a linked list, that is, elements can be inserted and removed at both ends of the queue.

For some operations, if the queue state does not allow inserting or retrieving an element, the thread may be blocked until the queue state changes to allow the operation. There are two general cases of blocking:

- When inserting an element: if the queue is full, the thread blocks and waits until the queue has a free slot before inserting the element. A timeout can be set for this operation; returning false after the timeout indicates that the insert failed. Without a timeout, the thread blocks indefinitely and throws InterruptedException if it is interrupted.

- When reading an element: if the queue is empty, the thread blocks until the queue is not empty and then returns an element. A timeout can be set here as well.

Summary: a double-ended blocking queue backed by a linked list.
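
A minimal sketch of the two blocking cases with timeouts, assuming a deque of capacity 2. The class name, capacity, timeout and values are illustrative.

```java
import java.util.concurrent.LinkedBlockingDeque;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; capacity, timeout and values are illustrative.
class BlockingDequeDemo {

    // Returns a short trace of the results of each timed or blocking call.
    static String trace() {
        LinkedBlockingDeque<String> deque = new LinkedBlockingDeque<>(2);
        try {
            deque.putFirst("b");
            deque.putLast("c"); // the deque is now full
            // Timed insert at the head: gives up and returns false while the deque is full.
            boolean inserted = deque.offerFirst("a", 50, TimeUnit.MILLISECONDS);
            String head = deque.takeFirst(); // removes from the front
            String tail = deque.takeLast();  // removes from the back
            // Timed read: returns null when the deque stays empty for the whole timeout.
            String none = deque.pollFirst(50, TimeUnit.MILLISECONDS);
            return inserted + "," + head + "," + tail + "," + none;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(trace()); // false,b,c,null
    }
}
```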

9.5 summary

- In multithreading, blocking means that a thread is suspended under certain conditions; once the conditions are met, the suspended thread is woken up automatically.

- Why BlockingQueue? Before the concurrent package was released, every programmer working in a multithreaded environment had to control these details personally, with particular attention to efficiency and thread safety, which added a great deal of complexity to programs. With BlockingQueue, we no longer need to care about when to block a thread and when to wake it up, because BlockingQueue handles all of this.

10 ThreadPool thread pool

10.1 introduction to thread pool

Thread pool: a pattern for using threads. Too many threads bring scheduling overhead, which hurts cache locality and overall performance. A thread pool maintains multiple threads that wait for a supervisor to assign tasks that can be executed concurrently. This avoids the cost of creating and destroying threads when processing short-lived tasks. A thread pool can both keep the CPU cores fully utilized and prevent over-scheduling.

Example: ten years ago, on a single-core CPU, multithreading was only simulated: like a circus clown juggling several balls, the CPU had to switch back and forth between threads. On today's multi-core computers, multiple threads can run on separate cores without that switching, which is more efficient.

Advantages of thread pool: the main job of a thread pool is to control the number of running threads. Tasks are put into a queue, and the threads, once created, take tasks from the queue and run them. If there are more tasks than the maximum number of threads, the excess tasks wait in the queue until a thread finishes its current task and takes the next one from the queue.

Its main features are:

- Reduce resource consumption: reduce the consumption caused by thread creation and destruction by reusing the created threads.

- Improve response speed: when the task arrives, the task can be executed immediately without waiting for thread creation.

- Improve the manageability of threads: threads are scarce resources. If they are created without restrictions, they will not only consume system resources, but also reduce the stability of the system. Using thread pool can carry out unified allocation, tuning and monitoring.

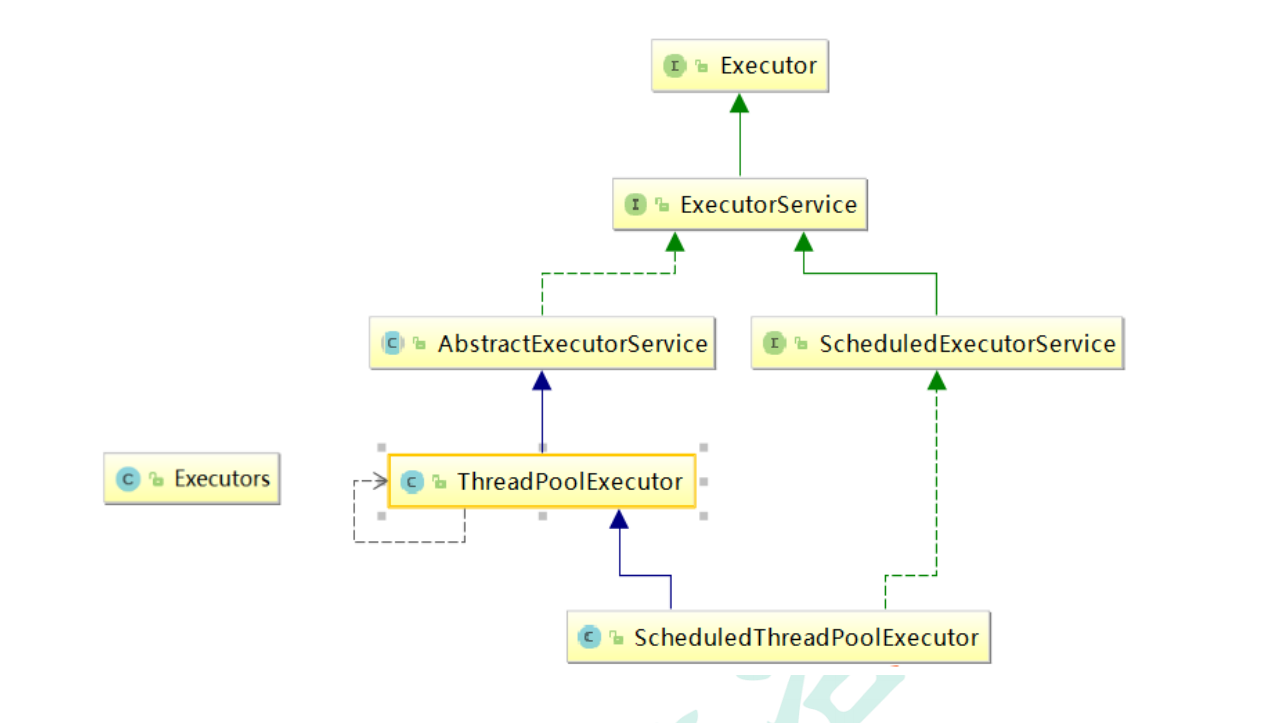

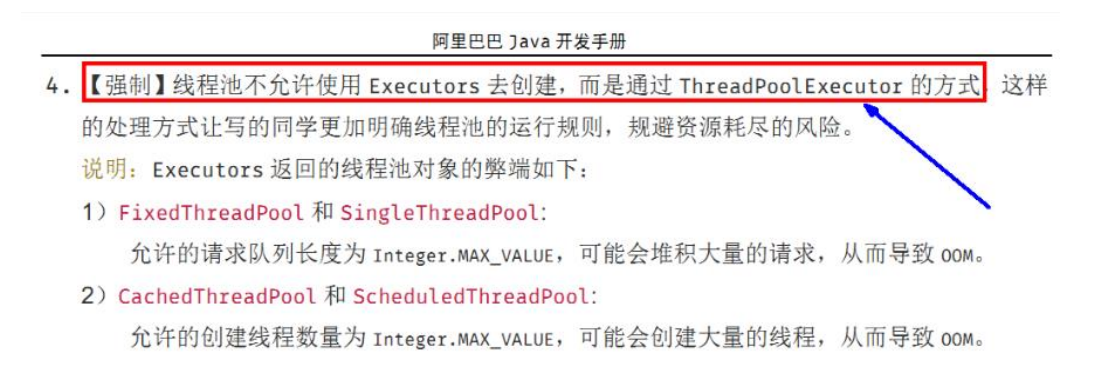

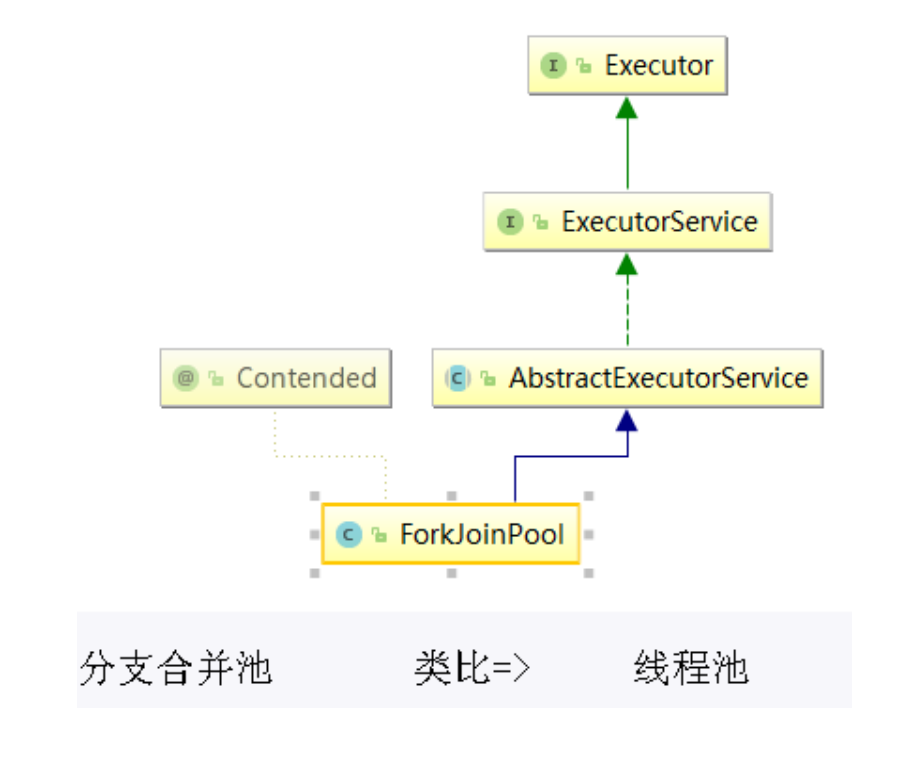

- The thread pool in Java is implemented through the Executor framework, which uses Executor, Executors, ExecutorService and ThreadPoolExecutor.
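
As a rough illustration of thread reuse through the Executor framework, the sketch below runs many tasks on a small fixed pool and counts how many distinct threads executed them. The class name, pool size and task count are assumptions for the example.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; pool size and task count are illustrative.
class FixedPoolDemo {

    // Runs taskCount tasks on a pool of poolSize threads and returns
    // the number of distinct worker threads that executed them.
    static int distinctWorkerThreads(int poolSize, int taskCount) {
        Set<String> workerNames = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            for (int i = 0; i < taskCount; i++) {
                pool.execute(() -> workerNames.add(Thread.currentThread().getName()));
            }
        } finally {
            pool.shutdown(); // stop accepting new tasks; queued tasks still run
        }
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return workerNames.size();
    }

    public static void main(String[] args) {
        // Ten tasks are served by at most three threads.
        System.out.println(distinctWorkerThreads(3, 10));
    }
}
```

The count never exceeds the pool size, which is the resource-control property described above: the tasks share a bounded set of threads instead of each creating its own.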

10.2 thread pool parameter description

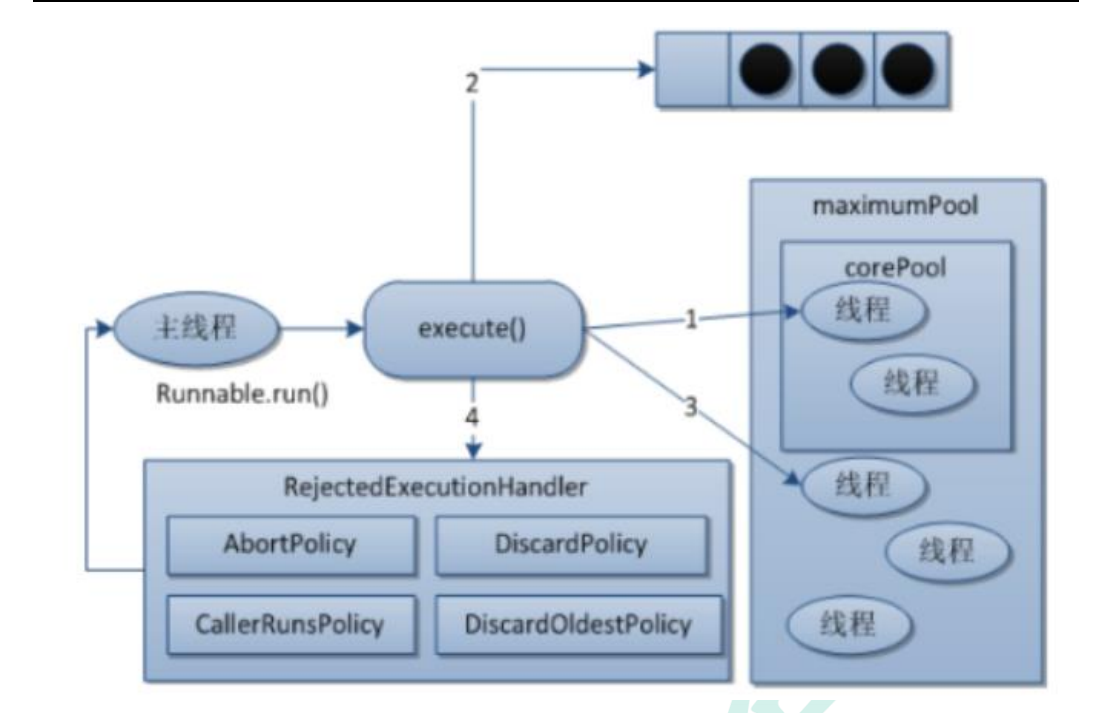

10.2.1 common parameters (key points)

- corePoolSize: the number of core threads in the thread pool

- maximumPoolSize: the maximum number of threads the pool can hold

- keepAliveTime: how long an idle thread is kept alive

- unit: the time unit of keepAliveTime

- workQueue: the blocking queue that holds tasks that have been submitted but not yet executed

- threadFactory: the factory used to create threads

- handler: the rejection policy applied when the waiting queue is full

In the thread pool, three important parameters determine when the rejection policy is triggered: corePoolSize, the number of core threads (that is, the minimum number of threads); workQueue, the blocking queue; and maximumPoolSize, the maximum number of threads. When the number of submitted tasks exceeds corePoolSize, new tasks are first put into the workQueue blocking queue. When the blocking queue is full, the thread pool creates additional threads until the maximum configured in maximumPoolSize is reached. Any further tasks then trigger the thread pool's rejection policy.

To sum up in one sentence: when the number of tasks that have been submitted but not yet finished is greater than (capacity of workQueue + maximumPoolSize), the rejection policy of the thread pool is triggered.
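
The saturation rule can be checked with a small experiment. The sketch below is an illustration under assumed numbers (2 core threads, 3 maximum threads, a queue of capacity 2, 7 tasks); the class name is hypothetical, and every task blocks on a latch so that none finishes during submission.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; all sizes are illustrative.
class SaturationDemo {

    // Submits long-running tasks and returns how many were rejected.
    static int countRejected(int core, int max, int queueCapacity, int submitted) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                core,                                   // corePoolSize
                max,                                    // maximumPoolSize
                2L, TimeUnit.SECONDS,                   // keepAliveTime and unit
                new ArrayBlockingQueue<>(queueCapacity), // workQueue
                Executors.defaultThreadFactory(),       // threadFactory
                new ThreadPoolExecutor.AbortPolicy());  // handler
        int rejected = 0;
        for (int i = 0; i < submitted; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the worker busy until released
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++; // pool and queue are both full
            }
        }
        release.countDown(); // let all accepted tasks finish
        pool.shutdown();
        return rejected;
    }

    public static void main(String[] args) {
        // 3 threads + 2 queue slots accept 5 tasks; the other 2 are rejected.
        System.out.println(countRejected(2, 3, 2, 7)); // 2
    }
}
```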

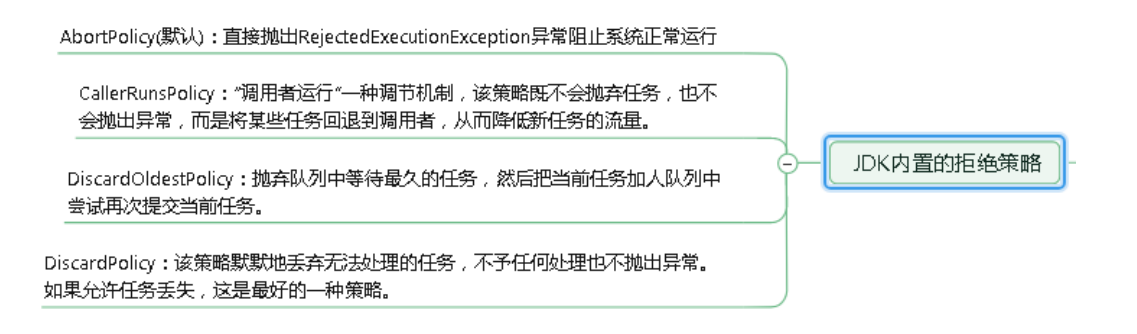

10.2.2 rejection strategy (key)

CallerRunsPolicy: when the rejection policy is triggered, as long as the thread pool has not been shut down, the task is run directly on the calling thread. It is generally suitable when concurrency is relatively low, performance requirements are modest, and failure is not allowed. However, because the caller runs the task itself, if tasks are submitted too fast the program may block, with an inevitable loss of performance and efficiency.

AbortPolicy: discards the task and throws RejectedExecutionException. This is the default rejection policy of the thread pool. The thrown exception must be handled properly, otherwise the current execution flow is interrupted and subsequent task execution is affected.

DiscardPolicy: silently discards the task and does nothing else.

DiscardOldestPolicy: when the rejection policy is triggered, as long as the thread pool has not been shut down, discards the oldest task in the workQueue blocking queue and then tries to submit the new task again.
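
To compare the policies, the sketch below saturates a pool of one thread and one queue slot and then reports which thread, if any, ran the third task. The class name and the method threadOfThirdTask are hypothetical helpers written for this example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical demo class; pool and queue sizes are illustrative.
class RejectionPolicyDemo {

    // Returns the thread that ran the third task, or null if it never ran.
    static Thread threadOfThirdTask(RejectedExecutionHandler handler) {
        CountDownLatch release = new CountDownLatch(1);
        AtomicReference<Thread> ranOn = new AtomicReference<>();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1), handler);
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        try {
            pool.execute(blocker); // occupies the only worker thread
            pool.execute(blocker); // fills the only queue slot
            // The pool is saturated, so the handler decides what happens next.
            pool.execute(() -> ranOn.set(Thread.currentThread()));
        } finally {
            release.countDown(); // runs even if AbortPolicy throws
            pool.shutdown();
        }
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return ranOn.get();
    }

    public static void main(String[] args) {
        Thread caller = Thread.currentThread();
        // CallerRunsPolicy: the submitting thread runs the task itself.
        System.out.println(threadOfThirdTask(new ThreadPoolExecutor.CallerRunsPolicy()) == caller);
        // DiscardPolicy: the task is dropped and never runs.
        System.out.println(threadOfThirdTask(new ThreadPoolExecutor.DiscardPolicy()) == null);
        // DiscardOldestPolicy: the queued task is dropped and the new one runs on a pool thread.
        System.out.println(threadOfThirdTask(new ThreadPoolExecutor.DiscardOldestPolicy()) != caller);
    }
}
```

With AbortPolicy the same helper would propagate RejectedExecutionException to the caller after the cleanup in the finally block.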

10.3 types and creation of thread pool

10.3.1 newCachedThreadPool (common)

Function: creates a cacheable thread pool. If the pool holds more threads than are needed, idle threads are recycled flexibly; if no idle thread is available for reuse, a new thread is created.

Characteristics:

- The number of threads in the thread pool is not fixed and can grow up to Integer.MAX_VALUE

- Threads in the thread pool can be reused and recycled (by default an idle thread is recycled after 1 minute)

- When there are no available threads in the thread pool, a new thread will be created
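
The growth behaviour can be observed with a short experiment. In this sketch (class name and task count are assumptions), every task blocks until all tasks have started, so each one must be running on its own thread.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; the task count is illustrative.
class CachedPoolDemo {

    // Starts the given number of simultaneous tasks and returns
    // how many distinct threads the cached pool used for them.
    static int threadsUsedFor(int simultaneousTasks) {
        ExecutorService pool = Executors.newCachedThreadPool();
        Set<String> workerNames = ConcurrentHashMap.newKeySet();
        CountDownLatch started = new CountDownLatch(simultaneousTasks);
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < simultaneousTasks; i++) {
            pool.execute(() -> {
                workerNames.add(Thread.currentThread().getName());
                started.countDown();
                try {
                    release.await(); // stay busy so no thread can be reused yet
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        try {
            started.await(5, TimeUnit.SECONDS); // wait until every task is running
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            release.countDown();
            pool.shutdown();
        }
        return workerNames.size();
    }

    public static void main(String[] args) {
        System.out.println(threadsUsedFor(8)); // 8
    }
}
```

No task is ever queued or rejected here: whenever no idle thread is available, the pool simply creates another one, which is also why an unbounded burst of tasks can exhaust system resources.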

Creation method:

/**

* Cacheable thread pool

*/

public static ExecutorService newCachedThreadPool(){

/**

* corePoolSize Number of core threads in the thread pool

* maximumPoolSize Maximum number of threads that can be accommodated

* keepAliveTime Idle thread lifetime

* unit Time unit of survival