Original address: Detailed explanation of preemption scheduling caused by Goroutine running for too long~

This article focuses on the following two points:

- When preemptive scheduling occurs.
- Preemptive scheduling caused by a goroutine running for too long.

The sysmon system monitoring thread periodically calls retake, which requests preemption of any goroutine that has been running continuously for more than 10 milliseconds.

Look at line 4376 of the runtime/proc.go file to analyze retake:

    // forcePreemptNS is the time slice given to a G before it is
    // preempted.
    const forcePreemptNS = 10 * 1000 * 1000 // 10ms

    func retake(now int64) uint32 {
        n := 0
        // Prevent allp slice changes. This lock will be completely
        // uncontended unless we're already stopping the world.
        lock(&allpLock)
        // We can't use a range loop over allp because we may
        // temporarily drop the allpLock. Hence, we need to re-fetch
        // allp each time around the loop.
        for i := 0; i < len(allp); i++ { // traverse all Ps
            _p_ := allp[i]
            if _p_ == nil {
                // This can happen if procresize has grown
                // allp but not yet created new Ps.
                continue
            }
            // _p_.sysmontick is used by the sysmon thread to record the
            // syscall time and running time of the monitored P.
            pd := &_p_.sysmontick
            s := _p_.status
            if s == _Psyscall { // P is in a system call; check whether it needs to be retaken
                // Retake P from syscall if it's there for more than 1 sysmon tick (at least 20us).
                t := int64(_p_.syscalltick)
                if int64(pd.syscalltick) != t {
                    pd.syscalltick = uint32(t)
                    pd.syscallwhen = now
                    continue
                }
                // On the one hand we don't want to retake Ps if there is no other work to do,
                // but on the other hand we want to retake them eventually
                // because they can prevent the sysmon thread from deep sleep.
                if runqempty(_p_) && atomic.Load(&sched.nmspinning)+atomic.Load(&sched.npidle) > 0 && pd.syscallwhen+10*1000*1000 > now {
                    continue
                }
                // Drop allpLock so we can take sched.lock.
                unlock(&allpLock)
                // Need to decrement number of idle locked M's
                // (pretending that one more is running) before the CAS.
                // Otherwise the M from which we retake can exit the syscall,
                // increment nmidle and report deadlock.
                incidlelocked(-1)
                if atomic.Cas(&_p_.status, s, _Pidle) {
                    if trace.enabled {
                        traceGoSysBlock(_p_)
                        traceProcStop(_p_)
                    }
                    n++
                    _p_.syscalltick++
                    handoffp(_p_)
                }
                incidlelocked(1)
                lock(&allpLock)
            } else if s == _Prunning { // P is running; check whether it has been running for too long
                // Preempt G if it's running for too long.
                // _p_.schedtick: the scheduler increments this value on every scheduling round.
                t := int64(_p_.schedtick)
                if int64(pd.schedtick) != t {
                    // The monitoring thread has observed a new scheduling round, so it
                    // resets the sysmon-related schedtick and schedwhen fields.
                    pd.schedtick = uint32(t)
                    pd.schedwhen = now
                    continue
                }
                // pd.schedtick == t: no scheduling happened between pd.schedwhen and now,
                // so the same goroutine has been running the whole time.
                // Next, check whether it has been running for more than 10 milliseconds.
                if pd.schedwhen+forcePreemptNS > now {
                    // Less than 10 milliseconds have passed since the sysmon thread
                    // first observed this goroutine.
                    continue
                }
                // It has been running for more than 10 milliseconds: issue a preemption request.
                preemptone(_p_)
            }
        }
        unlock(&allpLock)
        return uint32(n)
    }

retake decides whether to initiate preemption according to two different states of p:

- _Prunning indicates that the corresponding goroutine is running. If it has been running for more than 10 ms, it needs to be preempted.
- _Psyscall indicates that the corresponding goroutine is executing a system call in the kernel. In this case, several conditions together determine whether the P is retaken.
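The _Prunning branch comes down to comparing two counters and one timestamp. The following is a simplified user-space model of that bookkeeping, not runtime code: the types `proc` and `sysmonTick` and the function `shouldPreempt` are hypothetical names introduced for illustration, and only the schedtick/schedwhen logic is modelled.

```go
package main

import "fmt"

// forcePreemptNS mirrors the runtime constant: a 10ms time slice in nanoseconds.
const forcePreemptNS = 10 * 1000 * 1000

// ms is a helper for writing timestamps in milliseconds.
const ms = 1000 * 1000

// sysmonTick is a hypothetical stand-in for the per-P record kept for sysmon.
type sysmonTick struct {
	schedtick uint32 // last schedtick value seen by the monitor
	schedwhen int64  // time (ns) at which that value was first seen
}

// proc is a hypothetical, heavily reduced model of a P.
type proc struct {
	schedtick  uint32 // incremented on every scheduling round
	sysmontick sysmonTick
}

// shouldPreempt models the _Prunning branch of retake. It reports whether the
// goroutine on p has used up its time slice since the monitor first saw it.
func shouldPreempt(p *proc, now int64) bool {
	pd := &p.sysmontick
	if pd.schedtick != p.schedtick {
		// A new scheduling round happened: restart the clock.
		pd.schedtick = p.schedtick
		pd.schedwhen = now
		return false
	}
	// Same goroutine as at the last check: preempt once the slice is used up.
	return pd.schedwhen+forcePreemptNS <= now
}

func main() {
	p := &proc{schedtick: 1}
	fmt.Println(shouldPreempt(p, 0))     // first observation, clock starts
	fmt.Println(shouldPreempt(p, 5*ms))  // 5ms later, still within the slice
	fmt.Println(shouldPreempt(p, 12*ms)) // 12ms later, slice exceeded
	p.schedtick++                        // the scheduler switched goroutines
	fmt.Println(shouldPreempt(p, 13*ms)) // new goroutine, clock restarts
}
```

Note that the 10 ms are measured from the moment the monitor first notices the goroutine, not from the moment the goroutine actually started running, so the real running time before a preemption request can be somewhat longer.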

If sysmon finds that a goroutine has been running continuously for more than 10 milliseconds, it calls preemptone to request preemption of that goroutine. Look at line 4465 of the runtime/proc.go file to analyze preemptone:

    // Tell the goroutine running on processor P to stop.
    // This function is purely best-effort. It can incorrectly fail to inform the
    // goroutine. It can send inform the wrong goroutine. Even if it informs the
    // correct goroutine, that goroutine might ignore the request if it is
    // simultaneously executing newstack.
    // No lock needs to be held.
    // Returns true if preemption request was issued.
    // The actual preemption will happen at some point in the future
    // and will be indicated by the gp->status no longer being
    // Grunning
    func preemptone(_p_ *p) bool {
        mp := _p_.m.ptr()
        if mp == nil || mp == getg().m {
            return false
        }
        // gp is the goroutine to be preempted.
        gp := mp.curg
        if gp == nil || gp == mp.g0 {
            return false
        }

        gp.preempt = true // set the preemption flag

        // Every call in a go routine checks for stack overflow by
        // comparing the current stack pointer to gp->stackguard0.
        // Setting gp->stackguard0 to StackPreempt folds
        // preemption into the normal stack overflow check.
        // stackPreempt is a very large constant (0xfffffffffffffade on 64-bit platforms).
        gp.stackguard0 = stackPreempt // lets the preempted goroutine notice the request
        return true
    }

preemptone sets preempt to true and stackguard0 to stackPreempt (a constant, 0xfffffffffffffade on 64-bit platforms, which is a very large number) in the g structure of the goroutine being preempted. It does not force that goroutine to stop running.
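To see where the value of stackPreempt comes from, the short program below recomputes it. It is a sketch that assumes a 64-bit platform (the name `ptrSize` and its value 8 are assumptions made here); the two constant expressions follow the pattern used in runtime/stack.go, where the mask is built from the pointer size.

```go
package main

import "fmt"

// ptrSize is assumed to be 8 here, that is, a 64-bit platform.
const ptrSize = 8

const (
	// uintptrMask has all bits of a pointer-sized word set.
	uintptrMask = 1<<(8*ptrSize) - 1
	// stackPreempt is -1314 truncated to the width of a pointer.
	stackPreempt = uintptrMask & -1314
)

func main() {
	fmt.Printf("%#x\n", uint64(stackPreempt)) // prints 0xfffffffffffffade
}
```

Because the value is only 1314 below the top of the address space, no real stack pointer can be above it, which is what makes it usable as a sentinel.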

The preempted goroutine responds to the preemption request through the call chain morestack_noctxt() -> morestack() -> newstack().

Take the following program as an example:

    package main

    import "fmt"

    func sum(a, b int) int {
        a2 := a * a
        b2 := b * b
        c := a2 + b2
        fmt.Println(c)
        return c
    }

    func main() {
        sum(1, 2)
    }

Disassemble main with gdb; the result is as follows:

    => 0x0000000000486a80 <+0>:  mov    %fs:0xfffffffffffffff8,%rcx
       0x0000000000486a89 <+9>:  cmp    0x10(%rcx),%rsp
       0x0000000000486a8d <+13>: jbe    0x486abd <main.main+61>
       0x0000000000486a8f <+15>: sub    $0x20,%rsp
       0x0000000000486a93 <+19>: mov    %rbp,0x18(%rsp)
       0x0000000000486a98 <+24>: lea    0x18(%rsp),%rbp
       0x0000000000486a9d <+29>: movq   $0x1,(%rsp)
       0x0000000000486aa5 <+37>: movq   $0x2,0x8(%rsp)
       0x0000000000486aae <+46>: callq  0x4869c0 <main.sum>
       0x0000000000486ab3 <+51>: mov    0x18(%rsp),%rbp
       0x0000000000486ab8 <+56>: add    $0x20,%rsp
       0x0000000000486abc <+60>: retq
       0x0000000000486abd <+61>: callq  0x44ece0 <runtime.morestack_noctxt>
       0x0000000000486ac2 <+66>: jmp    0x486a80 <main.main>

The call to morestack_noctxt sits at the end of the function and is reached through the jbe instruction. Let's look at the first three instructions:

    0x0000000000486a80 <+0>:  mov    %fs:0xfffffffffffffff8,%rcx   # first instruction of main: rcx = g
    0x0000000000486a89 <+9>:  cmp    0x10(%rcx),%rsp
    0x0000000000486a8d <+13>: jbe    0x486abd <main.main+61>

jbe is a conditional jump instruction; whether it jumps depends on the result of the preceding cmp instruction.

The first instruction of main reads the pointer to the currently running g from TLS (Go implements TLS through the fs segment register) and places it in rcx. The source operand of the second instruction uses indirect addressing: it reads the value stored at offset 16 from the start of g and compares it with rsp.

Let's first look at the definition of the g structure:

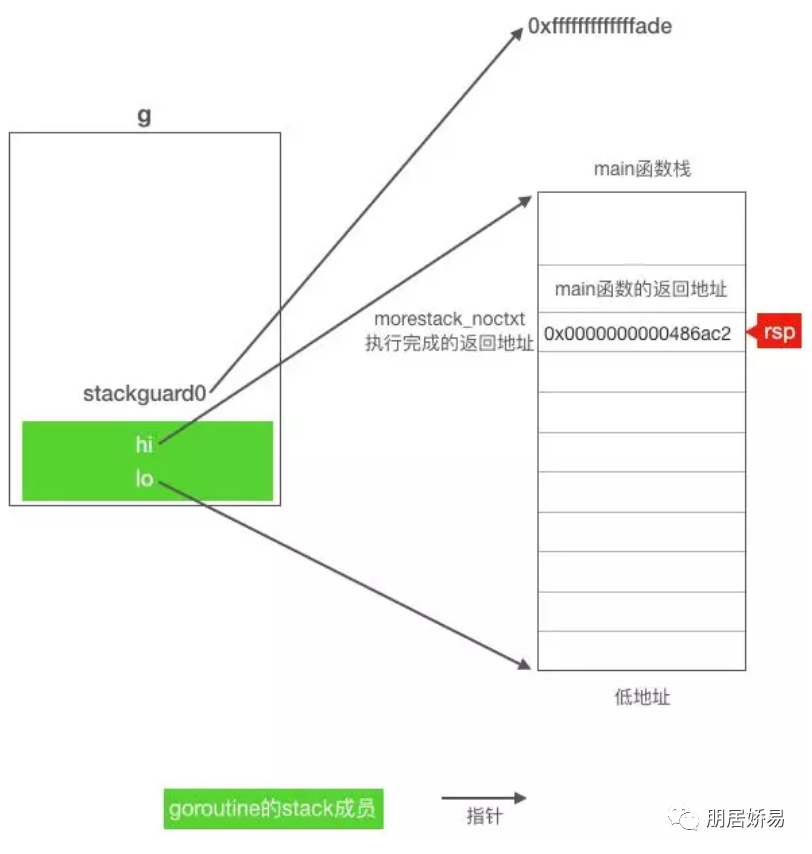

    type g struct {
        stack       stack
        stackguard0 uintptr
        stackguard1 uintptr
        ......
    }

    type stack struct {
        lo uintptr // 8 bytes
        hi uintptr // 8 bytes
    }

The stack field of g occupies 16 bytes (8 bytes for lo and 8 bytes for hi), so offset 16 from the start of the g structure corresponds to stackguard0. The second instruction of main therefore compares the stack pointer register rsp with stackguard0. If rsp is less than or equal to stackguard0, the stack of the current g is about to run out, there is a risk of overflow, and the stack needs to be grown. Now suppose the preemption flag has been set on the main goroutine: stackguard0 then holds stackPreempt, a very large value, so rsp is far smaller than stackguard0. The code therefore jumps to 0x0000000000486abd and executes the callq to morestack_noctxt. The call pushes the address of the following instruction, 0x0000000000486ac2, onto the stack and then jumps to morestack_noctxt.
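The effect of overwriting stackguard0 can be reproduced with plain integers. The sketch below models the prologue comparison in ordinary Go. The reduced `g` type, the function `needsMorestack`, the addresses and the guard offset of 880 bytes are all hypothetical values picked for illustration, and the sentinel assumes a 64-bit platform.

```go
package main

import "fmt"

// stackPreempt is the sentinel value on a 64-bit platform (assumption).
const stackPreempt uint64 = 0xfffffffffffffade

// g is a hypothetical, reduced model of the runtime's g struct.
type g struct {
	stackLo     uint64 // lowest address of the stack
	stackHi     uint64 // highest address of the stack
	stackguard0 uint64 // value compared against the stack pointer
}

// needsMorestack models "cmp 0x10(%rcx),%rsp" followed by "jbe": the jump is
// taken when sp is below or equal to stackguard0 in an unsigned comparison.
func needsMorestack(gp *g, sp uint64) bool {
	return sp <= gp.stackguard0
}

func main() {
	gp := &g{stackLo: 0xc000030000, stackHi: 0xc000032000}
	gp.stackguard0 = gp.stackLo + 880 // illustrative guard offset
	sp := uint64(0xc000031f00)        // illustrative stack pointer

	fmt.Println(needsMorestack(gp, sp)) // false: plenty of stack left
	gp.stackguard0 = stackPreempt       // what preemptone does
	fmt.Println(needsMorestack(gp, sp)) // true: the same sp now takes the jump
}
```

The stack pointer is identical in both calls; only the guard value changed. This is why the request can only be noticed at a function prologue that contains this check.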

Look at the stack state diagram of rsp, g and main at this time:

morestack_noctxt jumps directly to morestack with a JMP instruction. Because it does not use CALL, no return address is pushed and rsp does not change.

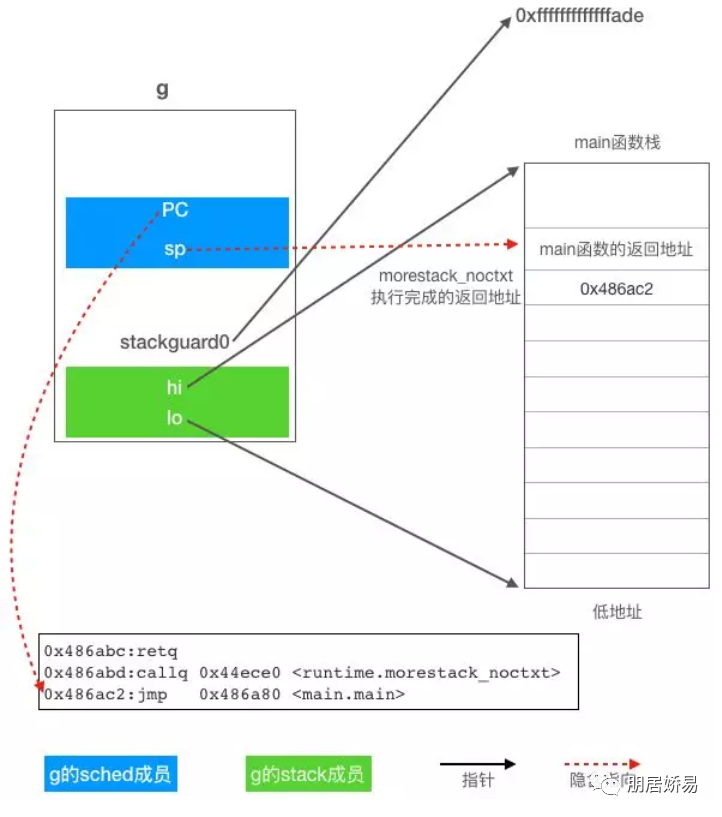

The execution flow of morestack is similar to that of mcall, analyzed previously. It first saves the scheduling information of the goroutine that called morestack (the main goroutine in this scenario) into the sched field of its g structure, then switches to the g0 stack of the current worker thread and goes on to execute newstack.

Look at line 433 of the runtime/asm_amd64.s file to analyze morestack:

    // morestack but not preserving ctxt.
    TEXT runtime·morestack_noctxt(SB),NOSPLIT,$0
        MOVL    $0, DX
        JMP     runtime·morestack(SB)

    // Called during function prolog when more stack is needed.
    //
    // The traceback routines see morestack on a g0 as being
    // the top of a stack (for example, morestack calling newstack
    // calling the scheduler calling newm calling gc), so we must
    // record an argument size. For that purpose, it has no arguments.
    TEXT runtime·morestack(SB),NOSPLIT,$0-0
        ......
        get_tls(CX)
        MOVQ    g(CX), SI   // SI = g (the g structure of the main goroutine)
        ......
        // SP now points to the return address of the morestack_noctxt call,
        // so after the next instruction AX = 0x0000000000486ac2.
        MOVQ    0(SP), AX
        // The next two instructions set g.sched.pc and g.sched.g. In our example
        // g.sched.pc becomes 0x0000000000486ac2, the address at which execution
        // should continue after morestack_noctxt returns.
        MOVQ    AX, (g_sched+gobuf_pc)(SI)   // g.sched.pc = 0x0000000000486ac2
        MOVQ    SI, (g_sched+gobuf_g)(SI)    // g.sched.g = g
        LEAQ    8(SP), AX   // AX = rsp of main before it called morestack_noctxt
        // The next three instructions set g.sched.sp, g.sched.bp and g.sched.ctxt.
        MOVQ    AX, (g_sched+gobuf_sp)(SI)
        MOVQ    BP, (g_sched+gobuf_bp)(SI)
        MOVQ    DX, (g_sched+gobuf_ctxt)(SI)
        // The instructions above save the context of g. Next, switch to g0:
        // switch to the g0 stack and set g in TLS to g0.
        // Call newstack on m->g0's stack.
        MOVQ    m_g0(BX), BX
        MOVQ    BX, g(CX)   // set g in TLS to g0
        // Restore the saved stack pointer of g0 into SP to switch stacks.
        // Before the next instruction the CPU still uses the stack of the g that
        // called this function; afterwards it uses the g0 stack.
        MOVQ    (g_sched+gobuf_sp)(BX), SP
        CALL    runtime·newstack(SB)
        CALL    runtime·abort(SB)   // crash if newstack returns
        RET

Before switching to g0, morestack saves the context of the current goroutine into the sched field of its g structure. The next time the main goroutine is scheduled, the scheduler restores g.sched.sp into the CPU's rsp to switch stacks and restores g.sched.pc into rip, so the CPU resumes at the instruction that follows the callq: 0x0000000000486ac2 <+66>: jmp 0x486a80 <main.main>.
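The two values that matter when the context is saved, the resume address and the caller's stack pointer, can be traced with a toy model of the stack. This is only an illustration of the arithmetic: the types `gobuf` and `memory`, the functions `call` and `saveContext`, and the starting stack pointer are hypothetical, while the return address 0x486ac2 is taken from the disassembly above.

```go
package main

import "fmt"

// gobuf is a hypothetical two-field model of the saved context.
type gobuf struct {
	sp uint64 // stack pointer to restore
	pc uint64 // instruction address to resume at
}

// memory is a toy model of the stack: address -> 8-byte word.
type memory map[uint64]uint64

// call models callq: it pushes the return address and returns the stack
// pointer as seen by the callee.
func call(mem memory, sp, returnAddr uint64) uint64 {
	sp -= 8
	mem[sp] = returnAddr
	return sp
}

// saveContext models "MOVQ 0(SP), AX" and "LEAQ 8(SP), AX" in morestack:
// pc is the word on top of the stack, sp is the caller's value before callq.
func saveContext(mem memory, sp uint64) gobuf {
	return gobuf{pc: mem[sp], sp: sp + 8}
}

func main() {
	mem := memory{}
	mainSP := uint64(0xc000031f58) // illustrative rsp of main before the call

	// main executes callq morestack_noctxt; the next instruction is at 0x486ac2.
	sp := call(mem, mainSP, 0x486ac2)
	// morestack_noctxt reaches morestack with JMP, so sp is unchanged here.
	sched := saveContext(mem, sp)

	fmt.Printf("pc=%#x sp=%#x\n", sched.pc, sched.sp) // pc=0x486ac2 sp=0xc000031f58
}
```

Restoring these two values later puts the goroutine back at the jmp to the start of main with the stack exactly as it was before the callq, so the prologue check simply runs again.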

Look at line 899 of the runtime/stack.go file to analyze newstack:

    // Called from runtime·morestack when more stack is needed.
    // Allocate larger stack and relocate to new stack.
    // Stack growth is multiplicative, for constant amortized cost.
    //
    // g->atomicstatus will be Grunning or Gscanrunning upon entry.
    // If the GC is trying to stop this g then it will set preemptscan to true.
    //
    // This must be nowritebarrierrec because it can be called as part of
    // stack growth from other nowritebarrierrec functions, but the
    // compiler doesn't check this.
    //
    //go:nowritebarrierrec
    func newstack() {
        thisg := getg() // thisg = g0
        ......
        // This line obtains g0.m.curg, the goroutine that needs to grow its stack
        // or respond to preemption. In our example, gp = main goroutine.
        gp := thisg.m.curg
        ......
        // NOTE: stackguard0 may change underfoot, if another thread
        // is about to try to preempt gp. Read it just once and use that same
        // value now and below.
        // Check whether g.stackguard0 is set to stackPreempt.
        preempt := atomic.Loaduintptr(&gp.stackguard0) == stackPreempt

        // Be conservative about where we preempt.
        // We are interested in preempting user Go code, not runtime code.
        // If we're holding locks, mallocing, or preemption is disabled, don't
        // preempt.
        // This check is very early in newstack so that even the status change
        // from Grunning to Gwaiting and back doesn't happen in this case.
        // That status change by itself can be viewed as a small preemption,
        // because the GC might change Gwaiting to Gscanwaiting, and then
        // this goroutine has to wait for the GC to finish before continuing.
        // If the GC is in some way dependent on this goroutine (for example,
        // it needs a lock held by the goroutine), that small preemption turns
        // into a real deadlock.
        if preempt {
            // Check the state of the goroutine being preempted.
            if thisg.m.locks != 0 || thisg.m.mallocing != 0 || thisg.m.preemptoff != "" || thisg.m.p.ptr().status != _Prunning {
                // Let the goroutine keep running for now.
                // gp->preempt is set, so it will be preempted next time.
                // Restore stackguard0 to its normal value to show that this
                // preemption request has been handled.
                gp.stackguard0 = gp.stack.lo + _StackGuard
                // Call gogo to resume the current g directly; there is no need to
                // call schedule to pick another goroutine.
                gogo(&gp.sched) // never return
            }
        }

        // The omitted code performs other checks, which is why the same
        // condition is tested twice.
        if preempt {
            if gp == thisg.m.g0 {
                throw("runtime: preempt g0")
            }
            if thisg.m.p == 0 && thisg.m.locks == 0 {
                throw("runtime: g is running but p is not")
            }
            ......
            // Start responding to the preemption request.
            // Act like goroutine called runtime.Gosched.
            // Set gp's status back; the omitted code changed it to _Gwaiting
            // while handling GC.
            casgstatus(gp, _Gwaiting, _Grunning)
            // Call gopreempt_m to switch gp out.
            gopreempt_m(gp) // never return
        }
        ......
    }

newstack both grows the stack and responds to preemption requests made by sysmon. It first checks whether g.stackguard0 is set to stackPreempt. If it is, sysmon has found that the goroutine ran past its time slice and has requested preemption. After some basic checks, if the current goroutine can be preempted, newstack calls gopreempt_m to complete the switch. Look at line 2644 of the runtime/proc.go file to analyze gopreempt_m:

    func gopreempt_m(gp *g) {
        if trace.enabled {
            traceGoPreempt()
        }
        goschedImpl(gp)
    }

gopreempt_m completes the actual scheduling switch by calling goschedImpl.
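Before gopreempt_m is ever reached, newstack has to choose among three outcomes. The following condensed sketch captures only that decision. The names `mState`, `action` and `decide` are hypothetical, the GC-related handling that newstack also performs is left out, and the sentinel assumes a 64-bit platform.

```go
package main

import "fmt"

// stackPreempt is the sentinel value on a 64-bit platform (assumption).
const stackPreempt uint64 = 0xfffffffffffffade

// mState is a hypothetical summary of the fields newstack inspects.
type mState struct {
	locks      int    // locks held by the worker thread
	mallocing  int    // non-zero while allocating memory
	preemptoff string // non-empty while preemption is disabled
	pRunning   bool   // whether the P is in state _Prunning
}

// action names the three outcomes modelled here.
type action string

const (
	growStack   action = "grow stack"
	keepRunning action = "keep running"
	yield       action = "yield to scheduler"
)

// decide models the order of the checks: first the sentinel, then the
// conservative conditions under which preemption is postponed.
func decide(stackguard0 uint64, m mState) action {
	if stackguard0 != stackPreempt {
		return growStack // an ordinary stack overflow check fired
	}
	if m.locks != 0 || m.mallocing != 0 || m.preemptoff != "" || !m.pRunning {
		return keepRunning // resume the goroutine; it is preempted next time
	}
	return yield // hand control to the scheduler
}

func main() {
	ok := mState{pRunning: true}
	fmt.Println(decide(0xc000030370, ok))                             // grow stack
	fmt.Println(decide(stackPreempt, mState{locks: 1, pRunning: true})) // keep running
	fmt.Println(decide(stackPreempt, ok))                             // yield to scheduler
}
```

The middle outcome is the reason the source comments call the mechanism best-effort: a request that arrives while the thread holds a lock or is allocating is postponed until a later function call.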

goschedImpl first changes the state of gp from _Grunning to _Grunnable, then calls dropg to detach gp from the current worker thread m, then puts gp on the global run queue to wait for the scheduler. Finally, it calls schedule to enter the next round of scheduling.
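The sequence of steps can be followed in a small model. This is a sketch under strong simplifications: the types `goroutine`, `worker` and `scheduler` and the function `yield` are hypothetical, there is a single worker and no locking, and the stand-in for schedule only looks at the global queue.

```go
package main

import "fmt"

type status int

const (
	runnable status = iota
	running
)

// goroutine, worker and scheduler are hypothetical, reduced models of g, m and sched.
type goroutine struct {
	name   string
	status status
}

type worker struct{ curg *goroutine }

type scheduler struct{ globalRunq []*goroutine }

// yield models the steps of goschedImpl followed by a much simplified schedule.
func yield(m *worker, s *scheduler) {
	gp := m.curg
	gp.status = runnable                    // _Grunning -> _Grunnable
	m.curg = nil                            // dropg: detach gp from the worker
	s.globalRunq = append(s.globalRunq, gp) // tail of the global run queue

	// Simplified schedule: run the goroutine at the head of the global queue.
	next := s.globalRunq[0]
	s.globalRunq = s.globalRunq[1:]
	next.status = running
	m.curg = next
}

func main() {
	g1 := &goroutine{name: "g1", status: running}
	g2 := &goroutine{name: "g2", status: runnable}
	m := &worker{curg: g1}
	s := &scheduler{globalRunq: []*goroutine{g2}}

	yield(m, s)
	fmt.Println(m.curg.name, len(s.globalRunq), s.globalRunq[0].name) // g2 1 g1
}
```

Putting the preempted goroutine at the tail of the queue means every goroutine already waiting there gets a turn before it runs again.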

This analysis shows that preemption in Go is conditional. sysmon is only responsible for setting the preemption flag on the goroutine to be preempted. That goroutine checks g's stackguard0 at function entry, decides whether to call morestack_noctxt, and finally reaches newstack, which completes the preemptive scheduling.

The above is only my personal understanding and is not necessarily accurate. I hope it helps.
