AQS

When we construct a ReentrantLock object, we can choose a fair or a nonfair lock by passing in a boolean. In the source code, the constructor creates a different sync object depending on that value:

public ReentrantLock(boolean fair) {

sync = fair ? new FairSync() : new NonfairSync();

}
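A minimal sketch of how this choice looks from the caller's side, using only the public ReentrantLock API. The class name FairnessFlagDemo and its helper methods are made up for illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

class FairnessFlagDemo {
    // Fairness policy reported by a lock built with the given flag.
    static boolean builtFair(boolean fair) {
        return new ReentrantLock(fair).isFair();
    }

    // The no-argument constructor picks the nonfair policy.
    static boolean defaultIsFair() {
        return new ReentrantLock().isFair();
    }

    public static void main(String[] args) {
        System.out.println("default fair? " + defaultIsFair());
        System.out.println("new ReentrantLock(true) fair? " + builtFair(true));
    }
}
```

The flag is fixed at construction time; the lock exposes it through isFair() but offers no way to change it afterwards.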

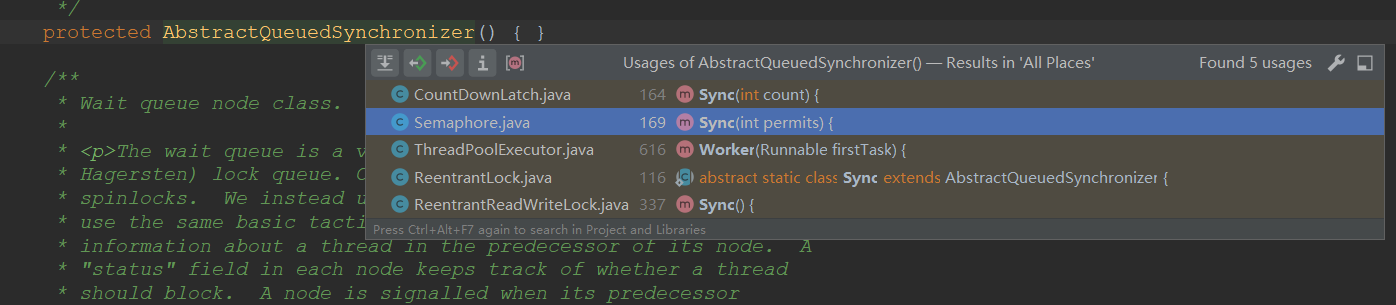

Open the source of FairSync or NonfairSync and you will see that both indirectly extend the AbstractQueuedSynchronizer class (through ReentrantLock's inner Sync class). It provides the template methods behind our lock and unlock process, so let's learn about it first.

AbstractQueuedSynchronizer, AQS for short, provides an extensible base framework for building different synchronization components (ReentrantLock, ReentrantReadWriteLock, CountDownLatch, etc.), as shown in the following figure.

AQS maintains a first-in, first-out doubly linked queue. A thread that fails to grab the lock is put into the queue and blocked; it is woken up after the lock is released. Let's take a look at the internal structure of AQS (only the fields used by ReentrantLock are shown).

//Head node

private transient volatile Node head;

//Tail node

private transient volatile Node tail;

//Synchronization state; taking the lock means changing this variable (0 = free, >0 = held)

private volatile int state;

static final class Node {

//Node waiting state

volatile int waitStatus;

//Previous node

volatile Node prev;

//Next node

volatile Node next;

//Thread of node

volatile Thread thread;

Node nextWaiter;

}
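To see how the state field and the template methods fit together, here is a minimal sketch of a non-reentrant mutex built on AQS, along the lines of the Mutex example in the AQS documentation. SimpleMutex and countWithThreads are illustrative names, not part of the JDK.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

class SimpleMutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int arg) {
            // state 0 = free, 1 = held; AQS handles queuing when this returns false
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;
        }

        @Override
        protected boolean tryRelease(int arg) {
            if (getExclusiveOwnerThread() != Thread.currentThread())
                throw new IllegalMonitorStateException();
            setExclusiveOwnerThread(null);
            setState(0);
            return true; // fully released, AQS may wake a successor
        }
    }

    private final Sync sync = new Sync();

    void lock() { sync.acquire(1); }
    void unlock() { sync.release(1); }

    // Increments a shared counter under the mutex from several threads.
    static int countWithThreads(int threads, int perThread) {
        SimpleMutex mutex = new SimpleMutex();
        int[] counter = new int[1];
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    mutex.lock();
                    try { counter[0]++; } finally { mutex.unlock(); }
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter[0];
    }
}
```

The subclass only decides what "acquired" and "released" mean for state. The queue, the parking and the wake-ups all come from acquire and release in AQS.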

After understanding the basic structure of AQS, we can already make a guess about how it works.

Lock: CAS the state variable. If that fails, create a new node object and append it to the queue. A thread that cannot get the lock has nothing to do, so it is blocked to keep it from spinning and wasting CPU.

Unlock: CAS is also performed on the state variable, and then other threads are notified to grab the lock. The most important part is waking up a previously blocked thread, which then acquires the lock, and the cycle starts again.
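The guess above can be turned into a small working lock without AQS at all. The sketch below is adapted from the FIFOMutex example in the LockSupport documentation; GuessLock and countWithThreads are hypothetical names. It is not how AQS is implemented: it uses a ready-made ConcurrentLinkedQueue and always queues first, so it behaves like a fair lock.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

class GuessLock {
    private final AtomicBoolean locked = new AtomicBoolean(false);
    private final Queue<Thread> waiters = new ConcurrentLinkedQueue<>();

    void lock() {
        boolean wasInterrupted = false;
        Thread current = Thread.currentThread();
        waiters.add(current); // publish ourselves so unlock() can find us

        // Block while we are not first in line or the CAS on the state fails
        while (waiters.peek() != current || !locked.compareAndSet(false, true)) {
            LockSupport.park(this);
            if (Thread.interrupted()) // remember interrupts, keep waiting
                wasInterrupted = true;
        }
        waiters.remove();
        if (wasInterrupted)
            current.interrupt(); // restore the interrupt status on the way out
    }

    void unlock() {
        locked.set(false);
        LockSupport.unpark(waiters.peek()); // unpark(null) is a no-op
    }

    // Increments a shared counter under the lock from several threads.
    static int countWithThreads(int threads, int perThread) {
        GuessLock lock = new GuessLock();
        int[] counter = new int[1];
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try { counter[0]++; } finally { lock.unlock(); }
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter[0];
    }
}
```

The same three ingredients appear in AQS: a state changed by CAS, a queue of waiting threads, and park/unpark to block and wake them.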

OK, let's go into the source code to verify our guess.

Lock source code

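ReentrantLock uses the nonfair lock by default, so let's first look at the source code of the nonfair lock. (The listings below appear to match the JDK 8 sources; later JDK versions restructure some of these methods.)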

final void lock() {

//Fast path: try to CAS state from 0 to 1 right away

if (compareAndSetState(0, 1))

//On success, record the current thread as the lock owner

setExclusiveOwnerThread(Thread.currentThread());

else

acquire(1);

}

The code above tries the CAS directly. If it succeeds, the thread occupying the lock is set to the current thread. If it fails, the thread enters the acquire method.

public final void acquire(int arg) {

if (!tryAcquire(arg) &&

acquireQueued(addWaiter(Node.EXCLUSIVE), arg))

//Re-assert the interrupt status that was cleared while waiting in the queue

selfInterrupt();

}
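The selfInterrupt() call means that lock() never throws on interruption; it keeps waiting and only re-asserts the flag once the lock is acquired. A small sketch of that behaviour through the public API follows (InterruptRestoreDemo and flagAfterLock are made-up names):

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

class InterruptRestoreDemo {
    // Returns the worker's interrupt flag as seen right after lock() returns.
    static boolean flagAfterLock() {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean flag = new AtomicBoolean(false);
        Thread worker = new Thread(() -> {
            lock.lock(); // keeps waiting even when interrupted
            try {
                flag.set(Thread.currentThread().isInterrupted());
            } finally {
                lock.unlock();
            }
        });

        lock.lock();
        try {
            worker.start();
            // Wait until the worker's node is in the AQS queue
            while (!lock.hasQueuedThreads())
                Thread.yield();
            worker.interrupt(); // interrupt it while it is waiting
        } finally {
            lock.unlock();
        }

        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return flag.get();
    }

    public static void main(String[] args) {
        System.out.println("interrupt flag after lock(): " + flagAfterLock());
    }
}
```

Code that needs to stop waiting when interrupted would use lockInterruptibly() instead, which throws InterruptedException.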

First, let's look at what tryAcquire does. Following the call, you will find that the nonfair implementation ends up in the nonfairTryAcquire method.

final boolean nonfairTryAcquire(int acquires) {

final Thread current = Thread.currentThread();

//Get lock status

int c = getState();

if (c == 0) {//The lock is free: try the same CAS as the outer fast path once more

if (compareAndSetState(0, acquires)) {

setExclusiveOwnerThread(current);

//On success return true; the outer acquire method then returns immediately

return true;

}

}

//Determine whether the current thread holds the lock

else if (current == getExclusiveOwnerThread()) {

//The same thread is acquiring again: this is what makes the lock reentrant

int nextc = c + acquires;

if (nextc < 0) // overflow

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;

}

//Returning false means the thread has to be put into the queue

return false;

}
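The reentrant branch can be observed from the outside: each nested lock() by the owner adds to state, which ReentrantLock exposes as the hold count. A short sketch, where ReentrancyDemo is an illustrative name:

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrancyDemo {
    // Hold counts after: two lock() calls, one unlock(), a second unlock().
    static int[] holdCounts() {
        ReentrantLock lock = new ReentrantLock();
        int[] counts = new int[3];
        lock.lock();
        lock.lock();                       // same thread: state goes from 1 to 2
        counts[0] = lock.getHoldCount();
        lock.unlock();
        counts[1] = lock.getHoldCount();
        lock.unlock();
        counts[2] = lock.getHoldCount();
        return counts;
    }

    public static void main(String[] args) {
        int[] c = holdCounts();
        System.out.println(c[0] + " " + c[1] + " " + c[2]); // prints "2 1 0"
    }
}
```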

You can see that tryAcquire does two things: 1. it tries to take the lock again; 2. it checks whether the thread holding the lock is the current thread itself. If it returns true, acquire ends right away. If it returns false, acquireQueued(addWaiter(Node.EXCLUSIVE), arg) is entered.

Let's first look at the addWaiter method.

private Node addWaiter(Node mode) {

//Node.EXCLUSIVE is null, so mode is null here

Node node = new Node(Thread.currentThread(), mode);

// Try the fast path of enq; backup to full enq on failure

//tail points to the end of the queue; it is still null while the queue is uninitialized (no thread has queued yet)

Node pred = tail;

//pred == null means tail == null, that is, the queue has not been initialized yet

//If it is not null, try to link the current node directly behind the tail

if (pred != null) {

//Link the current node's prev to the old tail first

node.prev = pred;

//Then try to swing the tail pointer to the current node

if (compareAndSetTail(pred, node)) {//CAS makes the tail update atomic

//The current node is now the tail; finally link the old tail forward to it

pred.next = node;

return node;

}

}

//If the queue is uninitialized or the CAS above lost a race, fall back to enq

enq(node);

return node;

}

private Node enq(final Node node) {

//Spin (infinite loop) until the node has been enqueued, initializing the queue if needed

for (;;) {

Node t = tail;

if (t == null) { // Must initialize

//First pass: install an empty dummy node as head, then point tail at it, so head and tail start out the same

if (compareAndSetHead(new Node()))

tail = head;

} else {

//Same logic as the fast path in addWaiter

node.prev = t;

//A later pass links the current node behind the tail; a failed CAS simply retries

if (compareAndSetTail(t, node)) {

t.next = node;

return t;

}

}

}

}

In short, addWaiter appends the current node behind the tail node. If the queue is uninitialized or the fast-path CAS fails, it calls enq, which initializes the head and tail if necessary and retries until the node is linked. Either way, addWaiter returns the current node.
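The enqueue protocol (set prev, CAS the tail, then set next) can be tried out in isolation. The sketch below rebuilds it with AtomicReference instead of the internal CAS helpers that AQS uses; CasQueueSketch, sizeFromTail and enqueueConcurrently are hypothetical names, and nodes are never removed in this simplified version.

```java
import java.util.concurrent.atomic.AtomicReference;

class CasQueueSketch {
    static final class Node {
        final String name;
        volatile Node prev;
        volatile Node next;
        Node(String name) { this.name = name; }
    }

    final AtomicReference<Node> head = new AtomicReference<>();
    final AtomicReference<Node> tail = new AtomicReference<>();

    // Links node at the tail and returns its predecessor, like enq in AQS.
    Node enq(Node node) {
        for (;;) {
            Node t = tail.get();
            if (t == null) {
                // Lazily install a dummy head; only one thread wins this CAS
                if (head.compareAndSet(null, new Node("dummy")))
                    tail.set(head.get());
            } else {
                node.prev = t;                      // 1. prev first
                if (tail.compareAndSet(t, node)) {  // 2. publish as the new tail
                    t.next = node;                  // 3. next last
                    return t;
                }
            }
        }
    }

    // Counts real nodes by walking prev links from the tail to the dummy head.
    int sizeFromTail() {
        int n = 0;
        for (Node t = tail.get(); t != null && t != head.get(); t = t.prev)
            n++;
        return n;
    }

    static int enqueueConcurrently(int threads, int perThread) {
        CasQueueSketch q = new CasQueueSketch();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++)
                    q.enq(new Node("n"));
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return q.sizeFromTail();
    }
}
```

Because prev is written before the CAS that publishes the node, every node reachable from the tail already has a valid prev link, even while some next links are still missing.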

The next step is the acquireQueued method.

final boolean acquireQueued(final Node node, int arg) {

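//Stays true unless the lock is acquired; triggers cancelAcquire in the finally block

boolean failed = true;

try {

//Records whether the thread was interrupted while waiting

boolean interrupted = false;

//Infinite loop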

for (;;) {

//Get the prev node of the current node

final Node p = node.predecessor();

//We can treat the head as the node of the thread that currently holds the lock. If our predecessor is the head, try to acquire the lock again

if (p == head && tryAcquire(arg)) {

//The lock was acquired, so make the current node the new head

setHead(node);

//node has replaced the old head, so unlink the old head from the queue

p.next = null; // help GC

failed = false;

//Acquired; report whether the thread was interrupted while waiting

return interrupted;

}

//Two situations lead here

//1. The predecessor is not the head, so other threads are waiting ahead of us

//2. The predecessor is the head, but the lock is not free yet (or another thread barged in), so tryAcquire failed

if (shouldParkAfterFailedAcquire(p, node) &&

parkAndCheckInterrupt())

interrupted = true;

}

} finally {

if (failed)

cancelAcquire(node);

}

}

There are two cases in which shouldParkAfterFailedAcquire and parkAndCheckInterrupt are executed:

1. The predecessor is not the head, so other threads are waiting ahead of us

2. The predecessor is the head, but the lock has not been released yet, so locking failed

As the name suggests, shouldParkAfterFailedAcquire checks whether the current thread may safely suspend itself.

parkAndCheckInterrupt suspends the thread once shouldParkAfterFailedAcquire returns true.
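The effect of acquireQueued is visible through ReentrantLock's monitoring methods: while one thread holds the lock, every other thread that calls lock() ends up as a node in the queue. A small sketch (QueueLengthDemo and queuedWhileHeld are illustrative names; getQueueLength() is documented as an estimate intended for monitoring):

```java
import java.util.concurrent.locks.ReentrantLock;

class QueueLengthDemo {
    // Holds the lock, starts `waiters` threads that call lock(),
    // and returns the queue length observed once all of them are queued.
    static int queuedWhileHeld(int waiters) {
        ReentrantLock lock = new ReentrantLock();
        Thread[] threads = new Thread[waiters];
        int observed;
        lock.lock();
        try {
            for (int i = 0; i < waiters; i++) {
                threads[i] = new Thread(() -> {
                    lock.lock();   // fails to acquire, enqueues, then parks
                    lock.unlock();
                });
                threads[i].start();
            }
            while (lock.getQueueLength() < waiters)
                Thread.yield();
            observed = lock.getQueueLength();
        } finally {
            lock.unlock();         // wakes the first queued thread
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return observed;
    }

    public static void main(String[] args) {
        System.out.println("queued threads: " + queuedWhileHeld(3));
    }
}
```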

First, let's take a look at the shouldParkAfterFailedAcquire method

private static boolean shouldParkAfterFailedAcquire(Node pred, Node node) {

//Status of previous node

int ws = pred.waitStatus;

//If it is SIGNAL, return true directly

if (ws == Node.SIGNAL)

/*

* This node has already set status asking a release

* to signal it, so it can safely park.

*/

return true;

if (ws > 0) {

/*

* Predecessor was cancelled. Skip over predecessors and

* indicate retry.

*/

do {

//The predecessor is cancelled: walk toward the head until a non-cancelled node is found, then link the current node behind it

node.prev = pred = pred.prev;

} while (pred.waitStatus > 0);

pred.next = node;

} else {

/*

* waitStatus must be 0 or PROPAGATE. Indicate that we

* need a signal, but don't park yet. Caller will need to

* retry to make sure it cannot acquire before parking.

*/

//Set the previous node to SIGNAL

compareAndSetWaitStatus(pred, ws, Node.SIGNAL);

}

return false;

}

So shouldParkAfterFailedAcquire makes sure that the prev node of the current node is in the SIGNAL state. Only when that holds is parkAndCheckInterrupt executed; otherwise the method returns false and acquireQueued goes around its infinite loop again until it does hold.

The reason is that a node in the SIGNAL state promises to wake up its successor when it releases the lock. Only with that promise in place does the current node dare to suspend itself.

Then let's look at the parkAndCheckInterrupt method.

private final boolean parkAndCheckInterrupt() {

//Block current thread

LockSupport.park(this);

//Report whether the thread was interrupted while parked; this call also clears the interrupt flag

return Thread.interrupted();

}

At this point the thread suspends itself and waits for the thread at the head to wake it up. That completes the locking process of the nonfair lock.
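Two details of parkAndCheckInterrupt are easy to check in isolation: park() returns immediately when the thread is already interrupted, and Thread.interrupted() reports the flag and clears it. A minimal sketch, with ParkInterruptDemo as a made-up name:

```java
import java.util.concurrent.locks.LockSupport;

class ParkInterruptDemo {
    // Returns the results of two consecutive Thread.interrupted() calls
    // made after parking with the interrupt flag already set.
    static boolean[] parkWhileInterrupted() {
        Thread.currentThread().interrupt();    // set our own interrupt flag
        LockSupport.park();                    // does not block: the flag is set
        boolean first = Thread.interrupted();  // reads the flag and clears it
        boolean second = Thread.interrupted(); // flag is already cleared
        return new boolean[] { first, second };
    }

    public static void main(String[] args) {
        boolean[] r = parkWhileInterrupted();
        System.out.println(r[0] + " " + r[1]); // prints "true false"
    }
}
```

Clearing the flag matters inside acquireQueued: if it stayed set, the next park() in the loop would return at once and the thread would spin instead of blocking.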

This matches our earlier guess quite closely. Now that we understand the nonfair lock, what is different in the fair lock?

How does the fair lock achieve fairness?

Let's look at the differences between the two sources.

Difference 1

//Fair lock

final void lock() {

acquire(1);

}

//Nonfair lock

final void lock() {

//Fast path: try to CAS state from 0 to 1 right away

if (compareAndSetState(0, 1))

//On success, record the current thread as the lock owner

setExclusiveOwnerThread(Thread.currentThread());

else

acquire(1);

}

Comparing the two lock methods: with the nonfair lock, a newly arriving thread tries to take the lock immediately, without queuing at all. With the fair lock, it goes straight into the acquire method.

Difference 2

protected final boolean tryAcquire(int acquires) {

final Thread current = Thread.currentThread();

int c = getState();

if (c == 0) {

if (!hasQueuedPredecessors() &&

compareAndSetState(0, acquires)) {

setExclusiveOwnerThread(current);

return true;

}

}

else if (current == getExclusiveOwnerThread()) {

int nextc = c + acquires;

if (nextc < 0)

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;

}

return false;

}

The above is the tryAcquire method of the fair lock. The only difference from the nonfair version is the additional hasQueuedPredecessors() check. Let's see what this method does.

hasQueuedPredecessors

public final boolean hasQueuedPredecessors() {

// The correctness of this depends on head being initialized

// before tail and on head.next being accurate if the current

// thread is first in queue.

//Determine whether any thread has been waiting in the queue longer than the current thread

Node t = tail; // Read fields in reverse initialization order

Node h = head;

Node s;

//If this returns false, the caller goes on to CAS for the lock; otherwise it queues up

return h != t &&

((s = h.next) == null || s.thread != Thread.currentThread());

}

This method checks whether some thread is queued ahead of the current thread. If it returns true, there is such a thread and the current thread queues up obediently.

If it returns false, nobody is ahead, and the thread goes straight to the CAS to grab the lock.

Let's analyze the expression.

h != t && ((s = h.next) == null || s.thread != Thread.currentThread());

When is h != t true? (If h == t, the queue is either uninitialized or contains only the head node, so nobody is waiting and the method returns false.)

- Case 1: head is null and tail is not null

- Case 2: head is not null and tail is null

- Case 3: both head and tail are not null and they are not equal

The enq method sets head before tail, so case 1 cannot occur.

Then come to the next step

((s = h.next) == null || s.thread != Thread.currentThread());

Case 2: another thread is inside enq and has just executed compareAndSetHead(new Node()), but has not yet assigned tail. Here (s = h.next) == null is true, so the expression short-circuits and the method returns true: another thread is in the middle of enqueuing and is ahead of us.

Case 3: the queue has been initialized. Here (s = h.next) == null is normally false, so the method goes on to check whether the current thread owns the node right after the head. Note that s = h.next was already assigned in the first half of the expression. If s.thread != Thread.currentThread(), the current thread is not first in line, so the method returns true and the thread queues up.

As you can see, the (s = h.next) == null check is what separates case 2 from case 3 above; the code is just so terse that it is hard to read.

To sum up, the fair lock uses hasQueuedPredecessors to check whether any thread is queued ahead, instead of grabbing the lock right away like the nonfair lock.

Note that the difference between the nonfair and the fair lock only exists before a thread enters the queue. Once a thread is in the queue, it has to wait its turn in both modes.
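hasQueuedPredecessors() is a public method of AQS, so the check can be tried on a small custom synchronizer. The following is a sketch of a fair, non-reentrant lock; FairSyncSketch and observe are hypothetical names, and the reentrant branch of the real FairSync is left out to keep it short.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

class FairSyncSketch extends AbstractQueuedSynchronizer {
    @Override
    protected boolean tryAcquire(int acquires) {
        // Only CAS for the lock when nobody is queued ahead of us
        if (getState() == 0 && !hasQueuedPredecessors()
                && compareAndSetState(0, acquires)) {
            setExclusiveOwnerThread(Thread.currentThread());
            return true;
        }
        return false;
    }

    @Override
    protected boolean tryRelease(int releases) {
        if (Thread.currentThread() != getExclusiveOwnerThread())
            throw new IllegalMonitorStateException();
        setExclusiveOwnerThread(null);
        setState(0);
        return true;
    }

    // Returns hasQueuedPredecessors() for the calling thread
    // {before any contention, while another thread is queued}.
    static boolean[] observe() {
        FairSyncSketch sync = new FairSyncSketch();
        boolean before = sync.hasQueuedPredecessors(); // queue not initialized yet
        sync.acquire(1);
        Thread waiter = new Thread(() -> {
            sync.acquire(1); // queues up behind the holder
            sync.release(1);
        });
        waiter.start();
        while (!sync.hasQueuedThreads())
            Thread.yield();
        boolean during = sync.hasQueuedPredecessors(); // the waiter is ahead of us
        sync.release(1);
        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new boolean[] { before, during };
    }
}
```

While the waiter is queued, a new tryAcquire from the calling thread would be refused even if state were 0, which is exactly the behaviour the nonfair lock skips.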

Summarize the locking process

When a thread fails to acquire the lock, it enters the AQS queue. The first time this happens, the queue initializes a head node whose thread field is null. The node of thread1, the first thread to join the queue, is linked behind that head node, and thread1 then parks itself. In other words, every node except the head parks its thread. After the thread holding the lock releases it, it wakes up the first node behind the head, which here is thread1. Once thread1 obtains the lock, it makes its own node the head and sets the node's thread to null: the owner was already recorded by setExclusiveOwnerThread(current), so the node no longer needs to hold the thread.

Apart from the initial dummy head, every head node belongs to a thread that has obtained the lock.

Unlock source code

Unlocking is relatively simple

public void unlock() {

sync.release(1);

}

public final boolean release(int arg) {

if (tryRelease(arg)) {

Node h = head;

if (h != null && h.waitStatus != 0)

unparkSuccessor(h);

return true;

}

return false;

}

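The release method first updates state through tryRelease. If the lock is now completely free and the head's waitStatus is non-zero (meaning a successor asked to be signalled), it wakes up the next node in the queue.

Let's look at the tryRelease method first. Unlike what we guessed, it uses a plain setState instead of a CAS, because only the thread that owns the lock can get this far.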

protected final boolean tryRelease(int releases) {

int c = getState() - releases;

//Only threads with locks can be unlocked

if (Thread.currentThread() != getExclusiveOwnerThread())

throw new IllegalMonitorStateException();

boolean free = false;

//c == 0 means the lock is fully released; a reentrant lock must be unlocked as many times as it was locked

if (c == 0) {

free = true;

setExclusiveOwnerThread(null);

}

setState(c);

return free;

}
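Both rules in tryRelease can be observed through the public API: the lock stays held until the unlock count matches the lock count, and a thread that does not own the lock gets an IllegalMonitorStateException. A short sketch, with UnlockBalanceDemo as an illustrative name:

```java
import java.util.concurrent.locks.ReentrantLock;

class UnlockBalanceDemo {
    // isLocked() after the first and after the second unlock of a doubly held lock.
    static boolean[] lockedStates() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        lock.lock();
        lock.unlock();
        boolean afterOne = lock.isLocked(); // state is still 1
        lock.unlock();
        boolean afterTwo = lock.isLocked(); // state is 0, owner cleared
        return new boolean[] { afterOne, afterTwo };
    }

    // True if unlocking a lock we do not hold is rejected.
    static boolean unlockWithoutOwnershipThrows() {
        try {
            new ReentrantLock().unlock();
            return false;
        } catch (IllegalMonitorStateException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        boolean[] s = lockedStates();
        System.out.println(s[0] + " " + s[1] + " " + unlockWithoutOwnershipThrows());
    }
}
```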

Let's see how to wake up the next thread

private void unparkSuccessor(Node node) {

/*

* If status is negative (i.e., possibly needing signal) try

* to clear in anticipation of signalling. It is OK if this

* fails or if status is changed by waiting thread.

*/

//waitStatus values seen here: 0 = initial state, -1 = SIGNAL, 1 = CANCELLED

int ws = node.waitStatus;

if (ws < 0)

//Reset this node's waitStatus to the initial state 0

compareAndSetWaitStatus(node, ws, 0);

/*

* Thread to unpark is held in successor, which is normally

* just the next node. But if cancelled or apparently null,

* traverse backwards from tail to find the actual

* non-cancelled successor.

*/

Node s = node.next;

if (s == null || s.waitStatus > 0) {

s = null;

//Walk backward from the tail and keep the non-cancelled node (waitStatus <= 0) closest to the head; that is the one to wake up

for (Node t = tail; t != null && t != node; t = t.prev)

if (t.waitStatus <= 0)

s = t;

}

//If a successor was found, wake up its thread; once it acquires the lock it makes its own node the new head

if (s != null)

LockSupport.unpark(s.thread);

}
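One property of LockSupport makes this wake-up safe even when the successor has not actually parked yet: unpark() hands the target thread a permit, and a later park() consumes it and returns immediately. A minimal sketch (ParkPermitDemo is a made-up name):

```java
import java.util.concurrent.locks.LockSupport;

class ParkPermitDemo {
    // Calls unpark before park on the same thread; returns once park has returned.
    static boolean unparkThenPark() {
        LockSupport.unpark(Thread.currentThread()); // permit becomes available
        LockSupport.park();                         // consumes the permit, no blocking
        return true;
    }

    public static void main(String[] args) {
        System.out.println("park returned: " + unparkThenPark());
    }
}
```

So if the releasing thread calls unpark while the waiter is still between shouldParkAfterFailedAcquire and park, the wake-up is not lost.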

Here's a question. Why is the queue traversed from back to front?

It is written this way for a reason: traversing from front to back can go wrong. What could go wrong?

Consider the following scenario.

Three threads A, B and C are contending for the lock.

Thread A's node is currently at the end of the wait queue, that is, it is the tail node.

1. Thread B is enqueuing in the enq method and has executed compareAndSetTail(t, node), but has not executed t.next = node yet.

2. Thread C then executes both compareAndSetTail(t, node) and t.next = node. What does the queue look like now?

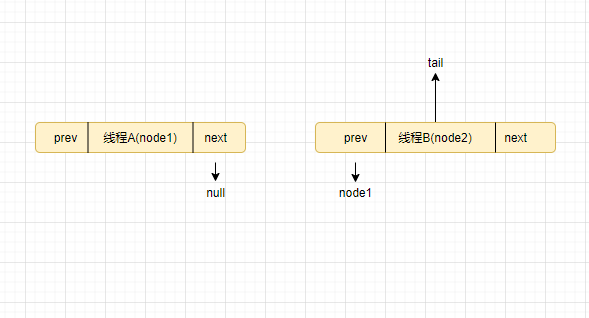

When the node of thread B has joined the queue but t.next = node has not been executed, the tail already points to node2, the node of thread B, while the next pointer of node1 has not been set yet.

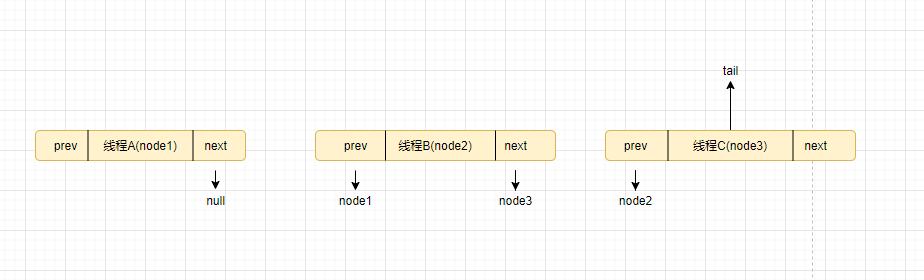

Now the CPU time slice goes to thread C. Thread C enqueues its node and also executes t.next = node, so node2 is linked forward to the node of thread C.

Do you see the problem? The next pointer of node1 is still null, so a traversal from front to back would find the queue broken at node1.

The prev pointer of every node, on the other hand, is assigned before the CAS that publishes the node as the tail, so prev links always lead back to the head. That is why the traversal goes from back to front.
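The half-linked state from this scenario can be reproduced deterministically in a single thread by simply leaving out the one assignment that thread B has not reached yet. HalfLinkedQueueDemo and its Node class are simplified stand-ins for illustration, not the AQS classes.

```java
class HalfLinkedQueueDemo {
    static final class Node {
        final String name;
        Node prev;
        Node next;
        Node(String name) { this.name = name; }
    }

    // Returns {nodes reachable from head via next, nodes reachable from tail via prev}.
    static int[] reachable() {
        Node head = new Node("head");
        Node a = new Node("A");
        a.prev = head;
        head.next = a;     // A is fully linked and is the tail

        Node b = new Node("B");
        b.prev = a;        // B has set prev and won the tail CAS ...
        Node tail = b;     // ... but a.next = b has NOT been executed yet

        Node c = new Node("C");
        c.prev = tail;     // C enqueues completely behind B
        tail = c;
        b.next = c;

        int forward = 0;
        for (Node n = head.next; n != null; n = n.next)
            forward++;     // stops at A because a.next is still null
        int backward = 0;
        for (Node n = tail; n != head; n = n.prev)
            backward++;    // finds C, B and A
        return new int[] { forward, backward };
    }

    public static void main(String[] args) {
        int[] r = reachable();
        System.out.println("forward=" + r[0] + " backward=" + r[1]); // forward=1 backward=3
    }
}
```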

Ending

If I have misunderstood anything, please point it out. If you have any questions, let's discuss them together~