I learned the basics of Node.js a few days ago. Today I'll cover web application development, how to debug during development, and how to deploy online.

Web application development

HTTP module

We can use Node.js's built-in http module to build the simplest possible HTTP service:

const http = require("http");

http
  .createServer((req, res) => {
    res.end("Hello YK!!!");
  })
  .listen(3000, () => {
    console.log("App running at http://127.0.0.1:3000/");
  });

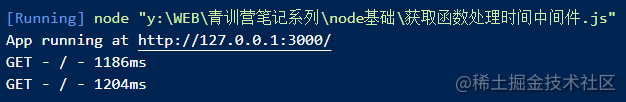

console output

Web page access (that is, send a GET request)

Koa

In real projects you will usually use a framework such as Express or Koa. Today, let's look at Koa.

Introduction

Koa: a next-generation web development framework for the Node.js platform

Install: npm i koa

Koa provides only a lightweight, elegant function library and bundles no middleware in its core, which makes it easy to write web applications.

const Koa = require("koa");

const app = new Koa();

app.use(async (ctx) => {
  ctx.body = "Hello YK!!!! by Koa";
});

app.listen(3000, () => {
  console.log("App running at http://127.0.0.1:3000/");
});

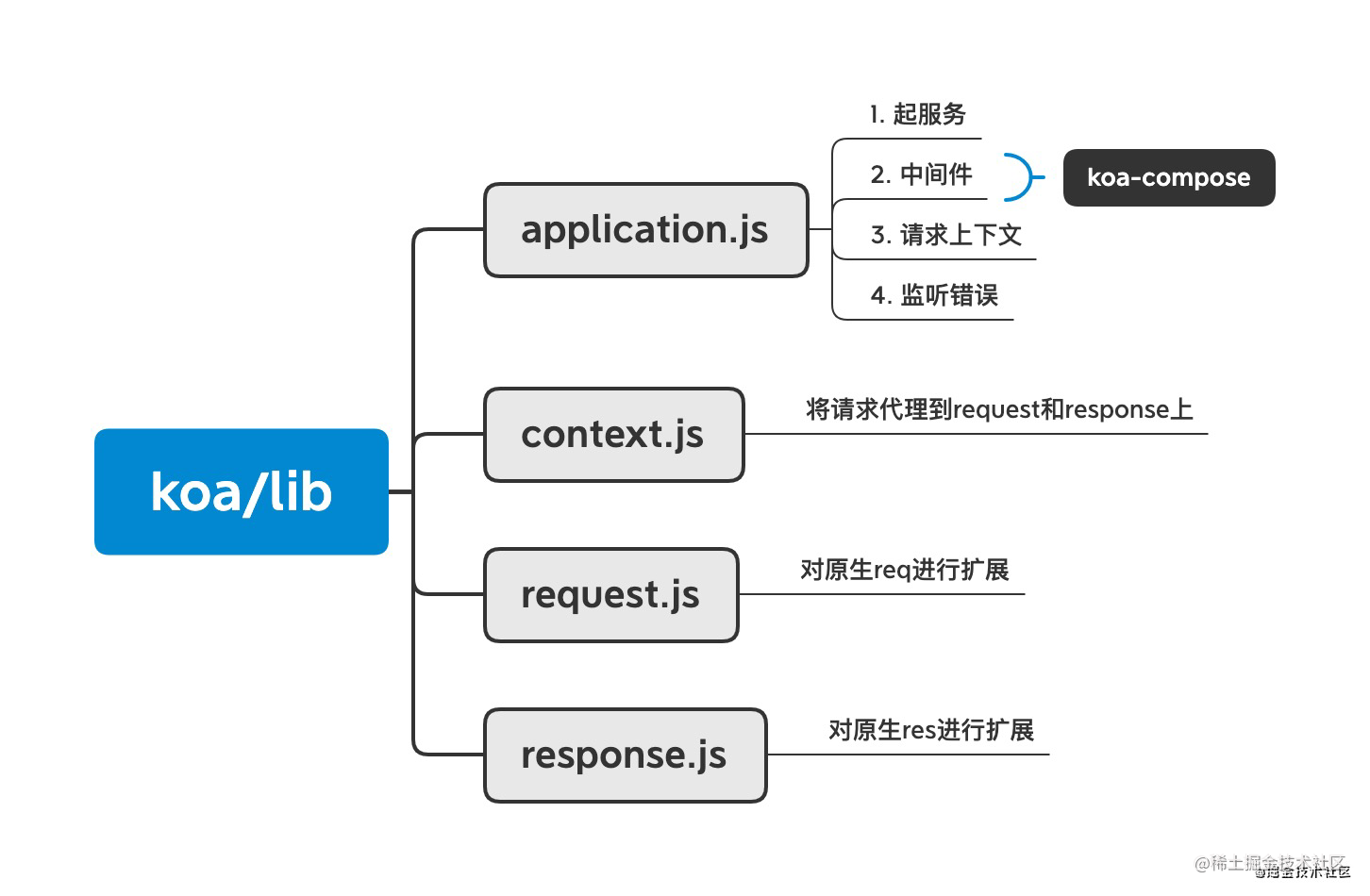

Koa execution process

- Service startup
  - Instantiate the application
  - Register middleware
  - Create the service and listen on a port
- Accept / process a request
  - Get the request's req and res objects
  - Wrap req -> request and res -> response
  - Combine request & response -> context
  - Execute the middleware
  - Output the content set on ctx.body

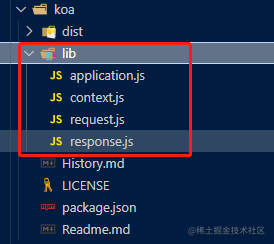
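The steps above can be sketched in a few lines. This is only an illustration of the flow, not Koa's actual source: the MiniKoa class and the shape of its ctx object are assumptions made for the example, and only the use / callback / listen method names mirror Koa's public API.

```javascript
const http = require("http");

// Hypothetical MiniKoa class: a sketch of the startup and request flow only.
class MiniKoa {
  constructor() {
    this.middlewares = []; // "instantiate the application"
  }

  use(fn) {
    this.middlewares.push(fn); // "register middleware"
    return this;
  }

  // Build the (req, res) handler that Node's http server will call.
  callback() {
    return async (req, res) => {
      // Wrap the raw req / res into a single context object (simplified).
      const ctx = { req, res, method: req.method, url: req.url, body: undefined };

      // Execute the middleware chain in registration order.
      const dispatch = (i) => {
        const fn = this.middlewares[i];
        if (!fn) return Promise.resolve();
        return Promise.resolve(fn(ctx, () => dispatch(i + 1)));
      };
      await dispatch(0);

      // Output whatever the middleware put on ctx.body.
      res.end(String(ctx.body ?? ""));
    };
  }

  // "Create the service and listen on a port"
  listen(...args) {
    return http.createServer(this.callback()).listen(...args);
  }
}
```

Usage would look the same as the Koa example: create an instance, call use() with an async function that sets ctx.body, then call listen(3000).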

Koa's source code is quite approachable, and there are only four files, so you can read through it yourself.

Middleware

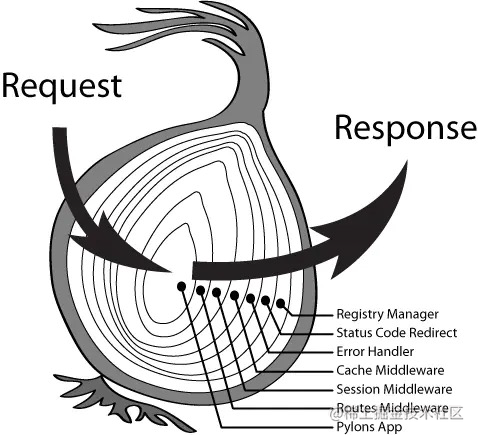

A Koa application is an object containing a set of middleware functions, which are organized and executed according to the onion model.

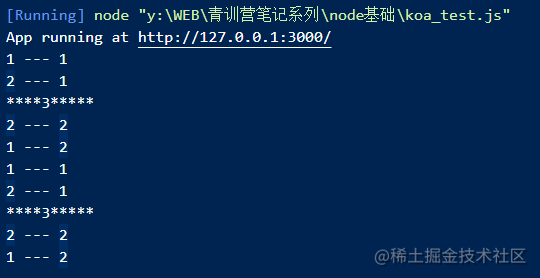

Execution order of middleware (the output order is 1 2 3 2 1):

const Koa = require("koa");

const app = new Koa();

app.use(async (ctx, next) => {
  console.log("1 --- 1");
  await next();
  console.log("1 --- 2");
});

app.use(async (ctx, next) => {
  console.log("2 --- 1");
  await next();
  console.log("2 --- 2");
});

app.use(async (ctx, next) => {
  console.log("****3*****");
  ctx.body = "Sequential demonstration of Middleware";
});

app.listen(3000, () => {
  console.log("App running at http://127.0.0.1:3000/");
});

Output order: 1 2 3 2 1, that is, "1 --- 1", "2 --- 1", "****3*****", "2 --- 2", "1 --- 2"

Let's write a simple implementation of the middleware mechanism ourselves.

Simple implementation of middleware

const fn1 = async (ctx, next) => {
  console.log("before fn1");
  ctx.name = "YKjun";
  await next();
  console.log("after fn1");
};

const fn2 = async (ctx, next) => {
  console.log("before fn2");
  ctx.age = 18;
  await next();
  console.log("after fn2");
};

const fn3 = async (ctx, next) => {
  console.log(ctx);
  console.log("in fn3...");
};

// Run the middlewares in order; each receives ctx and a next() that starts the following one
const compose = (middlewares, ctx) => {
  const dispatch = (i) => {
    const fn = middlewares[i];
    // Past the last middleware there is nothing left to run, so next() resolves immediately
    if (!fn) return Promise.resolve();
    return Promise.resolve(fn(ctx, () => dispatch(i + 1)));
  };
  return dispatch(0);
};

compose([fn1, fn2, fn3], {});

Based on how middleware works, how can we measure the execution time of a request handler?

const Koa = require("koa");

const app = new Koa();

// logger Middleware
app.use(async (ctx, next) => {
  await next();
  const rt = ctx.response.get("X-Response-Time");
  if (ctx.url !== "/favicon.ico") {
    console.log(`${ctx.method} - ${ctx.url} - ${rt}`);
  }
});

// x-response-time Middleware
app.use(async (ctx, next) => {
  const start = Date.now();
  await next();
  const ms = Date.now() - start;
  ctx.set("X-Response-Time", `${ms}ms`);
});

app.use(async (ctx) => {
  let sum = 0;
  for (let i = 0; i < 1e9; i++) {
    sum += i;
  }
  ctx.body = `sum=${sum}`;
});

app.listen(3000, () => {
  console.log("App running at http://127.0.0.1:3000/");
});

As you can see, the whole process runs from top to bottom and then back from bottom to top.
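To make that flow visible without starting a server, here is a minimal sketch that records each step in an array. The compose helper and the logger, timer and handler names are assumptions for illustration; they imitate the Koa example above but do not use Koa itself.

```javascript
// Records the order in which each middleware is entered and left.
const order = [];

// Hand-written compose (an assumption for this sketch, not Koa's own code).
const compose = (middlewares) => (ctx) => {
  const dispatch = (i) => {
    const fn = middlewares[i];
    if (!fn) return Promise.resolve();
    return Promise.resolve(fn(ctx, () => dispatch(i + 1)));
  };
  return dispatch(0);
};

const logger = async (ctx, next) => {
  order.push("logger in");
  await next();
  order.push("logger out"); // runs last: ctx.ms is already set here
};

const timer = async (ctx, next) => {
  order.push("timer in");
  const start = Date.now();
  await next();
  ctx.ms = Date.now() - start; // time spent in everything downstream
  order.push("timer out");
};

const handler = async (ctx) => {
  order.push("handler");
  ctx.body = "done";
};

const ctx = {};
const finished = compose([logger, timer, handler])(ctx).then(() => {
  // prints "logger in -> timer in -> handler -> timer out -> logger out"
  console.log(order.join(" -> "));
  return order;
});
```

The logger is registered first so that its code after next() runs last, which is why it can read the value the timer has just written.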

Common middleware

- koa-router: route resolution

- koa-body: request body parsing

- koa-logger: logging

- koa-views: template rendering

- koa2-cors: cross-origin (CORS) handling

- koa-session: session handling

- koa-helmet: security protection

There are many Koa middleware packages of uneven quality, so they need to be chosen carefully and combined efficiently.

Front end framework based on Koa

Open source: ThinkJS / Egg

Internal: Turbo, Era, Gulu

What do they add?

- Extensions to Koa objects such as response / request / context / application

- A library of common Koa middleware

- Internal service support

- Process management

- Scaffolding

- ...

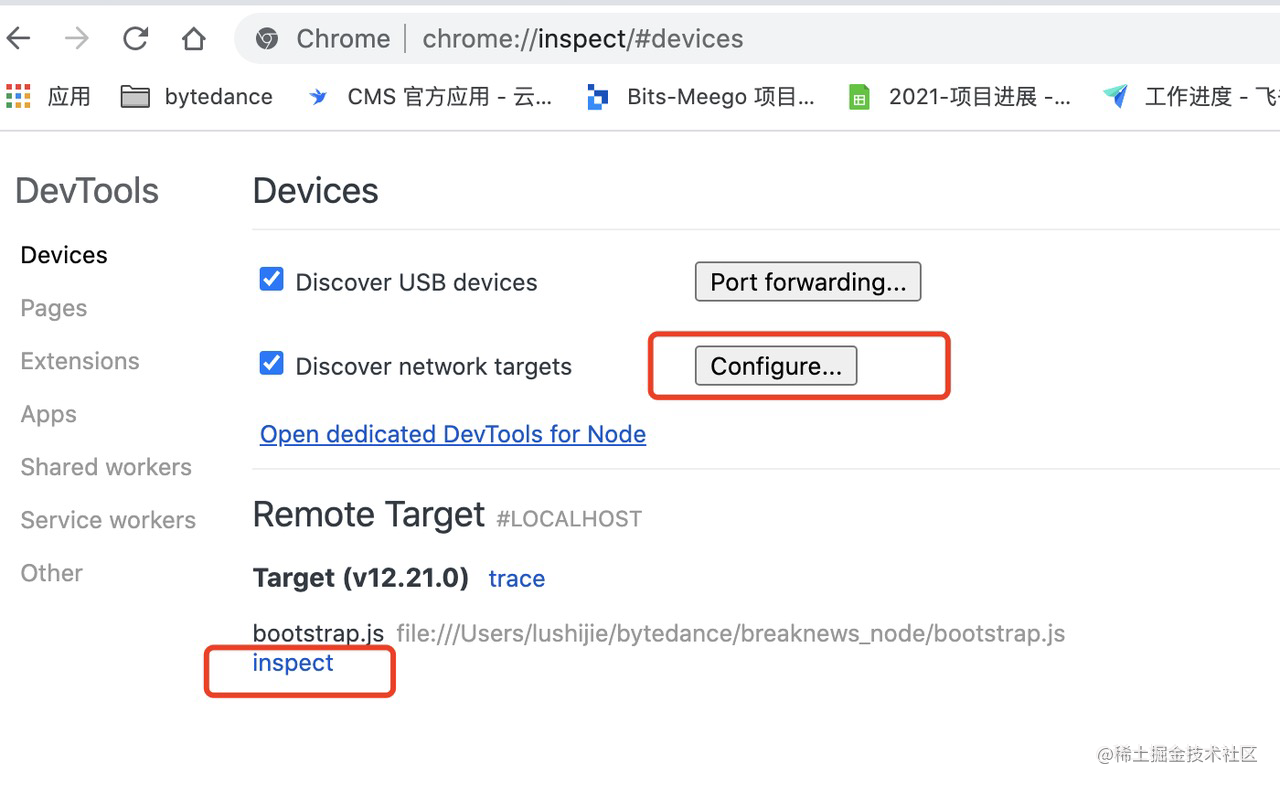

Debugging

Breakpoint debugging

Use Node's --inspect flag to debug a given file with breakpoints

node --inspect=0.0.0.0:9229 bootstrap.js

You can also install the ndb package

npm install ndb -g
ndb node bootstrap.js

Use VS Code for debugging (my favorite)

Create a JSON configuration file (launch.json) before using it

Debug at a breakpoint

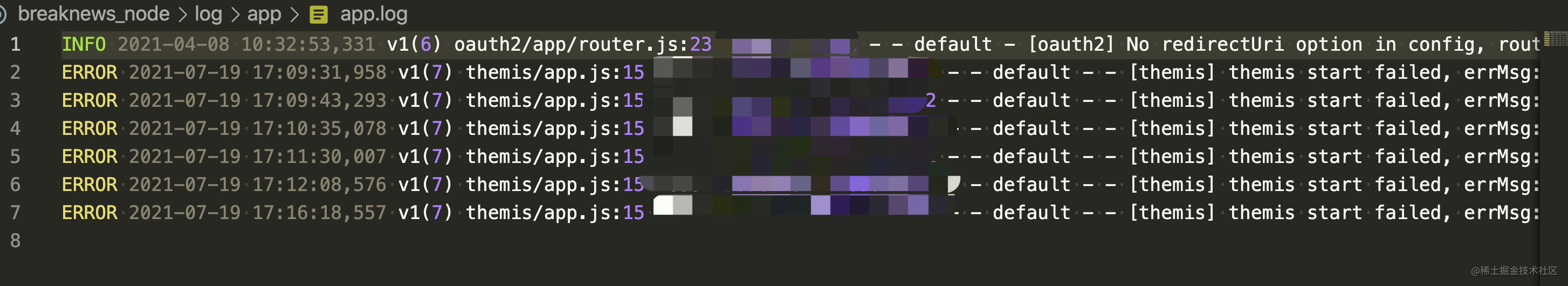

Log debugging

- SDK reporting

- toutiao.fe.app.2021-07-28.log

Online deployment

Node.js keeps the single-threaded model that JavaScript has in the browser (one thread per process).

Although Node.js is single-threaded, it is event-driven, asynchronous and non-blocking, which makes it suitable for high-concurrency scenarios while avoiding the resource overhead of creating threads and switching context between them.

Disadvantages:

- It cannot make use of multi-core CPUs

- An uncaught error causes the whole application to exit, so robustness is poor

- Heavy computation occupies the CPU, and nothing else can run in the meantime

Using multi-core CPU

const http = require("http");

http
  .createServer((req, res) => {
    for (let i = 0; i < 1e7; i++) {} // Single core CPU
    res.end(`handled by process.${process.pid}`);
  })
  .listen(8080);

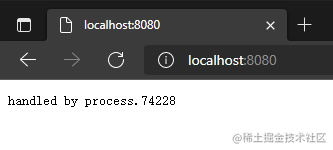

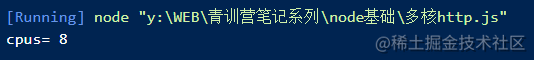

Execute the simplest HTTP Server

This service runs on a single CPU core

How can we use multiple CPU cores?

Node.js provides the cluster and child_process modules

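const cluster = require("cluster");
const os = require("os");

// Note: cluster.isMaster is named cluster.isPrimary in Node.js 16+
if (cluster.isMaster) {
  const cpuLen = os.cpus().length;
  console.log("cpus=", cpuLen);
  // Fork one worker per CPU core
  for (let i = 0; i < cpuLen; i++) {
    cluster.fork();
  }
} else {
  // Each worker runs the single-core HTTP server shown above
  require("./Mononuclear http.js");
}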

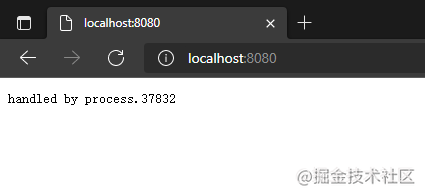

Performance comparison

Benchmark with ab for 10 seconds at a concurrency of 200:

ab -c200 -t10 http://localhost:8080/

Single process 1828

Multiprocess 6938

Multi process robustness

A single process exits as soon as the program hits an unhandled error. Let's look at how multiple processes improve robustness. The cluster module emits the following events:

- fork: triggered after a worker process has been forked.

- online: after a worker process has been forked, it sends an online message to the main process. This event is triggered when the main process receives that message.

- listening: after a worker process calls listen() (sharing the server-side socket), it sends a listening message to the main process. This event is triggered when the main process receives that message.

- disconnect: triggered after the IPC channel between the main process and a worker process is disconnected.

- exit: triggered when a worker process exits.

- setup: triggered after cluster.setupMaster() is executed.
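As a rough illustration of these events, the sketch below attaches one listener per event and logs what happened. The watchWorkers helper and its log format are assumptions for this example. It is demonstrated here on a plain EventEmitter with fake worker objects so it can run on its own; in a real main process you would pass require("cluster") instead.

```javascript
const { EventEmitter } = require("events");

// Hypothetical helper: log the worker lifecycle events described above.
// clusterLike is anything that emits the same events as the cluster module.
const watchWorkers = (clusterLike, log = console.log) => {
  clusterLike.on("fork", (worker) => log(`fork: worker ${worker.id}`));
  clusterLike.on("online", (worker) => log(`online: worker ${worker.id}`));
  clusterLike.on("listening", (worker, address) =>
    log(`listening: worker ${worker.id} on port ${address.port}`)
  );
  clusterLike.on("disconnect", (worker) => log(`disconnect: worker ${worker.id}`));
  clusterLike.on("exit", (worker, code, signal) =>
    log(`exit: worker ${worker.id} (code=${code}, signal=${signal})`)
  );
};

// Simulated run with a stand-in emitter and a fake worker object.
const lines = [];
const fakeCluster = new EventEmitter();
watchWorkers(fakeCluster, (line) => lines.push(line));

fakeCluster.emit("fork", { id: 1 });
fakeCluster.emit("online", { id: 1 });
fakeCluster.emit("listening", { id: 1 }, { port: 8080 });
fakeCluster.emit("exit", { id: 1 }, 1, null);

console.log(lines.join("\n"));
```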

const http = require("http");
const numCPUs = require("os").cpus().length;
const cluster = require("cluster");

if (cluster.isMaster) {
  console.log("Master process id is", process.pid);
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork();
  }
  cluster.on("exit", function (worker, code, signal) {
    console.log("worker process died, id", worker.process.pid);
    cluster.fork(); // Immediately after exiting, fork a new process
  });
} else {
  const server = http.createServer();
  server.on("request", (req, res) => {
    res.writeHead(200);
    console.log(process.pid);
    res.end("hello world\n");
  });
  server.listen(8080);
}

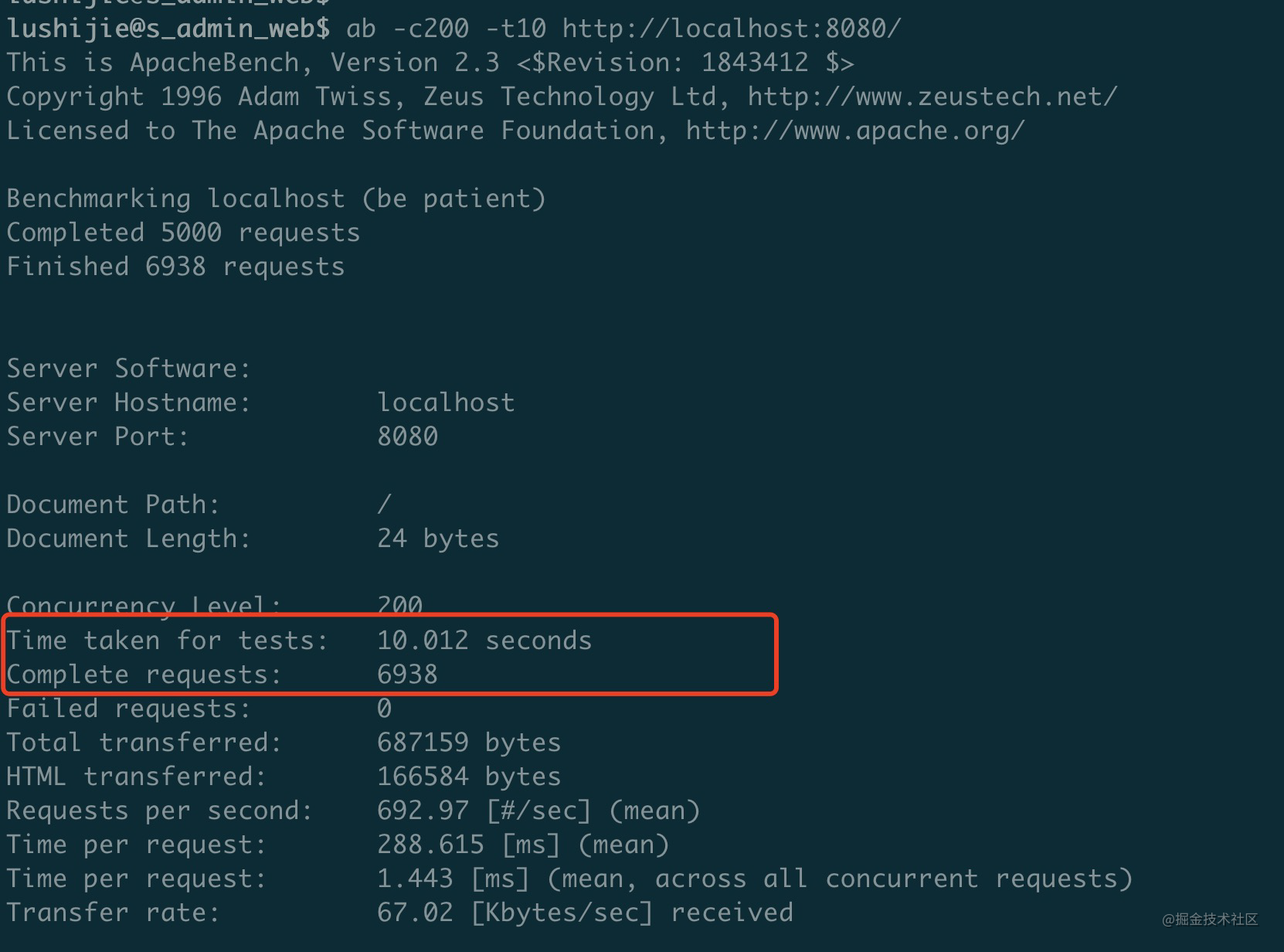

Process daemon

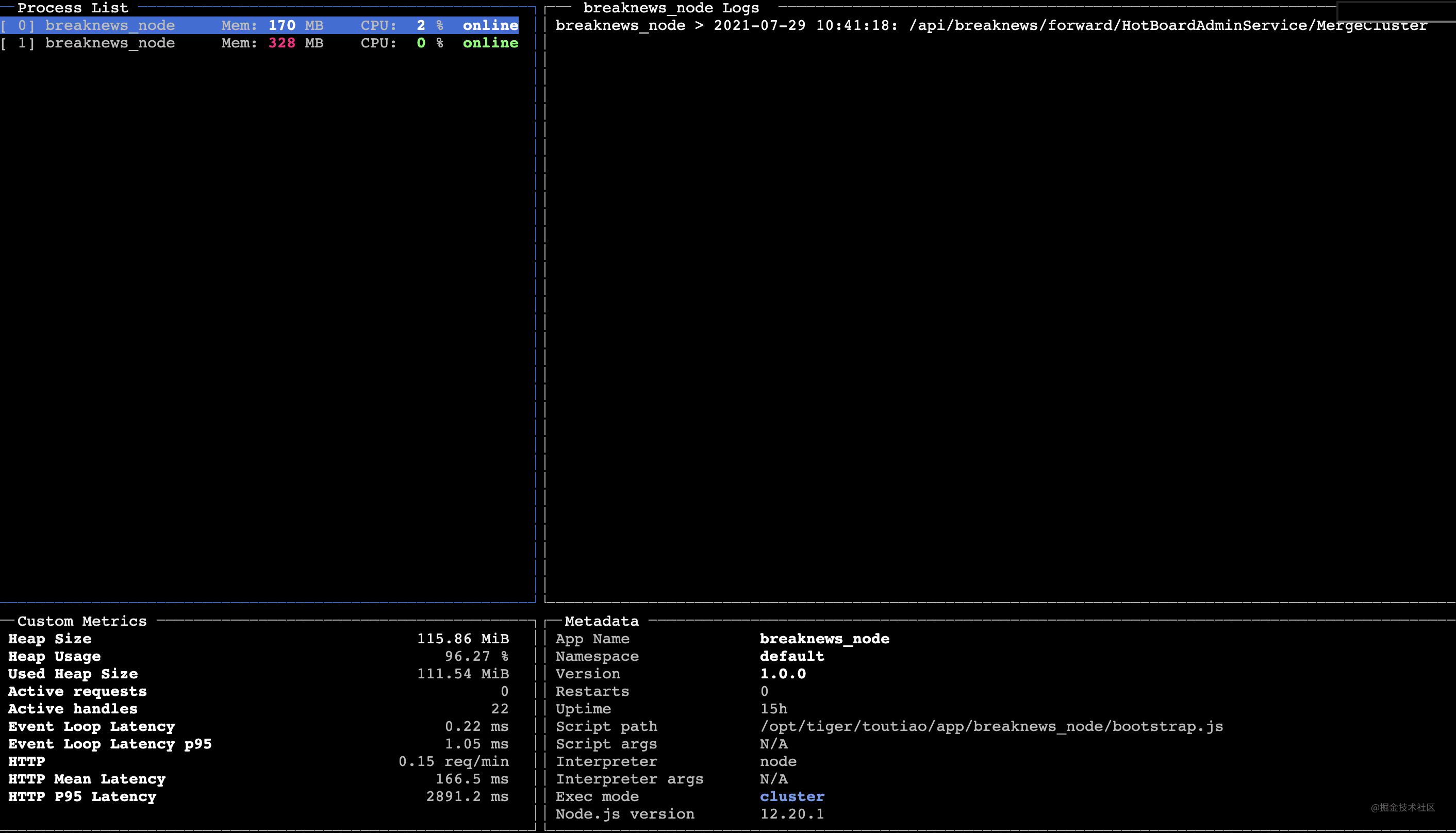

We don't have to manage all these processes by hand every time. A management tool can act as the process daemon for us.

What a Node.js process management tool provides:

- Multi-process support

- Automatic restart

- Load balancing

- Log viewing

- Performance monitoring

Use pm2 for process management and monitoring

Complex calculation

What if a complex calculation in Node.js occupies the CPU and the service gets stuck?

const http = require("http");

const complexCalc = () => {
  console.time("Calculation time");
  let sum = 0;
  for (let i = 0; i < 1e10; i++) {
    sum += i;
  }
  console.timeEnd("Calculation time");
  return sum;
};

const server = http.createServer();

server.on("request", (req, res) => {
  if (req.url === "/compute") {
    const sum = complexCalc();
    res.end(`sum is ${sum} \n`);
  } else {
    res.end("success");
  }
});

server.listen(8000);

For example, let's have a cyclic calculation to simulate complex calculation

The calculation takes more than ten seconds

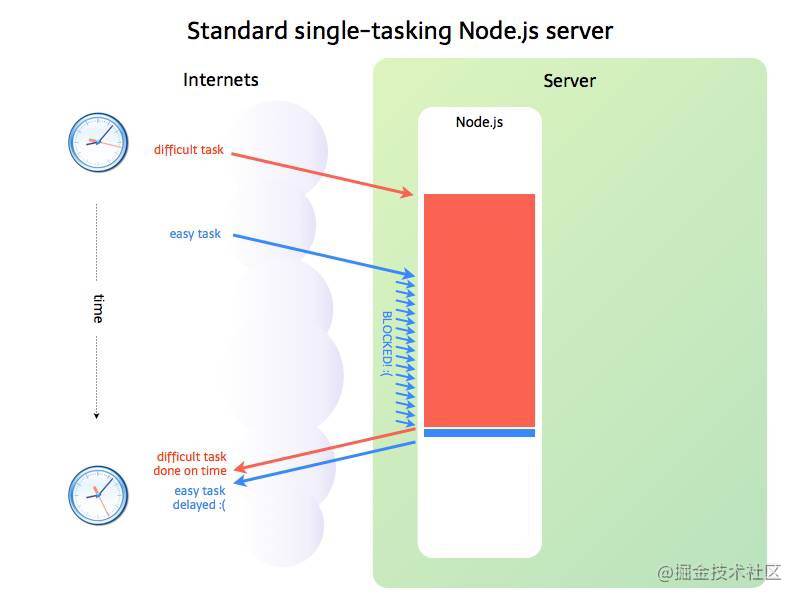

If we are requesting something else at this time, such as 127.0.0.1:8000/ping, we can't output the result immediately, but there will be blocking. We won't output success until the complex calculation is completed

Complex calculation will take a long time for CPU calculation, and simple tasks will wait for complex tasks to be executed after calculation

You can consider using multiple processes to process complex calculations into child processes without occupying the main process. After complex calculations are completed, send the results to the parent process, and then you can get the results

Multi process and process communication

Complex computing subprocess

Main process

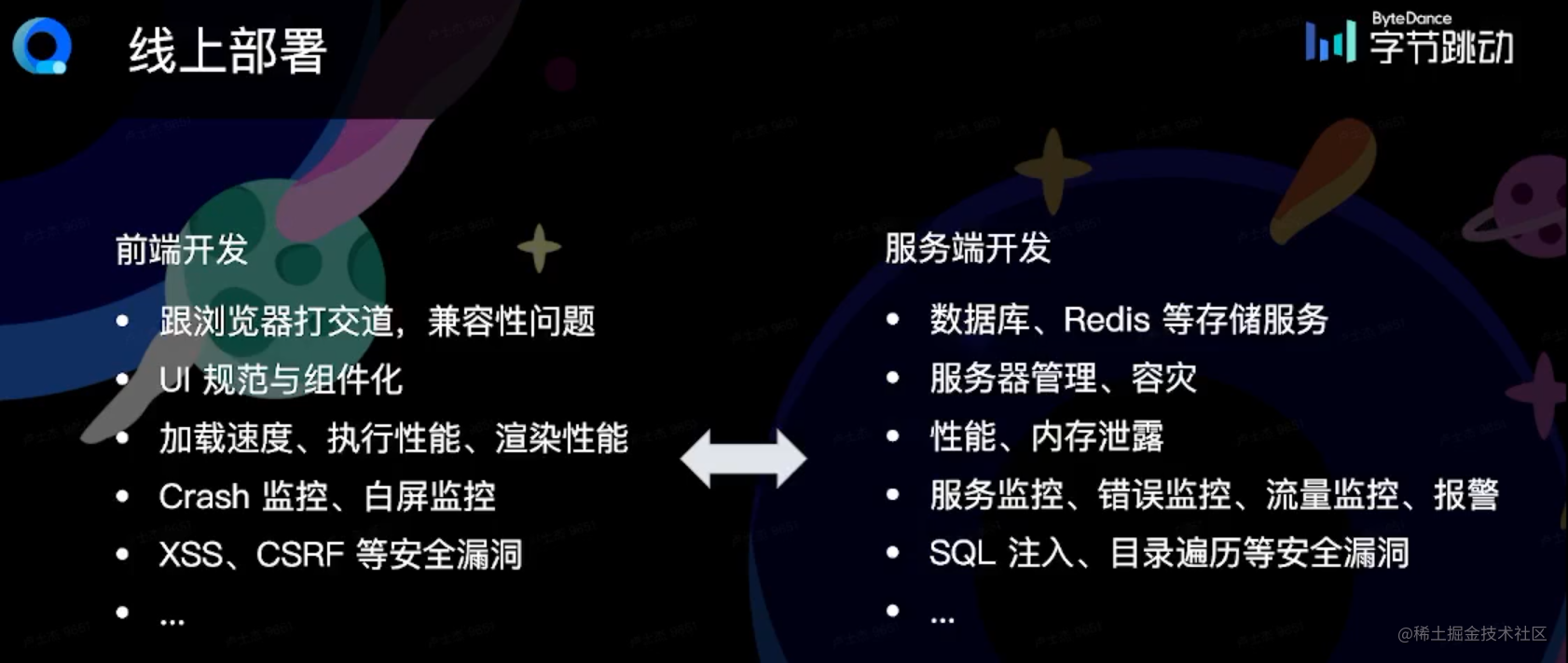

Comparison between front-end development and back-end development

Finally, let's compare the concerns of front-end and back-end development~

Front end development

- Dealing with browsers, compatibility issues

- UI specification and componentization

- Loading speed, execution performance, rendering performance

- Crash monitoring, white screen monitoring

- XSS, CSRF and other security vulnerabilities

Server development

- Database, Redis and other storage services

- Server management and disaster recovery

- Performance and memory leaks

- Service monitoring, error monitoring, traffic monitoring and alerting

- Security vulnerabilities such as SQL injection and directory traversal