csdn online learning course, course consultation and Q & A and new course information: QQ communication group: 422901085 for course discussion

android cross process communication practice video course (add group to get preferential)

Actual development of Qianlima android framework - transaction failed caused by oneway driven by binder

First, let's look at the error:

06-15 12:10:36.686 31395 31507 W System.err: android.os.DeadObjectException: Transaction failed on small parcel; remote process probably died 06-15 12:10:36.686 31395 31512 E JavaBinder: !!! FAILED BINDER TRANSACTION !!! (parcel size = 112) 06-15 12:10:36.686 31395 31507 W System.err: at android.os.BinderProxy.transactNative(Native Method) 06-15 12:10:36.687 31395 31507 W System.err: at android.os.BinderProxy.transact(BinderProxy.java:511) 06-15 12:10:36.688 31395 31507 W System.err: at com.example.servicedemo.IStudentInterface1$Stub$Proxy.addStudentId(IStudentInterface1.java:126) 06-15 12:10:36.688 31395 31507 W System.err: at com.example.servicedemo.MainActivity$2$1$1.run(MainActivity.java:71) 06-15 12:10:36.688 31395 31507 W System.err: at java.lang.Thread.run(Thread.java:919) 06-15 12:10:36.689 31395 31512 W System.err: android.os.DeadObjectException: Transaction failed on small parcel; remote process probably died 06-15 12:10:36.655 0 0 I binder : 31395:31512 transaction failed 29201/-28, size 112-0 line 3132 06-15 12:10:36.657 0 0 I binder : 31395:31509 transaction failed 29201/-28, size 112-0 line 3132 06-15 12:10:36.662 0 0 I binder : 31395:31514 transaction failed 29201/-28, size 112-0 line 3132 06-15 12:10:36.662 0 0 I binder : 31395:31513 transaction failed 29201/-28, size 112-0 line 3132 06-15 12:10:36.689 31395 31509 E JavaBinder: !!! FAILED BINDER TRANSACTION !!! (parcel size = 112) 06-15 12:10:36.689 31395 31512 W System.err: at android.os.BinderProxy.transactNative(Native Method) 06-15 12:10:36.689 31395 31512 W System.err: at android.os.BinderProxy.transact(BinderProxy.java:511) 06-15 12:10:36.691 31395 31512 W System.err: at com.example.servicedemo.IStudentInterface1$Stub$Proxy.addStudentId(IStudentInterface1.java:126) 06-15 12:10:36.691 31395 31512 W System.err: at com.example.servicedemo.MainActivity$2$1$1.run(MainActivity.java:71) 06-15 12:10:36.692 31395 31512 W System.err: at java.lang.Thread.run(Thread.java:919)

Let's blink:

06-15 12:10:36.689 31395 31512 W System.err: android.os.DeadObjectException: Transaction failed on small parcel; remote process probably died

However, we have seen that the target process is not dead at all, so the target process written in the log here may be dead, which is wrong at all, so we can only continue to analyze the log

Here we remind you that if there is no obvious error in the user space when the binder cross process transmission is abnormal, and the error itself is the error returned by the binder driver, the next log to be analyzed should be the kernel log. By the way, here's a reminder to grab the kernel log:

adb logcat - b all > 1.txt here - b all means to grab all logs: main, events, system and kernel, while the normal adb logcat only grabs the main logs

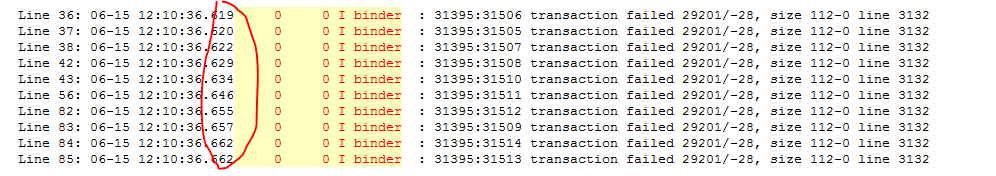

Generally, the process number displayed in the kernel log is 0, so in W system.err: android.os.deadobjectexception: transaction failed on small parcel; Around the remote process probably did, we analyzed the kernel log and printed it as follows:

06-15 12:10:36.655 0 0 I binder : 31395:31512 transaction failed 29201/-28, size 112-0 line 3132 06-15 12:10:36.657 0 0 I binder : 31395:31509 transaction failed 29201/-28, size 112-0 line 3132 06-15 12:10:36.662 0 0 I binder : 31395:31514 transaction failed 29201/-28, size 112-0 line 3132 06-15 12:10:36.662 0 0 I binder : 31395:31513 transaction failed 29201/-28, size 112-0 line 3132

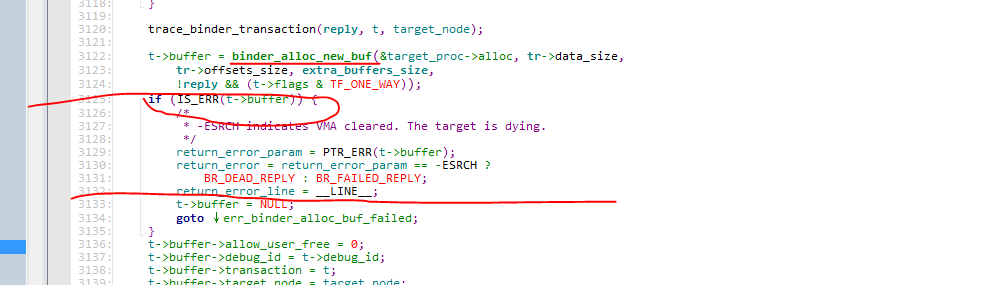

This is obviously printed by the binder driver in the kernel. Is the transaction failed also printed here, and the corresponding number of lines 3132 is also printed. Here, let's look at the code in line 3132:

It is obvious that the target process fails to apply for memory during the transaction. There may be several cases of memory failure:

1. It may be that the memory of the target process is full and cannot be applied

2. What other system errors are there

Then, based on the above doubts, look at the log and find:

There are many printing failures within 1ms. Is there any reason to doubt whether the client has initiated very, very frequent binder calls, and it should still be an asynchronous call, that is, oneway? Why? Because only oneway asynchronous calls are queued in the kernel binder driver, so if our client initiates oneway calls many times, the driver also executes them in turn and caches the unexecuted tasks to the queue. But let's think about it here. The unexecuted tasks enter the queue, That is, the memory applied only from the target process cannot be released. Here, the shared memory of the target process itself is 1M-8K, and the asynchronous limit is still half, that is, 500K, which means that if 5000 calls are initiated and each call exceeds 100 bytes, it is likely that exceptions cannot be seen in the applied memory. In the specific oneway case, the queue is cached and processed in turn. You can see the following code:

static bool binder_proc_transaction(struct binder_transaction *t,

struct binder_proc *proc,

struct binder_thread *thread)

{

struct binder_node *node = t->buffer->target_node;

struct binder_priority node_prio;

bool oneway = !!(t->flags & TF_ONE_WAY);

bool pending_async = false;

BUG_ON(!node);

binder_node_lock(node);

node_prio.prio = node->min_priority;

node_prio.sched_policy = node->sched_policy;

if (oneway) {//If it's oneway

BUG_ON(thread);

if (node->has_async_transaction) {

//For the first time, the async transaction has not been executed. Here, the async process needs to be executed, and it has not ended yet. Once again, there is a oneway call to the same node

pending_async = true;//If an async task is already executing, it needs to be set to allow the next execution delay

} else {

//First set in progress

node->has_async_transaction = 1;

}

}

binder_inner_proc_lock(proc);

//Generally, this exception will not occur if you ignore it

if (proc->is_dead || (thread && thread->is_dead)) {

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return false;

}

//First pending_async is not set to true, so it will look for threads if pending_async is set, there is no need to find the target thread

if (!thread && !pending_async)

thread = binder_select_thread_ilocked(proc);

if (thread) {

binder_transaction_priority(thread->task, t, node_prio,

node->inherit_rt);

binder_enqueue_thread_work_ilocked(thread, &t->work);

} else if (!pending_async) {

binder_enqueue_work_ilocked(&t->work, &proc->todo);

} else {

//If pending_ If async is set to true, work will not be executed, but async will be placed_ Todo queue backlog

binder_enqueue_work_ilocked(&t->work, &node->async_todo);

}

if (!pending_async)//pending_ If async is true, it will not wake up and execute

binder_wakeup_thread_ilocked(proc, thread, !oneway /* sync */);

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return true;

}

The comments are also very detailed. The summary is that once the same binder is used in oneway_ If the node has an async task being executed, the following tasks need to be cached in async_todo queue

So Async_ When will todo be taken out and executed?

case BC_FREE_BUFFER: {

binder_uintptr_t data_ptr;

struct binder_buffer *buffer;

if (get_user(data_ptr, (binder_uintptr_t __user *)ptr))

return -EFAULT;

ptr += sizeof(binder_uintptr_t);

buffer = binder_alloc_prepare_to_free(&proc->alloc,

data_ptr);

. . ellipsis

if (buffer->async_transaction && buffer->target_node) {

struct binder_node *buf_node;

struct binder_work *w;

buf_node = buffer->target_node;

binder_node_inner_lock(buf_node);

BUG_ON(!buf_node->has_async_transaction);

BUG_ON(buf_node->proc != proc);

//Remove async_todo

w = binder_dequeue_work_head_ilocked(

&buf_node->async_todo);

if (!w) {

//If async is found_ Has if there is no task in todo_ async_ Transaction becomes no task being executed

buf_node->has_async_transaction = 0;

} else {

binder_enqueue_work_ilocked(

w, &proc->todo);

binder_wakeup_proc_ilocked(proc);

}

binder_node_inner_unlock(buf_node);

}

trace_binder_transaction_buffer_release(buffer);

binder_transaction_buffer_release(proc, buffer, NULL);

binder_alloc_free_buf(&proc->alloc, buffer);

break;

}

That is, in free_ The buffer will be taken out from the async todo queue only when the communication is completed, which means that the next communication can be carried out only after the formal execution

Here we know the execution of oneway, which means that if we initiate very frequent calls in a short time, it is very easy to lead to the depletion of limited shared memory, so that the transaction failed. If this happens in the application, you may just say that you see a Remote exception. Your business may not have a very, very big impact. It's a big deal that your application crash es. However, if this exception occurs in the systemserver, it may be troublesome. The problem may be that a callback can't receive this, so troubleshooting is very troublesome, Therefore, the application of this course deliberately writes that frequent calls in oneway are used to simulate this error:

06-15 12:10:36.657 0 0 I binder : 31395:31509 transaction failed 29201/-28, size 112-0 line 3132

If you also find this error in the process of analyzing the log, but you can't get the code because of some third-party applications, you can write an example of frequent calls to report the error. The type of error (29201 / - 28 is the representation type) and the number of lines (3132) can indirectly prove that it is such an error, which can be modified by the corresponding third-party applications, In other words, if you know which interface, you can limit the frequency of this interface call in the framework to avoid this problem.