Prerequisites

1. A running Kubernetes (k8s) cluster
2. A Harbor private image registry

Jenkins deployment

Here Jenkins is deployed the common RBAC way, which means creating:

1. A jenkins Namespace
2. A NodePort Service for external web access
3. A ServiceAccount that lets the Jenkins server access resources in the jenkins namespace
4. A Role that defines which Kubernetes resources Jenkins can access and with which permissions (a namespaced Role, since Jenkins only works in its own namespace)
5. A RoleBinding that binds the Role to the ServiceAccount, which decides what that ServiceAccount can reach
6. A Deployment that actually runs the Jenkins server

There is also a PersistentVolumeClaim for the Jenkins data. The complete jenkins.yaml is as follows:

```yaml
---
# Namespace
apiVersion: v1
kind: Namespace
metadata:
  name: jenkins
---
# PVC for the Jenkins data. I store the Jenkins data on NFS (dynamic provisioning), so a PVC is created here.
# If you don't need persistence, skip it (and drop the volume from the Deployment).
# If you do, you can refer to my previous article on setting up an NFS environment.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-pvc
  namespace: jenkins   # must live in the same namespace as the Deployment that mounts it
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5G
---
# Deployment that starts the Jenkins server
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins-master
  namespace: jenkins
spec:
  selector:
    matchLabels:
      app: jenkins-master
  template:
    metadata:
      labels:
        app: jenkins-master
    spec:
      serviceAccountName: jenkins-master   # "serviceAccount" is the deprecated alias of this field
      securityContext:
        fsGroup: 1000   # GID of the jenkins user in the official jenkins/jenkins image, so it can write the volume
      containers:
        - name: jenkins-master
          image: kanq.k8s.com:7443/devops/jenkins:latest   # image in my own private registry
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
              name: http
            - containerPort: 50000
              name: agent
          volumeMounts:
            - name: jenkins-master-vol
              mountPath: /var/jenkins_home
      volumes:
        - name: jenkins-master-vol
          persistentVolumeClaim:
            claimName: jenkins-pvc
---
# The Service provides external access. Both 8080 (web UI) and 50000 (agents) are exposed as NodePorts,
# because agents running on nodes outside the k8s cluster also need to reach Jenkins.
# The default NodePort range is 30000-32767, so to use ports such as 38080 or 50000 you must set the
# kube-apiserver --service-node-port-range flag to cover them and then restart the API server.
apiVersion: v1
kind: Service
metadata:
  name: jenkins-master
  namespace: jenkins
spec:
  type: NodePort
  ports:
    - port: 8080
      name: http
      targetPort: 8080
      nodePort: 38080
    - port: 50000
      name: agent
      nodePort: 50000
      targetPort: 50000
  selector:
    app: jenkins-master
---
# ServiceAccount. Its token is mounted into the Jenkins pod and used to authenticate to the Kubernetes API
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins-master
  namespace: jenkins
---
# Role: which k8s resource types Jenkins may access in this namespace, and with which verbs
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins-master
  namespace: jenkins
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
# Bind the ServiceAccount to the Role, so that pods running as this ServiceAccount can access
# resources in this namespace as the Role allows
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jenkins-master
  namespace: jenkins
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: jenkins-master
subjects:
  - kind: ServiceAccount
    name: jenkins-master
    namespace: jenkins
```
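The Service in jenkins.yaml uses nodePort 38080 and 50000. Both fall outside the Kubernetes default NodePort range of 30000-32767, which is why the manifest comment says to widen the range with the kube-apiserver flag --service-node-port-range. The bash sketch below checks a port against such a range before you apply the manifest. The helper name nodeport_check is hypothetical, and 30000-50000 is only an example of a widened range, not a recommended value.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print "ok" if a nodePort lies inside an API server
# --service-node-port-range value, otherwise "out-of-range".
# The range defaults to the Kubernetes default, 30000-32767.
nodeport_check() {
  local port=$1
  local range=${2:-30000-32767}
  local lo=${range%-*}   # text before the dash
  local hi=${range#*-}   # text after the dash
  if (( port >= lo && port <= hi )); then
    echo "ok"
  else
    echo "out-of-range"
  fi
}

# nodePorts used in jenkins.yaml, checked against the default range
for p in 38080 50000; do
  echo "$p with default range: $(nodeport_check "$p")"
done

# The same ports against an example widened range (--service-node-port-range=30000-50000)
for p in 38080 50000; do
  echo "$p with 30000-50000: $(nodeport_check "$p" 30000-50000)"
done
```

With the default range both ports report out-of-range. With the example widened range both report ok.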

Apply the manifest:

```shell
kubectl apply -f jenkins.yaml
```

After it runs successfully, check the pod and follow its log:

```
[root@kanq template]# kubectl get pod -n jenkins
NAME                             READY   STATUS    RESTARTS   AGE
jenkins-master-5c65d6b7f-hx4f2   1/1     Running   0          5d17h
[root@kanq template]# kubectl logs -f jenkins-master-5c65d6b7f-hx4f2 -n jenkins
....
*************************************************************
*************************************************************
*************************************************************

Jenkins initial setup is required. An admin user has been created and a password generated.
Please use the following password to proceed to installation:

4aa4c650ddddee9d46f1b020e2142e2df369

This may also be found at: /var/jenkins_home/secrets/initialAdminPassword

*************************************************************
*************************************************************
*************************************************************
```

Open the login page at http://<k8s node IP>:38080 and enter this password.

Building a dynamic agent (slave) image

The stock Jenkins agent image is usually fine. When it doesn't meet our needs, we can build our own. Here, for example, I need a CentOS 8 base with podman and kubectl inside.

A word about podman. Docker Desktop now requires a paid subscription for larger commercial users, and Kubernetes has removed its built-in Docker support (dockershim). Docker also makes docker-in-docker awkward: running docker commands such as docker build and docker push inside a container, which container-based Jenkins agents really need. Because Docker depends on a daemon, we have to mount the host's docker.sock into the container, and that grants far too much access. If k8s was deployed from binaries with containerd as the runtime and no Docker at all, there is no docker.sock on the host to mount. Install Docker just for that? Podman avoids the problem. It has no background daemon like Docker's, so there is no docker.sock, and using podman inside a container only takes a little configuration.

Back to the main topic. Below is the Dockerfile for building the agent image:

```dockerfile
# The base image is CentOS 8; change it as needed.
# If you only need a plain Jenkins agent, you can skip installing podman, skip the two sed
# commands, and skip copying kubectl.
#
# Getting slave.jar:
#   It is also called agent.jar; it is Jenkins "remoting".
#   Project page:  https://www.jenkins.io/zh/projects/remoting/
#   Jar downloads: https://repo.jenkins-ci.org/public/org/jenkins-ci/main/remoting/
#
# Getting the jenkins-slave startup script (git):
#   https://github.com/jenkinsci/docker-inbound-agent/blob/master/jenkins-agent
#
# Note: CentOS 8 is end-of-life. If yum cannot reach the mirrors, point
# /etc/yum.repos.d/CentOS-* at vault.centos.org before installing packages.
FROM centos:centos8

# Copy the files first, so the chmod below has something to act on
COPY slave.jar /usr/share/jenkins/slave.jar
COPY jenkins-slave /usr/local/bin/jenkins-slave
COPY kubectl /usr/bin/kubectl

# Install Java (for the remoting jar) and podman, plus fuse-overlayfs, which the
# mount_program setting enabled below points at. Then adjust podman storage for
# running inside a container and make the copied files executable.
RUN yum install -y java-1.8.0-openjdk podman fuse-overlayfs && \
    sed -i 's/#mount_program/mount_program/g' /etc/containers/storage.conf && \
    sed -i 's/mountopt/#mountopt/g' /etc/containers/storage.conf && \
    chmod 755 /usr/local/bin/jenkins-slave /usr/bin/kubectl

USER root
WORKDIR /home/jenkins
ENTRYPOINT ["jenkins-slave"]
```

Put slave.jar, jenkins-slave and kubectl in the same directory as the Dockerfile, then build the image:

```shell
docker build -t centos:jenkins-slave .
```
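The two sed commands in the Dockerfile uncomment mount_program, which makes podman use fuse-overlayfs for overlay storage, and comment out mountopt. This is a common setup for running podman inside a container. The bash sketch below runs the same two sed expressions against a small storage.conf excerpt so you can see the effect. The excerpt is illustrative and assumed, not copied from a particular podman release, and sed -i is used in its GNU form.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Illustrative excerpt of /etc/containers/storage.conf (assumed content, not from a specific release)
conf=$(mktemp)
trap 'rm -f "$conf"' EXIT
cat > "$conf" <<'EOF'
[storage.options.overlay]
#mount_program = "/usr/bin/fuse-overlayfs"
mountopt = "nodev,metacopy=on"
EOF

# The same two edits the Dockerfile makes (GNU sed -i syntax)
sed -i 's/#mount_program/mount_program/g' "$conf"   # enable fuse-overlayfs as the overlay mount helper
sed -i 's/mountopt/#mountopt/g' "$conf"             # comment out the default mount options

cat "$conf"
```

After the edits the file contains mount_program = "/usr/bin/fuse-overlayfs" uncommented and #mountopt = "nodev,metacopy=on" commented out. This is why the Dockerfile installs fuse-overlayfs alongside podman.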

jenkins configuring dynamic nodes

-

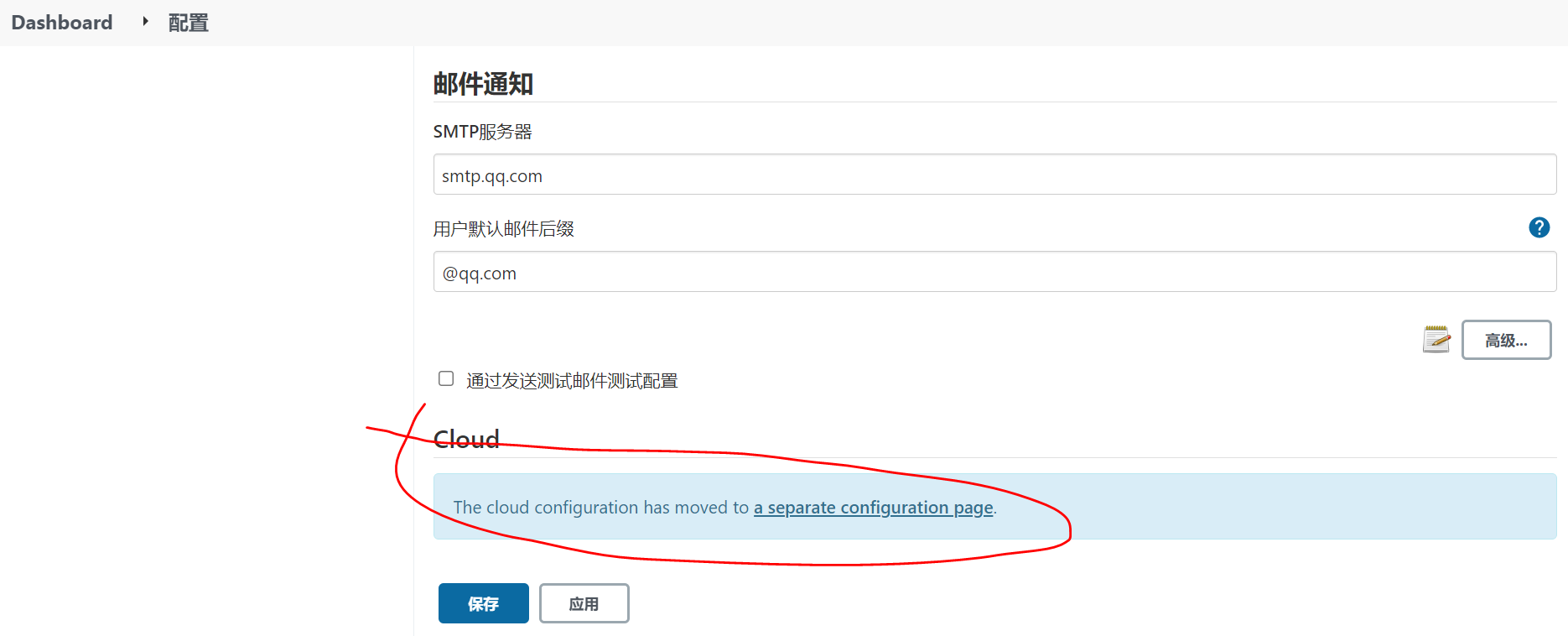

Open jenkins configuration and find cloud (usually at the bottom of the configuration page)

-

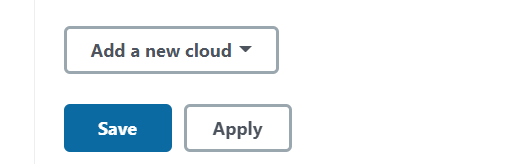

Select kubernetes to add cloud environment

-

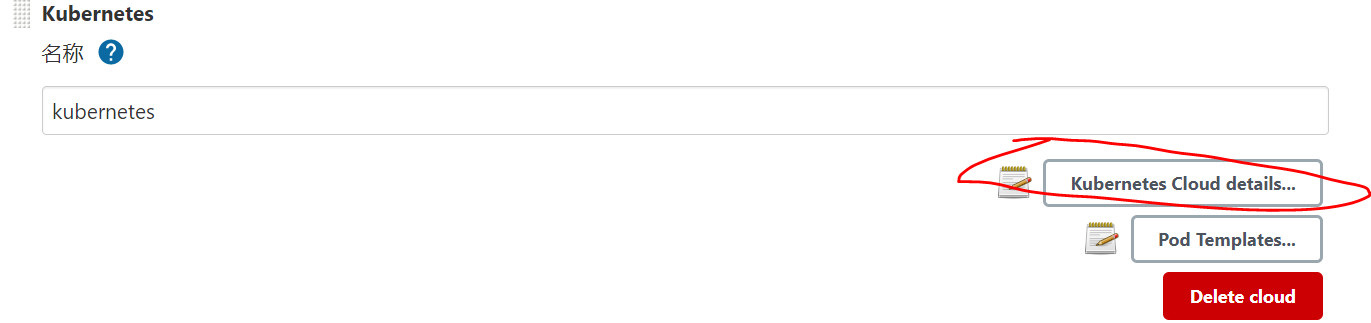

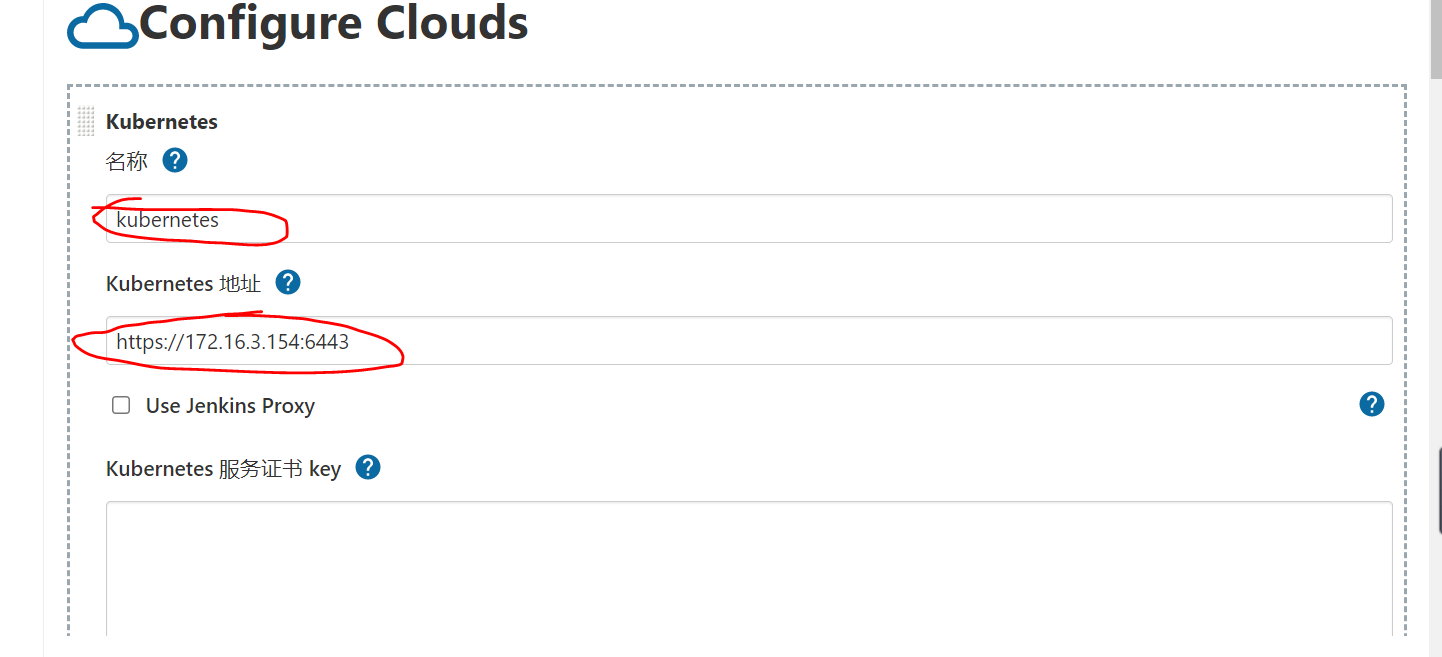

Configure kubernetes

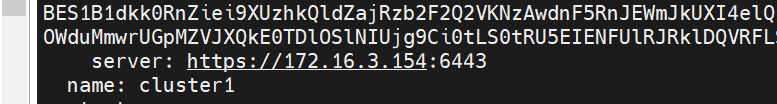

a. Fill in the cloud information. The name can be anything. For the Kubernetes address, if you don't know it, use the server address from /root/.kube/config on your master node.
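The Kubernetes address is the server field of the cluster entry in the kubeconfig. On a live master node, kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}' prints it directly. The bash sketch below pulls the same field out of a file with awk. The kubeconfig excerpt and its address https://192.0.2.10:6443 are hypothetical placeholders. The sketch only looks at the first server: line, so it assumes a single-cluster config.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical kubeconfig excerpt; on a real master node read /root/.kube/config instead.
kubeconfig=$(mktemp)
trap 'rm -f "$kubeconfig"' EXIT
cat > "$kubeconfig" <<'EOF'
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: REDACTED
    server: https://192.0.2.10:6443
  name: kubernetes
EOF

# Print the value of the first "server:" entry (first cluster only).
server=$(awk '$1 == "server:" {print $2; exit}' "$kubeconfig")
echo "Kubernetes address: $server"
```

The printed URL, including the https:// scheme and port, is what goes into the Kubernetes address field.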

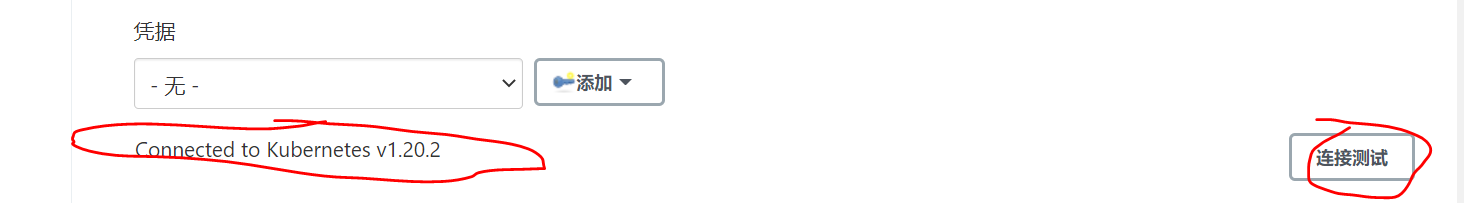

After filling these in, click Test Connection.

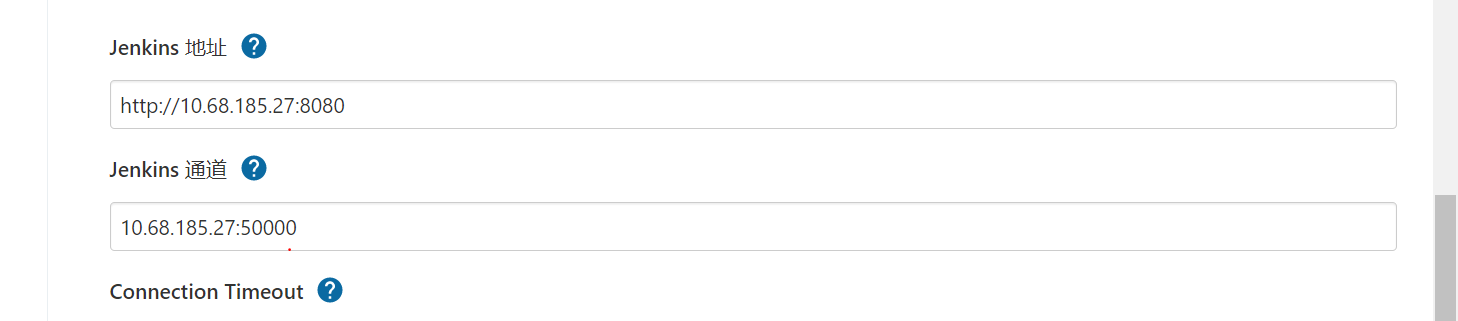

b. Configure the Jenkins connection settings. Here the Service's cluster-internal IP and ports are used:

```
[root@kanq jenkins-slave]# kubectl get svc -n jenkins
NAME             TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)                          AGE
jenkins-master   NodePort   10.68.185.27   <none>        8080:30180/TCP,50000:50000/TCP   5d19h
```

Here 10.68.185.27 is the cluster-internal ClusterIP.

For the ports, use the Service port rather than the nodePort. In a Service, port is the port inside the cluster, nodePort is the port exposed on each host, and targetPort is the port inside the container.

So we use the cluster-internal ports 8080 and 50000:

```shell
kubectl get svc jenkins-master -n jenkins -o yaml
```

```yaml
spec:
  clusterIP: 10.68.185.27
  clusterIPs:
  - 10.68.185.27
  externalTrafficPolicy: Cluster
  ports:
  - name: http
    nodePort: 30180
    port: 8080
    protocol: TCP
    targetPort: 8080
  - name: agent
    nodePort: 50000
    port: 50000
    protocol: TCP
    targetPort: 50000
  selector:
    app: jenkins-master
  sessionAffinity: None
  type: NodePort
```
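The PORT(S) column of kubectl get svc encodes each entry as <port>:<nodePort>/<protocol>. The bash sketch below splits the line shown earlier to make the port/nodePort distinction concrete, then assembles the two values the Kubernetes cloud form needs. As I understand the plugin's fields, Jenkins URL takes http://<ClusterIP>:<port> and Jenkins tunnel takes <ClusterIP>:<agent port> without a scheme. On a live cluster, kubectl get svc jenkins-master -n jenkins -o jsonpath='{.spec.clusterIP}' gives the IP directly. The DNS form at the end assumes the default cluster domain cluster.local.

```bash
#!/usr/bin/env bash
set -euo pipefail

# One line of "kubectl get svc -n jenkins" output, copied from the listing above
svc_line='jenkins-master   NodePort   10.68.185.27   <none>   8080:30180/TCP,50000:50000/TCP   5d19h'

cluster_ip=$(awk '{print $3}' <<<"$svc_line")
ports_col=$(awk '{print $5}' <<<"$svc_line")

# Each PORT(S) entry has the form <port>:<nodePort>/<protocol>
IFS=',' read -ra entries <<<"$ports_col"
for entry in "${entries[@]}"; do
  mapping=${entry%/*}        # drop "/TCP"
  port=${mapping%%:*}        # Service port, reachable via the ClusterIP inside the cluster
  node_port=${mapping##*:}   # port opened on every node for access from outside
  echo "port=$port nodePort=$node_port"
done

# The Kubernetes cloud settings use the cluster-internal address and the Service ports
http_port=${entries[0]%%:*}
agent_port=${entries[1]%%:*}
jenkins_url="http://${cluster_ip}:${http_port}"
jenkins_tunnel="${cluster_ip}:${agent_port}"
echo "Jenkins URL:    $jenkins_url"
echo "Jenkins tunnel: $jenkins_tunnel"

# A ClusterIP changes if the Service is re-created; the Service DNS name does not
# (assumes the default cluster domain cluster.local)
echo "DNS alternative: http://jenkins-master.jenkins.svc.cluster.local:${http_port}"
```

This prints port=8080 nodePort=30180 and port=50000 nodePort=50000. That yields http://10.68.185.27:8080 and 10.68.185.27:50000, matching the choice of 8080 and 50000 above.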

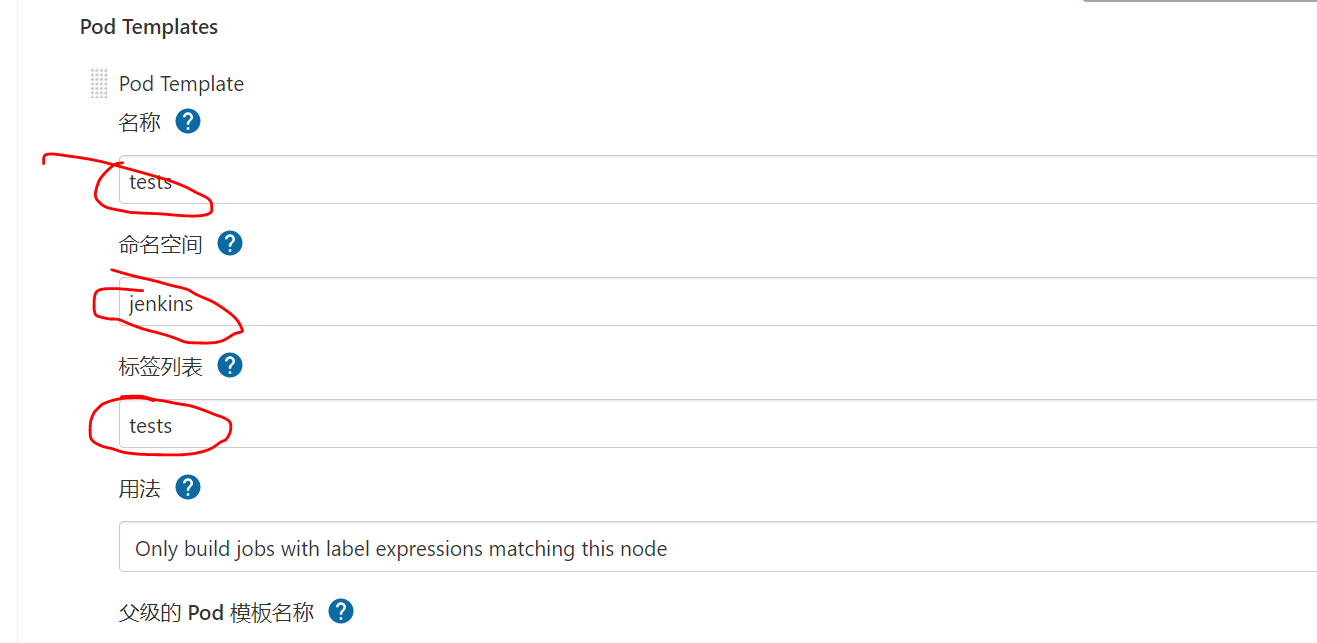

c. Configure the pod template

The name can be anything, but the namespace must match the Jenkins server's namespace. The label is the agent label you reference later in your pipeline.

If the default agent is enough, you can save and exit after the previous step. The Kubernetes plugin always adds a built-in container named jnlp, which uses the jenkins/inbound-agent image.

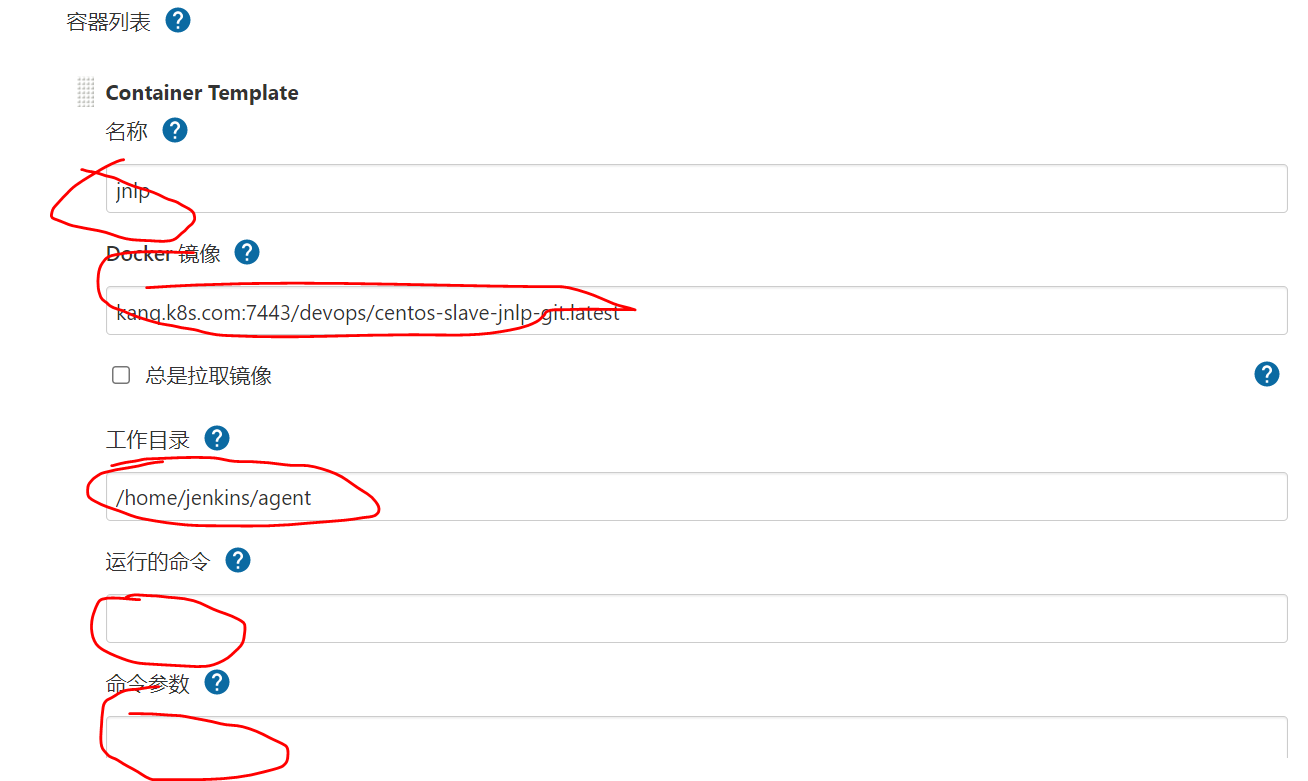

To replace the default agent image, configure a container as follows. The name must be jnlp. The working directory is optional, and the command and arguments can be left empty.

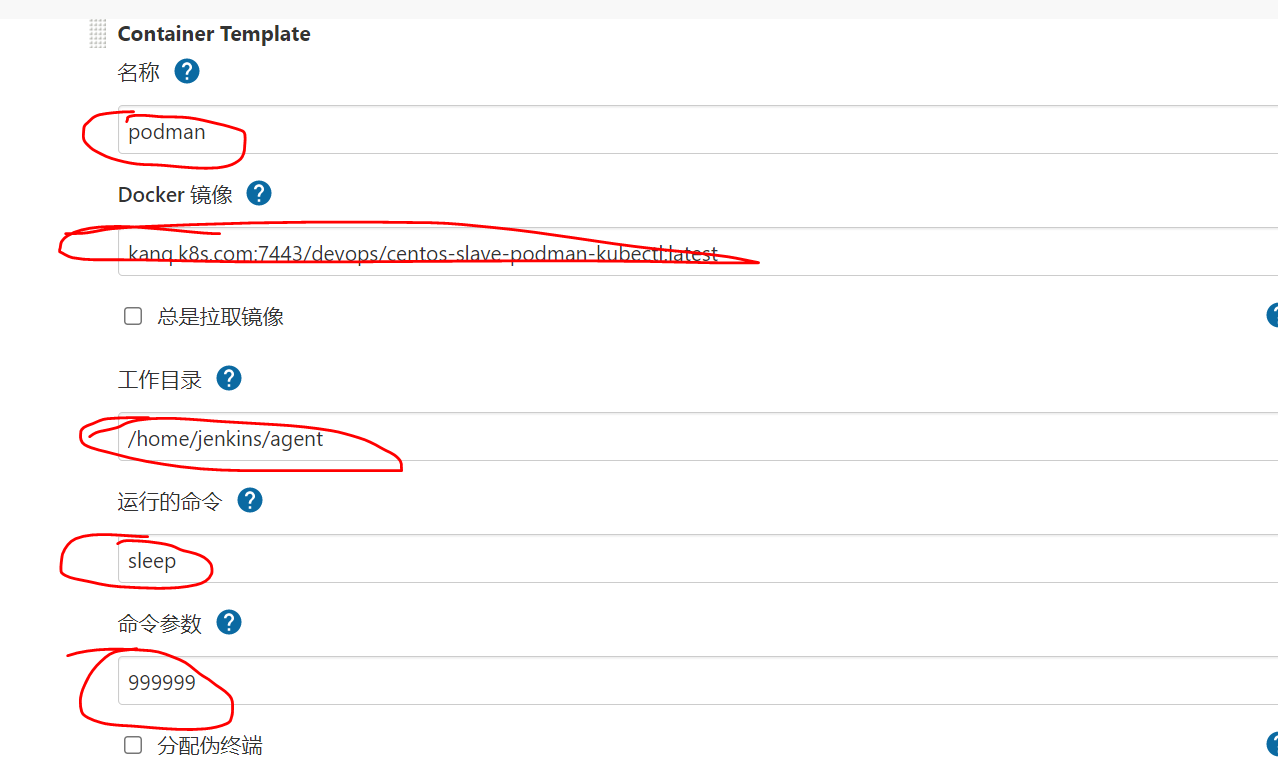

To add another container to the pod (common with pipelines), click Add Container. Keep the working directory the same in every container, because it is the directory the containers in the pod share.

Note: if you fill in a command and arguments, make sure the container keeps running after they execute instead of exiting straight away. A container in a pod exits as soon as its configured command finishes. If the command is left empty here, the image's own command runs instead. An exited container leaves the pod NotReady.

This problem cost me a whole day. The simplest fix is to use sleep 99999 as the command so the container keeps running.

The main settings are the ones above. There are others, such as mounted volumes and environment variables, which I won't go through one by one.

Note:

1. Volumes and environment variables configured here are applied to every container. You cannot choose which containers get which variables and volumes.

2. In a multi-container agent pod, only the container named jnlp needs the Jenkins agent tooling installed; the other containers do not. Conversely, if the agent container has any other name, the jnlp connection parameters are not passed to it even when the tooling is installed. A container whose startup command runs slave.jar with java will then fail.

Below is a way to define the pod template inside the pipeline itself. It is far more flexible than the UI configuration. You can set mounted volumes and environment variables per container, so each container gets exactly its own configuration.

The following is a declarative pipeline I wrote. It runs when a code push triggers a webhook. Its main tasks are:

- Define the pod template for the Kubernetes cloud

- Clone the code in the default jnlp container (a custom image with git added)

- Compile the code in the build container (the golang:1.17 image from Docker Hub)

- In the podman container (which has podman installed), build the image and push it to the private registry, then run kubectl create to start a deployment from that image. If the deployment fails, the build fails; if it succeeds, the deployment is deleted

- Email the code submitter with the result

```groovy
// head_commit, head_commit_url and head_commit_author_email are assumed to be injected by the
// webhook trigger configuration (not shown here); readJSON comes from Pipeline Utility Steps
def webHookData = readJSON text: "${head_commit}"
String commitID = webHookData["id"][0..7]   // inclusive range: first 8 characters of the commit hash
String url = webHookData.url
// Swap the internal Jenkins address for the externally reachable one used in the email link
realJenkinsUrl = BUILD_URL.replaceAll("172.16.3.154:30180", "113.57.110.41:15401")

pipeline {
    agent {
        kubernetes {
            yaml '''
apiVersion: "v1"
kind: "Pod"
metadata:
  name: "devops"
spec:
  containers:
  # If the default jenkins/inbound-agent image meets your needs, configure jnlp as in the UI section above
  - name: "jnlp"
    image: "kanq.k8s.com:7443/devops/centos-slave-jnlp-git:latest"
    imagePullPolicy: "IfNotPresent"
    volumeMounts:
    - mountPath: "/home/jenkins/agent"
      name: "workspace-volume"
      readOnly: false
    workingDir: "/home/jenkins/agent"
  # Compile container: keep it running with sleep
  - name: "build"
    command:
    - "sleep"
    args:
    - "999999"
    image: "kanq.k8s.com:7443/devops/golang:1.17"
    imagePullPolicy: "IfNotPresent"
    volumeMounts:
    - mountPath: "/home/jenkins/agent"
      name: "workspace-volume"
      readOnly: false
    workingDir: "/home/jenkins/agent"
  # Builds the image, pushes it to the registry, deploys it and verifies it
  - name: "podman"
    command:
    - "sleep"
    args:
    - "999999"
    env:
    - name: HarborU
      valueFrom:
        secretKeyRef:
          name: harbor
          key: username
    - name: HarborP
      valueFrom:
        secretKeyRef:
          name: harbor
          key: password
    image: "kanq.k8s.com:7443/devops/centos-slave-podman-kubectl:latest"
    imagePullPolicy: "IfNotPresent"
    securityContext:
      privileged: true   # podman inside a container needs elevated privileges
    volumeMounts:
    - mountPath: "/etc/containers/certs.d/kanq.k8s.com/"
      name: podman
    - mountPath: "/home/"
      name: k8sconfigmap
    - mountPath: "/home/jenkins/agent"
      name: "workspace-volume"
      readOnly: false
    workingDir: "/home/jenkins/agent"
  activeDeadlineSeconds: 60000
  volumes:
  - name: "workspace-volume"
    emptyDir:
      medium: ""
  - name: podman
    secret:
      secretName: dockersecret
  - name: harbor
    secret:
      secretName: harbor
  - name: k8sconfigmap
    configMap:
      name: k8sconfig
'''
        }
    }

    stages {
        stage('git') {
            steps {
                container("jnlp") {
                    sh """
                    git clone git@e.coding.net:kanqtest/kanq/kanqtest.git
                    env
                    """
                }
            }
        }

        stage("compile") {
            steps {
                container("build") {
                    dir("$WORKSPACE/kanqtest") {
                        sh """
                        go build -a -o devops-go-sample cmd/main.go
                        """
                    }
                }
            }
        }

        stage("build") {
            steps {
                container("podman") {
                    dir("$WORKSPACE/kanqtest") {
                        sh '''
                        # podman looks up registry certificates in a directory named host:port
                        cp -r /etc/containers/certs.d/kanq.k8s.com /etc/containers/certs.d/kanq.k8s.com:7443
                        echo "172.16.3.154 kanq.k8s.com" >> /etc/hosts
                        echo "$HarborP" | podman login -u "$HarborU" --password-stdin kanq.k8s.com:7443
                        podman build -t kanq.k8s.com:7443/devops/busybox:go .
                        podman push kanq.k8s.com:7443/devops/busybox:go
                        kubectl create deployment devopstest --image="kanq.k8s.com:7443/devops/busybox:go" -n jenkins
                        # Wait for the rollout instead of a fixed sleep, and delete the test
                        # deployment whether or not it succeeds, so the next run can create it again
                        if ! kubectl rollout status deployment/devopstest -n jenkins --timeout=60s; then
                            kubectl delete deployment devopstest -n jenkins
                            exit 1
                        fi
                        kubectl delete deployment devopstest -n jenkins
                        '''
                    }
                }
            }
        }
    }

    post {
        always {
            sh "echo $commitID"
            sh "echo $url"
            addShortText background: 'white', borderColor: 'white', color: 'DodgerBlue', link: "$url", text: "$commitID"
            // The subject reports the actual build result instead of always saying "success"
            mail bcc: '', body: """
coding.net repository: '${head_commit_url}'
jenkins address: '${realJenkinsUrl}'
""", cc: '', from: '2833732855@qq.com', replyTo: '', subject: "kanq devops ${currentBuild.currentResult}", to: "$head_commit_author_email"
        }
    }
}
```
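In the pipeline prelude, Groovy's 0..7 is an inclusive range, so commitID holds the first 8 characters of the commit hash. replaceAll then swaps the internal host:port in BUILD_URL for the public one. The bash sketch below performs the same two string operations, which is handy for checking the expected result locally. The commit hash and build URL are made-up example values.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical values; in the pipeline they come from the webhook payload and BUILD_URL.
commit_hash="3f2a9c1e7b4d5a6c8e9f0a1b2c3d4e5f6a7b8c9d"
build_url="http://172.16.3.154:30180/job/devops/42/"

# Groovy's [0..7] includes both ends, i.e. 8 characters; bash uses ${var:offset:length}
commit_id=${commit_hash:0:8}

# Same substitution as BUILD_URL.replaceAll(internal, public); ${var//pattern/repl} replaces every match
real_jenkins_url=${build_url//172.16.3.154:30180/113.57.110.41:15401}

echo "commitID=$commit_id"
echo "realJenkinsUrl=$real_jenkins_url"
```

This prints commitID=3f2a9c1e and realJenkinsUrl=http://113.57.110.41:15401/job/devops/42/.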