I. Problem description

Colleagues reported that in our three-node Kafka cluster, when one of the servers goes down, the business is affected: applications can neither produce nor consume messages. The clients log the following warning:

WARN [Consumer clientId=consumer-1, groupId=console-consumer-55928] 1 partitions have leader brokers without a matching listener, including [baidd-0] (org.apache.kafka.clients.NetworkClient)

II. Fault simulation

2.1 Partition with a single replica

#Produce messages

[root@Centos7-Mode-V8 kafka]# bin/kafka-console-producer.sh --broker-list 192.168.144.247:9193,192.168.144.251:9193,192.168.144.253:9193 --topic baidd

>aa

>bb

#Under normal conditions the consumer receives the messages:

[root@Centos7-Mode-V8 kafka]# bin/kafka-console-consumer.sh --bootstrap-server 192.168.144.247:9193,192.168.144.251:9193,192.168.144.253:9193 --topic baidd

aa

bb

2.1.1 Simulate shutting down the leader node of the topic

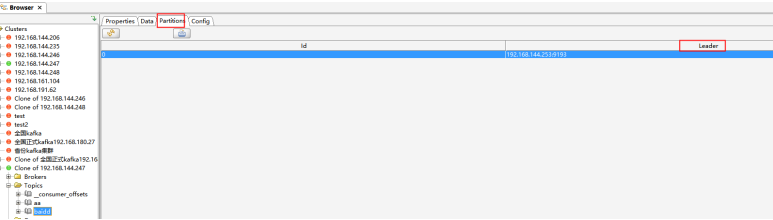

#Use the Kafka tool to see which node hosts the leader of the topic partition

After shutting down the leader node, the producer and all consumer processes keep printing the following warning:

[2021-09-23 17:09:53,495] WARN [Consumer clientId=consumer-1, groupId=console-consumer-55928] 1 partitions have leader brokers without a matching listener, including [baidd-0] (org.apache.kafka.clients.NetworkClient)

Messages cannot be sent or consumed.

2.1.2 Simulate shutting down a non-leader node

Sometimes the consumer process reports an error:

[2021-09-23 17:21:22,480] WARN [Consumer clientId=consumer-1, groupId=console-consumer-55928] Connection to node 2147483645 (/192.168.144.253:9193) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

While the error is being reported, messages can still be produced normally, but the messages produced during that period are not consumed.

2.1.3 Summary

When a partition has only one replica, stopping any node affects the business.

When the node hosting the partition leader goes down, both producing and consuming messages are affected.

When a non-leader node goes down, consuming messages is affected.

2.2 Partition with multiple replicas

When a partition has no other replicas, an impact on the business is understandable. So we configured multiple replicas for a topic, and found that the business was still affected:

#Create a topic with three replicas

bin/kafka-topics.sh --create --zookeeper 192.168.144.247:3292,192.168.144.251:3292,192.168.144.253:3292 --replication-factor 3 --partitions 1 --topic song

#View replica information

[root@Centos7-Mode-V8 kafka]# bin/kafka-topics.sh --zookeeper 192.168.144.247:3292,192.168.144.251:3292,192.168.144.253:3292 --describe --topic song

Topic:song PartitionCount:1 ReplicationFactor:3 Configs:

Topic: song Partition: 0 Leader: 0 Replicas: 0,2,1 Isr: 0,2,1

#Send a message

bin/kafka-console-producer.sh --broker-list 192.168.144.247:9193,192.168.144.251:9193,192.168.144.253:9193 --topic song

#Consumer process 1

bin/kafka-console-consumer.sh --bootstrap-server 192.168.144.247:9193,192.168.144.251:9193,192.168.144.253:9193 --topic song --group g1

#Consumer process 2

bin/kafka-console-consumer.sh --bootstrap-server 192.168.144.247:9193,192.168.144.251:9193,192.168.144.253:9193 --topic song --group g2

#Simulate shutting down the leader node of the topic

Messages can still be produced, and the warning that 1 partitions have leader brokers without a matching listener no longer appears. However, consumers sometimes report an error because they cannot connect to the node that was the topic leader:

[2021-09-24 19:01:06,316] WARN [Consumer clientId=consumer-1, groupId=console-consumer-27609] Connection to node 2147483647 (/192.168.144.247:9193) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

Sometimes the data produced during this period never reaches the consumers: messages produced while the node is down cannot be consumed.

But why are messages still lost when a node goes down, even though the topic has multiple replicas?

Answer: __consumer_offsets has only one replica, which prevents even topics with multiple replicas from being highly available.

#Later, after increasing the replica count of Kafka's internal topic (__consumer_offsets), ordinary topics became highly available as well.
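The node IDs in the two "Connection to node ..." warnings are consistent with this answer. The sketch below rests on two assumptions about the Java client and broker that the logs themselves do not state: that the consumer connects to its group coordinator under a synthetic node ID equal to Integer.MAX_VALUE minus the broker ID, and that a group's coordinator is the leader of the __consumer_offsets partition selected by abs(groupId.hashCode()) modulo offsets.topic.num.partitions (assumed here to be 50, matching the 50 partitions in the JSON file later in this article). The function names are made up for illustration.

```python
INT_MAX = 2**31 - 1  # Java Integer.MAX_VALUE


def coordinator_broker_id(connection_node_id: int) -> int:
    """Recover the broker ID from the synthetic coordinator node ID in the log."""
    return INT_MAX - connection_node_id


def java_string_hash(s: str) -> int:
    """Java String.hashCode() as a signed 32-bit int (valid for BMP characters)."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF
    return h - 2**32 if h >= 2**31 else h


def offsets_partition_for(group_id: str, num_partitions: int = 50) -> int:
    """Assumed mapping: abs(hashCode(group_id)) % offsets.topic.num.partitions."""
    h = java_string_hash(group_id)
    h = 0 if h == -2**31 else abs(h)  # Integer.MIN_VALUE has no positive abs
    return h % num_partitions


print(coordinator_broker_id(2147483645))  # node ID from section 2.1.2 -> 2
print(coordinator_broker_id(2147483647))  # node ID from section 2.2   -> 0
print(offsets_partition_for("g1"), offsets_partition_for("g2"))  # -> 42 43
```

Under these assumptions, both warnings are failed connections to a group coordinator: broker 2 in section 2.1.2 and broker 0 in section 2.2. If that broker holds the only replica of the group's __consumer_offsets partition, the group has no coordinator until the broker comes back, which matches the symptoms described above.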

III. Fault location

default.replication.factor is not configured in the Kafka configuration file, and the parameter defaults to 1, so topics are created with a single replica and each one is effectively a single point of failure.

IV. Solutions

- Modify the Kafka configuration file and increase the default replication factor for topics (this parameter is 1 by default):

default.replication.factor=3

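offsets.topic.replication.factor, which controls the replication of __consumer_offsets, is a separate broker setting. Its documented default is 3, but the sample server.properties shipped with Kafka sets it to 1, so check it and set it to 3 explicitly.

Note: do not combine default.replication.factor=3 with offsets.topic.replication.factor=1. The explicit offsets.topic.replication.factor value is the one that applies to __consumer_offsets, which would then still be created with a single replica. Both settings only affect topics created afterwards.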

#Restart Kafka

(steps omitted)
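Before restarting, it can help to confirm that the configuration file really contains the intended values. The following is a minimal sketch of such a check, not part of the original procedure: the helper names are hypothetical, the sample text stands in for a real server.properties, and the target of 3 replicas is taken from the setting above.

```python
def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def replication_warnings(props: dict, wanted: int = 3) -> list:
    """Return a message for each replication setting missing or below `wanted`."""
    keys = ("default.replication.factor", "offsets.topic.replication.factor")
    problems = []
    for key in keys:
        value = props.get(key)
        if value is None:
            problems.append(f"{key} is not set explicitly")
        elif int(value) < wanted:
            problems.append(f"{key}={value} is below {wanted}")
    return problems


# Hypothetical excerpt of server.properties, used only for illustration.
sample = """
default.replication.factor=3
offsets.topic.replication.factor=1
"""
for problem in replication_warnings(parse_properties(sample)):
    print(problem)
```

With the sample text, the check flags only offsets.topic.replication.factor, which is the combination the note above warns against.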

- Increase the replica count of an existing ordinary topic

See https://blog.csdn.net/yabingshi_tech/article/details/120443647

- Increase the replica count of __consumer_offsets

The method is the same as above; the JSON file is as follows:

{
  "version": 1,
  "partitions": [
    {"topic": "__consumer_offsets", "partition": 0, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 1, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 2, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 3, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 4, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 5, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 6, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 7, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 8, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 9, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 10, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 11, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 12, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 13, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 14, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 15, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 16, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 17, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 18, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 19, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 20, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 21, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 22, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 23, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 24, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 25, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 26, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 27, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 28, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 29, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 30, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 31, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 32, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 33, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 34, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 35, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 36, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 37, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 38, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 39, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 40, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 41, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 42, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 43, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 44, "replicas": [2, 0, 1]},
    {"topic": "__consumer_offsets", "partition": 45, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 46, "replicas": [0, 1, 2]},
    {"topic": "__consumer_offsets", "partition": 47, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 48, "replicas": [1, 2, 0]},
    {"topic": "__consumer_offsets", "partition": 49, "replicas": [2, 0, 1]}
  ]
}
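Writing 50 partition entries by hand is error-prone, so a plan of this shape can also be generated. The sketch below is one way to do it, assuming the same three broker IDs (0, 1, 2) and 50 partitions as the file above; the function name is hypothetical. It rotates the first replica on every partition, so its ordering differs from the hand-written file (which rotates every three partitions), but every partition still ends up with a replica on each broker.

```python
import json


def build_reassignment(topic: str, num_partitions: int, brokers: list) -> dict:
    """Build a plan that places every partition on all brokers,
    rotating the first (preferred leader) replica round-robin."""
    n = len(brokers)
    partitions = []
    for p in range(num_partitions):
        replicas = [brokers[(p + i) % n] for i in range(n)]
        partitions.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


plan = build_reassignment("__consumer_offsets", 50, [0, 1, 2])
# Show the first three entries; json.dumps(plan, indent=2) gives the full file.
print(json.dumps(plan["partitions"][:3]))
```

Saved to a file (the name is arbitrary), the plan can be applied in the same way as the hand-written one, for example with kafka-reassign-partitions.sh and its --reassignment-json-file and --execute options.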

--This article draws on "Kafka suddenly went down? Hold on, don't panic!"