This article continues the previous one on training YOLOv5 on your own dataset. It shows how to deploy YOLOv5 with Msnhnet on Windows and Linux, covering both PC and Jetson NX platforms.

Converting the PyTorch model to Msnhnet

Copy best.pt to the weights folder, open a terminal in the yolov5 folder, and run:

python yolov5ToMsnhnet.py

The contents of the yolov5ToMsnhnet.py file:

from PytorchToMsnhnet import *

Msnhnet.Export = True

from models.experimental import attempt_load

import torch

weights = "weights/best.pt" # trained .pt file

msnhnetPath = "yolov5m.msnhnet" # exported .msnhnet file

msnhbinPath = "yolov5m.msnhbin" # exported .msnhbin file

model = attempt_load(weights, "cpu") # load the model on the CPU

model.eval() # inference mode

img = torch.rand(512*512*3).reshape(1,3,512,512) # generate random input data for inference

trans(model,img,msnhnetPath,msnhbinPath) # convert the model

When the export succeeds, yolov5m.msnhnet and yolov5m.msnhbin are generated in the current folder.
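Before moving on, it can help to confirm that both export files exist and are non-empty. The following is a minimal sketch using only the Python standard library. The helper name `check_export` is hypothetical and not part of Msnhnet or yolov5; the file names are the ones used in the export script.

```python
from pathlib import Path


def check_export(paths):
    """Return {path: size_in_bytes}; raise if a file is missing or empty.

    Hypothetical helper for illustration, not part of Msnhnet or yolov5.
    """
    sizes = {}
    for p in map(Path, paths):
        if not p.is_file():
            raise FileNotFoundError(f"export file not found: {p}")
        size = p.stat().st_size
        if size == 0:
            raise ValueError(f"export file is empty: {p}")
        sizes[str(p)] = size
    return sizes


if __name__ == "__main__":
    # File names taken from the export script; adjust them if you changed yours.
    try:
        print(check_export(["yolov5m.msnhnet", "yolov5m.msnhbin"]))
    except (FileNotFoundError, ValueError) as err:
        print(f"export check failed: {err}")
```

A size check like this only catches an interrupted or failed export; it does not validate the contents of the files.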

Windows

1.Preparation

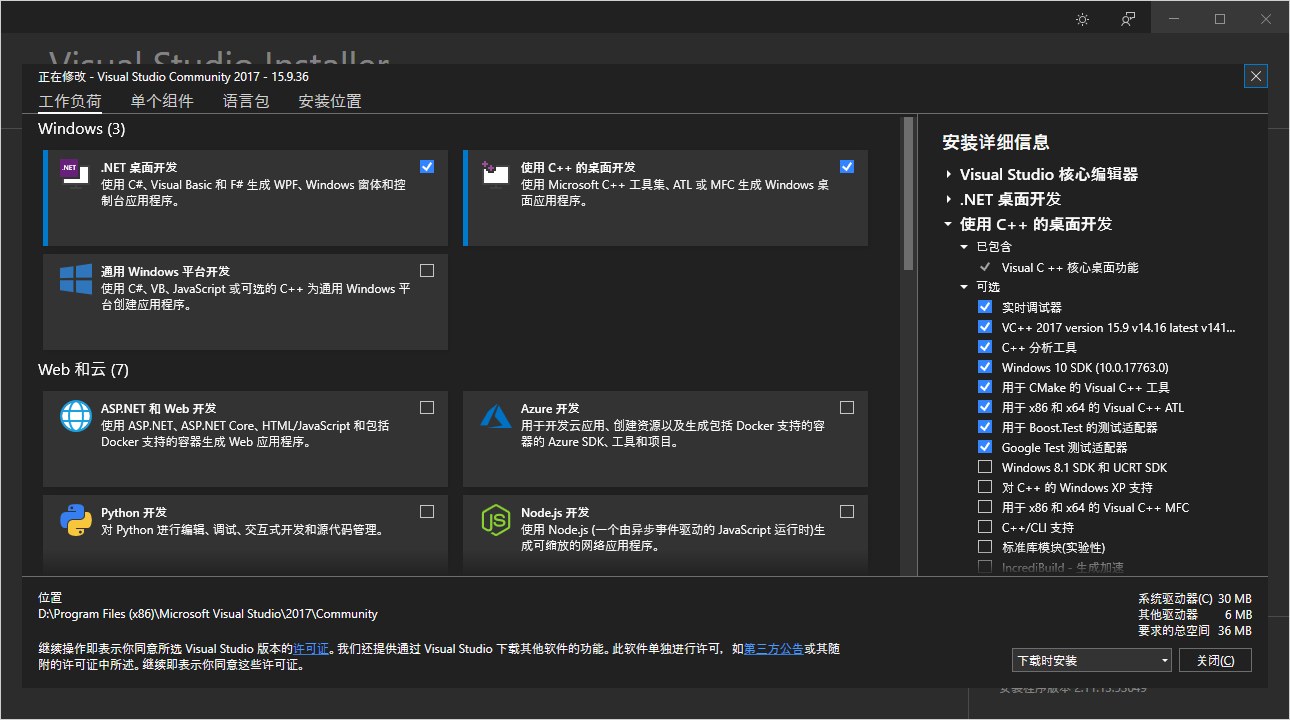

(1) Install Visual studio

- Website:https://visualstudio.microsoft.com/zh-hans/

- Download and install Visual Studio 2017 or later. During installation, select the ".NET desktop development" and "Desktop development with C++" workloads.

(2) Install CUDA and cuDNN. Search online for detailed instructions if you need them.

- Cuda web address:https://developer.nvidia.com/cuda-downloads

- Cudnn web address:https://developer.nvidia.com/zh-cn/cudnn

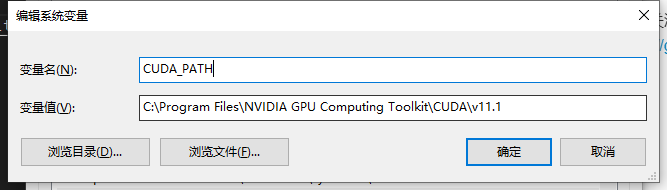

- Download the CUDA xx.exe installer and install CUDA (preferably with the graphics driver bundled in the CUDA installer). Then download the cudnn xx.zip file and extract it into the C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\vxx.xx folder. This completes the CUDA and cuDNN setup.

- Add C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\vxx.xx to the system environment variable.

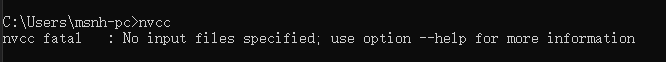

(3) Open cmd and enter nvcc to test whether the CUDA installation is complete. The following results indicate that CUDA is configured correctly.

(4) Install CMake (version 3.17 recommended).

- Cmake download URL:https://cmake.org/files/v3.17/

- Download file: cmake-3.17.5-win64-x64.msi

- Complete installation.

(5) Clone Msnhnet

git clone https://github.com/msnh2012/Msnhnet.git
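Once the preparation steps are done, the command-line tools they rely on (nvcc, cmake, git) should all be reachable from a terminal. The sketch below checks this with the Python standard library. The helper name `tool_version` is an illustrative assumption; it simply runs each tool with `--version`, which all three tools accept.

```python
import shutil
import subprocess


def tool_version(name, flag="--version"):
    """Return the first line printed by `name flag`, or None if the tool is not on PATH."""
    exe = shutil.which(name)
    if exe is None:
        return None
    try:
        out = subprocess.run([exe, flag], capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return None
    text = (out.stdout or out.stderr).strip()
    return text.splitlines()[0] if text else ""


if __name__ == "__main__":
    for tool in ("nvcc", "cmake", "git"):
        version = tool_version(tool)
        print(f"{tool}: {version if version is not None else 'not found on PATH'}")
```

If nvcc is reported as missing right after installing CUDA, open a new terminal so that the updated environment variables are picked up.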

2.Compile OpenCV Library

(1) The OpenCV source files have been prepared for you at the link below, so you do not need a VPN to download them.

Links:https://pan.baidu.com/s/1lpyNNdYqdKj8R-RCQwwCWg Extraction Code: 6agk

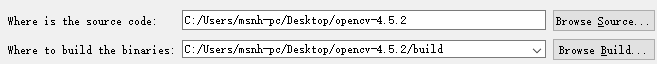

(2) Open cmake-gui.exe.

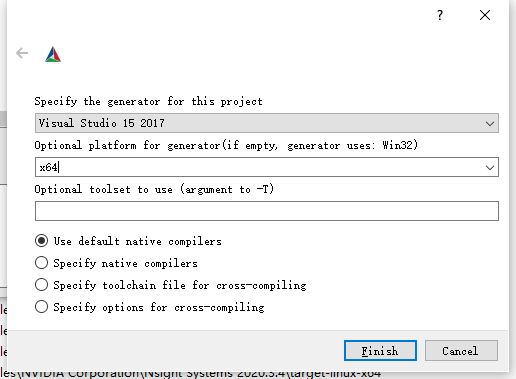

(3) Click Configure, select your installed version of Visual Studio and the x64 platform (VS2017 is used as the example here), click Finish, and wait for the configuration to complete.

(4) Configure the following parameters.

- CMAKE_INSTALL_PREFIX #Specify the installation location, such as: D:/libs/opencv

- CPU_BASELINE #Select AVX2 (if the CPU supports AVX2 acceleration)

- BUILD_TESTS #Uncheck

(5) Click Generate and wait for "Generating done".

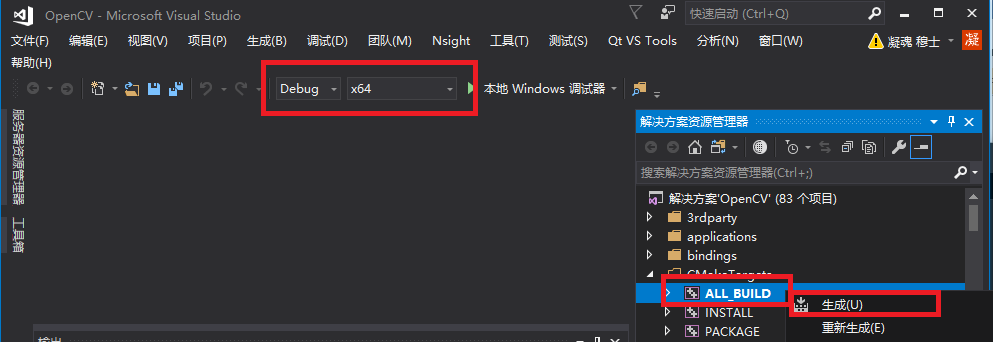

(6) Click Open Project. Select the Debug configuration, then right-click and choose Build. (This takes 10 to 60 minutes, depending on your computer.)

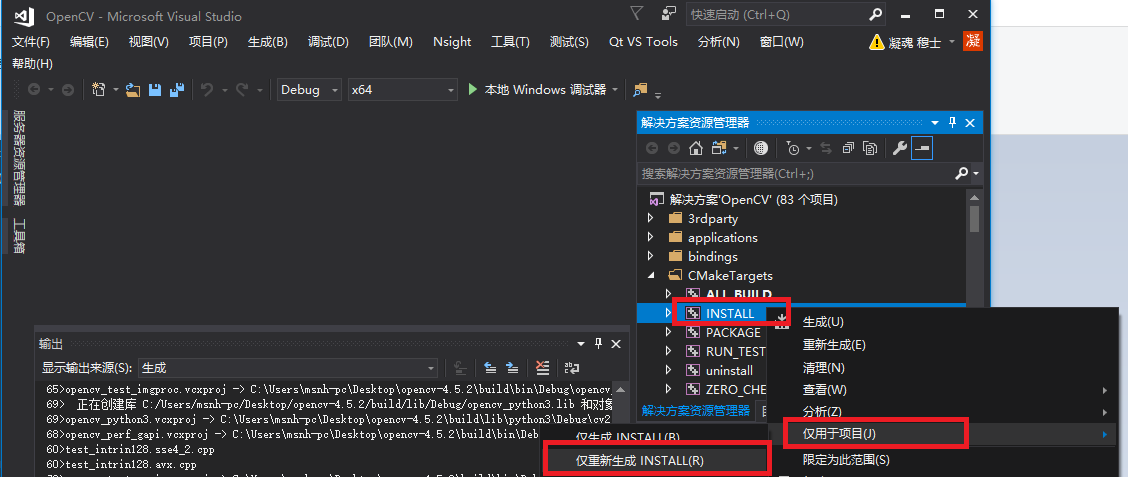

(7) Right-click INSTALL and build it. (The compiled files are installed to the location specified earlier, such as D:/libs/opencv.)

(8) Repeat steps 6-7 with the Release configuration to build and install the Release version.

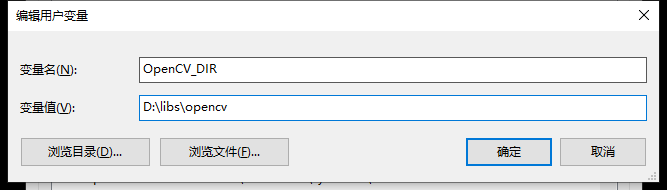

(9) Set the OpenCV_DIR environment variable so that find_package in CMakeLists.txt can locate OpenCV.

(10) Update the Path environment variable. Add the OpenCV bin folder to Path, for example: D:\libs\opencv\x64\vc15\bin
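A quick way to confirm that the two environment variables took effect is to inspect them from a new terminal. The following sketch is one way to do it in Python. The function name `dir_on_path` is hypothetical, and the example directory is the one mentioned in the text, so replace it with your own install location.

```python
import os


def dir_on_path(directory, path_value=None, sep=os.pathsep):
    """Return True if `directory` is one of the entries of a PATH-style string."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")

    def norm(p):
        # Ignore surrounding quotes, trailing separators and (on Windows) letter case.
        return os.path.normcase(os.path.normpath(p.strip('"')))

    target = norm(directory)
    return any(norm(entry) == target for entry in path_value.split(sep) if entry)


if __name__ == "__main__":
    print("OpenCV_DIR =", os.environ.get("OpenCV_DIR", "<not set>"))
    # Example location from the text; replace it with your own install path.
    print("bin folder on Path:", dir_on_path(r"D:\libs\opencv\x64\vc15\bin"))
```

The same check can be reused for any other library directory that has to be added to Path.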

3.Compile Msnhnet Library

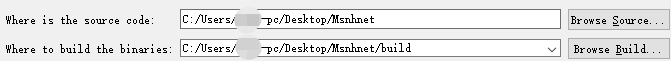

(1) Open cmake-gui.exe.

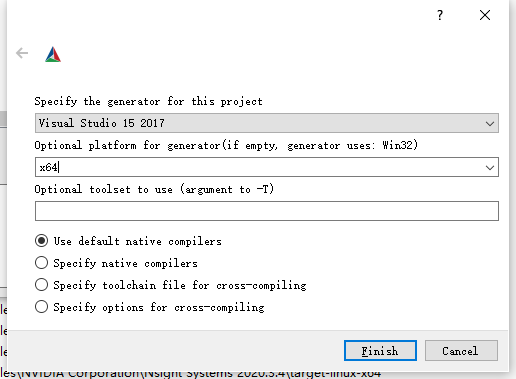

(2) Click Configure, select your installed version of Visual Studio and the x64 platform (VS2017 is used as the example here), click Finish, and wait for the configuration to complete.

(3) Check the following parameters.

- CMAKE_INSTALL_PREFIX #Specify the installation location, such as: D:/libs/Msnhnet

- BUILD_EXAMPLE #Build the examples

- BUILD_SHARED_LIBS #Build a dynamic link library

- BUILD_USE_CUDNN #Use CUDNN

- BUILD_USE_GPU #Use GPU

- BUILD_USE_OPENCV #Use OPENCV

- ENABLE_OMP #Use OMP

- OMP_MAX_THREAD #Use the maximum number of cores

(4) Click Generate and wait for "Generating done".

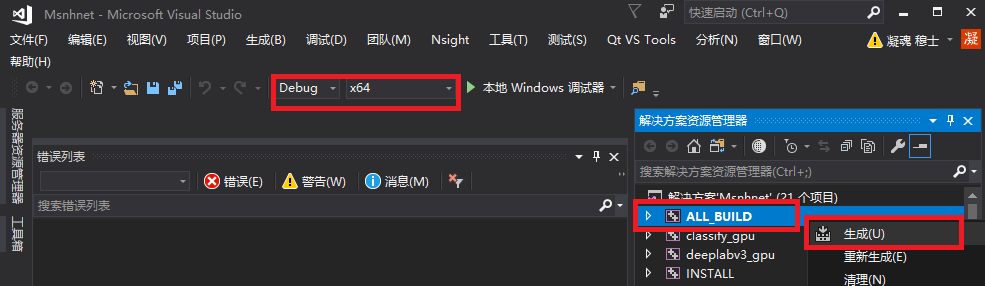

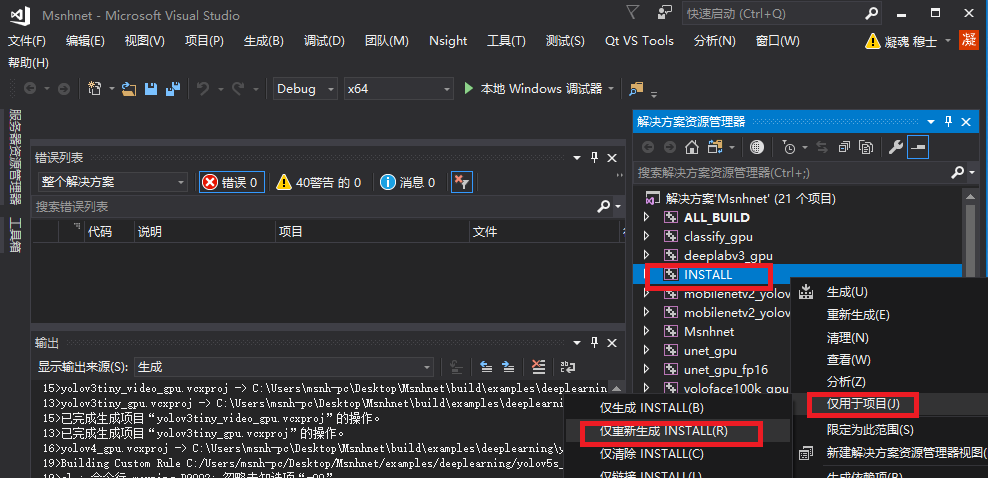

(5) Click Open Project, select the Debug configuration, and build.

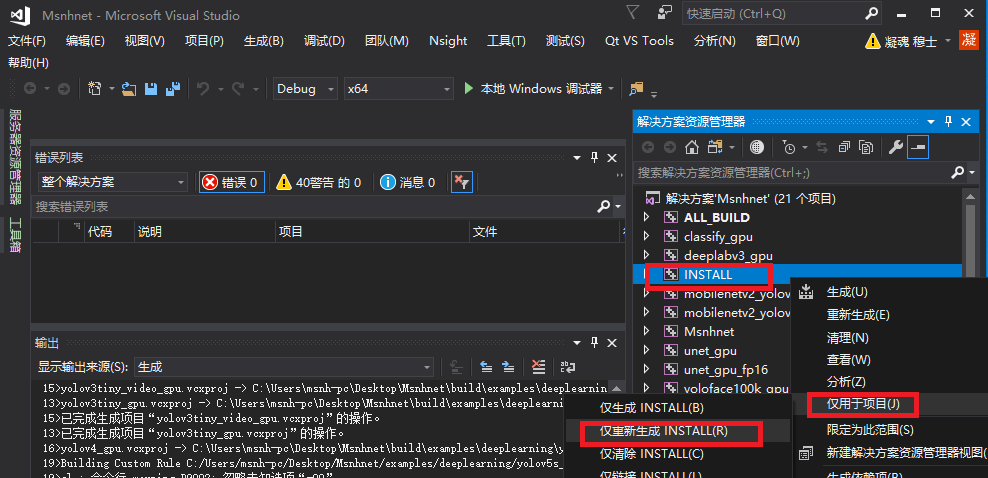

(6) Right-click INSTALL and build it. (The compiled files are installed to the location specified earlier, such as D:/libs/Msnhnet.)

(7) Repeat steps 5-6 with the Release configuration to build and install the Release version.

(8) Set the Msnhnet_DIR environment variable so that find_package in CMakeLists.txt can locate Msnhnet.

(9) Update the Path environment variable. Add the Msnhnet bin folder to Path, for example: D:\libs\Msnhnet\bin

(10) Testing.

- Download the set of Msnhnet test models prepared by the author and unzip them to a directory such as the root of drive D.

Links:https://pan.baidu.com/s/1mBaJvGx7tp2ZsLKzT5ifOg

Extraction Code: x53z

- Open a terminal in the Msnhnet installation directory and run:

yolov3_gpu D:/models

yolov3_gpu_fp16 D:/models #fp16 inference

- Of course, you can test other models.

4.Deploy Msnhnet with C#

(1) clone MsnhnetSharp

git clone https://github.com/msnh2012/MsnhnetSharp

(2) Double-click to open MsnhnetSharp.sln file

MsnhnetSharp source:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Runtime.InteropServices;

using System.Drawing;

using System.Drawing.Imaging;

using static MsnhnetSharp.MsnhnetDef;

namespace MsnhnetSharp

{

public class Msnhnet

{

const string MsnhnetLib = "msnhnet.dll";

private const int MaxBBoxNum = 1024;

[DllImport(MsnhnetLib, EntryPoint = "initMsnhnet")]

static extern int _initMsnhnet();

[DllImport(MsnhnetLib, EntryPoint = "dispose")]

static extern int _dispose();

[DllImport(MsnhnetLib, EntryPoint = "withGPU")]

static extern int _withGPU(ref int GPU);

[DllImport(MsnhnetLib, EntryPoint = "withCUDNN")]

static extern int _withCUDNN(ref int CUDNN);

[DllImport(MsnhnetLib, EntryPoint = "getInputDim")]

static extern int _getInputDim(ref int width, ref int height, ref int channel);

[DllImport(MsnhnetLib, EntryPoint = "getCpuForwardTime")]

static extern int _getCpuForwardTime(ref float cpuForwardTime);

[DllImport(MsnhnetLib, EntryPoint = "getGpuForwardTime")]

static extern int _getGpuForwardTime(ref float getGpuForwardTime);

[DllImport(MsnhnetLib, EntryPoint = "buildMsnhnet")]

static extern int _buildMsnhnet(ref IntPtr msg, string msnhnet, string msnhbin, int useFp16, int useCudaOnly);

[DllImport(MsnhnetLib, EntryPoint = "runClassifyFile")]

static unsafe extern int _runClassifyFile(ref IntPtr msg, string imagePath, ref int bestIndex, PredDataType predDataType,

int runGPU, float* mean, float* std);

[DllImport(MsnhnetLib, EntryPoint = "runClassifyList")]

static unsafe extern int _runClassifyList(ref IntPtr msg, byte* data, int width, int height, int channel, ref int bestIndex, PredDataType predDataType,

int swapRGB, int runGPU, float* mean, float* std);

[DllImport(MsnhnetLib, EntryPoint = "runClassifyNoPred")]

static unsafe extern int _runClassifyNoPred(ref IntPtr msg, float* data, int len, ref int bestIndex, int runGPU);

[StructLayout(LayoutKind.Sequential)]

public struct BBox

{

public float x;

public float y;

public float w;

public float h;

public float conf;

public float bestClsConf;

public UInt32 bestClsIdx;

public float angle;

};

[StructLayout(LayoutKind.Sequential)]

public struct BBoxContainer

{

[MarshalAs(UnmanagedType.ByValArray, SizeConst = MaxBBoxNum)]

public BBox[] boxes;

}

[DllImport(MsnhnetLib, EntryPoint = "runYoloFile")]

static extern int _runYoloFile(ref IntPtr msg, string imagePath, ref BBoxContainer bboxContainer, ref int detectedNum, int runGPU);

[DllImport(MsnhnetLib, EntryPoint = "runYoloList")]

static unsafe extern int _runYoloList(ref IntPtr msg, byte* data, int width, int height, int channel, ref BBoxContainer bboxContainer, ref int detectedNum, int swapRGB, int runGPU);

private bool netBuilt = false;

private bool netInited = false;

/// <summary>

/// check GPU

/// </summary>

/// <returns></returns>

static public bool WithGPU()

{

int GPU = 0;

_withGPU(ref GPU);

return (GPU == 1) ? true : false;

}

/// <summary>

/// check CUDNN

/// </summary>

/// <returns></returns>

static public bool WithCudnn()

{

int CUDNN = 0;

_withCUDNN(ref CUDNN);

return (CUDNN == 1) ? true : false;

}

/// <summary>

/// Get input dim

/// </summary>

/// <returns></returns>

public Dim GetInputDim()

{

if (netBuilt)

{

int width = 0;

int height = 0;

int channel = 0;

_getInputDim(ref width, ref height, ref channel);

Dim dim;

dim.width = width;

dim.height = height;

dim.channel = channel;

return dim;

}

else

{

throw new Exception("Net wasn't built yet");

}

}

/// <summary>

/// Get cpu forward time

/// </summary>

/// <returns></returns>

public float GetCpuForwardTime()

{

float time = 0;

_getCpuForwardTime(ref time);

return time;

}

/// <summary>

/// Get gpu forward time

/// </summary>

/// <returns></returns>

public float GetGpuForwardTime()

{

float time = 0;

if (_getGpuForwardTime(ref time) != 1)

{

throw new Exception("Msnhnet is not compiled with GPU mode!");

}

return time;

}

/// <summary>

/// init net

/// </summary>

public void InitNet()

{

if (_initMsnhnet() != 1)

{

throw new Exception("Init net Failed");

}

netInited = true;

}

/// <summary>

/// build net

/// </summary>

/// <param name="msnhnet">msnhnet file path</param>

/// <param name="msnhbin">msnhbin file path</param>

/// <param name="useFp16">use fp16 or not</param>

/// <param name="useCudaOnly">build with cudnn, but only use cuda</param>

public void BuildNet(string msnhnet, string msnhbin, bool useFp16, bool useCudaOnly)

{

if (!netInited)

{

throw new Exception("Net wasn't inited yet");

}

IntPtr msg = new IntPtr();

if (_buildMsnhnet(ref msg, msnhnet, msnhbin, useFp16 ? 1 : 0, useCudaOnly ? 1 : 0) != 1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

netBuilt = true;

}

/// <summary>

/// dispose net

/// </summary>

public void Dispose()

{

_dispose();

}

/// <summary>

/// forward net with image file, with preprocess

/// </summary>

/// <param name="imagePath">image file path</param>

/// <param name="predDataType">process function</param>

/// <param name="runGPU">run with GPU</param>

/// <param name="mean"> if normalize mean val </param>

/// <param name="std">if normalize std val</param>

/// <returns></returns>

public int RunClassifyFile(string imagePath, PredDataType predDataType, bool runGPU, float[] mean = null, float[] std = null)

{

if (!netBuilt)

{

throw new Exception("Net wasn't built yet");

}

IntPtr msg = new IntPtr();

int bestIndex = 0;

unsafe

{

if (predDataType == PredDataType.PRE_DATA_TRANSFORMED_FC3)

{

fixed (float* meanPtr = mean)

{

fixed (float* stdPtr = std)

{

if (_runClassifyFile(ref msg, imagePath, ref bestIndex, predDataType, runGPU ? 1 : 0, meanPtr, stdPtr) != 1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

}

}

}

else

{

if (_runClassifyFile(ref msg, imagePath, ref bestIndex, predDataType, runGPU ? 1 : 0, null, null) != 1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

}

}

return bestIndex;

}

/// <summary>

/// forward net with image BitmapData, with preprocess

/// </summary>

/// <param name="bitmap">data</param>

/// <param name="predDataType">process function</param>

/// <param name="swapRGB">net swap RGB or not</param>

/// <param name="runGPU">run with GPU</param>

/// <param name="mean"> if normalize mean val </param>

/// <param name="std">if normalize std val</param>

/// <returns></returns>

public int RunClassifyList(BitmapData bitmap, PredDataType predDataType, bool swapRGB, bool runGPU, float[] mean = null, float[] std = null)

{

if (!netBuilt)

{

throw new Exception("Net wasn't built yet");

}

IntPtr msg = new IntPtr();

int bestIndex = 0;

unsafe

{

if (predDataType == PredDataType.PRE_DATA_TRANSFORMED_FC3)

{

fixed (float* meanPtr = mean)

{

fixed (float* stdPtr = std)

{

if (_runClassifyList(ref msg, (byte*)bitmap.Scan0, bitmap.Width, bitmap.Height, bitmap.Stride / bitmap.Width, ref bestIndex, predDataType, swapRGB ? 1 : 0, runGPU ? 1 : 0, meanPtr, stdPtr) != 1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

}

}

}

else

{

if (_runClassifyList(ref msg, (byte*)bitmap.Scan0, bitmap.Width, bitmap.Height, bitmap.Stride / bitmap.Width, ref bestIndex, predDataType, swapRGB ? 1 : 0, runGPU ? 1 : 0, null, null) != 1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

}

}

return bestIndex;

}

/// <summary>

/// forward net with float data, without preprocess

/// </summary>

/// <param name="data">float data</param>

/// <param name="runGPU">run with GPU</param>

/// <returns></returns>

public int RunClassifyNoPred(float[] data, bool runGPU)

{

if (!netBuilt)

{

throw new Exception("Net wasn't built yet");

}

IntPtr msg = new IntPtr();

int bestIndex = 0;

unsafe

{

fixed (float* dataPtr = data)

{

if (_runClassifyNoPred(ref msg, dataPtr, data.Length, ref bestIndex, runGPU ? 1 : 0) != 1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

}

}

return bestIndex;

}

/// <summary>

/// run yolo

/// </summary>

/// <param name="imagePath">image path</param>

/// <param name="runGPU">run with GPU</param>

/// <returns></returns>

public List<BBox> RunYoloFile(string imagePath, bool runGPU)

{

List<BBox> bboxVec = new List<BBox>();

IntPtr msg = new IntPtr();

BBoxContainer bboxContainer = new BBoxContainer();

int detectNum = 0;

if (!netBuilt)

{

throw new Exception("Net wasn't built yet");

}

if (_runYoloFile(ref msg, imagePath, ref bboxContainer, ref detectNum, runGPU?1:0)!=1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

for (int i = 0; i < detectNum; i++)

{

bboxVec.Add(bboxContainer.boxes[i]);

}

return bboxVec;

}

public List<BBox> RunYoloList(BitmapData bitmap, bool swapRGB, bool runGPU)

{

if (!netBuilt)

{

throw new Exception("Net wasn't built yet");

}

List<BBox> bboxVec = new List<BBox>();

IntPtr msg = new IntPtr();

BBoxContainer bboxContainer = new BBoxContainer();

int detectNum = 0;

unsafe

{

if (_runYoloList(ref msg, (byte*)bitmap.Scan0, bitmap.Width, bitmap.Height, bitmap.Stride / bitmap.Width, ref bboxContainer, ref detectNum, swapRGB?1:0, runGPU?1:0) != 1)

{

string mstr = Marshal.PtrToStringAnsi(msg);

throw new Exception(mstr);

}

}

for (int i = 0; i < detectNum; i++)

{

bboxVec.Add(bboxContainer.boxes[i]);

}

return bboxVec;

}

}

}
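To make the memory layout behind `BBox` and `BBoxContainer` easier to see, the sketch below mirrors the two C# structs with Python's `ctypes`. It is only an illustration of the sequential layout declared in the wrapper (field order, types, and the 1024-box buffer). It does not load msnhnet.dll or call any Msnhnet function, and the helper `detected` is a hypothetical name.

```python
import ctypes

MAX_BBOX_NUM = 1024  # same value as MaxBBoxNum in the C# wrapper


class BBox(ctypes.Structure):
    # Field order and types follow the C# struct (LayoutKind.Sequential).
    _fields_ = [
        ("x", ctypes.c_float),
        ("y", ctypes.c_float),
        ("w", ctypes.c_float),
        ("h", ctypes.c_float),
        ("conf", ctypes.c_float),
        ("bestClsConf", ctypes.c_float),
        ("bestClsIdx", ctypes.c_uint32),
        ("angle", ctypes.c_float),
    ]


class BBoxContainer(ctypes.Structure):
    # Fixed-size inline array, like ByValArray with SizeConst = MaxBBoxNum.
    _fields_ = [("boxes", BBox * MAX_BBOX_NUM)]


def detected(container, count):
    """Return the first `count` boxes; the clamp to the buffer size is an addition in this sketch."""
    count = max(0, min(count, MAX_BBOX_NUM))
    return [container.boxes[i] for i in range(count)]


if __name__ == "__main__":
    print("bytes per box:", ctypes.sizeof(BBox))
    print("bytes per container:", ctypes.sizeof(BBoxContainer))
```

Because the container is a fixed-size buffer, the native side reports how many entries are valid through `detectedNum`, and the wrapper copies only that many boxes into the returned list.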

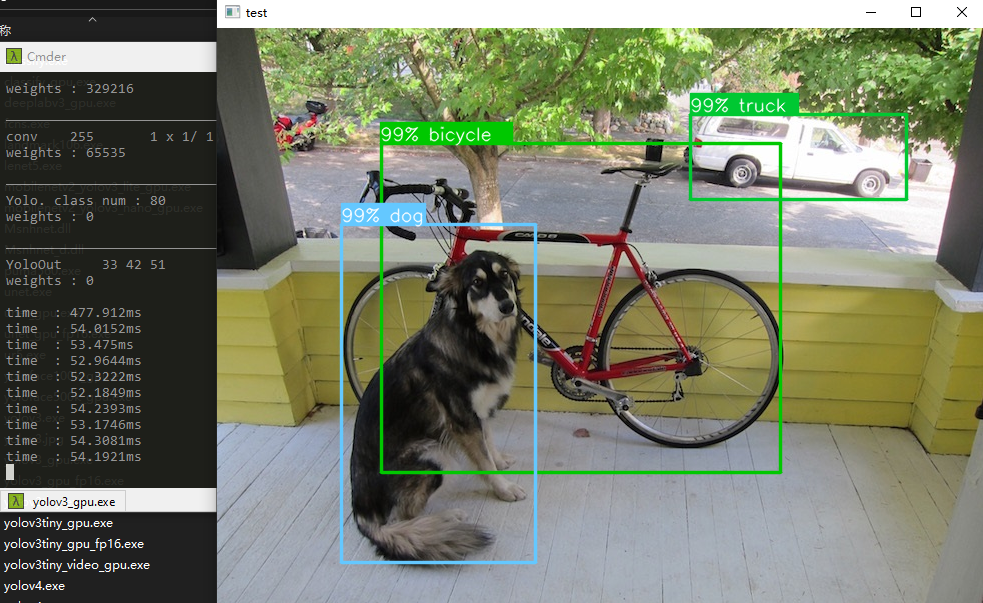

(3) Select the x64 platform and Release mode, right-click MsnhnetSharp and build it, then rebuild MsnhnetForm.

(4) Click the start button.

(5) In the parameter configuration bar, set msnhnetPath and msnhbinPath to the yolov5m files exported earlier, then make a copy of the earlier labels.txt file and name it labels.names.

(6) Click to initialize the network and wait until "init done" appears.

(7) Click to read a picture and select bus.jpg.

(8) Click Yolo GPU (Yolo Detect GPU). The first inference takes a long time.

(9) Click to reset the picture.

(10) Click Yolo GPU again. This time the inference time is normal.

This completes the C# deployment with MsnhnetSharp. You can use MsnhnetForm as a reference when deploying it in your own project.
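The labels.names file mentioned in the steps above is just a renamed copy of labels.txt. If you prefer to script that step, a minimal sketch follows. The function name `make_labels_names` is hypothetical, and the sketch assumes the copy should sit next to the original file unless another folder is given.

```python
import shutil
from pathlib import Path


def make_labels_names(labels_txt, out_dir=None):
    """Copy labels.txt to labels.names and return the new path.

    Hypothetical helper; by default the copy is placed next to the source file.
    """
    src = Path(labels_txt)
    dst = (Path(out_dir) if out_dir else src.parent) / "labels.names"
    shutil.copyfile(src, dst)
    return dst


if __name__ == "__main__":
    try:
        print("created", make_labels_names("labels.txt"))
    except FileNotFoundError:
        print("labels.txt not found in the current folder")
```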

5.Deploy Msnhnet using CMake

Project file source:

Links:https://pan.baidu.com/s/1lpyNNdYqdKj8R-RCQwwCWg Extraction Code: 6agk

(1) Create a new MsnhnetPrj folder

(2) Copy yolov5m.msnhnet, yolov5m.msnhbin, and labels.txt into the MsnhnetPrj folder

(3) Create a new CMakeLists.txt file

cmake_minimum_required(VERSION 3.15)

project(yolov5m_msnhnet

LANGUAGES CXX C CUDA

VERSION 1.0)

find_package(OpenCV REQUIRED)

find_package(Msnhnet REQUIRED)

find_package(OpenMP REQUIRED)

add_executable(yolov5m_msnhnet yolov5m_msnhnet.cpp)

target_include_directories(yolov5m_msnhnet PUBLIC ${Msnhnet_INCLUDE_DIR})

target_link_libraries(yolov5m_msnhnet PUBLIC ${OpenCV_LIBS} Msnhnet)

(4) Create a new yolov5m_msnhnet.cpp file

#include <iostream>

#include "Msnhnet/net/MsnhNetBuilder.h"

#include "Msnhnet/io/MsnhIO.h"

#include "Msnhnet/config/MsnhnetCfg.h"

#include "Msnhnet/utils/MsnhOpencvUtil.h"

void yolov5sGPUOpencv(const std::string& msnhnetPath, const std::string& msnhbinPath, const std::string& imgPath, const std::string& labelsPath)

{

try

{

Msnhnet::NetBuilder msnhNet;

Msnhnet::NetBuilder::setOnlyGpu(true);

//msnhNet.setUseFp16(true); //Turn on inference using FP16

msnhNet.buildNetFromMsnhNet(msnhnetPath);

std::cout<<msnhNet.getLayerDetail();

msnhNet.loadWeightsFromMsnhBin(msnhbinPath);

std::vector<std::string> labels ;

Msnhnet::IO::readVectorStr(labels, labelsPath.data(), "\n");

Msnhnet::Point2I inSize = msnhNet.getInputSize();

std::vector<float> img;

std::vector<std::vector<Msnhnet::YoloBox>> result;

img = Msnhnet::OpencvUtil::getPaddingZeroF32C3(imgPath, cv::Size(inSize.x,inSize.y));

for (size_t i = 0; i < 10; i++)

{

auto st = Msnhnet::TimeUtil::startRecord();

result = msnhNet.runYoloGPU(img);

std::cout<<"time : " << Msnhnet::TimeUtil::getElapsedTime(st) <<"ms"<<std::endl<<std::flush;

}

cv::Mat org = cv::imread(imgPath);

Msnhnet::OpencvUtil::drawYoloBox(org,labels,result,inSize);

cv::imshow("test",org);

cv::waitKey();

}

catch (Msnhnet::Exception& ex) // catch by reference to avoid copying the exception

{

std::cout<<ex.what()<<" "<<ex.getErrFile() << " " <<ex.getErrLine()<< " "<<ex.getErrFun()<<std::endl;

}

}

int main(int argc, char** argv)

{

std::string msnhnetPath = "yolov5m.msnhnet";

std::string msnhbinPath = "yolov5m.msnhbin";

std::string labelsPath = "labels.txt";

std::string imgPath = "bus.jpg";

yolov5sGPUOpencv(msnhnetPath, msnhbinPath, imgPath,labelsPath);

getchar();

return 0;

}

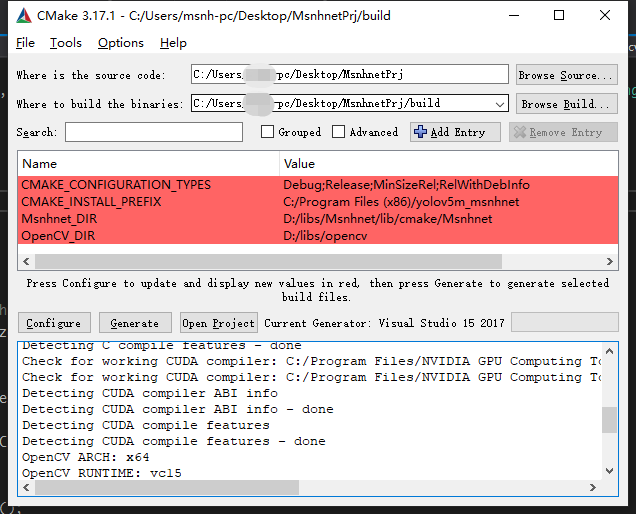

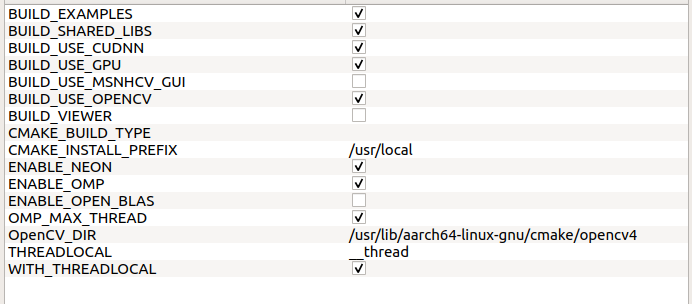

(5) Configure CMake

Open cmake-gui.exe and configure it as follows. Click Configure, then Generate.

(6) Click Open Project, select Release mode, and build the project the same way Msnhnet was built earlier.

(7) Copy the executable.

Copy from MsnhnetPrj/build/Release/yolov5m_msnhnet.exe to the MsnhnetPrj directory.

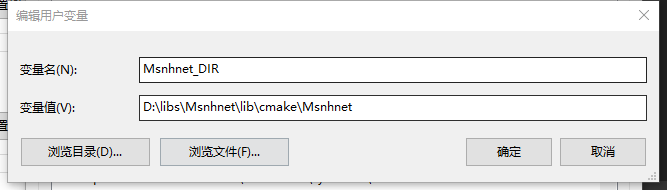

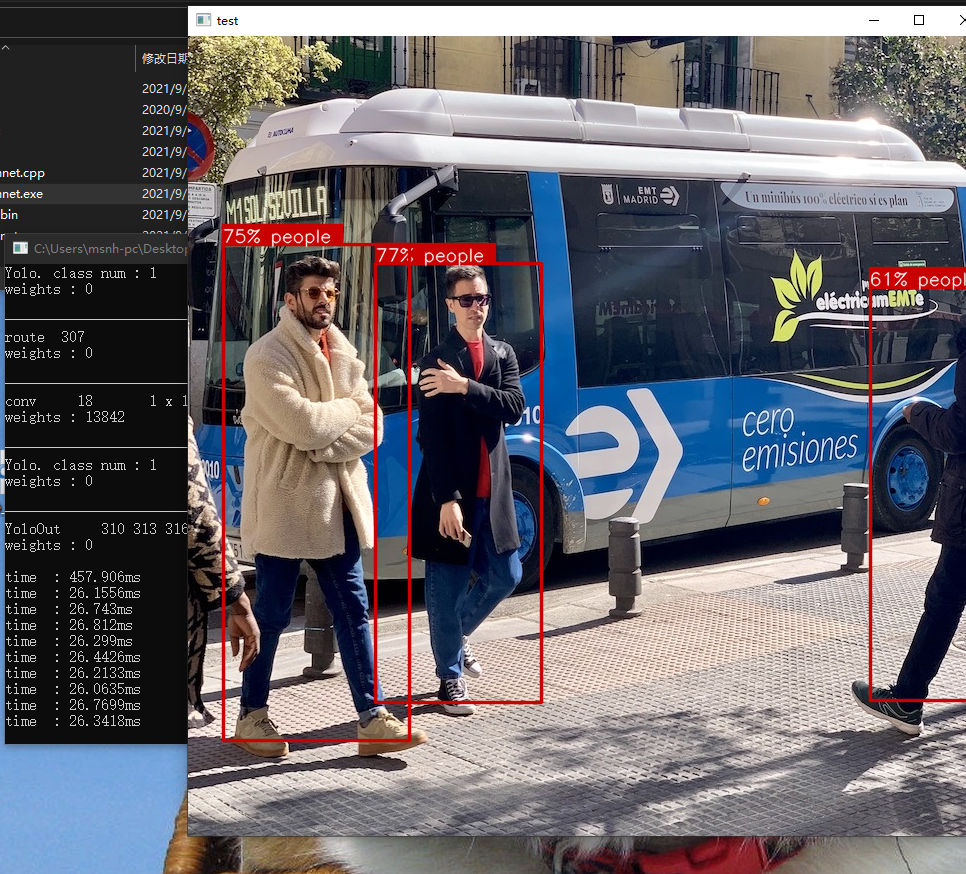

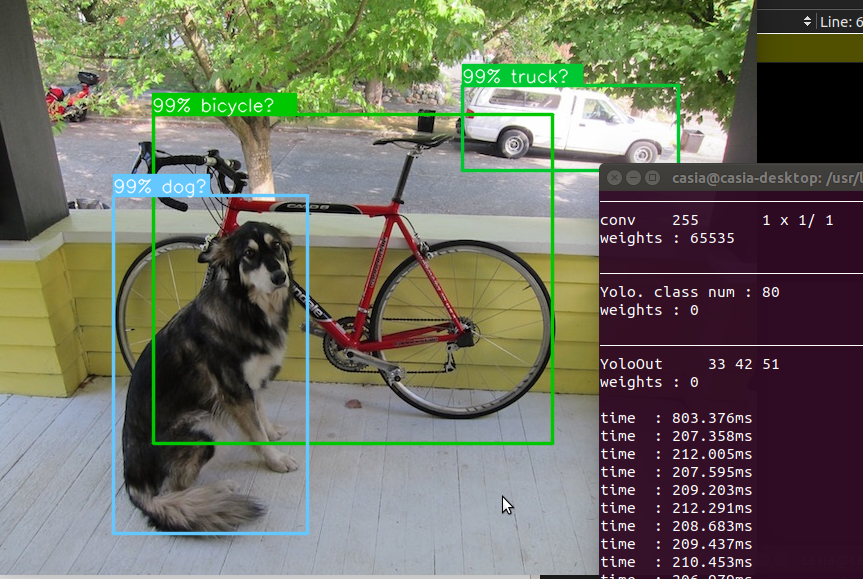

(8) Deployment results

Double-click yolov5m_msnhnet.exe to view the deployment results
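The program prints one timing line per run, and as noted for the C# demo, the first inference is much slower than the following ones. When comparing timings it is therefore common to discard the first run as a warm-up. The sketch below shows that arithmetic. The function name `steady_state_ms` and the sample numbers are made up for illustration and are not measurements from this article.

```python
from statistics import mean


def steady_state_ms(times_ms, warmup=1):
    """Average the timings after dropping the first `warmup` runs."""
    if warmup < 0:
        raise ValueError("warmup must be non-negative")
    rest = list(times_ms)[warmup:]
    if not rest:
        raise ValueError("no measurements left after discarding warm-up runs")
    return mean(rest)


if __name__ == "__main__":
    # Hypothetical timings in milliseconds; the first run includes one-time setup cost.
    samples = [100.0, 10.0, 12.0, 14.0]
    print("all runs:", mean(samples), "ms")
    print("without warm-up:", steady_state_ms(samples), "ms")
```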

Linux (Jetson NX)

1.Preparation

In general, Jetson already has its own cuda and cudnn, so there is no need to install them.

- Install build tools

sudo apt-get install build-essential

- Install opencv

sudo apt-get install libopencv

2.Compile Msnhnet Library

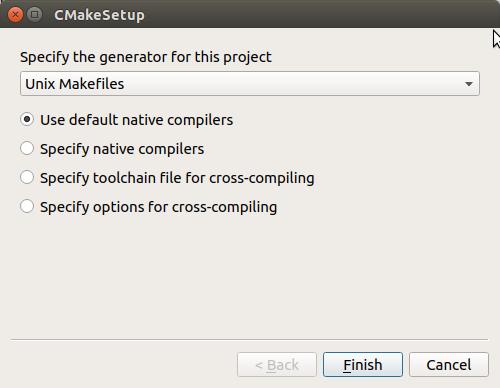

(1) Open cmake-gui from the terminal.

(2) Click Configure, select the Unix Makefiles generator, click Finish, and wait for the configuration to complete.

(3) Check the following parameters.

- CMAKE_INSTALL_PREFIX #Specify the installation location, such as: /usr/local

- BUILD_EXAMPLE #Build the examples

- BUILD_SHARED_LIBS #Build a dynamic link library

- BUILD_USE_CUDNN #Use CUDNN

- BUILD_USE_GPU #Use GPU

- BUILD_USE_NEON #Use NEON acceleration

- BUILD_USE_OPENCV #Use OPENCV

- ENABLE_OMP #Use OMP

- OMP_MAX_THREAD #Use the maximum number of cores

(4) Click Generate and wait for "Generating done".

(5) Open a terminal in the Msnhnet/build folder and build.

make -j

(6) Install.

sudo make install

(7) Configure the system library search path.

sudo gedit /etc/ld.so.conf.d/usr.conf # Add: /usr/local/lib

sudo ldconfig

(8) Testing.

- Download the set of Msnhnet test models prepared by the author and unzip them to a directory such as your home directory.

Links:https://pan.baidu.com/s/1mBaJvGx7tp2ZsLKzT5ifOg

Extraction Code: x53z

cd /usr/local/bin

yolov3_gpu /home/xxx/models

yolov3_gpu_fp16 /home/xxx/models #fp16 inference

- Of course, you can test other models.
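If the test programs fail to start because the shared library cannot be found, it is worth checking whether the dynamic linker can see it after running ldconfig. The sketch below uses `ctypes.util.find_library` from the Python standard library. The library name `Msnhnet` is an assumption based on the target name used when linking, and `library_visible` is a hypothetical helper.

```python
import ctypes.util


def library_visible(name):
    """Return True if the system library search can locate a library called `name`."""
    return ctypes.util.find_library(name) is not None


if __name__ == "__main__":
    # "Msnhnet" is assumed to match the installed library (for example libMsnhnet.so).
    for lib in ("Msnhnet", "opencv_core"):
        print(lib, "found" if library_visible(lib) else "not found")
```

If the library is reported as not found, recheck that /usr/local/lib is listed in a file under /etc/ld.so.conf.d and run sudo ldconfig again.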

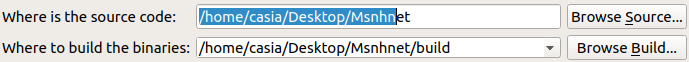

3.Deploy Msnhnet using CMake

Project file source:

Links:https://pan.baidu.com/s/1lpyNNdYqdKj8R-RCQwwCWg Extraction Code: 6agk

(1) Create a new MsnhnetPrj folder

(2) Copy yolov5m.msnhnet, yolov5m.msnhbin, and labels.txt into the MsnhnetPrj folder

(3) Create a new CMakeLists.txt file

cmake_minimum_required(VERSION 3.15)

project(yolov5m_msnhnet

LANGUAGES CXX C CUDA

VERSION 1.0)

find_package(OpenCV REQUIRED)

find_package(Msnhnet REQUIRED)

find_package(OpenMP REQUIRED)

add_executable(yolov5m_msnhnet yolov5m_msnhnet.cpp)

target_include_directories(yolov5m_msnhnet PUBLIC ${Msnhnet_INCLUDE_DIR})

target_link_libraries(yolov5m_msnhnet PUBLIC ${OpenCV_LIBS} Msnhnet)

(4) Create a new yolov5m_msnhnet.cpp file

#include <iostream>

#include "Msnhnet/net/MsnhNetBuilder.h"

#include "Msnhnet/io/MsnhIO.h"

#include "Msnhnet/config/MsnhnetCfg.h"

#include "Msnhnet/utils/MsnhOpencvUtil.h"

void yolov5sGPUOpencv(const std::string& msnhnetPath, const std::string& msnhbinPath, const std::string& imgPath, const std::string& labelsPath)

{

try

{

Msnhnet::NetBuilder msnhNet;

Msnhnet::NetBuilder::setOnlyGpu(true);

//msnhNet.setUseFp16(true); //Turn on inference using FP16

msnhNet.buildNetFromMsnhNet(msnhnetPath);

std::cout<<msnhNet.getLayerDetail();

msnhNet.loadWeightsFromMsnhBin(msnhbinPath);

std::vector<std::string> labels ;

Msnhnet::IO::readVectorStr(labels, labelsPath.data(), "\n");

Msnhnet::Point2I inSize = msnhNet.getInputSize();

std::vector<float> img;

std::vector<std::vector<Msnhnet::YoloBox>> result;

img = Msnhnet::OpencvUtil::getPaddingZeroF32C3(imgPath, cv::Size(inSize.x,inSize.y));

for (size_t i = 0; i < 10; i++)

{

auto st = Msnhnet::TimeUtil::startRecord();

result = msnhNet.runYoloGPU(img);

std::cout<<"time : " << Msnhnet::TimeUtil::getElapsedTime(st) <<"ms"<<std::endl<<std::flush;

}

cv::Mat org = cv::imread(imgPath);

Msnhnet::OpencvUtil::drawYoloBox(org,labels,result,inSize);

cv::imshow("test",org);

cv::waitKey();

}

catch (Msnhnet::Exception& ex) // catch by reference to avoid copying the exception

{

std::cout<<ex.what()<<" "<<ex.getErrFile() << " " <<ex.getErrLine()<< " "<<ex.getErrFun()<<std::endl;

}

}

int main(int argc, char** argv)

{

std::string msnhnetPath = "../yolov5m.msnhnet";

std::string msnhbinPath = "../yolov5m.msnhbin";

std::string labelsPath = "../labels.txt";

std::string imgPath = "../bus.jpg";

yolov5sGPUOpencv(msnhnetPath, msnhbinPath, imgPath,labelsPath);

getchar();

return 0;

}

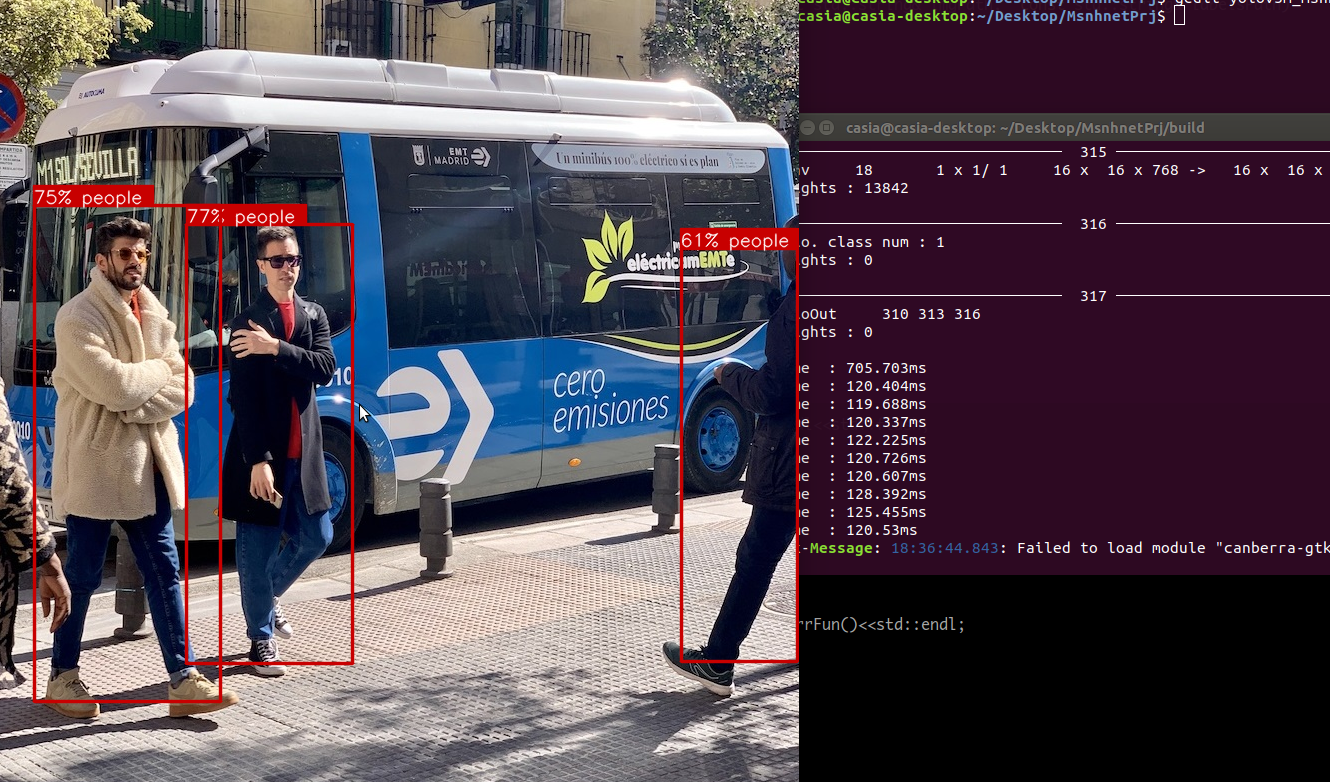

(5) Open a terminal in the MsnhnetPrj folder, then compile and run

mkdir build

cd build

cmake ..

make

./yolov5m_msnhnet

(6) Deployment results

Linux (PC)

Deployment on a Linux PC is similar to Jetson NX. The main difference is that you must first install and configure CUDA and cuDNN on Linux, then uninstall the existing CMake and install CMake 3.17. Everything else is the same as on Jetson NX. (Note: the CMake options do not include the NEON item, which is exclusive to the ARM platform.)

This completes deploying the YOLOv5 network with Msnhnet from scratch.

Finally

- You are welcome to follow Msnhnet, a deep learning framework that I maintain together with Buff and the partners of our WeChat official account:
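Whether the existing CMake needs to be replaced depends on its version: the CMakeLists.txt used in this article requires at least 3.15. The sketch below parses the first line printed by `cmake --version` and compares it with that minimum. The function names are hypothetical, and the sample strings in the usage part are examples rather than output captured from a real machine.

```python
import re


def parse_cmake_version(output):
    """Extract (major, minor, patch) from `cmake --version` output, or None."""
    match = re.search(r"cmake version (\d+)\.(\d+)(?:\.(\d+))?", output)
    if not match:
        return None
    return tuple(int(part) if part else 0 for part in match.groups())


def meets_minimum(output, minimum=(3, 15, 0)):
    """True if the reported version is at least `minimum` (3.15 per CMakeLists.txt)."""
    version = parse_cmake_version(output)
    return version is not None and version >= minimum


if __name__ == "__main__":
    for sample in ("cmake version 3.10.2", "cmake version 3.17.5"):
        print(sample, "->", "ok" if meets_minimum(sample) else "too old")
```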

- https://github.com/msnh2012/Msnhnet