Netty Quick Start Best Practices

Official user guide: https://netty.io/wiki/user-guide-for-4.x.html


Preface

This article uses a Spring Boot environment. You can set up your own environment or adapt parts of the code to fit the one you already have.

You should also have a basic understanding of Netty's core concepts; my other articles on Netty are a good place to start. Some concepts that come up here are only mentioned briefly and are not explained in depth.

Netty Architecture Overview

Write your Netty service

First, you need to build a Netty service that exposes your communication address. Since the end goal is a simple WebSocket service, the class is called `WebsocketServer`.

By implementing `ApplicationListener<ContextRefreshedEvent>`, we start the Netty service once the Spring IoC container has finished initializing its beans.

```java
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.*;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;
import io.netty.util.concurrent.DefaultThreadFactory;
import io.netty.util.internal.SystemPropertyUtil;
import lombok.extern.slf4j.Slf4j;
import org.springframework.context.ApplicationListener;
import org.springframework.context.event.ContextRefreshedEvent;
import org.springframework.stereotype.Component;

import javax.annotation.PreDestroy;

/**
 * @author: wangzibin
 **/
@Slf4j
@Component
public class WebsocketServer implements ApplicationListener<ContextRefreshedEvent> {

    private static final int WEBSOCKET_BACKLOG = SystemPropertyUtil.getInt("star.websocket.backlog", 200);
    private static final int WEBSOCKET_PORT = SystemPropertyUtil.getInt("star.websocket.port", 18680);

    private ServerBootstrap serverBootstrap;
    private EventLoopGroup bossGroup;
    private EventLoopGroup workerGroup;
    private Channel serverChannel;

    @Override
    public void onApplicationEvent(ContextRefreshedEvent event) {
        // ContextRefreshedEvent can fire more than once (e.g. parent/child contexts): bind only once
        if (serverChannel != null) {
            return;
        }
        log.info("Starting WebSocketServer on port {}", WEBSOCKET_PORT);
        long startMillis = System.currentTimeMillis();
        // One boss thread accepts incoming connections and hands them over to the worker group
        bossGroup = new NioEventLoopGroup(1, new DefaultThreadFactory("WebsocketBoss"));
        // 0 = Netty's default (2 x CPU cores); worker threads handle I/O on accepted connections
        workerGroup = new NioEventLoopGroup(0, new DefaultThreadFactory("WebsocketWorker"));
        serverBootstrap = new ServerBootstrap()
                .group(bossGroup, workerGroup)
                // NioServerSocketChannel is the server-side channel implementation
                .channel(NioServerSocketChannel.class)
                // Max length of the OS queue of established connections not yet accepted by the boss
                // thread; when it is full, new connection attempts may be refused or dropped
                .option(ChannelOption.SO_BACKLOG, WEBSOCKET_BACKLOG)
                // Disable Nagle's algorithm on accepted connections so small packets are sent at once
                .childOption(ChannelOption.TCP_NODELAY, true)
                // Log events of the listening (server) channel
                .handler(new LoggingHandler(LogLevel.TRACE))
                .childHandler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    protected void initChannel(SocketChannel ch) {
                        ChannelPipeline pipeline = ch.pipeline();
                        // The pipeline is where custom processing logic is added
                        pipeline.addLast(new DiscardServerHandler());
                    }
                });
        ChannelFuture channelFuture = serverBootstrap.bind(WEBSOCKET_PORT).syncUninterruptibly();
        serverChannel = channelFuture.channel();
        log.info("WebSocketServer started in {} ms", System.currentTimeMillis() - startMillis);
    }

    @PreDestroy
    public void close() {
        log.info("Closing WebSocketServer");
        long startMillis = System.currentTimeMillis();
        try {
            if (serverChannel != null) {
                serverChannel.close().syncUninterruptibly();
            }
        } catch (Throwable e) {
            log.warn("serverChannel close error!", e);
        }
        try {
            if (bossGroup != null) {
                bossGroup.shutdownGracefully();
            }
            if (workerGroup != null) {
                workerGroup.shutdownGracefully();
            }
        } catch (Throwable e) {
            log.warn("close eventLoopGroup error!", e);
        }
        log.info("WebSocketServer closed in {} ms", System.currentTimeMillis() - startMillis);
    }
}
```
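A note on the `0` passed to the worker `NioEventLoopGroup`: as far as I know, Netty 4.1 treats 0 as "use the default". That default is twice the number of available processors, can be overridden with the `io.netty.eventLoopThreads` system property, and never drops below 1. The JDK-only sketch below restates that rule so you can predict the thread count on a given machine. It illustrates the rule and is not Netty's own code. The class and method names are hypothetical.

```java
public class EventLoopThreads {

    /** Restates Netty 4.1's default: 2 x processors, overridable by a system property, minimum 1. */
    static int defaultThreads(int availableProcessors, String override) {
        int fallback = availableProcessors * 2;
        int configured = fallback;
        if (override != null) {
            try {
                configured = Integer.parseInt(override.trim());
            } catch (NumberFormatException e) {
                configured = fallback; // an unparsable value falls back to the default
            }
        }
        return Math.max(1, configured);
    }

    /** A positive request is used as-is; 0 means "use the default". */
    static int resolve(int requested, int availableProcessors, String override) {
        return requested == 0 ? defaultThreads(availableProcessors, override) : requested;
    }

    public static void main(String[] args) {
        int cpus = Runtime.getRuntime().availableProcessors();
        String override = System.getProperty("io.netty.eventLoopThreads");
        System.out.println("boss threads:   " + resolve(1, cpus, override));
        System.out.println("worker threads: " + resolve(0, cpus, override));
    }
}
```

On a 4-core machine without the property set, this prints 1 boss thread and 8 worker threads.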

Write a discard handler

We have now taken a crucial step: our Netty service is up and running. It's time to handle the messages it receives.

For a Netty service, the simplest program is not "Hello World" but one that does nothing at all. So let's start with a discard handler that silently drops everything it receives:

```java
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

/**
 * Discard handler for basic testing: every received message is thrown away
 */
public class DiscardServerHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        // Do nothing with the data, just release it
        ((ByteBuf) msg).release();
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        // Called when Netty hits an I/O error or a handler throws an exception.
        // Usually you log the exception here and/or close the connection
        cause.printStackTrace();
        ctx.close();
    }
}
```

Note the custom handler we added to the `ChannelPipeline`. The pipeline handles data in two directions, inbound and outbound, and the direction is relative to your application: inbound data arrives from the peer, while outbound data is what you send. To process incoming or outgoing messages, we usually extend or implement one of the classes or interfaces Netty provides. Here we extend `ChannelInboundHandlerAdapter` and override its methods.
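The toy pipeline below, written in plain Java, makes the two directions concrete. It does not reproduce Netty's implementation. Inbound events such as a read pass through the inbound handlers from head to tail. An outbound write issued on the channel passes through the outbound handlers from tail to head. The handler names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class PipelineModel {

    enum Direction { INBOUND, OUTBOUND }

    static final class Handler {
        final String name;
        final Direction direction;

        Handler(String name, Direction direction) {
            this.name = name;
            this.direction = direction;
        }
    }

    private final List<Handler> handlers = new ArrayList<>();

    PipelineModel addLast(String name, Direction direction) {
        handlers.add(new Handler(name, direction));
        return this;
    }

    /** Inbound events (e.g. channelRead) visit inbound handlers from head to tail. */
    List<String> fireChannelRead() {
        List<String> visited = new ArrayList<>();
        for (Handler h : handlers) {
            if (h.direction == Direction.INBOUND) visited.add(h.name);
        }
        return visited;
    }

    /** Outbound operations issued on the channel (e.g. write) visit outbound handlers from tail to head. */
    List<String> write() {
        List<String> visited = new ArrayList<>();
        for (int i = handlers.size() - 1; i >= 0; i--) {
            Handler h = handlers.get(i);
            if (h.direction == Direction.OUTBOUND) visited.add(h.name);
        }
        return visited;
    }

    public static void main(String[] args) {
        PipelineModel pipeline = new PipelineModel()
                .addLast("frameDecoder", Direction.INBOUND)
                .addLast("frameEncoder", Direction.OUTBOUND)
                .addLast("messageDecoder", Direction.INBOUND)
                .addLast("messageEncoder", Direction.OUTBOUND)
                .addLast("business", Direction.INBOUND);
        System.out.println("read:  " + pipeline.fireChannelRead()); // read:  [frameDecoder, messageDecoder, business]
        System.out.println("write: " + pipeline.write());           // write: [messageEncoder, frameEncoder]
    }
}
```

One difference from real Netty: `ctx.writeAndFlush()` starts from the calling handler's position rather than from the tail, so it skips outbound handlers that were added after that handler.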

`ByteBuf` is a reference-counted object that must be released explicitly through its `release()` method. Remember that a handler is responsible for releasing any reference-counted object passed to it, unless it passes the object on to the next handler. Typically, `channelRead()` is implemented as follows:

```java
@Override
public void channelRead(ChannelHandlerContext ctx, Object msg) {
    try {
        // Do something with msg
    } finally {
        // io.netty.util.ReferenceCountUtil
        ReferenceCountUtil.release(msg);
    }
}
```
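The following toy model, using only the JDK, makes the ownership rule concrete. A new object starts with a count of 1. `retain()` increments the count, and `release()` decrements it and frees the object when it reaches 0. Releasing an object that has already been freed is an error. Netty signals that error with `IllegalReferenceCountException`, which is a subclass of `IllegalStateException`. `ToyBuffer` is a hypothetical name, and Netty's real buffers are considerably more involved.

```java
public class ToyBuffer {

    private int refCnt = 1;           // a freshly allocated buffer has exactly one owner
    private boolean deallocated;

    public synchronized ToyBuffer retain() {
        if (refCnt == 0) throw new IllegalStateException("refCnt: 0, cannot retain");
        refCnt++;
        return this;
    }

    /** Returns true if this call dropped the count to 0 and freed the buffer. */
    public synchronized boolean release() {
        if (refCnt == 0) throw new IllegalStateException("refCnt: 0, already released");
        refCnt--;
        if (refCnt == 0) {
            deallocated = true;       // a pooled Netty buffer would return its memory to the pool here
            return true;
        }
        return false;
    }

    public synchronized int refCnt() { return refCnt; }

    public synchronized boolean isDeallocated() { return deallocated; }

    public static void main(String[] args) {
        ToyBuffer msg = new ToyBuffer();
        msg.retain();                        // e.g. handed to a second consumer
        System.out.println(msg.release());   // false: one owner still holds it
        System.out.println(msg.release());   // true: the last owner freed it
        try {
            msg.release();                   // a double release is a bug
        } catch (IllegalStateException e) {
            System.out.println("error: " + e.getMessage());
        }
    }
}
```

Forgetting the final `release()` leaks memory, and releasing an object one time too many fails loudly. This is why every consuming handler needs the `try`/`finally` pattern.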

The service now runs and receives messages under your control. Because it throws every message away, though, it can't do anything useful yet. Let's modify `channelRead` to print the data it receives:

```java
@Override
public void channelRead(ChannelHandlerContext ctx, Object msg) {
    ByteBuf in = (ByteBuf) msg;
    try {
        // Print byte by byte while readable bytes remain
        while (in.isReadable()) {
            System.out.print((char) in.readByte());
            System.out.flush();
        }
    } finally {
        // This handler consumed the message, so it must release it
        ReferenceCountUtil.release(msg);
    }
}
```
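One caveat about `(char) in.readByte()` is that it turns each byte into one character, which is only correct for ASCII. A multi-byte UTF-8 character, such as any Chinese text, gets split into several meaningless characters. The JDK-only sketch below compares the two approaches on plain `byte[]` arrays. With a Netty `ByteBuf`, `in.toString(CharsetUtil.UTF_8)` decodes all readable bytes in one call. A character can still be cut in half if it straddles two TCP reads, but that is a framing issue rather than a decoding one.

```java
import java.nio.charset.StandardCharsets;

public class ByteDecoding {

    /** What the loop above does: each byte becomes one char. */
    static String perByte(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            sb.append((char) b); // a byte >= 0x80 is negative and sign-extends into U+FF80..U+FFFF
        }
        return sb.toString();
    }

    /** Decodes all bytes at once with an explicit charset. */
    static String utf8(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] ascii = "HelloServer".getBytes(StandardCharsets.UTF_8);
        byte[] chinese = "\u4f60\u597d".getBytes(StandardCharsets.UTF_8); // 2 characters, 6 bytes

        System.out.println(perByte(ascii).equals(utf8(ascii)));   // true
        System.out.println(perByte(chinese).length());            // 6
        System.out.println(utf8(chinese).equals("\u4f60\u597d")); // true
    }
}
```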

Write the client

Our service now looks ready. How do we verify that it actually behaves as intended? Let's write a client that sends data. Its logic is similar to the server's, with a few small differences.

```java
import io.netty.bootstrap.Bootstrap;
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.*;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

/**
 * @author: wangzibin
 **/
public class NettyClient {

    static class NettyClientHandler extends ChannelInboundHandlerAdapter {

        /**
         * Triggered once the client has connected to the server
         */
        @Override
        public void channelActive(ChannelHandlerContext ctx) {
            for (int i = 0; i < 10; i++) {
                // Send "HelloServer" straight to the server
                ByteBuf buf = Unpooled.copiedBuffer("HelloServer", CharsetUtil.UTF_8);
                ctx.writeAndFlush(buf);
            }
        }

        // Triggered on a read event, i.e. when the server sends data to the client
        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) {
            ByteBuf buf = (ByteBuf) msg;
            try {
                System.out.println("Received a message from the server: " + buf.toString(CharsetUtil.UTF_8));
                System.out.println("Server address: " + ctx.channel().remoteAddress());
            } finally {
                // This handler is the last consumer of the buffer, so it releases it
                ReferenceCountUtil.release(msg);
            }
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
            cause.printStackTrace();
            ctx.close();
        }
    }

    public static void main(String[] args) throws Exception {
        // The client needs only one event loop group
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            // Note that the client uses Bootstrap instead of ServerBootstrap
            Bootstrap bootstrap = new Bootstrap();
            bootstrap.group(group)                     // set the thread group
                    .channel(NioSocketChannel.class)  // client-side channel implementation
                    .handler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel channel) {
                            // Add the handler
                            channel.pipeline().addLast(new NettyClientHandler());
                        }
                    });
            System.out.println("netty client start");
            // Connect to the server and wait until the connection is established
            ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 18680).sync();
            // Block until the channel is closed
            channelFuture.channel().closeFuture().sync();
        } finally {
            group.shutdownGracefully();
        }
    }
}
```

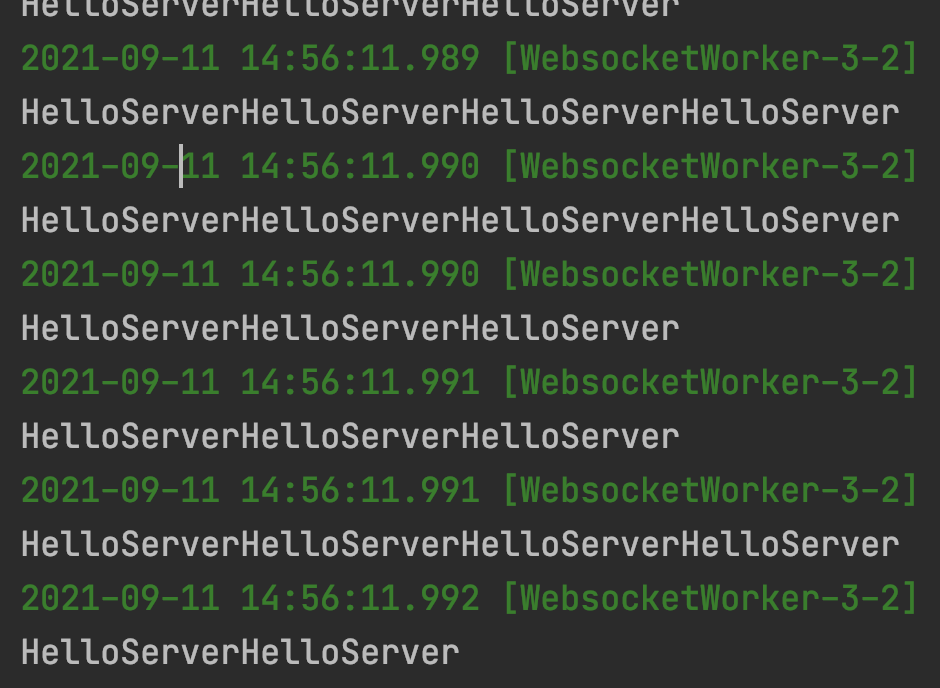

Start the server first, then run the client. The server console prints the "HelloServer" strings sent by the client, but there is an obvious problem. TCP is a byte stream with no message boundaries, so several "HelloServer" messages arrive glued together (the so-called sticky packet problem).

There are many ways to handle sticky and split packets, such as a length field, a fixed frame length, or a delimiter. Choose the one that fits your business scenario. Usually we write a dedicated codec for this; here we only demonstrate a simple approach.
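The length-field approach can be sketched with the JDK alone. Each message is prefixed with a 4-byte big-endian length, and the receiver buffers incoming chunks until a whole frame is available. The class and method names are hypothetical. In Netty you would normally use the built-in `LengthFieldPrepender` and `LengthFieldBasedFrameDecoder` rather than writing this by hand.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LengthPrefixFraming {

    /** Sender side: a 4-byte big-endian length, then the UTF-8 payload. */
    static byte[] frame(String message) {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + payload.length).putInt(payload.length).put(payload).array();
    }

    /** Simulates TCP merging several writes into one byte stream. */
    static byte[] coalesce(String message, int times) {
        ByteArrayOutputStream stream = new ByteArrayOutputStream();
        for (int i = 0; i < times; i++) {
            byte[] f = frame(message);
            stream.write(f, 0, f.length);
        }
        return stream.toByteArray();
    }

    /** Receiver side: buffers arbitrary chunks and emits only complete frames. */
    static final class Decoder {
        private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

        List<String> feed(byte[] chunk) {
            pending.write(chunk, 0, chunk.length);
            ByteBuffer buf = ByteBuffer.wrap(pending.toByteArray());
            List<String> out = new ArrayList<>();
            while (buf.remaining() >= 4) {
                int length = buf.getInt(buf.position()); // peek at the length field
                if (length < 0) throw new IllegalStateException("negative frame length: " + length);
                if (buf.remaining() - 4 < length) break;  // frame incomplete, wait for more bytes
                buf.position(buf.position() + 4);
                byte[] payload = new byte[length];
                buf.get(payload);
                out.add(new String(payload, StandardCharsets.UTF_8));
            }
            pending.reset();                              // keep only the unconsumed tail
            pending.write(buf.array(), buf.position(), buf.remaining());
            return out;
        }
    }

    /** Feeds a stream to a fresh decoder in fixed-size chunks. */
    static List<String> deliverInChunks(byte[] stream, int chunkSize) {
        Decoder decoder = new Decoder();
        List<String> messages = new ArrayList<>();
        for (int off = 0; off < stream.length; off += chunkSize) {
            int end = Math.min(off + chunkSize, stream.length);
            messages.addAll(decoder.feed(Arrays.copyOfRange(stream, off, end)));
        }
        return messages;
    }

    public static void main(String[] args) {
        byte[] stream = coalesce("HelloServer", 10);           // 10 x (4 + 11) = 150 bytes
        System.out.println(deliverInChunks(stream, 7).size()); // 10
    }
}
```

Delivering the same 150-byte stream in 1-, 7-, or 150-byte chunks yields the same ten messages. TCP alone does not give you that property.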

Upgrade your service: add a codec

Netty ships with many ready-to-use codecs. By extending them we can reuse their behavior directly and focus on business logic.

Add a `HelloServerReplayingDecoder`. Here we extend `ReplayingDecoder` directly to take advantage of its features:

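```java
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;

import java.util.List;

/**
 * @author: wangzibin
 **/
public class HelloServerReplayingDecoder extends ReplayingDecoder<Void> {

    // "HelloServer" encoded as UTF-8 is exactly 11 bytes
    private static final int FRAME_LENGTH = 11;

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) {
        // If fewer than 11 bytes have arrived, ReplayingDecoder rewinds and retries when more data comes in
        out.add(in.readBytes(FRAME_LENGTH));
    }
}
```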

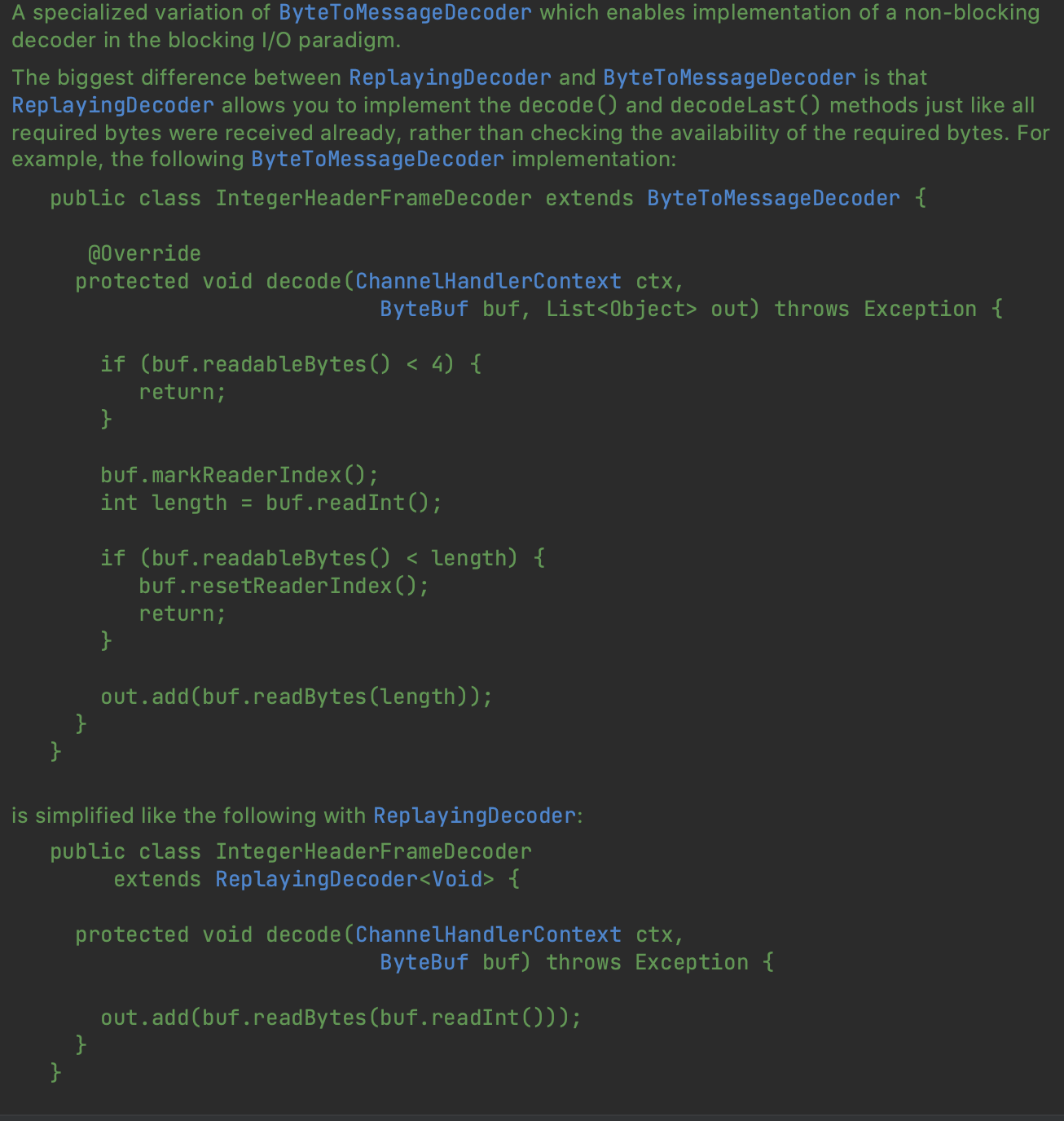

Here is a brief look at how Netty's authors describe `ReplayingDecoder`:

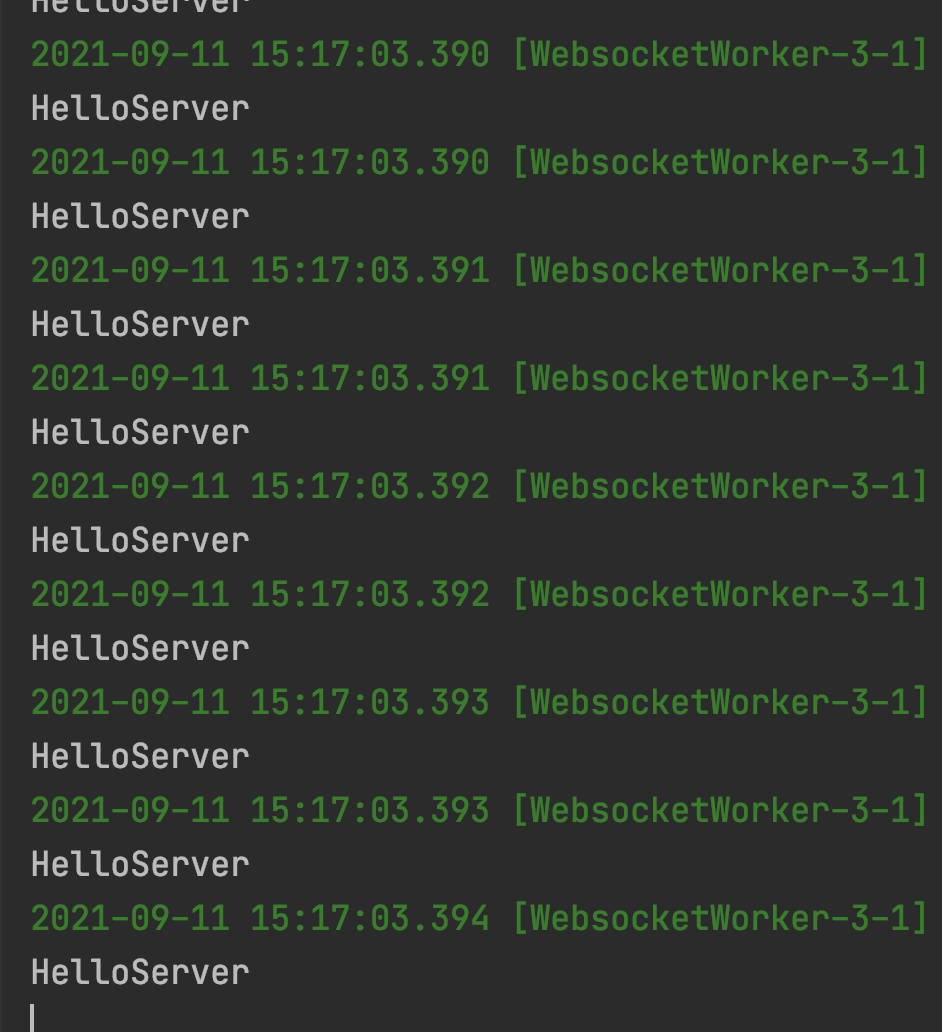

In short, this handler waits until 11 bytes have arrived and then passes them to the next handler. This cleanly separates the "HelloServer" messages sent by the client.

Don't forget to add the decoder to your server's pipeline with `pipeline.addLast(new HelloServerReplayingDecoder());`, placing it before `DiscardServerHandler` so that the decoder sees the raw bytes first.
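The JDK-only imitation below shows how `ReplayingDecoder` can wait for data without explicit length checks. As I understand it, Netty hands `decode()` a special buffer that throws an internal signal when you read past the available bytes. The decoder then rewinds the reader index to where the attempt started and tries again when more data arrives. The sketch mimics that behavior with `java.nio.ByteBuffer`: its `get()` throws `BufferUnderflowException` when data runs short, and `mark()`/`reset()` provide the rewind. The class name is hypothetical, and the 11-byte frame matches "HelloServer".

```java
import java.nio.BufferUnderflowException;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class ReplayStyleDecoder {

    private static final int FRAME_LENGTH = 11; // "HelloServer" is 11 ASCII bytes

    // Bytes received so far but not yet turned into a frame
    private ByteBuffer cumulation = ByteBuffer.allocate(0);

    /** Decode step written as if the data were always complete: no length checks. */
    private static String decodeOne(ByteBuffer in) {
        byte[] frame = new byte[FRAME_LENGTH];
        in.get(frame); // throws BufferUnderflowException when fewer than 11 bytes remain
        return new String(frame, StandardCharsets.US_ASCII);
    }

    /** Called for every chunk read from the socket; returns the frames this chunk completed. */
    public List<String> feed(byte[] chunk) {
        ByteBuffer merged = ByteBuffer.allocate(cumulation.remaining() + chunk.length);
        merged.put(cumulation).put(chunk).flip();
        cumulation = merged;

        List<String> frames = new ArrayList<>();
        while (cumulation.hasRemaining()) {
            cumulation.mark();                  // remember where this decode attempt started
            try {
                frames.add(decodeOne(cumulation));
            } catch (BufferUnderflowException notEnoughData) {
                cumulation.reset();             // "replay": rewind and wait for the next chunk
                break;
            }
        }
        return frames;
    }

    public static void main(String[] args) {
        ReplayStyleDecoder decoder = new ReplayStyleDecoder();
        // Three TCP reads that cut across message boundaries
        String[] reads = {"HelloSer", "verHelloServerHel", "loServer"};
        for (String read : reads) {
            System.out.println(decoder.feed(read.getBytes(StandardCharsets.US_ASCII)));
        }
        // prints [], then [HelloServer, HelloServer], then [HelloServer]
    }
}
```

Netty's documentation notes a trade-off for `ReplayingDecoder` that applies here too: on a slow, fragmented stream a decode step may be re-run from the start many times. For complex formats, a plain `ByteToMessageDecoder` with explicit length checks can be cheaper.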

Next, let's write data back to the client by improving `DiscardServerHandler` once more:

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;
import lombok.extern.slf4j.Slf4j;

/**
 * @description: Discard handler for basic testing, now echoing each message back
 * @author: wangzibin
 **/
@Slf4j
public class DiscardServerHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        // Print the received frame and echo it back with a suffix
        ByteBuf in = (ByteBuf) msg;
        try {
            String received = in.toString(CharsetUtil.UTF_8);
            System.out.println(received);
            ByteBuf reply = Unpooled.copiedBuffer(received + " return ", CharsetUtil.UTF_8);
            // writeAndFlush takes ownership of reply and releases it once written
            ctx.writeAndFlush(reply);
        } finally {
            // The incoming frame is not passed on, so release it here
            ReferenceCountUtil.release(msg);
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        // Close the connection when an exception is raised
        cause.printStackTrace();
        ctx.close();
    }
}
```

At this point a complete request/response exchange works. You have probably noticed an obvious problem, though: sending and receiving raw `ByteBuf`s is inconvenient. In practice we usually pick a more convenient and efficient serialization format, such as Google's Protocol Buffers. The byte stream is then converted into our agreed message types during the encoding/decoding stage, so that later handlers can process them uniformly.

Replace ByteBuf with POJO

Create a new type UserMessage

```java
import lombok.AllArgsConstructor;
import lombok.Builder;
import lombok.Data;

import java.io.Serializable;

/**
 * @author: wangzibin
 **/
@Data
@Builder
@AllArgsConstructor
public class UserMessage implements Serializable {
    private int id;
}
```

Rewrite the decoder

```java
import com.star.im.websocket.handler.demo.message.UserMessage;
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;

import java.util.List;

/**
 * @author: wangzibin
 **/
public class HelloServerReplayingDecoder extends ReplayingDecoder<Void> {

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) {
        // Each message is a single 4-byte big-endian int; ReplayingDecoder waits until all 4 bytes are here
        int id = in.readInt();
        out.add(new UserMessage(id));
    }
}
```
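Why does `readInt()` pair with `Unpooled.copyInt(i)` on the client side? Both use 4 bytes in big-endian (network) byte order, which is also the default order of `java.nio.ByteBuffer`. The JDK-only sketch below encodes 30 ids the way the client does, decodes every complete 4-byte group into a message, and shows what happens if one side assumes little-endian order instead. The nested `UserMessage` is a hypothetical stand-in for the Lombok class above.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.ArrayList;
import java.util.List;

public class IntWireFormat {

    /** Hypothetical stand-in for the article's Lombok UserMessage. */
    static final class UserMessage {
        final int id;

        UserMessage(int id) { this.id = id; }

        @Override
        public String toString() { return "UserMessage(id=" + id + ")"; }
    }

    /** Client side: each id becomes 4 big-endian bytes, written back to back. */
    static byte[] encodeIds(int count) {
        ByteBuffer buf = ByteBuffer.allocate(4 * count); // ByteBuffer is big-endian by default
        for (int i = 0; i < count; i++) {
            buf.putInt(i);
        }
        return buf.array();
    }

    /** Server side: every complete 4-byte group becomes one message; a partial tail is left unread. */
    static List<UserMessage> decode(byte[] bytes) {
        ByteBuffer buf = ByteBuffer.wrap(bytes);
        List<UserMessage> out = new ArrayList<>();
        while (buf.remaining() >= 4) {
            out.add(new UserMessage(buf.getInt()));
        }
        return out;
    }

    /** What a receiver would get if it wrongly assumed little-endian order. */
    static int misreadAsLittleEndian(int value) {
        byte[] wire = ByteBuffer.allocate(4).putInt(value).array(); // big-endian on the wire
        return ByteBuffer.wrap(wire).order(ByteOrder.LITTLE_ENDIAN).getInt();
    }

    public static void main(String[] args) {
        List<UserMessage> messages = decode(encodeIds(30));
        System.out.println(messages.size() + " " + messages.get(29)); // 30 UserMessage(id=29)
        System.out.println(misreadAsLittleEndian(42));                 // 704643072
    }
}
```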

Update the discard handler logic

```java
import com.star.im.websocket.handler.demo.message.UserMessage;
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;
import lombok.extern.slf4j.Slf4j;

/**
 * @description: Discard handler for basic testing
 **/
@Slf4j
public class DiscardServerHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        log.info("channelRead ctx={}, msg={}", ctx, msg);
        if (msg instanceof String) {
            System.out.println(msg);
            // No String encoder is installed on this pipeline, so convert to bytes before echoing
            ctx.writeAndFlush(Unpooled.copiedBuffer((String) msg, CharsetUtil.UTF_8));
        } else if (msg instanceof UserMessage) {
            // Typed message produced by HelloServerReplayingDecoder
            UserMessage userMessage = (UserMessage) msg;
            System.out.println(userMessage);
        } else if (msg instanceof ByteBuf) {
            ByteBuf in = (ByteBuf) msg;
            try {
                String received = in.toString(CharsetUtil.UTF_8);
                System.out.println(received);
                ctx.writeAndFlush(Unpooled.copiedBuffer(received + " return ", CharsetUtil.UTF_8));
            } finally {
                // The raw buffer is consumed here, so release it
                ReferenceCountUtil.release(in);
            }
        } else {
            // Unknown type: release it in case it is reference-counted
            ReferenceCountUtil.release(msg);
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        // Close the connection when an exception is raised
        cause.printStackTrace();
        ctx.close();
    }
}
```

Replace the client's sending logic:

```java
@Override
public void channelActive(ChannelHandlerContext ctx) {
    for (int i = 0; i < 30; i++) {
        // Each int is written as 4 big-endian bytes
        ByteBuf buf = Unpooled.copyInt(i);
        ctx.writeAndFlush(buf);
    }
}
```

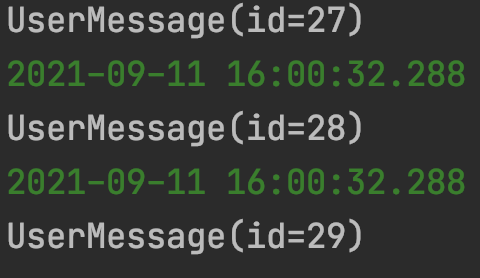

We can now work directly with the types we defined.

Likewise, we can write an encoder so that both ends exchange typed data directly. The actual parsing then happens only in the encoding/decoding stage at the edge of the pipeline, and later handlers only need to deal with business logic.

Closing remarks

This article walked you through writing a simple Netty service, and you should now have a working start. But don't stop here: building a business-level chat system has only just begun.

In the next article, we will upgrade this demo project into a proper WebSocket service so that a web page can communicate with the server.

I'm dying stranded. Our journey has only just begun.
