=======================================================================

[](

)2-1. Three treatment methods

[](

)2-1-1. Single loop insertion

We take 10w pieces of data for some tests. If the insertion method is program traversal loop, insert one by one. It is detected that the speed of inserting a message on mysql is between 0.01s and 0.03s.

The average insertion speed is 0.02 * 100000, that is, about 33 minutes.

The following code is a test example:

1 time test for inserting 100000 data in ordinary cycle

@Test

public void insertUsers1() {

User user = new User();

user.setUserName("Captain Timo");

user.setPassword("Dying");

user.setPrice(3150);

user.setHobby("Planting mushrooms");

for (int i = 0; i < 100000; i++) {

user.setUserName("Captain Timo" + i);

// Call insert method

userMapper.insertUser(user);

}

}

The execution speed is 30 minutes, that is, the speed of 0.018 * 100000. It's very slow

It is found that the cost of insert by insert optimization is too high. Then query the optimization method. It is found that the method of batch insertion can significantly improve the speed.

Increase the insertion speed of 100000 data to about 1-2 minutes ↓

[](

)2-1-2. Modify SQL statement and insert in batches

insert into user_info (user_id,username,password,price,hobby)

values (null,'Captain Timo 1','123456',3150,'Planting mushrooms'),(null,'Galen','123456',450,'Step on mushrooms');

Insert 100000 pieces of data in batch, and the test code is as follows:

@Test

public void insertUsers2() {

List<User> list= new ArrayList<User>();

User user = new User();

user.setPassword("Dying");

user.setPrice(3150);

user.setHobby("Planting mushrooms");

for (int i = 0; i < 100000; i++) {

user.setUserName("Captain Timo" + i);

// Put a single object into the parameter list

list.add(user);

}

userMapper.insertListUser(list);

}

Batch insertion uses 0.046s, which is equivalent to the speed of inserting one or two pieces of data. Therefore, batch insertion will greatly improve the data insertion speed. When there are large data insertion operations, batch insertion is used for optimization

How to write batch insert:

dao definition layer method:

Integer insertListUser(List<User> user);

sql writing method in mybatis Mapper:

<insert id="insertListUser" parameterType="java.util.List">

INSERT INTO `db`.`user_info`

( `id`,

`username`,

`password`,

`price`,

`hobby`)

values

<foreach collection="list" item="item" separator="," index="index">

(null,

#{item.userName},

#{item.password},

#{item.price},

#{item.hobby})

</foreach>

</insert>

This enables batch insertion:

Note: however, when there is a large amount of batch operation data. For example, if I insert 10w SQL statements to operate on more than 1M packets, MySQL will report the following error:

Error message:

Mysql You can change this value on the server by setting the max_allowed_packet' variable. Packet for query is too large (6832997 > 1048576). You can change this value on the server by setting the max_allowed_packet' variable.

Explanation:

The packet used for query is too large (6832997 > 1048576). You can set max_allowed_packet variable to change this value on the server.

You can see from the explanation that the package used for the operation is too large. The size of the SQL content data to be inserted here is 6M, so an error is reported.

resolvent:

The database is MySQL 57. The following information is a system parameter problem of MySQL:

max_allowed_packet, whose default value is 1048576(1M),

Query:

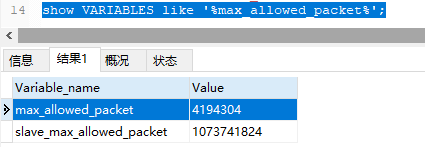

show VARIABLES like '%max_allowed_packet%';

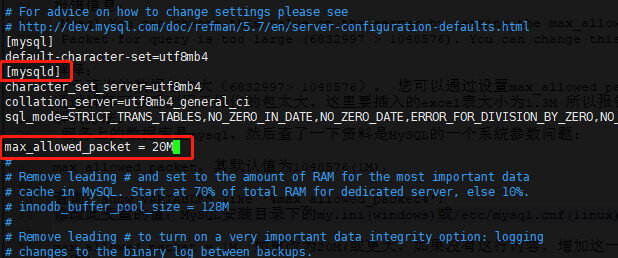

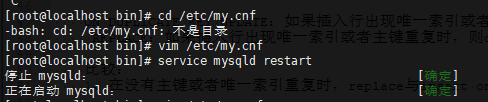

Modify the value of this variable: in the [mysqld] section of my.ini(windows) or / etc/mysql.cnf(linux) file in the MySQL installation directory

max_allowed_packet = 1M, if changed to 20m (or larger, if there is no content in this line, add this line), as shown in the following figure

Save and restart MySQL service. Now you can execute SQL statements with a size greater than 1M and less than 20M.

But what if 20M is not enough?

[](

)2-1-3. Multi cycle insertion in batches

If it is inconvenient to modify the database configuration or there are too many contents to be inserted, it can also be controlled through the back-end code, such as inserting 10w pieces of data in 100 batches and inserting 1000 pieces each time, that is, just a few seconds; Of course, if there is a lot of content in each, say another..

[](

)2-2. Other optimization approaches with slow insertion speed

A. Through show processlist; Command to query whether other long processes or a large number of short processes seize the thread pool resources? See if you can reduce the pressure on the primary database by allocating some processes to the standby database; Or, kill some useless processes first? (manual head o_O)

B. For mass data import, you can also close the index first and open the index after data import

Closing: ALTER TABLE user_info DISABLE KEYS;

Open: ALTER TABLE user_info ENABLE KEYS;

[](

)3, REPLACE INTO syntax "pit"

===================================================================================

As mentioned above, REPLACE may affect more than 3 records because there is more than one unique index in the table. In this case, REPLACE will consider each unique index, delete the duplicate record corresponding to each index, and then insert the new record. Suppose there is a table1 table with three fields a, B and C. They all have a unique index. What happens? Let's test it with some data earlier.

-- Test table creation, a,b,c All three fields have unique indexes CREATE TABLE table1(a INT NOT NULL UNIQUE,b INT NOT NULL UNIQUE,c INT NOT NULL UNIQUE); -- Insert three test data INSERT into table1 VALUES(1,1,1); INSERT into table1 VALUES(2,2,2); INSERT into table1 VALUES(3,3,3);

Reader welfare

**[CodeChina open source project: [analysis of Java interview questions of first-line large manufacturers + core summary learning notes + latest explanation Video]](

)**

More notes to share

NULL UNIQUE,c INT NOT NULL UNIQUE);

– insert three test data

INSERT into table1 VALUES(1,1,1);

INSERT into table1 VALUES(2,2,2);

INSERT into table1 VALUES(3,3,3);

# **Reader welfare** **[CodeChina Open source project: [first tier big factory] Java Analysis of interview questions+Core summary learning notes+Latest explanation Video]]( )** [External chain picture transfer...(img-Bl9hbS8u-1631177794260)] **More notes to share** [External chain picture transfer...(img-Z6SQZX1Y-1631177794262)]