If this is an original article, it may not be reprinted without permission.

Original blogger's blog address: https://blog.csdn.net/qq21497936

Original blogger blog navigation: https://blog.csdn.net/qq21497936/article/details/102478062

This article blog address: https://blog.csdn.net/qq21497936/article/details/106367317

Dear readers: knowledge is infinite but manpower is limited. Either change the requirements, find a professional, or research it yourself.

Blog index of Red Fatty (Red Imitation): a collection of development techniques (including practical Qt techniques, Raspberry Pi, 3D, OpenCV, OpenGL, FFmpeg, OSG, microcontrollers, hardware/software integration, etc.), continuously updated...

OpenCV Development Column

Previous: OpenCV Development Notes (59): Red Fatty Teaches the Watershed Algorithm in 8 Minutes (Illustrated + Easy to Understand + Source Code)

Next: To be continued...

Preface

Here comes Red Fatty!

In recognition tasks there is sometimes a need to, for example, identify a triangle and find the angles at its three vertices. This is typical of educational scenarios, but other scenarios need it too, so detecting corners and computing their angles is important.

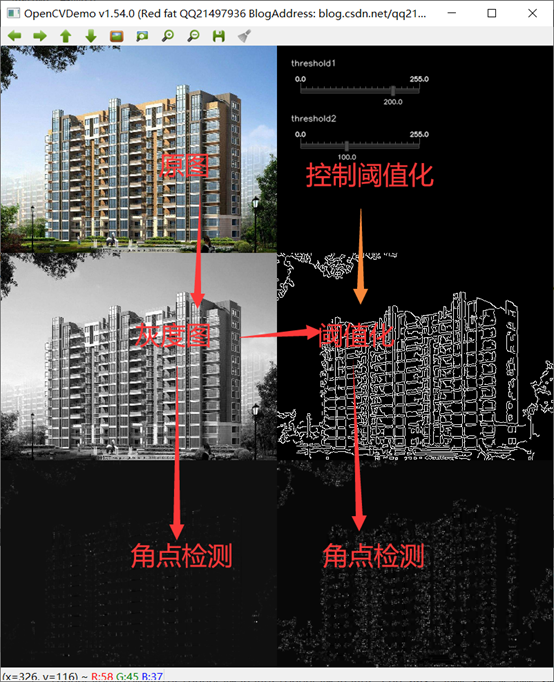

Demo

Three types of image features

- Edge: a region where image intensity changes abruptly, i.e. a region of high intensity gradient;

- Corner: a point where two edges intersect, which looks like a corner;

- Blob: a region distinguished by a particular property, such as especially high or especially low intensity, or a specific texture;
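As a rough illustration of the edge definition above, the following C++ sketch (plain standard library, no OpenCV; the `Image` alias and both function names are hypothetical) estimates the intensity gradient with central differences. A large magnitude marks an edge pixel; zero marks a flat region.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Grayscale image stored as rows of intensities (hypothetical stand-in for cv::Mat).
using Image = std::vector<std::vector<double>>;

// Central-difference gradient magnitude at an interior pixel (x, y).
double gradientMagnitude(const Image& img, int x, int y) {
    assert(y > 0 && y + 1 < static_cast<int>(img.size()));
    assert(x > 0 && x + 1 < static_cast<int>(img[y].size()));
    double gx = (img[y][x + 1] - img[y][x - 1]) / 2.0;  // horizontal change
    double gy = (img[y + 1][x] - img[y - 1][x]) / 2.0;  // vertical change
    return std::sqrt(gx * gx + gy * gy);
}

// Builds a width x height image whose left half is 0 and right half is 255,
// i.e. a single vertical edge.
Image makeVerticalStep(int width, int height) {
    Image img(height, std::vector<double>(width, 0.0));
    for (int y = 0; y < height; ++y)
        for (int x = width / 2; x < width; ++x)
            img[y][x] = 255.0;
    return img;
}
```

On an 8x8 step image, the gradient magnitude is 127.5 at the column where the intensity jumps and 0 inside the flat left half.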

Harris Corners

Summary

Harris corner detection is a corner extraction algorithm based on grayscale images. It is highly stable, but relatively expensive, because at every pixel it computes image derivatives and sums their products over a window (the classic formulation uses a Gaussian window).

Corner detection on grayscale images can be divided into three categories: gradient-based, template-based, and combined template-gradient methods. The Harris algorithm is a template-based method operating on grayscale images.

Principle

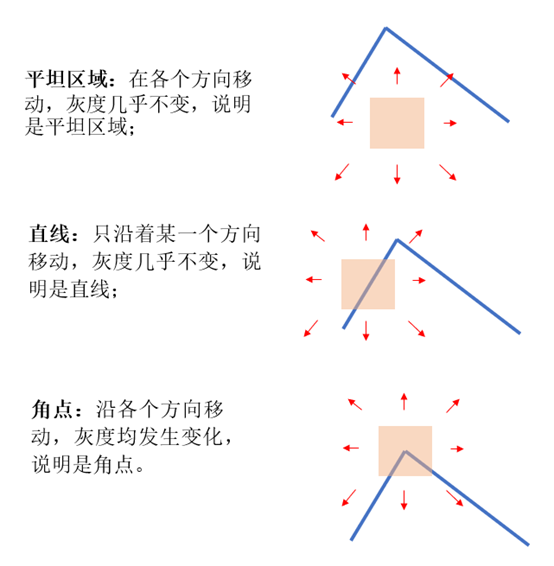

The human eye usually recognizes a corner through a small local window: if moving the window in any direction causes a large change in the gray levels inside it, the window contains a corner. Three situations can be distinguished:

- If the gray level is almost unchanged when moving in all directions, the window is in a flat region;

- If the gray level is almost unchanged when moving along only one direction, the window is on an edge (a straight line);

- If the gray level changes significantly when moving in any direction, the window contains a corner.
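The three cases above can be sketched in plain C++ by shifting a small window in eight directions and measuring how much the gray levels change. This is a brute-force illustration of the intuition, not how OpenCV computes corners; the uniform window weights, the eight shifts, the `threshold`, and all names here are assumptions made for the example.

```cpp
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<double>>;

// Sum of squared differences between a (2r+1)x(2r+1) window centred at
// (cx, cy) and the same window shifted by (u, v): E(u, v) with weights w = 1.
double windowChange(const Image& img, int cx, int cy, int r, int u, int v) {
    double e = 0.0;
    for (int y = cy - r; y <= cy + r; ++y)
        for (int x = cx - r; x <= cx + r; ++x) {
            double d = img[y + v][x + u] - img[y][x];
            e += d * d;
        }
    return e;
}

enum class Region { Flat, Edge, Corner };

// Hypothetical rule: no shift changes the window -> flat; some shifts leave it
// unchanged -> edge; every shift changes it -> corner.
Region classifyWindow(const Image& img, int cx, int cy, int r, double threshold) {
    // the window plus a one-pixel shift must stay inside the image
    assert(cx - r - 1 >= 0 && cy - r - 1 >= 0);
    assert(cy + r + 1 < static_cast<int>(img.size()));
    assert(cx + r + 1 < static_cast<int>(img[0].size()));
    const int shifts[8][2] = {{1, 0}, {-1, 0}, {0, 1}, {0, -1},
                              {1, 1}, {-1, -1}, {1, -1}, {-1, 1}};
    int changed = 0;
    for (const auto& s : shifts)
        if (windowChange(img, cx, cy, r, s[0], s[1]) > threshold) ++changed;
    if (changed == 0) return Region::Flat;
    if (changed < 8) return Region::Edge;
    return Region::Corner;
}
```

On a 10x10 test image, an all-black image classifies as flat, a vertical step as an edge (the two vertical shifts leave the window unchanged), and the corner of a bright square as a corner.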

The basic principles are as follows:

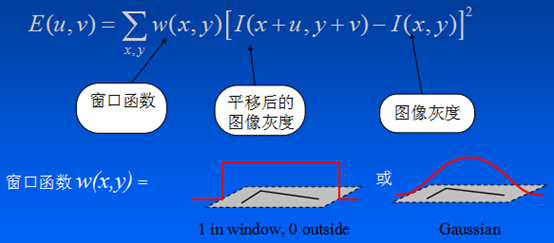

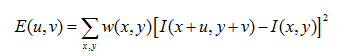

The specific calculation formula is as follows:

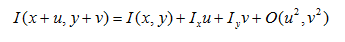

Taylor expansion:

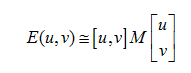

Substituting this into the formula gives:

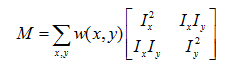

Where:

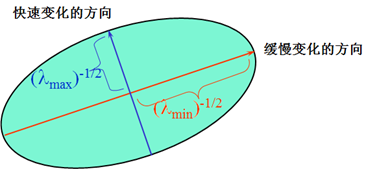

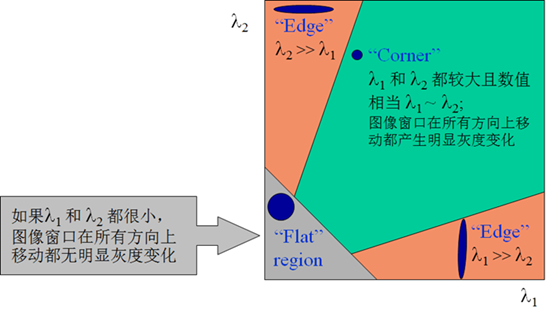
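The formula images are not available as text. For reference, the standard textbook form of the Harris derivation is summarized below. The symbols (window function w, image derivatives I_x and I_y, eigenvalues λ1 and λ2) are assumed to match the figures rather than read from them.

```latex
E(u,v) = \sum_{x,y} w(x,y)\,\bigl[I(x+u,\,y+v) - I(x,y)\bigr]^2

I(x+u,\,y+v) \approx I(x,y) + I_x u + I_y v

E(u,v) \approx \sum_{x,y} w(x,y)\,(I_x u + I_y v)^2
       = \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix}

M = \sum_{x,y} w(x,y)
    \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix},
\qquad \det M = \lambda_1 \lambda_2,
\qquad \operatorname{trace} M = \lambda_1 + \lambda_2
```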

The quadratic term is essentially an ellipse function: the flatness and size of the ellipse are determined by the two eigenvalues of the matrix M.

The relationship between the two eigenvalues of M and the corners, edges, and flat regions of the image:

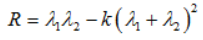
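The eigenvalue cases in the figure can be checked numerically. This C++ sketch computes the two eigenvalues of a symmetric 2x2 matrix M = [[a, b], [b, c]] in closed form and classifies them with a single threshold; `Tensor2`, the threshold rule, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// M = [[a, b], [b, c]] for one pixel: a = sum(w*Ix*Ix), b = sum(w*Ix*Iy), c = sum(w*Iy*Iy).
struct Tensor2 { double a, b, c; };

// Closed-form eigenvalues of a symmetric 2x2 matrix, returned with l1 >= l2.
void eigenvalues(const Tensor2& m, double& l1, double& l2) {
    double mean = (m.a + m.c) / 2.0;
    double diff = (m.a - m.c) / 2.0;
    double radius = std::sqrt(diff * diff + m.b * m.b);
    l1 = mean + radius;
    l2 = mean - radius;
}

enum class Region { Flat, Edge, Corner };

// Both eigenvalues small -> flat; one large -> edge; both large -> corner.
Region classifyByEigenvalues(const Tensor2& m, double t) {
    double l1, l2;
    eigenvalues(m, l1, l2);
    assert(l1 >= l2);
    if (l1 < t) return Region::Flat;
    if (l2 < t) return Region::Edge;
    return Region::Corner;
}
```

For example, M = [[2, 1], [1, 2]] has eigenvalues 3 and 1; with a threshold of 10, [[100, 0], [0, 0]] is an edge and [[100, 0], [0, 100]] is a corner.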

Harris defines the corner response function as R = det(M) - k*trace(M)*trace(M), where k is an empirical constant, usually 0.04 to 0.06.

A point is taken as a corner when its R is greater than a threshold and is a local maximum.
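The response formula and the local-maximum rule can be sketched as follows, assuming the entries a, b, c of M are already known and the response values are stored in a plain 2D vector. `Grid`, `Point`, and the 3x3 neighbourhood used for the local-maximum test are illustrative choices, not OpenCV API.

```cpp
#include <cassert>
#include <vector>

using Grid = std::vector<std::vector<double>>;

// Harris response R = det(M) - k * trace(M)^2 for M = [[a, b], [b, c]].
double harrisResponse(double a, double b, double c, double k) {
    assert(k >= 0.0);
    double det = a * c - b * b;
    double trace = a + c;
    return det - k * trace * trace;
}

struct Point { int x, y; };

// Keeps pixels whose response exceeds `threshold` and is a strict maximum of
// its 3x3 neighbourhood (border pixels are skipped for simplicity).
std::vector<Point> pickCorners(const Grid& r, double threshold) {
    std::vector<Point> corners;
    for (int y = 1; y + 1 < static_cast<int>(r.size()); ++y)
        for (int x = 1; x + 1 < static_cast<int>(r[y].size()); ++x) {
            double v = r[y][x];
            if (v <= threshold) continue;
            bool isMax = true;
            for (int dy = -1; dy <= 1 && isMax; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    if ((dx != 0 || dy != 0) && r[y + dy][x + dx] >= v) {
                        isMax = false;
                        break;
                    }
            if (isMax) corners.push_back({x, y});
        }
    return corners;
}
```

For M = [[100, 0], [0, 100]] and k = 0.04, R = 10000 - 0.04 * 200 * 200 = 8400 (corner-like); for M = [[100, 0], [0, 0]], R = -400 (edge-like), since an edge has one large and one zero eigenvalue.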

Harris Function Prototype

```cpp
void cornerHarris(InputArray src, OutputArray dst, int blockSize, int ksize, double k, int borderType = BORDER_DEFAULT);
```

- Parameter 1: src of type InputArray: the input (source) image, a Mat object, which must be a single-channel 8-bit or floating-point image;

- Parameter 2: dst of type OutputArray: stores the Harris response after the call; it has the same size as src, and note in particular that its type is CV_32FC1;

- Parameter 3: blockSize of type int: the size of the neighborhood;

- Parameter 4: ksize of type int: the aperture size of the Sobel() operator;

- Parameter 5: k of type double: the free parameter of the Harris response function, generally 0.04 to 0.06;

- Parameter 6: borderType of type int: the pixel extrapolation (border) mode, BORDER_DEFAULT by default.
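To see what the parameters control, the pipeline behind `cornerHarris` can be approximated in plain C++: 3x3 Sobel derivatives (the `ksize = 3` case), products summed over a `blockSize` x `blockSize` box window, then R = det(M) - k*trace(M)*trace(M). This is a simplified sketch, not OpenCV's implementation. It omits OpenCV's derivative scaling and clamps at the border instead of using `BORDER_DEFAULT`, so absolute values will differ from `cv::cornerHarris` output. `harrisResponseMap` and `at` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<double>>;

// Clamped (replicate-border) pixel access; a simplification of BORDER_DEFAULT.
static double at(const Image& img, int x, int y) {
    int h = static_cast<int>(img.size());
    int w = static_cast<int>(img[0].size());
    return img[std::clamp(y, 0, h - 1)][std::clamp(x, 0, w - 1)];
}

// Harris response map: Sobel 3x3 derivatives, box-window sums, then R.
Image harrisResponseMap(const Image& img, int blockSize, double k) {
    assert(!img.empty() && blockSize >= 1);
    int h = static_cast<int>(img.size());
    int w = static_cast<int>(img[0].size());
    Image ixx(h, std::vector<double>(w)), ixy = ixx, iyy = ixx;
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            // Sobel x: right column minus left column, weights 1-2-1
            double gx = (at(img, x + 1, y - 1) + 2 * at(img, x + 1, y) + at(img, x + 1, y + 1))
                      - (at(img, x - 1, y - 1) + 2 * at(img, x - 1, y) + at(img, x - 1, y + 1));
            // Sobel y: bottom row minus top row, weights 1-2-1
            double gy = (at(img, x - 1, y + 1) + 2 * at(img, x, y + 1) + at(img, x + 1, y + 1))
                      - (at(img, x - 1, y - 1) + 2 * at(img, x, y - 1) + at(img, x + 1, y - 1));
            ixx[y][x] = gx * gx;
            ixy[y][x] = gx * gy;
            iyy[y][x] = gy * gy;
        }
    Image r(h, std::vector<double>(w));
    int lo = -(blockSize / 2);        // window starts this far before the pixel
    int hi = lo + blockSize - 1;      // and ends here
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            double a = 0, b = 0, c = 0;
            for (int dy = lo; dy <= hi; ++dy)
                for (int dx = lo; dx <= hi; ++dx) {
                    a += at(ixx, x + dx, y + dy);
                    b += at(ixy, x + dx, y + dy);
                    c += at(iyy, x + dx, y + dy);
                }
            r[y][x] = (a * c - b * b) - k * (a + c) * (a + c);
        }
    return r;
}
```

On a 12x12 image containing a bright square whose top-left corner is at (6, 6), the response is positive at that corner, negative along the square's left edge, and zero in the flat background.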

Overview of normalization

Normalization here refers to normalizing a matrix (cv::Mat).

Normalization is a dimensionless technique that converts the absolute values of a physical system into relative values, and an effective way to simplify calculations and reduce magnitudes. For example, when each frequency in a filter is normalized by the cutoff frequency, every frequency becomes a value relative to the cutoff frequency and has no dimension. When impedances are normalized by the internal resistance of the power supply, each impedance becomes a relative impedance and the unit (ohm) disappears. Once all the calculations are finished, de-normalization restores the original values.

Signal-processing toolboxes often use the Nyquist frequency, defined as half the sampling frequency; the filter order selection and the cutoff frequencies in filter design are normalized by it. For example, for a system with a sampling frequency of 1000 Hz, the Nyquist frequency is 500 Hz, so the normalized frequency of 400 Hz is 400/500 = 0.8, and normalized frequencies lie in [0, 1].
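Nyquist normalization as described above amounts to a single division. A minimal C++ sketch (the function name is hypothetical) that also checks the input frequency lies between 0 and the Nyquist frequency:

```cpp
#include <cassert>

// Normalizes a frequency by the Nyquist frequency (half the sampling rate),
// so that [0, samplingHz / 2] maps onto [0, 1].
double normalizedFrequency(double freqHz, double samplingHz) {
    assert(samplingHz > 0.0);
    double nyquist = samplingHz / 2.0;
    assert(freqHz >= 0.0 && freqHz <= nyquist);  // above Nyquist cannot be represented
    return freqHz / nyquist;
}
```

With a 1000 Hz sampling rate, 400 Hz maps to 0.8, and the Nyquist frequency itself maps to 1.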

Normalization function prototype

```cpp
void normalize(InputArray src, InputOutputArray dst, double alpha = 1, double beta = 0, int norm_type = NORM_L2, int dtype = -1, InputArray mask = noArray());
```

- Parameter 1: src of type InputArray, generally a Mat;

- Parameter 2: dst of type InputOutputArray, usually a Mat of the same size as src;

- Parameter 3: alpha of type double: the norm value to normalize to, or the lower bound of the range when norm_type is NORM_MINMAX; default 1;

- Parameter 4: beta of type double: the upper bound of the range when norm_type is NORM_MINMAX (unused for other norm types); default 0;

- Parameter 5: norm_type of type int: the normalization type (see cv::NormTypes); default NORM_L2;

- Parameter 6: dtype of type int, default -1: when negative, the output has the same type as src; otherwise it has the same number of channels as src and the depth CV_MAT_DEPTH(dtype);

- Parameter 7: mask of type InputArray: optional operation mask; default noArray().
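For `NORM_MINMAX`, which the demo below uses, the operation is a linear mapping of the smallest element to one bound and the largest element to the other. A plain C++ sketch for a 1D array (the function name is hypothetical; `mask` and `dtype` are ignored):

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Maps min(src) to min(alpha, beta) and max(src) to max(alpha, beta) linearly.
std::vector<double> normalizeMinMax(const std::vector<double>& src, double alpha, double beta) {
    assert(!src.empty());
    auto [mn, mx] = std::minmax_element(src.begin(), src.end());
    double lo = std::min(alpha, beta);
    double hi = std::max(alpha, beta);
    double range = *mx - *mn;
    // a constant input has no range: every element maps to the lower bound
    double scale = range > 0.0 ? (hi - lo) / range : 0.0;
    std::vector<double> dst;
    dst.reserve(src.size());
    for (double v : src) dst.push_back((v - *mn) * scale + lo);
    return dst;
}
```

`normalizeMinMax({-2, 0, 2}, 0, 255)` yields {0, 127.5, 255}, which is the same kind of mapping the demo applies to the Harris response before display.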

Image Enhancement Function Prototype

```cpp
void convertScaleAbs(InputArray src, OutputArray dst, double alpha = 1, double beta = 0);
```

- Parameter 1: src of type InputArray, generally a Mat;

- Parameter 2: dst of type OutputArray, usually a Mat of the same size as src, with 8-bit unsigned depth;

- Parameter 3: alpha of type double: optional scale factor; default 1;

- Parameter 4: beta of type double: optional delta added to the scaled values; default 0;
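Per element, `convertScaleAbs` computes |src * alpha + beta|, rounds to the nearest integer, and saturates to the 8-bit range. A plain C++ sketch of that behaviour on a 1D array (the function name is hypothetical):

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// dst[i] = saturate_to_uint8(round(|src[i] * alpha + beta|))
std::vector<std::uint8_t> convertScaleAbsSketch(const std::vector<double>& src,
                                                double alpha = 1.0, double beta = 0.0) {
    assert(std::isfinite(alpha) && std::isfinite(beta));
    std::vector<std::uint8_t> dst;
    dst.reserve(src.size());
    for (double v : src) {
        double scaled = std::nearbyint(std::fabs(v * alpha + beta));  // round to nearest
        dst.push_back(static_cast<std::uint8_t>(std::min(scaled, 255.0)));  // clamp to 255
    }
    return dst;
}
```

For example, {-10.0, 100.4, 300.0} becomes {10, 100, 255}. This is why the demo calls it after `normalize`: it turns the CV_32F response into a displayable 8-bit image.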

Demo Source

```cpp
// Headers assumed by this member function (not shown in the original excerpt):
// Qt, OpenCV, and the single-header cvui library (one translation unit must
// define CVUI_IMPLEMENTATION before including cvui.h).
#include <QString>
#include <opencv2/opencv.hpp>
#include "cvui.h"

void OpenCVManager::testHarris()
{
    QString fileName1 = "E:/qtProject/openCVDemo/openCVDemo/modules/openCVManager/images/16.jpg";
    int width = 400;
    int height = 300;
    cv::Mat srcMat = cv::imread(fileName1.toStdString());
    if(srcMat.empty())
    {
        return;     // the image could not be loaded
    }
    cv::resize(srcMat, srcMat, cv::Size(width, height));
    cv::String windowName = _windowTitle.toStdString();
    cvui::init(windowName);
    // 2 x 3 grid of panels, each the size of the resized source image
    cv::Mat windowMat = cv::Mat(cv::Size(srcMat.cols * 2, srcMat.rows * 3), srcMat.type());
    int threshold1 = 200;
    int threshold2 = 100;
    while(true)
    {
        windowMat = cv::Scalar(0, 0, 0);
        cv::Mat mat;
        cv::Mat tempMat;
        // copy the original image to the top-left panel
        mat = windowMat(cv::Range(srcMat.rows * 0, srcMat.rows * 1),
                        cv::Range(srcMat.cols * 0, srcMat.cols * 1));
        cv::addWeighted(mat, 0.0f, srcMat, 1.0f, 0.0f, mat);
        {
            // grayscale
            cv::Mat grayMat;
            cv::cvtColor(srcMat, grayMat, cv::COLOR_BGR2GRAY);
            // copy to the middle-left panel
            mat = windowMat(cv::Range(srcMat.rows * 1, srcMat.rows * 2),
                            cv::Range(srcMat.cols * 0, srcMat.cols * 1));
            cv::Mat grayMat2;
            cv::cvtColor(grayMat, grayMat2, cv::COLOR_GRAY2BGR);
            cv::addWeighted(mat, 0.0f, grayMat2, 1.0f, 0.0f, mat);
            // mean filter
            cv::blur(grayMat, tempMat, cv::Size(3, 3));
            cvui::printf(windowMat, width * 1 + 20, height * 0 + 20, "threshold1");
            cvui::trackbar(windowMat, width * 1 + 20, height * 0 + 40, 200, &threshold1, 0, 255);
            cvui::printf(windowMat, width * 1 + 20, height * 0 + 100, "threshold2");
            cvui::trackbar(windowMat, width * 1 + 20, height * 0 + 120, 200, &threshold2, 0, 255);
            // Canny edge detection
            cv::Canny(tempMat, tempMat, threshold1, threshold2);
            // copy to the middle-right panel
            mat = windowMat(cv::Range(srcMat.rows * 1, srcMat.rows * 2),
                            cv::Range(srcMat.cols * 1, srcMat.cols * 2));
            cv::cvtColor(tempMat, grayMat2, cv::COLOR_GRAY2BGR);
            cv::addWeighted(mat, 0.0f, grayMat2, 1.0f, 0.0f, mat);
            // Harris corner detection on the grayscale image (output is CV_32FC1)
            cv::cornerHarris(grayMat, grayMat2, 2, 3, 0.01);
            // normalize to [0, 255], then convert linearly to unsigned 8-bit
            cv::normalize(grayMat2, grayMat2, 0, 255, cv::NORM_MINMAX, CV_32FC1, cv::Mat());
            cv::convertScaleAbs(grayMat2, grayMat2);
            // copy to the bottom-left panel
            mat = windowMat(cv::Range(srcMat.rows * 2, srcMat.rows * 3),
                            cv::Range(srcMat.cols * 0, srcMat.cols * 1));
            cv::cvtColor(grayMat2, grayMat2, cv::COLOR_GRAY2BGR);
            cv::addWeighted(mat, 0.0f, grayMat2, 1.0f, 0.0f, mat);
            // Harris corner detection on the Canny edge map
            cv::cornerHarris(tempMat, tempMat, 2, 3, 0.01);
            // normalize to [0, 255], then convert linearly to unsigned 8-bit
            cv::normalize(tempMat, tempMat, 0, 255, cv::NORM_MINMAX, CV_32FC1, cv::Mat());
            cv::convertScaleAbs(tempMat, tempMat);
            // copy to the bottom-right panel
            mat = windowMat(cv::Range(srcMat.rows * 2, srcMat.rows * 3),
                            cv::Range(srcMat.cols * 1, srcMat.cols * 2));
            cv::cvtColor(tempMat, tempMat, cv::COLOR_GRAY2BGR);
            cv::addWeighted(mat, 0.0f, tempMat, 1.0f, 0.0f, mat);
        }
        // update cvui widgets
        cvui::update();
        // display
        cv::imshow(windowName, windowMat);
        // exit on the Esc key
        if(cv::waitKey(25) == 27)
        {
            break;
        }
    }
}
```

Project template: corresponding version number v1.54.0
