Spring boot integrates mybatis plus (2) multi data source Druid monitoring, Atomikos deals with transactions, cross database and linked table query

In the previous chapter, I used springboot project to integrate mp, mp basic use, code generator use, Druid data monitoring, etc., but only for single data source request stamp Spring boot integrates mybatis plus (I) basic usage and custom template code generator

Because many times, in development, there is not only one database. When saving a piece of data, you may need to save it to several databases. Then when an exception occurs, you will face transaction problems. You need to roll back the saved data. Therefore, this article begins to explain and integrate druid monitoring and transaction processing under Mp multiple data sources

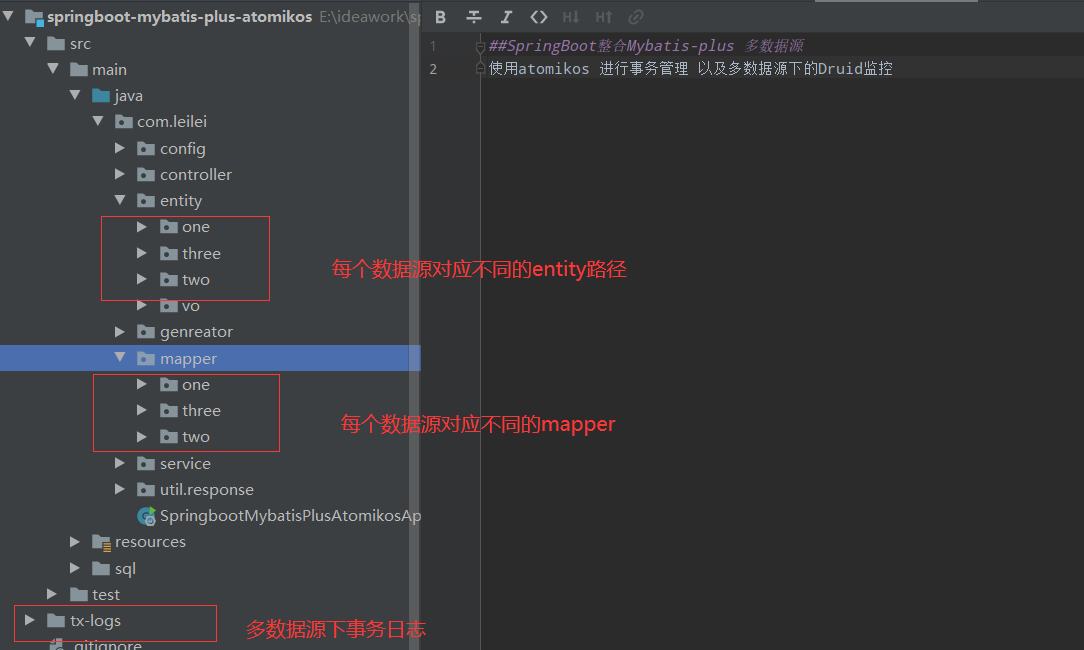

1, Add new dependency

<!--Distributed transaction-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jta-atomikos</artifactId>

</dependency>

<!--Because of me mysql It's reinstalled. There's something wrong with the 8-version driver, so I went back to 5-version-->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.47</version>

</dependency>

2, YML configuration with multiple data sources

spring:

datasource:

type: com.alibaba.druid.pool.xa.DruidXADataSource

druid:

#First data source

one:

name: oneDataSource

url: jdbc:mysql://127.0.0.1:3306/mybatis-plus?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=GMT

driver-class-name: com.mysql.jdbc.Driver

username: root

password: root

# The following is the supplementary settings of the connection pool, which are applied to all data sources above

# Initialization size, min, Max

initialSize: 5

minIdle: 5

maxActive: 20

# Configure the timeout time for getting connection waiting

maxWait: 60000

# Configure how often to check the interval. Check the idle connections that need to be closed, in milliseconds

timeBetweenEvictionRunsMillis: 60000

# Configure the minimum lifetime of a connection in the pool, in milliseconds

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 1

validationQueryTimeout: 10000

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

# Open PSCache and specify the size of PSCache on each connection

poolPreparedStatements: true

maxPoolPreparedStatementPerConnectionSize: 20

filters: stat,wall

# Open mergeSql function through connectProperties property; slow SQL record

connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000

# Merge monitoring data from multiple druiddatasources

useGlobalDataSourceStat: true

#Second data source

two:

name: twoDataSource

url: jdbc:mysql://127.0.0.1:3306/mybatis-plus2?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=GMT

driver-class-name: com.mysql.jdbc.Driver

username: root

password: root

# The following is the supplementary settings of the connection pool, which are applied to all data sources above

# Initialization size, min, Max

initialSize: 5

minIdle: 5

maxActive: 20

# Configure the timeout time for getting connection waiting

maxWait: 60000

# Configure how often to check the interval. Check the idle connections that need to be closed, in milliseconds

timeBetweenEvictionRunsMillis: 60000

# Configure the minimum lifetime of a connection in the pool, in milliseconds

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 1

validationQueryTimeout: 10000

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

# Open PSCache and specify the size of PSCache on each connection

poolPreparedStatements: true

maxPoolPreparedStatementPerConnectionSize: 20

filters: stat,wall

# Open mergeSql function through connectProperties property; slow SQL record

connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000

# Merge monitoring data from multiple druiddatasources

useGlobalDataSourceStat: true

#Third data source

three:

name: threeDataSource

url: jdbc:mysql://127.0.0.1:3306/mybatis-plus3?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=GMT

driver-class-name: com.mysql.jdbc.Driver

username: root

password: root

# The following is the supplementary settings of the connection pool, which are applied to all data sources above

# Initialization size, min, Max

initialSize: 5

minIdle: 5

maxActive: 20

# Configure the timeout time for getting connection waiting

maxWait: 60000

# Configure how often to check the interval. Check the idle connections that need to be closed, in milliseconds

timeBetweenEvictionRunsMillis: 60000

# Configure the minimum lifetime of a connection in the pool, in milliseconds

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 1

validationQueryTimeout: 10000

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

# Open PSCache and specify the size of PSCache on each connection

poolPreparedStatements: true

maxPoolPreparedStatementPerConnectionSize: 20

filters: stat,wall

# Open mergeSql function through connectProperties property; slow SQL record

connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000

# Merge monitoring data from multiple druiddatasources

useGlobalDataSourceStat: true

jta:

atomikos:

properties:

log-base-dir: tx-logs

transaction-manager-id: txManager #The default IP address of the computer should be unique in the production environment

3, Configure Bean to read database information

Since the configuration of one two three data sources has changed the configuration path of springBoot for Mysql connection reading, we need to customize the location of data sources to successfully read database related information when starting the project

Both Mybatis and mp need data source, SqlSessionFactory and SqlSessionTemplate when accessing database

First, you need to get the data source information from the configuration file and the information Druid needs to monitor

Read the database path generation object in our configuration file and configure it as a Bean to be managed by spring

Attach my complete code

DruidConfig

package com.leilei.config;

import com.alibaba.druid.filter.stat.StatFilter;

import com.alibaba.druid.support.http.StatViewServlet;

import com.alibaba.druid.support.http.WebStatFilter;

import com.alibaba.druid.wall.WallConfig;

import com.alibaba.druid.wall.WallFilter;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.jta.atomikos.AtomikosDataSourceBean;

import org.springframework.boot.web.servlet.FilterRegistrationBean;

import org.springframework.boot.web.servlet.ServletRegistrationBean;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.core.env.Environment;

import java.util.Properties;

/**

* Multiple data sources and Druid configuration

*

* @author leilei

*/

@Configuration

public class DruidConfig {

/**

* Data source 1 configuration supports multi data source transactions using AtomikosDataSourceBean

*

* @param env

* @return Primary Specify the master database (you must specify a master database or an error will be reported)

*/

@Bean(name = "MybatisPlusOneDataSource")

@Primary

@Autowired

public AtomikosDataSourceBean oneDataSource(Environment env) {

AtomikosDataSourceBean ds = new AtomikosDataSourceBean();

Properties prop = build(env, "spring.datasource.druid.one.");

ds.setXaDataSourceClassName("com.alibaba.druid.pool.xa.DruidXADataSource");

ds.setUniqueResourceName("oneDataSource");

ds.setPoolSize(5);

ds.setXaProperties(prop);

return ds;

}

/**

* Data source 2 configuration supports multi data source transactions using AtomikosDataSourceBean

*

* @param env

* @return

*/

@Autowired

@Bean(name = "MybatisPlusTwoDataSource")

public AtomikosDataSourceBean twoDataSource(Environment env) {

AtomikosDataSourceBean ds = new AtomikosDataSourceBean();

Properties prop = build(env, "spring.datasource.druid.two.");

ds.setXaDataSourceClassName("com.alibaba.druid.pool.xa.DruidXADataSource");

ds.setUniqueResourceName("twoDataSource");

ds.setPoolSize(5);

ds.setXaProperties(prop);

return ds;

}

@Autowired

@Bean(name = "MybatisPlusThreeDataSource")

public AtomikosDataSourceBean threeDataSource(Environment env) {

AtomikosDataSourceBean ds = new AtomikosDataSourceBean();

Properties prop = build(env, "spring.datasource.druid.three.");

ds.setXaDataSourceClassName("com.alibaba.druid.pool.xa.DruidXADataSource");

ds.setUniqueResourceName("threeDataSource");

ds.setPoolSize(5);

ds.setXaProperties(prop);

return ds;

}

// /**

// * Inject the thing Manager

// * @return

// */

// @Bean(name = "leijta")

// public JtaTransactionManager regTransactionManager () {

// UserTransactionManager userTransactionManager = new UserTransactionManager();

// UserTransaction userTransaction = new UserTransactionImp();

// return new JtaTransactionManager(userTransaction, userTransactionManager);

// }

/**

* Load data source information from configuration file

*

* @param env

* @param prefix

* @return

*/

private Properties build(Environment env, String prefix) {

Properties prop = new Properties();

prop.put("url", env.getProperty(prefix + "url"));

prop.put("username", env.getProperty(prefix + "username"));

prop.put("password", env.getProperty(prefix + "password"));

prop.put("driverClassName", env.getProperty(prefix + "driverClassName", ""));

prop.put("initialSize", env.getProperty(prefix + "initialSize", Integer.class));

prop.put("maxActive", env.getProperty(prefix + "maxActive", Integer.class));

prop.put("minIdle", env.getProperty(prefix + "minIdle", Integer.class));

prop.put("maxWait", env.getProperty(prefix + "maxWait", Integer.class));

prop.put("poolPreparedStatements", env.getProperty(prefix + "poolPreparedStatements", Boolean.class));

prop.put("maxPoolPreparedStatementPerConnectionSize",

env.getProperty(prefix + "maxPoolPreparedStatementPerConnectionSize", Integer.class));

prop.put("maxPoolPreparedStatementPerConnectionSize",

env.getProperty(prefix + "maxPoolPreparedStatementPerConnectionSize", Integer.class));

prop.put("validationQuery", env.getProperty(prefix + "validationQuery"));

prop.put("validationQueryTimeout", env.getProperty(prefix + "validationQueryTimeout", Integer.class));

prop.put("testOnBorrow", env.getProperty(prefix + "testOnBorrow", Boolean.class));

prop.put("testOnReturn", env.getProperty(prefix + "testOnReturn", Boolean.class));

prop.put("testWhileIdle", env.getProperty(prefix + "testWhileIdle", Boolean.class));

prop.put("timeBetweenEvictionRunsMillis",

env.getProperty(prefix + "timeBetweenEvictionRunsMillis", Integer.class));

prop.put("minEvictableIdleTimeMillis", env.getProperty(prefix + "minEvictableIdleTimeMillis", Integer.class));

prop.put("filters", env.getProperty(prefix + "filters"));

return prop;

}

/**

* druid Access configuration

*

* @return

*/

@Bean

public ServletRegistrationBean druidServlet() {

ServletRegistrationBean servletRegistrationBean = new ServletRegistrationBean(new StatViewServlet(), "/druid/*");

//Console management user, add the following 2 lines to enter druid background, you need to log in

servletRegistrationBean.addInitParameter("loginUsername", "leilei");

servletRegistrationBean.addInitParameter("loginPassword", "123456");

return servletRegistrationBean;

}

@Bean

public FilterRegistrationBean filterRegistrationBean() {

FilterRegistrationBean filterRegistrationBean = new FilterRegistrationBean();

filterRegistrationBean.setFilter(new WebStatFilter());

filterRegistrationBean.addUrlPatterns("/*");

filterRegistrationBean.addInitParameter("exclusions", "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*");

filterRegistrationBean.addInitParameter("profileEnable", "true");

return filterRegistrationBean;

}

@Bean

public StatFilter statFilter() {

StatFilter statFilter = new StatFilter();

//slowSqlMillis is used to configure the standard of slow SQL. If the execution time exceeds slowSqlMillis, it is slow.

statFilter.setLogSlowSql(true);

//SQL merge configuration

statFilter.setMergeSql(true);

//The default value for slowSqlMillis is 3000, which is 3 seconds.

statFilter.setSlowSqlMillis(1000);

return statFilter;

}

@Bean

public WallFilter wallFilter() {

WallFilter wallFilter = new WallFilter();

//Allow multiple SQL execution

WallConfig config = new WallConfig();

config.setMultiStatementAllow(true);

wallFilter.setConfig(config);

return wallFilter;

}

}

In DruidConfig, we can see that the Bean of each data source is of the type AtomikosDataSourceBean. As long as the data source is of this type, under multiple data sources, type the transaction annotation @ Transactional provided by Spring

AtomikosDataSourceBean type data source

/**

* Data source 1 configuration supports multi data source transactions using AtomikosDataSourceBean

*

* @param env

* @return Primary Specify the master database (you must specify a master database or an error will be reported)

*/

@Bean(name = "MybatisPlusOneDataSource")

@Primary

@Autowired

public AtomikosDataSourceBean oneDataSource(Environment env) {

AtomikosDataSourceBean ds = new AtomikosDataSourceBean();

Properties prop = build(env, "spring.datasource.druid.one.");

ds.setXaDataSourceClassName("com.alibaba.druid.pool.xa.DruidXADataSource");

ds.setUniqueResourceName("oneDataSource");

ds.setPoolSize(5);

ds.setXaProperties(prop);

return ds;

}

Configure sqlsessionfactory sqlSessionTemplate for each data source

The sqlsessionfactory sqlSessionTemplate of my first data source is pasted here. No matter how many data sources there are, you can change the package path according to my configuration, @ Qualifier can select the corresponding data source, and change the mpper.xml path

Note that there must be all related data sources (sqlsessionfactory sqlsessiontemplate) of a data source. Use the * * @ Primary * * annotation to specify a main database, or an error will be initiated.

package com.leilei.config;

import com.baomidou.mybatisplus.core.MybatisConfiguration;

import com.baomidou.mybatisplus.extension.plugins.PaginationInterceptor;

import com.baomidou.mybatisplus.extension.spring.MybatisSqlSessionFactoryBean;

import org.apache.ibatis.logging.stdout.StdOutImpl;

import org.apache.ibatis.session.SqlSessionFactory;

import org.mybatis.spring.SqlSessionTemplate;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import javax.sql.DataSource;

/**

* @author leilei

*/

@Configuration

@MapperScan(basePackages = "com.leilei.mapper.one", sqlSessionFactoryRef = "oneSqlSessionFactory")

public class OneDataSourceConfig {

@Primary

@Bean(name = "oneSqlSessionFactory")

public SqlSessionFactory sqlSessionFactory(@Qualifier("MybatisPlusOneDataSource") DataSource dataSource) throws Exception {

//Configure myabtisSqlSession

MybatisSqlSessionFactoryBean sessionFactoryBean = new MybatisSqlSessionFactoryBean();

// Indicate the mapper.xml location (the XML location specified in the configuration file will be invalid and replaced in this way, for unknown reasons)

sessionFactoryBean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath:com/leilei/mapper/one/*/*Mapper.xml"));

// Indicates the entity scan (multiple package s are separated by commas or semicolons)

sessionFactoryBean.setTypeAliasesPackage("com.leilei.entity.one");

MybatisConfiguration mybatisConfiguration = new MybatisConfiguration();

// mybatisConfiguration.setJdbcTypeForNull(JdbcType.NULL);

//hump

mybatisConfiguration.setMapUnderscoreToCamelCase(true);

//Open cache or not

mybatisConfiguration.setCacheEnabled(false);

//Paging mode with multiple data sources

mybatisConfiguration.addInterceptor(new PaginationInterceptor());

// Configure print sql statements

mybatisConfiguration.setLogImpl(StdOutImpl.class);

sessionFactoryBean.setConfiguration(mybatisConfiguration);

//Data source injection

sessionFactoryBean.setDataSource(dataSource);

return sessionFactoryBean.getObject();

}

@Primary

@Bean(name = "oneSqlSessionTemplate")

public SqlSessionTemplate sqlSessionTemplate(@Qualifier("oneSqlSessionFactory") SqlSessionFactory sqlSessionFactory) {

return new SqlSessionTemplate(sqlSessionFactory);

}

}

The sqlsessionfactory sqlSessionTemplate of other data sources can follow my configuration. Remove the @ Primary annotation and note that the Bean name is set as unique.

In fact, transaction management and Druid monitoring under multiple data sources have been integrated

4, Points to be noted in this paper

1. Import atomikos dependency

2.mysql driver is version 5

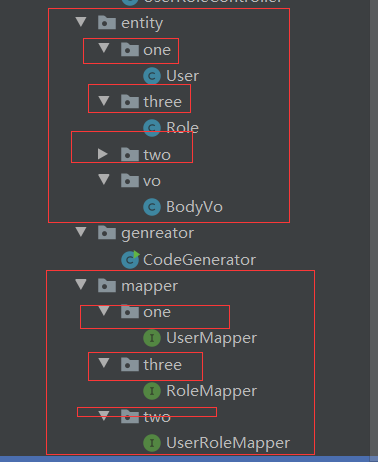

3. Packet path Division

When using the code generator, separate the mapper, entity, mapper.xml package path of each data source

4. Multiple data sources also need @ Transactional annotation

5, Multi data source transaction test

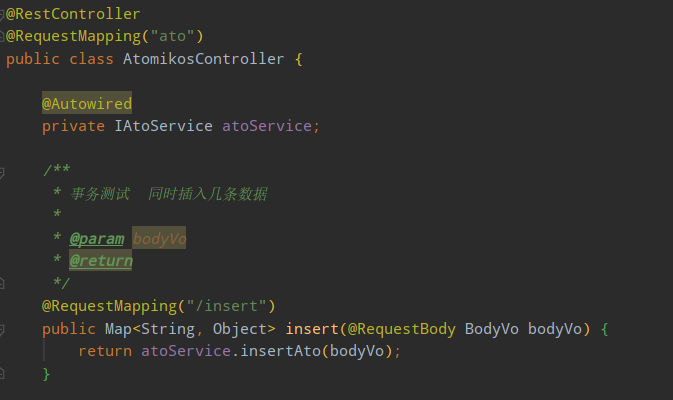

Transaction testing

In one method, call multiple data sources to insert data, and make an exception in the middle. After an error is reported, view the data rollback·

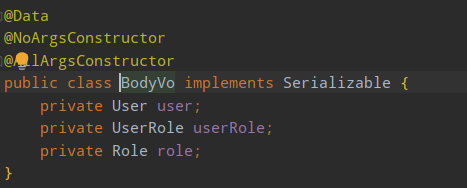

body encapsulates an object for me. Because this article designs three objects, I am going to insert data test transactions into three data sources

Operation database

@Override

@Transactional //The data source is configured with AtomikosDataSourceBean, and then this annotation can achieve multi data source transaction control

public Map<String, Object> insertAto(BodyVo bodyVo) {

userMapper.insert(bodyVo.getUser());

userRoleMapper.insert(bodyVo.getUserRole());

int a = 1 / 0; //Manufacturing exception

roleMapper.insert(bodyVo.getRole());

HashMap<String, Object> map = new HashMap<>();

map.put("user", bodyVo.getUser());

map.put("user_role", bodyVo.getUserRole());

map.put("role", bodyVo.getRole());

return map;

}

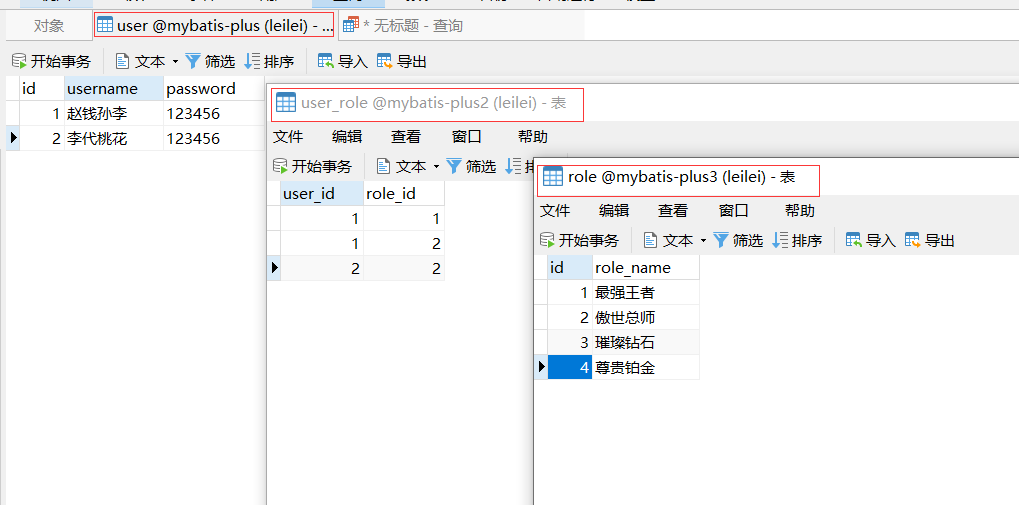

Database data before test started

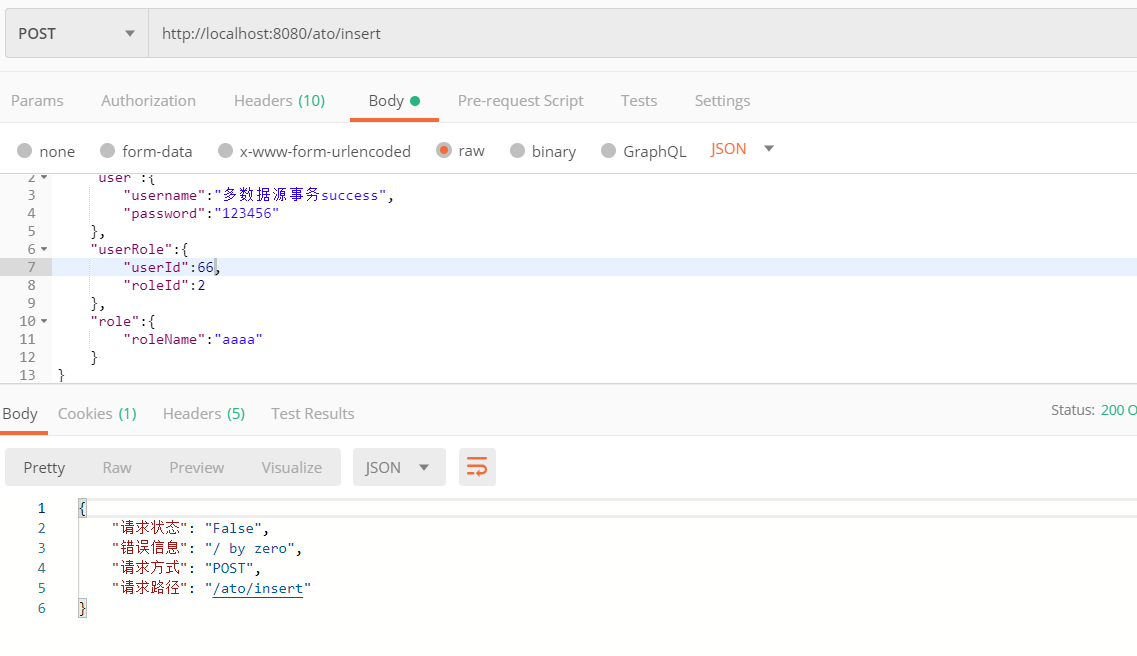

Run the test result, you can see that an exception has been reported

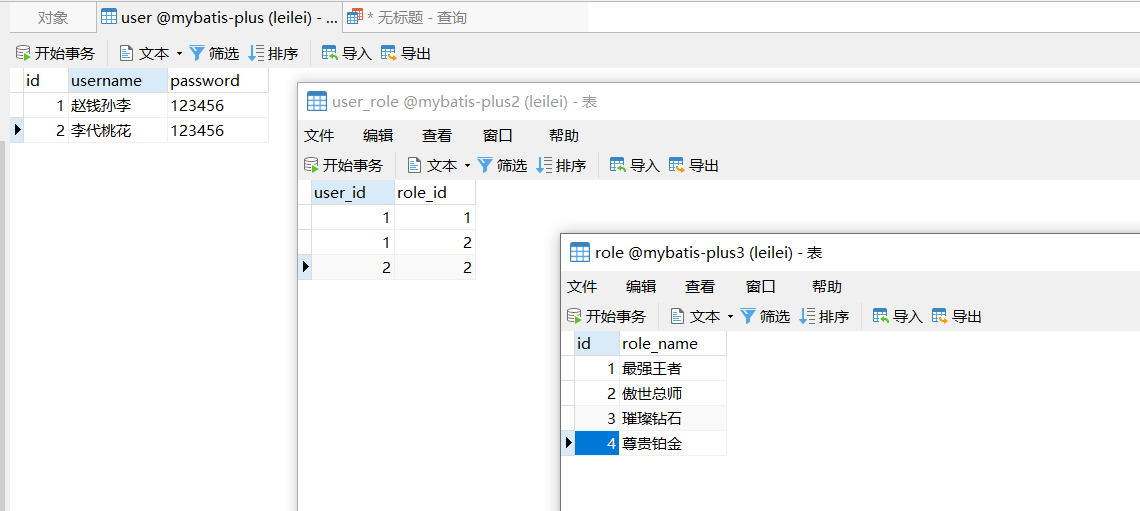

Then, check the data again. After refreshing, it is found that the data has not changed

So, the transaction problem under multiple data sources has been solved!!!!!

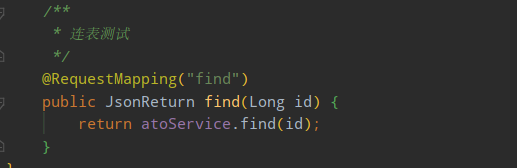

Mp paging and cross table query under multiple data sources

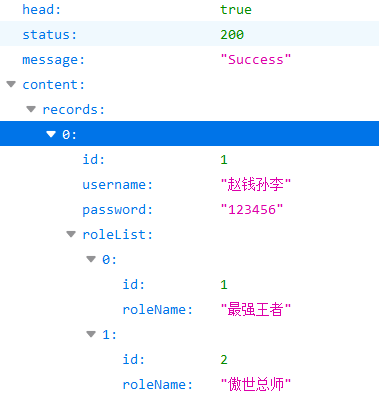

In this paper, three tables of a many to many relationship are put into three databases

User first query Perspective

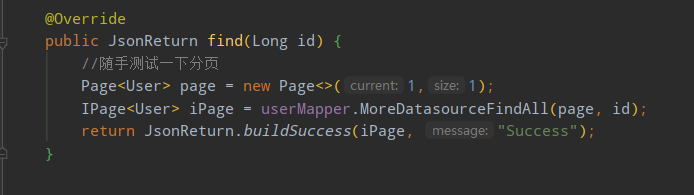

In this paper, usermapper.MoreDatasourceFindAll is a user-defined method. In order to have paging effect, a Page object must be constructed. The Page object and query criteria are passed to UserMapper.interface as parameters, and then the mapper.xml operation database

IPage<User> MoreDatasourceFindAll(Page<User> page, @Param("id") Long id);

<!-- General query mapping results -->

<resultMap id="BaseResultMap" type="com.leilei.entity.one.User">

<id column="id" property="id"/>

<result column="username" property="username"/>

<result column="password" property="password"/>

<collection property="roleList" javaType="list" ofType="com.leilei.entity.three.Role">

<id column="roleid" property="id"/>

<result column="rolename" property="roleName"/>

</collection>

</resultMap>

<select id="MoreDatasourceFindAll" resultMap="BaseResultMap">

SELECT mpu.*,mp3r.id roleid,mp3r.role_name rolename

FROM `mybatis-plus`.`user` mpu ,`

mybatis-plus2`.user_role mp2ur,

`mybatis-plus3`.role mp3r

<where>

and mp2ur.user_id=mpu.id AND mp2ur.role_id=mp3r.id

<if test="id!=null and id !='' ">

AND mpu.id=#{id}

</if>

</where>

</select>

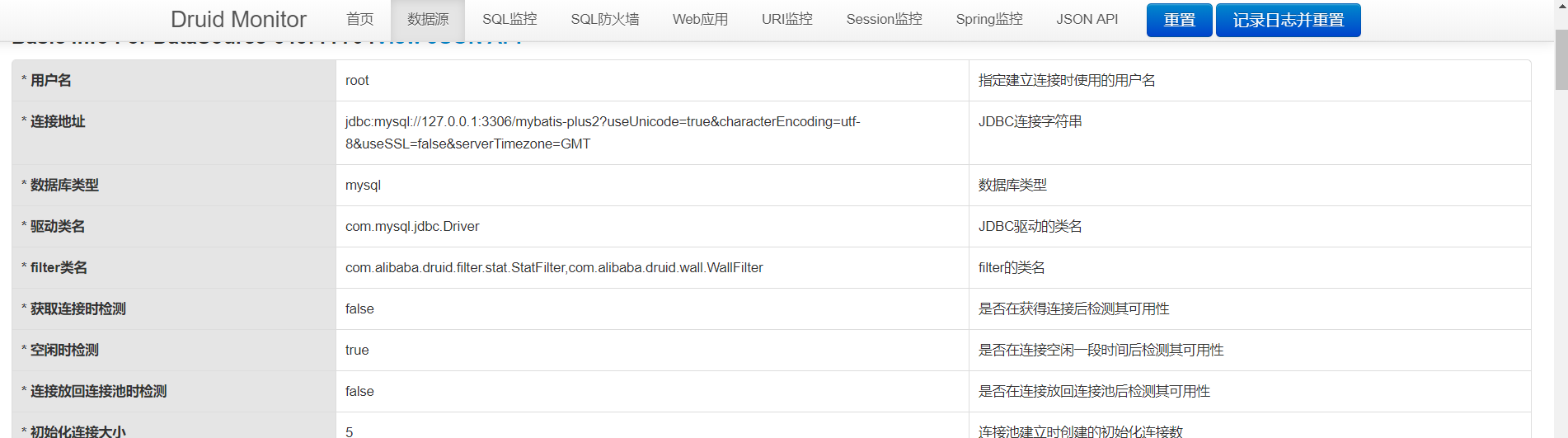

View Druid monitoring

Found three data sources arranged here

Url monitoring

epilogue

So far, the integration of this project is over, with my project source code attached

Spring boot integrates mybatis plus (2) multi data source Druid monitoring, Atomikos deals with transactions, cross database and linked table query