Using virtual nodes to improve k8s cluster capacity and flexibility

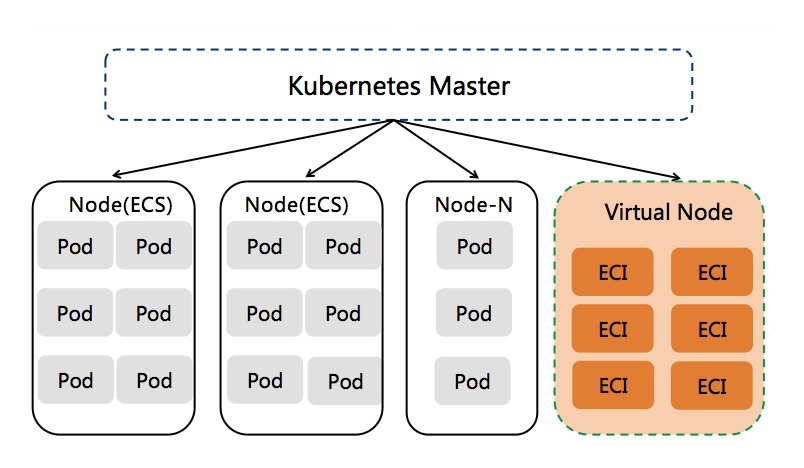

Adding virtual nodes to a Kubernetes cluster is a pattern that many customers now use. Virtual nodes greatly increase a cluster's Pod capacity and elasticity: ECI Pods are created dynamically and on demand, which removes much of the burden of cluster capacity planning. Virtual nodes are commonly used in the following scenarios.

- Peak-and-trough elasticity for online services: industries such as online education and e-commerce have pronounced peaks and troughs in compute demand. Virtual nodes significantly reduce the fixed resource pool that has to be maintained, and with it the computing cost.

- Increasing cluster Pod capacity: a cluster in the traditional flannel network mode may be unable to add more nodes because of limits on VPC route table entries or vSwitch network planning. Virtual nodes avoid these limits and raise the cluster's Pod capacity quickly and simply.

- Data computing: hosting Spark, Presto, and similar compute workloads on virtual nodes effectively reduces the computing cost.

- CI/CD and other Job type tasks

Next, we show how to use virtual nodes to quickly create 10000 pods. These ECI pods are billed on demand and do not consume capacity from the fixed node resource pool.

By comparison, AWS EKS allowed at most 1000 Fargate pods per cluster at the time of writing, whereas virtual nodes make it easy to run more than 10000 ECI pods.

Create multiple virtual nodes

Please refer to the ACK product documentation to deploy the virtual node first: https://help.aliyun.com/document_detail/118970.html

Because multiple virtual nodes are typically used to run a large number of ECI pods, we recommend checking the VPC, vSwitch, and security group configuration carefully and making sure the vSwitches have enough free IP addresses (a virtual node can be configured with multiple vSwitches to address IP capacity). Using an enterprise security group lifts the 2000-instance limit of an ordinary security group.

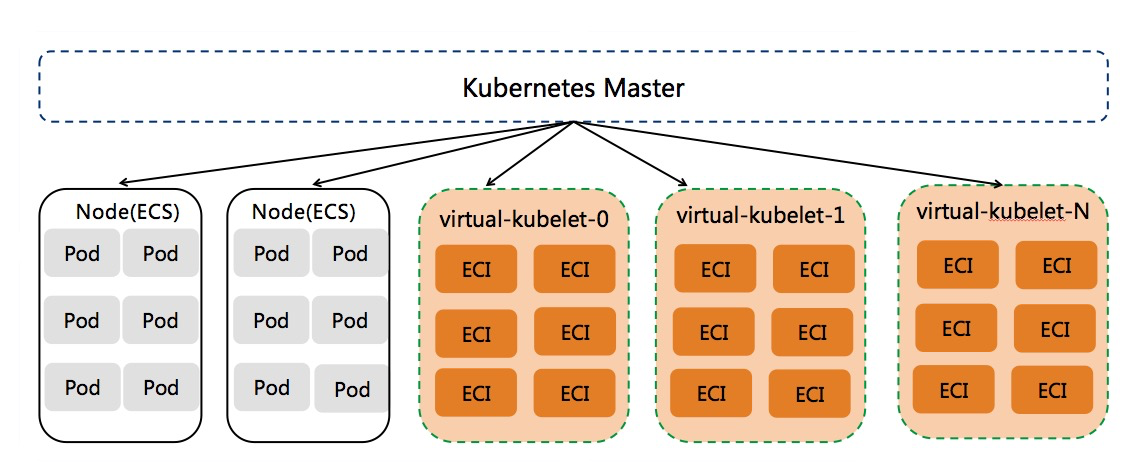

As a general rule, if a single Kubernetes cluster runs fewer than 3000 ECI pods, we recommend deploying a single virtual node. To run more pods on virtual nodes, deploy multiple virtual nodes in the cluster and scale them horizontally. This relieves the pressure on any single virtual node and supports a larger ECI pod capacity: at roughly 3000 pods per virtual node, three virtual nodes support 9000 ECI pods and 10 virtual nodes support 30000.

To make horizontal scaling of virtual nodes simple, we deploy the vk controller as a StatefulSet. Each vk controller manages one vk node, and the StatefulSet has 1 replica by default. When more virtual nodes are needed, only the StatefulSet's replica count has to be changed.
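
The sizing rule above is a ceiling division. The sketch below is only an illustration: the function name `vk_nodes_needed` and the variable `PODS_PER_VK_NODE` are made up for this example, and the figure of 3000 pods per node is the guideline from this article, not a hard limit read from the cluster.

```shell
# Guideline from the article: about 3000 ECI pods per virtual node.
PODS_PER_VK_NODE=3000

# vk_nodes_needed TARGET_PODS
# Prints how many virtual nodes are needed for TARGET_PODS (rounded up).
# The body runs in a subshell, so "target" does not leak into the caller.
vk_nodes_needed() (
  target=$1
  echo $(( (target + PODS_PER_VK_NODE - 1) / PODS_PER_VK_NODE ))
)

vk_nodes_needed 10000   # prints 4
```

For 10000 pods this gives 4 virtual nodes, which matches the replica count used in the rest of this walkthrough.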

    # kubectl -n kube-system scale statefulset virtual-node-eci --replicas=4
    statefulset.apps/virtual-node-eci scaled

    # kubectl get no
    NAME                      STATUS   ROLES    AGE   VERSION
    cn-hangzhou.192.168.1.1   Ready    <none>   63d   v1.12.6-aliyun.1
    cn-hangzhou.192.168.1.2   Ready    <none>   63d   v1.12.6-aliyun.1
    virtual-node-eci-0        Ready    agent    1m    v1.11.2-aliyun-1.0.207
    virtual-node-eci-1        Ready    agent    1m    v1.11.2-aliyun-1.0.207
    virtual-node-eci-2        Ready    agent    1m    v1.11.2-aliyun-1.0.207
    virtual-node-eci-3        Ready    agent    1m    v1.11.2-aliyun-1.0.207

    # kubectl -n kube-system get statefulset virtual-node-eci
    NAME               READY   AGE
    virtual-node-eci   4/4     1m

    # kubectl -n kube-system get pod|grep virtual-node-eci
    virtual-node-eci-0   1/1   Running   0   1m
    virtual-node-eci-1   1/1   Running   0   1m
    virtual-node-eci-2   1/1   Running   0   1m
    virtual-node-eci-3   1/1   Running   0   1m
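
If the scale-out is scripted rather than typed by hand, it can help to block until every virtual node has registered. The following is a minimal sketch under some assumptions: the function name `scale_virtual_nodes`, the `KUBECTL` override, and the 5-minute timeouts are all choices made for this example. Note that `kubectl wait` can fail if the node object does not exist yet, so a retry may be needed on a slow cluster.

```shell
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the
# commands without touching a cluster.
KUBECTL=${KUBECTL:-kubectl}

# scale_virtual_nodes REPLICAS
# Resizes the virtual-node-eci StatefulSet, waits for the rollout, then
# waits for each node virtual-node-eci-0 .. virtual-node-eci-(REPLICAS-1).
scale_virtual_nodes() (
  replicas=$1
  $KUBECTL scale statefulset virtual-node-eci -n kube-system --replicas="$replicas" || exit 1
  $KUBECTL rollout status statefulset/virtual-node-eci -n kube-system --timeout=5m || exit 1
  i=0
  while [ "$i" -lt "$replicas" ]; do
    $KUBECTL wait --for=condition=Ready "node/virtual-node-eci-$i" --timeout=5m || exit 1
    i=$((i + 1))
  done
)

# Example: scale_virtual_nodes 4
```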

When we create several nginx pods in the vk namespace (the namespace carries a label that forces the pods in it to be scheduled onto virtual nodes), we can see that the pods are spread across multiple vk nodes.

    # kubectl create ns vk
    # kubectl label namespace vk virtual-node-affinity-injection=enabled
    # kubectl -n vk run nginx --image nginx:alpine --replicas=10
    deployment.extensions/nginx scaled

    # kubectl -n vk get pod -o wide
    NAME                     READY   STATUS    RESTARTS   AGE   IP             NODE                 NOMINATED NODE   READINESS GATES
    nginx-546c47b569-blp88   1/1     Running   0          69s   192.168.1.26   virtual-node-eci-1   <none>           <none>
    nginx-546c47b569-c4qbw   1/1     Running   0          69s   192.168.1.76   virtual-node-eci-0   <none>           <none>
    nginx-546c47b569-dfr2v   1/1     Running   0          69s   192.168.1.27   virtual-node-eci-2   <none>           <none>
    nginx-546c47b569-jfzxl   1/1     Running   0          69s   192.168.1.68   virtual-node-eci-1   <none>           <none>
    nginx-546c47b569-mpmsv   1/1     Running   0          69s   192.168.1.66   virtual-node-eci-1   <none>           <none>
    nginx-546c47b569-p4qlz   1/1     Running   0          69s   192.168.1.67   virtual-node-eci-3   <none>           <none>
    nginx-546c47b569-x4vrn   1/1     Running   0          69s   192.168.1.65   virtual-node-eci-2   <none>           <none>
    nginx-546c47b569-xmxx9   1/1     Running   0          69s   192.168.1.30   virtual-node-eci-0   <none>           <none>
    nginx-546c47b569-xznd8   1/1     Running   0          69s   192.168.1.77   virtual-node-eci-3   <none>           <none>
    nginx-546c47b569-zk9zc   1/1     Running   0          69s   192.168.1.75   virtual-node-eci-2   <none>           <none>
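
With thousands of pods, reading the listing by eye no longer works, so a per-node count is more useful. The helper below is a sketch, not part of the ACK tooling: the name `pods_per_node` is made up, and it assumes the default `-o wide` layout shown above, where NODE is the 7th whitespace-separated column (newer kubectl versions may print restart times that shift the columns).

```shell
# pods_per_node
# Reads `kubectl get pod -o wide` output on stdin (header line included)
# and prints one "<node> <pod count>" line per node, sorted by node name.
pods_per_node() {
  awk 'NR > 1 { count[$7]++ } END { for (node in count) print node, count[node] }' | sort
}

# Usage against a live cluster:
#   kubectl -n vk get pod -o wide | pods_per_node
```

For the ten nginx pods listed above it reports 2 pods on virtual-node-eci-0, 3 on virtual-node-eci-1, 3 on virtual-node-eci-2, and 2 on virtual-node-eci-3.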

Run 10000 ECI pods

In the steps above we created 4 virtual nodes, which together can support 12000 ECI pods, so all that remains is to schedule the workload onto the virtual nodes. The point to watch here is the scalability of kube-proxy.

- By default, an ECI Pod created by a virtual node can access ClusterIP Services in the cluster. To do so, each ECI Pod keeps a watch connection to the API server to listen for changes to Services and Endpoints. When a large number of pods run at the same time, the API server and its SLB have to hold a number of concurrent connections that grows with the number of pods, so make sure the SLB specification can support the expected number of concurrent connections.

- If the ECI Pods do not need to access ClusterIP Services, set the ECI_KUBE_PROXY environment variable of the virtual-node-eci StatefulSet to "false". This avoids the large number of concurrent SLB connections and also reduces the load on the API server.

- Alternatively, expose the Services that the ECI Pods need to access as intranet SLB Services and resolve them through PrivateZone, so that the ECI Pods can reach Services in the cluster without relying on kube-proxy.
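
The second option above, turning kube-proxy off for ECI pods, could be applied with `kubectl set env`. This is a sketch under assumptions: the variable name `ECI_KUBE_PROXY` is assumed here and should be confirmed against the ACK virtual node documentation for your version, and the function name `set_eci_kube_proxy` and the `KUBECTL` override are made up for this example. Changing the environment restarts the vk controller pods.

```shell
# KUBECTL can be overridden (for example KUBECTL=echo) for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# set_eci_kube_proxy VALUE
# Sets the (assumed) ECI_KUBE_PROXY variable on the virtual-node-eci
# StatefulSet. VALUE must be "true" or "false".
set_eci_kube_proxy() (
  value=$1
  case "$value" in
    true|false) ;;
    *) echo "value must be true or false" >&2; exit 2 ;;
  esac
  $KUBECTL set env statefulset/virtual-node-eci -n kube-system "ECI_KUBE_PROXY=$value"
)

# Example: set_eci_kube_proxy false
```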

Reduce the number of vk virtual nodes

Because the ECI pods on a vk node are created on demand, a vk virtual node with no ECI pods does not occupy any actual resources, so there is generally no need to reduce the number of vk nodes. If you do need to reduce it, we recommend the following steps.

Suppose the cluster currently has four virtual nodes, virtual-node-eci-0 through virtual-node-eci-3. To go down to one virtual node, we need to delete three nodes: virtual-node-eci-1 through virtual-node-eci-3.

- First, take the vk nodes offline gracefully: evict their pods to other nodes and prevent new pods from being scheduled onto the vk nodes that are about to be deleted.

      # kubectl drain virtual-node-eci-1 virtual-node-eci-2 virtual-node-eci-3

      # kubectl get no
      NAME                      STATUS                     ROLES    AGE    VERSION
      cn-hangzhou.192.168.1.1   Ready                      <none>   66d    v1.12.6-aliyun.1
      cn-hangzhou.192.168.1.2   Ready                      <none>   66d    v1.12.6-aliyun.1
      virtual-node-eci-0        Ready                      agent    3d6h   v1.11.2-aliyun-1.0.207
      virtual-node-eci-1        Ready,SchedulingDisabled   agent    3d6h   v1.11.2-aliyun-1.0.207
      virtual-node-eci-2        Ready,SchedulingDisabled   agent    3d6h   v1.11.2-aliyun-1.0.207
      virtual-node-eci-3        Ready,SchedulingDisabled   agent    66m    v1.11.2-aliyun-1.0.207

The vk nodes must be taken offline gracefully first because the ECI pods on a vk node are managed by its vk controller. If the vk controller is deleted while ECI pods still exist on its vk node, those ECI pods are left behind and no vk controller can manage them any longer.

- After the vk nodes are offline, reduce the replica count of the virtual-node-eci StatefulSet to the number of vk nodes we want to keep.

      # kubectl -n kube-system scale statefulset virtual-node-eci --replicas=1
      statefulset.apps/virtual-node-eci scaled

      # kubectl -n kube-system get pod|grep virtual-node-eci
      virtual-node-eci-0   1/1   Running   0   3d6h

After a while, we will find that those vk nodes become NotReady.

    # kubectl get no
    NAME                      STATUS                        ROLES    AGE    VERSION
    cn-hangzhou.192.168.1.1   Ready                         <none>   66d    v1.12.6-aliyun.1
    cn-hangzhou.192.168.1.2   Ready                         <none>   66d    v1.12.6-aliyun.1
    virtual-node-eci-0        Ready                         agent    3d6h   v1.11.2-aliyun-1.0.207
    virtual-node-eci-1        NotReady,SchedulingDisabled   agent    3d6h   v1.11.2-aliyun-1.0.207
    virtual-node-eci-2        NotReady,SchedulingDisabled   agent    3d6h   v1.11.2-aliyun-1.0.207
    virtual-node-eci-3        NotReady,SchedulingDisabled   agent    70m    v1.11.2-aliyun-1.0.207

- Finally, manually delete the virtual nodes that are in the NotReady state.

      # kubectl delete no virtual-node-eci-1 virtual-node-eci-2 virtual-node-eci-3
      node "virtual-node-eci-1" deleted
      node "virtual-node-eci-2" deleted
      node "virtual-node-eci-3" deleted

      # kubectl get no
      NAME                      STATUS   ROLES    AGE    VERSION
      cn-hangzhou.192.168.1.1   Ready    <none>   66d    v1.12.6-aliyun.1
      cn-hangzhou.192.168.1.2   Ready    <none>   66d    v1.12.6-aliyun.1
      virtual-node-eci-0        Ready    agent    3d6h   v1.11.2-aliyun-1.0.207
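
The first two steps of this procedure can be sketched as a small script. It relies on the fact that a StatefulSet removes its highest ordinals first, so keeping N replicas retains virtual-node-eci-0 through virtual-node-eci-(N-1). The function names `vk_nodes_to_remove` and `drain_and_shrink` and the `KUBECTL` override are made up for this example; treat it as an illustration rather than a supported tool.

```shell
# KUBECTL can be overridden (for example KUBECTL=echo) for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# vk_nodes_to_remove KEEP CURRENT
# Prints the names of the nodes that go away when the StatefulSet shrinks
# from CURRENT to KEEP replicas.
vk_nodes_to_remove() (
  keep=$1
  current=$2
  names=""
  i=$keep
  while [ "$i" -lt "$current" ]; do
    names="$names virtual-node-eci-$i"
    i=$((i + 1))
  done
  echo $names   # unquoted on purpose: drops the leading space
)

# drain_and_shrink KEEP CURRENT
# Drains the nodes to be removed, then scales the StatefulSet down.
drain_and_shrink() (
  keep=$1
  nodes=$(vk_nodes_to_remove "$keep" "$2")
  [ -n "$nodes" ] || exit 0                # nothing to remove
  $KUBECTL drain $nodes || exit 1          # word splitting intended: one argument per node
  $KUBECTL scale statefulset virtual-node-eci -n kube-system --replicas="$keep"
)

# Example: drain_and_shrink 1 4
```

Once the drained nodes show NotReady, the same list can be passed to the final step, for example `kubectl delete node $(vk_nodes_to_remove 1 4)`.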

See more: https://yqh.aliyun.com/detail/6738?utm_content=g_1000106525
