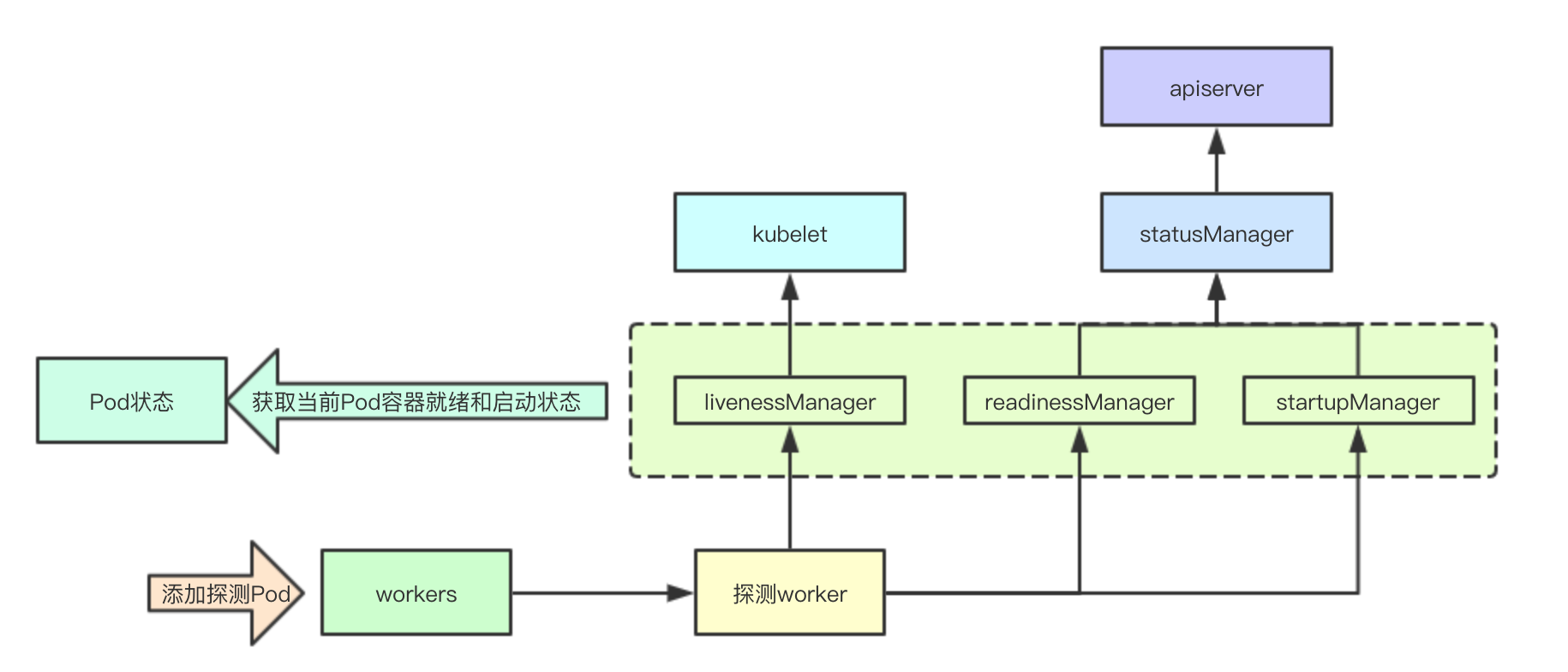

The kubelet in k8s includes a Manager component for container probing. It manages the underlying probe workers, caches the current probe state of each container, and exposes that state to other components. Today we will analyze some of its core components.

1. Implementation of Core Principles

The state cached by the Manager is consumed mainly by the kubelet and the status component. When a Pod is synchronized, the probe results held in the Manager are used to update each container's ready state and started state. Let's take a look at some key implementations of the Manager itself.

2. Probe Result Management

This is the prober/results/results_manager component, which stores probe results and notifies subscribers when a result changes.

2.1 Core Data Structure

The cache field holds the latest probe result for each container, and updates is the channel on which external subscribers receive changes. Whether an update is published is decided by comparing the new result with the one already in the cache.

    // Manager implementation.
    type manager struct {
        // Guards the cache
        sync.RWMutex
        // Container ID -> probe result
        cache map[kubecontainer.ContainerID]Result
        // Channel on which updates are published
        updates chan Update
    }

2.2 Updating the Cache and Publishing Events

Set updates the cache and compares the previous and new results. If they differ, it publishes a change event so that external subscribers, such as the kubelet core loop, learn that the container changed.

    func (m *manager) Set(id kubecontainer.ContainerID, result Result, pod *v1.Pod) {
        // Update the internal cache
        if m.setInternal(id, result) {
            // The result changed: publish an update event
            m.updates <- Update{id, result, pod.UID}
        }
    }

The internal update modifies the cache and decides whether an update needs to be published:

    // Returns true (an update should be published) if the cache had no
    // previous entry or if the result changed.
    func (m *manager) setInternal(id kubecontainer.ContainerID, result Result) bool {
        m.Lock()
        defer m.Unlock()
        prev, exists := m.cache[id]
        if !exists || prev != result {
            m.cache[id] = result
            return true
        }
        return false
    }
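To make the change-detection idea concrete, here is a minimal, self-contained sketch. It is not the kubelet code: `resultCache`, the plain string container ID, and the buffer size of 20 are assumptions made for this example. It only illustrates that a result is published when it is new or differs from the cached value.

```go
package main

import (
	"fmt"
	"sync"
)

// Result and Update are simplified stand-ins for the kubelet types.
type Result int

const (
	Success Result = iota
	Failure
)

type Update struct {
	ContainerID string
	Result      Result
}

// resultCache is a hypothetical, stripped-down results manager.
type resultCache struct {
	mu      sync.RWMutex
	cache   map[string]Result
	updates chan Update
}

func newResultCache() *resultCache {
	return &resultCache{
		cache:   make(map[string]Result),
		updates: make(chan Update, 20), // buffer size is an assumption
	}
}

// Set stores the result and publishes an Update only when the result is
// new or changed. It reports whether an update was published.
func (c *resultCache) Set(id string, r Result) bool {
	c.mu.Lock()
	prev, exists := c.cache[id]
	changed := !exists || prev != r
	if changed {
		c.cache[id] = r
	}
	c.mu.Unlock()

	if changed {
		c.updates <- Update{ContainerID: id, Result: r}
	}
	return changed
}

func main() {
	c := newResultCache()
	fmt.Println(c.Set("c1", Success)) // first result for c1
	fmt.Println(c.Set("c1", Success)) // same result again
	fmt.Println(c.Set("c1", Failure)) // result changed
	fmt.Println(len(c.updates))       // number of queued updates
}
```

Repeated identical results produce no event, so a subscriber only wakes up when a container's probe state actually flips.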

2.3 Exposing the Update Channel

    func (m *manager) Updates() <-chan Update {
        return m.updates
    }

3. Probe Manager

The Probe Manager is the Manager component in prober/prober_manager. It manages the probe workers on the current kubelet, caches the probe results, and synchronizes them to the apiserver through the statusManager.

3.1 Container Probe Key

Each probe key identifies the target to be probed: the UID of the Pod, the container name, and the probe type.

    type probeKey struct {
        podUID        types.UID
        containerName string
        probeType     probeType
    }
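Because every field of the key is comparable, the struct itself can be used directly as a Go map key. The sketch below illustrates that property only; it uses a plain string in place of `types.UID`, and `distinctKeys` is a hypothetical helper, not part of the kubelet.

```go
package main

import "fmt"

type probeType int

const (
	liveness probeType = iota
	readiness
	startup
)

// probeKey mirrors the struct above, with a string standing in for types.UID.
type probeKey struct {
	podUID        string
	containerName string
	probeType     probeType
}

// distinctKeys is a hypothetical helper: it counts how many different
// keys appear in the slice, relying on struct equality of map keys.
func distinctKeys(keys []probeKey) int {
	seen := make(map[probeKey]struct{})
	for _, k := range keys {
		seen[k] = struct{}{}
	}
	return len(seen)
}

func main() {
	keys := []probeKey{
		{"pod-1", "app", readiness},
		{"pod-1", "app", liveness},
		{"pod-1", "app", readiness}, // same as the first key
	}
	fmt.Println(distinctKeys(keys))
}
```

Two keys are equal only when the Pod, the container name, and the probe type all match, which is what lets one container have separate workers for each probe type.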

3.2 Core Data Structure

The statusManager component will be analyzed in detail in a later article. Note that livenessManager holds the liveness probe results, and a failed liveness probe is handled locally by the kubelet, while the results in readinessManager and startupManager need to be synchronized to the apiserver through statusManager.

    type manager struct {
        // Map from probe key to worker
        workers map[probeKey]*worker
        // Read-write lock guarding workers
        workerLock sync.RWMutex
        // statusManager provides the pod IP and container IDs for probing
        statusManager status.Manager
        // Stores readiness probe results
        readinessManager results.Manager
        // Stores liveness probe results
        livenessManager results.Manager
        // Stores startup probe results
        startupManager results.Manager
        // Performs the probe operations
        prober *prober
    }

3.3 Synchronizing Startup Probe Results

    func (m *manager) updateStartup() {
        // Receive the next update from the channel
        update := <-m.startupManager.Updates()
        started := update.Result == results.Success
        m.statusManager.SetContainerStartup(update.PodUID, update.ContainerID, started)
    }

3.4 Synchronizing Readiness Probe Results

    func (m *manager) updateReadiness() {
        update := <-m.readinessManager.Updates()
        ready := update.Result == results.Success
        m.statusManager.SetContainerReadiness(update.PodUID, update.ContainerID, ready)
    }

3.5 Starting the Background Synchronization Tasks

    func (m *manager) Start() {
        // Start syncing readiness.
        go wait.Forever(m.updateReadiness, 0)
        // Start syncing startup.
        go wait.Forever(m.updateStartup, 0)
    }
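The pattern in 3.3 to 3.5 is a background goroutine that blocks on the update channel and copies each result into the status store. Below is a rough standard-library sketch of that pattern. `statusStore` and `syncReadiness` are hypothetical names, and unlike the real loop (which runs forever via `wait.Forever`) this one stops when the channel is closed so the example can terminate.

```go
package main

import (
	"fmt"
	"sync"
)

type update struct {
	containerID string
	success     bool
}

// statusStore is a hypothetical stand-in for statusManager.
type statusStore struct {
	mu    sync.Mutex
	ready map[string]bool
}

func (s *statusStore) setReady(id string, ready bool) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.ready[id] = ready
}

func (s *statusStore) isReady(id string) bool {
	s.mu.Lock()
	defer s.mu.Unlock()
	return s.ready[id]
}

// syncReadiness copies every update into the store until the channel is
// closed, then calls done. Closing the channel is only for this demo.
func syncReadiness(updates <-chan update, store *statusStore, done func()) {
	defer done()
	for u := range updates {
		store.setReady(u.containerID, u.success)
	}
}

func main() {
	updates := make(chan update)
	store := &statusStore{ready: make(map[string]bool)}

	var wg sync.WaitGroup
	wg.Add(1)
	go syncReadiness(updates, store, wg.Done)

	updates <- update{"c1", true}
	updates <- update{"c2", false}
	close(updates)
	wg.Wait()

	fmt.Println(store.isReady("c1"), store.isReady("c2"))
}
```

Keeping the probe workers and the status writer on opposite ends of a channel means a worker never has to know how or where its result is reported.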

3.6 Adding Probes for a Pod

Adding a Pod iterates over all of its containers and creates a probe worker for each probe type that a container defines.

    func (m *manager) AddPod(pod *v1.Pod) {
        m.workerLock.Lock()
        defer m.workerLock.Unlock()

        key := probeKey{podUID: pod.UID}
        for _, c := range pod.Spec.Containers {
            key.containerName = c.Name

            // Build the probe worker for the startupProbe
            if c.StartupProbe != nil && utilfeature.DefaultFeatureGate.Enabled(features.StartupProbe) {
                key.probeType = startup
                if _, ok := m.workers[key]; ok {
                    klog.Errorf("Startup probe already exists! %v - %v", format.Pod(pod), c.Name)
                    return
                }
                // Create a new worker
                w := newWorker(m, startup, pod, c)
                m.workers[key] = w
                go w.run()
            }

            // Build the probe worker for the readinessProbe
            if c.ReadinessProbe != nil {
                key.probeType = readiness
                if _, ok := m.workers[key]; ok {
                    klog.Errorf("Readiness probe already exists! %v - %v", format.Pod(pod), c.Name)
                    return
                }
                w := newWorker(m, readiness, pod, c)
                m.workers[key] = w
                go w.run()
            }

            // Build the probe worker for the livenessProbe
            if c.LivenessProbe != nil {
                key.probeType = liveness
                if _, ok := m.workers[key]; ok {
                    klog.Errorf("Liveness probe already exists! %v - %v", format.Pod(pod), c.Name)
                    return
                }
                w := newWorker(m, liveness, pod, c)
                m.workers[key] = w
                go w.run()
            }
        }
    }
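The three branches of AddPod share one shape: build the key, refuse duplicates, register a worker. The following sketch condenses that shape into a loop. It is an illustration under assumptions: `registry`, `container`, and `addPod` are hypothetical names, a boolean stands in for the worker, and no goroutine is started.

```go
package main

import (
	"fmt"
	"sync"
)

type probeType string

type probeKey struct {
	podUID        string
	containerName string
	probeType     probeType
}

// container is a hypothetical simplification of v1.Container that only
// lists which probes are configured.
type container struct {
	name   string
	probes []probeType
}

type registry struct {
	mu      sync.Mutex
	workers map[probeKey]bool
}

// addPod registers one worker per configured probe and returns how many
// were added. Like AddPod above, it stops at the first duplicate key.
func (r *registry) addPod(podUID string, containers []container) (int, error) {
	r.mu.Lock()
	defer r.mu.Unlock()

	added := 0
	for _, c := range containers {
		for _, pt := range c.probes {
			key := probeKey{podUID, c.name, pt}
			if r.workers[key] {
				return added, fmt.Errorf("%s probe already exists for %s/%s", pt, podUID, c.name)
			}
			r.workers[key] = true // the real code starts a worker goroutine here
			added++
		}
	}
	return added, nil
}

func main() {
	r := &registry{workers: make(map[probeKey]bool)}
	pod := []container{
		{name: "app", probes: []probeType{"readiness", "liveness"}},
		{name: "sidecar", probes: []probeType{"startup"}},
	}

	n, err := r.addPod("pod-1", pod)
	fmt.Println(n, err) // first registration

	n, err = r.addPod("pod-1", pod)
	fmt.Println(n, err) // second registration hits a duplicate key
}
```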

3.7 Update Pod Status

Updating the Pod status sets the status of each container in the Pod from the probe results cached in the Manager. These are the most recently probed states of the Pod's containers. From them the kubelet determines whether each container is ready and started, which provides the basic data for the subsequent update process.

3.7.1 Container Status Update

    for i, c := range podStatus.ContainerStatuses {
        var ready bool
        // Check the container state
        if c.State.Running == nil {
            ready = false
        } else if result, ok := m.readinessManager.Get(kubecontainer.ParseContainerID(c.ContainerID)); ok {
            // A result is cached in readinessManager: ready if it is Success
            ready = result == results.Success
        } else {
            // No result yet. The container counts as ready only if no
            // readiness probe worker exists for it.
            _, exists := m.getWorker(podUID, c.Name, readiness)
            ready = !exists
        }
        podStatus.ContainerStatuses[i].Ready = ready

        var started bool
        if c.State.Running == nil {
            started = false
        } else if !utilfeature.DefaultFeatureGate.Enabled(features.StartupProbe) {
            // The container is running; if StartupProbe is disabled, assume it has started
            started = true
        } else if result, ok := m.startupManager.Get(kubecontainer.ParseContainerID(c.ContainerID)); ok {
            // A result is cached in startupManager: started if it is Success
            started = result == results.Success
        } else {
            // No result yet. The container counts as started only if no
            // startup probe worker exists for it.
            _, exists := m.getWorker(podUID, c.Name, startup)
            started = !exists
        }
        podStatus.ContainerStatuses[i].Started = &started
    }
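The readiness decision has three steps evaluated in order: is the container running, is there a cached probe result, and does a probe worker exist. As a sketch, that order can be written as a small pure function. `containerReady` and its parameters are hypothetical and only restate the branch order of the loop above.

```go
package main

import "fmt"

type result int

const (
	success result = iota
	failure
)

// containerReady restates the decision order of the loop above:
//  1. a container that is not running is never ready;
//  2. if a probe result is cached, ready means the result is success;
//  3. otherwise the container is ready only when it has no readiness worker.
func containerReady(running bool, cached result, hasCached bool, workerExists bool) bool {
	if !running {
		return false
	}
	if hasCached {
		return cached == success
	}
	return !workerExists
}

func main() {
	// Not running: the cached success is ignored.
	fmt.Println(containerReady(false, success, true, true))
	// Running with a cached failure.
	fmt.Println(containerReady(true, failure, true, true))
	// Running, no result yet, but a probe worker exists.
	fmt.Println(containerReady(true, success, false, true))
	// Running, no result, and no readiness probe configured.
	fmt.Println(containerReady(true, success, false, false))
}
```

The last case is why a container without a readiness probe is reported ready as soon as it is running, while one with a probe stays not ready until the first successful result arrives.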

3.7.2 Init Container Status Update

An init container is considered ready if it has terminated and exited with status code 0.

    for i, c := range podStatus.InitContainerStatuses {
        var ready bool
        // Ready only if the init container terminated with exit code 0
        if c.State.Terminated != nil && c.State.Terminated.ExitCode == 0 {
            ready = true
        }
        podStatus.InitContainerStatuses[i].Ready = ready
    }

3.8 Liveness Status Notification

Liveness notifications are handled in the core sync loop of the kubelet. If a container's liveness probe fails, the corresponding Pod is synchronized immediately so that the kubelet can decide what to do next.

    case update := <-kl.livenessManager.Updates():
        // If the probe failed
        if update.Result == proberesults.Failure {
            // (code omitted)
            handler.HandlePodSyncs([]*v1.Pod{pod})
        }
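For context, the fragment above is one case of a larger `select` loop. A simplified sketch of such a loop follows. `syncLoop`, the `stop` channel, and the `resync` callback are assumptions for this example, not the kubelet's actual signatures; the point is only that successful results are ignored and failures trigger an immediate resync of the Pod.

```go
package main

import "fmt"

type result int

const (
	success result = iota
	failure
)

type update struct {
	podUID string
	result result
}

// syncLoop waits on the liveness channel and calls resync for every
// failed probe. It returns when stop is closed.
func syncLoop(liveness <-chan update, stop <-chan struct{}, resync func(podUID string)) {
	for {
		select {
		case u := <-liveness:
			if u.result == failure {
				resync(u.podUID)
			}
		case <-stop:
			return
		}
	}
}

func main() {
	liveness := make(chan update)
	stop := make(chan struct{})
	done := make(chan struct{})

	var resynced []string
	go func() {
		defer close(done)
		syncLoop(liveness, stop, func(uid string) {
			resynced = append(resynced, uid)
		})
	}()

	liveness <- update{"pod-1", success}
	liveness <- update{"pod-2", failure}
	close(stop)
	<-done

	fmt.Println(resynced)
}
```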

That is roughly the overall design of probing. The next article will cover the statusManager component, which synchronizes the probed state to the apiserver. The k8s source reading e-book is at: https://www.yuque.com/baxiaoshi/tyado3

>WeChat ID: baxiaoshi2020

>Follow the official account to read more source analysis articles

>More articles: www.sreguide.com

>This article was published through OpenWrite, a multi-platform blog publishing tool
