Enterprise environment deployment: K8s multi-node deployment with load balancing and a UI page

Environment preparation:

Six CentOS 7 machines:

192.168.136.167 master01

192.168.136.168 node1

192.168.136.169 node2

192.168.136.170 master02

192.168.136.171 lb1

192.168.136.172 lb2

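VIP: 192.168.1.100

Note: the commands and output below use addresses in the 192.168.1.0/24 range rather than the ones listed above: master01 is 192.168.1.11, node1 is 192.168.1.12, node2 is 192.168.1.13, master02 is 192.168.1.14, and the two load balancers are 192.168.1.15 and 192.168.1.16. Substitute the addresses of your own machines.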

Experimental steps:

1: Self signed ETCD certificate

2: ETCD deployment

3: Node install docker

4: Flannel deployment (write subnet to etcd first)

---------master----------

5: Self signed APIServer certificate

6: Deploy the APIServer component (token.csv)

7: Deploy the controller manager (specify the apiserver certificate) and scheduler components

----------node----------

8: Generate the kubeconfig files (bootstrap.kubeconfig and kube-proxy.kubeconfig)

9: Deploy the kubelet component

10: Deploy the kube-proxy component

----------Join the cluster----------

11: Run kubectl get csr && kubectl certificate approve to issue the certificates that allow nodes to join the cluster

12: Add a node

13: View the nodes with kubectl get node

1, etcd cluster construction

1. On master01, self-sign the etcd certificates

[root@master ~]# mkdir k8s

[root@master ~]# cd k8s/

[root@master k8s]# mkdir etcd-cert

[root@master k8s]# mv etcd-cert.sh etcd-cert

[root@master k8s]# ls

etcd-cert etcd.sh

[root@master k8s]# vim cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

[root@master k8s]# bash cfssl.sh

[root@master k8s]# ls /usr/local/bin/

cfssl cfssl-certinfo cfssljson

[root@master k8s]# cd etcd-cert/

`Define the CA signing configuration`

cat > ca-config.json <<EOF

{

"signing":{

"default":{

"expiry":"87600h"

},

"profiles":{

"www":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

`Create the CA certificate signing request`

cat > ca-csr.json <<EOF

{

"CN":"etcd CA",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"Nanjing",

"ST":"Nanjing"

}

]

}

EOF

`Generate the CA certificate (produces ca-key.pem and ca.pem)`

[root@master etcd-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2020/01/15 11:26:22 [INFO] generating a new CA key and certificate from CSR

2020/01/15 11:26:22 [INFO] generate received request

2020/01/15 11:26:22 [INFO] received CSR

2020/01/15 11:26:22 [INFO] generating key: rsa-2048

2020/01/15 11:26:23 [INFO] encoded CSR

2020/01/15 11:26:23 [INFO] signed certificate with serial number 58994014244974115135502281772101176509863440005

`Specify the three etcd nodes so their communication can be verified`

cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"192.168.1.11",

"192.168.1.12",

"192.168.1.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing"

}

]

}

EOF
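The server certificate is only valid for the addresses listed in hosts, so a member IP missing from that list typically surfaces later as a TLS handshake failure. The following is a minimal sketch of a pre-signing check; the function name missing_hosts is hypothetical, and it assumes each address appears as a double-quoted string in the JSON file, as in server-csr.json above.

```shell
#!/bin/sh
# missing_hosts FILE IP ... -> prints every IP that does NOT appear as a
# double-quoted string in FILE; prints nothing when all are present.
# Assumption: FILE is a cfssl CSR JSON file laid out like server-csr.json.
missing_hosts() {
  file=$1
  shift
  for ip in "$@"; do
    # -F matches the text literally, so the dots are not treated as wildcards
    grep -qF "\"$ip\"" "$file" || printf '%s\n' "$ip"
  done
}
```

For example, `missing_hosts server-csr.json 192.168.1.11 192.168.1.12 192.168.1.13` should print nothing before the certificate is generated.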

`Generate the etcd server certificate (produces server-key.pem and server.pem)`

[root@master etcd-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

2020/01/15 11:28:07 [INFO] generate received request

2020/01/15 11:28:07 [INFO] received CSR

2020/01/15 11:28:07 [INFO] generating key: rsa-2048

2020/01/15 11:28:07 [INFO] encoded CSR

2020/01/15 11:28:07 [INFO] signed certificate with serial number 153451631889598523484764759860297996765909979890

2020/01/15 11:28:07 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

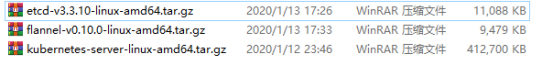

specifically, section 10.2.3 ("Information Requirements").Upload the following three compressed packages to the / root/k8s Directory:

[root@master k8s]# ls

cfssl.sh etcd.sh flannel-v0.10.0-linux-amd64.tar.gz

etcd-cert etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

[root@master k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

[root@master k8s]# ls etcd-v3.3.10-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

[root@master k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

[root@master k8s]# mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/

`Copy the certificates`

[root@master k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

`Start etcd; the command appears to hang while it waits for the other nodes to join`

[root@master k8s]# bash etcd.sh etcd01 192.168.1.11 etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

2. Open another terminal on master01

[root@master ~]# ps -ef | grep etcd

root 3479 1780 0 11:48 pts/0 00:00:00 bash etcd.sh etcd01 192.168.1.11 etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380

root 3530 3479 0 11:48 pts/0 00:00:00 systemctl restart etcd

root 3540 1 1 11:48 ? 00:00:00 /opt/etcd/bin/etcd --name=etcd01 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=https://192.168.1.11:2380 --listen-client-urls=https://192.168.1.11:2379,http://127.0.0.1:2379 --advertise-client-urls=https://192.168.1.11:2379 --initial-advertise-peer-urls=https://192.168.1.11:2380 --initial-cluster=etcd01=https://192.168.1.11:2380,etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380 --initial-cluster-token=etcd-cluster --initial-cluster-state=new --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem --trusted-ca-file=/opt/etcd/ssl/ca.pem --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

root 3623 3562 0 11:49 pts/1 00:00:00 grep --color=auto etcd
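The --initial-cluster value in the process list is a comma-separated list of name=peer-URL pairs, one per member, and it has to be identical on all three machines. A minimal sketch of how such a string could be assembled follows; the function name initial_cluster is hypothetical (it is not part of etcd.sh), and it assumes every member uses https on peer port 2380, as in this walkthrough.

```shell
#!/bin/sh
# initial_cluster NAME=IP ... -> prints "name=https://ip:2380" pairs joined
# by commas, in the form etcd expects for --initial-cluster.
# Assumption: https scheme and peer port 2380 for every member.
initial_cluster() {
  out=""
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first '='
    ip=${pair#*=}      # text after the first '='
    # ${out:+$out,} adds the separating comma only after the first pair
    out="${out:+$out,}${name}=https://${ip}:2380"
  done
  printf '%s\n' "$out"
}
```

Calling `initial_cluster etcd01=192.168.1.11 etcd02=192.168.1.12 etcd03=192.168.1.13` gives a string that can be compared against ETCD_INITIAL_CLUSTER on each node to catch a mistyped address.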

`Copy the certificates to the two nodes`

[root@master k8s]# scp -r /opt/etcd/ root@192.168.1.12:/opt/

root@192.168.1.12's password:

etcd 100% 518 426.8KB/s 00:00

etcd 100% 18MB 105.0MB/s 00:00

etcdctl 100% 15MB 108.2MB/s 00:00

ca-key.pem 100% 1679 1.4MB/s 00:00

ca.pem 100% 1265 396.1KB/s 00:00

server-key.pem 100% 1675 1.0MB/s 00:00

server.pem 100% 1338 525.6KB/s 00:00

[root@master k8s]# scp -r /opt/etcd/ root@192.168.1.13:/opt/

root@192.168.1.13's password:

etcd 100% 518 816.5KB/s 00:00

etcd 100% 18MB 87.4MB/s 00:00

etcdctl 100% 15MB 108.6MB/s 00:00

ca-key.pem 100% 1679 1.3MB/s 00:00

ca.pem 100% 1265 411.8KB/s 00:00

server-key.pem 100% 1675 1.4MB/s 00:00

server.pem 100% 1338 639.5KB/s 00:00

//Copy the etcd startup script (systemd unit file) to the two nodes

[root@master k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.1.12:/usr/lib/systemd/system/

root@192.168.1.12's password:

etcd.service 100% 923 283.4KB/s 00:00

[root@master k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.1.13:/usr/lib/systemd/system/

root@192.168.1.13's password:

etcd.service 100% 923 347.7KB/s 00:00

3. On the two nodes, modify the etcd configuration file and start the etcd service

node1

[root@node1 ~]# systemctl stop firewalld.service

[root@node1 ~]# setenforce 0

[root@node1 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.12:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.12:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.11:2380,etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@node1 ~]# systemctl start etcd

[root@node1 ~]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Wed 2020-01-15 17:53:24 CST; 5s ago

#The status is active (running)

node2

[root@node2 ~]# systemctl stop firewalld.service

[root@node2 ~]# setenforce 0

[root@node2 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.13:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.13:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.11:2380,etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@node2 ~]# systemctl start etcd

[root@node2 ~]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Wed 2020-01-15 17:53:24 CST; 5s ago

#The status is active (running)

4. Verify cluster information on master01

[root@master k8s]# cd etcd-cert/

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379" cluster-health

member 9104d301e3b6da41 is healthy: got healthy result from https://192.168.1.11:2379

member 92947d71c72a884e is healthy: got healthy result from https://192.168.1.12:2379

member b2a6d67e1bc8054b is healthy: got healthy result from https://192.168.1.13:2379

cluster is healthy
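When this check is repeated in a script, it can help to count the healthy members instead of reading the output by eye. The sketch below assumes the per-member line format shown in the cluster-health output above; the function name count_healthy is hypothetical.

```shell
#!/bin/sh
# count_healthy: reads `etcdctl cluster-health` output on stdin and prints
# the number of members that reported a healthy result.
# Assumption: member lines look like the etcd v3.3 output shown above.
count_healthy() {
  # The summary line "cluster is healthy" does not match this pattern,
  # so only per-member lines are counted.
  grep -c 'is healthy: got healthy result'
}
```

Piping the etcdctl command above into `count_healthy` should print 3 for this cluster; any smaller number means a member is down or unreachable.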

2, Deploy Docker on the two nodes

`Install the dependency packages`

[root@node1 ~]# yum install yum-utils device-mapper-persistent-data lvm2 -y

`Add the Alibaba Cloud docker-ce repository`

[root@node1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

`Install docker-ce`

[root@node1 ~]# yum install -y docker-ce

`Start Docker and enable it at boot`

[root@node1 ~]# systemctl start docker.service

[root@node1 ~]# systemctl enable docker.service

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

`Check that the Docker processes are running`

[root@node1 ~]# ps aux | grep docker

root 5551 0.1 3.6 565460 68652 ? Ssl 09:13 0:00 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

root 5759 0.0 0.0 112676 984 pts/1 R+ 09:16 0:00 grep --color=auto docker

`Configure a registry mirror (image accelerator)`

[root@node1 ~]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://w1ogxqvl.mirror.aliyuncs.com"]

}

EOF

#Network optimization: enable IP forwarding

[root@node1 ~]# echo 'net.ipv4.ip_forward=1' >> /etc/sysctl.conf

[root@node1 ~]# sysctl -p

[root@node1 ~]# service network restart

Restarting network (via systemctl): [ OK ]

[root@node1 ~]# systemctl restart docker

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl restart docker

3, Install the flannel component

1. On the master, write the allocated subnet range into etcd for flannel to use

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

//View the information that was written

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379" get /coreos.com/network/config

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

//Copy the flannel package to all worker nodes (flannel only needs to be deployed on the worker nodes)

[root@master etcd-cert]# cd ../

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.1.12:/root

root@192.168.1.12's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 55.6MB/s 00:00

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.1.13:/root

root@192.168.1.13's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 69.5MB/s 00:00

2. Configure flannel on each of the two nodes

[root@node1 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

`Create the Kubernetes working directory`

[root@node1 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@node1 ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

[root@node1 ~]# vim flannel.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

`Start the flannel network`

[root@node1 ~]# bash flannel.sh https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

`Configure Docker to use the flannel network`

[root@node1 ~]# vim /usr/lib/systemd/system/docker.service

#The service section is changed as follows

9 [Service]

10 Type=notify

11 # the default is not to use systemd for cgroups because the delegate issues still

12 # exists and systemd currently does not support the cgroup feature set required

13 # for containers run by docker

14 EnvironmentFile=/run/flannel/subnet.env

#Line 14 is new: add it below line 13

15 ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

#On line 15, add $DOCKER_NETWORK_OPTIONS before -H

16 ExecReload=/bin/kill -s HUP $MAINPID

17 TimeoutSec=0

18 RestartSec=2

19 Restart=always

#After editing, press Esc to leave insert mode, then type :wq to save and exit

[root@node1 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.32.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.32.1/24 --ip-masq=false --mtu=1450"

#Here bip specifies the subnet that Docker uses at startup
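After Docker restarts, its bridge address should match the bip value that flannel wrote to subnet.env. A minimal sketch for pulling that value out of the file follows, assuming the DOCKER_OPT_BIP line has the format shown above; the function name flannel_bip is hypothetical.

```shell
#!/bin/sh
# flannel_bip FILE -> prints the address given to --bip in a subnet.env
# file written by mk-docker-opts.sh (for example 172.17.32.1/24).
# Assumption: the file contains a line of the form DOCKER_OPT_BIP="--bip=ADDR".
flannel_bip() {
  # -n suppresses default output; the trailing p prints only the matching line
  sed -n 's/^DOCKER_OPT_BIP="--bip=\(.*\)"$/\1/p' "$1"
}
```

Running `flannel_bip /run/flannel/subnet.env` on a node and comparing the result with the inet address of docker0 in the ifconfig output is one way to confirm that Docker picked up the flannel subnet.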

`Restart the Docker service`

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl restart docker

`View the flannel network`

[root@node1 ~]# ifconfig

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.32.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::344b:13ff:fecb:1e2d prefixlen 64 scopeid 0x20<link>

ether 36:4b:13:cb:1e:2d txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 27 overruns 0 carrier 0 collisions 0

4, Deploy master components

1. On the master, generate the API server certificates. First upload master.zip to the master node

[root@master k8s]# unzip master.zip

Archive: master.zip

inflating: apiserver.sh

inflating: controller-manager.sh

inflating: scheduler.sh

[root@master k8s]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

`Create the directory for the self-signed apiserver certificates`

[root@master k8s]# mkdir k8s-cert

[root@master k8s]# cd k8s-cert/

[root@master k8s-cert]# ls #k8s-cert.sh needs to be uploaded to this directory

k8s-cert.sh

`Create the CA certificate`

[root@master k8s-cert]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

[root@master k8s-cert]# cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Nanjing",

"ST": "Nanjing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

`Sign the certificate (produces ca.pem and ca-key.pem)`

[root@master k8s-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2020/02/05 10:15:09 [INFO] generating a new CA key and certificate from CSR

2020/02/05 10:15:09 [INFO] generate received request

2020/02/05 10:15:09 [INFO] received CSR

2020/02/05 10:15:09 [INFO] generating key: rsa-2048

2020/02/05 10:15:09 [INFO] encoded CSR

2020/02/05 10:15:09 [INFO] signed certificate with serial number 154087341948227448402053985122760482002707860296

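`Create the apiserver certificate`

The hosts list must cover every address used to reach the apiserver: 192.168.1.11 (master1), 192.168.1.14 (master2), 192.168.1.100 (VIP), and 192.168.1.15 and 192.168.1.16 (load balancers). JSON does not allow comments, so the roles are listed here rather than inside the file.

[root@master k8s-cert]# cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.1.11",

"192.168.1.14",

"192.168.1.100",

"192.168.1.15",

"192.168.1.16",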

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

`Sign the certificate (produces server.pem and server-key.pem)`

[root@master k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2020/02/05 11:43:47 [INFO] generate received request

2020/02/05 11:43:47 [INFO] received CSR

2020/02/05 11:43:47 [INFO] generating key: rsa-2048

2020/02/05 11:43:47 [INFO] encoded CSR

2020/02/05 11:43:47 [INFO] signed certificate with serial number 359419453323981371004691797080289162934778938507

2020/02/05 11:43:47 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

`Create the admin certificate`

[root@master k8s-cert]# cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

`Sign the certificate (produces admin.pem and admin-key.pem)`

[root@master k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2020/02/05 11:46:04 [INFO] generate received request

2020/02/05 11:46:04 [INFO] received CSR

2020/02/05 11:46:04 [INFO] generating key: rsa-2048

2020/02/05 11:46:04 [INFO] encoded CSR

2020/02/05 11:46:04 [INFO] signed certificate with serial number 361885975538105795426233467824041437549564573114

2020/02/05 11:46:04 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

`Create the kube-proxy certificate`

[root@master k8s-cert]# cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

`Sign the certificate (produces kube-proxy.pem and kube-proxy-key.pem)`

[root@master k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2020/02/05 11:47:55 [INFO] generate received request

2020/02/05 11:47:55 [INFO] received CSR

2020/02/05 11:47:55 [INFO] generating key: rsa-2048

2020/02/05 11:47:56 [INFO] encoded CSR

2020/02/05 11:47:56 [INFO] signed certificate with serial number 34747850270017663665747172643822215922289240826

2020/02/05 11:47:56 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2. Generate the certificates, then start the apiserver, scheduler, and controller-manager components

[root@master k8s-cert]# bash k8s-cert.sh

2020/02/05 11:50:08 [INFO] generating a new CA key and certificate from CSR

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:08 [INFO] encoded CSR

2020/02/05 11:50:08 [INFO] signed certificate with serial number 473883155883308900863805079252124099771123043047

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:08 [INFO] encoded CSR

2020/02/05 11:50:08 [INFO] signed certificate with serial number 66483817738746309793417718868470334151539533925

2020/02/05 11:50:08 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:08 [INFO] encoded CSR

2020/02/05 11:50:08 [INFO] signed certificate with serial number 245658866069109639278946985587603475325871008240

2020/02/05 11:50:08 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:09 [INFO] encoded CSR

2020/02/05 11:50:09 [INFO] signed certificate with serial number 696729766024974987873474865496562197315198733463

2020/02/05 11:50:09 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@master k8s-cert]# ls *pem

admin-key.pem ca-key.pem kube-proxy-key.pem server-key.pem

admin.pem ca.pem kube-proxy.pem server.pem

[root@master k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/

[root@master k8s-cert]# cd ..

`Extract the Kubernetes archive`

[root@master k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

[root@master k8s]# cd /root/k8s/kubernetes/server/bin

`Copy the key binaries`

[root@master bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

[root@master bin]# cd /root/k8s

`Generate a random token`

[root@master k8s]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

9b3186df3dc799376ad43b6fe0108571

[root@master k8s]# vim /opt/kubernetes/cfg/token.csv

9b3186df3dc799376ad43b6fe0108571,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

#Fields: token, user name, UID, group
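The two manual steps above (generating the random token and typing it into token.csv) can be combined so the value is never copied by hand. This is a minimal sketch; the function names new_token and token_csv_line are hypothetical, and the user name, UID and group are simply the values used in this guide.

```shell
#!/bin/sh
# new_token: prints 32 hex characters (16 random bytes). Same pipeline as
# above, with the trailing newline stripped as well as the spaces.
new_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# token_csv_line TOKEN -> prints one line in the token,user,uid,"group" format.
# Assumption: kubelet-bootstrap / 10001 / system:kubelet-bootstrap, as used here.
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}
```

With these defined, `token_csv_line "$(new_token)" > /opt/kubernetes/cfg/token.csv` writes the file in one step; the same token must later be placed in bootstrap.kubeconfig.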

`With the binaries, token, and certificates in place, start the apiserver`

[root@master k8s]# bash apiserver.sh 192.168.1.11 https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

`Check if the process started successfully`

[root@master k8s]# ps aux | grep kube

`View the configuration file`

[root@master k8s]# cat /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379 \

--bind-address=192.168.1.11 \

--secure-port=6443 \

--advertise-address=192.168.1.11 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

`Check that the HTTPS port is listening`

[root@master k8s]# netstat -ntap | grep 6443

`Start the scheduler service`

[root@master k8s]# ./scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@master k8s]# ps aux | grep ku

postfix 6212 0.0 0.0 91732 1364 ? S 11:29 0:00 pickup -l -t unix -u

root 7034 1.1 1.0 45360 20332 ? Ssl 12:23 0:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

root 7042 0.0 0.0 112676 980 pts/1 R+ 12:23 0:00 grep --color=auto ku

[root@master k8s]# chmod +x controller-manager.sh

`Start the controller-manager`

[root@master k8s]# ./controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

`View the status of the master components`

[root@master k8s]# /opt/kubernetes/bin/kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

5, Deploy node components

1. On master01, copy the kubelet and kube-proxy binaries to the nodes

[root@master k8s]# cd kubernetes/server/bin/

[root@master bin]# scp kubelet kube-proxy root@192.168.1.12:/opt/kubernetes/bin/

root@192.168.1.12's password:

kubelet 100% 168MB 81.1MB/s 00:02

kube-proxy 100% 48MB 77.6MB/s 00:00

[root@master bin]# scp kubelet kube-proxy root@192.168.1.13:/opt/kubernetes/bin/

root@192.168.1.13's password:

kubelet 100% 168MB 86.8MB/s 00:01

kube-proxy 100% 48MB 90.4MB/s 00:00

2. On node1, extract node.zip

[root@node1 ~]# ls

anaconda-ks.cfg flannel-v0.10.0-linux-amd64.tar.gz node.zip Public video document music

flannel.sh initial-setup-ks.cfg README.md Template picture download desktop

[root@node1 ~]# unzip node.zip

Archive: node.zip

inflating: proxy.sh

inflating: kubelet.sh

3. On master01, generate the kubeconfig files

[root@master bin]# cd /root/k8s/

[root@master k8s]# mkdir kubeconfig

[root@master k8s]# cd kubeconfig/

`Upload the kubeconfig.sh script to this directory and rename it`

[root@master kubeconfig]# ls

kubeconfig.sh

[root@master kubeconfig]# mv kubeconfig.sh kubeconfig

[root@master kubeconfig]# vim kubeconfig

#Delete the first 9 lines; they generate the token, which was already done earlier

# Set client authentication parameters

#Replace the token below with the one generated earlier

kubectl config set-credentials kubelet-bootstrap \

--token=9b3186df3dc799376ad43b6fe0108571 \

--kubeconfig=bootstrap.kubeconfig

#After editing, press Esc to leave insert mode, then type :wq to save and exit

----How to get the token----

[root@master kubeconfig]# cat /opt/kubernetes/cfg/token.csv

9b3186df3dc799376ad43b6fe0108571,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

#Use the token 9b3186df3dc799376ad43b6fe0108571 shown here; every installation generates a different one

---------------------

`Set the PATH environment variable (it can be written to /etc/profile)`

[root@master kubeconfig]# vim /etc/profile

#Press G to jump to the last line, then o to open a new line below it

export PATH=$PATH:/opt/kubernetes/bin/

#After editing, press Esc to leave insert mode, then type :wq to save and exit

[root@master kubeconfig]# source /etc/profile

[root@master kubeconfig]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

[root@master kubeconfig]# kubectl get node

No resources found.

#No nodes have been added at this time

[root@master kubeconfig]# bash kubeconfig 192.168.1.11 /root/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".

[root@master kubeconfig]# ls

bootstrap.kubeconfig kubeconfig kube-proxy.kubeconfig

`Copy the configuration files to the two nodes`

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.1.12:/opt/kubernetes/cfg/

root@192.168.1.12's password:

bootstrap.kubeconfig 100% 2168 2.2MB/s 00:00

kube-proxy.kubeconfig 100% 6270 3.5MB/s 00:00

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.1.13:/opt/kubernetes/cfg/

root@192.168.1.13's password:

bootstrap.kubeconfig 100% 2168 3.1MB/s 00:00

kube-proxy.kubeconfig 100% 6270 7.9MB/s 00:00

`Create the bootstrap role binding, which lets kubelet-bootstrap ask the apiserver to sign certificates (key step)`

[root@master kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

3. On node1, start the kubelet service

[root@node1 ~]# bash kubelet.sh 192.168.1.12

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

//Check that the kubelet service started

[root@node1 ~]# ps aux | grep kube

[root@node1 ~]# systemctl status kubelet.service

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-02-05 14:54:45 CST; 21s ago

#running

4. On master01, check and approve node1's certificate request

node1 automatically contacts the apiserver and applies for a certificate; the request can be viewed from master01

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-ZZnDyPkUICga9NeuZF-M8IHTmpekEurXtbHXOyHZbDg 18s kubelet-bootstrap Pending

#The status is Pending: the request is waiting for the cluster to issue a certificate to the node

`Approve the request (the same command is used for node2 in step 8)`

[root@master kubeconfig]# kubectl certificate approve node-csr-ZZnDyPkUICga9NeuZF-M8IHTmpekEurXtbHXOyHZbDg

`Check the certificate status again`

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-ZZnDyPkUICga9NeuZF-M8IHTmpekEurXtbHXOyHZbDg 3m59s kubelet-bootstrap Approved,Issued

#The status is now Approved,Issued: the node has been allowed to join the cluster

`View the cluster nodes; node1 has joined successfully`

[root@master kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.1.12 Ready <none> 6m54s v1.12.3
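With more than a couple of nodes, copying each request name by hand becomes error-prone. A minimal sketch for extracting the names of pending requests follows, assuming the column layout of the kubectl get csr output shown above (CONDITION as the last column); the function name pending_csrs is hypothetical.

```shell
#!/bin/sh
# pending_csrs: reads `kubectl get csr` output on stdin and prints the NAME
# of every request whose last column is exactly "Pending".
# Assumption: a header row comes first and CONDITION is the last column.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

A loop such as `kubectl get csr | pending_csrs | while read -r name; do kubectl certificate approve "$name"; done` would then approve each one. Review the list first, since this approves every pending request, including any from a machine you did not intend to add.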

5. On node1, start the kube-proxy service

[root@node1 ~]# bash proxy.sh 192.168.1.12

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@node1 ~]# systemctl status kube-proxy.service

● kube-proxy.service - Kubernetes Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2020-02-06 11:11:56 CST; 20s ago

#running

6. Copy the /opt/kubernetes directory from node1 to node2, together with the kubelet and kube-proxy service files

[root@node1 ~]# scp -r /opt/kubernetes/ root@192.168.1.13:/opt/

[root@node1 ~]# scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.1.13:/usr/lib/systemd/system/

root@192.168.1.13's password:

kubelet.service 100% 264 291.3KB/s 00:00

kube-proxy.service 100% 231 407.8KB/s 00:00

7. Operate on node2: delete the copied certificates first, so that node2 applies for its own certificate

[root@node2 ~]# cd /opt/kubernetes/ssl/

[root@node2 ssl]# rm -rf *

`Modify the three configuration files: kubelet, kubelet.config and kube-proxy`

[root@node2 ssl]# cd ../cfg/

[root@node2 cfg]# vim kubelet

4 --hostname-override=192.168.1.13 \ #Line 4, change the host name to the IP address of node2

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

[root@node2 cfg]# vim kubelet.config

4 address: 192.168.1.13 #Line 4, change the address to the IP address of node2

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

[root@node2 cfg]# vim kube-proxy

4 --hostname-override=192.168.1.13 #Line 4, change to the IP address of node2

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Start the services`

[root@node2 cfg]# systemctl start kubelet.service

[root@node2 cfg]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node2 cfg]# systemctl start kube-proxy.service

[root@node2 cfg]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

//Go back to master01 and view the request from node2

[root@master k8s]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-QtKJLeSj130rGIccigH6-MKH7klhymwDxQ4rh4w8WJA 99s kubelet-bootstrap Pending

#A new request to join the cluster has appeared

[root@master k8s]# kubectl certificate approve node-csr-QtKJLeSj130rGIccigH6-MKH7klhymwDxQ4rh4w8WJA

certificatesigningrequest.certificates.k8s.io/node-csr-QtKJLeSj130rGIccigH6-MKH7klhymwDxQ4rh4w8WJA approved
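The three vim edits on node2 all replace one IP address with another, so they can also be done with sed. This is only a sketch under assumptions: `retarget_ip` is a hypothetical helper, GNU sed is assumed (as shipped with CentOS 7), and the copied files are assumed to still contain node1's address (192.168.1.12, the value passed to kubelet.sh earlier).

```shell
#!/usr/bin/env bash
# Sketch: swap one IP address for another in a set of config files.
# retarget_ip is a hypothetical helper name; it relies on GNU sed (-i, -E).

# retarget_ip OLD NEW FILE...
retarget_ip() {
  local old="$1" new="$2" pattern
  shift 2
  # Escape the dots so they only match literal dots.
  pattern=$(printf '%s' "$old" | sed 's/\./\\./g')
  # Require a non-digit (or end of line) after the address, so that
  # 192.168.1.12 does not also rewrite the start of 192.168.1.120.
  sed -i -E "s/${pattern}([^0-9]|\$)/${new}\1/g" "$@"
}

# Usage on node2, from /opt/kubernetes/cfg/:
#   retarget_ip 192.168.1.12 192.168.1.13 kubelet kubelet.config kube-proxy
```

Checking the result with `grep -n 192.168.1 kubelet kubelet.config kube-proxy` before starting the services is a cheap safeguard.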

8. View the nodes in the cluster on master01

[root@master k8s]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.1.12 Ready <none> 28s v1.12.3

192.168.1.13 Ready <none> 26m v1.12.3

#Both nodes have now joined the cluster

6, Deploy the second master

1. Operate on the master

Operate on master1 and copy the kubernetes directory to master2

[root@master1 k8s]# scp -r /opt/kubernetes/ root@192.168.1.14:/opt

On master1, copy the three service unit files kube-apiserver.service, kube-controller-manager.service and kube-scheduler.service to master2

[root@master1 k8s]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.1.14:/usr/lib/systemd/system/

2. Operate on master2 and modify the IP addresses in the kube-apiserver configuration file

[root@master2 ~]# cd /opt/kubernetes/cfg/

[root@master2 cfg]# ls

kube-apiserver kube-controller-manager kube-scheduler token.csv

[root@master2 cfg]# vim kube-apiserver

5 --bind-address=192.168.1.14 \

7 --advertise-address=192.168.1.14 \

#Change the IP addresses in lines 5 and 7 to the address of master2

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

3. Copy the existing etcd certificate on master01 to master2

[root@master1 k8s]# scp -r /opt/etcd/ root@192.168.1.14:/opt/

root@192.168.1.14's password:

etcd 100% 516 535.5KB/s 00:00

etcd 100% 18MB 90.6MB/s 00:00

etcdctl 100% 15MB 80.5MB/s 00:00

ca-key.pem 100% 1675 1.4MB/s 00:00

ca.pem 100% 1265 411.6KB/s 00:00

server-key.pem 100% 1679 2.0MB/s 00:00

server.pem 100% 1338 429.6KB/s 00:00

4. Start the three component services on master02

[root@master2 cfg]# systemctl start kube-apiserver.service

[root@master2 cfg]# systemctl enable kube-apiserver.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@master2 cfg]# systemctl status kube-apiserver.service

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-02-07 09:16:57 CST; 56min ago

[root@master2 cfg]# systemctl start kube-controller-manager.service

[root@master2 cfg]# systemctl enable kube-controller-manager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@master2 cfg]# systemctl status kube-controller-manager.service

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-02-07 09:17:02 CST; 57min ago

[root@master2 cfg]# systemctl start kube-scheduler.service

[root@master2 cfg]# systemctl enable kube-scheduler.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@master2 cfg]# systemctl status kube-scheduler.service

● kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-02-07 09:17:07 CST; 58min ago
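The start and enable commands above repeat the same pattern for each of the three services, so a loop does the same work. A minimal sketch: `start_master_services` and the `SYSTEMCTL` override are hypothetical names added here for illustration, and the order matches the one used above.

```shell
#!/usr/bin/env bash
# Sketch: start and enable the three control-plane services in order.
# SYSTEMCTL is a hypothetical override for dry runs (e.g. SYSTEMCTL=echo).

start_master_services() {
  local ctl="${SYSTEMCTL:-systemctl}" svc
  for svc in kube-apiserver kube-controller-manager kube-scheduler; do
    # Stop at the first failure so a broken apiserver is noticed early.
    "$ctl" start "$svc.service" || return 1
    "$ctl" enable "$svc.service" || return 1
  done
}

# Usage on master02:
#   start_master_services
# Dry run that only prints the systemctl arguments:
#   SYSTEMCTL=echo start_master_services
```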

5. Add the kubernetes bin directory to the PATH environment variable, then view the node status to verify that master02 is running normally

[root@master2 cfg]# vim /etc/profile

#Append at the end of the file

export PATH=$PATH:/opt/kubernetes/bin/

[root@master2 cfg]# source /etc/profile

[root@master2 cfg]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.1.12 Ready <none> 21h v1.12.3

192.168.1.13 Ready <none> 22h v1.12.3

#node1 and node2 are both visible from master02
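If the PATH line is added from a script rather than in vim, it is worth making the step safe to repeat. A small sketch: `append_once` is a hypothetical helper that appends a line only when an identical line is not already in the file.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if it is not already present.
# append_once is a hypothetical helper name.

# append_once LINE FILE
append_once() {
  local line="$1" file="$2"
  # -x: whole-line match, -F: fixed string (so $ and . are not regex characters)
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage on master02 (single quotes keep $PATH literal in the file):
#   append_once 'export PATH=$PATH:/opt/kubernetes/bin/' /etc/profile
#   source /etc/profile
```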

7, Load balancing deployment

1. Upload the keepalived.conf and nginx.sh files to the root directory of lb1 and lb2

`lb1`

[root@lb1 ~]# ls

anaconda-ks.cfg keepalived.conf Public video document music initial-setup-ks.cfg nginx.sh Template picture download desktop

`lb2`

[root@lb2 ~]# ls

anaconda-ks.cfg keepalived.conf Public video document music initial-setup-ks.cfg nginx.sh Template picture download desktop

2. Operate on lb1 (192.168.1.15)

[root@lb1 ~]# systemctl stop firewalld.service

[root@lb1 ~]# setenforce 0

[root@lb1 ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Reload the yum repository`

[root@lb1 ~]# yum list

`Install the nginx service`

[root@lb1 ~]# yum install nginx -y

[root@lb1 ~]# vim /etc/nginx/nginx.conf

#Insert the following under line 12

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.1.11:6443; #IP address of master1

server 192.168.1.14:6443; #IP address of master2

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Test the syntax`

[root@lb1 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful
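`nginx -t` only checks syntax; it does not tell you whether the upstream block points at the right masters. The sketch below prints the backends of a named upstream so they can be compared with the master addresses. `list_upstreams` is a hypothetical helper, and the parsing assumes the layout used above: `upstream NAME {` on one line and one `server` entry per line.

```shell
#!/usr/bin/env bash
# Sketch: list the backends of one upstream block in an nginx config.
# list_upstreams is a hypothetical helper name.

# list_upstreams CONF NAME
list_upstreams() {
  awk -v name="$2" '
    $1 == "upstream" && $2 == name { inside = 1; next }   # entering the block
    inside && $1 == "}"            { inside = 0 }         # leaving the block
    inside && $1 == "server"       { sub(/;.*/, "", $2); print $2 }
  ' "$1"
}

# Usage on lb1 or lb2:
#   list_upstreams /etc/nginx/nginx.conf k8s-apiserver
```

Both load balancers should print the same list; a difference between lb1 and lb2 means one of them would send API traffic to the wrong hosts after a failover.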

[root@lb1 ~]# cd /usr/share/nginx/html/

[root@lb1 html]# ls

50x.html index.html

[root@lb1 html]# vim index.html

14 <h1>Welcome to master nginx!</h1> #Add "master" in line 14 to tell the two load balancers apart

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Start the service`

[root@lb1 html]# systemctl start nginx

//Verify in a browser: enter 192.168.1.15 to open the nginx home page of the master

//Deploy the keepalived service

[root@lb1 html]# yum install keepalived -y

`Modify the configuration file`

[root@lb1 html]# cd ~

[root@lb1 ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite "/etc/keepalived/keepalived.conf"? yes

#Overwrite the configuration file created by the installation with the keepalived.conf uploaded earlier

[root@lb1 ~]# vim /etc/keepalived/keepalived.conf

18 script "/etc/nginx/check_nginx.sh" #Line 18: change the directory to /etc/nginx/; the script itself is written later

23 interface ens33 #eth0 is changed to ens33; the network card name can be queried with the ifconfig command

24 virtual_router_id 51 #VRRP router ID: unique per VRRP instance, identical on lb1 and lb2

25 priority 100 #Priority; the standby server is set to 90

31 virtual_ipaddress {

32 192.168.1.100/24 #VIP address changed to 192.168.1.100

#Delete everything below line 38

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Write the script`

[root@lb1 ~]# vim /etc/nginx/check_nginx.sh

#!/bin/bash

count=$(ps -ef | grep nginx | egrep -cv "grep|$$") #Count nginx processes, excluding the grep command and this script's own PID

if [ "$count" -eq 0 ]; then

systemctl stop keepalived

fi

#If the count is 0 (nginx is not running), stop the keepalived service so that the VIP is released

#After writing, press Esc to leave insert mode, then enter :wq to save and exit

[root@lb1 ~]# chmod +x /etc/nginx/check_nginx.sh

[root@lb1 ~]# ls /etc/nginx/check_nginx.sh

/etc/nginx/check_nginx.sh #The script is now executable (shown in green)
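The counting pipeline in check_nginx.sh is easier to trust once it has been tried on captured `ps -ef` output. The path /etc/nginx/check_nginx.sh itself contains "nginx", which is why the script's own PID has to be filtered out along with grep. The sketch below wraps the same filter in a hypothetical function, `count_nginx`, that reads process lines from stdin so it can be exercised without touching the running services.

```shell
#!/usr/bin/env bash
# Sketch: the same counting logic as check_nginx.sh, reading from stdin.
# count_nginx is a hypothetical helper name.

# count_nginx SELF_PID
# Counts lines that mention nginx, skipping grep processes and any line
# that contains SELF_PID (the check script itself).
count_nginx() {
  # grep -c prints 0 and exits non-zero when nothing matches; "|| true"
  # keeps the function's exit status at 0 in that case.
  grep nginx | grep -Ecv "grep|$1" || true
}

# Usage:
#   ps -ef | count_nginx $$
```

Note that the PID is matched as plain text anywhere in the line, so a short PID such as 12 would also hide a process with PID 1234. That limitation is inherited from the original one-liner.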

[root@lb1 ~]# systemctl start keepalived

[root@lb1 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:24:63:be brd ff:ff:ff:ff:ff:ff

inet 192.168.1.15/24 brd 192.168.1.255 scope global dynamic ens33

valid_lft 1370sec preferred_lft 1370sec

inet `192.168.1.100/24` scope global secondary ens33 #The floating (virtual) IP address is currently on lb1

valid_lft forever preferred_lft forever

inet6 fe80::1cb1:b734:7f72:576f/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::578f:4368:6a2c:80d7/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::6a0c:e6a0:7978:3543/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3. Operate on lb2 (192.168.1.16)

[root@lb2 ~]# systemctl stop firewalld.service

[root@lb2 ~]# setenforce 0

[root@lb2 ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Reload the yum repository`

[root@lb2 ~]# yum list

`Install the nginx service`

[root@lb2 ~]# yum install nginx -y

[root@lb2 ~]# vim /etc/nginx/nginx.conf

#Insert the following under line 12

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.1.11:6443; #IP address of master1

server 192.168.1.14:6443; #IP address of master2

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Test the syntax`

[root@lb2 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@lb2 ~]# vim /usr/share/nginx/html/index.html

14 <h1>Welcome to backup nginx!</h1> #Add "backup" in line 14 to tell the two load balancers apart

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Start the service`

[root@lb2 ~]# systemctl start nginx

//Verify in a browser: enter 192.168.1.16 to open the nginx home page of the backup

//Deploy the keepalived service

[root@lb2 ~]# yum install keepalived -y

`Modify the configuration file`

[root@lb2 ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite "/etc/keepalived/keepalived.conf"? yes

#Overwrite the configuration file created by the installation with the keepalived.conf uploaded earlier

[root@lb2 ~]# vim /etc/keepalived/keepalived.conf

18 script "/etc/nginx/check_nginx.sh" #Line 18: change the directory to /etc/nginx/; the script itself is written later

22 state BACKUP #Line 22: the role is changed from MASTER to BACKUP

23 interface ens33 #eth0 is changed to ens33

24 virtual_router_id 51 #VRRP router ID: unique per VRRP instance, identical on lb1 and lb2

25 priority 90 #Priority; the standby server uses 90

31 virtual_ipaddress {

32 192.168.1.100/24 #VIP address changed to 192.168.1.100

#Delete everything below line 38

#After modification, press Esc to leave insert mode, then enter :wq to save and exit

`Write the script`

[root@lb2 ~]# vim /etc/nginx/check_nginx.sh

#!/bin/bash

count=$(ps -ef | grep nginx | egrep -cv "grep|$$") #Count nginx processes, excluding the grep command and this script's own PID

if [ "$count" -eq 0 ]; then

systemctl stop keepalived

fi

#If the count is 0 (nginx is not running), stop the keepalived service so that the VIP is released

#After writing, press Esc to leave insert mode, then enter :wq to save and exit

[root@lb2 ~]# chmod +x /etc/nginx/check_nginx.sh

[root@lb2 ~]# ls /etc/nginx/check_nginx.sh

/etc/nginx/check_nginx.sh #The script is now executable (shown in green)

[root@lb2 ~]# systemctl start keepalived

[root@lb2 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:9d:b7:83 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.16/24 brd 192.168.1.255 scope global dynamic ens33

valid_lft 958sec preferred_lft 958sec

inet6 fe80::578f:4368:6a2c:80d7/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::6a0c:e6a0:7978:3543/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

#192.168.1.100 is not listed here, because the address is currently held by lb1 (master)
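Reading the full `ip a` output on each load balancer to find the VIP is error-prone, so the check can be reduced to a yes/no answer. A minimal sketch: `has_vip` is a hypothetical helper that reads `ip` output on stdin, and the interface name ens33 in the usage line is taken from the output above.

```shell
#!/usr/bin/env bash
# Sketch: report whether a given address is bound on this host.
# has_vip is a hypothetical helper name.

# has_vip ADDRESS
# Reads `ip a` (or `ip -4 addr`) output on stdin; exit status 0 if ADDRESS is present.
has_vip() {
  local pat
  # Escape the dots so they only match literal dots.
  pat=$(printf '%s' "$1" | sed 's/\./\\./g')
  grep -Eq "inet ${pat}/"
}

# Usage on lb1 and lb2:
#   ip -4 addr show ens33 | has_vip 192.168.1.100 && echo "VIP is on this host"
```

To exercise the failover, stop nginx on lb1 and run the usage line on both machines; once check_nginx.sh has stopped keepalived on lb1, the VIP is expected to show up on lb2 instead.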

At this point, the K8s multi-node deployment is complete

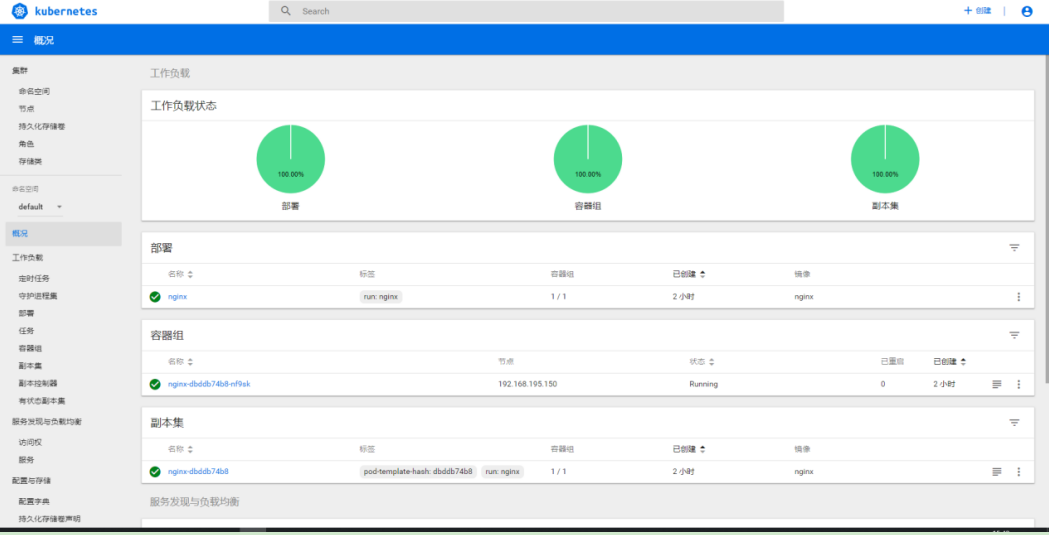

Supplement: building the K8s UI (dashboard)

1. Operate on master01

Create the dashboard working directory

[root@localhost k8s]# mkdir dashboard

//Copy the official files from https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

[root@localhost dashboard]# kubectl create -f dashboard-rbac.yaml

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

[root@localhost dashboard]# kubectl create -f dashboard-secret.yaml

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-key-holder created

[root@localhost dashboard]# kubectl create -f dashboard-configmap.yaml

configmap/kubernetes-dashboard-settings created

[root@localhost dashboard]# kubectl create -f dashboard-controller.yaml

serviceaccount/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

[root@localhost dashboard]# kubectl create -f dashboard-service.yaml

service/kubernetes-dashboard created

//After completion, check that the pod was created in the kube-system namespace

[root@localhost dashboard]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kubernetes-dashboard-65f974f565-m9gm8 0/1 ContainerCreating 0 88s

//See how to access it

[root@localhost dashboard]# kubectl get pods,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/kubernetes-dashboard-65f974f565-m9gm8 1/1 Running 0 2m49s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes-dashboard NodePort 10.0.0.243 <none> 443:30001/TCP 2m24s

//Access it through a node IP: https://192.168.1.12:30001/
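The five `kubectl create` calls differ only in the file name, so they can be driven by a loop that keeps the same order (rbac, secret, configmap, controller, service). A minimal sketch: `create_dashboard` and the `KUBECTL` override are hypothetical names, and the five YAML files are assumed to be in the current directory.

```shell
#!/usr/bin/env bash
# Sketch: create the dashboard manifests in the order used above.
# KUBECTL is a hypothetical override for dry runs (e.g. KUBECTL=echo).

create_dashboard() {
  local kubectl_bin="${KUBECTL:-kubectl}" part
  for part in rbac secret configmap controller service; do
    # Stop at the first failure instead of creating later objects on a broken base.
    "$kubectl_bin" create -f "dashboard-${part}.yaml" || return 1
  done
}

# Usage from the dashboard directory on master01:
#   create_dashboard
# Dry run that only prints the kubectl arguments:
#   KUBECTL=echo create_dashboard
```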

2. Note: Google Chrome cannot open the page at this point; issue a certificate for the dashboard to fix this

[root@localhost dashboard]# vim dashboard-cert.sh

cat > dashboard-csr.json <<EOF

{

"CN": "Dashboard",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

K8S_CA=$1

cfssl gencert -ca=$K8S_CA/ca.pem -ca-key=$K8S_CA/ca-key.pem -config=$K8S_CA/ca-config.json -profile=kubernetes dashboard-csr.json | cfssljson -bare dashboard

kubectl delete secret kubernetes-dashboard-certs -n kube-system

kubectl create secret generic kubernetes-dashboard-certs --from-file=./ -n kube-system

#Add the two certificate lines (--tls-key-file and --tls-cert-file) to dashboard-controller.yaml, then apply it

# args:

# # PLATFORM-SPECIFIC ARGS HERE

# - --auto-generate-certificates

# - --tls-key-file=dashboard-key.pem

# - --tls-cert-file=dashboard.pem

[root@localhost dashboard]# bash dashboard-cert.sh /root/k8s/k8s-cert/

2020/02/05 15:29:08 [INFO] generate received request

2020/02/05 15:29:08 [INFO] received CSR

2020/02/05 15:29:08 [INFO] generating key: rsa-2048

2020/02/05 15:29:09 [INFO] encoded CSR

2020/02/05 15:29:09 [INFO] signed certificate with serial number 150066859036029062260457207091479364937405390263

2020/02/05 15:29:09 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

secret "kubernetes-dashboard-certs" deleted

secret/kubernetes-dashboard-certs created

[root@localhost dashboard]# vim dashboard-controller.yaml

args:

# PLATFORM-SPECIFIC ARGS HERE

- --auto-generate-certificates

- --tls-key-file=dashboard-key.pem

- --tls-cert-file=dashboard.pem

//Redeploy

[root@localhost dashboard]# kubectl apply -f dashboard-controller.yaml

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

serviceaccount/kubernetes-dashboard configured

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

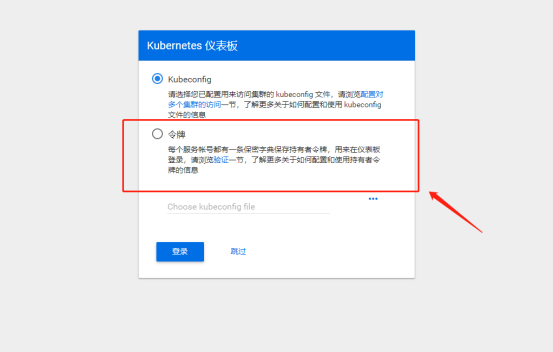

deployment.apps/kubernetes-dashboard configured3. Re access, token is required at this time

4. Generate token

[root@localhost dashboard]# kubectl create -f k8s-admin.yaml

serviceaccount/dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

//View the saved secrets

[root@localhost dashboard]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

dashboard-admin-token-qctfr kubernetes.io/service-account-token 3 65s

default-token-mmvcg kubernetes.io/service-account-token 3 7d15h

kubernetes-dashboard-certs Opaque 11 10m

kubernetes-dashboard-key-holder Opaque 2 63m

kubernetes-dashboard-token-nsc84 kubernetes.io/service-account-token 3 62m

//View token

[root@localhost dashboard]# kubectl describe secret dashboard-admin-token-qctfr -n kube-system

Name: dashboard-admin-token-qctfr

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 73f19313-47ea-11ea-895a-000c297a15fb

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcWN0ZnIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNzNmMTkzMTMtNDdlYS0xMWVhLTg5NWEtMDAwYzI5N2ExNWZiIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.v4YBoyES2etex6yeMPGfl7OT4U9Ogp-84p6cmx3HohiIS7sSTaCqjb3VIvyrVtjSdlT66ZMRzO3MUgj1HsPxgEzOo9q6xXOCBb429m9Qy-VK2JxuwGVD2dIhcMQkm6nf1Da5ZpcYFs8SNT-djAjZNB_tmMY_Pjao4DBnD2t_JXZUkCUNW_O2D0mUFQP2beE_NE2ZSEtEvmesB8vU2cayTm_94xfvtNjfmGrPwtkdH0iy8sH-T0apepJ7wnZNTGuKOsOJf76tU31qF4E5XRXIt-F2Jmv9pEOFuahSBSaEGwwzXlXOVMSaRF9cBFxn-0iXRh0Aq0K21HdPHW1b4-ZQwA

5. Copy the generated token and paste it into the browser to open the UI
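Selecting the long token by hand from the `kubectl describe` output in step 4 is easy to get wrong, so the lookup can be scripted. A minimal sketch: `admin_secret_name` and `extract_token` are hypothetical helpers that read kubectl output on stdin, and the secret name prefix `dashboard-admin-token-` is taken from the listing in step 4.

```shell
#!/usr/bin/env bash
# Sketch: find the dashboard-admin token secret and print only the token value.
# admin_secret_name / extract_token are hypothetical helper names.

# Read `kubectl get secret -n kube-system` output on stdin and print the first
# secret whose name starts with "dashboard-admin-token-".
admin_secret_name() {
  awk '$1 ~ /^dashboard-admin-token-/ { print $1; exit }'
}

# Read `kubectl describe secret ...` output on stdin and print the value
# on the "token:" line.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage on master01:
#   name=$(kubectl get secret -n kube-system | admin_secret_name)
#   kubectl describe secret "$name" -n kube-system | extract_token
```

The printed value grants the permissions bound to dashboard-admin, so treat it like a password rather than pasting it into shared notes.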