Article 3 of the series

This continues the previous article, RxJava Parsing (2): "I Gave You the RxJava Source Code and This Interview, and You Tell Me You Still Can't Get an Offer?"

(leave the GitHub link, and you can find the content you need to obtain such as interview)

https://github.com/xiangjiana/Android-MS

The backpressure problem

Backpressure is a strategy for the asynchronous case. When the upstream (the Observable) emits events much faster than the downstream (the Observer) can process them, backpressure tells the upstream to slow down.

In short, backpressure is a flow-rate control strategy.

Two points need to be emphasized:

- Backpressure presupposes an asynchronous environment, meaning the Observable and the Observer run on different threads.

- Backpressure is not an operator like flatMap that you call directly in code. It is a strategy for controlling the rate at which events flow.

Reactive pull

Recall the classic beginner-friendly RxJava article. In RxJava's observer pattern, the Observable actively pushes data to the Observer, and the Observer passively receives it. Reactive pull reverses this. The Observer actively pulls data from the Observable, and the Observable becomes passive, waiting to be told how much data to send.

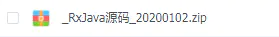

The structural diagram is as follows:

The observer can pull data on demand according to his own actual situation, instead of passively receiving (which is equivalent to telling the upstream observer to slow down), and finally realizes the control of the speed of the upstream observed sending events, and realizes the strategy of back pressure.
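The push/pull contrast can be made concrete with a minimal plain-Java sketch that does not use RxJava. `PullSource` and its `request(long n)` method are hypothetical names chosen for illustration. They only mimic the idea that the producer stays idle until the consumer asks for a specific number of items.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical pull-based source: it emits nothing until asked. */
class PullSource {
    private int next = 0;                 // next value to produce (conceptually infinite)
    private final Consumer<Integer> sink; // the downstream consumer

    PullSource(Consumer<Integer> sink) {
        this.sink = sink;
    }

    /** The consumer pulls: emit exactly n more values, then go idle again. */
    void request(long n) {
        for (long i = 0; i < n; i++) {
            sink.accept(next++);
        }
    }

    public static void main(String[] args) {
        List<Integer> received = new ArrayList<>();
        PullSource source = new PullSource(received::add);
        source.request(3);                // consumer is ready for 3 items
        System.out.println(received);     // [0, 1, 2]
        source.request(2);                // processed them, ready for 2 more
        System.out.println(received);     // [0, 1, 2, 3, 4]
    }
}
```

The consumer decides how many values are produced, so even this conceptually infinite source cannot flood it.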

Source code

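    public final class FlowableOnBackpressureBufferStrategy<T> extends AbstractFlowableWithUpstream<T, T> {

        ...

        // onNext of the inner subscriber class: called for every upstream item
        @Override
        public void onNext(T t) {
            if (done) {
                return;
            }
            boolean callOnOverflow = false;
            boolean callError = false;
            Deque<T> dq = deque;
            synchronized (dq) {
                if (dq.size() == bufferSize) {
                    // buffer is full: apply the configured overflow strategy
                    switch (strategy) {
                        case DROP_LATEST:
                            dq.pollLast();   // drop the newest buffered item
                            dq.offer(t);
                            callOnOverflow = true;
                            break;
                        case DROP_OLDEST:
                            dq.poll();       // drop the oldest buffered item
                            dq.offer(t);
                            callOnOverflow = true;
                            break;
                        default:
                            // signal error
                            callError = true;
                            break;
                    }
                } else {
                    dq.offer(t);             // room left: just buffer it
                }
            }
            if (callOnOverflow) {
                if (onOverflow != null) {
                    try {
                        onOverflow.run();    // user-supplied overflow callback
                    } catch (Throwable ex) {
                        Exceptions.throwIfFatal(ex);
                        s.cancel();
                        onError(ex);
                    }
                }
            } else if (callError) {
                s.cancel();
                onError(new MissingBackpressureException());
            } else {
                drain();                     // deliver buffered items as they are requested
            }
        }

        ...

    }

In this excerpt, a different action is taken for each overflow strategy once the buffer is full. The operator drops the newest buffered item, drops the oldest, or signals MissingBackpressureException. This is only one example: the buffer-based backpressure strategy.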
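The overflow logic in the excerpt above can be reproduced outside RxJava with an `ArrayDeque`. The following is a simplified, single-threaded sketch built on our own assumptions. `BoundedBuffer` and its `Overflow` enum are hypothetical names that mirror RxJava's `BackpressureOverflowStrategy` but are not part of it. The real operator also synchronizes on the deque and drains items to the downstream.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical single-threaded mirror of the onNext overflow logic above. */
class BoundedBuffer<T> {
    enum Overflow { ERROR, DROP_OLDEST, DROP_LATEST }

    private final Deque<T> dq = new ArrayDeque<>();
    private final int bufferSize;
    private final Overflow strategy;

    BoundedBuffer(int bufferSize, Overflow strategy) {
        this.bufferSize = bufferSize;
        this.strategy = strategy;
    }

    /** Returns true if an overflow happened (where onOverflow would run). */
    boolean offer(T t) {
        if (dq.size() < bufferSize) {
            dq.offer(t);              // room left: just enqueue
            return false;
        }
        switch (strategy) {
            case DROP_LATEST:
                dq.pollLast();        // discard the newest buffered item
                dq.offer(t);
                return true;
            case DROP_OLDEST:
                dq.poll();            // discard the oldest buffered item
                dq.offer(t);
                return true;
            default:
                // the real operator cancels upstream and signals MissingBackpressureException
                throw new IllegalStateException("buffer full");
        }
    }

    List<T> contents() {
        return new ArrayList<>(dq);
    }

    public static void main(String[] args) {
        BoundedBuffer<Integer> latest = new BoundedBuffer<>(3, Overflow.DROP_LATEST);
        BoundedBuffer<Integer> oldest = new BoundedBuffer<>(3, Overflow.DROP_OLDEST);
        for (int i = 1; i <= 5; i++) {
            latest.offer(i);
            oldest.offer(i);
        }
        System.out.println(latest.contents()); // [1, 2, 5]
        System.out.println(oldest.contents()); // [3, 4, 5]
    }
}
```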

The root cause

The root cause of the backpressure problem is a mismatch between the upstream emission rate and the downstream processing rate. Solving it at the root means matching the two rates.

The usual approaches are:

- Reduce the quantity: sample the data so that fewer events reach the downstream

- Reduce the speed: lower the rate at which events are sent

- Use Flowable and Subscriber
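The first approach, sampling, is what operators such as RxJava's `sample` do: they keep only the most recent event in each time window. The sketch below imitates that idea in plain Java on a precomputed list of timestamped events. The `Sampler` class, its `Event` type, and the fixed-window arithmetic are our own assumptions for illustration. This is not RxJava's implementation, which works on live time.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical time-window sampler: keeps the last value seen in each window. */
class Sampler {
    static final class Event {
        final long timeMs;   // when the upstream emitted the value
        final int value;

        Event(long timeMs, int value) {
            this.timeMs = timeMs;
            this.value = value;
        }
    }

    /** Events must be in time order; windows are [k*periodMs, (k+1)*periodMs). */
    static List<Integer> sample(List<Event> events, long periodMs) {
        Map<Long, Integer> lastPerWindow = new LinkedHashMap<>();
        for (Event e : events) {
            // later events in the same window overwrite earlier ones
            lastPerWindow.put(e.timeMs / periodMs, e.value);
        }
        return new ArrayList<>(lastPerWindow.values());
    }

    public static void main(String[] args) {
        List<Event> fast = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            fast.add(new Event(i * 30L, i)); // upstream emits every 30 ms
        }
        // downstream only wants one value per 100 ms
        System.out.println(sample(fast, 100)); // [3, 6, 9]
    }
}
```

Seven of the ten events are discarded. That loss is the price this approach pays for a lower rate.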

Using Flowable

    import android.util.Log;

    import io.reactivex.BackpressureStrategy;
    import io.reactivex.Flowable;
    import io.reactivex.FlowableEmitter;
    import io.reactivex.FlowableOnSubscribe;
    import org.reactivestreams.Subscriber;
    import org.reactivestreams.Subscription;

    // TAG is assumed to be a String constant in the enclosing class
    Flowable<Integer> upstream = Flowable.create(new FlowableOnSubscribe<Integer>() {
        @Override
        public void subscribe(FlowableEmitter<Integer> emitter) throws Exception {
            Log.d(TAG, "emit 1");
            emitter.onNext(1);
            Log.d(TAG, "emit 2");
            emitter.onNext(2);
            Log.d(TAG, "emit 3");
            emitter.onNext(3);
            Log.d(TAG, "emit complete");
            emitter.onComplete();
        }
    }, BackpressureStrategy.ERROR); // the extra parameter: which backpressure strategy to use

    Subscriber<Integer> downstream = new Subscriber<Integer>() {
        @Override
        public void onSubscribe(Subscription s) {
            Log.d(TAG, "onSubscribe");
            s.request(Long.MAX_VALUE); // pay attention to this line
        }

        @Override
        public void onNext(Integer integer) {
            Log.d(TAG, "onNext: " + integer);
        }

        @Override
        public void onError(Throwable t) {
            Log.w(TAG, "onError: ", t);
        }

        @Override
        public void onComplete() {
            Log.d(TAG, "onComplete");
        }
    };

    upstream.subscribe(downstream);

Notice how this differs from Observable. First, Flowable.create takes an extra parameter that selects the backpressure strategy, which determines how to handle a mismatch between upstream and downstream rates. Here we use BackpressureStrategy.ERROR. It signals the famous MissingBackpressureException as soon as the rates diverge. The other strategies are explained later.

Another difference is that the downstream onSubscribe method no longer receives a Disposable but a Subscription. What is the difference? Both act as a switch between upstream and downstream. Calling Disposable.dispose() cuts the water pipe, and calling Subscription.cancel() cuts it in the same way. The difference is that Subscription adds a method, void request(long n). What is it for? The code above contains this line:

    s.request(Long.MAX_VALUE);

This is because Flowable adopts a new design, reactive pull, to better handle the mismatch between upstream and downstream rates. It differs from the quantity control and speed control discussed earlier. In plain terms, think of the film scene where Ip Man (Ye Wen) takes on a line of opponents. The upstream is the side that sends opponents, and the downstream is Ye Wen. Calling Subscription.request(1) is Ye Wen saying "I'll fight one!", so the upstream sends exactly one opponent. After defeating him, Ye Wen calls request(10): "I'll fight ten!" The upstream sends ten more and waits to see whether he can handle them. Only after all ten are down does Ye Wen ask for more.

So we can treat request as a measure of the downstream's processing capacity. The downstream tells the upstream how many events it can handle. As long as the upstream sends according to that capacity, it never floods the downstream with events and causes an OOM. This also removes the drawbacks of the two methods covered before: filtering loses events, and slowing down costs performance. Reactive pull avoids event loss and matches the speeds at the same time.
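You can try the request-one, process-one rhythm described above without RxJava. The JDK (Java 9+) ships the same Reactive Streams contract as `java.util.concurrent.Flow`. This is a swapped-in JDK analog, not RxJava code. `SubmissionPublisher` plays the upstream. The hypothetical `OneAtATimeSubscriber` calls `request(1)` once in `onSubscribe` and once after each item, so it never receives more than it asked for.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

/** Hypothetical subscriber that asks for exactly one item at a time. */
class OneAtATimeSubscriber implements Flow.Subscriber<Integer> {
    private final List<Integer> received = Collections.synchronizedList(new ArrayList<>());
    private final CountDownLatch done = new CountDownLatch(1);
    private Flow.Subscription subscription;

    @Override
    public void onSubscribe(Flow.Subscription s) {
        subscription = s;
        s.request(1);                 // start with a demand of one
    }

    @Override
    public void onNext(Integer item) {
        received.add(item);           // process the item...
        subscription.request(1);      // ...then ask for the next one
    }

    @Override
    public void onError(Throwable t) {
        done.countDown();
    }

    @Override
    public void onComplete() {
        done.countDown();
    }

    /** Publishes 1..count and returns what the subscriber received, in order. */
    static List<Integer> collect(int count) {
        OneAtATimeSubscriber sub = new OneAtATimeSubscriber();
        try (SubmissionPublisher<Integer> pub = new SubmissionPublisher<>()) {
            pub.subscribe(sub);
            for (int i = 1; i <= count; i++) {
                pub.submit(i);        // blocks while this subscriber's buffer is full
            }
        }                             // close() sends onComplete after buffered items
        try {
            sub.done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(sub.received);
    }

    public static void main(String[] args) {
        System.out.println(collect(5)); // [1, 2, 3, 4, 5]
    }
}
```

`submit` itself blocks once the subscriber's buffer (`Flow.defaultBufferSize()`, 256 items) is full. That blocking is the JDK publisher's way of slowing the producer down. RxJava's `Flowable.create` emitters do not block. They apply the chosen BackpressureStrategy instead.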

Synchronous case

    Observable.create(new ObservableOnSubscribe<Integer>() {
        @Override
        public void subscribe(ObservableEmitter<Integer> emitter) throws Exception {
            for (int i = 0; ; i++) { // emit events in an infinite loop
                emitter.onNext(i);
            }
        }
    }).subscribe(new Consumer<Integer>() {
        @Override
        public void accept(Integer integer) throws Exception {
            Thread.sleep(2000); // simulate slow processing: 2 s per event
            Log.d(TAG, "" + integer);
        }
    });

When upstream and downstream work on the same thread, the subscription is synchronous. Each event sent upstream must wait until the downstream has received and processed it before the next event is sent. Here, the infinite loop therefore emits only one event every two seconds.

The key difference between the synchronous and asynchronous cases is whether there is a buffer between sending and receiving events.
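A synchronous subscription is just a direct method call, which is why it needs no buffer. The producer's loop cannot continue until the consumer's `accept` returns. The plain-Java sketch below does not use RxJava; the `SyncDemo` class and its log format are hypothetical. It records the order of events to make that lock-step visible.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical illustration: emitter and consumer on the same thread. */
class SyncDemo {
    static List<String> run(int count) {
        List<String> log = new ArrayList<>();
        // stands in for the (possibly slow) downstream work
        Consumer<Integer> downstream = item -> log.add("handle " + item);
        for (int i = 0; i < count; i++) {
            log.add("emit " + i);
            downstream.accept(i); // returns only after the downstream has finished
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run(3));
        // [emit 0, handle 0, emit 1, handle 1, emit 2, handle 2]
    }
}
```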

Asynchronous case

Assigning threads with subscribeOn and observeOn makes the subscription asynchronous. In that case, a buffer sits between the two threads. It holds the events sent by the upstream until the downstream takes them.
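In the asynchronous case, that buffer is the hand-off point between the two threads. The sketch below models it in plain Java with a bounded `ArrayBlockingQueue`. `AsyncDemo` is a hypothetical name. A capacity of 128 matches RxJava 2's default `Flowable.bufferSize()`. Using a blocking `put` is our own simplification, not RxJava's behavior: RxJava does not block the emitter and applies a backpressure strategy instead.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Hypothetical producer/consumer pair separated by a bounded buffer. */
class AsyncDemo {
    private static final int END = -1; // completion sentinel (real values are >= 0)

    static List<Integer> run(int count, int capacity) {
        BlockingQueue<Integer> buffer = new ArrayBlockingQueue<>(capacity);
        List<Integer> received = new ArrayList<>();

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < count; i++) {
                    buffer.put(i);   // waits whenever the buffer is full
                }
                buffer.put(END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int v = buffer.take(); v != END; v = buffer.take()) {
                    received.add(v); // slower downstream work would go here
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();         // join() makes the consumer's writes visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return received;
    }

    public static void main(String[] args) {
        System.out.println(run(1000, 128).size()); // 1000
    }
}
```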

    public enum BackpressureStrategy {
        /**
         * OnNext events are written without any buffering or dropping.
         * Downstream has to deal with any overflow.
         * <p>Useful when one applies one of the custom-parameter onBackpressureXXX operators.
         */
        MISSING,
        /**
         * Signals a MissingBackpressureException in case the downstream can't keep up.
         */
        ERROR,
        /**
         * Buffers <em>all</em> onNext values until the downstream consumes it.
         */
        BUFFER,
        /**
         * Drops the most recent onNext value if the downstream can't keep up.
         */
        DROP,
        /**
         * Keeps only the latest onNext value, overwriting any previous value if the
         * downstream can't keep up.
         */
        LATEST
    }

The backpressure strategies in brief:

- ERROR: signals MissingBackpressureException when the downstream cannot keep up; across an asynchronous boundary, Flowable's default buffer holds 128 events

- BUFFER: buffers all events until the downstream consumes them, with no size limit (so a fast upstream can still cause an OOM)

- DROP: discards the events the downstream cannot keep up with

- LATEST: keeps only the latest event, overwriting earlier ones

- MISSING: no buffering or dropping; the downstream (for example, an onBackpressureXXX operator) must handle any overflow
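The following plain-Java simulation shows what each strategy does with events the downstream has not requested. It is deliberately simplified and single-threaded, a sketch under our own assumptions. `StrategyDemo` and `simulate` are hypothetical names, and the downstream is modeled as a request count. ERROR is recorded as a marker string rather than a real MissingBackpressureException. MISSING is left out because it hands overflow handling to the downstream.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical single-threaded model of the Flowable.create strategies. */
class StrategyDemo {
    enum Strategy { ERROR, BUFFER, DROP, LATEST }

    /**
     * Upstream emits 0..count-1 in one burst while the downstream has requested
     * `initial` items; afterwards the downstream requests `later` more.
     * Returns what the downstream receives, in order.
     */
    static List<String> simulate(Strategy strategy, int count, long initial, long later) {
        List<String> received = new ArrayList<>();
        Deque<Integer> pending = new ArrayDeque<>(); // used by BUFFER and LATEST
        long requested = initial;

        for (int v = 0; v < count; v++) {
            if (requested > 0) {              // downstream asked for it: deliver now
                received.add(String.valueOf(v));
                requested--;
                continue;
            }
            switch (strategy) {
                case ERROR:
                    received.add("MissingBackpressureException");
                    return received;          // the stream terminates with an error
                case BUFFER:
                    pending.offer(v);         // unbounded: may eventually cause OOM
                    break;
                case DROP:
                    break;                    // silently discard v
                case LATEST:
                    pending.clear();          // keep only the newest value
                    pending.offer(v);
                    break;
            }
        }
        // the downstream catches up and requests more
        for (long i = 0; i < later && !pending.isEmpty(); i++) {
            received.add(String.valueOf(pending.poll()));
        }
        return received;
    }

    public static void main(String[] args) {
        for (Strategy s : Strategy.values()) {
            System.out.println(s + " -> " + simulate(s, 6, 2, 2));
        }
        // ERROR -> [0, 1, MissingBackpressureException]
        // BUFFER -> [0, 1, 2, 3]
        // DROP -> [0, 1]
        // LATEST -> [0, 1, 5]
    }
}
```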

How does the upstream know the downstream's processing capacity? Look at the most important upstream component, FlowableEmitter, which is what we send events through. Here is its source code (don't worry, it is short):

    public interface FlowableEmitter<T> extends Emitter<T> {
        void setDisposable(Disposable s);

        void setCancellable(Cancellable c);

        /**
         * The current outstanding request amount.
         * <p>This method is thread-safe.
         * @return the current outstanding request amount
         */
        long requested();

        boolean isCancelled();

        FlowableEmitter<T> serialize();
    }

FlowableEmitter is an interface that extends Emitter, which declares three methods: onNext(), onComplete(), and onError(). FlowableEmitter adds this method:

    long requested();

This figure means that when upstream and downstream are on the same thread, calling request(n) downstream directly changes the upstream's requested value. Multiple calls add up, and each event the upstream sends decrements the value by one. Once the value reaches 0, sending another event is an overflow. With BackpressureStrategy.ERROR, the downstream then receives a MissingBackpressureException.
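The counting rule just described can be sketched in plain Java. `CountingEmitter` is a hypothetical, single-threaded stand-in for the demand tracking behind `FlowableEmitter.requested()`. RxJava's real emitters use an atomic counter. Here, an overflow under ERROR is modeled as a boolean flag instead of a MissingBackpressureException.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical emitter that tracks outstanding demand like requested(). */
class CountingEmitter {
    private long requested;                       // outstanding demand
    final List<Integer> delivered = new ArrayList<>();
    boolean errored;                              // stands in for MissingBackpressureException

    void request(long n) {
        long sum = requested + n;                 // repeated calls accumulate
        requested = sum < 0 ? Long.MAX_VALUE : sum; // capped at Long.MAX_VALUE on overflow
    }

    long requested() {
        return requested;
    }

    void onNext(int value) {
        if (errored) {
            return;
        }
        if (requested == 0) {                     // no demand left: ERROR-style overflow
            errored = true;
            return;
        }
        delivered.add(value);
        requested--;                              // each emission consumes one unit
    }

    public static void main(String[] args) {
        CountingEmitter emitter = new CountingEmitter();
        emitter.request(2);
        emitter.request(3);
        System.out.println(emitter.requested()); // 5

        int next = 0;
        while (emitter.requested() > 0) {        // a well-behaved producer checks demand first
            emitter.onNext(next++);
        }
        System.out.println(emitter.delivered);   // [0, 1, 2, 3, 4]

        emitter.onNext(next);                    // emitting with requested() == 0
        System.out.println(emitter.errored);     // true
    }
}
```

The `while (requested() > 0)` loop shows the cooperative style that `requested()` makes possible. The producer checks demand before emitting, rather than relying on the strategy to deal with overflow afterwards.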

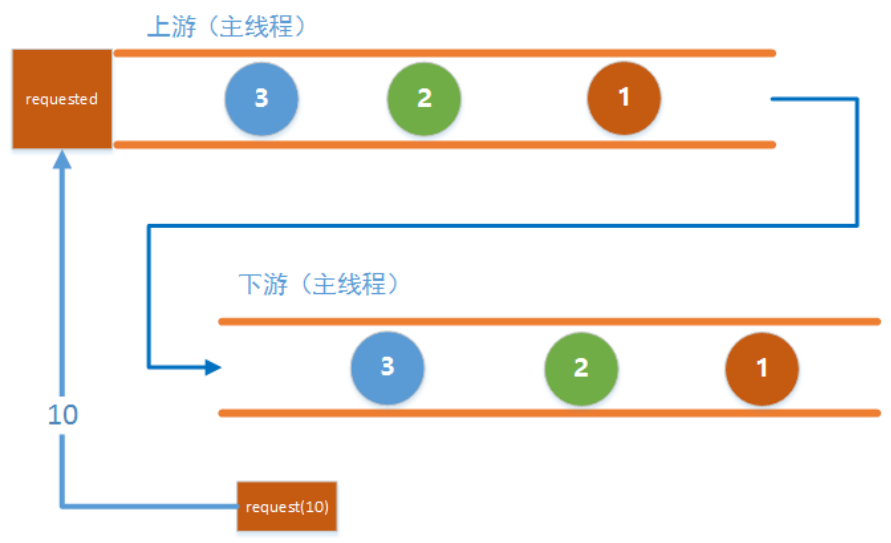

As can be seen, when upstream and downstream work on different threads, each side has its own requested value. Calling request(1000) changes only the requested value on the downstream (main) thread. The upstream's requested value is set by RxJava's own internal request(n) calls, which RxJava triggers automatically when appropriate.
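Those internal request(n) calls can be simulated. In RxJava 2, `observeOn` by default prefetches `Flowable.bufferSize()` items (128) from upstream. After it has delivered three quarters of them (96) downstream, it requests another 96. The sketch below models only that bookkeeping, in plain Java. `PrefetchDemo` is a hypothetical name. The model assumes an infinitely fast upstream and ignores threading and cancellation.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical bookkeeping model of observeOn's prefetch/replenish behavior. */
class PrefetchDemo {
    /** Returns the sequence of request(n) calls the upstream would see. */
    static List<Long> upstreamRequests(long downstreamDemand, int prefetch) {
        int limit = prefetch - (prefetch >> 2);   // 128 -> 96 (three quarters)
        List<Long> calls = new ArrayList<>();
        calls.add((long) prefetch);               // initial request on subscription
        long outstanding = prefetch;              // requested from upstream, not yet emitted
        long queued = 0;                          // sitting in observeOn's buffer
        long delivered = 0;                       // handed to the downstream
        int consumedSinceLastRequest = 0;

        while (delivered < downstreamDemand) {
            queued += outstanding;                // a fast upstream emits all it may
            outstanding = 0;
            while (queued > 0 && delivered < downstreamDemand) {
                queued--;
                delivered++;
                if (++consumedSinceLastRequest == limit) {
                    calls.add((long) limit);      // replenish: upstream.request(limit)
                    outstanding += limit;
                    consumedSinceLastRequest = 0;
                }
            }
        }
        return calls;
    }

    public static void main(String[] args) {
        List<Long> calls = upstreamRequests(1000, 128); // downstream called request(1000)
        System.out.println(calls.get(0) + " then " + (calls.size() - 1) + " x " + calls.get(1));
        // 128 then 10 x 96
    }
}
```

The downstream's request(1000) never reaches the upstream directly. The upstream sees only the prefetch-sized requests.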

RxJava in practice: common use cases

- Network request handling (polling, nested requests, reconnecting on error)

- Debouncing (anti-shake) of user actions

- Fetching data from a multi-level cache

- Merging data sources

- Combined condition checks

- Using RxJava together with Retrofit, RxBinding, and EventBus

RxJava internals

- How Scheduler thread switching works

- How data is sent and received (observer pattern)

- How lift works

- How map works

- How flatMap works

- How merge works

- How concat works
