Experimental environment

RHEL 6.5

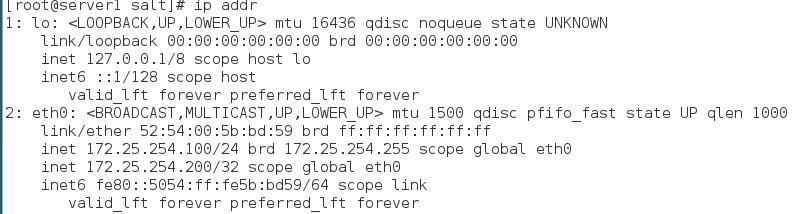

serevr1 salt-master,salt-minion keepalived+haproxy ip:172.25.254.100

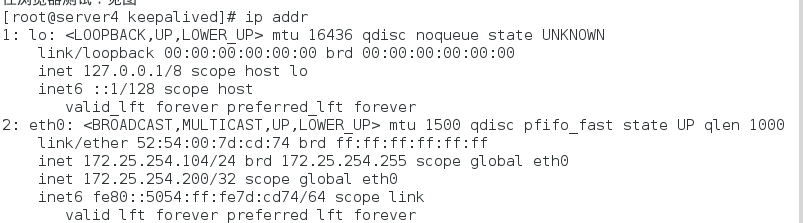

server4 salt-minion keepalived+haproxy ip :172.25.254.104

server2 salt-minion ip:172.25.254.102

server3 salt-minion ip:172.25.254.103

Virtual IP 172.25.254.200

See https://blog.csdn.net/weixin_/article/details/83478459 for the basic configuration of salt

Keepalived

[root@server1 salt]# mkdir keepalived

[root@server1 salt]# mkdir keepalived/files

[root@server1 salt]# cd keepalived/

[root@server1 keepalived]# vim install.sls

[root@server1 keepalived]# cat install.sls

keepalived-install:
  pkg.installed:
    - pkgs:
      - pcre-devel
      - gcc
      - openssl-devel

kp-install:
  file.managed:
    - name: /mnt/keepalived-2.0.6.tar.gz
    - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make &> /dev/null && make install &> /dev/null
    - creates: /usr/local/keepalived

/etc/keepalived:
  file.directory:
    - mode: 755

/sbin/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/sbin/keepalived

/etc/sysconfig/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/etc/sysconfig/keepalived

/etc/init.d/keepalived:
  file.managed:
    - source: salt://keepalived/files/keepalived
    - mode: 755
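The `creates: /usr/local/keepalived` line is what keeps repeated pushes cheap: Salt only runs the `cmd.run` command when that path does not exist yet, so keepalived is compiled once per minion. As a rough plain-shell illustration of that guard (the helper name `run_unless_exists` is made up for this sketch and is not part of Salt):

```shell
#!/bin/bash
# Hypothetical helper mirroring the idea behind cmd.run's "creates:" argument:
# run the given command only if the marker path does not exist yet.
run_unless_exists() {
    local marker="$1"; shift
    if [ -e "$marker" ]; then
        echo "skipped: $marker already exists"
        return 0
    fi
    "$@"
}

# Demo: the first call "builds" (creates the directory), the second is skipped.
prefix="$(mktemp -d)/keepalived"
run_unless_exists "$prefix" mkdir -p "$prefix"
run_unless_exists "$prefix" mkdir -p "$prefix"
```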

[root@server1 keepalived]# ls files/

keepalived-2.0.6.tar.gz

On server4, copy the init script and the default keepalived.conf installed under /usr/local/keepalived back to the master:

[root@server4 etc]# cd /usr/local/keepalived/etc/rc.d/init.d/

[root@server4 init.d]# ls

keepalived

[root@server4 init.d]# scp keepalived root@172.25.254.100:/srv/salt/keepalived/files

[root@server4 init.d]# cd /usr/local/keepalived/etc/

[root@server4 etc]# cd keepalived/

[root@server4 keepalived]# ls

keepalived.conf samples

[root@server4 keepalived]# scp keepalived.conf root@172.25.254.100:/srv/salt/keepalived/files

Push and compile keepalived from server1:

[root@server1 files]# salt server4 state.sls keepalived.install

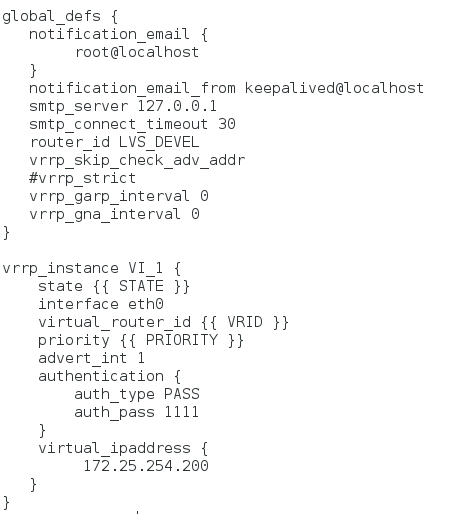

Edit the keepalived configuration file:

[root@server1 keepalived]# vim files/keepalived.conf

[root@server1 srv]# mkdir pillar

[root@server1 srv]# cd pillar/

[root@server1 pillar]# mkdir keepalived

[root@server1 pillar]# cd keepalived/

[root@server1 keepalived]# vim install.sls

[root@server1 keepalived]# cat install.sls

{% if grains['fqdn'] == 'server1' %}

state: MASTER

vrid: 25

priority: 100

{% elif grains['fqdn'] == 'server4' %}

state: BACKUP

vrid: 25

priority: 50

{% endif %}

[root@server1 pillar]# cat top.sls

base:
  '*':
    - web.install
    - keepalived.install

Multi-node push

[root@server1 salt]# vim top.sls

[root@server1 salt]# cat top.sls

base:
  'server1':
    - haproxy.service
    - keepalived.service
  'server4':
    - haproxy.service
    - keepalived.service
  'roles:apache':
    - match: grain
    - apache.service
  'roles:nginx':
    - match: grain
    - nginx.service

[root@server1 salt]# cd /srv/pillar/web/

[root@server1 web]# vim install.sls

{% if grains['fqdn'] == 'server2' %}

webserver: httpd

port: 80

{% elif grains['fqdn'] == 'server3' %}

webserver: nginx

{% endif %}

[root@server1 salt]# salt '*' state.highstate

Test in browser:

High availability test

The highly available deployment works:

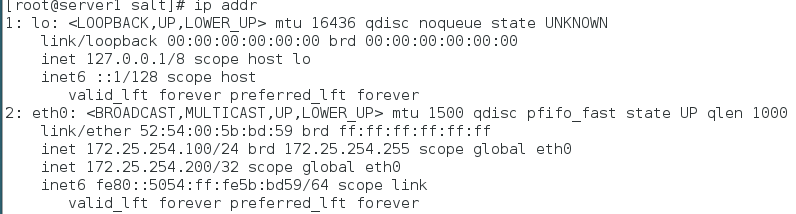

Pushing the highstate again restores the stopped service, and the virtual IP moves back to the original host:

[root@server1 salt]# salt '*' state.highstate

Health check for haproxy

[root@server1 ~]# cd /opt

[root@server1 opt]# vim check_haproxy.sh

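#!/bin/bash
# If haproxy is not running, try to restart it.
/etc/init.d/haproxy status &> /dev/null || /etc/init.d/haproxy restart &> /dev/null

# Still down after the restart attempt: stop keepalived so the virtual IP fails over.
if [ $? -ne 0 ]; then
    /etc/init.d/keepalived stop &> /dev/null
fi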

[root@server1 opt]# chmod +x check_haproxy.sh

[root@server1 opt]# scp check_haproxy.sh server4:/opt/
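The check logic can be tried out without touching the real services by making the init-script paths overridable. This is a sketch of the same behaviour as `check_haproxy.sh` above; `HAPROXY_INIT` and `KEEPALIVED_INIT` are hypothetical variables introduced here, defaulting to the paths the original script uses:

```shell
#!/bin/bash
# Same logic as check_haproxy.sh, with the init scripts overridable for testing.
# HAPROXY_INIT / KEEPALIVED_INIT are hypothetical names, not read by either service.
HAPROXY_INIT="${HAPROXY_INIT:-/etc/init.d/haproxy}"
KEEPALIVED_INIT="${KEEPALIVED_INIT:-/etc/init.d/keepalived}"

check_haproxy() {
    # haproxy is running, or a restart worked: keep keepalived (and the VIP) here.
    if "$HAPROXY_INIT" status &> /dev/null || "$HAPROXY_INIT" restart &> /dev/null; then
        return 0
    fi
    # haproxy cannot be brought back: stop keepalived so the backup takes the VIP.
    "$KEEPALIVED_INIT" stop &> /dev/null
    return 1
}
```

In a real `/opt/check_haproxy.sh` built this way, the file would end with a plain `check_haproxy` call; keepalived only looks at the script's exit status.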

[root@server1 ~]# cd /srv/salt/keepalived/files/

[root@server1 files]# vim keepalived.conf

! Configuration File for keepalived

vrrp_script check_haproxy {
    script "/opt/check_haproxy.sh"
    interval 2
    weight 2
}

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    #vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.200
    }
    track_script {
        check_haproxy
    }
}
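The `{{ STATE }}`, `{{ VRID }}` and `{{ PRIORITY }}` placeholders are filled in by Salt's Jinja renderer when the keepalived state manages this file, using the values from the keepalived pillar (the state file that passes them in is not shown here). To preview what one host will get, a rough sed substitution is enough; `render_vrrp` is a hypothetical helper and only approximates Jinja for these three placeholders:

```shell
#!/bin/bash
# Hypothetical preview helper: replace the three template placeholders
# read from stdin with the values given as arguments.
render_vrrp() {
    local state="$1" vrid="$2" priority="$3"
    sed -e "s/{{ STATE }}/$state/" \
        -e "s/{{ VRID }}/$vrid/" \
        -e "s/{{ PRIORITY }}/$priority/"
}

# Example: the server4 values from the keepalived pillar (BACKUP, 25, 50).
printf 'state {{ STATE }}\nvirtual_router_id {{ VRID }}\npriority {{ PRIORITY }}\n' |
    render_vrrp BACKUP 25 50
```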

[root@server1 files]# salt '*' state.highstate

How the check behaves: keepalived runs check_haproxy.sh every 2 seconds. If haproxy has been stopped and the script manages to restart it, clients keep reaching the same machine and the virtual IP stays there. If haproxy cannot be restarted, the script stops keepalived on that machine, the virtual IP moves to the other node, and clients are served from there.
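Why the script stops keepalived instead of relying on `weight 2` alone: with a positive weight, keepalived adds the weight to a node's priority only while the check succeeds. A simplified model of that rule (the function is hypothetical; it ignores negative weights and the FAULT state keepalived uses when the weight is 0):

```shell
#!/bin/bash
# Simplified model: effective VRRP priority with a positive vrrp_script weight.
# The weight is added only while the check script succeeds.
effective_priority() {
    local base="$1" weight="$2" check_ok="$3"
    if [ "$check_ok" = yes ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

effective_priority 100 2 yes   # server1, check passing
effective_priority 50 2 yes    # server4, check passing
effective_priority 100 2 no    # server1, check failing but keepalived still running
```

With both checks passing, server1 has 102 and server4 has 52; if server1's check fails while keepalived keeps running, server1 still has 100 against 52 and keeps the virtual IP. Stopping keepalived is what actually hands the virtual IP to server4.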

Test:

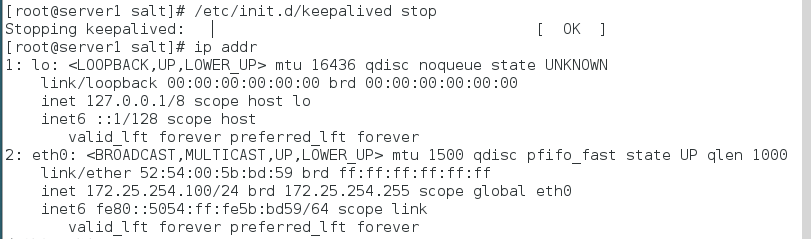

- Case 1: haproxy is stopped but can be restarted

[root@server1 files]# /etc/init.d/haproxy stop

- Case 2: haproxy is stopped and cannot be restarted

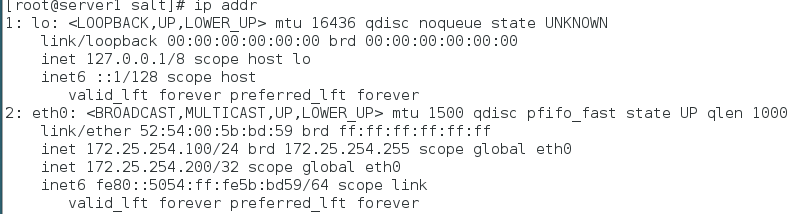

server1:

[root@server1 files]# cd /etc/init.d/

[root@server1 init.d]# /etc/init.d/haproxy stop    # stop haproxy

[root@server1 init.d]# chmod -x haproxy    # remove the execute bit so the check script cannot restart haproxy

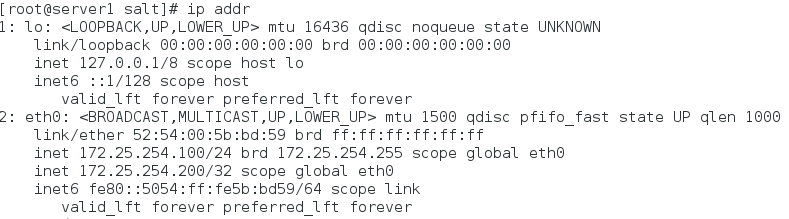

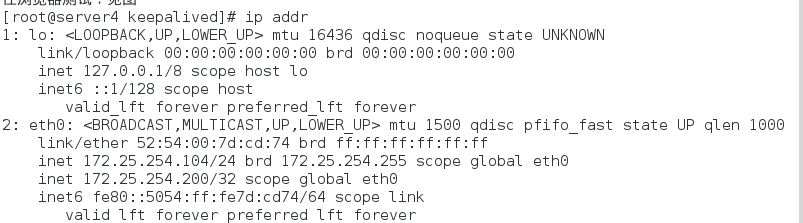

Check that the virtual IP is now on server4:
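The virtual IP shows up as an additional `inet` address on eth0 of whichever node currently holds it (keepalived adds it as a /32 unless a prefix is given). A small check over `ip -o -4 addr show` output; `has_vip` is a hypothetical helper, and it reads from stdin so it can also be tried on saved output:

```shell
#!/bin/bash
# Hypothetical helper: succeed if the IPv4 address given as $1 appears as an
# "inet" address in `ip -o -4 addr show` output read from stdin.
has_vip() {
    # Fixed-string match on "inet ADDR/" so 172.25.254.20 does not match .200
    grep -qF "inet $1/"
}

# Example, on server1 or server4:
#   ip -o -4 addr show eth0 | has_vip 172.25.254.200 && echo "virtual IP is on this node"
```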

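server1:

[root@server1 init.d]# chmod +x haproxy    # restore the execute bit

[root@server1 init.d]# /etc/init.d/haproxy start

Once haproxy is running again and keepalived is back up on server1 (the check script stopped it, so start it again or push the highstate), server1's higher priority moves the virtual IP back to the local machine.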