1. Import module

import urllib.request from bs4 import BeautifulSoup

2. Add header file to prevent link rejection during crawling

def qiuShi(url,page): ################### Simulate the behavior of high fidelity browser ############## # Set multiple header file parameters and simulate it as a high fidelity browser to crawl web pages heads ={ 'Connection':'keep-alive', 'Accept-Language':'zh-CN,zh;q=0.9', 'Accept':'text/html,application/xhtml+xml,application/xml; q=0.9,image/webp,image/apng,*/*;q=0.8', 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36', } headall = [] for key,value in heads.items(): items = (key,value) # Add multiple header file parameters one by one to the header list headall.append(items) # print(headall) # print('test 1 -- ') # Create opener object opener = urllib.request.build_opener() # Add header file to opener object opener.addheaders = headall # Set opener object to global mode urllib.request.install_opener(opener) # Crawl the web page and read the data to data data = opener.open(url).read().decode() # data1 = urllib.request.urlopen(url).read().decode('utf-8') # print(data1) # print('test 2 -- ') ################## end ########################################

3. Create a soap parser object

soup = BeautifulSoup(data,'lxml') x = 0 4.Start using BeautifulSoup4 User name information extracted by parser ############### Get user name ######################## name = [] # Using bs4 parser to extract user name unames = soup.find_all('h2') # print('test 3--',unames) for uname in unames: # print(uname.get_text(), 'page', '-', str (x) + 'user name:', end = '') # Add user names one by one to the name list name.append(uname.get_text()) # print(name) # print('test 4 -- ') #################end############################# 5.Extract published content information

Published content

cont = []

data4 = soup.find_all('div',class_='content')

# print(data4)

#Remember that the second filter should be converted to string form, otherwise an error will be reported

data4 = str(data4)

#Extract content using the bs4 parser

soup3 = BeautifulSoup(data4,'lxml')

contents = soup3.find_all('span')

for content in contents:

#print('content of 'article', x, 'embarrassment:', content.get_text())

#Add content one by one to the cont list

cont.append(content.get_text())

# print(cont)

#print('test 5 -- ')

##############end####################################

**6.Extract funny index** #################Funny index########################## happy = [] # Get funny index # First filter data2 = soup.find_all('span',class_="stats-vote") # Get funny index # Second screening data2 = str(data2) # Convert list to string # print(data2) # print('test 6 -- ') soup1 = BeautifulSoup(data2,'lxml') happynumbers = soup1.find_all('i',class_="number") for happynumber in happynumbers: # print(happynumber.get_text()) # Add funny numbers one by one to the happy list happy.append(happynumber.get_text()) # print(happy) # print('test 7 -- ') ##################end#############################

If you like Python as much as I do and want to be an excellent programmer, you are also running on the road of learning python. Welcome to join the python learning group: Python group number: 491308659 verification code: Nanzhu

Every day, the group will share the latest industry information, share python free courses, and exchange learning together, so that learning becomes a habit!

7. Number of comments extracted

############## Comment number ############################ comm = [] data3 = soup.find_all('a',class_='qiushi_comments') data3 = str(data3) # print(data3) soup2 = BeautifulSoup(data3,'lxml') comments = soup2.find_all('i',class_="number") for comment in comments: # print(comment.get_text()) # Add comments one by one to the comm list comm.append(comment.get_text()) ############end#####################################

8. Use regular expression to extract gender and age

######## Access to gender and age ########################## # Using regular expressions to match gender and age pattern1 = '<div class="articleGender (w*?)Icon">(d*?)</div>' sexages = re.compile(pattern1).findall(data) # print(sexages)

9. Set the pattern setting of all user information output

################## Batch output all personal information of users ################# print() for sexage in sexages: sa = sexage print('*'*17, '=_= The first', page, 'page-The first', str(x+1) + 'Individual users =_= ','*'*17) # Output user name print('[User name]:',name[x],end='') # Export gender and age print('[Gender:',sa[0],' [Age:',sa[1]) # Output content print('[Contents:',cont[x]) # Output funny and comments print('[Funny index]:',happy[x],' [Comments]:',comm[x]) print('*'*25,' 38th dividing line ','*'*25) x += 1 ###################end##########################

10. Set cycle traversal to crawl 13 pages of user information

for i in range(1,14): # Website of embarrassing Encyclopedia url = 'https://www.qiushibaike.com/8hr/page/'+str(i)+'/' qiuShi(url,i)

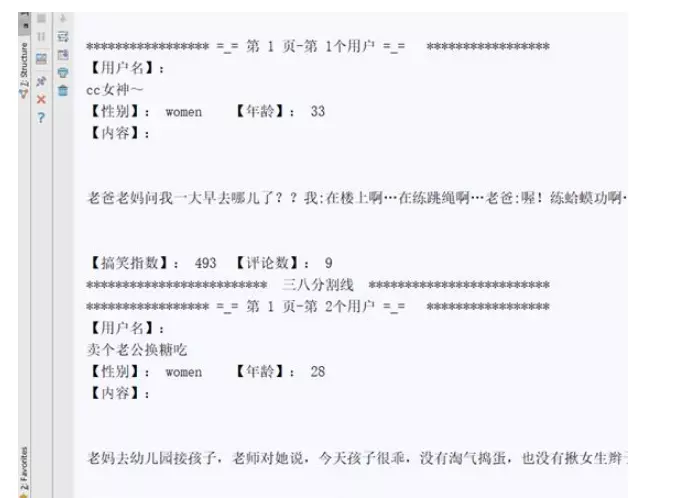

Operation result, partial screenshot: