Preface


Author: broken sesame

Our lab needed a corpus for research, specifically the abstracts of papers on HowNet (CNKI). The latest version of the HowNet site is currently somewhat troublesome to crawl, so I used another HowNet search interface instead.

For example, the following page:

http://search.cnki.net/Search.aspx?q=meat%20products

The search results are almost the same as those on HowNet.
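The search term goes in the q parameter, and the crawler further down also appends rank, cluster, val and a result offset p. Below is a minimal sketch of building such URLs with the standard library instead of string formatting. The parameter names are copied from the crawler's code; the 15-results-per-page offset mirrors its i * 15 and is an assumption about the site, not something documented.

```python
from urllib.parse import urlencode

SEARCH_BASE = "http://search.cnki.net/search.aspx"


def build_search_url(query, page=0, page_size=15):
    """Build a search-result URL for the given query and page index.

    Assumption: the site pages results by offset (p), 15 per page,
    as the crawler's `i * 15` suggests.
    """
    params = {
        "q": query,
        "rank": "relevant",
        "cluster": "zyk",
        "val": "",
        "p": page * page_size,  # result offset, not a page number
    }
    # urlencode turns spaces into '+' and percent-encodes non-ASCII
    # (e.g. Chinese) search terms as UTF-8
    return SEARCH_BASE + "?" + urlencode(params)


print(build_search_url("meat products", page=2))
```

Letting urlencode do the escaping avoids hand-joining terms with "+" and keeps Chinese keywords valid in the URL.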

With that in place, I took a quick look at the structure of a few pages, and the crawling code was easy to write. It is very basic and far from complete; you can add other features yourself.

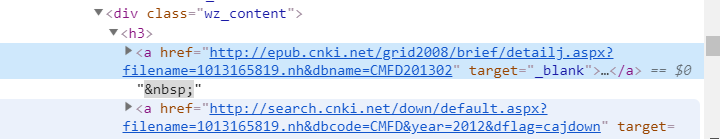

The page structure is very clear.

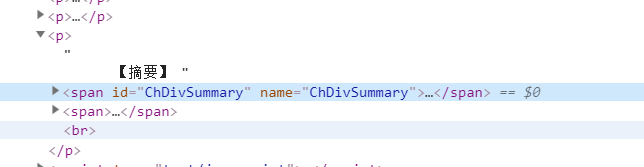

The information on it is also clearly laid out.

I use pymysql to connect to a MySQL database, which is also efficient.
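The INSERT statement in the code writes six values into a table named cnki, starting with NULL for an auto-generated id, but the table definition is not shown. Here is a hypothetical schema that would fit that statement; the column names, types and charset are my assumptions, not the author's.

```python
# Hypothetical schema for the `cnki` table used by the crawler's INSERT.
# Column names and types are assumptions; only the column count and the
# auto-generated first column follow from `VALUES (NULL, %s, %s, %s, %s, %s)`.
CREATE_CNKI_TABLE = """
CREATE TABLE IF NOT EXISTS cnki (
    id INT AUTO_INCREMENT PRIMARY KEY,  -- NULL on insert generates the next id
    word_type VARCHAR(255),             -- search keyword, e.g. 'meat+Carmine'
    title TEXT,
    author TEXT,
    abstract TEXT,
    keywords TEXT
) DEFAULT CHARSET=utf8mb4
"""


def ensure_cnki_table(cursor):
    """Create the table if it does not exist yet; safe to call on every run."""
    cursor.execute(CREATE_CNKI_TABLE)
```

Calling ensure_cnki_table(cursor) right after the cursor is created would make the script runnable against an empty database. utf8mb4 is MySQL's full-Unicode charset (its "utf8" is a 3-byte subset).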

Here is the code:

```python
# -*- coding: utf-8 -*-
import random
import time

import pymysql
import requests
from bs4 import BeautifulSoup

# Fill in your own MySQL connection details
connection = pymysql.connect(host='',
                             user='',
                             password='',
                             db='',
                             port=3306,
                             charset='utf8')  # Note: MySQL spells it utf8, not utf-8

# Get a cursor
cursor = connection.cursor()

# Example article detail URL:
# url = 'http://epub.cnki.net/grid2008/brief/detailj.aspx?filename=RLGY201806014&dbname=CJFDLAST2018'

# These headers must be sent with detail-page requests; otherwise the
# website redirects the request to another page
headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Encoding': 'gzip, deflate, sdch',
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'Connection': 'keep-alive',
    'Host': 'www.cnki.net',
    'Referer': 'http://search.cnki.net/search.aspx?q=%E4%BD%9C%E8%80%85%E5%8D%95%E4%BD%8D%3a%E6%AD%A6%E6%B1%89%E5%A4%A7%E5%AD%A6&rank=relevant&cluster=zyk&val=CDFDTOTAL',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'
}

# A plain User-Agent is used for the search result pages
headers1 = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36'
}


def get_url_list(start_url):
    """Collect article links from the first `depth` search result pages."""
    depth = 20  # number of result pages to crawl
    url_list = []
    for i in range(depth):
        try:
            # p is the result offset; the code assumes 15 results per page
            url = start_url + "&p=" + str(i * 15)
            search = requests.get(url.replace('\n', ''), headers=headers1)
            soup = BeautifulSoup(search.text, 'html.parser')
            for art in soup.find_all('div', class_='wz_tab'):
                link = art.find('a')['href']
                print(link)
                if link not in url_list:  # skip duplicate links
                    url_list.append(link)
            print("Crawled page " + str(i) + " successfully!")
            time.sleep(random.randint(1, 3))  # pause 1-3 s between requests
        except Exception:
            print("Failed to crawl page " + str(i) + "!")
    return url_list


def get_data(url_list, wordType):
    # Visit every collected article link
    for i, url in enumerate(url_list, start=1):
        if not url:
            continue
        try:
            html = requests.get(url.replace('\n', ''), headers=headers)
            soup = BeautifulSoup(html.text, 'html.parser')
        except Exception:
            print("Failed to get web page")
            continue  # don't reuse the previous article's page

        print(url)
        try:
            # Get title
            title = soup.find('title').get_text().split('-')[0]
            # Get authors
            author = ''
            for a in soup.find('div', class_='summary pad10').find('p').find_all('a', class_='KnowledgeNetLink'):
                author += (a.get_text() + ' ')
            # Get abstract
            abstract = soup.find('span', id='ChDivSummary').get_text()
        except Exception:
            print("Partial acquisition failed")
            continue  # skip instead of saving missing or stale fields

        # Get keywords (some papers have none, so leave them empty on failure)
        key = ''
        try:
            for k in soup.find('span', id='ChDivKeyWord').find_all('a', class_='KnowledgeNetLink'):
                key += (k.get_text() + ' ')
        except Exception:
            pass

        print("URL #" + str(i))
        print("[Title]: " + title)
        print("[Author]: " + author)
        print("[Abstract]: " + abstract)
        print("[Keywords]: " + key)

        # Execute the SQL statement (parameters are escaped by pymysql)
        cursor.execute('INSERT INTO cnki VALUES (NULL, %s, %s, %s, %s, %s)',
                       (wordType, title, author, abstract, key))
        # Commit to the database
        connection.commit()
        print()

    print("Crawl finished")


if __name__ == '__main__':
    try:
        # A list (not a set) keeps the crawl order predictable
        for wordType in ["Escherichia coli", "Total bacterial flora", "Carmine", "Sunset yellow"]:
            wordType = "meat+" + wordType
            start_url = "http://search.cnki.net/search.aspx?q=%s&rank=relevant&cluster=zyk&val=" % wordType
            url_list = get_url_list(start_url)
            print("Start crawling")
            get_data(url_list, wordType)
            print("Finished crawling one keyword")
        print("All crawling completed")
    finally:
        connection.close()
```

I simply chose a few keywords here as an experiment. If there are many keywords to crawl, you can write them in a txt file and read them in directly, which is very convenient.
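A minimal sketch of such a loader, assuming a UTF-8 text file with one keyword per line (the file name keywords.txt in the usage note is hypothetical):

```python
from pathlib import Path


def load_keywords(path, encoding="utf-8"):
    """Read one search keyword per line.

    Blank lines are skipped, surrounding whitespace is stripped, and
    duplicates are dropped while keeping the file's order.
    """
    keywords = []
    for line in Path(path).read_text(encoding=encoding).splitlines():
        word = line.strip()
        if word and word not in keywords:
            keywords.append(word)
    return keywords
```

In the main block, the hard-coded list would then become for wordType in load_keywords("keywords.txt"):, with the rest of the loop unchanged.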