plotData.m file

This function separates the training examples labelled 1 (positive) from those labelled 0 (negative), mainly by using the find function, and plots the two groups with different markers.
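To make the role of find concrete, here is a small sketch with made-up labels and features (runs in MATLAB or Octave). find(y == 1) returns the row indices where the label is 1, and those indices then select the matching rows of X. Logical indexing with the mask y == 1 is a common alternative that selects the same rows without calling find. It is shown for comparison and is not what plotData.m uses.

```octave
% Made-up labels and 2-D features for five examples (illustration only)
y = [1; 0; 1; 0; 0];
X = [1 2; 3 4; 5 6; 7 8; 9 10];

pos = find(y == 1);   % row indices of positive examples: [1; 3]
neg = find(y == 0);   % row indices of negative examples: [2; 4; 5]

% Logical indexing: the mask y == 1 selects the same rows directly
posRows = X(y == 1, :);                    % [1 2; 5 6]
sameRows = isequal(X(pos, :), posRows);    % true
```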

    function plotData(X, y)
    %PLOTDATA Plots the data points X and y into a new figure
    %   PLOTDATA(x,y) plots the data points with + for the positive examples
    %   and o for the negative examples. X is assumed to be a Mx2 matrix.

    % Create New Figure
    figure; hold on;

    % ====================== YOUR CODE HERE ======================
    % Instructions: Plot the positive and negative examples on a
    %               2D plot, using the option 'k+' for the positive
    %               examples and 'ko' for the negative examples.
    %

    pos = find(y == 1);   % indices of the examples with y == 1
    neg = find(y == 0);   % indices of the examples with y == 0

    plot(X(pos, 1), X(pos, 2), 'k+', 'LineWidth', 2, 'MarkerSize', 7);
    plot(X(neg, 1), X(neg, 2), 'ko', 'MarkerFaceColor', 'y', 'MarkerSize', 7);
    legend();

    % =========================================================================

    hold off;

    end

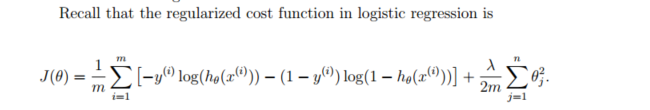

costFunctionReg.m file

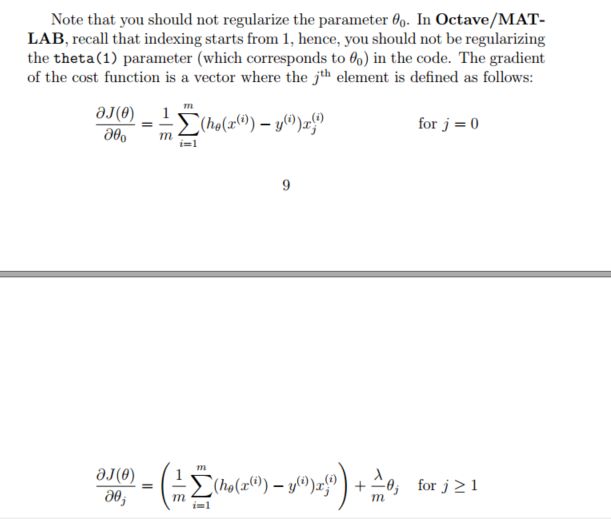

This file computes the cost and the gradient for regularized logistic regression. Compared with plain logistic regression, both the cost and the gradient gain a regularization term weighted by lambda (λ). The cost function and gradient formulas are shown in the figure above.
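Written out, the standard regularized logistic regression formulas are given below, in a form that matches the code further down. Here $h_\theta(x) = 1/(1 + e^{-\theta^T x})$ is the sigmoid hypothesis, $m$ is the number of training examples, $n$ is the number of features not counting the intercept $x_0 = 1$, and $\theta_0$ corresponds to `theta(1)` in MATLAB.

$$
J(\theta) = \frac{1}{m}\sum_{i=1}^{m}\left[-y^{(i)}\log\left(h_\theta(x^{(i)})\right) - \left(1 - y^{(i)}\right)\log\left(1 - h_\theta(x^{(i)})\right)\right] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2
$$

$$
\frac{\partial J(\theta)}{\partial \theta_0} = \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_0^{(i)}
$$

$$
\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)} + \frac{\lambda}{m}\theta_j \qquad (j \ge 1)
$$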

As the formula shows, the regularization sum runs over θ_1 through θ_n and skips θ_0, the intercept term. MATLAB indexes from 1, so θ_0 is stored in theta(1), and the penalty must be computed from theta(2:end) only.

Similarly, the gradient has two cases. For j = 0 (theta(1) in MATLAB), the ordinary logistic regression gradient is used with no regularization term. For j ≥ 1 (theta(2:end)), the term (λ/m)θ_j is added to it.
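The two cases can be checked against each other with a small sketch (MATLAB or Octave). The first gradient uses the split form from costFunctionReg.m. The second uses a common alternative, not the author's method: copy theta, set its first entry to 0, and add (λ/m) times that copy to the unregularized gradient in a single expression. The data, theta, and lambda are made up. sigmoid is defined inline as an anonymous function instead of coming from sigmoid.m.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));   % inline stand-in for sigmoid.m

% Made-up data: first column is the intercept feature x_0 = 1
X = [ones(4, 1), [1 2; -1 0.5; 0.3 -2; 2 1]];
y = [1; 0; 0; 1];
theta = [0.5; -1; 2];
lambda = 2;
m = numel(y);
h = sigmoid(X * theta);

% Split form: theta(1) (j = 0) is not regularized, theta(2:end) (j >= 1) is
gradSplit = zeros(size(theta));
gradSplit(1) = X(:, 1)' * (h - y) / m;
gradSplit(2:end) = X(:, 2:end)' * (h - y) / m + lambda / m * theta(2:end);

% Masked form: zero the intercept entry of a copy, then one expression
thetaReg = theta;
thetaReg(1) = 0;
gradMasked = X' * (h - y) / m + lambda / m * thetaReg;

% The cost penalty also skips theta(1): 2 / 8 * ((-1)^2 + 2^2) = 1.25
penalty = lambda / (2 * m) * sum(theta(2:end) .^ 2);
```

The masked form avoids indexing X twice. The split form mirrors the two cases of the formula more literally. Both give the same result.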

    function [J, grad] = costFunctionReg(theta, X, y, lambda)
    %COSTFUNCTIONREG Compute cost and gradient for logistic regression with regularization
    %   J = COSTFUNCTIONREG(theta, X, y, lambda) computes the cost of using
    %   theta as the parameter for regularized logistic regression and the
    %   gradient of the cost w.r.t. to the parameters.

    % Initialize some useful values
    m = length(y); % number of training examples

    % You need to return the following variables correctly
    J = 0;
    grad = zeros(size(theta));

    % ====================== YOUR CODE HERE ======================
    % Instructions: Compute the cost of a particular choice of theta.
    %               You should set J to the cost.
    %               Compute the partial derivatives and set grad to the partial
    %               derivatives of the cost w.r.t. each parameter in theta

    h = sigmoid(X * theta);   % hypothesis for every example (sigmoid.m from the same exercise)

    % Cost: the penalty skips theta(1), i.e. the intercept theta_0
    J = sum(-y .* log(h) - (1 - y) .* log(1 - h)) / m ...
        + lambda / (2 * m) * sum(theta(2:end, :) .* theta(2:end, :));

    % Gradient for j = 0 (theta(1)): no regularization term
    grad(1, :) = sum((h - y) .* X(:, 1)) / m;
    % Gradient for j >= 1 (theta(2:end)): add (lambda / m) * theta_j
    grad(2:end, :) = X(:, 2:end)' * (h - y) ./ m + lambda / m .* theta(2:end, :);

    % =============================================================

    end
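A quick way to sanity-check an implementation like this is sketched below, assuming theta is a column vector. At theta = 0 every prediction is exactly 0.5, so the cost must equal log(2) ≈ 0.693 whatever the data or lambda. Also, a central finite-difference approximation of the cost should match the analytic gradient. To keep the block self-contained, it re-expresses the cost and gradient as anonymous functions (costReg and gradReg are hypothetical names) instead of calling costFunctionReg.m. The data is made up.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Regularized cost and gradient as anonymous functions (theta must be a column vector)
costReg = @(theta, X, y, lambda) ...
    sum(-y .* log(sigmoid(X * theta)) - (1 - y) .* log(1 - sigmoid(X * theta))) / numel(y) ...
    + lambda / (2 * numel(y)) * sum(theta(2:end) .^ 2);
gradReg = @(theta, X, y, lambda) ...
    X' * (sigmoid(X * theta) - y) / numel(y) + lambda / numel(y) * [0; theta(2:end)];

% Made-up data with an intercept column
X = [ones(5, 1), [0.5 1.2; -1.0 0.3; 2.0 -0.7; 0.1 0.9; -0.4 -1.5]];
y = [1; 0; 1; 0; 1];
lambda = 1;

% Check 1: at theta = 0 the cost is log(2) regardless of the data
J0 = costReg(zeros(3, 1), X, y, lambda);

% Check 2: central differences approximate each partial derivative
theta = [0.1; -0.2; 0.3];
delta = 1e-4;
numgrad = zeros(size(theta));
for k = 1:numel(theta)
    step = zeros(size(theta));
    step(k) = delta;
    numgrad(k) = (costReg(theta + step, X, y, lambda) ...
                  - costReg(theta - step, X, y, lambda)) / (2 * delta);
end
maxDiff = max(abs(numgrad - gradReg(theta, X, y, lambda)));   % should be tiny
```

The same two checks can be pointed at costFunctionReg.m itself by replacing the anonymous functions with calls to it.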