This article is only a personal summary; if you need detailed information, please look elsewhere.

Note: YAML files require strict indentation. By convention, each nesting level is indented by two spaces.
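As a rough illustration of this rule, the Python sketch below flags two kinds of lines:

- lines whose leading whitespace contains a tab, which YAML does not allow for indentation;
- lines whose indentation is not a multiple of two spaces.

It only checks the two-space convention used in this article. It is not a YAML parser, and the function name `check_indentation` is hypothetical.

```python
def check_indentation(text: str, step: int = 2) -> list[str]:
    """Return the indentation problems found in a YAML-like text (sketch only)."""
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        stripped = line.lstrip(" \t")
        if not stripped or stripped.startswith("#"):
            continue  # blank lines and full-line comments are not checked
        indent = line[: len(line) - len(stripped)]
        if "\t" in indent:
            problems.append(f"line {lineno}: tab used for indentation")
        elif len(indent) % step != 0:
            problems.append(f"line {lineno}: indent of {len(indent)} is not a multiple of {step}")
    return problems


print(check_indentation("metadata:\n  name: demo\n"))   # []
print(check_indentation("metadata:\n   name: demo\n"))  # ['line 2: indent of 3 is not a multiple of 2']
```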

1. Create a Deployment resource object using the httpd image

```shell
[root@master ~]# vim lvjianzhao.yaml    # write the YAML file
```

```yaml
kind: Deployment                  # type of resource object to create
apiVersion: extensions/v1beta1    # API version for the Deployment
metadata:
  name: lvjianzhao-deploy         # name of the Deployment
spec:
  replicas: 4                     # number of pod replicas to create
  template:
    metadata:
      labels:                     # labels for the pods
        user: lvjianzhao
    spec:
      containers:
      - name: httpd               # name of the container
        image: httpd              # image the container runs from
```
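To see how the indentation maps to structure, here is the same Deployment written as a Python dictionary. This is a sketch for illustration; the variable name `deployment` is arbitrary.

- Each indentation level becomes one level of nesting.
- The `- name:` dash turns `containers` into a list.

`kubectl apply -f` also accepts JSON, so the printed output could in principle be saved to a `.json` file and applied instead of the YAML.

```python
import json

# Same structure as lvjianzhao.yaml: every indentation level is one level of nesting.
deployment = {
    "kind": "Deployment",
    "apiVersion": "extensions/v1beta1",
    "metadata": {"name": "lvjianzhao-deploy"},
    "spec": {
        "replicas": 4,
        "template": {
            "metadata": {"labels": {"user": "lvjianzhao"}},
            "spec": {
                # The "- name: httpd" item in YAML is one element of this list.
                "containers": [
                    {"name": "httpd", "image": "httpd"},
                ],
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```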

```shell
[root@master ~]# kubectl apply -f lvjianzhao.yaml    # apply the file we just wrote
```

Note: if you do not know the API version of a resource object, you can look it up with `kubectl explain`:

```shell
[root@master ~]# kubectl explain deployment
KIND:     Deployment
VERSION:  extensions/v1beta1    # the API version of the Deployment resource
........................        # some output omitted
```

```shell
[root@master ~]# kubectl get deployment lvjianzhao-deploy
```

This shows whether the YAML file created the number of pods we need. Next, check whether the pods carry the label we defined:

```shell
[root@master ~]# kubectl describe deployment lvjianzhao-deploy    # view the details of this resource object
Name:               lvjianzhao-deploy
Namespace:          default
CreationTimestamp:  Thu, 07 Nov 2019 17:50:44 +0800
Labels:             user=lvjianzhao    # the label of the resource object
```

2. Create a Service (svc) resource object that is associated with the Deployment above and provides the service to the external network. The mapped node port is 32123.

```shell
[root@master ~]# vim httpd-service.yaml    # write the YAML file for the service
```

```yaml
kind: Service
apiVersion: v1
metadata:
  name: httpd-service
spec:
  type: NodePort          # must be set to NodePort here; otherwise the default type is ClusterIP
  selector:
    user: lvjianzhao      # selects the pods carrying the label defined in the Deployment's pod template
  ports:
  - protocol: TCP
    port: 79              # port exposed on the cluster IP
    targetPort: 80        # port inside the pod that traffic is forwarded to
    nodePort: 32123       # port opened on every node (host)
```
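The Service does not point at the Deployment object itself. Instead, it selects every pod whose labels contain all the key/value pairs in `selector`. The sketch below models that equality-based matching. It also checks `nodePort` against 30000-32767, the default `--service-node-port-range` of kube-apiserver; a cluster may be configured with a different range. The helper names are hypothetical.

```python
# Default --service-node-port-range of kube-apiserver (30000-32767 inclusive);
# a cluster administrator can configure a different range.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selection: every selector key must exist with the same value.

    A Service with no selector at all does not pick up pods automatically;
    this sketch does not model that case.
    """
    return all(labels.get(key) == value for key, value in selector.items())


def valid_node_port(port: int, allowed: range = DEFAULT_NODE_PORT_RANGE) -> bool:
    """Check whether a nodePort falls inside the allowed range."""
    return port in allowed


pod_labels = {"user": "lvjianzhao"}        # spec.template.metadata.labels in lvjianzhao.yaml
service_selector = {"user": "lvjianzhao"}  # spec.selector in httpd-service.yaml

print(selector_matches(service_selector, pod_labels))  # True
print(valid_node_port(32123))                          # True
```

Extra labels on a pod do not prevent a match. A pod whose `user` label has a different value is not selected.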

```shell
[root@master ~]# kubectl apply -f httpd-service.yaml    # apply the YAML file
[root@master ~]# kubectl get svc httpd-service          # view the created svc (service)
NAME            TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
httpd-service   NodePort   10.97.13.198   <none>        79:32123/TCP   2m1s
```

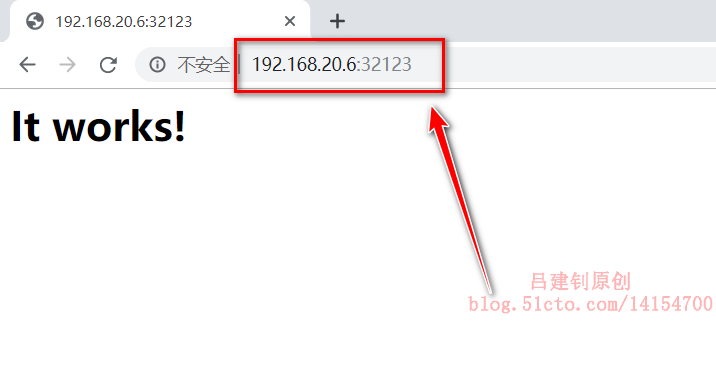

You can see that the specified cluster port 79 is mapped to node port 32123. A client can now access port 32123 on any node in the k8s cluster and reach the service provided by the pods, as follows:

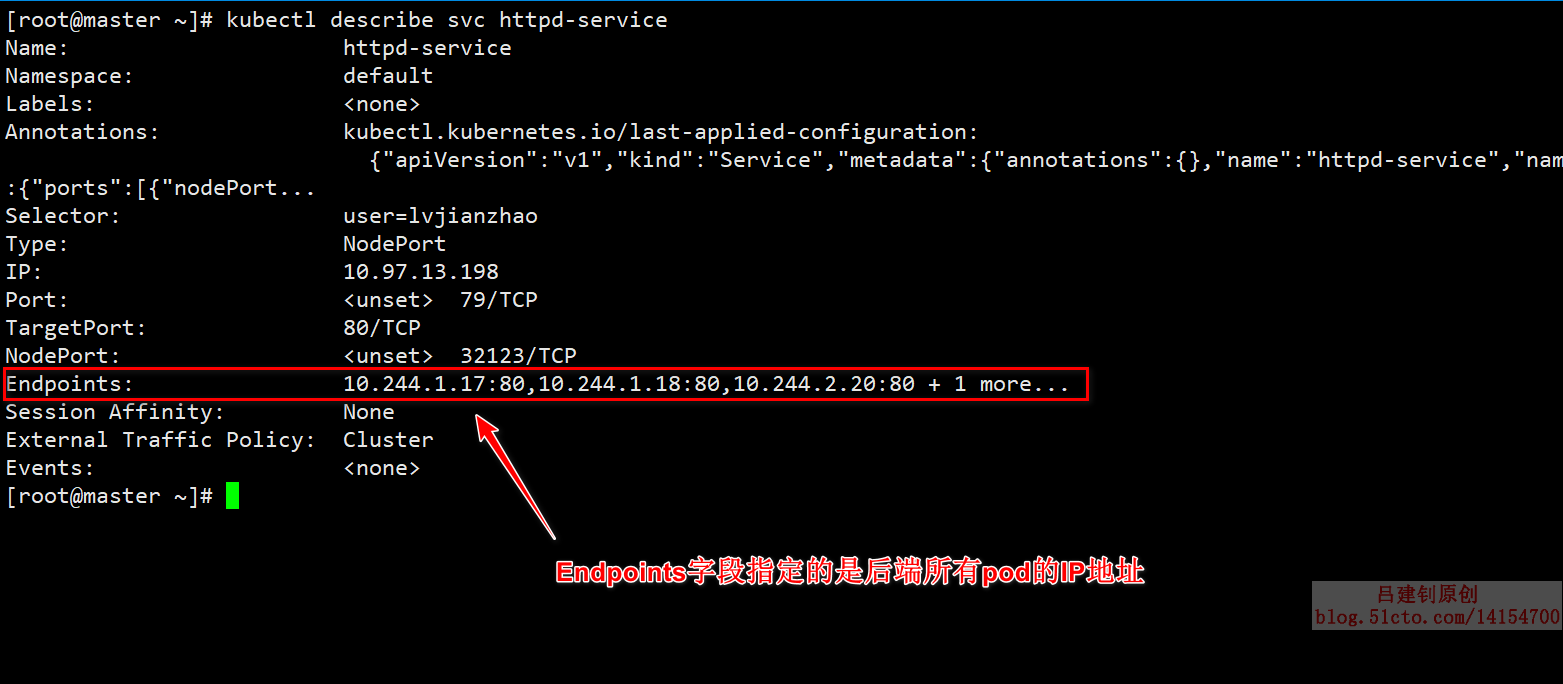

```shell
[root@master ~]# kubectl describe svc httpd-service    # view the details of the service
```

The information returned is as follows (only some of the IPs are displayed; the rest are elided, not missing):

Since the endpoints listed above are the IP addresses of the backend pods, check whether they are correct, as follows:

```shell
[root@master ~]# kubectl get pod -o wide | awk '{print $6}'    # print the IP addresses of the backend pods
IP
10.244.1.18
10.244.2.21
10.244.1.17
10.244.2.20
```

You can confirm that these IPs correspond to the endpoints of the service above. Next, let's look at how the service maps to its endpoints and explain the underlying principle of load balancing.
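Comparing the two lists by eye works for four pods. The following Python sketch does the same check programmatically:

- It locates the `IP` column by its header name instead of relying on a fixed column position.
- It compares the result with the addresses in an `Endpoints:` line.

The sample table and the endpoints line are illustrative: pod and node names are hypothetical, while the IPs are the ones shown above. The sketch also assumes the endpoints line is not truncated.

```python
# Illustrative `kubectl get pod -o wide` output: pod and node names are
# hypothetical, the IPs are the ones printed above.
POD_TABLE = """
NAME    READY   STATUS    RESTARTS   AGE   IP            NODE
pod-a   1/1     Running   0          10m   10.244.1.18   node01
pod-b   1/1     Running   0          10m   10.244.2.21   node02
pod-c   1/1     Running   0          10m   10.244.1.17   node01
pod-d   1/1     Running   0          10m   10.244.2.20   node02
"""

# Hypothetical, untruncated Endpoints line in the style of `kubectl describe svc`.
ENDPOINTS_LINE = "Endpoints: 10.244.1.17:80,10.244.1.18:80,10.244.2.20:80,10.244.2.21:80"


def column(table: str, name: str) -> list[str]:
    """Return one column of whitespace-separated output, located by its header.

    Headers that contain spaces (such as "NOMINATED NODE") would shift the
    columns after them, so this is only reliable for columns before such headers.
    """
    lines = [line for line in table.strip().splitlines() if line.strip()]
    index = lines[0].split().index(name)
    return [line.split()[index] for line in lines[1:]]


def endpoint_ips(line: str) -> set[str]:
    """Extract the IPs from an 'Endpoints: ip:port,ip:port' line."""
    _, _, value = line.partition(":")
    return {item.strip().rsplit(":", 1)[0] for item in value.split(",") if item.strip()}


pod_ips = set(column(POD_TABLE, "IP"))
print(pod_ips == endpoint_ips(ENDPOINTS_LINE))  # True
```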

3. With this in place, clients can access the service provided by our pods, and requests are load-balanced across them. So how is this implemented, and what does it depend on?

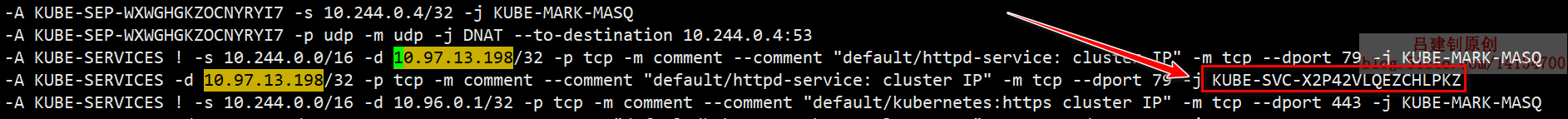

In fact, the underlying principle is not complicated. kube-proxy (in its iptables proxy mode) achieves load balancing through iptables forwarding. A rule first matches packets whose destination IP is the cluster IP provided by the service, then uses the `-j` option to jump to other iptables rules, as follows:

First we need to look up the service's cluster IP:

```shell
[root@master ~]# kubectl get svc httpd-service | awk '{print $3}'
CLUSTER-IP
10.97.13.198
```

```shell
[root@master ~]# iptables-save > a.txt    # dump the iptables rules to a file for easy searching
[root@master ~]# vim a.txt                 # open the rules
```

Search the file for our cluster IP. You can see that when the destination address is the cluster IP, the packet is forwarded to another chain, "KUBE-SVC-X2P42VLQEZCHLPKZ", as follows:

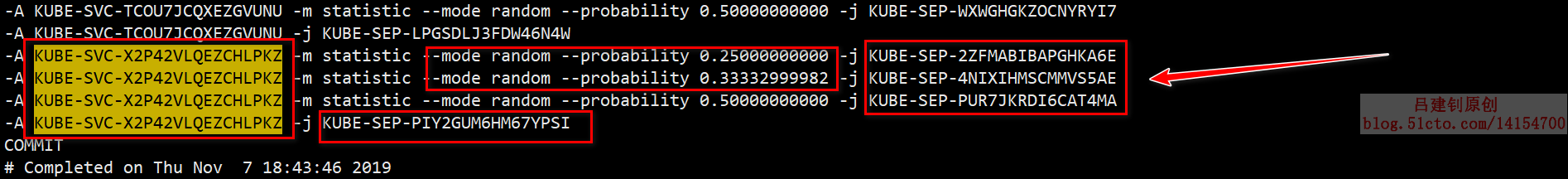
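Instead of searching in vim, the same lookup can be scripted. The sketch below parses lines in `iptables-save` format with `shlex`, so the quoted `--comment` stays one token. It returns the `KUBE-SVC-*` chains that rules for a given cluster IP jump to.

The sample rule is an illustrative line in the style kube-proxy writes; it is not copied from the screenshot. Against a real cluster you would read the lines of `a.txt` instead. The function names are hypothetical.

```python
import shlex

# Illustrative rule in kube-proxy's style; in practice, read the file
# produced by `iptables-save > a.txt`.
SAMPLE_RULES = '''
-A KUBE-SERVICES -d 10.97.13.198/32 -p tcp -m comment --comment "default/httpd-service: cluster IP" -m tcp --dport 79 -j KUBE-SVC-X2P42VLQEZCHLPKZ
'''


def option(tokens: list[str], flag: str):
    """Return the argument that follows `flag`, or None if it is absent."""
    if flag in tokens:
        i = tokens.index(flag)
        if i + 1 < len(tokens):
            return tokens[i + 1]
    return None


def service_chains(rules: str, cluster_ip: str) -> list[str]:
    """Return the KUBE-SVC-* chains that rules matching `cluster_ip` jump to."""
    chains = []
    for line in rules.splitlines():
        if not line.startswith("-A "):
            continue  # skip table headers, chain declarations and blank lines
        tokens = shlex.split(line)
        target = option(tokens, "-j")
        # Other rules for the same IP may jump elsewhere, so keep only service chains.
        if option(tokens, "-d") == f"{cluster_ip}/32" and target and target.startswith("KUBE-SVC-"):
            chains.append(target)
    return chains


print(service_chains(SAMPLE_RULES, "10.97.13.198"))  # ['KUBE-SVC-X2P42VLQEZCHLPKZ']
```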

Now continue by searching for the chain it forwards to, as follows:

In the figure above there are four pods in total. The first rule uses the random algorithm (iptables' `statistic` module in random mode) and matches with a probability of 0.25 (1/4). A request that is not matched reaches the second rule, which matches with a probability of 0.33. Three pods remain after the first one is excluded, and 1/3 ≈ 0.33; that is where this probability comes from. By analogy, the third rule matches with a probability of 0.5 (1/2). The last rule needs no probability at all: any request that reaches it must be handled by the last pod. Overall, each pod still receives a quarter of the requests; for example, the second pod gets (3/4) × (1/3) = 1/4.
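The arithmetic can be checked with a short Python sketch using exact fractions. Rule i (counting from 0) of n carries probability 1/(n - i). Multiplying that by the chance that a request reaches the rule shows that every pod receives 1/n of the traffic. The function names are hypothetical; kube-proxy itself writes these probabilities into the rules as decimal values.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Probability attached to each of the n per-pod rules in a KUBE-SVC chain.

    Rule i (0-based) matches with probability 1/(n - i). The last rule has no
    probability and always matches, which is the same as 1/1.
    """
    return [Fraction(1, n - i) for i in range(n)]


def overall_shares(n: int) -> list[Fraction]:
    """Fraction of all requests that ends up at each pod."""
    shares = []
    remaining = Fraction(1)  # share of requests that reach the current rule
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


print([str(p) for p in rule_probabilities(4)])  # ['1/4', '1/3', '1/2', '1']
print([str(s) for s in overall_shares(4)])      # ['1/4', '1/4', '1/4', '1/4']
```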