ansible, playbook, huaweiyun, ceph

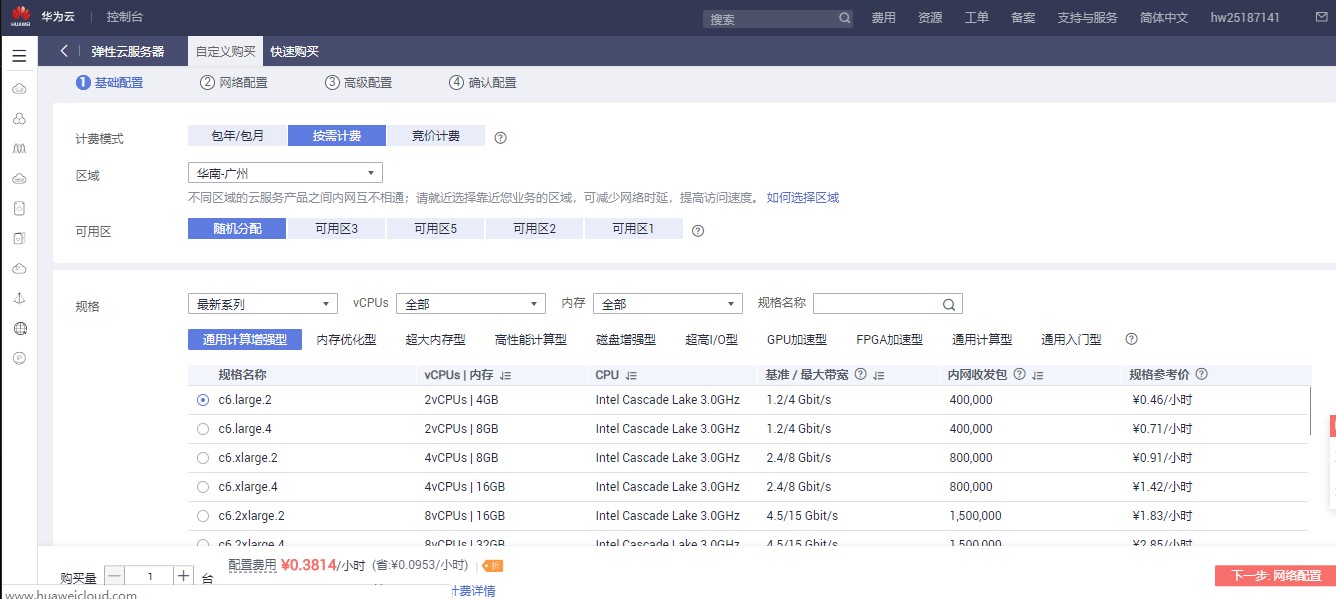

First, purchase the virtual machines needed to build the Ceph cluster on Huawei Cloud:

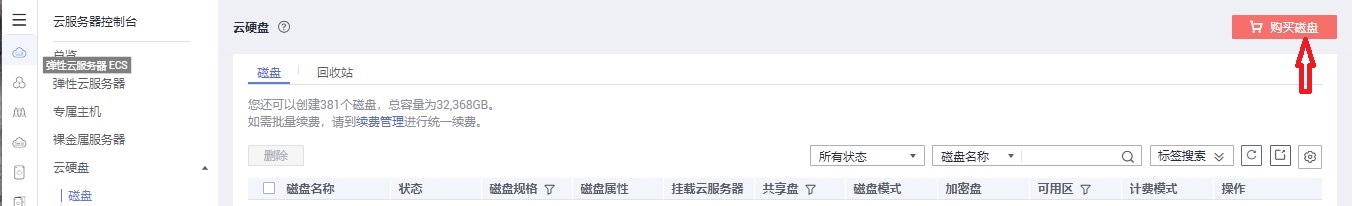

Then purchase the storage disks required by Ceph:

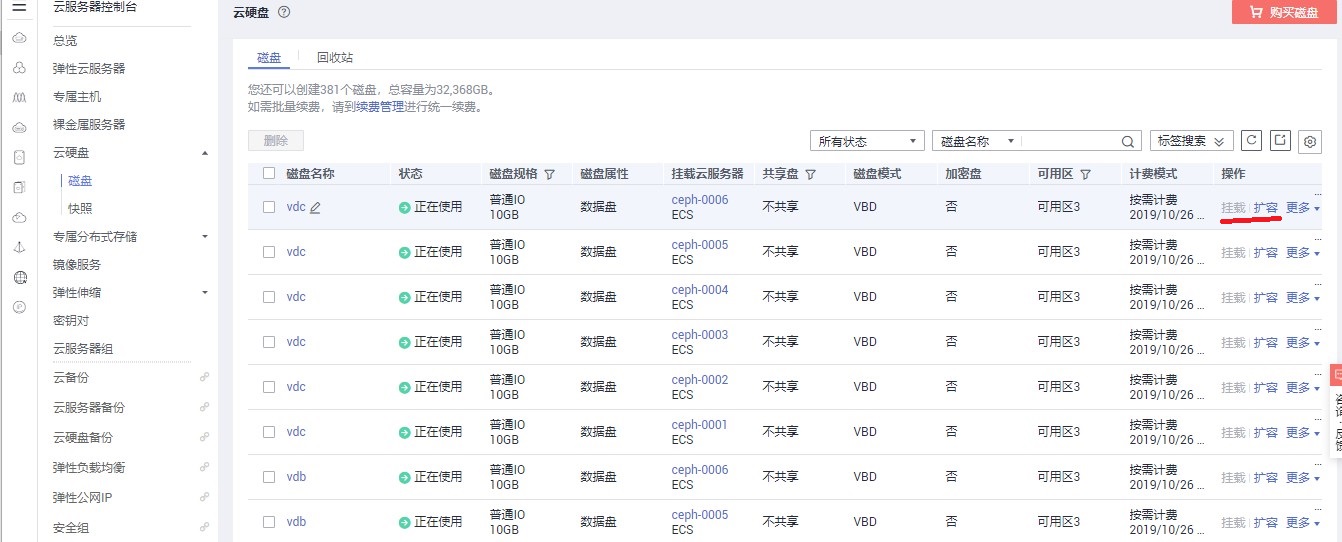

Attach the purchased disks to the virtual machines used to build the Ceph cluster:

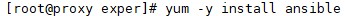

Install Ansible on the jump host (springboard machine):

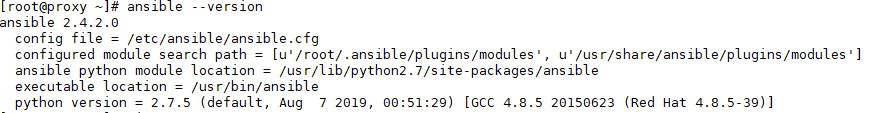

Check the Ansible version to verify that Ansible was installed successfully:

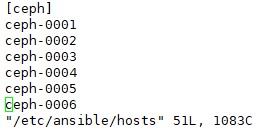

Configure the host groups:

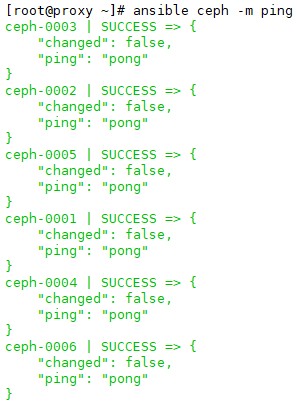
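For readers who cannot see the screenshot, a host group of this kind can be sketched as a YAML inventory. This is only an illustration: the file name `inventory.yml` is hypothetical, the original setup may well use the INI-style `/etc/ansible/hosts` file instead, and the host names are taken from the playbook further down on the assumption that they resolve from the jump host.

```yaml
# inventory.yml (hypothetical file name)
# Assumes ceph-0001 .. ceph-0006 resolve via DNS or /etc/hosts on the jump host.
all:
  children:
    ceph:            # group name used by "hosts: ceph" in the playbook
      hosts:
        ceph-0001:
        ceph-0002:
        ceph-0003:
        ceph-0004:
        ceph-0005:
        ceph-0006:
```

Such a file would be passed to Ansible with the `-i` option.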

Test the result:
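One way to run such a test is a tiny playbook built on the `ping` module, which checks that Ansible can log in to each node and run Python there. This is a sketch, not necessarily the check used in the original; the file name `check.yml` is hypothetical.

```yaml
# check.yml (hypothetical file name)
# Run with: ansible-playbook check.yml
- hosts: ceph
  remote_user: root
  gather_facts: false     # skip fact gathering, only connectivity matters here
  tasks:
    - name: Confirm that every node in the ceph group is reachable
      ping:
```

Every host in the group should report `ok`; an `unreachable` host usually points to an SSH key or name-resolution problem.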

Write the playbook file as follows:

    ---
    # Synchronize the yum repo file to every node
    - hosts: ceph
      remote_user: root
      tasks:
        - copy:
            src: /etc/yum.repos.d/ceph.repo
            dest: /etc/yum.repos.d/ceph.repo
        - shell: yum clean all
    # On ceph-0001: install ceph-deploy and create the working directory
    # in which the configuration file is initialized
    - hosts: ceph-0001
      remote_user: root
      tasks:
        - yum:
            name: ceph-deploy
            state: installed
        - file:
            path: /root/ceph-cluster
            state: directory
            mode: '0755'
    # Install the Ceph packages on all Ceph nodes
    - hosts: ceph
      remote_user: root
      tasks:
        - yum:
            name: ceph-osd,ceph-mds
            state: installed
    # Install ceph-mon on ceph-0001, ceph-0002 and ceph-0003
    - hosts: ceph-0001,ceph-0002,ceph-0003
      remote_user: root
      tasks:
        - yum:
            name: ceph-mon
            state: installed
    # Initialize the mon service
    - hosts: ceph-0001
      tasks:
        - shell: 'chdir=/root/ceph-cluster ceph-deploy new ceph-0001 ceph-0002 ceph-0003'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy mon create-initial'
    # Prepare the partition for the journal disk and make the device
    # permissions permanent with a udev rule
    - hosts: ceph
      remote_user: root
      tasks:
        - shell: parted /dev/vdb mklabel gpt
        - shell: parted /dev/vdb mkpart primary 1 100%
        - shell: chown ceph:ceph /dev/vdb1
        - copy:
            src: /etc/udev/rules.d/70-vdb.rules
            dest: /etc/udev/rules.d/70-vdb.rules
    # Use ceph-deploy to initialize the data disks, create the OSDs,
    # and deploy the Ceph file system
    - hosts: ceph-0001
      remote_user: root
      tasks:
        - shell: 'chdir=/root/ceph-cluster ceph-deploy disk zap ceph-0001:vdc'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy disk zap ceph-0002:vdc'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy disk zap ceph-0003:vdc'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy disk zap ceph-0004:vdc'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy disk zap ceph-0005:vdc'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy disk zap ceph-0006:vdc'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy osd create ceph-0001:vdc:/dev/vdb1'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy osd create ceph-0002:vdc:/dev/vdb1'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy osd create ceph-0003:vdc:/dev/vdb1'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy osd create ceph-0004:vdc:/dev/vdb1'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy osd create ceph-0005:vdc:/dev/vdb1'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy osd create ceph-0006:vdc:/dev/vdb1'
        - shell: 'chdir=/root/ceph-cluster ceph-deploy mds create ceph-0006'
        - shell: 'chdir=/root/ceph-cluster ceph osd pool create cephfs_data 128'
        - shell: 'chdir=/root/ceph-cluster ceph osd pool create cephfs_metadata 128'
        - shell: 'chdir=/root/ceph-cluster ceph fs new myfs1 cephfs_metadata cephfs_data'
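The twelve near-identical `disk zap` and `osd create` tasks in the last play could also be written with a loop. The following is an alternative sketch, not the version used above. It assumes Ansible 2.5 or later (for `loop`), that the `ceph` group contains exactly the six nodes, and the same `vdc` data disk and `/dev/vdb1` journal partition on every node; the task names are illustrative.

```yaml
- hosts: ceph-0001
  remote_user: root
  tasks:
    - name: Wipe the data disk on each node
      command: "ceph-deploy disk zap {{ item }}:vdc"
      args:
        chdir: /root/ceph-cluster      # same working directory as in the playbook
      loop: "{{ groups['ceph'] }}"     # every host in the ceph inventory group

    - name: Create one OSD per node, with the journal on /dev/vdb1
      command: "ceph-deploy osd create {{ item }}:vdc:/dev/vdb1"
      args:
        chdir: /root/ceph-cluster
      loop: "{{ groups['ceph'] }}"
```

Looping over the group keeps the play in step with the inventory, so adding a seventh node would not require editing the tasks.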

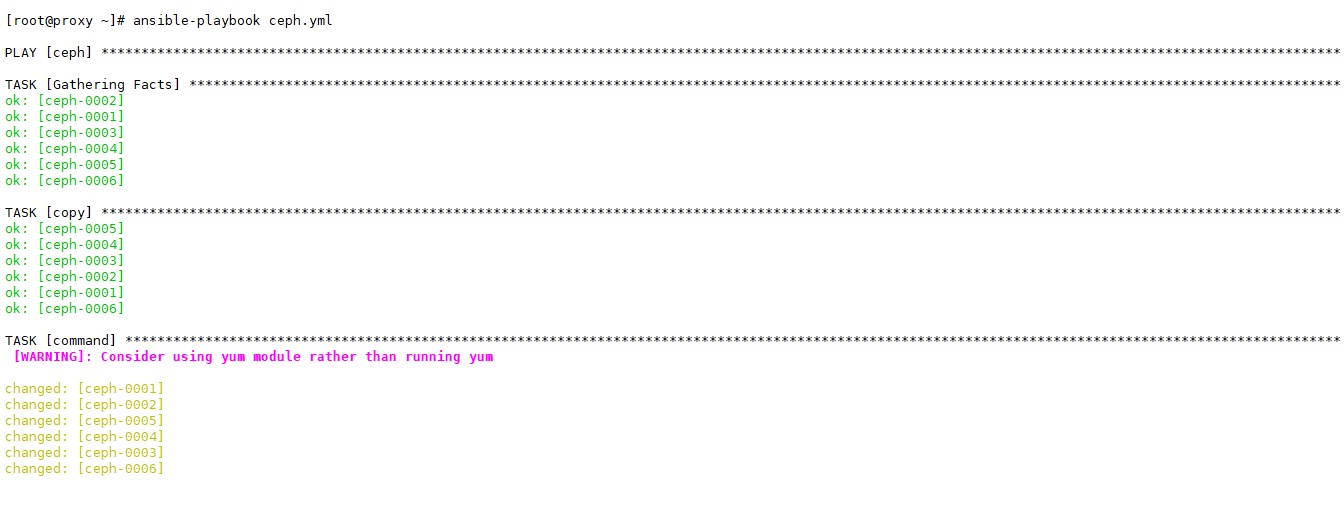

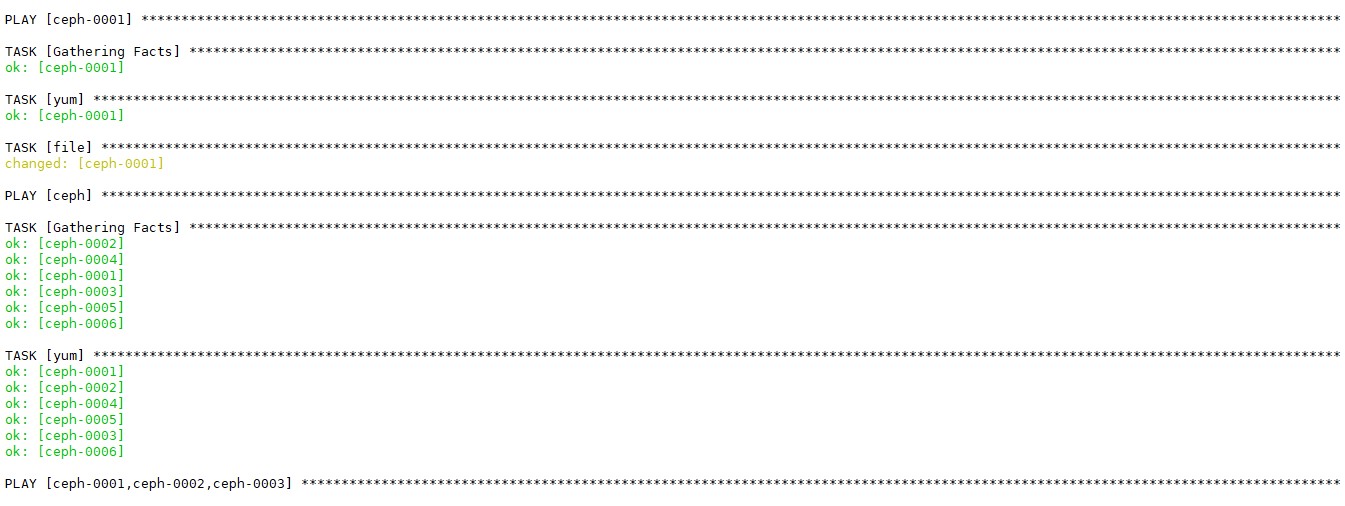

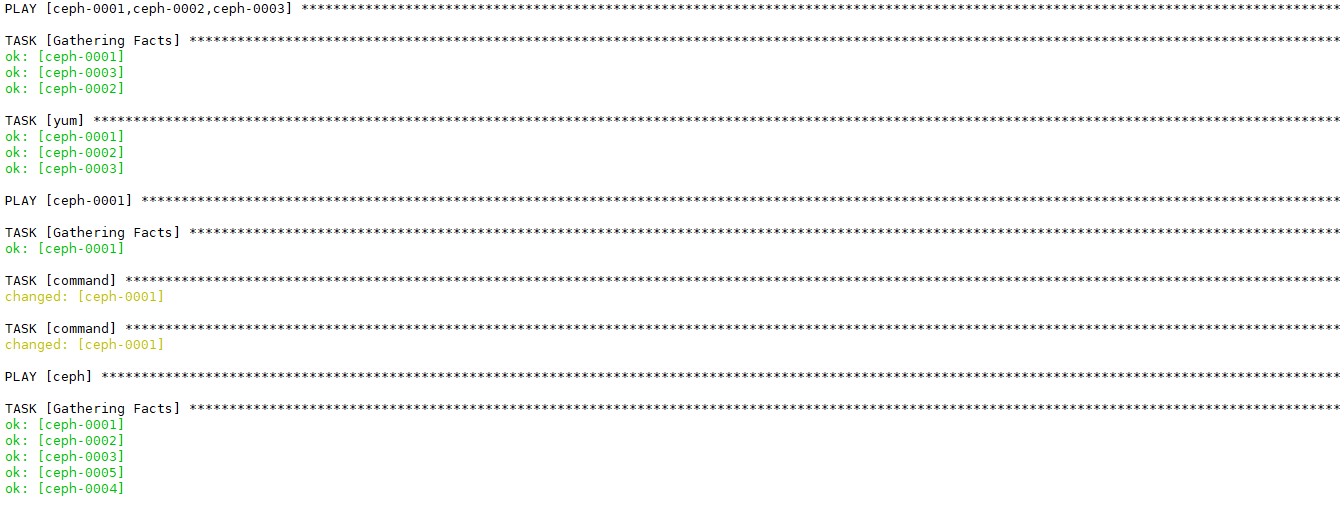

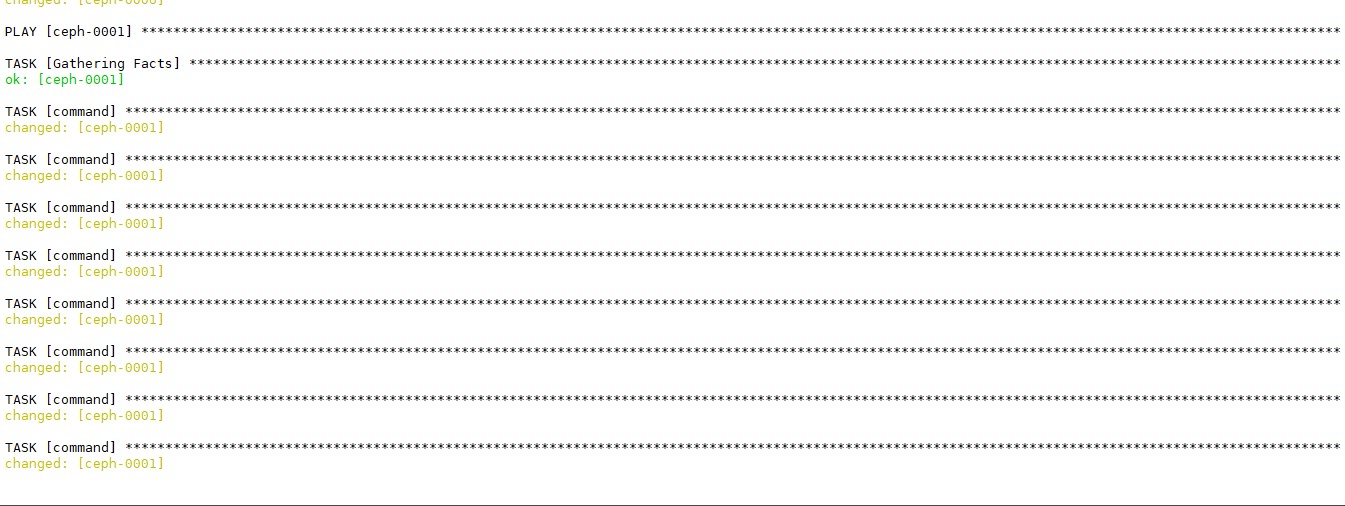

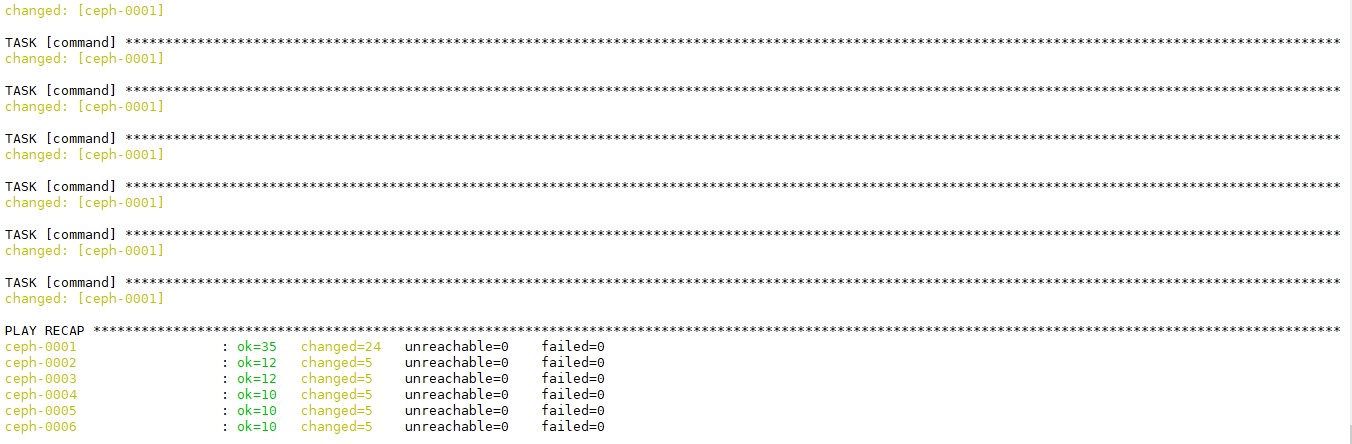

The playbook run proceeds as follows:

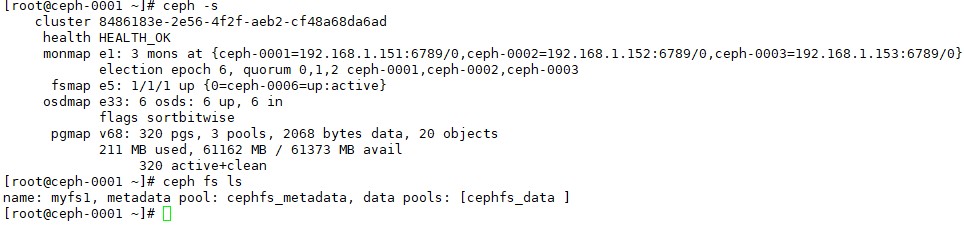

Log in to the ceph-0001 management host to verify that the cluster was built successfully.
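The same check can be run from the jump host instead of logging in by hand. The sketch below assumes the admin keyring is readable by root on ceph-0001 (which `ceph-deploy mon create-initial` normally arranges in the working directory and `/etc/ceph`); the file name `verify.yml` and the variable names are hypothetical.

```yaml
# verify.yml (hypothetical file name)
- hosts: ceph-0001
  remote_user: root
  gather_facts: false
  tasks:
    - name: Query the overall cluster status
      command: ceph -s
      register: ceph_status
      changed_when: false        # read-only command, never report a change

    - name: List the file systems known to the cluster
      command: ceph fs ls
      register: ceph_fs
      changed_when: false

    - name: Print both results
      debug:
        msg: "{{ ceph_status.stdout_lines + ceph_fs.stdout_lines }}"
```

A healthy cluster should report its health status in the first command, and `myfs1` should appear in the file system list.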