This section looks at how to manage EC2 snapshots with boto3. In our production environment we use the EBS Snapshot Scheduler solution provided by AWS: you import its CloudFormation stack directly, it automatically sets up the Lambda function and DynamoDB table, and you then choose what gets backed up through tags. For learning purposes, though, let's go straight to an ultra-simple version: two Lambda functions, one that creates snapshots and one that deletes old ones.

First, add a tag with key `backup` and value `true` to every instance you want to back up.
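The tag can be added in the EC2 console. If you would rather tag from code, the following is a minimal sketch using the EC2 client's `create_tags` call. The helper name `tag_for_backup` is hypothetical; the client is passed in (for example `boto3.client('ec2')`) so the helper can be tried without touching AWS, and the key/value `backup`/`true` match the filter the creation function uses.

```python
def tag_for_backup(ec2_client, instance_ids):
    """Add the tag backup=true to the given EC2 instances.

    ec2_client is expected to be a boto3 EC2 client, e.g. boto3.client('ec2').
    Returns the raw create_tags response, or None if there was nothing to tag.
    """
    if not instance_ids:
        return None  # nothing to tag
    return ec2_client.create_tags(
        Resources=list(instance_ids),
        Tags=[{'Key': 'backup', 'Value': 'true'}],
    )
```

For example, `tag_for_backup(boto3.client('ec2'), ['i-0123456789abcdef0'])`, where the instance ID is a placeholder.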

Next, create the Lambda function

The function is as follows:

```python
from datetime import datetime, timezone

import boto3


def lambda_handler(event, context):
    ec2_client = boto3.client('ec2')

    # Get the names of all regions
    regions = [region['RegionName']
               for region in ec2_client.describe_regions()['Regions']]

    # Loop through each region to find all instances tagged backup=true
    for region in regions:
        print('Instances in EC2 Region {0}:'.format(region))
        ec2 = boto3.resource('ec2', region_name=region)
        instances = ec2.instances.filter(
            Filters=[
                {'Name': 'tag:backup', 'Values': ['true']}
            ]
        )

        # ISO 8601 timestamp in UTC, e.g. 2019-01-31T14:01:58
        timestamp = datetime.now(timezone.utc).strftime('%Y-%m-%dT%H:%M:%S')

        # For each volume of each instance, create a snapshot
        for i in instances.all():
            for v in i.volumes.all():
                desc = 'Backup of {0}, volume {1}, created {2}'.format(
                    i.id, v.id, timestamp)
                print(desc)
                snapshot = v.create_snapshot(Description=desc)
                print("Created snapshot:", snapshot.id)
```
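One refinement worth considering (it is not part of the setup described here): tag each snapshot at creation time, so that a cleanup job can later restrict itself to snapshots made by this function instead of every snapshot in the account. `create_snapshot` accepts `TagSpecifications` for this. A minimal sketch, where the helper `snapshot_volume` and the tag `created-by=ebs-backup-lambda` are hypothetical names:

```python
# Hypothetical tag marking snapshots created by the backup function
SNAPSHOT_TAG = {'Key': 'created-by', 'Value': 'ebs-backup-lambda'}


def snapshot_volume(volume, instance_id, timestamp):
    """Snapshot one boto3 ec2.Volume and tag the snapshot when it is created."""
    desc = 'Backup of {0}, volume {1}, created {2}'.format(
        instance_id, volume.id, timestamp)
    return volume.create_snapshot(
        Description=desc,
        TagSpecifications=[{
            'ResourceType': 'snapshot',
            'Tags': [SNAPSHOT_TAG],
        }],
    )
```

A deletion function could then pass `Filters=[{'Name': 'tag:created-by', 'Values': ['ebs-backup-lambda']}]` to `describe_snapshots` so that manually created snapshots are never candidates. Tagging on creation requires the `ec2:CreateTags` permission in the function's role.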

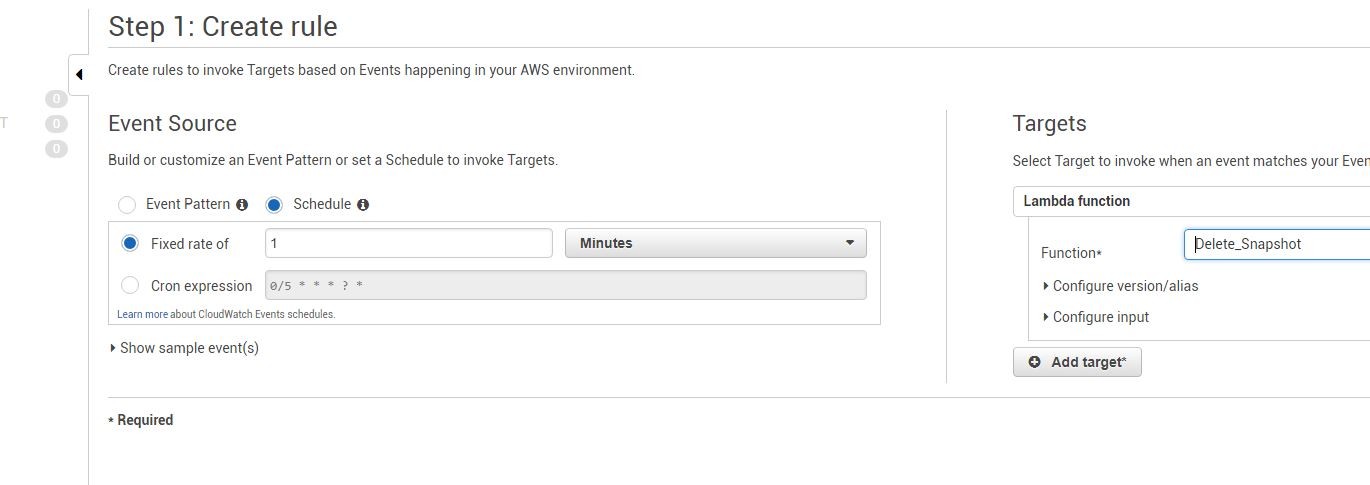

Then create a scheduled rule in CloudWatch (CloudWatch Events) so the function runs periodically.
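The same schedule can be set up from code. A minimal sketch, assuming the Lambda function already exists: it creates a CloudWatch Events (EventBridge) rule with `put_rule`, grants the events service permission to invoke the function with `add_permission`, and points the rule at the function with `put_targets`. The helper name `schedule_lambda`, the statement ID format, and the default `rate(1 day)` schedule are illustrative choices; the clients would be `boto3.client('events')` and `boto3.client('lambda')`.

```python
def schedule_lambda(events_client, lambda_client, function_name,
                    rule_name, schedule='rate(1 day)'):
    """Invoke a Lambda function on a schedule via a CloudWatch Events rule.

    Returns the ARN of the rule.
    """
    function_arn = lambda_client.get_function(
        FunctionName=function_name)['Configuration']['FunctionArn']

    # 1. Create (or update) the scheduled rule
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State='ENABLED',
    )['RuleArn']

    # 2. Allow CloudWatch Events to invoke the function
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId='{}-invoke'.format(rule_name),
        Action='lambda:InvokeFunction',
        Principal='events.amazonaws.com',
        SourceArn=rule_arn,
    )

    # 3. Point the rule at the function
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{'Id': function_name, 'Arn': function_arn}],
    )
    return rule_arn
```

`add_permission` fails if a statement with the same ID already exists, so running this twice for the same rule needs that call skipped or wrapped. A cron expression such as `cron(0 2 * * ? *)` (02:00 UTC daily) can be passed instead of a rate.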

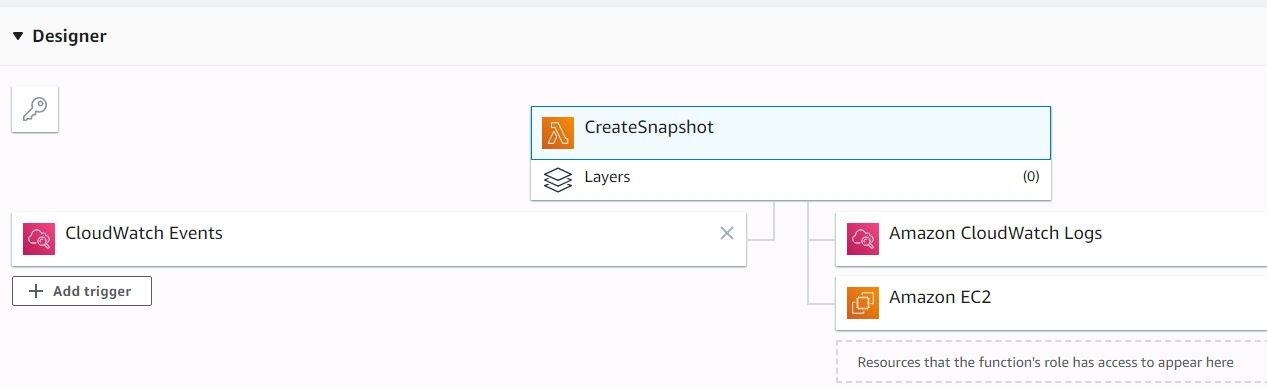

This diagram shows the function bound to its IAM role and its trigger.
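The role must allow the EC2 calls the functions make, plus writing to CloudWatch Logs. A minimal sketch of an inline policy covering both the creation and deletion functions, built as a Python dict and attached with IAM's `put_role_policy`; the policy name and helper names are hypothetical, and `"Resource": "*"` for EC2 is broader than you may want in production. (`sts:GetCallerIdentity` needs no permission.)

```python
import json

BACKUP_ACTIONS = [
    'ec2:DescribeRegions',
    'ec2:DescribeInstances',
    'ec2:DescribeVolumes',
    'ec2:CreateSnapshot',
    'ec2:CreateTags',        # needed only if snapshots are tagged on creation
    'ec2:DescribeSnapshots',
    'ec2:DeleteSnapshot',
]
LOG_ACTIONS = [
    'logs:CreateLogGroup',
    'logs:CreateLogStream',
    'logs:PutLogEvents',
]


def snapshot_policy_document():
    """Return an IAM policy document, as a JSON string, for the two functions."""
    policy = {
        'Version': '2012-10-17',
        'Statement': [
            {'Effect': 'Allow', 'Action': BACKUP_ACTIONS, 'Resource': '*'},
            {'Effect': 'Allow', 'Action': LOG_ACTIONS,
             'Resource': 'arn:aws:logs:*:*:*'},
        ],
    }
    return json.dumps(policy, indent=2)


def attach_policy(iam_client, role_name, policy_name='ebs-snapshot-policy'):
    """Attach the document as an inline policy on an existing role."""
    return iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=snapshot_policy_document(),
    )
```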

After the function runs, the new snapshots appear on the Snapshots page of the EC2 console.

The `print` output in CloudWatch Logs confirms that the function ran successfully.
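The same output can be pulled from code. Lambda writes its logs to the log group `/aws/lambda/<function name>`, and CloudWatch Logs' `filter_log_events` returns them page by page via `nextToken`. A minimal sketch; the helper name `recent_log_messages` is hypothetical and the client would be `boto3.client('logs')`:

```python
import time


def recent_log_messages(logs_client, function_name, minutes=60):
    """Return the log messages a Lambda function wrote in the last `minutes`."""
    group = '/aws/lambda/{}'.format(function_name)
    start_ms = int((time.time() - minutes * 60) * 1000)  # epoch milliseconds
    messages = []
    kwargs = {'logGroupName': group, 'startTime': start_ms}
    while True:
        resp = logs_client.filter_log_events(**kwargs)
        messages.extend(e['message'] for e in resp.get('events', []))
        token = resp.get('nextToken')
        if not token:
            return messages
        kwargs['nextToken'] = token  # fetch the next page
```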

In the same way, we can create a second Lambda function that deletes old snapshots.

The function is as follows:

```python
import boto3
from botocore.exceptions import ClientError


def lambda_handler(event, context):
    # get_caller_identity() returns a dict; .get('Account') reads the current
    # account ID (None if the key is absent; a failed STS call raises instead)
    account_id = boto3.client('sts').get_caller_identity().get('Account')

    ec2 = boto3.client('ec2')
    regions = [region['RegionName']
               for region in ec2.describe_regions()['Regions']]

    for region in regions:
        print("Region:", region)
        ec2 = boto3.client('ec2', region_name=region)
        response = ec2.describe_snapshots(OwnerIds=[account_id])
        snapshots = response["Snapshots"]
        print(snapshots)

        # snapshots is a list of dicts; sort by StartTime, oldest first.
        # The lambda below is equivalent to:
        #   def sortTime(x):
        #       return x["StartTime"]
        #   snapshots.sort(key=sortTime)
        snapshots.sort(key=lambda x: x["StartTime"])

        # Drop the 3 most recent snapshots from the list so they are kept
        snapshots = snapshots[:-3]

        for snapshot in snapshots:
            snapshot_id = snapshot['SnapshotId']
            try:
                print("Deleting snapshot:", snapshot_id)
                ec2.delete_snapshot(SnapshotId=snapshot_id)
            except ClientError as e:
                # e.g. the snapshot is still in use by an AMI
                print("Snapshot {} could not be deleted ({}), skipping.".format(
                    snapshot_id, e))
```
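Note that the function above keeps the three newest snapshots per region across the whole account, regardless of which volume they belong to, and it also considers snapshots created by hand. If the intent is "keep the last N backups of every volume", the selection can be done per `VolumeId` instead. A minimal sketch of that selection step as a pure function; `snapshots_to_delete` and `keep` are hypothetical names, and the input is assumed to be the list returned in `describe_snapshots()['Snapshots']`:

```python
from collections import defaultdict


def snapshots_to_delete(snapshots, keep=3):
    """Return the IDs of snapshots to delete, keeping the `keep` newest per volume.

    Each item needs at least 'SnapshotId', 'VolumeId' and 'StartTime'.
    The input list itself is not modified.
    """
    by_volume = defaultdict(list)
    for snap in snapshots:
        by_volume[snap['VolumeId']].append(snap)

    doomed = []
    for volume_snaps in by_volume.values():
        # Newest first, so everything after the first `keep` entries is old
        volume_snaps.sort(key=lambda s: s['StartTime'], reverse=True)
        doomed.extend(s['SnapshotId'] for s in volume_snaps[keep:])
    return doomed
```

In the handler, the sort and `snapshots[:-3]` lines would then give way to a loop over `snapshots_to_delete(snapshots)`, calling `delete_snapshot` for each returned ID.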

As with the creation function, create a scheduled rule to run this function periodically.

After it runs, its printed output appears in CloudWatch Logs.